Predicting Progress: A Pilot of Expected Utility Forecasting in Science Funding


The current process that federal science agencies use for reviewing grant proposals is known to be biased against riskier proposals. As such, the metascience community has proposed many alternate approaches to evaluating grant proposals that could improve science funding outcomes. One such approach was proposed by Chiara Franzoni and Paula Stephan in a paper on how expected utility — a formal quantitative measure of predicted success and impact — could be a better metric for assessing the risk and reward profile of science proposals. Inspired by their paper, the Federation of American Scientists (FAS) collaborated with Metaculus to run a pilot study of this approach. In this working paper, we share the results of that pilot and its implications for future implementation of expected utility forecasting in science funding review.

Brief Description of the Study

In fall 2023, we recruited a small cohort of subject matter experts to review five life science proposals by forecasting their expected utility. For each proposal, this consisted of defining two research milestones in consultation with the project leads and asking reviewers to make three forecasts for each milestone:

- The probability of success;

- The scientific impact of the milestone, if it were reached; and

- The social impact of the milestone, if it were reached.

These predictions can then be used to calculate the expected utility, or likely impact, of each proposal, and to design and compare potential funding portfolios.

Key Takeaways for Grantmakers and Policymakers

The three main strengths of using expected utility forecasting to conduct peer review are:

- For reviewers, it’s a relatively light-touch approach that encourages rigor and reduces anti-risk bias in scientific funding.

- The review criteria allow program managers to better understand the risk-reward profile of their grant portfolios and more intentionally shape them according to programmatic goals.

- Quantitative forecasts are resolvable, meaning that program officers can compare the actual outcomes of funded proposals with reviewers’ predictions. This generates a feedback/learning loop within the peer review process that incentivizes reviewers to improve the accuracy of their assessments over time.
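One common way to score resolved probability forecasts is the Brier score: the mean squared difference between each forecast probability and the outcome (1 if the milestone was reached, 0 if not), where lower is better. This is a standard forecasting metric, not a method used in our pilot, and the reviewer names and numbers below are hypothetical. It is a minimal sketch of how the feedback loop described above could be quantified.

```python
from statistics import mean


def brier_score(forecasts: list[float], outcomes: list[int]) -> float:
    """Mean squared error between forecast probabilities and binary outcomes.

    0.0 is a perfect score; always forecasting 0.5 scores 0.25.
    """
    if len(forecasts) != len(outcomes):
        raise ValueError("forecasts and outcomes must have the same length")
    return mean((p - o) ** 2 for p, o in zip(forecasts, outcomes))


# Hypothetical resolved milestones: 1 = reached, 0 = not reached.
outcomes = [1, 0, 1, 0]
# Hypothetical probability forecasts from two reviewers for the same four milestones.
reviewers = {
    "reviewer_a": [0.8, 0.3, 0.6, 0.2],
    "reviewer_b": [0.5, 0.5, 0.5, 0.5],
}

for name, probs in reviewers.items():
    print(name, round(brier_score(probs, outcomes), 4))
```

Tracking a score like this across funding cycles would let each reviewer see whether their probability forecasts are improving over time.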

Despite the apparent complexity of this process, we found that first-time users were able to complete their reviews according to the guidelines without any additional support. Most of the complexity occurs behind the scenes, and either aligns with the responsibilities of the program manager (e.g., defining milestones and their dependencies) or can be automated (e.g., calculating the total expected utility). This suggests that grantmakers and policymakers can be confident in the user-friendliness of expected utility forecasting.

How Can NSF or NIH Run an Experiment on Expected Utility Forecasting?

An initial pilot study could be conducted by NSF or NIH by adding a short, non-binding expected utility forecasting component to a selection of review panels. In addition to the evaluation of traditional criteria, reviewers would be asked to predict the success and impact of select milestones for the proposals assigned to them. The rest of the review process and the final funding decisions would be made using the traditional criteria.

Afterwards, study facilitators could use the expected utility forecasting results to construct the alternate portfolio of proposals that would have been funded had that approach been used, and compare it with the portfolio that was actually funded. Such a comparison would yield valuable insights into whether, and how, the types of proposals selected by each approach differ, and whether each approach leads to different considerations arising during review. Additionally, a pilot assessment of reviewers’ prediction accuracy could be conducted by asking program officers to assess milestone achievement and study impact upon completion of funded projects.
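The portfolio comparison could be sketched as follows. We assume, hypothetically, that each proposal already has a single total expected utility and a cost, and that the alternate portfolio is chosen greedily by expected utility per dollar until a fixed budget is spent; this greedy rule is a simple heuristic we chose for illustration, not an optimal selection method or one prescribed by our framework. The Jaccard index then summarizes how much the two portfolios overlap. All proposal IDs, numbers, and funding decisions are invented.

```python
def greedy_portfolio(proposals: dict[str, tuple[float, float]], budget: float) -> set[str]:
    """Pick proposals by expected utility per dollar until the budget runs out.

    proposals maps a proposal ID to (expected_utility, cost).
    """
    ranked = sorted(proposals, key=lambda pid: proposals[pid][0] / proposals[pid][1], reverse=True)
    chosen, spent = set(), 0.0
    for pid in ranked:
        cost = proposals[pid][1]
        if spent + cost <= budget:  # skip anything that would exceed the budget
            chosen.add(pid)
            spent += cost
    return chosen


def jaccard(a: set[str], b: set[str]) -> float:
    """Share of funded proposals common to both portfolios (1.0 = identical)."""
    union = a | b
    return len(a & b) / len(union) if union else 1.0


# Hypothetical panel data: (total expected utility, cost in $M).
proposals = {
    "P1": (40.0, 2.0),
    "P2": (10.0, 1.0),
    "P3": (90.0, 3.0),
    "P4": (15.0, 1.5),
}
funded_traditionally = {"P1", "P2", "P4"}  # hypothetical decisions under traditional criteria
funded_by_eu = greedy_portfolio(proposals, budget=5.0)

print(sorted(funded_by_eu))
print(round(jaccard(funded_traditionally, funded_by_eu), 3))
```

A low overlap would flag proposals worth discussing: those favored only by expected utility, and those favored only by traditional criteria.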

Findings and Recommendations

Reviewers in our study were new to the expected utility forecasting process and gave generally positive reactions. In their feedback, reviewers said that they appreciated how the framing of the questions prompted them to think about the proposals in a different way and pushed them to ground their assessments in quantitative forecasts. The focus on just three review criteria (probability of success, scientific impact, and social impact) was seen as a strength because it simplified the process, disentangled feasibility from impact, and eliminated metrics that reviewers considered biased. Overall, reviewers found this new approach interesting and worth investigating further.

In designing this pilot and analyzing the results, we identified several important considerations for planning such a review process. Although these considerations add complexity, engaging with them tended to provide value by making implicit project details explicit and by encouraging clear definition and communication of evaluation criteria to reviewers. Two key examples are defining the proposal milestones and creating impact scoring systems. In both cases, reducing ambiguity about the goals to be achieved, understanding how outcomes depend on one another, and creating interpretable and resolvable assessment criteria will help ensure that the desired information is solicited from reviewers.

Questions for Further Study

Our pilot only simulated the individual review phase of grant proposals and did not simulate a full review committee. The typical review process at a funding agency consists of, first, individual evaluations by assigned reviewers; then, discussion of those evaluations by the whole review committee; and finally, the submission of final scores from all members of the committee. This is similar to the Delphi method, a structured process for eliciting forecasts from a panel of experts, so we believe that it would work well with expected utility forecasting. The primary change would therefore be in how criterion scores are defined and elicited, rather than in the structure of the review process. Nevertheless, future implementations may uncover additional considerations that need to be addressed, or better ways to incorporate forecasting into a panel environment.

Further investigation into how best to define proposal milestones is also needed. Open questions include: Who should be responsible for determining the milestones? If reviewers are involved, at what part(s) of the review process should this occur? What is the right balance between precision and flexibility in milestone definitions to achieve the best outcomes? How much flexibility should there be in the number of milestones per proposal?

Lastly, more thought should be given to how to define social impact and how to calibrate reviewers’ interpretation of the impact score scale. In this report, we propose several options for calibrating impact, in addition to describing the approach we took in our pilot.

Grantmakers, both public and private, and policymakers are welcome to reach out to our team to learn more or to receive assistance in implementing this approach.

Introduction

The fundamental concern of grantmakers, whether governmental or philanthropic, is how to make the best funding decisions. All funding decisions come with inherent uncertainties that may pose risks to the investment. Thus, a certain level of risk-aversion is natural and even desirable in grantmaking institutions, especially federal science agencies, which are responsible for managing taxpayer dollars. However, without risk there is no reward, so the trade-off must be balanced. In economics and decision theory, expected utility is the standard framework for modeling rational decision-making under uncertainty. Expected utility has two components: the probability of an outcome occurring if an action is taken, and the value of that outcome; these roughly correspond to risk and reward. Thus, expected utility would seem to be a logical choice for evaluating science funding proposals.
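For reference, the textbook form of this idea: if an action can lead to mutually exclusive outcomes $i$ with probabilities $p_i$ and values $U_i$, its expected utility is the probability-weighted sum of those values. For a single milestone that is either reached (with probability $p$ and impact $U$) or not, and assuming for simplicity that failure contributes no value, this reduces to a simple product. These are generic expressions, not the pilot’s full calculation, which is given in Appendix C.

$$
\mathrm{EU} = \sum_{i} p_i \, U_i
\qquad\Longrightarrow\qquad
\mathrm{EU}_{\text{milestone}} = p \cdot U + (1 - p) \cdot 0 = p \, U
$$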

In debates around funding innovation, though, expected utility has largely flown under the radar compared to other ideas. Nevertheless, Chiara Franzoni and Paula Stephan have proposed using expected utility in peer review. Building on their paper, the Federation of American Scientists (FAS) developed a detailed framework for incorporating expected utility into a peer review process. We chose to frame the review criteria as forecasting questions, since determining the expected utility of a proposal inherently requires making predictions about the future. Forecasting questions also have the added benefit of being resolvable (i.e., the true outcome can be determined after the fact and compared to the prediction), which provides a learning opportunity for reviewers to improve their abilities and identify biases. In addition to forecasting, we incorporated other distinctive features, like an exponential scale for scoring impact, that we believe help reduce biases against risky proposals.

With the theory laid out, we conducted a small pilot in fall 2023. The pilot was run in collaboration with Metaculus, a crowd forecasting platform and aggregator, to leverage their expertise in designing resolvable forecasting questions and to use their platform to collect forecasts from reviewers. The purpose of the pilot was to test the mechanics of this approach in practice, identify any additional considerations that need to be thought through, and surface potential issues that need to be solved. We were also curious whether any interesting or unexpected results would arise from how we chose to calculate impact and total expected utility. It is important to note that this pilot was not an experiment, so we did not have a control group against which to compare the results of the review.

Since FAS is not a grantmaking institution, we did not have a ready supply of traditional grant proposals to use. Instead, we used a set of two-page research proposals for Focused Research Organizations (FROs) that we had sourced through separate advocacy work in that area.1 With the proposal authors’ permission, we recruited a cohort of twenty subject matter experts to each review one of five proposals. For each proposal, we defined two research milestones in consultation with the proposal authors. Reviewers were asked to make three forecasts for each milestone:

- The probability of success;

- The scientific impact, conditional on success; and

- The social impact, conditional on success.

Reviewers submitted their forecasts on Metaculus’ platform; in a separate form they provided explanations for their forecasts and responded to questions about their experience and impression of this new approach to proposal evaluation. (See Appendix A for details on the pilot study design.)

Insights from Reviewer Feedback

Overall, reviewers liked the framing and criteria provided by the expected utility approach, while their main critique was of the structure of the research proposals. Excluding critiques of the research proposal structure, which are unlikely to apply to an actual grant program, two thirds of the reviewers expressed positive opinions of the review process and/or thought it was worth pursuing further given drawbacks with existing review processes. Below, we delve into the details of the feedback we received from reviewers and their implications for future implementation.

Feedback on Review Criteria

Disentangling Impact from Feasibility

Many of the reviewers said that this model prompted them to think differently about how they assess the proposals and that they liked the new questions. Reviewers appreciated that the questions focused their attention on what they think funding agencies really want to know and nothing more: “can it occur?” and “will it matter?” This approach explicitly disentangles impact from feasibility: “Often, these two are taken together, and if one doesn’t think it is likely to succeed, the impact is also seen as lower.” Additionally, the emphasis on big picture scientific and social impact “is often missing in the typical review process.” Reviewers also liked that this approach eliminates what they consider biased metrics, such as the principal investigator’s reputation, track record, and “excellence.”

Reducing Administrative Burden

The small set of questions was seen as more efficient and less burdensome on reviewers. One reviewer said, “I liked this approach to scoring a proposal. It reduces the effort to thinking about perceived impact and feasibility.” Another reviewer said, “On the whole it seems a worthwhile exercise as the current review processes for proposals are onerous.”

Quantitative Forecasting

Reviewers saw benefits to being asked to quantify their assessments, but also found it challenging at times. A number of reviewers enjoyed taking a quantitative approach and thought that it helped them be more grounded and explicit in their evaluations of the proposals. However, some reviewers were concerned that it felt like guesswork and expressed low confidence in their quantitative assessments, primarily due to proposals lacking details on their planned research methods, which is an issue discussed in the section “Feedback on Proposals.” Nevertheless, some of these reviewers still saw benefits to taking a quantitative approach: “It is interesting to try to estimate probabilities, rather than making flat statements, but I don’t think I guess very well. It is better than simply classically reviewing the proposal [though].” Since not all academics have experience making quantitative predictions, we expect that there will be a learning curve for those new to the practice. Forecasting is a skill that can be learned though, and we think that with training and feedback, reviewers can become better, more confident forecasters.

Defining Social Impact

Of the three types of questions that reviewers were asked to answer, the question about social impact seemed the hardest for reviewers to interpret. Reviewers noted that they would have liked more guidance on what was meant by social impact and whether it included indirect impacts. Since questions like these are ultimately subjective, the “right” definition of social impact, and which types of outcomes are considered most valuable, will depend on the grantmaking institution, its domain area, and its theory of change, so we leave this for future implementers to clarify in their instructions.

Calibrating Impact

While the impact score scale (see Appendix A) defines the relative difference in impact between scores, it does not define the absolute impact conveyed by a score. For this reason, a calibration mechanism is necessary to provide reviewers with a shared understanding of the use and interpretation of the scoring system. Note that this is a challenge that rubric-based peer review criteria used by science agencies also face. Discussion and aggregation of scores across a review committee helps align reviewers and average out some of this natural variation.2

To address this, we surveyed a small, separate set of academics in the life sciences about how they would score the social and scientific impact of the average NIH R01 grant, which many life science researchers apply to and review proposals for. We then provided the average scores from this survey to reviewers to orient them to the new scale and help them calibrate their scores.

One reviewer suggested an alternative approach: “The other thing I might change is having a test/baseline question for every reviewer to respond to, so you can get a feel for how we skew in terms of assessing impact on both scientific and social aspects.” One option would be to ask reviewers to score the social and scientific impact of the average grant proposal for a grant program that all reviewers would be familiar with; another would be to ask reviewers to score the impact of the average funded grant for a specific grant program, which could be more accessible for new reviewers who have not previously reviewed grant proposals. A third option would be to provide all reviewers on a committee with one or more sample proposals to score and discuss, in a relevant and shared domain area.
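The baseline-question idea could be implemented as a per-reviewer offset. In this minimal sketch, we assume (hypothetically) that every reviewer scores the same reference item, such as the average funded grant in a familiar program, and that each reviewer’s scores are shifted so that their baseline matches the panel’s mean baseline. On a base-2 exponential scale, shifting a score by one point halves or doubles the implied impact, so an additive offset on scores is a multiplicative correction on impact. The function name, reviewer IDs, and scores are illustrative, not data from our pilot.

```python
from statistics import mean


def calibrate(scores: dict[str, list[float]], baselines: dict[str, float]) -> dict[str, list[float]]:
    """Shift each reviewer's impact scores so their baseline matches the panel mean.

    scores: reviewer ID -> impact scores the reviewer gave to proposals.
    baselines: reviewer ID -> score that reviewer gave the shared reference item.
    """
    panel_baseline = mean(baselines.values())
    return {
        reviewer: [s + (panel_baseline - baselines[reviewer]) for s in reviewer_scores]
        for reviewer, reviewer_scores in scores.items()
    }


# Hypothetical: reviewer_b rates everything, including the reference item, 2 points higher.
baselines = {"reviewer_a": 4.0, "reviewer_b": 6.0}
scores = {"reviewer_a": [5.0, 7.0], "reviewer_b": [7.0, 9.0]}

print(calibrate(scores, baselines))
```

Here both reviewers end up with the same adjusted scores, which is what one would want if their disagreement came only from how they use the scale.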

When deciding on an approach for calibration, a key consideration is the specific resolution criteria that are being used — i.e., the downstream measures of impact that reviewers are being asked to predict. One option, which was used in our pilot, is to predict the scores that a comparable, but independent, panel of reviewers would give the project some number of years following its successful completion. For a resolution criterion like this one, collecting and sharing calibration scores can help reviewers get a sense for not just their own approach to scoring, but also those of their peers.

Making Funding Decisions

In scoring the social and scientific impact of each proposal, reviewers were asked to assess the value of the proposal to society or to the scientific field. That alone would be insufficient to determine whether a proposal should be funded though, since it would need to be compared with other proposals in conjunction with its feasibility. To do so, we calculated the total expected utility of each proposal (see Appendix C). In a real funding scenario, this final metric could then be used to compare proposals and determine which ones get funded. Additionally, unlike a traditional scoring system, the expected utility approach allows for the detailed comparison of portfolios — including considerations like the expected proportion of milestones reached and the range of likely impacts.
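As an illustration of this portfolio-level view, the sketch below simulates a hypothetical four-milestone portfolio. It assumes that each milestone’s success is an independent draw using the reviewers’ average probability, and that a reached milestone contributes 2**score units of impact (a base-2 exponential scale, as in our pilot). Treating milestones as independent ignores the sequential dependencies discussed later in this report, and all numbers are invented. For these inputs the exact expected share of milestones reached is 0.5 and the exact expected total impact is 217.6, which the simulation should approximate.

```python
import random
from statistics import mean, quantiles

# Hypothetical portfolio: (probability of success, impact score) for each milestone.
MILESTONES = [(0.8, 5), (0.4, 7), (0.6, 6), (0.2, 9)]


def simulate(milestones, n_runs=10_000, seed=0):
    """Simulate portfolio outcomes, treating every milestone as independent.

    Returns the mean share of milestones reached and the total impact of each run.
    """
    rng = random.Random(seed)
    shares, totals = [], []
    for _ in range(n_runs):
        reached = [rng.random() < p for p, _ in milestones]
        shares.append(sum(reached) / len(milestones))
        # A reached milestone with score s contributes 2**s units of impact.
        totals.append(sum(2 ** s for (_, s), hit in zip(milestones, reached) if hit))
    return sum(shares) / len(shares), totals


share, totals = simulate(MILESTONES)
deciles = quantiles(totals, n=10)
print(f"expected share of milestones reached: {share:.2f}")
print(f"mean total impact: {mean(totals):.0f}")
print(f"10th to 90th percentile of total impact: {deciles[0]:.0f} to {deciles[-1]:.0f}")
```

The percentile range conveys how widely a portfolio’s realized impact might vary, which a single ranked list of scores does not show.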

In our pilot, reviewers were not informed that we would be doing this additional calculation based on their submissions. As a result, one reviewer thought that the questions they were asked failed to include other important questions, like “should it occur?” and “is it worth the opportunity cost?” Though these questions were not asked of reviewers explicitly, we believe that they would be answered once the expected utility of all proposals is calculated and considered, since the opportunity cost of one proposal would be the expected utility of the other proposals. Since each reviewer only provided input on one proposal, they may have felt like the scores they gave would be used to make a binary yes/no decision on whether to fund that one proposal, rather than being considered as a part of a larger pool of proposals, as it would be in a real review process.

Feedback on Proposals

Missing Information Impedes Forecasting

The primary critique that reviewers expressed was that the research proposals lacked details about their research plans, what methods and experimental protocols would be used, and what preliminary research the author(s) had done so far. This hindered their ability to properly assess the technical feasibility of the proposals and their probability of success. A few reviewers expressed that they also would have liked to have had a better sense of who would be conducting the research and each team member’s responsibilities. These issues arose because the FRO proposals used in our pilot had not originally been submitted for funding purposes, and thus lacked the requirements of traditional grant proposals, as we noted above. We assume this would not be an issue with proposals submitted to actual grantmakers.3

Improving Milestone Design

A few reviewers pointed out that some of the proposal milestones were too ambiguous or were not worded specifically enough, such that there were ways that researchers could technically say that they had achieved the milestone without accomplishing the spirit of its intent. This made it more challenging for reviewers to assess milestones, since they weren’t sure whether to focus on the ideal (i.e., more impactful) interpretation of the milestone or to account for these “loopholes.” Moreover, loopholes skew the forecasts, since they increase the probability of achieving a milestone, while lowering the impact of doing so if it is achieved through a loophole.

One reviewer suggested, “I feel like the design of milestones should be far more carefully worded – or broken up into sub-sentences/sub-aims, to evaluate the feasibility of each. As the questions are currently broken down, I feel they create a perverse incentive to create a vaguer milestone, or one that can be more easily considered ‘achieved’ for some ‘good enough’ value of achieved.” For example, they proposed that one of the proposal milestones, “screen a library of tens of thousands of phage genes for enterobacteria for interactions and publish promising new interactions for the field to study,” could be expanded into:

- “Generate a library of tens of thousands of genes from enterobacteria, expressed in E. coli

- “Validate their expression under screenable conditions

- “Screen the library for their ability to impede phage infection with a panel of 20 type phages

- “Publish …

- “Store and distribute the library, making it as accessible to the broader community”

We agree with the need for careful consideration and design of milestones, given that “loopholes” in milestones can detract from their intended impact and make it harder for reviewers to accurately assess their likelihood. In our theoretical framework for this approach, we identified three potential parties that could be responsible for defining milestones: (1) the proposal author(s), (2) the program manager, with or without input from proposal authors, or (3) the reviewers, with or without input from proposal authors. This critique suggests that the first approach of allowing proposal authors to be the sole party responsible for defining proposal milestones is vulnerable to being gamed, and the second or third approach would be preferable. Program managers who take on the task of defining milestones should have enough expertise to think through the different potential ways of fulfilling a milestone and make sure that they are sufficiently precise for reviewers to assess.

Benefits of Flexibility in Milestones

Some flexibility in milestones may still be desirable, especially with respect to the actual methodology, since experimentation may be necessary to determine the best technique to use. For example, speaking about the feasibility of a different proposal milestone – “demonstrate that Pro-AG technology can be adapted to a single pathogenic bacterial strain in a 300 gallon aquarium of fish and successfully reduce antibiotic resistance by 90%” – a reviewer noted that

“The main complexity and uncertainty around successful completion of this milestone arises from the native fish microbiome and whether a CRISPR delivery tool can reach the target strain in question. Due to the framing of this milestone, should a single strain be very difficult to reach, the authors could simply switch to a different target strain if necessary. Additionally, the mode of CRISPR delivery is not prescribed in reaching this milestone, so the authors have a host of different techniques open to them, including conjugative delivery by a probiotic donor or delivery by engineered bacteriophage.”

Peer Review Results

Sequential Milestones vs. Independent Outcomes

In our expected utility forecasting framework, we defined two different ways that a proposal could structure its outcomes: as sequential milestones where each additional milestone builds off of the success of the previous one, or as independent outcomes where the success of one is not dependent on the success of the other(s). For proposals with sequential milestones in our pilot, we would expect the probability of success of milestone 2 to be less than the probability of success of milestone 1 and for the opposite to be true of their impact scores. For proposals with independent outcomes, we do not expect there to be a relationship between the probability of success and the impact scores of milestones 1 and 2. There are different equations for calculating the total expected utility, depending on the relationship between outcomes (see Appendix C).
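Appendix C gives the equations we used. As a simplified, hypothetical illustration of why the structure matters (not a reproduction of Appendix C), suppose p1 and p2 are the unconditional probabilities of reaching milestones 1 and 2, and that impact is 2**score on a base-2 exponential scale. For independent outcomes, the two contributions simply add. For sequential milestones where milestone 2 includes milestone 1 (so p2 ≤ p1, and milestone 2’s impact already counts milestone 1’s), the outcomes to weigh are “milestone 1 only,” with probability p1 − p2, and “both,” with probability p2; adding the two contributions as if they were independent would double-count milestone 1. The function names and forecast values are our own.

```python
def impact(score: float) -> float:
    """Convert a score on a base-2 exponential scale into impact units."""
    return 2.0 ** score


def eu_independent(p1: float, s1: float, p2: float, s2: float) -> float:
    """Independent outcomes: each milestone contributes p * impact on its own."""
    return p1 * impact(s1) + p2 * impact(s2)


def eu_sequential(p1: float, s1: float, p2: float, s2: float) -> float:
    """Sequential, inclusive milestones: reaching milestone 2 implies milestone 1.

    p1 and p2 are unconditional probabilities (p2 <= p1); impact(s2) is the
    cumulative impact of reaching both milestones.
    """
    if p2 > p1:
        raise ValueError("a sequential milestone 2 cannot be likelier than milestone 1")
    return (p1 - p2) * impact(s1) + p2 * impact(s2)


# Hypothetical averaged forecasts: milestone 2 is half as likely but scores 2 points higher.
p1, s1, p2, s2 = 0.6, 5, 0.3, 7
print(f"independent: {eu_independent(p1, s1, p2, s2):.1f}")
print(f"sequential:  {eu_sequential(p1, s1, p2, s2):.1f}")
```

With these inputs the sequential calculation gives a lower total than treating the milestones as independent, because milestone 1’s impact is counted once rather than twice.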

We categorized each proposal in our study as having either sequential milestones or independent outcomes. This information was not shared with reviewers. Table 1 presents the average reviewer forecasts for each proposal. In general, milestones received higher scientific impact scores than social impact scores, which makes sense given the primarily academic focus of research proposals. For proposals 1 to 3, the probability of success of milestone 2 was roughly half that of milestone 1, and reviewers gave milestone 2 higher scientific and social impact scores than milestone 1. This is consistent with our categorization of proposals 1 to 3 as having sequential milestones.

Further Discussion on Designing and Categorizing Milestones

We originally categorized proposal 4’s milestones as sequential, but one reviewer gave milestone 2 a lower scientific impact score than milestone 1 and two reviewers gave it a lower social impact score. One reviewer also gave milestone 2 roughly the same probability of success as milestone 1. This suggests that proposal 4’s milestones can’t be considered strictly sequential.
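Checks like this could be automated. The sketch below is a hypothetical helper, not something we ran in the pilot: it flags any reviewer whose forecasts for a proposal categorized as sequential depart from the expected pattern (milestone 2 clearly less likely, by more than a tolerance we chose arbitrarily, but at least as impactful as milestone 1). The forecast values are invented for illustration.

```python
from dataclasses import dataclass


@dataclass
class MilestoneForecast:
    probability: float  # probability of success
    scientific: float   # scientific impact score, conditional on success
    social: float       # social impact score, conditional on success


def sequential_flags(m1: MilestoneForecast, m2: MilestoneForecast, tol: float = 0.05) -> list[str]:
    """Return the ways a reviewer's forecasts depart from the sequential pattern.

    Under sequential milestones we expect milestone 2 to be clearly less likely
    (by more than `tol`) but at least as impactful as milestone 1.
    """
    flags = []
    if m2.probability > m1.probability - tol:
        flags.append("milestone 2 is not clearly less likely than milestone 1")
    if m2.scientific < m1.scientific:
        flags.append("milestone 2 has a lower scientific impact score")
    if m2.social < m1.social:
        flags.append("milestone 2 has a lower social impact score")
    return flags


# Invented forecasts from two hypothetical reviewers of the same proposal.
forecasts = {
    "reviewer_a": (MilestoneForecast(0.7, 6, 3), MilestoneForecast(0.35, 8, 4)),
    "reviewer_b": (MilestoneForecast(0.5, 7, 4), MilestoneForecast(0.5, 6, 3)),
}

for reviewer, (m1, m2) in forecasts.items():
    print(reviewer, sequential_flags(m1, m2) or "consistent with sequential milestones")
```

A flag is not an error by the reviewer; as with proposal 4, it may instead signal that the milestones are not strictly sequential and should be recategorized or reworded.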

The two milestones for proposal 4 were:

- Milestone 1: Develop a tool that is able to perturb neurons in C. elegans and record from all neurons simultaneously, automated w/ microfluidics, and

- Milestone 2: Develop a model of the C. elegans nervous system that can predict what every neuron will do when stimulating one neuron with R² > 0.8

The reviewer who gave milestone 2 a lower scientific impact score explained: “Given the wording of the milestone, I do not believe that if the scientific milestone was achieved, it would greatly improve our understanding of the brain.” Unlike proposals 1-3, in which milestone 2 was a scaled-up or improved-upon version of milestone 1, these milestones represent fundamentally different categories of output (general-purpose tool vs specific model). Thus, despite the necessity of milestone 1’s tool for achieving milestone 2, the reviewer’s response suggests that the impact of milestone 2 was being considered separately rather than cumulatively.

To properly address this case of sequential milestones with different types of outputs, we recommend that for all sequential milestones, latter milestones should be explicitly defined as inclusive of prior milestones. In the above example, this would imply redefining milestone 2 as “Complete milestone 1 and develop a model of the C. elegans nervous system…” This way, reviewers know to include the impact of milestone 1 in their assessment of the impact of milestone 2.

To help ensure that reviewers are aligned with program managers in how they interpret the proposal milestones (if reviewers aren’t directly involved in defining milestones), we suggest either informing reviewers of how program managers are categorizing the proposal outputs, so that they can conduct their review accordingly, or allowing reviewers to decide the category (and thus how the total expected utility is calculated), whether individually, collectively, or both.

We chose to use only two of the goals that proposal authors provided because we wanted to standardize the number of milestones across proposals. However, this may have provided an incomplete picture of the proposals’ goals, and thus an incomplete assessment of the proposals. We recommend that future implementations be flexible and allow the number of milestones to be determined by each proposal’s needs. This would also help accommodate one reviewer’s suggestion that some milestones be broken down into intermediate steps.

Importance of Reviewer Explanations

As the above discussion shows, reviewers’ explanations of their forecasts were crucial to understanding how they interpreted the milestones. Reviewers’ explanations varied in length and detail, but the most insightful responses broke their reasoning down into detailed steps and addressed (1) ambiguities in the milestone and, where they existed, how the reviewer chose to interpret them, (2) the state of the scientific field and the maturity of the techniques that the authors propose to use, and (3) factors that improve the likelihood of success versus potential barriers or challenges that would need to be overcome.

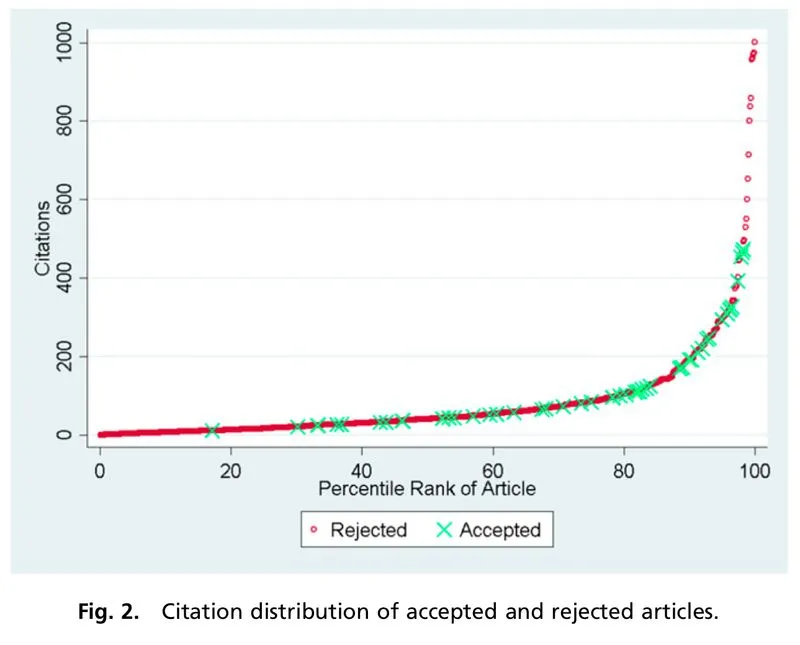

Exponential Impact Scales Better Reflect the Real Distribution of Impact

The distribution of NIH and NSF proposal peer review scores tends to be skewed such that most proposals are rated above the center of the scale and few proposals are rated poorly. However, other markers of scientific impact, such as citations (despite their imperfections), tend to suggest a long tail of studies with high impact. This discrepancy suggests that traditional peer review scoring systems are not well-structured to capture the nonlinearity of scientific impact, resulting in score inflation. The aggregation of scores at the top end of the scale also means that very negative scores have a greater effect than very positive scores when averaged together, since there is more room between the average score and the bottom end of the scale than between the average and the top. This can generate systemic bias against more controversial or risky proposals.

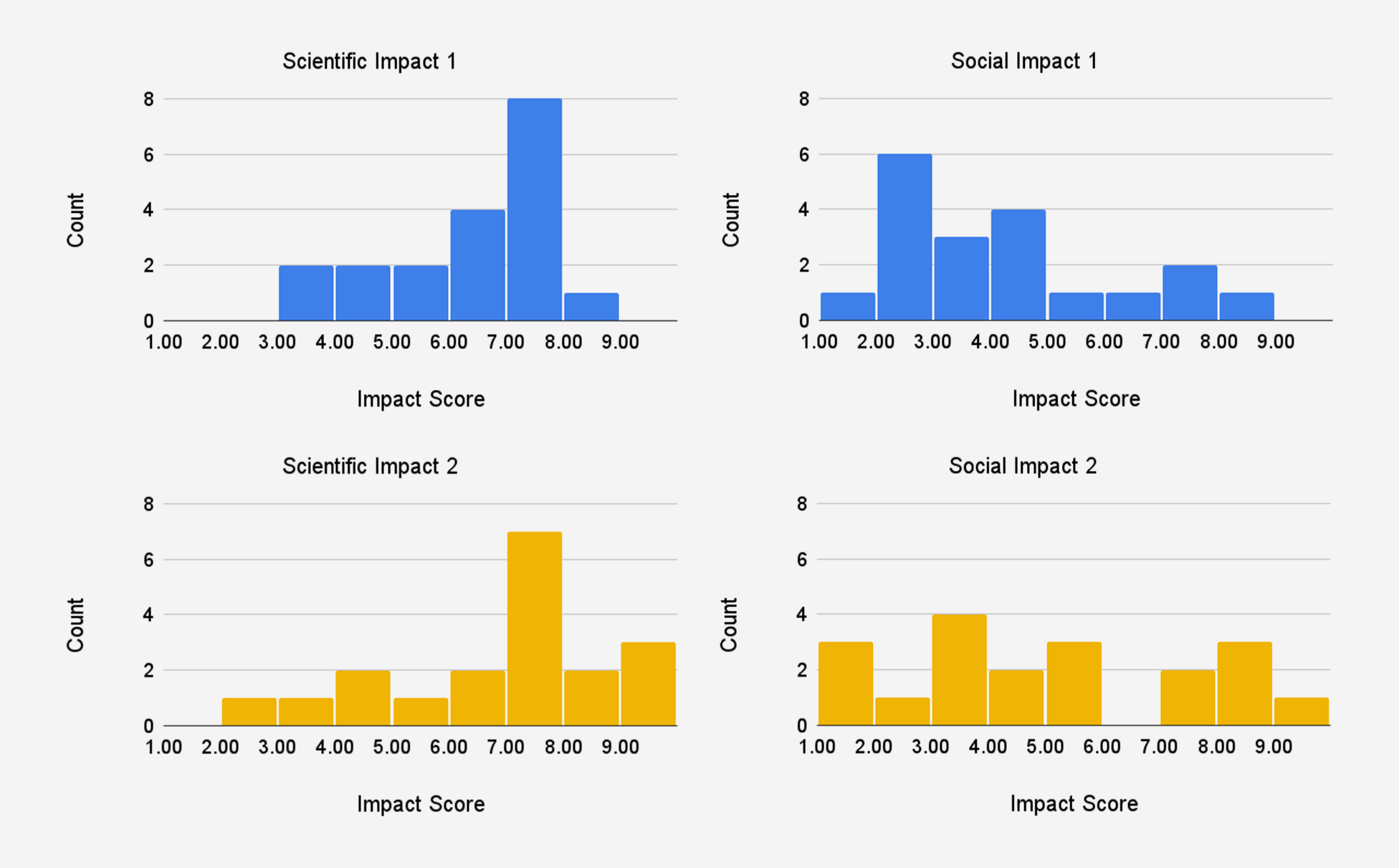
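A toy calculation illustrates the averaging asymmetry described above. We assume a hypothetical bounded 1-to-9 scale where higher is better (this is not the NIH or NSF scale) and four reviewers who agree on a bunched-high score of 8. Adding the most positive possible fifth score moves the mean up by only 0.2, while adding the most negative possible fifth score pulls it down by 1.4.

```python
from statistics import mean

SCALE_MIN, SCALE_MAX = 1, 9  # hypothetical bounded scale, higher is better
consensus = [8, 8, 8, 8]     # scores bunched near the top of the scale

with_enthusiast = consensus + [SCALE_MAX]  # most positive possible outlier
with_dissenter = consensus + [SCALE_MIN]   # most negative possible outlier

print(mean(consensus), mean(with_enthusiast), mean(with_dissenter))
```

Because the ceiling is close to where most scores sit, a single skeptical reviewer has far more leverage over the average than a single enthusiastic one.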

In our pilot, we chose to use an exponential scale with a base of 2 for impact to better reflect the real distribution of scientific impact. Using this exponential impact scale, we surveyed a small pool of academics in the life sciences about how they would rate the impact of the average funded NIH R01 grant. They responded with an average scientific impact score of 5 and an average social impact score of 3, which are much lower on our scale compared to traditional peer review scores4, suggesting that the exponential scale may help avoid score inflation and bunching at the top. In our pilot, the distribution of scientific impact scores was centered higher than 5, but was still less skewed than NIH peer review scores for significance and innovation typically are. This partially reflects the fact that the proposals were expected to be funded at levels one to two orders of magnitude higher than NIH R01 awards, so their impact should also be greater. The distribution of social impact scores exhibited a much wider spread and a lower center.
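To make the base-2 scale concrete: each additional point doubles the implied impact, so differences between scores are ratios rather than fixed increments. Using the survey’s average scientific impact score of 5 for an R01 grant as a reference point, a milestone scored 7 would be read as four times as impactful. The sketch also shows that averaging scores on this scale is equivalent to taking the geometric mean of the implied impacts, which tempers a single very high score more than an arithmetic mean of impacts would. The `impact` helper and the reviewer scores are hypothetical, and the scale’s absolute units remain undefined, as discussed under calibration.

```python
from statistics import geometric_mean, mean


def impact(score: float, base: float = 2.0) -> float:
    """Impact implied by a score on an exponential scale (arbitrary units)."""
    return base ** score


R01_SCIENTIFIC = 5  # average scientific impact score for an R01 from the calibration survey

# A milestone scored 7 is judged 2**(7 - 5) = 4 times as impactful as the average R01.
print(impact(7) / impact(R01_SCIENTIFIC))

# Hypothetical reviewer scores for one milestone.
scores = [5, 7, 9]
print(impact(mean(scores)))                          # average the scores, then convert
print(geometric_mean([impact(s) for s in scores]))   # equals the line above, up to rounding
print(mean([impact(s) for s in scores]))             # arithmetic mean of impacts is larger
```

Which aggregation to use is a design choice for implementers; averaging scores directly is the more conservative option when one reviewer is far more optimistic than the rest.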

Conclusion

In summary, expected utility forecasting presents a promising approach to improving the rigor of peer review and quantitatively defining the risk-reward profile of science proposals. Our pilot study suggests that this approach can be quite user-friendly for reviewers, despite its apparent complexity. Further study into how best to integrate forecasting into panel environments, define proposal milestones, and calibrate impact scales will help refine future implementations of this approach.

More broadly, we hope that this pilot will encourage more grantmaking institutions to experiment with innovative funding mechanisms. Reviewers in our pilot were more open-minded and quicker to learn than one might expect, and they saw significant value in this unconventional approach. Perhaps this should not be a surprise, given that experimentation is at the heart of scientific research.

Grantmakers, both public and private, and policymakers are welcome to reach out to our team to learn more or to receive assistance in implementing this approach.

Acknowledgements

Many thanks to Jordan Dworkin for being an incredible thought partner in designing the pilot and providing meticulous feedback on this report. Your efforts made this project possible!

Appendix A: Pilot Study Design

Our pilot study consisted of five proposals for life science-related Focused Research Organizations (FROs). These proposals were solicited from academic researchers by FAS as part of our advocacy for the concept of FROs. As such, these proposals were not originally intended for direct funding and did not have content requirements as strict as those of traditional grant proposals. Researchers were asked to submit one- to two-page proposals discussing (1) their research concept, (2) its motivation and expected social and scientific impact, and (3) the rationale for why this research cannot be accomplished through traditional funding channels and thus requires a FRO to be funded.

Permission was obtained from proposal authors to use their proposals in this study. We worked with proposal authors to define two milestones for each proposal for reviewers to assess: one that the authors felt confident they could achieve, and one that was more ambitious but that they still thought was feasible. In addition, because the proposals were brief, we included one to two pages of supplementary information and scientific context. Final drafts of the milestones and supplementary information were provided to authors to edit and approve. Because this pilot study could not provide any actual funding to proposal authors, it was not possible to solicit full-length research proposals from them.

We recruited four to six reviewers for each proposal based on their subject matter expertise. Potential participants were recruited over email with a request to help review a FRO proposal related to their area of research. They were informed that the review process would be unconventional but were not informed of the study’s purpose. Participants were offered a small monetary compensation for their time.

Confirmed participants were sent instructions and materials for the review process on the same day and were asked to complete their review by the same deadline a month and a half later. Reviewers were told to assume that, if funded, each proposal would receive $50 million in funding over five years to conduct the research, consistent with the proposed model for FROs. Each proposal had two technical milestones, and reviewers were asked to answer the following questions for each milestone:

- Assuming that the proposal is funded by 2025, will the milestone be achieved before 2031?

- What will be the average scientific impact score, as judged in 2032, of accomplishing the milestone?

- What will be the average social impact score, as judged in 2032, of accomplishing the milestone?

The impact scoring system was explained to reviewers as follows:

Please consider the following in determining the impact score: the current and expected long-term social or scientific impact of a funded FRO’s outputs if a funded FRO accomplishes this milestone before 2030.

The impact score we are using ranges from 1 (low) to 10 (high). It is base 2 exponential, meaning that a proposal that receives a score of 5 has double the impact of a proposal that receives a score of 4, and quadruple the impact of a proposal that receives a score of 3. In a small survey we conducted of SMEs in the life sciences, they rated the scientific and social impact of the average NIH R01 grant — a federally funded research grant that provides $1-2 million for a 3-5 year endeavor — on this scale to be 5.2 ± 1.5 and 3.1 ± 1.3, respectively. The median scores were 4.75 and 3.00, respectively.
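Because each additional point doubles impact, the ratio of impacts between two outcomes depends only on the difference between their scores. The minimal Python sketch below makes that arithmetic explicit; `relative_impact` is a hypothetical helper for illustration, not part of the pilot's tooling.

```python
def relative_impact(score_a: float, score_b: float) -> float:
    """Return how many times more impact score_a represents than score_b
    on a base-2 exponential scale, where each extra point doubles impact."""
    return 2 ** (score_a - score_b)


# The examples from the scoring instructions: 5 vs. 4 and 5 vs. 3.
print(relative_impact(5, 4))  # 2
print(relative_impact(5, 3))  # 4

# A 2.1-point gap, like the one between the survey's mean scientific (5.2)
# and social (3.1) scores for an R01 grant, is roughly a 4.3-fold difference.
print(f"{relative_impact(5.2, 3.1):.2f}")
```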

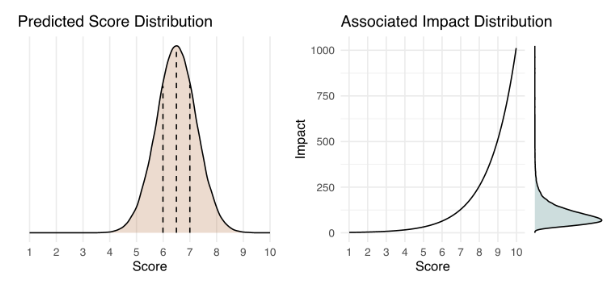

Below is an example of how a predicted impact score distribution (left) would translate into an actual impact distribution (right). You can try it out yourself with this interactive version (in the menu bar, click Runtime > Run all) to get some further intuition on how the impact score works. Please note that this is meant solely for instructive purposes, and the interface is not designed to match Metaculus’ interface.
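The interactive version is not reproduced here, but the same idea can be sketched in a few lines of Python. For illustration only, this sketch assumes that a reviewer's predicted impact score is normally distributed (with a hypothetical median of 6 and standard deviation of 1.5), clips samples to the 1 to 10 range, and converts each sample to impact with 2**score. It is not Metaculus' method.

```python
import random
import statistics


def sample_impacts(median_score, sd, n=10_000, seed=0):
    """Sample impact scores from a normal distribution (an illustrative
    assumption), clip them to the 1-10 scale, and convert to impact = 2**score."""
    rng = random.Random(seed)  # seeded so the example is reproducible
    impacts = []
    for _ in range(n):
        score = min(10.0, max(1.0, rng.gauss(median_score, sd)))
        impacts.append(2 ** score)
    return impacts


impacts = sample_impacts(median_score=6.0, sd=1.5)
print(f"2**median score: {2 ** 6.0:.0f}")
print(f"median impact:   {statistics.median(impacts):.1f}")
print(f"mean impact:     {statistics.mean(impacts):.1f}")
```

Because 2**score is convex, the impact distribution is right-skewed: its median stays near 2 raised to the median score (about 64 here), while its mean is pulled well above that by the upper tail.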

The choice of an exponential impact scale reflects the tendency in science for a small number of research projects to have an outsized impact. For example, studies have shown that the number of citations a journal article receives grows exponentially with its percentile rank.

Scientific impact aims to capture the extent to which a project advances the frontiers of knowledge, enables new discoveries or innovations, or enhances scientific capabilities or methods. Though each is imperfect, one could consider citations of papers, patents on tools or methods, or users of software or datasets as proxies of scientific impact.

Social impact aims to capture the extent to which a project contributes to solving important societal problems, improving well-being, or advancing social goals. Some proxy metrics that one might use to assess a project’s social impact are the value of lives saved, the cost of illness prevented, the number of job-years of employment generated, economic output in terms of GDP, or the social return on investment.

You may consider any or none of these proxy metrics as a part of your assessment of the impact of a FRO accomplishing this milestone.

Reviewers were asked to submit their forecasts on Metaculus’ website and to provide their reasoning in a separate Google form. For question 1, reviewers were asked to respond with a single probability. For questions 2 and 3, reviewers were asked to provide their median, 25th percentile, and 75th percentile predictions, in order to generate a probability distribution. Metaculus’ website also included information on the resolution criteria of each question, which provided guidance to reviewers on how to answer the question. Individual reviewers were blind to other reviewers’ responses until after the submission deadline, at which point the aggregated results of all of the responses were made public on Metaculus’ website.
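Reviewers supplied a median and interquartile range rather than a full distribution. One simple way to turn those three numbers into a distribution, sketched below in Python with the standard library's `statistics.NormalDist`, is to fit a normal distribution centered on the median whose standard deviation matches the interquartile range. This is an illustrative assumption, not necessarily how Metaculus' platform builds its distributions; the function name and example forecast are hypothetical.

```python
from statistics import NormalDist

# z-score of the 75th percentile of a standard normal (about 0.674)
Z75 = NormalDist().inv_cdf(0.75)


def fit_normal_from_quartiles(q25, median, q75):
    """Fit a normal distribution to a reviewer's 25th, 50th, and 75th
    percentile forecasts. Asymmetric quartiles are averaged into one spread."""
    if not (q25 <= median <= q75 and q25 < q75):
        raise ValueError("expected q25 <= median <= q75 with q25 < q75")
    sigma = (q75 - q25) / (2 * Z75)
    return NormalDist(mu=median, sigma=sigma)


# Hypothetical reviewer forecast for a milestone's scientific impact score.
dist = fit_normal_from_quartiles(q25=5.0, median=6.0, q75=7.0)
print(f"sigma = {dist.stdev:.2f}")
print(f"P(score > 8) = {1 - dist.cdf(8.0):.3f}")
```

A skewed forecast, such as quartiles of 5, 6, and 8, would need a skewed distribution (for example, a split normal) to be represented faithfully; this sketch simply averages the two half-widths of the interquartile range.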

Additionally, in the Google form, reviewers were asked to answer a survey question about their experience: “What did you think about this review process? Did it prompt you to think about the proposal in a different way than when you normally review proposals? If so, how? What did you like about it? What did you not like? What would you change about it if you could?”

Some participants did not complete their review. We received 19 complete reviews in the end, with each proposal receiving three to six reviews.

Study Limitations

Our pilot study had certain limitations that should be noted. Since FAS is not a grantmaking institution, we could not fully reproduce either the types of research proposals that a grantmaking institution would receive or its entire review process. Below, we highlight these differences relative to federal science agencies, which are our primary focus.

- Review Process: There are typically two phases to peer review at NIH and NSF. First, at least three individual reviewers with relevant subject matter expertise are assigned to read and evaluate a proposal independently. Then, a larger committee of experts is convened. There, the assigned reviewers present the proposal and their evaluation, and then the committee discusses and determines the final score for the proposal. Our pilot study only attempted to replicate the first phase of individual review.

- Sample Size: In our pilot, the sample size was quite small, since only five proposals were reviewed, and they were all in different subfields, so different reviewers were assigned to each proposal. NIH and NSF peer review committees typically focus on one subfield and review on the order of twenty proposals. The number of reviewers per proposal in our pilot (three to six) was consistent with the number typically assigned to a proposal by NIH and NSF. Peer review committees are typically larger, ranging from six to twenty people, depending on the agency and the field.

- Proposals: The FRO proposals plus supplementary information were only two to four pages long, which is significantly shorter than the 12- to 15-page proposals that researchers submit for NIH and NSF grants. Proposal authors were asked to describe their research concept in general terms, but were not explicitly required to describe the research methodology they would use or any preliminary research. Some proposal authors volunteered this information in the supplementary materials, but not all did.

- Grant Size: For the FRO proposals, reviewers were asked to assume that funded proposals would receive $50 million over five years, which is one to two orders of magnitude more funding than typical NIH and NSF proposals.

Appendix B: Feedback on Study-Specific Implementation

In addition to feedback about the review framework, we received feedback on how we implemented our pilot study, specifically the instructions and materials for the review process and the submission platforms. This feedback isn’t central to this paper’s investigation of expected utility forecasting, but we wanted to include it in the appendix for transparency.

Reviewers were sent instructions over email that outlined the review process and linked to Metaculus’ webpage for this pilot. On Metaculus’ website, reviewers could find links to the proposals on FAS’ website and the supplementary information in Google Docs. Reviewers were expected to read those first and then read through the resolution criteria for each forecasting question before submitting their answers on Metaculus’ platform. Reviewers were asked to submit the explanations behind their forecasts in a separate Google form.

Some reviewers had no problem navigating the review process and found Metaculus’ website easy to use. However, feedback from other reviewers suggested that the different components necessary for the review were spread out over too many different websites, making it difficult for reviewers to keep track of where to find everything they needed.

Some had trouble locating the different materials and pieces of information needed to conduct the review on Metaculus’ website. Others found it confusing to have to submit their forecasts and explanations in two separate places. One reviewer suggested that the explanation of the impact scoring system should have been included within the instructions sent over email rather than in the resolution criteria on Metaculus’ website so that they could have read it before reading the proposal. Another reviewer suggested that it would have been simpler to submit their forecasts through the same Google form that they used to submit their explanations rather than through Metaculus’ website.

Based on this feedback, we recommend that future implementations streamline their submission process onto a single platform and provide a more comprehensive set of instructions up front rather than scattering information across different steps of the review process. Training sessions, which science funding agencies typically conduct, would be a good supplement to written instructions.

Appendix C: Total Expected Utility Calculations

To calculate the total expected utility, we first converted all of the impact scores into utility by raising two to the power of the impact score, since the impact scoring system is base 2 exponential:

$$\text{Utility} = 2^{\text{Impact Score}}.$$

We could then average the utilities for each milestone and perform additional calculations.

To calculate the total utility of each milestone, $u_i$, we averaged the social utility and the scientific utility of the milestone:

$$u_i = \frac{\text{Social Utility} + \text{Scientific Utility}}{2}.$$
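The two steps above can be sketched in Python as follows. This is a minimal illustration, assuming each reviewer's forecast has already been reduced to a single impact score per dimension and that "average the utilities for each milestone" means averaging across reviewers (our reading, not confirmed by the text); the function names and example scores are hypothetical.

```python
from statistics import mean


def utility(impact_score: float) -> float:
    """Convert a base-2 impact score into utility: 2 ** score."""
    return 2 ** impact_score


def milestone_utility(social_scores, scientific_scores) -> float:
    """u_i: average the social and scientific utilities across reviewers
    (an assumption), then take the mean of the two averages."""
    social = mean(utility(s) for s in social_scores)
    scientific = mean(utility(s) for s in scientific_scores)
    return (social + scientific) / 2


# Hypothetical impact scores from three reviewers for one milestone.
u1 = milestone_utility(social_scores=[3, 4, 5], scientific_scores=[6, 6, 7])
print(f"u1 = {u1:.2f}")
```

Averaging happens in utility space, so a single high score contributes more than it would to a plain average of scores.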

The total expected utility (TEU) of a proposal with two milestones can be calculated according to the general equation:

$$TEU = u_1 P(m_1 \cap \text{not } m_2) + u_2 P(m_2 \cap \text{not } m_1) + (u_1 + u_2) P(m_1 \cap m_2),$$

where $P(m_i)$ represents the probability of success of milestone $i$ and

$$P(m_1 \cap \text{not } m_2) = P(m_1) - P(m_1 \cap m_2),$$

$$P(m_2 \cap \text{not } m_1) = P(m_2) - P(m_1 \cap m_2).$$
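The general two-milestone equation can be evaluated directly once a joint probability P(m1 ∩ m2) is chosen. The Python sketch below is a literal transcription of that equation and the two identities beneath it; the function name and example numbers are hypothetical, and how the joint probability is obtained (for example, under the sequential or independence assumptions described next) is left to the caller.

```python
def total_expected_utility(u1, u2, p1, p2, p_both):
    """TEU for two milestones, following the general equation:
    u1*P(m1 and not m2) + u2*P(m2 and not m1) + (u1 + u2)*P(m1 and m2)."""
    # The joint probability cannot exceed either marginal, and the
    # probability of at least one success cannot exceed 1.
    if not (0 <= p_both <= min(p1, p2) and p1 + p2 - p_both <= 1):
        raise ValueError("inconsistent probabilities")
    p1_only = p1 - p_both  # P(m1 ∩ not m2)
    p2_only = p2 - p_both  # P(m2 ∩ not m1)
    return u1 * p1_only + u2 * p2_only + (u1 + u2) * p_both


# Hypothetical inputs: u1 = 52, u2 = 200, P(m1) = 0.8, P(m2) = 0.3,
# and a joint probability P(m1 ∩ m2) = 0.25.
print(total_expected_utility(u1=52, u2=200, p1=0.8, p2=0.3, p_both=0.25))
```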

For sequential milestones, milestone 2 is defined as inclusive of milestone 1 and wholly dependent on the success of milestone 1, which means that

$$u_{2,seq} = u_1 + u_2,$$

$$P(m_2) = P_{seq}(m_1 \cap m_2),$$

$$P(m_2 \cap \text{not } m_1) = 0.$$

Thus, the total expected utility of sequential milestones can be simplified as

$$TEU = u_1 P(m_1) - u_1 P(m_2) + u_{2,seq} P(m_2)$$

$$TEU = u_1 P(m_1) + (u_{2,seq} - u_1) P(m_2).$$

This can be generalized to

$$TEU_{seq} = \sum_i (u_{i,seq} - u_{i-1,seq}) P(m_i),$$

where $u_{0,seq} = 0$.
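The generalized sequential formula reduces to a short loop over milestones. The sketch below assumes the inclusive utilities u_{i,seq} and success probabilities P(m_i) are listed in milestone order, with probabilities non-increasing because each milestone depends on the one before it; the function name and numbers are hypothetical.

```python
def teu_sequential(u_seq, p):
    """TEU_seq = sum_i (u_{i,seq} - u_{i-1,seq}) * P(m_i), with u_{0,seq} = 0.

    u_seq: cumulative (inclusive) utility of reaching each milestone, in order.
    p:     probability of reaching each milestone, in the same order.
    """
    if len(u_seq) != len(p):
        raise ValueError("u_seq and p must have the same length")
    if any(later > earlier for earlier, later in zip(p, p[1:])):
        raise ValueError("a dependent milestone cannot be more likely")
    total, previous_u = 0.0, 0.0
    for u_i, p_i in zip(u_seq, p):
        total += (u_i - previous_u) * p_i  # incremental utility times P(m_i)
        previous_u = u_i
    return total


# Two hypothetical sequential milestones: u_{1,seq} = 52, u_{2,seq} = 252.
print(teu_sequential([52, 252], [0.8, 0.3]))
```

With two milestones, the loop reproduces the two-milestone form above, u1P(m1) + (u2,seq - u1)P(m2).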

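Otherwise, substituting the two expressions for $P(m_1 \cap \text{not } m_2)$ and $P(m_2 \cap \text{not } m_1)$ into the general equation, the joint-probability terms cancel, and the total expected utility simplifies to

$$TEU = u_1 P(m_1) + u_2 P(m_2).$$

For independent outcomes, we assume

$$P_{ind}(m_1 \cap m_2) = P(m_1)P(m_2),$$

which fixes the probability of each combination of outcomes (for example, $P(m_1 \cap \text{not } m_2) = P(m_1)(1 - P(m_2))$) but leaves the total unchanged:

$$TEU_{ind} = u_1 P(m_1) + u_2 P(m_2).$$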

To present the results in Tables 1 and 2, we converted all of the utility values back into the impact score scale by taking the log base 2 of the results.
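Converting back is a base-2 logarithm. A minimal sketch using Python's `math.log2`, with a hypothetical TEU value and a hypothetical helper name:

```python
import math


def to_impact_score(utility_value: float) -> float:
    """Map a utility (or expected utility) back onto the base-2 impact scale."""
    if utility_value <= 0:
        raise ValueError("utility must be positive to take log base 2")
    return math.log2(utility_value)


# A hypothetical TEU of 101.6 corresponds to an impact score of about 6.67.
print(f"{to_impact_score(101.6):.2f}")
```

Because the logarithm is applied after utilities have been averaged, the resulting score can differ from a simple average of reviewers' impact scores; for a plain average of utilities, it is never lower (a consequence of Jensen's inequality).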

Working with academics: A primer for U.S. government agencies

Collaboration between federal agencies and academic researchers is an important tool for public policy. By facilitating the exchange of knowledge, ideas, and talent, these partnerships can help address pressing societal challenges. But because it is rarely in either party’s job description to conduct outreach and build relationships with the other, many important dynamics are often hidden from view. This primer provides an initial set of questions and topics for agencies to consider when exploring academic partnership.

Why should agencies consider working with academics?

- Accessing the frontier of knowledge: Academics are at the forefront of their fields, and their insights can provide fresh perspectives on agency work.

- Leveraging innovative methods: From data collection to analysis, academics may have access to new technologies and approaches that can enhance governmental efforts.

- Enhancing credibility: Policy decisions that incorporate research and external expertise gain legitimacy and trust, and align with evidence-based policy guidelines.

- Generating new insights: Collaboration between agencies and outside researchers can lead to discoveries that advance both knowledge and practice.

- Developing human capital: Collaboration can enhance the skills of both public servants and academics, creating a more robust workforce and potentially leading to longer-term talent exchange.

What considerations may arise when working with academics?

- Designing collaborative relationships that are targeted to the incentives of both the agency and the academic partners;

- Navigating different rules and regulations that may impact academic-government collaboration, e.g. rules on external advisory groups, university guidelines, and data/information confidentiality;

- Understanding the different structures and mechanisms that enable academic-government collaboration, such as sabbaticals, fellowships, consultancies, grants, or contracts;

- Identifying and approaching the right academics for different projects and needs.

Academic faculty progress through different stages of professorship (typically assistant, associate, and full) that affect their research and teaching expectations and opportunities. Assistant professors are tenure-track faculty who need to secure funding, publish papers, and meet the standards for tenure. Associate professors are typically, though not always, tenured; they have greater job security and academic freedom, but also more mentoring and leadership responsibilities. Full professors are senior faculty with high standing and recognition in their field, but they also face more demands for service and supervision. The nature of agency-academic collaboration may depend on the seniority of the academic. For example, junior faculty may be more available to work with agencies, but primarily in contexts that will lead to traditional academic outputs, while senior faculty may be more selective, but their academic freedom will allow for less formal and more impact-oriented work.

Soft money positions are those that depend largely or entirely on external funding sources, typically research grants, to support the salary and expenses of the faculty. Hard money positions are those that are supported by the academic institution’s central funds, typically tied to more explicit (and more expansive) expectations for teaching and service than soft-money positions. Faculty in soft money positions may face more pressure to secure funding for research, while faculty in hard money positions may have more autonomy in their research agenda but more competing academic activities. Federal agencies should be aware of the funding situation of the academic faculty they collaborate with, as it may affect their incentives and expectations for agency engagement.

A sabbatical is a period of leave from regular academic duties, usually for one or two semesters, that allows faculty to pursue an intensive and unstructured scope of work. This can include research in their own field or others, as well as external engagements or tours of service with non-academic institutions. Faculty accrue sabbatical credits based on their length and type of service at the university, and may apply for a sabbatical once they have enough credits. The amount of salary received during a sabbatical depends on the number of credits and the duration of the leave. Federal agencies may benefit from collaborating with academic faculty who are on sabbatical, as they may have more time and interest to devote to impact-focused work.

Consulting limits & outside activity limits are policies that regulate the amount of time that academic faculty can spend on professional activities outside their university employment. These policies are intended to prevent conflicts of commitment or interest that may interfere with the faculty’s primary obligations to the university, such as teaching, research, and service, and the specific limits vary by university. Federal agencies may need to consider these limits when engaging academic faculty in ongoing or high-commitment collaborations.

Some academic faculty are paid on a 9-month basis, meaning that they receive their annual salary over nine months and have the option to supplement their income with external funding or other activities during the summer months. Other faculty are paid on a 12-month basis, meaning that they receive their annual salary over twelve months and have less flexibility to pursue outside opportunities. Federal agencies may need to consider the salary structure of the academic faculty they work with, as it may affect their availability to engage on projects and the optimal timing with which they can do so.

Informal advising

Advisory relationships consist of an academic providing occasional or periodic guidance to a federal agency on a specific topic or issue, without being formally contracted or compensated. This type of collaboration can be useful for agencies that need access to cutting-edge expertise or perspectives, but do not have a formal deliverable in mind.

Academic considerations

- Career stage: Informal advising can be done by faculty at any level of seniority, as long as they have relevant knowledge and experience. However, junior faculty may be more cautious about engaging in informal advising, as it may not count towards their tenure or promotion criteria. Senior faculty, who have established expertise and secured tenure, may be more willing to engage in impact-focused advisory relationships.

- Incentives: Advisory relationships can offer some benefits for faculty regardless of career stage, such as expanding their network, increasing their visibility, and influencing policy or practice. Informal advising can also stimulate new research questions, and create opportunities for future access to data or resources. Some agencies may also acknowledge the contributions of academic advisors in their reports or publications, which may enhance researchers’ academic reputation.

- Conflicts of interest: Informal advising may pose potential conflicts of interest or commitment for faculty, especially if they have other sources of funding or collaboration related to the same topic or issue. Faculty may need to consult with their department chair or dean before engaging in formal conversations, and should also avoid any activities that may compromise their objectivity, integrity, or judgment in conducting or reporting their university research.

- Timing: Faculty on 9-month salaries might be more willing or able to engage during summer months, when they have minimal teaching requirements and are focused on research and impact outputs.

Regulatory & structural considerations

- Contracting: An advisory relationship may not require a formal agreement or contract between the agency and the academic. For some topics or agencies, however, it may require a non-disclosure agreement or consulting agreement if the agency wants to ensure the exclusivity or confidentiality of the conversation.

- Advisory committee rules: Depending on the scope and scale of the academic engagement, agencies should be sure to abide by Federal Advisory Committee Act regulations. With informal one-on-one conversations that are focused on education and knowledge exchange, this is unlikely to be an issue.

- University approval: An NDA or consulting agreement may require approval from the university’s office of sponsored programs or office of technology transfer before engaging in informal advising. These offices may review and approve the agreement between the agency and the academic institution, ensuring compliance with university policies and regulations.

- Compensation: Informal advising typically does not involve compensation for the academic, but it may involve reimbursement for travel or other expenses related to the advisory role. This work is unlikely to count towards the consulting limit for faculty, but it may count towards the outside professional activity limit, depending on the nature and frequency of the advising.

Federal agencies and academic institutions are subject to various laws and regulations that affect their research collaboration, and the ownership and use of the research outputs. Key legislation includes the Federal Advisory Committee Act (FACA), which governs advisory committees and ensures transparency and accountability; the Federal Acquisition Regulation (FAR), which controls the acquisition of supplies and services with appropriated funds; and the Federal Grant and Cooperative Agreement Act (FGCAA), which provides criteria for distinguishing between grants, cooperative agreements, and contracts. Agencies should ensure that collaborations are structured in accordance with these and other laws.

Federal agencies may use various contracting mechanisms to engage researchers from non-federal entities in collaborative roles. These mechanisms include the IPA Mobility Program, which allows the temporary assignment of personnel between federal and non-federal organizations; the Experts & Consultants authority, which allows the appointment of qualified experts and consultants to positions that require only intermittent and/or temporary employment; and Cooperative Research and Development Agreements (CRADAs), which allow agencies to enter into collaborative agreements with non-federal partners to conduct research and development projects of mutual interest.

Offices of Sponsored Programs (OSPs) are units within universities that provide administrative support and oversight for externally funded research projects. OSPs are responsible for reviewing and approving proposals, negotiating and accepting awards, ensuring compliance with sponsor and university policies and regulations, and managing post-award activities such as reporting, invoicing, and auditing. Federal agencies typically interact with OSPs as the authorized representative of the university in matters related to sponsored research.

When engaging with academics, federal agencies may use NDAs to safeguard sensitive information. Agencies each have their own rules and procedures for using and enforcing NDAs involving their grantees and contractors. These rules and procedures vary, but generally require researchers to sign an NDA outlining rights and obligations relating to classified information, data, and research findings shared during collaborations.

Study groups

A study group is a type of collaboration where an academic participates in a group of experts convened by a federal agency to conduct analysis or education on a specific topic or issue. The study group may produce a report or hold meetings to present their findings to the agency or other stakeholders. This type of collaboration can be useful for agencies that need to gather evidence or insights from multiple sources and disciplines with expertise relevant to their work.

Academic considerations

- Career stage: Faculty at any level of seniority can participate in a study group, but junior faculty may be more selective about joining, as they have limited time and resources to devote to activities that may not count towards their tenure or promotion criteria. Senior faculty may be more willing to join a study group, as they have more established expertise and reputation, and may seek to have more impact on policy or practice.

- Soft vs. hard money: Faculty in soft money positions, where their salary and research expenses depend largely on external funding sources, may be more interested in joining a study group if it provides funding or other resources that support their research. Faculty in hard money positions, where their salary and research expenses are supported by institutional funds, may be less motivated by funding, but more by the recognition and impact that comes from participating.

- Incentives: Study groups can offer some benefits for faculty, such as expanding their network, increasing their visibility, and influencing policy or practice. Study groups can also stimulate new research ideas or questions for faculty, and create opportunities for future access to data or resources. Some study groups may also result in publication of output or other forms of recognition (e.g., speaking engagements) that may enhance the academic reputation of the faculty.

- Conflicts of interest: Study groups may pose potential conflicts of interest or commitment for academics, especially if they have other sources of funding related to the same topic. Faculty may also be cautious about entering into more formal agreements if doing so may affect their ability to apply for and receive federal research funding in the future. Agencies should be aware of any such impacts of academic participation, and faculty should be encouraged to consult with their department chair or dean before joining a study group.

Regulatory & structural considerations

- Contracting and compensation: The optimal contracting mechanism for a study group will depend on the agency, the topic, and the planned output of the group. Some possible contracting mechanisms are extramural grants, service contracts, cooperative agreements, or memoranda of understanding. The mechanism will determine the amount and type of compensation that participants (or the organizing body) receive, and could include salary support, travel reimbursement, honoraria, or overhead costs.

- Advisory committee rules: When setting up study groups, agencies should work carefully to ensure that the structure abides by Federal Advisory Committee Act regulations. To ensure that study groups are distinct from Advisory Committees, these groups should be limited in size and should be tasked with providing knowledge, research, and education (rather than specific programmatic guidance) to agency partners.

- University approval: Depending on the contracting mechanism and the compensation involved, academic participants may need to obtain approval from their university’s office of sponsored programs or office of technology transfer before joining a study group. These offices may review the terms and conditions of the agreement between the agency and the academic institution, such as the scope of work, the budget, and the reporting requirements.

Case study

In 2022, the National Science Foundation (NSF) awarded the National Bureau of Economic Research (NBER) a grant to create the EAGER: Place-Based Innovation Policy Study Group. This group, led by two economists with expertise in entrepreneurship, innovation, and regional development — Jorge Guzman from Columbia University and Scott Stern from MIT — aimed to provide “timely insight for the NSF Regional Innovation Engines program.” During Fall 2022, the group met regularly with NSF staff to i) provide an assessment of the “state of knowledge” of place-based innovation ecosystems, ii) identify the insights of this research to inform NSF staff on design of their policies, and iii) surface potential means by which to measure and evaluate place-based innovation ecosystems on a rigorous and ongoing basis. Several of the academic leads then completed a paper synthesizing the opportunities and design considerations of the regional innovation engine model, based on the collaborative exploration and insights developed throughout the year. In this case, the study group was structured as a grant, with funding provided to the organizing institution (NBER) for personnel and convening costs. Yet other approaches are possible; for example, NSF recently launched a broader study group with the Institute for Progress, which is structured as a no-cost Other Transaction Authority contract.

Collaborative research

Active collaboration covers scenarios in which an academic engages in joint research with a federal agency, either as a co-investigator, a subrecipient, a contractor, or a consultant. This type of collaboration can be useful for agencies that need to leverage the expertise, facilities, data, or networks of academics to conduct research that advances their mission, goals, or priorities.

Academic considerations

- Career stage: Collaborative research is likely to be attractive to junior faculty, who are seeking opportunities to access data that might not be otherwise available, and to foster new relationships with partners. This is particularly true if there is a commitment that findings or evaluations will be publishable, and if the collaboration does not interfere with teaching and service obligations. Collaborative projects are also likely to be of interest to senior faculty — if work aligns with their established research agenda — and publication of findings may be (slightly) less of a requirement.

- Soft vs. hard money: Researchers on hard money contracts, where their salary and research expenses are supported by institutional funds, may be more motivated by the opportunity to use and publish internal data from the agency. Researchers on soft money contracts, where their salary and research expenses depend largely on external funding sources, may be more motivated by the availability of grants and financial support from the agency.

- Timing: Depending on the scope of the collaboration, and the availability of funding for the researcher, efforts could be targeted for academics’ summer months or their sabbaticals. Alternatively, collaborative research could be integrated into the regular academic year, as part of the researcher’s ongoing research activities.

- Incentives: As mentioned above, collaborative research can offer some benefits for faculty, such as access to data and information, publication opportunities, funding sources, and partnership networks. Collaborative research can also provide faculty with more direct and immediate impact on policy or practice, as well as recognition from the agency and stakeholders (and, perhaps to a lesser extent, the academic community).

Regulatory & structural considerations

- Contracting: The contracting requirements for collaborative research will vary greatly depending on the structure and scope of the collaboration, the partnering agency, and the use of internal government data or resources. Readers are encouraged to explore agency-specific guidance when considering the ideal mechanism for a given project. Some possible contracting mechanisms are extramural grants, service contracts, or cooperative research and development agreements. Each mechanism has different terms and conditions regarding the scope of work, the budget, the intellectual property rights, the reporting requirements, and the oversight responsibilities.

- Regulatory compliance: Collaborative research involving both governmental and non-governmental partners will require compliance with various laws, regulations, and authorities. These include but are not limited to:

- Federal Acquisition Regulation (FAR), which establishes the policies and procedures for acquiring supplies and services with appropriated funds;

- Federal Grant and Cooperative Agreement Act (FGCAA), which provides criteria for determining whether to use a procurement contract, a grant, or a cooperative agreement when the government enters into a relationship with a non-federal entity;

- Other Transaction Authority (OTA), a contracting mechanism that provides certain agencies with the ability to enter into flexible research and development agreements that are not subject to the regulations governing standard contracts, grants, or cooperative agreements;

- OMB's Uniform Guidance, which sets forth the administrative requirements, cost principles, and audit requirements for federal awards;

- Bayh-Dole Act, which allows academic institutions to retain title to inventions made with federal funding, subject to certain conditions and obligations.

- Collaborative research may also require compliance with ethical standards and guidelines for human subjects research, such as the Belmont Report and the Common Rule.

Case studies

External collaboration between academic researchers and government agencies has repeatedly proven fruitful for both parties. For example, in May 2020, the Rhode Island Department of Health partnered with researchers at Brown University's Policy Lab to conduct a randomized controlled trial evaluating the effectiveness of different letter designs in encouraging COVID-19 testing. This study identified design principles that improved uptake of testing by 25–60% without increasing cost, and it led to follow-on collaborations between the institutions.

The North Carolina Office of Strategic Partnerships provides a prime example of the steps government agencies can take to facilitate these collaborations. The office recently launched the North Carolina Project Portal, which serves as a platform for the agency to share its research needs and for external partners, including academics, to express interest in collaborating. Researchers are encouraged to contact the relevant project leads, who then assess interested parties' expertise and capacity, extend an offer for a formal research partnership, and initiate the project.

Short-term placements

Short-term placements allow for an academic researcher to work at a federal agency for a limited period of time (typically one year or less), either as a fellow, a scholar, a detailee, or a special government employee. This type of collaboration can be useful for agencies that need to fill temporary gaps in expertise, capacity, or leadership, or to foster cross-sector exchange and learning.

Academic considerations

- Career stage: Short-term placements may be more appealing to senior faculty, who have more established and impact-focused research agendas, and who may seek to influence policy or practice at the highest levels. Junior faculty may be less interested in placements, particularly if they are still progressing towards tenure — unless the position offers opportunities for publication, funding, or recognition that are relevant to their tenure or promotion criteria.

- Soft vs. hard money: Faculty in soft money positions may face more challenges in arranging a short-term placement if they have ongoing grants or labs to maintain. However, placements that come with external resources (e.g., established fellowships) could be an attractive option when ongoing commitments are manageable. The impact of hard money will depend largely on the type of placement and on whether institutional support or external resources are expected to cover a faculty member's time away from the university.

- Timing: Sabbaticals are an ideal time for short-term placements, as they allow faculty to pursue intensive research or external engagement without interfering with their regular academic duties. However, convincing faculty to use their sabbaticals for a short-term placement may require a longer discovery and recruitment period, as well as a strong value proposition that highlights the benefits and incentives of the collaboration. Because most faculty are subject to the academic calendar, June and January tend to be ideal start dates for this type of engagement.

- Incentives: Short-term placements can offer benefits for academics, such as having an impact on policy or practice, gaining access to new data or research areas, and building relationships with agency officials and other stakeholders. However, short-term placements can also involve some costs and/or risks for participating faculty, including logistical complications, relocation, confidentiality constraints, and publication restrictions.

Regulatory & structural considerations

- Contracting: Short-term placements require a formal agreement or contract between the agency and the academic. There are several contracting & hiring mechanisms that can facilitate short-term placement, such as the Intergovernmental Personnel Act (IPA) Mobility Program, the Experts & Consultants authority, Schedule A(r), or the Special Government Employee (SGE) designation. Each mechanism has different eligibility criteria, terms and conditions, and administrative processes. Alternatively, many fellowship programs already exist within agencies or through outside organizations, which can streamline the process and handle logistics on behalf of both the academic institution and the agency.

- Compensation: The payment of salary support, travel, overhead, and other costs will depend on the contracting mechanism and the agreement between the agency and the academic institution. Costs are generally covered by the organization expected to benefit most from the placement, which is often the agency itself. However, some authorities for facilitating cross-sector exchange (e.g., the IPA program and the Experts and Consultants authority) allow research institutions to cost-share or cover the expense of an expert's compensation when appropriate. External fellowship programs also sometimes provide funding to cover costs.

- Role and expectations: Placements, more so than informal collaborations, require clear communication and a shared understanding of the role and its expectations. The academic should be prepared to adapt to the agency's norms and processes, which will differ from those in academia, and to perform work that may differ from their typical contributions. They should also be aware of their rights and obligations as a federal employee or contractor.

- Confidentiality: Placements may involve access to confidential or sensitive information from the agency, such as classified data or personal information. Academics will likely be required to sign a non-disclosure agreement (NDA) that defines the scope and terms of confidentiality, and will often be subject to security clearance or background check procedures before entering their role.

Case studies

Various programs exist throughout government to facilitate short-term rotations of outside experts into federal agencies and offices. One of the best-known examples is the American Association for the Advancement of Science (AAAS) Science & Technology Policy Fellowship (STPF) program, which places scientists and engineers from various disciplines and career stages in federal agencies for one year to apply their scientific knowledge and skills to inform policymaking and implementation. The Schedule A(r) hiring authority tends to be well suited to these kinds of fellowships; for example, the Bureau of Economic Analysis uses it to bring on early career fellows through the American Economic Association's Summer Economics Fellows Program. In some circumstances, outside experts are brought into government “on loan” from their home institution to do a tour of service in a federal office or agency; in these cases, the IPA program can be a useful mechanism. IPAs are used by the National Science Foundation (NSF) in its Rotator Program, which brings outside scientists into the agency to serve as temporary Program Directors and bring cutting-edge knowledge to the agency's grantmaking and priority-setting. The IPA program is also used for more ad hoc talent needs; for example, the Office of Evaluation Sciences (OES) at GSA often uses it to bring in fellows and academic affiliates.

Long-term rotations

Long-term rotations allow an academic to work at a federal agency for an extended period of time (more than one year), either as a fellow, a scholar, a detailee, or a special government employee. This type of collaboration can be useful for agencies that need to recruit and retain expertise, capacity, or leadership in areas that are critical to their mission, goals, or priorities.

Academic considerations

- Career stage: Long-term rotations may be more feasible for senior faculty, who have more experience in their discipline and are likely to have more flexibility and support from their institutions to take a leave of absence. Junior faculty may face more barriers and risks in pursuing long-term rotations, such as losing momentum in their research productivity, missing opportunities for tenure or promotion, or losing connection with their academic peers and mentors.

- Soft vs. hard money: Faculty in soft money positions may find it easier to seek longer-term rotations, as external support is more in line with their institutions' expectations. Faculty in hard money positions may face difficulties seeking long-term rotations, as institutional provision of resources comes with expectations for teaching and service that administrations may be wary of pausing for extended periods of time.

- Timing: Long-term rotations require careful planning and coordination with the academic institution and the federal agency, as they may involve significant changes in the academic's schedule, workload, and responsibilities. These rotations may be easier to arrange during sabbaticals or other periods of leave from the academic institution, but they will often still require approval from the institution's administration. Because most faculty are subject to the academic calendar, June and January tend to be ideal start dates for sabbatical or secondment engagements.

- Incentives: Long-term rotations offer faculty an opportunity to gain valuable experience and insight into the impact frontier of their field or discipline, in terms of both policy and practice. These experiences can yield new skills or competencies that enhance academics' performance or career advancement, help them build strong relationships and networks with agency officials and other stakeholders, and make a lasting contribution to the public good. However, long-term roles also involve challenges for faculty, such as adjusting to a different organizational structure, balancing expectations from both the agency and the academy, and transitioning back into academic work and productivity following the rotation.

Regulatory & structural considerations

- Regulatory and structural considerations — including contracting, compensation, and expectations — are similar to those of short-term placements, and tend to involve the same mechanisms and processes.

- The desired length of a long-term rotation will affect how agencies select and apply the appropriate mechanism. For example, IPA assignments are initially made for up to two years and can then be extended for up to two more years when warranted, yielding a maximum continuous term of four years.

- Longer time frames typically require additional structural considerations. Specifically, extensions of mechanisms like the IPA may be required, or more formal governmental employment may be prioritized at the outset. Given that these types of placements are often bespoke, these considerations should be explored in depth for the agency’s specific needs and regulatory context.

Case study

One example of a long-term rotation that draws experts from academia into federal agency work is the Advanced Research Projects Agency (ARPA) Program Manager (PM) role. ARPA PMs (across DARPA, IARPA, ARPA-E, and now ARPA-H) are responsible for leading high-risk, high-reward research programs, and they have considerable autonomy and authority in defining their research vision, selecting research performers, managing their research budget, and overseeing their research outcomes. PMs are typically recruited from academia, industry, or government for a term of three to five years, and are expected to return to their home institutions or pursue other career opportunities after their term at the agency. PMs coming from academia or nonprofit organizations are often brought on through the IPA Mobility Program, and some entities also have unique term-limited hiring authorities for this purpose. PMs can also be hired as full government employees; this mechanism is primarily used for candidates coming from the private sector.

Incorporate open science standards into the identification of evidence-based social programs

Evidence-based policy uses peer-reviewed research to identify programs that effectively address important societal issues. For example, several federal agencies run clearinghouses that review and assess the quality of peer-reviewed research to identify programs with evidence of effectiveness. However, the replication crisis in the social and behavioral sciences raises concerns that research publications may contain an alarmingly high rate of false positives (rather than true effects), in part due to selective reporting of positive results. The use of open and rigorous practices, such as study registration and the availability of replication code and data, can help ensure that studies provide valid information to decision-makers, but these characteristics are not currently collected or incorporated into assessments of research evidence.

To rectify this issue, federal clearinghouses should incorporate open science practices into their standards and procedures used to identify evidence-based social programs eligible for federal funding.

Details