Advisory Committees for the 21st Century Recommendations Toolkit

From January 2024 to July 2024, the Federation of American Scientists interviewed 30 current and former Advisory Committee (AdComm) members. Based on these discussions, we were able to source potential policy recommendations for the executive level that may assist with enhancing the FDA’s ability to obtain valuable advice for evidence-based decision-making. In this toolkit, we build on those discussions by providing you with actionable policy reform recommendations. We hope that these recommendations catapult the Advisory Committee structure into one best suited to equip all AdComms with the tools needed to continue providing the government with the best advice.


Recommendations and Best Practices for Actionable Policy Implementation

Voting for FDA Advisory Committees

Update Type: Process

- Maintain voting as an integral function, allowing FDA Advisory Committee members to convey their collective expertise and advice, aiding the FDA in informed decision-making on scientific and regulatory matters.

- Revise the guidance for FDA Advisory Committee Members and FDA Staff to explicitly define circumstances for which voting should occur and eliminate sequential voting

Best Practices for Implementation

The United States Food and Drug Administration can uphold their voting mechanism by updating their document entitled, “Guidance for FDA Advisory Committee members and FDA staff: Voting Procedures for Advisory Committee Meetings” to include language that clearly states a vote should be taken at all Advisory Committee meetings where a medical product is being reviewed. This guidance should also indicate that the absence of voting should only occur if an Advisory Committee meeting has been convened to discuss issues of policy. Further, this guidance should be considered a level 2 guidance as it falls into the category of addressing a “controversial issue”. To effectuate these changes, a notice of availability (NOA) may be submitted to the Federal Register for public input (public input is not a requirement before implementation).

Potential Language to be Utilized for Guidance

In an effort to continue to allow Advisory Committee members to provide unbiased, evidence-based feedback and uphold such an integral part of the Advisory Committee process, voting is hereby mandatory for all Advisory Committee meetings that are convened where the purpose is to review and assess the safety and efficacy of medical products.

Involved Stakeholders

In order for this process to successfully occur, the FDA will need to amend their guidance with the aforementioned updates. Consideration should be given to incurred costs for personnel required to complete these updates. Personnel needed for these amendments may include, but are not limited to, the (a) Office of the Commissioner, Office of Clinical Policy and Programs, Office of Clinical Policy, (b) Center for Devices and Radiological Health, (c) Center for Biologics Evaluation and Research, and (d) Center for Drug Evaluation and Research.

FDA Staff, Leadership, and AdComm Disagreements

Update Type: Process

- Ensure that all FDA staff and leadership are fully cognizant of the existence and details of the Scientific Dispute Resolution at FDA guidance and the process for submitting disputes for review.

- Develop guidance that clearly explains a transparent process to communicate effectively with AdComm members regarding decision making when parties have opposing viewpoints

Best Practices for Implementation

Implementing these recommendations will improve conflict resolution internally and between the Agency and Advisory Committee members. Best practices for implementation include (a) building the Scientific Dispute Resolution at FDA guidance into the official FDA onboarding process for new hires to raise awareness, (b) providing annual employee trainings so that staff stay up to date with dispute resolution processes and procedures, and (c) developing a guidance that delineates the process for resolving conflicts between the Agency and Advisory Committees when there are differing opinions.

Note: Guidance for resolving disputes between the Agency and Advisory Committees should be submitted to the Federal Register for public comment. Guidance should also include plain language that designates the avenue to be used for official decision notifications, the timeliness of these notifications after convenings have concluded, and circumstances in which the FDA cannot notify Advisory Committees that their decision is in direct opposition to the Committee’s vote (e.g., if this notification would breach a confidentiality agreement with the applicant). Implementing a transparent process to communicate with AdComm members regarding differences between the Agency and the AdComm will not only improve morale between both parties but also encourage continued support of the AdComm.

Involved Stakeholders

Successful implementation of these recommendations will require the capacity of human resources personnel and individual center leadership.

Update Type: Regulatory

- Incorporate the Scientific Dispute Resolution at FDA guidance into FDA regulations

- Amend the Scientific Dispute Resolution at FDA guidance to dictate the mandatory execution of best practices within the dispute resolution process

- This guidance should identify additional non-biased parties (that may not be government-affiliated) to provide impartial guidance on complex scientific matters affecting public safety.

Best Practices for Implementation

Center leadership can assign FDA staff to make the necessary guidance amendments, which should include the requirements for inclusion in the onboarding of all FDA employees. Staff should also be responsible for obtaining feedback on amendments from all necessary internal parties and submitting proposed amendments to OMB for review and addition to the Federal Register. Federal Register comments will then be reviewed by FDA staff, guidance will be updated accordingly, and a final draft will be submitted to OMB for review. If approved, the final regulation will be published in the Code of Federal Regulations. Congressional involvement should not be necessary.

FDA Center leadership should delegate the task of creating an annual mandatory training program for all FDA employees to review this guidance in an effort to stay abreast of the procedures for dispute resolution.

Involved Stakeholders

FDA Center leadership, FDA staff, and the Office of Management and Budget (OMB) are the intended stakeholders for implementation of these recommendations. To incorporate this guidance into FDA regulations, Center leadership will need to assign FDA staff to amend guidance and submit to OMB.

Leveraging AdComm Membership

Update Type: Process

Expanding Committee Representation

- If there is flexibility and committee composition is not bound by law, include a patient representative and pharmacist and/or pharmacologist on each Committee

- Patient representatives provide a needed perspective due to lived experiences and understanding of how specific drugs and devices affect their day-to-day life

- Drugs and devices will usually pass through the hands of pharmacists and pharmacologists. Therefore, they should have the opportunity to serve on these Committees and provide feedback. Pharmacologists also understand the clinical application of drugs.

- Have a roster of temporary members that can be used when additional expertise is needed for Committee meetings or when there is a conflict of interest (COI)

Amplifying the Role of the Chair

- Expand the role of the Committee chair that will encompass the task of recruiting both standing and ad hoc members, as well as identifying prominent issues and products for Committee consideration, thereby allowing for specialized input from their Committee

Establishing Training and Regulatory Procedures for Incoming Members

- Institute a basic 101 training for all newly appointed Advisory Committee members that covers statistical analysis and clinical trial design and elucidates the partnership between the FDA and AdComm

- Include an overview of the regulatory process and how the FDA’s decision-making process is performed

Best Practices for Implementation

With respect to Committee composition, the FDA should consider adding patient representatives to all Committees that review medical products. The addition of a patient representative will ensure that the voice of the population who the medical product affects is heard. The FDA can select individuals best suited to fill these roles through connecting with patient advocacy organizations. If patient representatives are selected, the FDA should develop an onboarding program to familiarize the patient representative with basic knowledge of the federal regulation process. This program should educate the patient representative on (a) the types of questions presented to Advisory Committees, (b) how the FDA views the role of the patient representative in the process, (c) the internal review process for data that is submitted, and (d) other pertinent topics related to medical product regulation.

Leveraging the role of the Advisory Committee Chair can help the FDA fully optimize the use of their Committee. “Chairs possess extensive networks that could support the identification of permanent or temporary expert participants for AdComms” (Banks, 2024). Allowing Chairs the ability to identify relevant issues or products for their respective committees to review can provide an additional layer for the FDA to keep abreast of critical public concerns via appropriate committee evaluation (Banks, 2024).

Finally, while Committee members may be experts in their own right, training should be provided for all. The FDA should provide basic 101 training courses that can cater to the needs of members with various knowledge bases. Training should include information on the relationship between the FDA and Advisory Committee members, best practices for understanding statistical analysis, and the different types of clinical trial designs. Training should provide real-world examples of statistical analysis and trial design in use (this can be done by providing examples from prior medical product review).

Involved Stakeholders

FDA Center staff (including statisticians and scientists for the development of training programs).

Conflict of Interest (COI) Auditing

Update Type: Process

- Develop a process that can quickly replace individuals who have a known conflict of interest

- Clearly delineate criteria for committee service acceptance regarding individuals with potential or actual conflicts of interest

Best Practices for Implementation

To prevent recurring COIs, the FDA should develop a database of experts for various categories of expertise that can be selected to replace those with known COIs. This database should include names, contact information, credentials, all areas of expertise for each expert, and should link to public financial interest databases that can serve as a source for identifying conflicts (e.g., Open Payments, Dollars for Docs).
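The record structure and replacement lookup described above can be sketched in a few lines. This is a minimal illustration only, not an FDA system: the `Expert` fields, the `find_replacements` helper, and the sample names and sponsors are all hypothetical, and a real database would draw disclosed relationships from a public source such as Open Payments rather than from hard-coded values.

```python
from dataclasses import dataclass, field


@dataclass
class Expert:
    """One hypothetical roster record; field names are illustrative only."""
    name: str
    contact: str
    credentials: str
    expertise: set[str]
    # Sponsors with which the expert has a disclosed financial relationship,
    # as might be gathered from a public financial interest database.
    disclosed_sponsors: set[str] = field(default_factory=set)


def find_replacements(roster, needed_expertise, sponsor):
    """Return experts who cover the needed expertise and have no
    disclosed relationship with the sponsor under review."""
    return [
        e for e in roster
        if needed_expertise in e.expertise
        and sponsor not in e.disclosed_sponsors
    ]


# Made-up sample data for illustration.
roster = [
    Expert("Expert A", "a@example.org", "MD", {"oncology"}, {"Sponsor X"}),
    Expert("Expert B", "b@example.org", "PharmD", {"oncology", "pharmacology"}),
    Expert("Expert C", "c@example.org", "PhD", {"biostatistics"}),
]

candidates = find_replacements(roster, "oncology", "Sponsor X")
print([e.name for e in candidates])
```

Keeping expertise and disclosed relationships as sets makes the screening step a simple membership check, so a conflicted member could be swapped for a qualified alternate without a manual search.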

To prevent public confusion, the FDA should amend their Guidance for the Public, FDA Advisory Committee Members, and FDA Staff: Public Availability of Advisory Committee Members’ Financial Interest Information and Waivers to specify circumstances that warrant a COI waiver being administered. This will increase transparency and help Advisory Committee members and the public understand the reasoning in allowing members with conflicts to participate in meetings. This guidance can then be submitted to the Federal Register for public comment.

Update Type: Process | Regulatory

- Streamline the COI process to prevent duplicative work that may act as a deterrent to experts volunteering to serve on the AdComm (work with GSA on this matter if necessary)

Best Practices for Implementation

Streamlining the COI process will assist the FDA with retention efforts for Advisory Committees while maintaining compliance with conflict of interest regulations. A digital system should be developed that allows Advisory Committee members to select whether their financial information has changed through the use of a dropdown or check box. This will prevent duplicative work and also contribute to a sustainable (green) process.
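As a rough sketch of the "has anything changed?" check box described above, the logic could carry a prior disclosure forward unless the member indicates a change. The `Disclosure` record and `renew_disclosure` function are hypothetical names used for illustration, and the sketch assumes disclosures are renewed once per cycle.

```python
from dataclasses import dataclass, replace
from datetime import date


@dataclass(frozen=True)
class Disclosure:
    """Hypothetical record of a member's most recent financial disclosure."""
    member: str
    financial_interests: tuple[str, ...]
    confirmed_on: date


def renew_disclosure(previous, today, changed, new_interests=None):
    """Carry the prior disclosure forward when the member checks 'no change';
    require a fresh list of interests only when something has changed."""
    if not changed:
        # Same interests, new confirmation date: no re-entry of data needed.
        return replace(previous, confirmed_on=today)
    if new_interests is None:
        raise ValueError("updated interests are required when 'changed' is checked")
    return Disclosure(previous.member, tuple(new_interests), today)


# Made-up sample data for illustration.
prior = Disclosure("Member A", ("Stock: Company X",), date(2023, 1, 15))
renewed = renew_disclosure(prior, date(2024, 1, 15), changed=False)
print(renewed)
```

Under this design the member re-enters financial information only when it has actually changed, which is the duplicative work the recommendation aims to remove.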

Involved Stakeholders

The Office of the Commissioner, Office of Clinical Policy and Programs, Office of Clinical Policy would be the interested stakeholder to issue updates to the COI policy and would work with the General Services Administration if necessary.

The Role of Patient Advocacy in the AdComm Process

Update Type: Process

- Dedicate staff to identifying crucial public comments from patient advocates that should be considered for regulatory decision-making

- Include a patient representative on all committees that are reviewing medical products

- If possible, ensure that patient representatives selected have basic knowledge of the federal regulation process

- Establish criteria by which all public comments must abide

- This can be done by creating a checklist for the public to review and consider before forming their comment and that clearly delineates the mandatory criteria that a medical product must meet to be considered for approval

- A disclaimer can also be added to state that there is a specific threshold or sample size of a population who must benefit from the medical product

- Promote patient-focused medical product development by incorporating patient perspectives into the life cycle of the regulatory process

- This can be done through hosting patient town hall discussions for areas such as rare diseases and expanding initiatives such as the Patient Focused Drug Development (PFDD) public meetings

- Make patient engagement ongoing, rather than only allowing patient engagement quarterly or annually as done with most FDA-led programs and initiatives

Best Practices for Implementation

Public comments are a crucial part of the regulatory process. The FDA should focus on increasing participation in this process, as well as notifying participants of what should be expected from the public comment period. By law, the FDA must allow a public comment period for all Advisory Committee convenings. To increase participation, the FDA should allow public comments to be vocalized in-person and virtually, in addition to the submission of public comments to the docket.

Regarding Committee composition, please refer to Best Practices for Implementation under Leveraging AdComm Membership for information on the addition of patient representatives to Advisory Committees (see prior section above).

Patient engagement in the regulatory process is necessary to inform evidence-based decisions. While the FDA currently has ways to engage these communities through the use of public comment and initiatives such as Patient Focused Drug Development (PFDD), engagement should be an ongoing process. The FDA can develop and leverage existing relationships with public health agencies and advocacy organizations, which can then serve as liaisons for feedback to the FDA. The FDA can also consider expanding their current initiatives and programs to engage communities twice a quarter instead of quarterly or annually. Consistent engagement in this form will help to establish trust between the FDA and the public it serves, as well as give the Agency the needed information from the communities most impacted by its decisions.

Involved Stakeholders

Implementation of these recommendations and expansion of current initiatives will require the involvement of FDA Center leadership and Center staff.

Improving Public Awareness and Understanding of Advisory Committees

Update Type: Process

- FDA can leverage social media platforms to increase awareness and understanding of AdComms by disseminating information (engagement, ads, etc.)

- FDA should include a disclaimer on all communications and marketing materials regarding AdComms

- These disclaimers should also be made at all public meetings

- Disclaimers should emphasize the purpose of AdComm votes (disclaimer should state that votes allow AdComm members to provide an official stance to the FDA as experts, but those votes are also non-binding)

- Develop a webpage that allows people to be placed on a listserv regarding upcoming meetings

- Partner with state and local public health agencies and advocacy organizations to spread awareness

Best Practices for Implementation

The FDA should develop a monthly content plan to utilize its current interactive and social media outlets and disseminate information related to the role of Advisory Committees and their convenings, while also maintaining compliance with the FDA’s social media policy. The social media content plan should be centered around (a) what an Advisory Committee is, (b) how members are selected, (c) information regarding votes of Advisory Committees and how they are specific to safety and efficacy, but are not voting on the approval of a medical product, (d) discussing upcoming Advisory Committee meetings, their location, and inviting the public to participate via public comment, (e) sharing information about what a public comment is, requirements for making public comments, and how the FDA reviews them, and (f) sharing a webpage where the general public can input their personal email to be notified of upcoming Advisory Committee meetings.

FDA staff should develop a plain language disclaimer to be placed on all social media posts, meeting materials, and websites related to Advisory Committees. This disclaimer should illustrate that Advisory Committee members will provide unbiased expertise to assist the FDA with their decision. However, while the Committee’s vote is included in consideration for the decision, their vote is non-binding which leaves the FDA as the final decision maker for approval.

Finally, the FDA should identify state/local public health agencies, as well as advocacy organizations that they can potentially partner with to disseminate information more broadly. These agencies and advocacy organizations have strong relationships with various communities who should be engaged in the regulatory process. Developing a relationship with these agencies and organizations in an attempt to engage the community will assist the FDA with building connections and trust, as well as mutual understanding of Advisory Committee roles. Potential partners can be identified using the linked list under involved stakeholders.

Involved Stakeholders

Implementation of these recommendations and expansion of current initiatives will require the involvement of FDA Center leadership, Center staff, Office of External Affairs (OEA) Web and Digital Media staff, Office of Information Management and Technology (OIMT), state/local public health departments, and advocacy organizations.

Note: These listings of state/local public health departments and advocacy organizations are intended to be used as a starting point in the identification of potential partners and not to be considered an exhaustive list.

For questions related to this toolkit, please contact

Cheri Banks

Health Regulatory Specialist

cbanks@fas.org

Grace Wickerson

Health Equity Policy Manager

gwickerson@fas.org

Reclaiming Privacy Rights: A Roadmap for Organizations Fighting Digital Surveillance

Surveillance has been used on civil rights activists, organizations, and protesters for decades by federal and local law enforcement. Some past victims of government spying include Martin Luther King Jr., Angela Davis, Jane Fonda, the American Indian Movement, the United Farm Workers, and the National Lawyers Guild. These activists and organizations were subjected to traditional surveillance tactics such as wiretapping and infiltration.

Today, surveillance looks different as technological advances have made it increasingly easy to track someone’s whereabouts, communications, and inner thoughts based on browser history, all without leaving an office. This level of digital surveillance has a chilling effect on people’s First Amendment rights, because a person may choose to censor themselves online or be reluctant to engage in political expression, such as attending a protest, due to their fear of being watched and retaliated against.

This report is the result of research that tries to answer the fundamental question: what can civil society do to fight back against the growing trend of widespread digital surveillance, particularly in the state of New York? New York is the focus of this research project because of the state’s widespread use of surveillance technology, particularly in New York City, and the strong activism within the state that works to improve the lives of marginalized communities.

Social justice organizations play an instrumental role in society through their organizing and fighting for civil rights. This report provides these organizations information on current surveillance practices and how these practices may impact the communities that they serve. The first section of the report provides a short roadmap on the recent history of digital surveillance in different contexts such as immigration, environmental justice, the criminal legal system, housing, and the workplace. The next section discusses pending and finalized legislation that could help or hinder efforts to eliminate surveillance. The third section describes strategies organizations can take to help combat surveillance in their communities. Lastly, the report provides a list of legal organizations that are well versed in this arena and attuned to technological advances.

A Current History of Digital Surveillance

Before diving into action, it’s important to provide an overview of the types of surveillance that many communities may be subjected to. This section will demonstrate how widespread surveillance is and provide background stories on the surveillance activist communities face within the immigration, environmental rights, criminal legal system, housing, and workplace context.

Immigration

There have been a growing number of surveillance tactics used against activists, migrants, journalists, and attorneys in the immigration space. In 2019, NBC 7 San Diego reported that federal agencies were keeping and sharing a secret database of an attorney, journalists, organizers, and “instigators” who had previously worked at the U.S.-Mexico border. The database contained photos of each person, obtained from the person’s passport or social media accounts. It also included personal information such as the person’s work and travel history, names of their family members, and the kind of vehicle they drive. Some of these individuals reported that while traveling across the border, they were targeted for secondary screening. Border agents took their electronic devices and some individuals believed that the agents performed a warrantless search of their device, though they were unable to verify this. Journalists reported that these invasive actions affected their ability to protect their confidential sources. It’s easy to imagine that this unfounded suspicion and investigation could deter activists and journalists from continuing their work.

This isn’t the only incident of ICE keeping an eye on activists. In July 2021, The Intercept reported that U.S. Immigration and Customs Enforcement (ICE) had been surveilling activists and advocacy groups, such as Project South and Georgia Detention Watch, online and in person. This was done under the guise of safety and security, as an ICE spokesperson stated, “[l]ike all other law enforcement agencies, ICE follows planned protests to ensure the safety and security of its infrastructure, personnel, officers and all those involved.” Internal emails revealed that ICE officials were using Facebook to follow advocacy groups and that ICE was tracking the attendees of their events.

Migrants have also been subjected to government surveillance. Over the last several years, ICE has increased its use of electronic monitoring as an alternative to holding migrants in detention centers. As of March 2024, 183,935 people have been subjected to electronic monitoring by ICE, with 18,518 of those required to wear GPS ankle monitors. In 2018, ICE launched SmartLINK, an app that allows the agency to track a migrant’s whereabouts. As of April 2024, ICE has monitored over 700,000 people through the app. The agency requires migrants to do periodic check-ins using SmartLINK to confirm the user’s identity through voice recognition, geolocation, and facial recognition technology (FRT). The app has access to the user’s phone camera and has the ability to record audio. If a migrant complies with their check-ins for around 14 to 18 months, ICE may remove the person from the app to make room for new migrants who have just arrived in the country. Users of the app have expressed concern about the app’s location tracking, as it may put their undocumented family members at risk. Users have also stated that the app feels just as restrictive and invasive as an ankle monitor. Thirteen immigrant rights organizations found that electronic monitoring is not only harmful to the user’s livelihood but also hampers their personal relationships and their ability to organize in their community.

Surveillance in the immigration space interferes with the ability of migrants to organize and affects journalistic reporting. It also has the tendency to make migrants afraid of being a part of a community or spending time with their undocumented family members because they are aware that they are being watched. This kind of surveillance puts everyone in their circle at risk.

Criminal Legal System

Surveillance has been used in the criminal legal system for decades, as police often use various spying tools to investigate suspects. However, whereas before police would use agents to track a suspect’s movements, today, law enforcement is able to track a suspect from their desk. Law enforcement has been able to use private companies to obtain a person’s personal data such as their cell phone records, location data, web browsing history, and more. This tracking is not limited to suspects, as law enforcement agencies have been reported to subject activists to this level of surveillance as well.

In 2018, Memphis police were accused of spying on Black activists from 2016 until 2017. Memphis Police Department’s Office of Homeland Security (MPD) was accused of creating a Facebook profile to monitor activists in the area. There was one incident in which a community organizer posted a book on their page, and MPD collected the names of everyone who liked the post. With that list, they created a dossier of those individuals and called it “Blue Suede Shoes”. MPD is far from the only law enforcement agency that has collected a list of organizers, but it is unclear what happens with these lists after they’ve been created.

During the 2020 protests, the world experienced a new level of surveillance at the hands of local law enforcement and federal agencies. In 2021, it was reported that six federal agencies used FRT during the 2020 Black Lives Matter (BLM) protests across the United States. The agencies admitted that they did use this technology to identify individuals but they stated it was used to identify those who they suspected had violated laws. In one instance, police officers were able to arrest a protester after using FRT and receiving a match. NYPD has also been accused of using the technology to identify protesters after the event and charge them with crimes.

Environmental Justice

Surveillance has also been found in the environmental justice space, from both law enforcement and private companies. Shanai Matteson is an artist and climate activist based in Palisade, Minnesota. In 2021, Matteson spoke at the “Rally for the Rivers” event, which was organized to protest a pipeline construction project. At the conclusion of the rally, 200 people left and went to the construction site to protest. At some point, the police arrested a number of protestors, although Matteson was not one of them. However, five months later, law enforcement officials found livestream videos of the event, identified who was at the rally, and charged Matteson with a misdemeanor, accusing her of conspiring to trespass.

During the Dakota Access Pipeline protests, we saw a private company conducting mass surveillance on individuals in an unprecedented way. In 2016, the private security firm TigerSwan was hired by Energy Transfer Partners to surveil Dakota Access Pipeline protesters. TigerSwan monitored protesters’ social media posts, utilized aerial surveillance, employed informants, and used radio eavesdropping to spy on activists. TigerSwan used this information to make lists of “persons of interests” and pressure law enforcement to be more aggressive against the protesters. The firm also shared their intel with local law enforcement agencies and provided evidence to prosecutors to help them build cases against the protesters. After learning that Lee County, Iowa increased bail for protesters, TigerSwan stated in one of their documents that they needed to work more closely with other counties to make sure protesters would be fined or arrested in order to deter them. Because TigerSwan is a private company, it was able to conduct this level of mass surveillance on protesters without much government or judicial oversight.

Housing

One of the areas where people may least expect surveillance is within their housing; however, those in private and public housing may deal with this issue in the near future. Between 2018 and 2019, residents of a Brooklyn apartment complex organized and resisted their landlord’s attempts to install facial recognition cameras within the building. In retaliation, the landlord threatened the organizers with loitering fines and told them, wrongly, that handing out flyers to fellow residents was unlawful behavior. The apartment complex justified their actions by stating that this technology would provide safety and security for their residents.

Public housing facilities have also been accused of installing surveillance systems in their communities without the consent of residents. Some of these systems contain FRT or other forms of artificial intelligence. In Scott County, Virginia, cameras at a public housing facility have FRT that searches for people barred from the facility. In New Bedford, Massachusetts, a surveillance system searches through hours of recordings to locate movement near the doorways in order to identify residents who violate overnight guest rules. The footage has been used to punish and evict residents, who may have a difficult time securing housing in the future as a result of their eviction. While the cameras are only installed in public spaces within these facilities, they still violate people’s privacy rights as residents and their guests are tracked walking to and from their homes, a place that many people consider sacred.

Workplace Surveillance

Workers have been subjected to increasing surveillance over the last few years and one of the most infamous infringers is Amazon. The company has been accused of deploying many tactics in order to stop union organizing such as monitoring employee message boards and private Facebook groups. Amazon has also been accused of posting a job for an intelligence analyst who would be in charge of monitoring labor organizing threats.

Amazon has several resources within their facilities to monitor their employees such as employee ID badges which can be used to track an employee’s location and can allow the company to discover which of their employees are participating in organizing. Amazon facilities have surveillance cameras that are capable of allowing supervisors to track their workers and human monitors who walk around the facilities in order to keep an eye on the workers. Amazon has been accused of identifying union organizers and rotating them throughout the workplace, to prevent the organizers from having prolonged contact with the same employees. One source stated that workers were not allowed to socialize with each other as a manager would come and break them up.

Whole Foods, which is owned by Amazon, has also been accused of using surveillance to track union organizing. It was reported in 2020 that Whole Foods was using a heat map to track stores that could be at risk of unionization based on the distance from the store to the closest union, diversity within the store, team member sentiment, and additional factors.

Digital Surveillance: Where we are now

There have been a few promising federal and state bills introduced in the last few years that would provide vast protections for activists and journalists. On the other hand, there are also recent bills that have been passed that would increase government surveillance and cause more harm to these communities. This section provides a brief overview on where things currently stand.

Federal Legislation

In April 2021, U.S. Senators Ron Wyden (D-OR), Rand Paul (R-KY), and 18 additional senators introduced the Fourth Amendment is Not For Sale Act. For years, data brokers have been able to sell people’s personal information, such as their location data, to law enforcement and intelligence agencies without judicial oversight. Federal law fails to protect people’s data from being sold in this manner, so this bill would close this legal loophole and require the government to obtain a court order to buy a person’s data. This bill would prohibit law enforcement agencies from purchasing a person’s information from a third party, prohibit government agencies from sharing a person’s records with law enforcement and intelligence agencies, and require the agencies to obtain a court order before obtaining someone’s records. This bill was passed in the House and received by the Senate in April 2024 with little movement since then.

Another promising bill is the Protect Reporters from Exploitive State Spying (PRESS) Act, which was introduced in June 2023 by U.S. Senators Ron Wyden (D-OR), Mike Lee (R-UT), and Richard Durbin (D-IL). Law enforcement agencies have been secretly obtaining subpoenas for reporters’ emails and phone records in order to determine their confidential sources. The bill would protect a reporter’s data that is held by a third party from being secretly obtained by the government without the reporter having an opportunity to challenge the subpoena. As of now, this bill has passed the House and has been received in the Senate and referred to the Committee on the Judiciary.

On the opposite end of the spectrum, legislation has been passed that expands surveillance, such as the National Security Supplemental Appropriations Act, which was introduced in February 2024 and passed in April 2024. The bill provides $204 million to the FBI for DNA collection at the border. Another $170 million goes towards autonomous surveillance towers, mobile video surveillance systems, and drones at the border.

Digital Surveillance in New York State

Turning to New York specifically, there has been some positive movement towards obtaining information on the prevalence of government surveillance and curtailing the recent overreach as well. Recently, the NYPD was ordered by the New York Supreme Court to disclose 2,700 documents and emails related to its surveillance of the 2020 BLM protests between March and September 2020. This information can provide some clarity into the mystery around what surveillance tools were used during this time period, since much of the information known about this time period has come from FOIA requests instead of the NYPD voluntarily disclosing their surveillance practices.

In 2020, the Public Oversight of Surveillance Technology (POST) Act passed. This act required the NYPD to disclose the surveillance tools it uses and publish the impact of those technologies. NYPD is required to publish reports on these surveillance tools, informing the public about how it plans to use these tools and the potential impacts on New Yorkers’ civil liberties and rights. The Brennan Center has written about the shortcomings of the law, largely due to the NYPD failing to adhere to the provisions. In February 2024, a bill adding provisions to the POST Act was introduced to the New York City Council. The provisions would require NYPD to provide the Department of Investigation a list of all surveillance technologies currently in use and provide their retention policies for the information they collected from the technologies. This bill was referred to the Committee on Public Safety in February 2024.

How to Take Action Against Surveillance

There are numerous ways organizations can take action in order to combat the use of mass surveillance in their communities. This section will provide a few examples of actions that organizations can undertake in protecting their community right now, such as legislative action, forming working groups, sharing protest safety procedures, conducting Freedom of Information Act (FOIA) requests, and spreading the word.

Legislation

As demonstrated above, legislation can provide a promising avenue towards ending the overreach of widespread government surveillance of vulnerable communities. It’s important for organizations to have journalists who are willing to report on the issues their community may be facing, such as in the immigration space. The PRESS Act can help journalists who travel to the U.S.-Mexico border to report on issues affecting migrants and humanitarian organizations. Unfortunately, these journalists have been subjected to intimidation tactics while working on their stories which may prevent them from continuing their work. The PRESS Act would prevent government agencies from secretly obtaining subpoenas for reporters’ sources, but there is additional legislation needed to prevent law enforcement agencies from targeting journalists, activists, and attorneys who are providing assistance to migrants. Law enforcement should be prevented from performing warrantless searches, interrogating these individuals about their work without just cause, and creating dossiers of these individuals with illegitimately obtained personal information.

Legislation would also immensely benefit future protesters exercising their rights to free speech and assembly, and could have prevented many harms that occurred during the BLM protests. Since those protests, a few states and around 18 cities, such as Boston and Portland, have passed legislation banning government agencies from using FRT or laying out restrictions on how the technology can be used. But years later, some of these governments, such as New Orleans and Virginia, which initially banned local police from using the tool, rolled back this legislation and allowed law enforcement to use the technology to investigate crimes. Vermont, a state that previously had a near complete ban on police use of FRT, passed legislation that would allow the police to use it for investigations in certain instances. Pushes can be made in New York and elsewhere to persuade legislators to care about privacy concerns as much as they care about crime.

Legislation can also be pushed to prevent government agencies from surveilling residents in public housing while they are at their homes. Additionally, legislation can prevent law enforcement agencies from making dossiers of individuals based on the content the person follows or likes on social media. There is a lot of room for growth in this arena since the law has failed to keep up with technological advances. Advocacy organizations can propose or draft bill text with other organizations, meet with legislators, or sign onto letters in support or opposition of pending bills related to digital surveillance and data privacy rights.

Form a local working group to review proposed technology

In 2020, Syracuse mayor Ben Walsh formed the Syracuse Surveillance Technology Working Group, which provides residents an opportunity to comment on proposed uses of surveillance technology by city departments. The group is composed of 12-15 individuals from different community groups in Syracuse, as well as some City of Syracuse employees that are selected by the mayor.

When a city department is interested in utilizing a technology, they submit the request to the working group for review. The group advertises to the public through social media and local news channels to get widespread input. The group obtains comments from the public about their opinion and concerns about the technology and the group conducts their own research as well. The group then produces a report for the city with recommendations and explains how the technology may affect the Syracuse community. The mayor then approves or disapproves of the technology based on the report. Thus far, the group has reviewed automated license plate readers, body-worn cameras, street cameras, and more.

This working group provides the public an opportunity to conduct their own research on the proposed technologies and voice their opinions in a public forum. With many local government agencies wanting to explore the use of technologies like facial recognition, this could give activists a chance to have their opinions heard on these issues before they are implemented. This working group concept could be incorporated in other cities and provide some oversight and input into surveillance technologies that local agencies are utilizing on their residents.

Share protest safety procedures

There are a few measures organizations can recommend to help individuals protect their privacy while they are at a protest. The Surveillance Technology Oversight Project has done a wonderful job creating a safety guide for protesters who wish to protect their digital privacy while organizing. The guide provides information on protecting location data, DNA, and cell phone data. Some of the tips include turning one’s cell phone on airplane mode so that location cannot be tracked, considering what information one posts and shares on social media since it can be observed, and considering what transportation one takes to the protest since vehicles could be tracked via automated license plate readers. This information could be shared by organizations within their communities to ensure activists are doing what they can to protect their own information as well as that of their fellow activists. Following these recommendations could prevent activists from being unjustly targeted by law enforcement, such as in the case of Shanai Matteson, the climate activist and artist in Minnesota referenced earlier.

FOIA requests

Another avenue organizations may want to explore is FOIA requests, which can help an organization and the public understand what kind of surveillance their community is being subjected to. There is a cloud of secrecy surrounding which tools government agencies use to surveil people, largely because agencies refuse to share this information with the public without legal force. As stated above, the NYPD was recently ordered to turn over records that would reveal how they used FRT against BLM protesters. It is essential to have this kind of information as it will help organizations discover how law enforcement utilized this tool and help organizations fight against future use. Almost all of the stories featured above were derived from an organization submitting a FOIA request and obtaining internal documents that revealed how communities were being harmed by a government agency.

Sometimes, a party may refuse to comply with a FOIA request and the situation will escalate to legal action. As an example, in 2024, Just Futures Law, Mijente Support Group, and the Samuelson Clinic filed a lawsuit to force ICE to comply with a 2021 FOIA request that ICE failed to respond to. Because these situations can turn contentious, it’s important to have legal support when pursuing a FOIA request, which can come from an attorney, a law firm, or a law school clinic.

Spread the word

In order to combat these issues, people have to be informed about the mass surveillance that they are subjected to on a daily basis. Many people have expressed the sentiment “If you’re not doing anything wrong, you have nothing to hide”; however, they may not be fully versed on the implications of surveillance on vulnerable communities who have done nothing to warrant this invasion into their privacy. Some ways to spread the word can include holding public meetings on various surveillance topics with speakers, organizing against local surveillance tactics and publicizing the action, speaking with community members to see if they’ve noticed any surveillance tactics in their neighborhood, and working with other social justice, tech, or legal organizations. As stated above, a legal organization or clinic can help social justice organizations litigate FOIA requests that are not complied with as well as provide assistance with other kinds of litigation as needed. Social justice organizations can also work with think tank organizations to produce reports on civil rights violations and inform the public of rising issues. After the report is released, organizations can sign onto a letter calling on the government to stop an action or support an action.

Conclusion

There is much work to be done in the digital privacy space as the law has failed to keep up with the advancement of technology and rising surveillance concerns. However, everyone is capable of becoming well-versed in these issues, pushing for change on the state and federal level, and spreading the word throughout their communities. Privacy can be nearly impossible to achieve on an individual level, but together we can fight against efforts that degrade, dehumanize, and obstruct freedoms in our society.

Appendix 1. Legal Organizations to Know

Legal organizations in the privacy space are well versed on surveillance issues and could help social justice organizations know where to turn when individuals they serve come under surveillance. The following organizations are prominent in the privacy rights space and are performing groundbreaking work to combat government overreach.

ACLU

The ACLU has a Project on Speech, Privacy, and Technology that focuses on the right to privacy, ensuring individuals have control of their personal information, and protecting individuals’ civil liberties as new advances are made in science and technology. The project focuses on consumer privacy, internet privacy, location tracking, medical and genetic privacy, national ID, privacy at borders and checkpoints, surveillance technologies, and workplace privacy.

S.T.O.P

The Surveillance Technology Oversight Project fights government surveillance through advocacy and litigation and hopes to transform New York into a pro privacy state. S.T.O.P. organized over 100 organizations to get the POST Act approved in the state, has sued city and state agencies for records pertaining to a variety of issues such as NYPD’s use of FRT, and also publishes research papers on different surveillance technologies.

Brennan Center for Justice

The Brennan Center for Justice is a nonpartisan law and policy organization that works to defend democracy and justice. One of their initiatives is privacy and free expression. Through that project, the Brennan Center works to challenge mass surveillance policies that are overreaching and works to inform the public about these issues. The Brennan Center has been a leader in challenging the structure of the Foreign Intelligence Surveillance Court and the fact that the court only hears from the government when government agencies seek to obtain people’s data.

Center on Privacy and Technology

The Center on Privacy and Technology is a think tank focused on privacy and surveillance law and policy. The Center fights back against surveillance by conducting long-term investigations, research, and publishing reports with their findings. Their most recent report, Raiding the Genome: How the United States is abusing its immigration powers to amass DNA for Future Policing, discusses migrants having their privacy rights invaded by the Department of Homeland Security (DHS). DHS is taking DNA samples from detainees, which is later stored in the FBI’s database, CODIS.

Just Futures Law

Just Futures Law works alongside activists, organizers, and community groups to dismantle mass surveillance, incarceration, and deportation via advocacy and legal support. They have worked on ending ICE digital prisons, stopping data brokers from selling people’s data to ICE which could lead to deportation, ending the digital border wall, protecting driver data from being turned over to ICE, amongst many other projects.

Expected Utility Forecasting for Science Funding

The typical science grantmaker seeks to maximize their (positive) impact with a limited amount of money. The decision-making process for how to allocate that funding requires them to consider the different dimensions of risk and uncertainty involved in science proposals, as described in foundational work by economists Chiara Franzoni and Paula Stephan. The Von Neumann-Morgenstern utility theorem implies that there exists for the grantmaker — or the peer reviewer(s) assessing proposals on their behalf — a utility function whose expected value they will seek to maximize.

Common frameworks for evaluating proposals leave this utility function implicit, often evaluating aspects of risk, uncertainty, and potential value independently and qualitatively. Empirical work has suggested that such an approach may lead to biases, resulting in funding decisions that deviate from grantmakers’ ultimate goals. An expected utility approach to reviewing science proposals aims to make that implicit decision-making process explicit, and thus reduce biases, by asking reviewers to directly predict the probability and value of different potential outcomes occurring. Implementing this approach through forecasting brings the added benefits of providing (1) a resolution and scoring process that could help incentivize reviewers to make better, more accurate predictions over time and (2) empirical estimates of reviewers’ accuracy and tendency to over- or underestimate the value and probability of success of proposals.

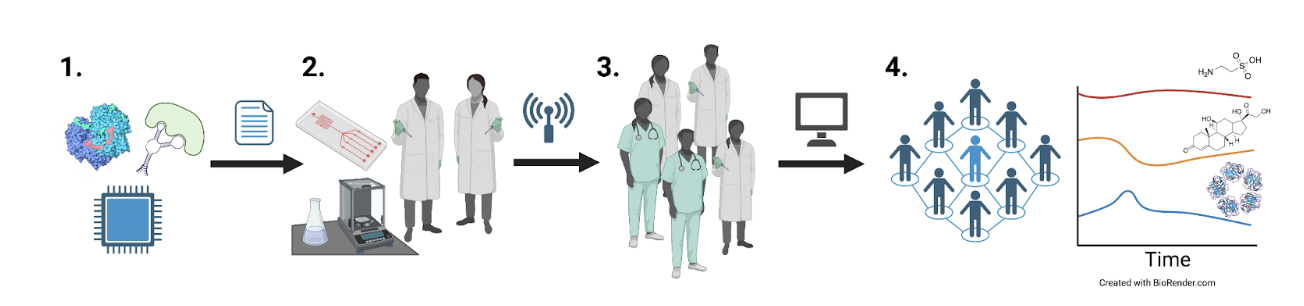

At the Federation of American Scientists, we are currently piloting this approach on a series of proposals in the life sciences that we have collected for Focused Research Organizations (FROs), a new type of non-profit research organization designed to tackle challenges that neither academia nor industry is incentivized to work on. The pilot study was developed in collaboration with Metaculus, a forecasting platform and aggregator, and is hosted on their website. In this paper, we provide the detailed methodology for the approach that we have developed, which builds upon Franzoni and Stephan’s work, so that interested grantmakers may adapt it for their own purposes. The motivation for developing this approach and how we believe it may help address biases against risk in traditional peer review processes is discussed in our article “Risk and Reward in Peer Review”.

Defining Outcomes

To illustrate how an expected utility forecasting approach could be applied to scientific proposal evaluation, let us first imagine a research project consisting of multiple possible outcomes or milestones. In the most straightforward case, the outcomes that could arise are mutually exclusive (i.e., only a single one will be observed). Indexing each outcome with the letter 𝑖, we can define the expected value of each as the product of its value (or utility; 𝓊𝑖) and the probability of it occurring, 𝑃(𝑚𝑖). Because the outcomes in this example are mutually exclusive, the total expected utility (TEU) of the proposed project is the sum of the expected value of each outcome:

𝑇𝐸𝑈 = 𝛴𝑖𝓊𝑖𝑃(𝑚𝑖).
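As a rough illustration, the sum above can be sketched in a few lines of Python. The function name and the example numbers are hypothetical and are not taken from the pilot.

```python
def total_expected_utility(outcomes):
    """Sum u_i * P(m_i) over mutually exclusive outcomes.

    `outcomes` is a list of (utility, probability) pairs.
    """
    total_probability = sum(p for _, p in outcomes)
    # Mutually exclusive outcomes cannot have probabilities summing past 1.
    if total_probability > 1 + 1e-9:
        raise ValueError("probabilities of mutually exclusive outcomes exceed 1")
    return sum(u * p for u, p in outcomes)


# Hypothetical proposal with three mutually exclusive outcomes.
example_outcomes = [(8.0, 0.2), (5.0, 0.5), (1.0, 0.3)]
teu = total_expected_utility(example_outcomes)
print(round(teu, 2))
```

With these made-up inputs the terms are 1.6, 2.5, and 0.3, so the total is 4.4.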

However, in most cases, it is easier and more accurate to define the range of outcomes of a research project as a set of primary and secondary outcomes or research milestones that are not mutually exclusive, and can instead occur in various combinations.

For instance, science proposals usually highlight the primary outcome(s) that they aim to achieve, but may also involve important secondary outcome(s) that can be achieved in addition to or instead of the primary goals. Secondary outcomes can be a research method, tool, or dataset produced for the purpose of achieving the primary outcome; a discovery made in the process of pursuing the primary outcome; or an outcome that researchers pivot to pursuing as they obtain new information from the research process. As such, primary and secondary outcomes are not necessarily mutually exclusive. In the simplest scenario with just two outcomes (either two primary or one primary and one secondary), the total expected utility becomes

𝑇𝐸𝑈 = 𝓊1𝑃(𝑚1⋂ not 𝑚2) + 𝓊2𝑃(𝑚2⋂ not 𝑚1) + (𝓊1 + 𝓊2)𝑃(𝑚1⋂𝑚2),

𝑇𝐸𝑈 = 𝓊1(𝑃(𝑚1) – 𝑃(𝑚1⋂𝑚2)) + 𝓊2(𝑃(𝑚2) – 𝑃(𝑚1⋂𝑚2)) + (𝓊1 + 𝓊2)𝑃(𝑚1⋂𝑚2),

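𝑇𝐸𝑈 = 𝓊1𝑃(𝑚1) + 𝓊2𝑃(𝑚2).

Because the utilities here are additive, the joint probability terms cancel. If the value of achieving several outcomes together differs from the sum of their individual values, the joint terms remain, and their number increases with the number of outcomes. Assuming the outcomes are independent, though, each joint probability can be reduced to the product of the probabilities of the individual outcomes. For example,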

𝑃(𝑚1⋂𝑚2) = 𝑃(𝑚1) * 𝑃(𝑚2)
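The two-outcome case can be sketched as follows, enumerating the three ways value can arise (only the first outcome, only the second, or both). The function name and inputs are hypothetical, and the joint probability defaults to the independence assumption unless a value is supplied.

```python
def teu_two_outcomes(u1, u2, p1, p2, p_both=None):
    """TEU for two outcomes that may co-occur, with utility u1 + u2 when both occur.

    If `p_both` is not given, the outcomes are assumed independent,
    so P(m1 and m2) = P(m1) * P(m2).
    """
    if p_both is None:
        p_both = p1 * p2
    p_only_1 = p1 - p_both  # P(m1 and not m2)
    p_only_2 = p2 - p_both  # P(m2 and not m1)
    return u1 * p_only_1 + u2 * p_only_2 + (u1 + u2) * p_both


# Hypothetical primary and secondary outcomes.
teu = teu_two_outcomes(u1=6.0, u2=3.0, p1=0.4, p2=0.7)
print(round(teu, 2))
```

Here the joint probability is 0.28, the three terms are 0.72, 1.26, and 2.52, and the total is 4.5.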

On the other hand, milestones are typically designed to build upon one another, such that achieving later milestones necessitates the achievement of prior milestones. In these cases, the value of later milestones typically includes the value of prior milestones: for example, the value of demonstrating a complete pilot of a technology is inclusive of the value of demonstrating individual components of that technology. The total expected utility can thus be defined as the sum of the product of the marginal utility of each additional milestone and its probability of success:

𝑇𝐸𝑈 = 𝛴𝑖(𝓊𝑖 – 𝓊𝑖-1)𝑃(𝑚𝑖),

where 𝓊0 = 0.
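A minimal sketch of the sequential-milestone case, following the marginal-utility description above: each milestone contributes only the value it adds beyond the previous one, weighted by its own probability of success. The function name and numbers are hypothetical.

```python
def teu_sequential(cumulative_utilities, probabilities):
    """TEU for milestones that build on one another.

    `cumulative_utilities[i]` is the value of reaching milestone i, inclusive
    of all earlier milestones; `probabilities[i]` is the chance of reaching it.
    """
    if len(cumulative_utilities) != len(probabilities):
        raise ValueError("need one probability per milestone")
    total = 0.0
    previous_utility = 0.0  # u_0 = 0
    for utility, probability in zip(cumulative_utilities, probabilities):
        total += (utility - previous_utility) * probability
        previous_utility = utility
    return total


# Hypothetical project: a component demo (value 4) and a full pilot (value 9).
teu = teu_sequential([4.0, 9.0], [0.8, 0.3])
print(round(teu, 2))
```

The first milestone contributes 4 × 0.8 = 3.2 and the second contributes (9 – 4) × 0.3 = 1.5, for a total of 4.7.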

Depending on the science proposal, either of these approaches — or a combination — may make the most sense for determining the set of outcomes to evaluate.

In our FRO Forecasting pilot, we worked with proposal authors to define two outcomes for each of their proposals. Depending on what made the most sense for each proposal, the two outcomes reflected either relatively independent primary and secondary goals, or sequential milestone outcomes that directly built upon one another (though for simplicity, we called all of the outcomes milestones).

Defining Probability of Success

Once the set of potential outcomes has been defined, the next step is to determine the probability of success between 0% and 100% for each outcome if the proposal is funded. A prediction of 50% would indicate the highest level of uncertainty about the outcome, whereas the closer the predicted probability of success is to 0% or 100%, the more certainty there is that the outcome will be one over the other.

Furthermore, Franzoni and Stephan decompose probability of success into two components: the probability that the outcome can actually occur in nature or reality and the probability that the proposed methodology will succeed in obtaining the outcome (conditional on it being possible in nature). The total probability is then the product of these two components:

𝑃(𝑚𝑖) = 𝑃nature(𝑚𝑖) * 𝑃proposal(𝑚𝑖)

Depending on the nature of the proposal (e.g., more technology-driven, or more theoretical/discovery driven), each component may be more or less relevant. For example, our forecasting pilot includes a proposal to perform knockout validation of renewable antibodies for 10,000 to 15,000 human proteins; for this project, 𝑃nature(𝑚𝑖) approaches 1 and 𝑃proposal(𝑚𝑖) drives the overall probability of success.

Defining Utility

Similarly, the value of an outcome can be separated into its impact on the scientific field and its impact on society at large. Scientific impact aims to capture the extent to which a project advances the frontiers of knowledge, enables new discoveries or innovations, or enhances scientific capabilities or methods. Social impact aims to capture the extent to which a project contributes to solving important societal problems, improving well-being, or advancing social goals.

In both of these cases, determining the value of an outcome entails some subjective preferences, so there is no “correct” choice, at least mathematically speaking. However, proxy metrics may be helpful in considering impact. Though each is imperfect, one could consider citations of papers, patents on tools or methods, or users of methods, tools, and datasets as proxies of scientific impact. For social impact, some proxy metrics that one might consider are the value of lives saved, the cost of illness prevented, the number of job-years of employment generated, economic output in terms of GDP, or the social return on investment.

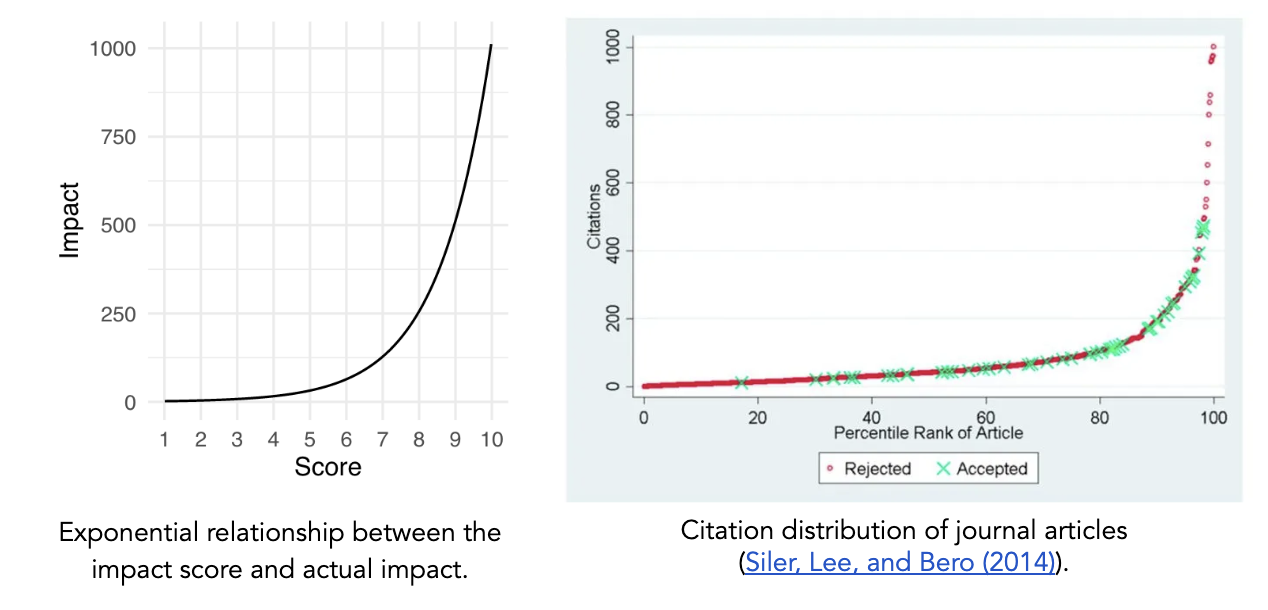

The approach outlined by Franzoni and Stephan asks reviewers to assess scientific and social impact on a linear scale (0-100), after which the values can be averaged to determine the overall impact of an outcome. However, we believe that an exponential scale better captures the tendency in science for a small number of research projects to have an outsized impact and provides more room at the top end of the scale for reviewers to increase the rating of the proposals that they believe will have an exceptional impact.

As such, for our FRO Forecasting pilot, we chose to use a framework in which a simple 1–10 score corresponds to real-world impact via a base 2 exponential scale. In this case, the overall impact score of an outcome can be calculated according to

𝓊𝑖 = log₂[2^(science impact of 𝑖) + 2^(social impact of 𝑖)] – 1.

For an exponential scale with a different base, one would substitute that base for two in the above equation. Depending on each funder’s specific understanding of impact and the type(s) of proposals they are evaluating, different relationships between scores and utility could be more appropriate.
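The base 2 scoring rule can be sketched as below. This reads the logarithm in the equation as base 2 and the two scores as exponents, which is an interpretation of the formula rather than something the text states outright; the function name and example scores are hypothetical.

```python
import math


def overall_impact(science_score, social_score):
    """Combine two 1-10 scores on a base 2 exponential scale.

    Each score s maps to a real-world impact of 2**s. The two impacts are
    added, converted back with log2, and reduced by 1, which is the same as
    taking log2 of their average.
    """
    return math.log2(2 ** science_score + 2 ** social_score) - 1


print(overall_impact(7, 7))            # equal scores return the same score
print(round(overall_impact(9, 3), 2))  # the higher score dominates
```

Under this reading, two equal scores of 7 combine to 7, while scores of 9 and 3 combine to roughly 8.02 rather than the linear average of 6, which reflects how an exponential scale lets one exceptional dimension dominate.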

In order to capture reviewers’ assessment of uncertainty in their evaluations, we asked them to provide median, 25th, and 75th percentile predictions for impact instead of a single prediction. High uncertainty would be indicated by a wide confidence interval, while low uncertainty would be indicated by a narrow confidence interval.

Determining the “But For” Effect of Funding

The above approach aims to identify the highest impact proposals. However, a grantmaker may not want to simply fund the highest impact proposals; rather, they may be most interested in understanding where their funding would make the highest impact, i.e., their “but for” effect. In this case, the grantmaker would want to fund proposals with the maximum difference between the total expected utility of the research proposal if they chose to fund it versus if they chose not to:

“But For” Impact = 𝑇𝐸𝑈(funding) – 𝑇𝐸𝑈(no funding).

For TEU(funding), the probability of the outcome occurring with this specific grantmaker’s funding using the proposed approach would still be defined as above

𝑃(𝑚𝑖 | funding) = 𝑃nature(𝑚𝑖) * 𝑃proposal(𝑚𝑖),

but for 𝑇𝐸𝑈(no funding), reviewers would need to consider the likelihood of the outcome being achieved through other means. This could involve the outcome being realized by other sources of funding, other researchers, other approaches, etc. Here, the probability of success without this specific grantmaker’s funding could be described as

𝑃(𝑚𝑖 | no funding) = 𝑃nature(𝑚𝑖) * 𝑃other mechanism(𝑚𝑖).

In our FRO Forecasting pilot, we assumed that 𝑃other mechanism(𝑚𝑖) ≈ 0. The theory of change for FROs is that there exists a set of research problems at the boundary of scientific research and engineering that are not adequately supported by traditional research and development models and are unlikely to be pursued by academia or industry. Thus, in these cases it is plausible to assume that,

𝑃(𝑚𝑖 | no funding) ≈ 0

𝑇𝐸𝑈(no funding) ≈ 0

“But For” Impact ≈ 𝑇𝐸𝑈(funding).

This assumption, while not generalizable to all contexts, can help reduce the number of questions that reviewers have to consider — a dynamic which we explore further in the next section.
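To make the “but for” calculation concrete, here is a small sketch that computes TEU with and without funding and takes the difference. For simplicity it assumes mutually exclusive outcomes, so TEU is a plain sum of utility times probability; the dictionary keys, function names, and numbers are all hypothetical.

```python
def teu(outcomes, mechanism_key):
    """Sum u_i * P_nature(m_i) * P_mechanism(m_i) over mutually exclusive outcomes."""
    return sum(o["utility"] * o["p_nature"] * o[mechanism_key] for o in outcomes)


def but_for_impact(outcomes):
    """TEU(funding) minus TEU(no funding)."""
    funded = teu(outcomes, "p_proposal")        # success via the proposed approach
    unfunded = teu(outcomes, "p_other")         # success via any other mechanism
    return funded - unfunded


# Hypothetical outcomes; p_other = 0.0 mirrors the simplifying assumption that
# nobody else would pursue the outcome without this funding.
outcomes = [
    {"utility": 8.0, "p_nature": 0.9, "p_proposal": 0.5, "p_other": 0.1},
    {"utility": 4.0, "p_nature": 1.0, "p_proposal": 0.3, "p_other": 0.0},
]
impact = but_for_impact(outcomes)
print(round(impact, 2))
```

In this example TEU(funding) is 4.8 and TEU(no funding) is 0.72, so the “but for” impact is 4.08. Setting every `p_other` to zero makes the result equal to TEU(funding), matching the approximation above.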

Designing Forecasting Questions

Once one has determined the total expected utility equation(s) relevant for the proposal(s) that they are trying to evaluate, the parameters of the equation(s) must be translated into forecasting questions for reviewers to respond to. In general, for each outcome, reviewers will need to answer the following four questions:

- If this proposal is funded, what is the probability that this outcome will occur?

- If this proposal is not funded, what is the probability that this outcome will still occur?

- What will be the scientific impact of this outcome occurring?

- What will be the social impact of this outcome occurring?

For the probability questions, one could alternatively ask reviewers about the different probability components (𝑃nature(𝑚𝑖), 𝑃proposal(𝑚𝑖), 𝑃other mechanism(𝑚𝑖), etc.), but in most cases it will be sufficient — and simpler for the reviewer — to focus on the top-level probabilities that feed into the TEU calculation.

In order for the above questions to tap into the benefits of the forecasting framework, they must be resolvable. Resolving the forecasting questions means that at a set time in the future, reviewers’ predictions will be compared to a ground truth based on the actual events that have occurred (i.e., was the outcome actually achieved and, if so, what was its actual impact?). Consequently, reviewers will need to be provided with the resolution date and the resolution criteria for their forecasts.

Resolution of the probability-based questions hinges mostly on a careful and objective definition of the potential outcomes, and is otherwise straightforward — though note that only one of the probability questions will be resolved, since they are mutually exclusive. The optimal resolution of the scientific and social impact questions may depend on the context of the project and the chosen approach to defining utility. A widely applicable approach is to resolve the utility forecasts by having either program managers or subject matter experts evaluate the results of the completed project and score its impact at the resolution date.
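Once probability questions resolve, reviewers' forecasts can be scored against what actually happened. The text does not specify a scoring rule, so the sketch below uses the Brier score, a common choice for binary forecasts, purely as an assumed example; the function name and data are hypothetical.

```python
def brier_score(forecasts, resolutions):
    """Mean squared difference between forecast probabilities and outcomes.

    `forecasts` are probabilities in [0, 1]; `resolutions` are 1 if the
    outcome occurred and 0 if it did not. Lower scores are better.
    """
    if len(forecasts) != len(resolutions) or not forecasts:
        raise ValueError("need matching, non-empty forecasts and resolutions")
    squared_errors = [(f - r) ** 2 for f, r in zip(forecasts, resolutions)]
    return sum(squared_errors) / len(squared_errors)


# Hypothetical reviewer: three forecasts, of which only the first outcome occurred.
score = brier_score([0.8, 0.3, 0.6], [1, 0, 0])
print(round(score, 3))
```

A reviewer who always answered 50% would score 0.25 on any set of questions, so scores below that indicate forecasts that carried some information.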

For our pilot, we asked forecasting questions only about the probability of success given funding (question 1 above) and the scientific and social impact of each outcome (questions 3 and 4); since we assumed that the probability of success without funding was zero, we did not ask question 2. Because outcomes for the FRO proposals were designed to be either independent or sequential, we did not have to ask additional questions on the joint probability of multiple outcomes being achieved. We chose to resolve our impact questions with a post-project panel of subject matter experts.

Additional Considerations

In general, there is a tradeoff in implementing this approach between simplicity and thoroughness, efficiency and accuracy. Here are some additional considerations on that tradeoff for those looking to use this approach:

- The responsibility of determining the range of potential outcomes for a proposal could be assigned to three different parties: the proposal author, the proposal reviewers, or the program manager. First, grantmakers could ask proposal authors to comprehensively define within their proposal the potential primary and secondary outcomes and/or project milestones. Alternatively, reviewers could be allowed to individually — or collectively — determine what they see as the full range of potential outcomes. The third option would be for program managers to define the potential outcomes based on each proposal, with or without input from proposal authors. In our pilot, we chose to use the third approach with input from proposal authors, since it simplified the process for reviewers and allowed us to limit the number of outcomes under consideration to a manageable amount.

- In many cases, a “failed” or null outcome may still provide meaningful value by informing other scientists that the research method doesn’t work or that the hypothesis is unlikely to be true. Considering the replication crises in multiple fields, this could be an important and unaddressed aspect of peer review. Grantmakers could choose to ask reviewers to consider the value of these null outcomes alongside other outcomes to obtain a more complete picture of the project’s utility. We chose not to address this consideration in our pilot for the sake of limiting the evaluation burden on reviewers.

- If grant recipients are permitted greater flexibility in their research agendas, this expected value approach could become more difficult to implement, since reviewers would have to consider a wider and more uncertain range of potential outcomes. This was not the case for our FRO Forecasting pilot, since FROs are designed to have specific and well-defined research goals.

Other Similar Efforts

Currently, forecasting is rarely used in grantmaking. Open Philanthropy is the only grantmaking organization we know of that has publicized its use of internal forecasts about grant-related outcomes, though those forecasts do not directly influence funding decisions and are not specifically forecasts of expected value. Franzoni and Stephan are also currently piloting their Subjective Expected Utility approach with Novo Nordisk.

Conclusion

Our goal in publishing this methodology is for interested grantmakers to freely adapt it to their own needs and iterate upon our approach. We hope that this paper will help start a conversation in the science research and funding communities that leads to further experimentation. A follow-up report sharing the results and learnings from the project will be published at the end of the FRO Forecasting pilot.

Acknowledgements

We’d like to thank Peter Mühlbacher, former research scientist at Metaculus, for his meticulous feedback as we developed this approach and for his guidance in designing resolvable forecasting questions. We’d also like to thank the rest of the Metaculus team for being open to our ideas and working with us on piloting this approach, the process of which has helped refine our ideas to their current state. Any mistakes here are of course our own.

Culture Blast at the Kurt Vonnegut Museum

Charlotte Yeung is a Purdue student and New Voices in Nuclear Weapons fellow at FAS. Her multimedia show, Culture Blast, opens this week at the Kurt Vonnegut Museum in Indianapolis.

Kurt Vonnegut is best known for his anti-war novel Slaughterhouse-Five (1969), and his experience serving in World War II informed his work and his life. He acted as a powerful spokesman for the preservation of our Constitutional freedoms, for nuclear arms control, and for the protection of the earth’s fragile biosphere throughout the 1980s and 1990s. He remained engaged in these issues throughout his life.

FAS: Tell us about this project and its goals…

Charlotte: My exhibit, Culture Blast, weaves Kurt Vonnegut’s stance on nuclear weapons with current issues we face today. It serves as a space for preservation and linkage, asking the viewer to connect Vonnegut’s concerns to the modern day. It is also a place of artistic protest and education, meant to inform the viewer of the often ignored complexity of nuclear weapons and how they affect many different parts of society. I worked with Lovely Umayam and the team at the Federation of American Scientists (FAS) to ideate and research what underpins this exhibit.

Why this medium?

I explore nuclear history and literary protest through blackout poetry, free verse, and digital illustration – contemporary forms of written and visual art that carry on Vonnegut’s artistic protest into the modern day.

In particular, I wanted to create art that can be accessible to everyone and not just people who go to the museum. Seeing a photo of a pencil drawing or a statue online is experiencing just the surface-level nature of a work of art. It lacks the other sensory components like seeing how the art fits with the wider space and how others react to it. A similar issue would come up if my poems were spoken word. That art form lives off of a crowd. My digital art and poetry are meant to be seen in both a museum setting and an online setting. Viewers can watch a time lapse of my art and see the process of creating this work. The poetry is meant to be read rather than performed.

Can you share some of the backstory on a few of the multimedia pieces? How did the concept evolve as you worked on them?

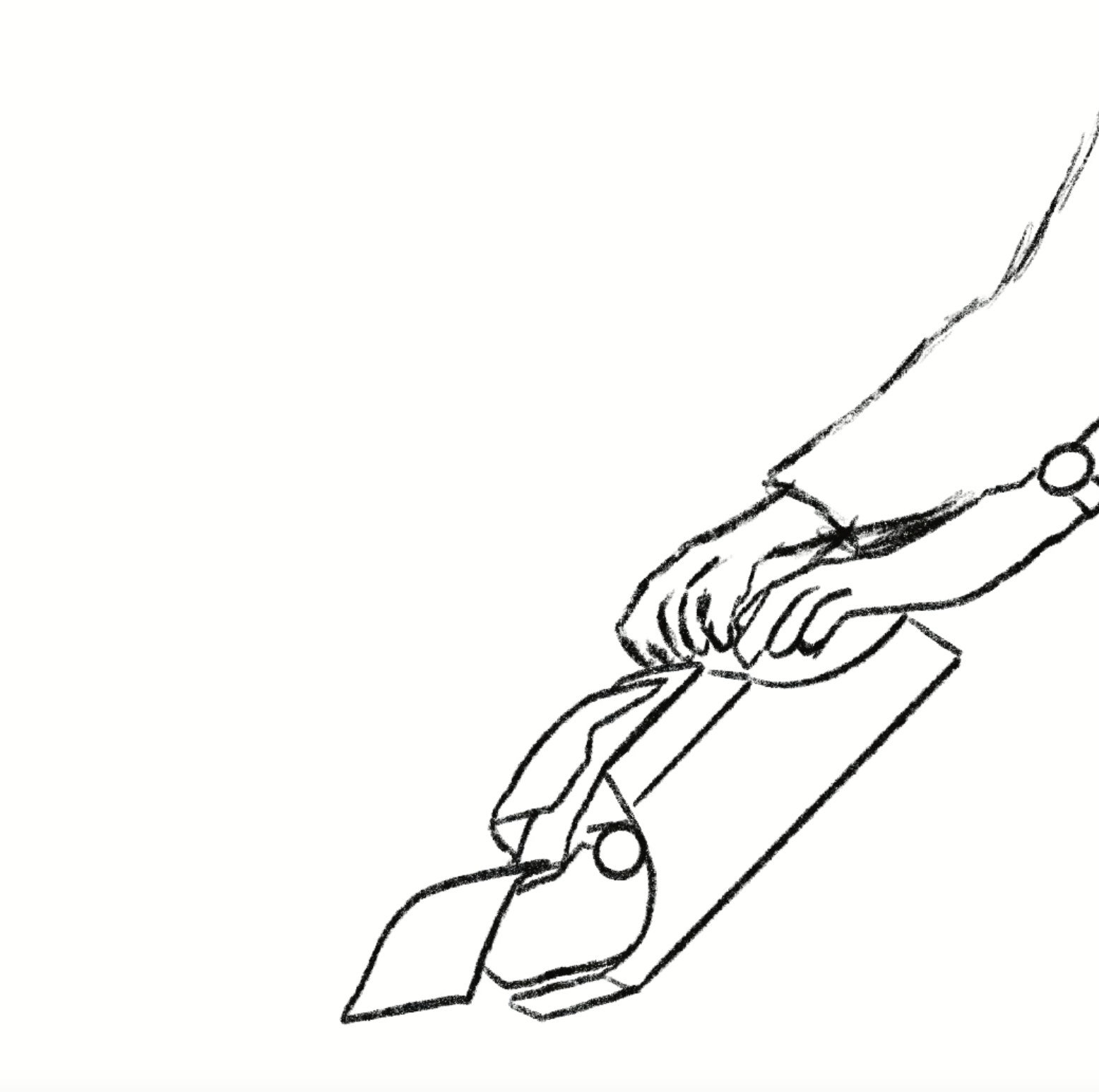

My favorite pair in the collection is Sketch 1 (the typewriter) and the blackout poem Protection in the name of public interest. I drew Vonnegut on his typewriter because I felt this to be the most symbolic image of his artistic protest. Though he wrote and sketched in countless personal notebooks, his work commenting on nuclear weapons can be found in some of his published stories. Vonnegut turned to his literary creativity to reflect on nuclear weapons, in particular scientists’ indifference to the suffering caused by the atom bomb, as seen in works such as Cat’s Cradle and Report on the Barnhouse Effect.

The uncensored version of my poem (Protection in the name of public interest) meditates on the bomb’s harm and also the long association between science and violence. I chose to create a blackout poem because in the immediate aftermath of World War II, the American government censored many of the photos and stories about what happened to the people in Hiroshima and Nagasaki, in effect erasing the lived experience of these people. It wasn’t until writer John Hersey’s account of the U.S. nuclear attacks, titled Hiroshima, was published in The New Yorker in 1946 that the American public had an uncensored understanding of what had happened in Japan.

In terms of the process, I began this thinking that I would draw a hand and a pencil but Vonnegut is strongly associated with his typewriter so I felt that it wouldn’t be as accurate to draw something else. I knew I would write a blackout poem and link it to censorship but I wasn’t sure what it would be called or what it would say. I ultimately wrote how I felt about the exhibit and what I’ve learned so far in the FAS fellowship and what I wanted to convey. I started blacking out the poem and left the main themes I wanted viewers to have from this exhibit.

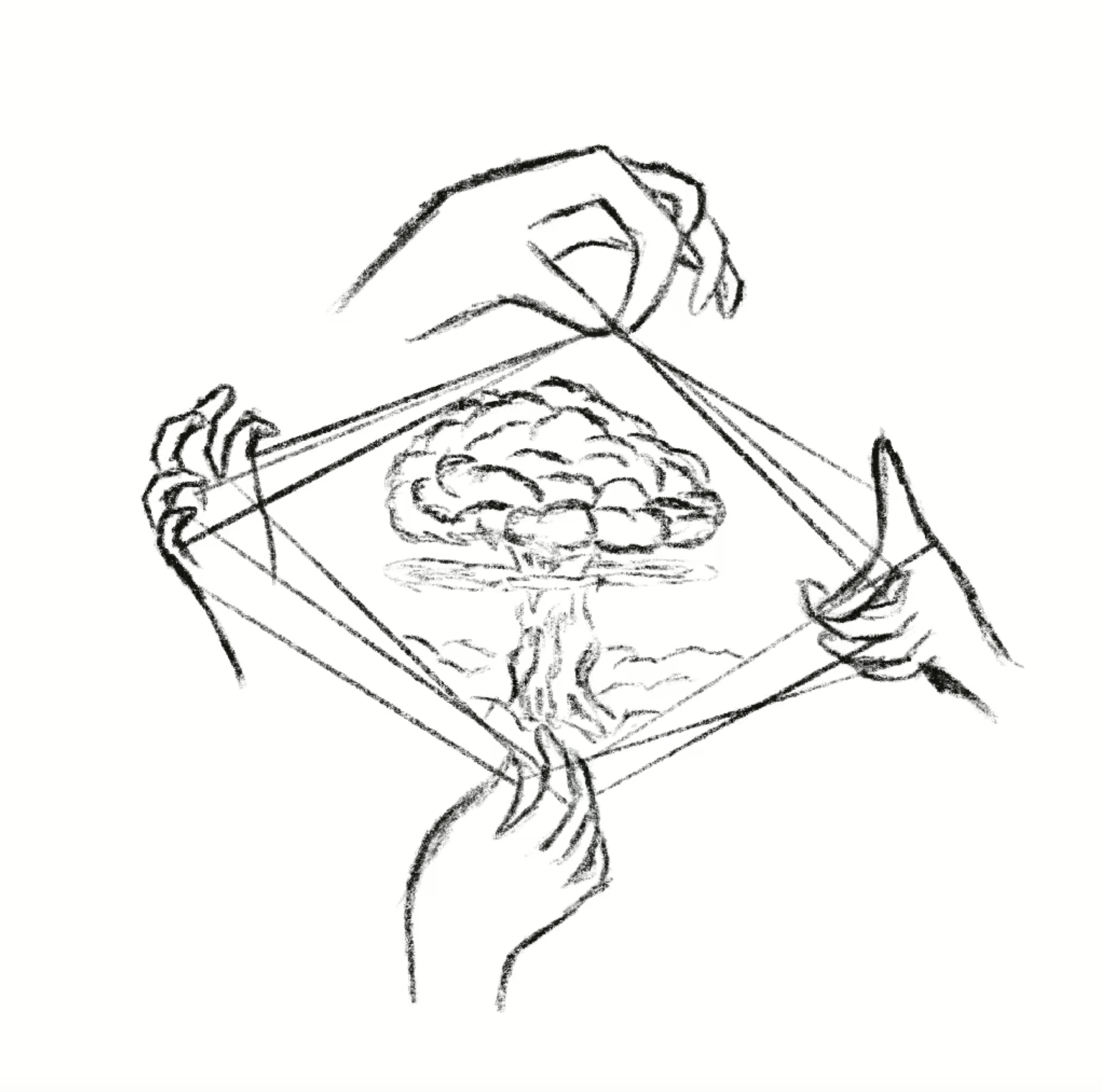

I am very fond of Sketch 2 (the cat’s cradle with the mushroom cloud inside) and the poem Labels. Labels was inspired by a lunch I had last year with a hibakusha, Yoshiko Kajimoto. She was 14 when the bomb fell and she spoke of the following days in vivid detail. I believe it was her testimony in particular that started me on this path towards researching the societal implications of nuclear weapons.

I drew an artistic interpretation of a cat’s cradle seeking to capture a mushroom cloud to communicate the concept of hyperobjects. According to Timothy Morton, a hyperobject is a real event or phenomenon so vast that it is beyond human comprehension. Nuclear weapons are an example of a hyperobject – their existence and use have had devastating ramifications touching different aspects of life that may be hard to fully comprehend all at once.

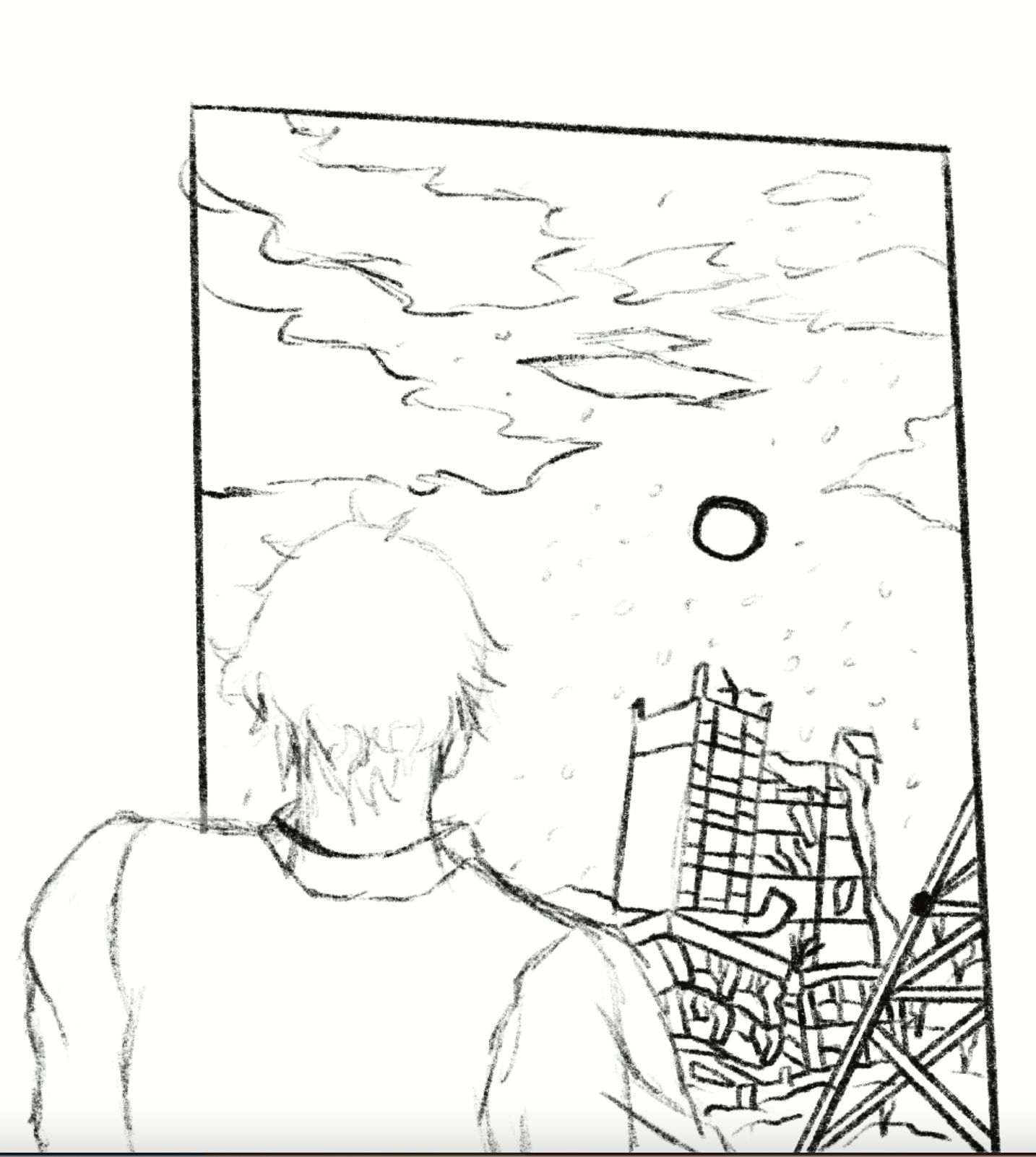

Nuclear weapons are both deeply present and hidden. On one hand, nuclear weapons are a constant security threat and have left deep scars on cities and bodies ranging from people in Japan to Utah downwinders. On the other hand, some of the information around them is clouded in mystery, and censored by different governments. Nuclear and fallout shelters built for nuclear warfare are considered to be Cold War relics; in the United States, they are largely abandoned or hidden, tucked in the basements of homes and schools, or located far out in the countryside away from cities. Some nuclear bunkers are parking lots in New York City and rumored rooms in DC. Secrecy and hidden locality are reasons why there isn’t as much widespread public knowledge or understanding of nuclear weapons.

Why is nuclear weapon risk a conversation we’re still having today? Where can people new to this issue learn more?

As a young person, I encounter many people my age who ask me why I care about nuclear weapons and why they matter in this day and age. It is, in a sense, meaningless to them. The violence of the bomb cannot be completely understood without hearing about the pain it wrought on people who experienced it firsthand. From burning skin and bodies to radiation poisoning, nuclear weapons have left permanent physical and psychological scars that are rarely spoken about, but have affected generations of families and communities.

I suggest reading more about what happened to individual A-bomb survivors to truly understand the effects of nuclear weapons. Humanizing those affected by war combats the practice of reducing lives and experiences to death counts. Great works to look at include Barefoot Gen (a manga on the Hiroshima bombing inspired by the hibakusha author’s experience), Grave of the Fireflies (a film about children grappling with the effects of the bombing), and the poem Bringing Forth New Life (生ましめんかな) by hibakusha poet Sadako Kurihara (which is about a woman giving birth in the ruins while the midwife dies from injuries in the middle of the process).

I knew the design from the beginning. It had to be a cat’s cradle because Vonnegut equates scientific irreverence toward the bomb’s humanitarian effects with a cat’s cradle (an essentially useless game of moving strings with your fingers). The poem centers around hyperobjects, a term I grappled with in a university seminar with Dr. Brite from Purdue’s Honors College. I had never heard of the term before that class but I thought it was fitting for something like nuclear weapons. My research is interdisciplinary in nature and it seeks to analyze the cultural aspects of nuclear weapons that aren’t traditionally studied by the political science community.

The third piece I’ll discuss is Sketch 5 and Rebuilding. This sketch of a rose is reminiscent of the Duftwolke roses sent from Germany to Hiroshima after the war as a symbol of rebuilding. It is also symbolic of Vonnegut’s experiences in World War II. He was caught in the firestorm that engulfed Dresden and he sheltered in a slaughterhouse. As one of the remaining survivors, he was forced to burn dead bodies in the aftermath. Vonnegut became an anti-war activist as a result of this experience. He also wrote Slaughterhouse-Five, a book that grapples with trauma and PTSD after war. His writing was his way of finding closure and rebuilding, hence the title of the poem.

I wanted to end this collection on an optimistic note because, as dark and grim as war and nuclear weapons can be, there is great resilience in humanity. It takes monumental courage and hope to rebuild a city or mind or soul after facing the devastation of an all-consuming weapon.

I actually drew this while talking with FAS fellows and FAS advisors. I often draw to pay attention to important conversations (so all of my notebooks are filled with drawings). I thought the deep, complex observations about nuclear weapons and ethics and misinformation and other fields were so fascinating and I think, as a result, I created my favorite drawing. I felt very hopeful during the conversation, because I saw so many people who were invested in this topic and were actively researching and discussing the implications of nuclear weapons.

Where can people see your show/contact you?

The showcase can be seen at the Kurt Vonnegut Museum and Library in Indianapolis, Indiana. If someone wants to speak about this topic with me, they can reach me on X or Instagram at @cmyeungg.

A Focused Research Organization to Measure Complete Neuronal Input-Output Functions

Measuring how neurons integrate their inputs and respond to them is key to understanding the impressive and complex behavior of humans and animals. However, a complete measurement of neuronal Input-Output Functions (IOFs) has not been achieved in any animal. Undertaking the complete measurement of IOFs in the model system C. elegans could refine critical methods and discover principles that will generalize across neuroscience.

Systems neuroscience aims to understand the complex interplay of neurons in the brain, which enables the impressive behaviors of animals. A critical component of this understanding is the Input-Output Function (IOF) of neurons, which characterizes how a neuron integrates its inputs and responds to them. However, despite its importance, a complete measurement of IOFs in any animal has not been achieved, creating a significant blind spot in our understanding of brain function. While parts of IOFs have been measured through various experiments, these efforts control only a narrow subset of the inputs to any given neuron, providing only a small slice of the true IOF. Furthermore, the output of a neuron is a complex nonlinear function of its inputs, adding to the difficulty of the problem. To truly understand the computation and function of the brain, we need a detailed understanding of how IOFs function, which requires controlling all of the inputs and observing the factors that shape IOFs. Pursuing this in a single animal model would uncover new methods, tools, and scientific principles that could catalyze large-scale innovation across neuroscience.

Project Concept