Bold Goals Require Bold Funding Levels. The FY25 Requests for the U.S. Bioeconomy Fall Short

Over the past year, there has been tremendous momentum in policy for the U.S. bioeconomy – the collection of advanced industry sectors, like pharmaceuticals, biomanufacturing, and others, with biology at their core. This momentum began in part with the Bioeconomy Executive Order (EO) and the programs authorized in CHIPS and Science, and continued with the Office of Science and Technology Policy's (OSTP) release of the Bold Goals for U.S. Biotechnology and Biomanufacturing (Bold Goals) report. The report highlighted ambitious goals that the Department of Energy (DOE), Department of Commerce (DOC), Department of Health and Human Services (HHS), National Science Foundation (NSF), and the Department of Agriculture (USDA) have committed to in order to further the U.S. bioeconomy.

However, the ambitious goals that agencies set in the Bold Goals report will also require directed and appropriate funding, and this is where we have been falling short. Multiple bioeconomy-related programs were authorized through the bipartisan CHIPS & Science legislation but have yet to receive anywhere near their funding targets. Underfunding and the resulting lack of capacity have also delayed the tasks under the Bioeconomy EO. For the bold goals outlined in the report to be realized, the U.S. must properly direct and fund the many different endeavors under the U.S. bioeconomy.

Despite this need for funding for the U.S. bioeconomy, the recently completed FY2024 (FY24) appropriations were modest for some science agencies but abysmal for others, with decreases across many scientific endeavors. The DOC, and specifically the National Institute of Standards and Technology (NIST), saw massive cuts in base program funding, with earmarks swamping core activities in some accounts.

There remains some hope that the FY2025 (FY25) budget will alleviate some of the cuts that have been seen to science endeavors, and in turn, to programs related to the bioeconomy. But the strictures of the Fiscal Responsibility Act, which contributed to the difficult outcomes in FY24, remain in place for FY25 as well.

Bioeconomy in the FY25 Request

With this difficult context in mind, the President’s FY25 Budget was released, along with the FY25 budget requests for DOE, DOC, HHS, NSF, and USDA.

The President’s Budget makes strides toward enabling a strong bioeconomy by prioritizing synthetic biology metrology and standards within NIST and by directing OSTP to establish the Initiative Coordination Office to support the National Engineering Biology Research and Development Initiative. However, beyond these two instances, the President’s budget only offers limited progress for the bioeconomy because of mediocre funding levels.

The U.S. bioeconomy has a lot going on, with different agencies prioritizing different areas and programs depending on their jurisdiction. This makes it difficult to properly grasp all the activity that is ongoing (but we’re working on it, stay tuned!). However, we do know that the FY25 budget requests from the agencies themselves have been a mixed bag for bioeconomy activities related to the Bold Goals Report. Some agencies are asking for large appropriations, while others are not investing enough to support these goals:

Department of Energy supports Bold Goals Report efforts in biotech & biomanufacturing R&D to further climate change solutions

The increase in funding levels requested for FY25 for BER and MESC will enable increased biotech and biomanufacturing R&D, supporting DOE efforts to meet its proposed objectives in the Bold Goals Report.

- INCREASE: $945M for Biological and Environmental Research (BER) in FY25, a substantial increase of 5% from FY24 appropriations of $900M

- INCREASE: $113M for Manufacturing & Energy Supply Chains (MESC) in FY25, a substantial expansion from FY24 appropriations of $18M
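The percentage changes quoted throughout this section follow from simple arithmetic on the requested and enacted amounts. As a minimal sketch, using only the two DOE figures listed above (the function name is illustrative):

```python
def percent_change(request_millions: float, enacted_millions: float) -> float:
    """Percent change from the enacted level to the requested level."""
    return (request_millions - enacted_millions) / enacted_millions * 100.0


# Figures (in millions of dollars) are taken from the two DOE bullets above.
ber_change = percent_change(945, 900)
mesc_change = percent_change(113, 18)

print(f"BER:  {ber_change:.1f}%")
print(f"MESC: {mesc_change:.1f}%")
```

The BER request works out to the 5% increase stated above, while the MESC request is more than six times its FY24 level.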

Department of Commerce falls short in support of biotech & biomanufacturing R&D supply chain resilience

One budgetary increase request is offset by two flat funding levels.

- INCREASE: $645M for the International Trade Administration in FY25, a 6% increase from the FY24 enacted amount

- Specifically they are asking for $103M for the Supply Chain Resiliency Program. This is important because, as seen during the COVID-19 pandemic, our supply chain currently is not resilient. Ensuring that we have the proper inputs, outputs, and materials required to continue our day-to-day endeavors will be vital in maintaining and leading a strong bioeconomy.

- FLAT: $1.5B for NIST in FY25, a 3% increase from FY24 enacted of $1.46B

- $37M for NIST’s Manufacturing USA program and $175M for the Manufacturing Extension Partnership, both flat from the FY24 appropriations levels. Flat funding won’t keep pace with the amount of work that needs to be done to create a resilient supply chain, and programs like BioMADE and NIIMBL still need to guide their industries forward.

Department of Agriculture falls short in support of biotech & biomanufacturing R&D to further food & Ag innovation

- INCREASE: $1.74B for the National Institute of Food and Agriculture, a 3.5% increase from the FY24 enacted amount

- DECREASE: $1.78B for the Agricultural Research Service program, a 3% decrease from the FY24 appropriated $1.85B

- NO FUNDING: Once again, the Agriculture Advanced Research and Development Authority (AgARDA) has not been funded at all in FY25, despite being authorized at $50M a year through FY23 under the 2018 Farm Bill – this is a missed opportunity to build much-needed agriculture innovation

Health and Human Services falls short in support of biotech & biomanufacturing R&D to further human health

- DECREASE: $47.9B for the National Institutes of Health, a 1.4% decrease from the FY24 appropriated $48.6B

- Specifically, the Advanced Research Projects Agency for Health (ARPA-H) would receive $1.5B, maintaining funding levels seen in FY24

- DECREASE: $970M for the Biomedical Advanced Research & Development Authority (BARDA), a 4% decrease from the FY24 appropriated $1B

National Science Foundation supports Bold Goals Report efforts in biotech & biomanufacturing R&D to further cross-cutting advances

- INCREASE: $8B for Research and Related Activities within NSF, a 12% increase from the FY24 enacted amounts

- $900M for Technology, Innovation, and Partnerships (TIP), where in FY24 Congress supported the program without specifying funds for it

- $258M for the Established Program to Stimulate Competitive Research (EPSCoR), a 3% increase from the FY24 enacted amount

- INCREASE: $387M for Advanced Manufacturing across NSF, a 9% increase from FY23 amounts*

- INCREASE: $421M for Biotechnology across NSF, a 9.4% increase from FY23 amounts*

- The NSF Engines will be vital in shaping regional microbioeconomies and furthering cross-cutting advances and information on their FY25 budgets can be found here.

* FY23 amounts are listed due to FY24 appropriations not being finalized at the time that this document was created.

Overall, the DOE and NSF have asked for FY25 budgets that could potentially achieve the goals stated in the Bold Goals Report, while the DOC, USDA, and HHS have unfortunately limited their budgets, and it remains questionable whether they will be able to achieve the goals listed with the funding levels requested. The DOC, and specifically NIST, faces one of the biggest challenges this upcoming year. NIST has to juggle tasks assigned to it from the AI EO as well as the Bioeconomy EO and the Presidential Budget. The 8% decrease in funding for NIST does not paint a promising picture for either EO and should be something that Congress rectifies when it enacts its appropriations bills. Furthermore, the USDA faces cuts in funding for vital programs related to its goals, and AgARDA continues to be unfunded. In order for USDA to achieve the goals listed in the Bold Goals Report, it will be imperative that Congress prioritize these areas for the benefit of the U.S. bioeconomy.

Predicting Progress: A Pilot of Expected Utility Forecasting in Science Funding


The current process that federal science agencies use for reviewing grant proposals is known to be biased against riskier proposals. As such, the metascience community has proposed many alternate approaches to evaluating grant proposals that could improve science funding outcomes. One such approach was proposed by Chiara Franzoni and Paula Stephan in a paper on how expected utility — a formal quantitative measure of predicted success and impact — could be a better metric for assessing the risk and reward profile of science proposals. Inspired by their paper, the Federation of American Scientists (FAS) collaborated with Metaculus to run a pilot study of this approach. In this working paper, we share the results of that pilot and its implications for future implementation of expected utility forecasting in science funding review.

Brief Description of the Study

In fall 2023, we recruited a small cohort of subject matter experts to review five life science proposals by forecasting their expected utility. For each proposal, this consisted of defining two research milestones in consultation with the project leads and asking reviewers to make three forecasts for each milestone:

- The probability of success;

- The scientific impact of the milestone, if it were reached; and

- The social impact of the milestone, if it were reached.

These predictions can then be used to calculate the expected utility, or likely impact, of a proposal and design and compare potential portfolios.
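As a rough illustration of how the three forecasts might combine, the sketch below multiplies a milestone's probability of success by its impact value. It is a minimal sketch under stated assumptions, not the calculation used in the pilot: the base of the impact scale (`IMPACT_BASE`), the choice to add scientific and social impact together, and all function names are hypothetical.

```python
IMPACT_BASE = 2.0  # hypothetical: the impact scale is described as exponential, but no base is given here


def impact_value(score: float, base: float = IMPACT_BASE) -> float:
    """Convert an impact score on an exponential scale into a relative impact value."""
    return base ** score


def milestone_expected_utility(p_success: float,
                               scientific_score: float,
                               social_score: float) -> float:
    """Probability of success times combined impact (summing the two impacts is an assumption)."""
    if not 0.0 <= p_success <= 1.0:
        raise ValueError("p_success must be between 0 and 1")
    combined_impact = impact_value(scientific_score) + impact_value(social_score)
    return p_success * combined_impact


# Made-up forecasts: a likely, modest milestone versus a long shot with high impact scores.
safe = milestone_expected_utility(0.9, scientific_score=3, social_score=2)
risky = milestone_expected_utility(0.2, scientific_score=6, social_score=4)
print(safe, risky)
```

Under these assumptions, the long-shot milestone ends up with the higher expected utility, which is the kind of risk-reward trade-off the approach is meant to surface.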

Key Takeaways for Grantmakers and Policymakers

The three main strengths of using expected utility forecasting to conduct peer review are:

- For reviewers, it’s a relatively light-touch approach that encourages rigor and reduces anti-risk bias in scientific funding.

- The review criteria allow program managers to better understand the risk-reward profile of their grant portfolios and more intentionally shape them according to programmatic goals.

- Quantitative forecasts are resolvable, meaning that program officers can compare the actual outcomes of funded proposals with reviewers’ predictions. This generates a feedback/learning loop within the peer review process that incentivizes reviewers to improve the accuracy of their assessments over time.
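One way to close this feedback loop, once milestones resolve, is to score each reviewer's probability forecasts against what actually happened. The sketch below uses the Brier score, a standard scoring rule for probability forecasts. The text does not say which scoring rule, if any, would be used, so the choice of rule and the sample numbers are assumptions.

```python
def brier_score(forecasts: list[float], outcomes: list[int]) -> float:
    """Mean squared error between forecast probabilities and 0/1 outcomes (lower is better)."""
    if not forecasts or len(forecasts) != len(outcomes):
        raise ValueError("forecasts and outcomes must be non-empty and the same length")
    squared_errors = [(p - o) ** 2 for p, o in zip(forecasts, outcomes)]
    return sum(squared_errors) / len(squared_errors)


# Made-up example: one reviewer's forecasts for three milestones,
# and whether each milestone was later judged to have been reached (1) or not (0).
reviewer_forecasts = [0.8, 0.3, 0.6]
resolved_outcomes = [1, 0, 0]

score = brier_score(reviewer_forecasts, resolved_outcomes)
print(f"Brier score: {score:.3f}")
```

A reviewer who always answers 0.5 scores 0.25 under this rule, so scores below that indicate forecasts that carried some information.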

Despite the apparent complexity of this process, we found that first-time users were able to successfully complete their review according to the guidelines without any additional support. Most of the complexity occurs behind-the-scenes, and either aligns with the responsibilities of the program manager (e.g., defining milestones and their dependencies) or can be automated (e.g., calculating the total expected utility). Thus, grantmakers and policymakers can have confidence in the user friendliness of expected utility forecasting.

How Can NSF or NIH Run an Experiment on Expected Utility Forecasting?

An initial pilot study could be conducted by NSF or NIH by adding a short, non-binding expected utility forecasting component to a selection of review panels. In addition to the evaluation of traditional criteria, reviewers would be asked to predict the success and impact of select milestones for the proposals assigned to them. The rest of the review process and the final funding decisions would be made using the traditional criteria.

Afterwards, study facilitators could take the expected utility forecasting results and construct an alternate portfolio of proposals that would have been funded had that approach been used, and compare the two portfolios. Such a comparison would yield valuable insights into whether, and how, the types of proposals selected by each approach differ, and whether their use leads to different considerations arising during review. Additionally, a pilot assessment of reviewers’ prediction accuracy could be conducted by asking program officers to assess milestone achievement and study impact upon completion of funded projects.
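The portfolio comparison described above could be as simple as ranking the same proposals two ways and looking at where the funded sets differ. The following is a minimal sketch with made-up proposal labels and scores. The fixed number of awards and the rank-and-cut selection rule are simplifying assumptions, not a description of how any agency selects awards.

```python
def select_portfolio(scores: dict[str, float], n_funded: int) -> set[str]:
    """Fund the n_funded highest-scoring proposals."""
    ranked = sorted(scores, key=scores.get, reverse=True)
    return set(ranked[:n_funded])


# Hypothetical panel results for five proposals under each approach.
traditional_scores = {"A": 4.5, "B": 4.1, "C": 3.9, "D": 3.2, "E": 2.8}
expected_utility = {"A": 6.0, "B": 3.5, "C": 9.2, "D": 12.4, "E": 2.1}

traditional = select_portfolio(traditional_scores, n_funded=3)
alternate = select_portfolio(expected_utility, n_funded=3)

funded_by_both = traditional & alternate
only_traditional = traditional - alternate
only_alternate = alternate - traditional

print("Funded by both approaches:", sorted(funded_by_both))
print("Funded only under traditional criteria:", sorted(only_traditional))
print("Funded only under expected utility:", sorted(only_alternate))
```

The proposals that appear in only one of the two sets are the natural starting point for asking how the approaches differ.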

Findings and Recommendations

Reviewers in our study were new to the expected utility forecasting process and gave generally positive reactions. In their feedback, reviewers said that they appreciated how the framing of the questions prompted them to think about the proposals in a different way and pushed them to ground their assessments with quantitative forecasts. The focus on just three review criteria–probability of success, scientific impact, and social impact–was seen as a strength because it simplified the process, disentangled feasibility from impact, and eliminated biased metrics. Overall, reviewers found this new approach interesting and worth investigating further.

In designing this pilot and analyzing the results, we identified several important considerations for planning such a review process. While complex, engaging with these considerations tended to provide value by making implicit project details explicit and encouraging clear definition and communication of evaluation criteria to reviewers. Two key examples are defining the proposal milestones and creating impact scoring systems. In both cases, reducing ambiguities in terms of the goals that are to be achieved, developing an understanding of how outcomes depend on one another, and creating interpretable and resolvable criteria for assessment will help ensure that the desired information is solicited from reviewers.

Questions for Further Study

Our pilot only simulated the individual review phase of grant proposals and did not simulate a full review committee. The typical review process at a funding agency consists of first, individual evaluations by assigned reviewers, then discussion of those evaluations by the whole review committee, and finally, the submission of final scores from all members of the committee. This is similar to the Delphi method, a structured process for eliciting forecasts from a panel of experts, so we believe that it would work well with expected utility forecasting. The primary change would therefore be in the definition and approach for eliciting criterion scores, rather than the structure of the review process. Nevertheless, future implementations may uncover additional considerations that need to be addressed or better ways to incorporate forecasting into a panel environment.

Further investigation into how best to define proposal milestones is also needed. This includes questions such as, who should be responsible for determining the milestones? If reviewers are involved, at what part(s) of the review process should this occur? What is the right balance between precision and flexibility of milestone definitions, such that the best outcomes are achieved? How much flexibility should there be in the number of milestones per proposal?

Lastly, more thought should be given to how to define social impact and how to calibrate reviewers’ interpretation of the impact score scale. In our report, we propose a couple of different options for calibrating impact, in addition to describing the one we took in our pilot.

Grantmakers, both public and private, and policymakers are welcome to reach out to our team if they are interested in learning more or receiving assistance in implementing this approach.

Introduction

The fundamental concern of grantmakers, whether governmental or philanthropic, is how to make the best funding decisions. All funding decisions come with inherent uncertainties that may pose risks to the investment. Thus, a certain level of risk-aversion is natural and even desirable in grantmaking institutions, especially federal science agencies which are responsible for managing taxpayer dollars. However, without risk, there is no reward, so the trade-off must be balanced. In mathematics and economics, expected utility is the common metric assumed to underlie all rational decision making. Expected utility has two components: the probability of an outcome occurring if an action is taken and the value of that outcome, which roughly corresponds with risk and reward. Thus, expected utility would seem to be a logical choice for evaluating science funding proposals.
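In symbols, the textbook definition can be written as follows. The notation here is illustrative and only restates the two components named above: $a$ is an action (such as funding a proposal), $o_i$ are its possible outcomes, $P(o_i \mid a)$ their probabilities, and $U(o_i)$ their values.

$$
\mathrm{EU}(a) = \sum_{i} P(o_i \mid a)\, U(o_i)
$$

For a single milestone that either succeeds with probability $p$ and yields value $U$, or fails and yields nothing, this reduces to $\mathrm{EU} = p \cdot U$.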

In the debates around funding innovation though, expected utility has largely flown under the radar compared to other ideas. Nevertheless, Chiara Franzoni and Paula Stephan have proposed using expected utility in peer review. Building off of their paper, the Federation of American Scientists (FAS) developed a detailed framework for how to implement expected utility into a peer review process. We chose to frame the review criteria as forecasting questions, since determining the expected utility of a proposal inherently requires making some predictions about the future. Forecasting questions also have the added benefit of being resolvable–i.e., the true outcome can be determined after the fact and compared to the prediction–which provides a learning opportunity for reviewers to improve their abilities and identify biases. In addition to forecasting, we incorporated other unique features, like an exponential scale for scoring impact, that we believe help reduce biases against risky proposals.

With the theory laid out, we conducted a small pilot in fall of 2023. The pilot was run in collaboration with Metaculus, a crowd forecasting platform and aggregator, to leverage their expertise in designing resolvable forecasting questions and to use their platform to collect forecasts from reviewers. The purpose of the pilot was to test the mechanics of this approach in practice, see if there are any additional considerations that need to be thought through, and surface potential issues that need to be solved for. We were also curious if there would be any interesting or unexpected results that arise based on how we chose to calculate impact and total expected utility. It is important to note that this pilot was not an experiment, so we did not have a control group to compare the results of the review with.

Since FAS is not a grantmaking institution, we did not have a ready supply of traditional grant proposals to use. Instead, we used a set of two-page research proposals for Focused Research Organizations (FROs) that we had sourced through separate advocacy work in that area.1 With the proposal authors’ permission, we recruited a cohort of twenty subject matter experts to each review one of five proposals. For each proposal, we defined two research milestones in consultation with the proposal authors. Reviewers were asked to make three forecasts for each milestone:

- The probability of success;

- The scientific impact, conditional on success; and

- The social impact, conditional on success.

Reviewers submitted their forecasts on Metaculus’ platform; in a separate form they provided explanations for their forecasts and responded to questions about their experience and impression of this new approach to proposal evaluation. (See Appendix A for details on the pilot study design.)

Insights from Reviewer Feedback

Overall, reviewers liked the framing and criteria provided by the expected utility approach, while their main critique was of the structure of the research proposals. Excluding critiques of the research proposal structure, which are unlikely to apply to an actual grant program, two thirds of the reviewers expressed positive opinions of the review process and/or thought it was worth pursuing further given drawbacks with existing review processes. Below, we delve into the details of the feedback we received from reviewers and their implications for future implementation.

Feedback on Review Criteria

Disentangling Impact from Feasibility

Many of the reviewers said that this model prompted them to think differently about how they assess the proposals and that they liked the new questions. Reviewers appreciated that the questions focused their attention on what they think funding agencies really want to know and nothing more: “can it occur?” and “will it matter?” This approach explicitly disentangles impact from feasibility: “Often, these two are taken together, and if one doesn’t think it is likely to succeed, the impact is also seen as lower.” Additionally, the emphasis on big picture scientific and social impact “is often missing in the typical review process.” Reviewers also liked that this approach eliminates what they consider biased metrics, such as the principal investigator’s reputation, track record, and “excellence.”

Reducing Administrative Burden

The small set of questions was seen as more efficient and less burdensome on reviewers. One reviewer said, “I liked this approach to scoring a proposal. It reduces the effort to thinking about perceived impact and feasibility.” Another reviewer said, “On the whole it seems a worthwhile exercise as the current review processes for proposals are onerous.”

Quantitative Forecasting

Reviewers saw benefits to being asked to quantify their assessments, but also found it challenging at times. A number of reviewers enjoyed taking a quantitative approach and thought that it helped them be more grounded and explicit in their evaluations of the proposals. However, some reviewers were concerned that it felt like guesswork and expressed low confidence in their quantitative assessments, primarily due to proposals lacking details on their planned research methods, which is an issue discussed in the section “Feedback on Proposals.” Nevertheless, some of these reviewers still saw benefits to taking a quantitative approach: “It is interesting to try to estimate probabilities, rather than making flat statements, but I don’t think I guess very well. It is better than simply classically reviewing the proposal [though].” Since not all academics have experience making quantitative predictions, we expect that there will be a learning curve for those new to the practice. Forecasting is a skill that can be learned though, and we think that with training and feedback, reviewers can become better, more confident forecasters.

Defining Social Impact

Of the three types of questions that reviewers were asked to answer, the question about social impact seemed the hardest for reviewers to interpret. Reviewers noted that they would have liked more guidance on what was meant by social impact and whether that included indirect impacts. Since questions like these are ultimately subjective, the “right” definition of social impact and what types of outcomes are considered most valuable will depend on the grantmaking institution, their domain area, and their theory of change, so we leave this open to future implementers to clarify in their instructions.

Calibrating Impact

While the impact score scale (see Appendix A) defines the relative difference in impact between scores, it does not define the absolute impact conveyed by a score. For this reason, a calibration mechanism is necessary to provide reviewers with a shared understanding of the use and interpretation of the scoring system. Note that this is a challenge that rubric-based peer review criteria used by science agencies also face. Discussion and aggregation of scores across a review committee helps align reviewers and average out some of this natural variation.2

To address this, we surveyed a small, separate set of academics in the life sciences about how they would score the social and scientific impact of the average NIH R01 grant, which many life science researchers apply to and review proposals for. We then provided the average scores from this survey to reviewers to orient them to the new scale and help them calibrate their scores.

One reviewer suggested an alternative approach: “The other thing I might change is having a test/baseline question for every reviewer to respond to, so you can get a feel for how we skew in terms of assessing impact on both scientific and social aspects.” One option would be to ask reviewers to score the social and scientific impact of the average grant proposal for a grant program that all reviewers would be familiar with; another would be to ask reviewers to score the impact of the average funded grant for a specific grant program, which could be more accessible for new reviewers who have not previously reviewed grant proposals. A third option would be to provide all reviewers on a committee with one or more sample proposals to score and discuss, in a relevant and shared domain area.
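To illustrate what a shared baseline question could reveal, the sketch below measures how far each reviewer's baseline score sits from the panel average. The reviewer labels and scores are made up. The second function, which subtracts that skew from a reviewer's other scores, is one possible use of the information and is not something proposed in the text.

```python
from statistics import mean


def baseline_offsets(baseline_scores: dict[str, float]) -> dict[str, float]:
    """How far each reviewer's baseline score sits above (+) or below (-) the panel mean."""
    panel_mean = mean(baseline_scores.values())
    return {reviewer: score - panel_mean for reviewer, score in baseline_scores.items()}


def adjust_scores(raw_scores: dict[str, float], offsets: dict[str, float]) -> dict[str, float]:
    """Hypothetical adjustment: remove each reviewer's skew from their raw score."""
    return {reviewer: raw_scores[reviewer] - offsets[reviewer] for reviewer in raw_scores}


# Made-up scores that three reviewers gave the same baseline question.
baseline = {"reviewer_1": 5.0, "reviewer_2": 3.0, "reviewer_3": 4.0}
offsets = baseline_offsets(baseline)

# Made-up raw impact scores for one proposal milestone.
raw = {"reviewer_1": 7.0, "reviewer_2": 5.0, "reviewer_3": 6.0}
adjusted = adjust_scores(raw, offsets)
print(offsets)
print(adjusted)
```

In this example the three reviewers disagree on the raw score but agree once their baseline skew is removed. Whether to apply such an adjustment or simply share the offsets with the committee is a design choice for implementers.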

When deciding on an approach for calibration, a key consideration is the specific resolution criteria that are being used — i.e., the downstream measures of impact that reviewers are being asked to predict. One option, which was used in our pilot, is to predict the scores that a comparable, but independent, panel of reviewers would give the project some number of years following its successful completion. For a resolution criterion like this one, collecting and sharing calibration scores can help reviewers get a sense for not just their own approach to scoring, but also those of their peers.

Making Funding Decisions

In scoring the social and scientific impact of each proposal, reviewers were asked to assess the value of the proposal to society or to the scientific field. That alone would be insufficient to determine whether a proposal should be funded though, since it would need to be compared with other proposals in conjunction with its feasibility. To do so, we calculated the total expected utility of each proposal (see Appendix C). In a real funding scenario, this final metric could then be used to compare proposals and determine which ones get funded. Additionally, unlike a traditional scoring system, the expected utility approach allows for the detailed comparison of portfolios — including considerations like the expected proportion of milestones reached and the range of likely impacts.
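To make the portfolio-level comparison concrete, the sketch below summarizes two made-up portfolios. The expected proportion of milestones reached is the average of the forecast probabilities, which holds whether or not the milestones depend on one another. The total shown simply sums probability times impact value for each milestone, which assumes independent outcomes and is not the calculation in Appendix C. All names and numbers are hypothetical.

```python
def portfolio_summary(milestones: list[tuple[float, float]]) -> dict[str, float]:
    """Summarize a portfolio given (probability of success, impact value) pairs."""
    if not milestones:
        raise ValueError("portfolio must contain at least one milestone")
    probabilities = [p for p, _ in milestones]
    return {
        "expected_proportion_reached": sum(probabilities) / len(probabilities),
        "total_expected_utility": sum(p * impact for p, impact in milestones),
    }


# Made-up portfolios: one cautious, one ambitious.
cautious = [(0.9, 4.0), (0.8, 4.0), (0.7, 8.0)]
ambitious = [(0.5, 16.0), (0.3, 64.0), (0.1, 128.0)]

cautious_summary = portfolio_summary(cautious)
ambitious_summary = portfolio_summary(ambitious)
print(cautious_summary)
print(ambitious_summary)
```

Here the ambitious portfolio is expected to reach far fewer of its milestones yet carries the higher total expected utility, a trade-off that a single traditional score would not expose.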

In our pilot, reviewers were not informed that we would be doing this additional calculation based on their submissions. As a result, one reviewer thought that the questions they were asked failed to include other important questions, like “should it occur?” and “is it worth the opportunity cost?” Though these questions were not asked of reviewers explicitly, we believe that they would be answered once the expected utility of all proposals is calculated and considered, since the opportunity cost of one proposal would be the expected utility of the other proposals. Since each reviewer only provided input on one proposal, they may have felt like the scores they gave would be used to make a binary yes/no decision on whether to fund that one proposal, rather than being considered as a part of a larger pool of proposals, as it would be in a real review process.

Feedback on Proposals

Missing Information Impedes Forecasting

The primary critique that reviewers expressed was that the research proposals lacked details about their research plans, what methods and experimental protocols would be used, and what preliminary research the author(s) had done so far. This hindered their ability to properly assess the technical feasibility of the proposals and their probability of success. A few reviewers expressed that they also would have liked to have had a better sense of who would be conducting the research and each team member’s responsibilities. These issues arose because the FRO proposals used in our pilot had not originally been submitted for funding purposes, and thus lacked the requirements of traditional grant proposals, as we noted above. We assume this would not be an issue with proposals submitted to actual grantmakers.3

Improving Milestone Design

A few reviewers pointed out that some of the proposal milestones were too ambiguous or were not worded specifically enough, such that there were ways that researchers could technically say that they had achieved the milestone without accomplishing the spirit of its intent. This made it more challenging for reviewers to assess milestones, since they weren’t sure whether to focus on the ideal (i.e., more impactful) interpretation of the milestone or to account for these “loopholes.” Moreover, loopholes skew the forecasts, since they increase the probability of achieving a milestone, while lowering the impact of doing so if it is achieved through a loophole.

One reviewer suggested, “I feel like the design of milestones should be far more carefully worded – or broken up into sub-sentences/sub-aims, to evaluate the feasibility of each. As the questions are currently broken down, I feel they create a perverse incentive to create a vaguer milestone, or one that can be more easily considered ‘achieved’ for some ‘good enough’ value of achieved.” For example, they proposed that one of the proposal milestones, “screen a library of tens of thousands of phage genes for enterobacteria for interactions and publish promising new interactions for the field to study,” could be expanded to

- “Generate a library of tens of thousands of genes from enterobacteria, expressed in E. coli

- “Validate their expression under screenable conditions

- “Screen the library for their ability to impede phage infection with a panel of 20 type phages

- “Publish …

- “Store and distribute the library, making it as accessible to the broader community”

We agree with the need for careful consideration and design of milestones, given that “loopholes” in milestones can detract from their intended impact and make it harder for reviewers to accurately assess their likelihood. In our theoretical framework for this approach, we identified three potential parties that could be responsible for defining milestones: (1) the proposal author(s), (2) the program manager, with or without input from proposal authors, or (3) the reviewers, with or without input from proposal authors. This critique suggests that the first approach of allowing proposal authors to be the sole party responsible for defining proposal milestones is vulnerable to being gamed, and the second or third approach would be preferable. Program managers who take on the task of defining milestones should have enough expertise to think through the different potential ways of fulfilling a milestone and make sure that they are sufficiently precise for reviewers to assess.

Benefits of Flexibility in Milestones

Some flexibility in milestones may still be desirable, especially with respect to the actual methodology, since experimentation may be necessary to determine the best technique to use. For example, speaking about the feasibility of a different proposal milestone – “demonstrate that Pro-AG technology can be adapted to a single pathogenic bacterial strain in a 300 gallon aquarium of fish and successfully reduce antibiotic resistance by 90%” – a reviewer noted that

“The main complexity and uncertainty around successful completion of this milestone arises from the native fish microbiome and whether a CRISPR delivery tool can reach the target strain in question. Due to the framing of this milestone, should a single strain be very difficult to reach, the authors could simply switch to a different target strain if necessary. Additionally, the mode of CRISPR delivery is not prescribed in reaching this milestone, so the authors have a host of different techniques open to them, including conjugative delivery by a probiotic donor or delivery by engineered bacteriophage.”

Peer Review Results

Sequential Milestones vs. Independent Outcomes

In our expected utility forecasting framework, we defined two different ways that a proposal could structure its outcomes: as sequential milestones where each additional milestone builds off of the success of the previous one, or as independent outcomes where the success of one is not dependent on the success of the other(s). For proposals with sequential milestones in our pilot, we would expect the probability of success of milestone 2 to be less than the probability of success of milestone 1 and for the opposite to be true of their impact scores. For proposals with independent outcomes, we do not expect there to be a relationship between the probability of success and the impact scores of milestones 1 and 2. There are different equations for calculating the total expected utility, depending on the relationship between outcomes (see Appendix C).
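The equations in Appendix C are not reproduced in this section, so the sketch below shows only one plausible way the two cases could differ and should not be read as the framework's actual formulas. It assumes that each forecast probability is the overall chance of reaching that milestone, and that in the sequential case each milestone's impact value is cumulative (it already includes the value of the milestones before it). All function names and numbers are hypothetical.

```python
def total_eu_independent(milestones: list[tuple[float, float]]) -> float:
    """Independent outcomes: each (probability, impact value) pair contributes on its own."""
    return sum(p * impact for p, impact in milestones)


def total_eu_sequential(milestones: list[tuple[float, float]]) -> float:
    """Sequential milestones, in order, with cumulative impact values.

    The chance that milestone k is the last one reached is p_k minus p_(k+1),
    so each cumulative impact value is weighted by that difference.
    """
    total = 0.0
    for k, (p, impact) in enumerate(milestones):
        p_next = milestones[k + 1][0] if k + 1 < len(milestones) else 0.0
        if p_next > p:
            raise ValueError("a later sequential milestone cannot be more likely than an earlier one")
        total += (p - p_next) * impact
    return total


# Made-up forecasts: milestone 1 at 60% with impact value 8, milestone 2 at 30% with impact value 32.
forecasts = [(0.6, 8.0), (0.3, 32.0)]
eu_if_independent = total_eu_independent(forecasts)
eu_if_sequential = total_eu_sequential(forecasts)
print(eu_if_independent, eu_if_sequential)
```

Under these assumptions, treating cumulative impact scores as if they were independent would count the first milestone's value twice whenever both milestones succeed, which is why the categorization matters for the final number.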

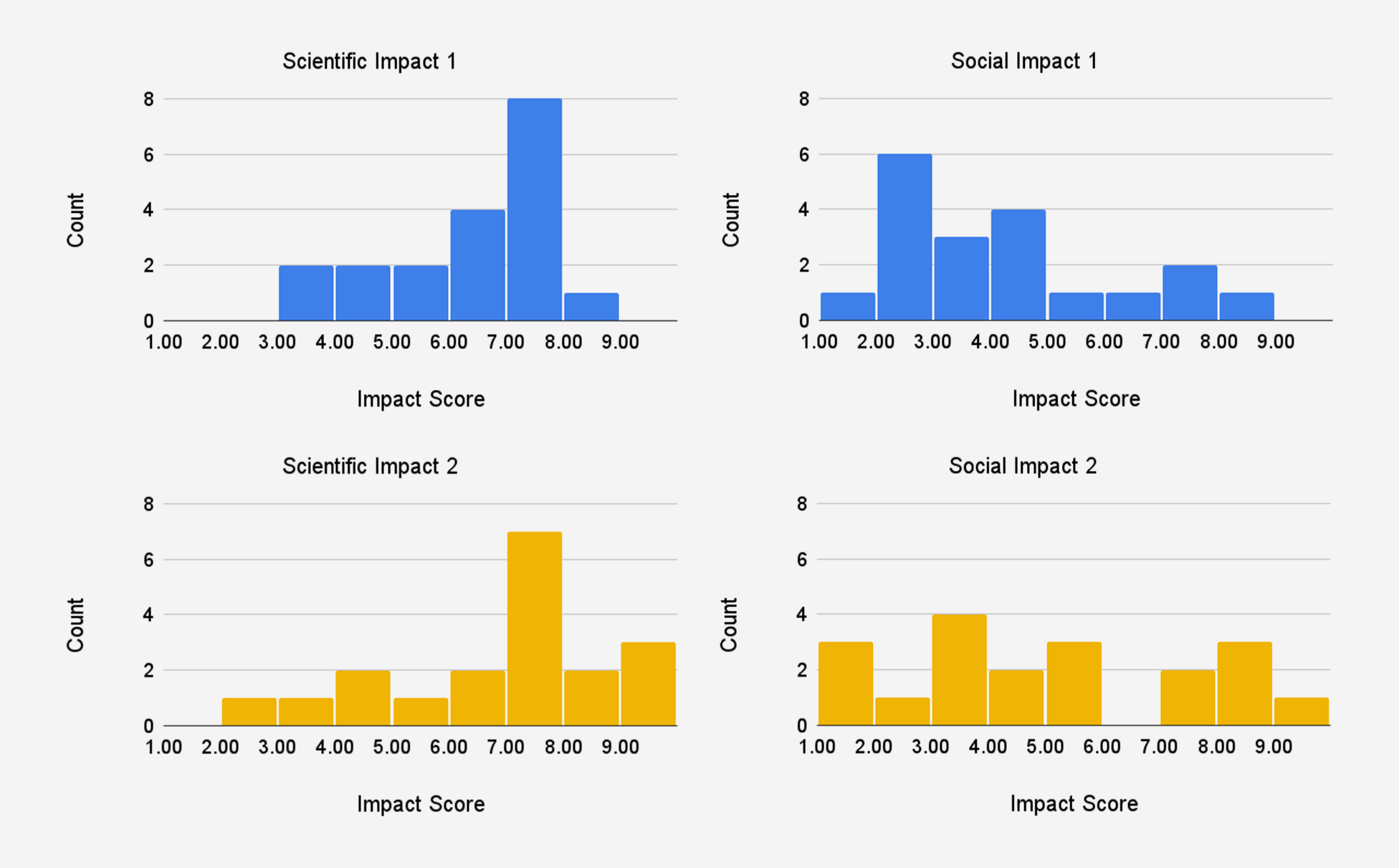

We categorized each proposal in our study by whether it had sequential milestones or independent outcomes. This information was not shared with reviewers. Table 1 presents the average reviewer forecasts for each proposal. In general, milestones received higher scientific impact scores than social impact scores, which makes sense given the primarily academic focus of research proposals. For proposals 1 to 3, the probability of success of milestone 2 was roughly half of the probability of success of milestone 1; reviewers also gave milestone 2 higher scientific and social impact scores than milestone 1. This is consistent with our categorization of proposals 1 to 3 as sequential milestones.

Further Discussion on Designing and Categorizing Milestones

We originally categorized proposal 4’s milestones as sequential, but one reviewer gave milestone 2 a lower scientific impact score than milestone 1 and two reviewers gave it a lower social impact score. One reviewer also gave milestone 2 roughly the same probability of success as milestone 1. This suggests that proposal 4’s milestones can’t be considered strictly sequential.

The two milestones for proposal 4 were

- Milestone 1: Develop a tool that is able to perturb neurons in C. elegans and record from all neurons simultaneously, automated w/ microfluidics, and

- Milestone 2: Develop a model of the C. elegans nervous system that can predict what every neuron will do when stimulating one neuron with R2 > 0.8

The reviewer who gave milestone 2 a lower scientific impact score explained: “Given the wording of the milestone, I do not believe that if the scientific milestone was achieved, it would greatly improve our understanding of the brain.” Unlike proposals 1-3, in which milestone 2 was a scaled-up or improved-upon version of milestone 1, these milestones represent fundamentally different categories of output (general-purpose tool vs specific model). Thus, despite the necessity of milestone 1’s tool for achieving milestone 2, the reviewer’s response suggests that the impact of milestone 2 was being considered separately rather than cumulatively.

To properly address this case of sequential milestones with different types of outputs, we recommend that for all sequential milestones, later milestones should be explicitly defined as inclusive of prior milestones. In the above example, this would imply redefining milestone 2 as “Complete milestone 1 and develop a model of the C. elegans nervous system…” This way, reviewers know to include the impact of milestone 1 in their assessment of the impact of milestone 2.

To help ensure that reviewers are aligned with program managers in how they interpret the proposal milestones (if reviewers aren’t directly involved in defining them), we suggest either informing reviewers of how program managers are categorizing the proposal outputs so they can conduct their review accordingly, or allowing reviewers to decide the category (and thus how the total expected utility is calculated), whether individually, collectively, or both.

We chose to use only two of the goals that proposal authors provided because we wanted to standardize the number of milestones across proposals. However, this may have provided an incomplete picture of the proposals’ goals, and thus an incomplete assessment of the proposals. We recommend that future implementations be flexible and allow the number of milestones to be determined based on each proposal’s needs. This would also help accommodate one reviewer’s suggestion that some milestones should be broken down into intermediary steps.

Importance of Reviewer Explanations

As one can tell from the above discussion, reviewers’ explanations of their forecasts were crucial to understanding how they interpreted the milestones. Reviewers’ explanations varied in length and detail, but the most insightful responses broke down their reasoning into detailed steps and addressed (1) ambiguities in the milestone and how they chose to interpret ambiguities if they existed, (2) the state of the scientific field and the maturity of different techniques that the authors propose to use, and (3) factors that improve the likelihood of success versus potential barriers or challenges that would need to be overcome.

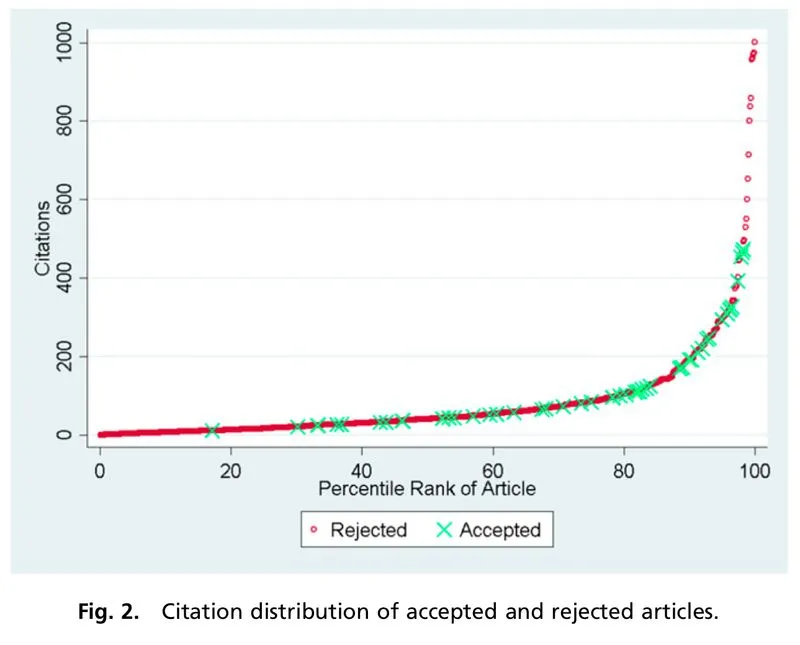

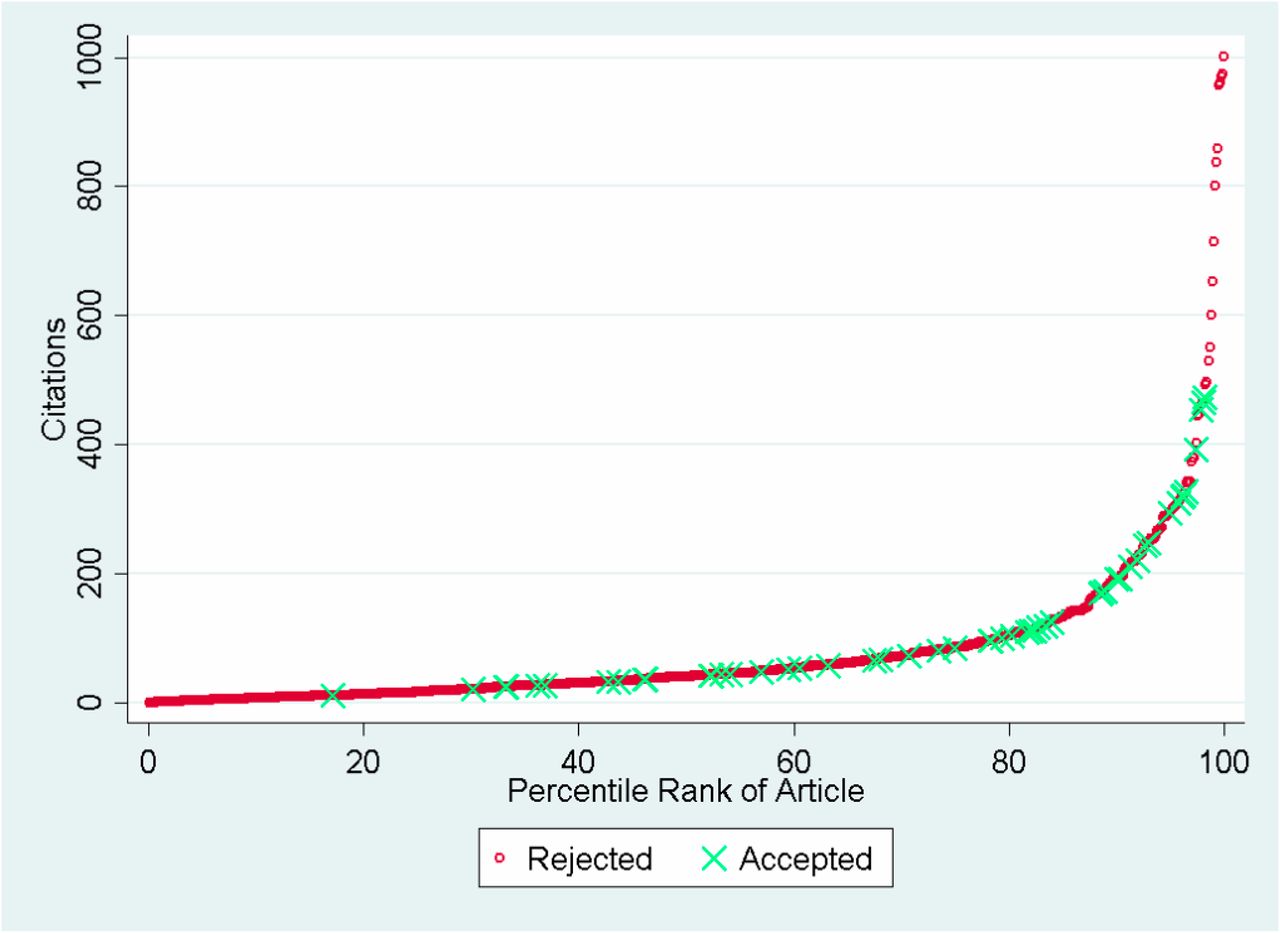

Exponential Impact Scales Better Reflect the Real Distribution of Impact

The distribution of NIH and NSF proposal peer review scores tends to be skewed such that most proposals are rated above the center of the scale and there are few proposals rated poorly. However, other markers of scientific impact, such as citations (even with all of their imperfections), tend to suggest a long tail of studies with high impact. This discrepancy suggests that traditional peer review scoring systems are not well-structured to capture the nonlinearity of scientific impact, resulting in score inflation. The aggregation of scores at the top end of the scale also means that very negative scores have a greater impact than very positive scores when averaged together, since there’s more room between the average score and the bottom end of the scale. This can generate systemic bias against more controversial or risky proposals.

In our pilot, we chose to use an exponential scale with a base of 2 for impact to better reflect the real distribution of scientific impact. Using this exponential impact scale, we conducted a survey of a small pool of academics in the life sciences about how they would rate the impact of the average funded NIH R01 grant. They responded with an average scientific impact score of 5 and an average social impact score of 3, which are much lower on our scale compared to traditional peer review scores⁴, suggesting that the exponential scale may be beneficial for avoiding score inflation and bunching at the top. In our pilot, the distribution of scientific impact scores was centered higher than 5, but still less skewed than NIH peer review scores for significance and innovation typically are. This partially reflects the fact that proposals were expected to be funded at one to two orders of magnitude more than NIH R01 proposals are, so impact should also be greater. The distribution of social impact scores exhibits a much wider spread and lower center.

Conclusion

In summary, expected utility forecasting presents a promising approach to improving the rigor of peer review and quantitatively defining the risk-reward profile of science proposals. Our pilot study suggests that this approach can be quite user-friendly for reviewers, despite its apparent complexity. Further study into how best to integrate forecasting into panel environments, define proposal milestones, and calibrate impact scales will help refine future implementations of this approach.

More broadly, we hope that this pilot will encourage more grantmaking institutions to experiment with innovative funding mechanisms. Reviewers in our pilot were more open-minded and quick-to-learn than one might expect and saw significant value in this unconventional approach. Perhaps this should not be so much of a surprise given that experimentation is at the heart of scientific research.

Grantmakers, both public and private, and policymakers interested in learning more or receiving assistance in implementing this approach are welcome to reach out to our team.

Acknowledgements

Many thanks to Jordan Dworkin for being an incredible thought partner in designing the pilot and providing meticulous feedback on this report. Your efforts made this project possible!

Appendix A: Pilot Study Design

Our pilot study consisted of five proposals for life science-related Focused Research Organizations (FROs). These proposals were solicited from academic researchers by FAS as part of our advocacy for the concept of FROs. As such, these proposals were not originally intended as proposals for direct funding, and did not have as strict content requirements as traditional grant proposals typically do. Researchers were asked to submit one- to two-page proposals discussing (1) their research concept, (2) the motivation and its expected social and scientific impact, and (3) the rationale for why this research cannot be accomplished through traditional funding channels and thus requires a FRO to be funded.

Permission was obtained from proposal authors to use their proposals in this study. We worked with proposal authors to define two milestones for each proposal that reviewers would assess: one that they felt confident that they could achieve and one that was more ambitious but that they still thought was feasible. In addition, due to the brevity of the proposals, we included an additional 1-2 pages of supplementary information and scientific context. Final drafts of the milestones and supplementary information were provided to authors to edit and approve. Because this pilot study could not provide any actual funding to proposal authors, it was not possible to solicit full length research proposals from proposal authors.

We recruited four to six reviewers for each proposal based on their subject matter expertise. Potential participants were recruited over email with a request to help review a FRO proposal related to their area of research. They were informed that the review process would be unconventional but were not informed of the study’s purpose. Participants were offered a small monetary compensation for their time.

Confirmed participants were sent instructions and materials for the review process on the same day and were asked to complete their review by the same deadline a month and a half later. Reviewers were told to assume that, if funded, each proposal would receive $50 million in funding over five years to conduct the research, consistent with the proposed model for FROs. Each proposal had two technical milestones, and reviewers were asked to answer the following questions for each milestone:

- Assuming that the proposal is funded by 2025, will the milestone be achieved before 2031?

- What will be the average scientific impact score, as judged in 2032, of accomplishing the milestone?

- What will be the average social impact score, as judged in 2032, of accomplishing the milestone?

The impact scoring system was explained to reviewers as follows:

Please consider the following in determining the impact score: the current and expected long-term social or scientific impact of a funded FRO’s outputs if a funded FRO accomplishes this milestone before 2030.

The impact score we are using ranges from 1 (low) to 10 (high). It is base 2 exponential, meaning that a proposal that receives a score of 5 has double the impact of a proposal that receives a score of 4, and quadruple the impact of a proposal that receives a score of 3. In a small survey we conducted of SMEs in the life sciences, they rated the scientific and social impact of the average NIH R01 grant — a federally funded research grant that provides $1-2 million for a 3-5 year endeavor — on this scale to be 5.2 ± 1.5 and 3.1 ± 1.3, respectively. The median scores were 4.75 and 3.00, respectively.

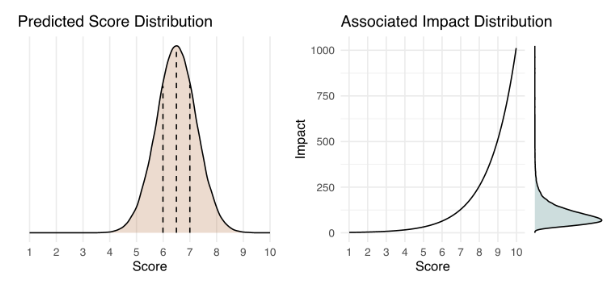

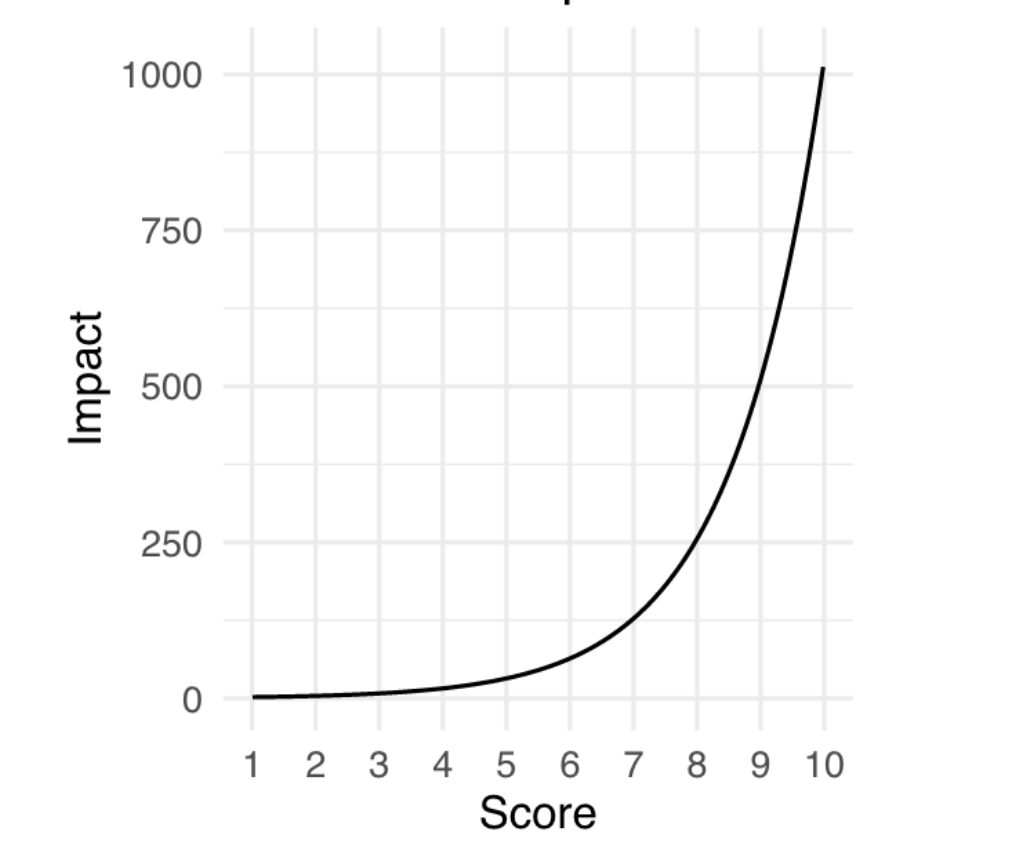

Below is an example of how a predicted impact score distribution (left) would translate into an actual impact distribution (right). You can try it out yourself with this interactive version (in the menu bar, click Runtime > Run all) to get some further intuition on how the impact score works. Please note that this is meant solely for instructive purposes, and the interface is not designed to match Metaculus’ interface.

The choice of an exponential impact scale reflects the tendency in science for a small number of research projects to have an outsized impact. For example, studies have shown that the relationship between the number of citations for a journal article and its percentile rank scales exponentially.

Scientific impact aims to capture the extent to which a project advances the frontiers of knowledge, enables new discoveries or innovations, or enhances scientific capabilities or methods. Though each is imperfect, one could consider citations of papers, patents on tools or methods, or users of software or datasets as proxies of scientific impact.

Social impact aims to capture the extent to which a project contributes to solving important societal problems, improving well-being, or advancing social goals. Some proxy metrics that one might use to assess a project’s social impact are the value of lives saved, the cost of illness prevented, the number of job-years of employment generated, economic output in terms of GDP, or the social return on investment.

You may consider any or none of these proxy metrics as a part of your assessment of the impact of a FRO accomplishing this milestone.

Reviewers were asked to submit their forecasts on Metaculus’ website and to provide their reasoning in a separate Google form. For question 1, reviewers were asked to respond with a single probability. For questions 2 and 3, reviewers were asked to provide their median, 25th percentile, and 75th percentile predictions, in order to generate a probability distribution. Metaculus’ website also included information on the resolution criteria of each question, which provided guidance to reviewers on how to answer the question. Individual reviewers were blind to other reviewers’ responses until after the submission deadline, at which point the aggregated results of all of the responses were made public on Metaculus’ website.

Additionally, in the Google form, reviewers were asked to answer a survey question about their experience: “What did you think about this review process? Did it prompt you to think about the proposal in a different way than when you normally review proposals? If so, how? What did you like about it? What did you not like? What would you change about it if you could?”

Some participants did not complete their review. We received 19 complete reviews in the end, with each proposal receiving three to six reviews.

Study Limitations

Our pilot study had certain limitations that should be noted. Since FAS is not a grantmaking institution, we could not completely reproduce the same types of research proposals that a grantmaking institution would receive nor the entire review process. We will highlight these differences in comparison to federal science agencies, which are our primary focus.

- Review Process: There are typically two phases to peer review at NIH and NSF. First, at least three individual reviewers with relevant subject matter expertise are assigned to read and evaluate a proposal independently. Then, a larger committee of experts is convened. There, the assigned reviewers present the proposal and their evaluation, and then the committee discusses and determines the final score for the proposal. Our pilot study only attempted to replicate the first phase of individual review.

- Sample Size: In our pilot, the sample size was quite small, since only five proposals were reviewed, and they were all in different subfields, so different reviewers were assigned to each proposal. NIH and NSF peer review committees typically focus on one subfield and review on the order of twenty or so proposals. The number of reviewers per proposal–three to six–in our pilot was consistent with the number of reviewers typically assigned to a proposal by NIH and NSF. Peer review committees are typically larger, ranging from six to twenty people, depending on the agency and the field.

- Proposals: The FRO proposals plus supplementary information were only two to four pages long, which is significantly shorter than the 12 to 15 page proposals that researchers submit for NIH and NSF grants. Proposal authors were asked to generally describe their research concept, but were not explicitly required to describe the details of the research methodology they would use or any preliminary research. Some proposal authors volunteered more information on this for the supplementary information, but not all authors did.

- Grant Size: For the FRO proposals, reviewers were asked to assume that funded proposals would receive $50 million over five years, which is one to two orders of magnitude more funding than typical NIH and NSF proposals.

Appendix B: Feedback on Study-Specific Implementation

In addition to feedback about the review framework, we received feedback on how we implemented our pilot study, specifically the instructions and materials for the review process and the submission platforms. This feedback isn’t central to this paper’s investigation of expected utility forecasting, but we wanted to include it in the appendix for transparency.

Reviewers were sent instructions over email that outlined the review process and linked to Metaculus’ webpage for this pilot. On Metaculus’ website, reviewers could find links to the proposals on FAS’ website and the supplementary information in Google docs. Reviewers were expected to read those first and then read through the resolution criteria for each forecasting question before submitting their answers on Metaculus’ platform. Reviewers were asked to submit the explanations behind their forecasts in a separate Google form.

Some reviewers had no problem navigating the review process and found Metaculus’ website easy to use. However, feedback from other reviewers suggested that the different components necessary for the review were spread out over too many different websites, making it difficult for reviewers to keep track of where to find everything they needed.

Some had trouble locating the different materials and pieces of information needed to conduct the review on Metaculus’ website. Others found it confusing to have to submit their forecasts and explanations in two separate places. One reviewer suggested that the explanation of the impact scoring system should have been included within the instructions sent over email rather than in the resolution criteria on Metaculus’ website so that they could have read it before reading the proposal. Another reviewer suggested that it would have been simpler to submit their forecasts through the same Google form that they used to submit their explanations rather than through Metaculus’ website.

Based on this feedback, we would recommend that future implementations streamline their submission process to a single platform and provide a more extensive set of instructions rather than scattering information across different steps of the review process. Training sessions, which science funding agencies typically conduct, would be a good supplement to written instructions.

Appendix C: Total Expected Utility Calculations

To calculate the total expected utility, we first converted all of the impact scores into utility by raising two to the power of the impact score, since the impact scoring system is base 2 exponential:

$$\text{Utility} = 2^{\text{Impact Score}}.$$

We then were able to average the utilities for each milestone and conduct additional calculations.

To calculate the total utility of each milestone, $u_i$, we averaged the social utility and the scientific utility of the milestone:

$$u_i = \frac{\text{Social Utility} + \text{Scientific Utility}}{2}.$$
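As a minimal sketch of these two conversion steps, the calculation could look like the following in Python. The function names and the example scores are hypothetical and are not taken from the pilot data.

```python
def impact_to_utility(score: float) -> float:
    """Convert a base-2 exponential impact score into linear utility."""
    return 2.0 ** score


def milestone_utility(scientific_score: float, social_score: float) -> float:
    """Average the scientific and social utilities of a single milestone."""
    scientific_utility = impact_to_utility(scientific_score)
    social_utility = impact_to_utility(social_score)
    return (scientific_utility + social_utility) / 2.0


# Hypothetical example: scientific impact score 6, social impact score 4.
# Utilities are 2^6 = 64 and 2^4 = 16, so the milestone utility is 40.
u_example = milestone_utility(scientific_score=6.0, social_score=4.0)
print(u_example)
```

Because the averaging happens in utility space rather than score space, the higher of the two scores dominates the result.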

The total expected utility (TEU) of a proposal with two milestones can be calculated according to the general equation:

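$$\text{TEU} = u_1 P(m_1 \cap \text{not } m_2) + u_2 P(m_2 \cap \text{not } m_1) + (u_1 + u_2) P(m_1 \cap m_2),$$

where $P(m_i)$ represents the probability of success of milestone $i$ and

$$P(m_1 \cap \text{not } m_2) = P(m_1) - P(m_1 \cap m_2),$$

$$P(m_2 \cap \text{not } m_1) = P(m_2) - P(m_1 \cap m_2).$$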

For sequential milestones, milestone 2 is defined as inclusive of milestone 1 and wholly dependent on the success of milestone 1, so this means that

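$$u_{2,\text{seq}} = u_1 + u_2,$$

$$P(m_2) = P_{\text{seq}}(m_1 \cap m_2),$$

$$P(m_2 \cap \text{not } m_1) = 0.$$

Thus, the total expected utility of sequential milestones can be simplified as

$$\text{TEU} = u_1 P(m_1) - u_1 P(m_2) + u_{2,\text{seq}} P(m_2)$$

$$\text{TEU} = u_1 P(m_1) + (u_{2,\text{seq}} - u_1) P(m_2).$$

This can be generalized to

$$\text{TEU}_{\text{seq}} = \sum_i \left(u_{i,\text{seq}} - u_{i-1,\text{seq}}\right) P(m_i),$$

where $u_{1,\text{seq}} = u_1$ and $u_{0,\text{seq}} = 0$.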
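The generalized sequential formula can be sketched in Python as follows. This is an illustrative sketch only: the function name and the example numbers are hypothetical, and it assumes the utility before the first milestone is zero, so that the first term reduces to the utility of milestone 1 times its probability of success.

```python
from typing import Sequence


def teu_sequential(cumulative_utils: Sequence[float], probs: Sequence[float]) -> float:
    """Total expected utility for sequential milestones.

    cumulative_utils[i] is the utility of milestone i+1 defined as inclusive
    of all prior milestones; probs[i] is its probability of success.
    """
    if len(cumulative_utils) != len(probs):
        raise ValueError("need one probability per milestone")
    total = 0.0
    previous_utility = 0.0  # assumed utility before any milestone is reached
    for utility, prob in zip(cumulative_utils, probs):
        # Each milestone contributes only its incremental utility.
        total += (utility - previous_utility) * prob
        previous_utility = utility
    return total


# Hypothetical example: milestone 1 scored 5 (utility 32) with P = 0.6,
# milestone 2 (inclusive of milestone 1) scored 7 (utility 128) with P = 0.3.
# TEU = 32 * 0.6 + (128 - 32) * 0.3, which is approximately 48.
teu_example = teu_sequential([32.0, 128.0], [0.6, 0.3])
print(teu_example)
```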

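Otherwise, substituting the expressions for $P(m_1 \cap \text{not } m_2)$ and $P(m_2 \cap \text{not } m_1)$ into the general equation, the terms in $P(m_1 \cap m_2)$ cancel and the total expected utility simplifies to

$$\text{TEU} = u_1 P(m_1) + u_2 P(m_2).$$

For independent outcomes, we assume

$$P_{\text{ind}}(m_1 \cap m_2) = P(m_1) P(m_2),$$

so

$$\text{TEU}_{\text{ind}} = u_1 P(m_1) + u_2 P(m_2).$$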

To present the results in Tables 1 and 2, we converted all of the utility values back into the impact score scale by taking the log base 2 of the results.
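The general two-milestone equation and the conversion back to the impact score scale can be sketched as follows. The function names and example values are hypothetical; the sketch evaluates the three disjoint events of the general equation directly, with the joint probability supplied by the caller (for independent outcomes, the product of the two probabilities).

```python
import math


def teu_general(u1: float, u2: float, p1: float, p2: float, p_both: float) -> float:
    """Total expected utility of two milestones from the general equation."""
    p1_only = p1 - p_both  # P(m1 and not m2)
    p2_only = p2 - p_both  # P(m2 and not m1)
    if min(p1_only, p2_only, p_both) < -1e-12:
        raise ValueError("joint probability is inconsistent with p1 and p2")
    return u1 * p1_only + u2 * p2_only + (u1 + u2) * p_both


def utility_to_impact(utility: float) -> float:
    """Convert linear utility back to the base-2 impact score scale."""
    return math.log2(utility)


# Hypothetical independent outcomes: scores 5 and 6 (utilities 32 and 64),
# with probabilities of success 0.5 and 0.25.
u1, u2 = 2.0 ** 5, 2.0 ** 6
p1, p2 = 0.5, 0.25
teu = teu_general(u1, u2, p1, p2, p_both=p1 * p2)
print(teu, utility_to_impact(teu))
```

Reporting the result on the impact score scale keeps it comparable with the scores that reviewers originally submitted.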

Risk and Reward in Peer Review

This article was written as a part of the FRO Forecasting project, a partnership between the Federation of American Scientists and Metaculus. This project aims to conduct a pilot study of forecasting as an approach for assessing the scientific and societal value of proposals for Focused Research Organizations. To learn more about the project, see the press release here. To participate in the pilot, you can access the public forecasting tournament here.

The United States federal government is the single largest funder of scientific research in the world. Thus, the way that science agencies like the National Science Foundation and the National Institutes of Health distribute research funding has a significant impact on the trajectory of science as a whole. Peer review is considered the gold standard for evaluating the merit of scientific research proposals, and agencies rely on peer review committees to help determine which proposals to fund. However, peer review has its own challenges. It is a difficult task to balance science agencies’ dual mission of protecting government funding from being spent on overly risky investments while also being ambitious in funding proposals that will push the frontiers of science, and research suggests that peer review may be designed more for the former rather than the latter. We at FAS are exploring innovative approaches to peer review to help tackle this challenge.

Biases in Peer Review

A frequently echoed concern across the scientific and metascientific community is that funding agencies’ current approach to peer review of science proposals tends to be overly risk-averse, leading to bias against proposals that entail high risk or high uncertainty about the outcomes. Reasons for this conservativeness include reviewer preferences for feasibility over potential impact, contagious negativity, and problems with the way that peer review scores are averaged together.

This concern, alongside studies suggesting that scientific progress is slowing down, has led to a renewed effort to experiment with new ways of conducting peer review, such as golden tickets and lottery mechanisms. While golden tickets and lottery mechanisms aim to complement traditional peer review with alternate means of making funding decisions — namely individual discretion and randomness, respectively — they don’t fundamentally change the way that peer review itself is conducted.

Traditional peer review asks reviewers to assess research proposals based on a rubric of several criteria, which typically include potential value, novelty, feasibility, expertise, and resources. These criteria are given a score based on a numerical scale; for example, the National Institutes of Health uses a scale from 1 (best) to 9 (worst). Reviewers then provide an overall score that need not be calculated in any specific way based on the criteria scores. Next, all of the reviewers convene to discuss the proposal and submit their final overall scores, which may be different from what they submitted prior to the discussion. The final overall scores are averaged across all of the reviewers for a specific proposal. Proposals are then ranked based on their average overall score and funding is prioritized for those ranked before a certain cutoff score, though depending on the agency, some discretion by program administrators is permitted.

The way that this process is designed allows for the biases mentioned at the beginning (reviewer preferences for feasibility, contagious negativity, and averaging problems) to influence funding decisions. First, reviewer discretion in deciding overall scores allows them to weigh feasibility more heavily than potential impact and novelty in their final scores. Second, when evaluations are discussed, reviewers tend to adjust their scores to better align with their peers. This adjustment tends to be greater when correcting in the negative direction than in the positive direction, resulting in a stronger negative bias. Lastly, since funding tends to be quite limited, cutoff scores tend to be quite close to the best score. This means that even if almost all of the reviewers rate a proposal positively, one very negative review can potentially bring the average below the cutoff.
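The averaging problem can be illustrated with a small numerical sketch. The scores and the cutoff below are hypothetical and chosen only to show the asymmetry on a scale where 1 is best and 9 is worst; they are not drawn from any agency's data.

```python
from statistics import mean

# Hypothetical cutoff: proposals whose mean score is at or below this value
# (closer to 1, the best score) are prioritized for funding.
CUTOFF = 2.5


def is_prioritized(scores, cutoff=CUTOFF):
    """Return True if the mean reviewer score meets the funding cutoff."""
    return mean(scores) <= cutoff


# Five reviewers all rate the proposal positively: mean 2, prioritized.
unanimous = [2, 2, 2, 2, 2]

# One very negative review moves the mean to 3.2, past the cutoff.
one_dissent = [2, 2, 2, 2, 8]

# One very positive review can only move a mean of 3 to 2.6, which still
# misses the cutoff, because the best score is so close to the cutoff.
one_champion = [3, 3, 3, 3, 1]

for label, scores in [("unanimous", unanimous),
                      ("one dissent", one_dissent),
                      ("one champion", one_champion)]:
    print(label, mean(scores), is_prioritized(scores))
```

In this sketch a single dissenting reviewer can shift the mean by 1.2 points, while a single champion can shift it by only 0.4 points.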

Designing a New Approach to Peer Review

In 2021, the researchers Chiara Franzoni and Paula Stephan published a working paper arguing that risk in science results from three sources of uncertainty: uncertainty of research outcomes, uncertainty of the probability of success, and uncertainty of the value of the research outcomes. To comprehensively and consistently account for these sources of uncertainty, they proposed a new expected utility approach to peer review evaluations, in which reviewers are asked to

- Identify the primary expected outcome of a research proposal and, optionally, a potential secondary outcome;

- Assess the probability, between 0 and 1, of achieving each expected outcome (P(j)); and

- Assess the value of achieving each expected outcome (u_j) on a numerical scale (e.g., 0 to 100).

From this, the total expected utility can be calculated for each proposal and used to rank them.¹ This systematic approach addresses the first bias we discussed by limiting the extent to which reviewers’ preferences for more feasible proposals would impact the final score of each proposal.

We at FAS see a lot of potential in Franzoni and Stephan’s expected utility approach to peer review, and it inspired us to design a pilot study using a similar approach that aims to chip away at the other biases in review.

To explore potential solutions for negativity bias, we are taking a cue from forecasting by complementing the peer review process with a resolution and scoring process. This means that at a set time in the future, reviewers’ assessments will be compared to a ground truth based on the actual events that have occurred (i.e., was the outcome actually achieved and, if so, what was its actual impact?). Our theory is that if implemented in peer review, resolution and scoring could incentivize reviewers to make better, more accurate predictions over time and provide empirical estimates of a committee’s tendency to provide overly negative (or positive) assessments, thus potentially countering the effects of contagion during review panels and helping more ambitious proposals secure support.

Additionally, we sought to design a new numerical scale for assessing the value or impact of a research proposal, which we call an impact score. Typically, peer reviewers are free to interpret the numerical scale for each criterion as they wish; Franzoni and Stephan’s design also did not specify how the numerical scale for the value of the research outcome should work. We decided to use a scale ranging from 1 (low) to 10 (high) that was base 2 exponential, meaning that a proposal that receives a score of 5 has double the impact of a proposal that receives a score of 4, and quadruple the impact of a proposal that receives a score of 3.

The choice of an exponential scale reflects the tendency in science for a small number of research projects to have an outsized impact (Figure 2), and provides more room at the top end of the scale for reviewers to increase the rating of the proposals that they believe will have an exceptional impact. We believe that this could help address the last bias we discussed, which is that currently, bad scores are more likely to pull a proposal’s average below the cutoff than good scores are likely to pull a proposal’s average above the cutoff.

Source: Siler, Lee, and Bero (2014)

We are now piloting this approach on a series of proposals in the life sciences that we have collected for Focused Research Organizations, a new type of non-profit research organization designed to tackle challenges that neither academia nor industry is incentivized to work on. The pilot study was developed in collaboration with Metaculus, a forecasting platform and aggregator, and will be hosted on their website. We welcome subject matter experts in the life sciences (or anyone interested!) to participate in making forecasts on these proposals here. Stay tuned for the results of this pilot, which we will publish in a report early next year.

FY24 NDAA AI Tracker

As both the House and Senate gear up to vote on the National Defense Authorization Act (NDAA), FAS is launching this live blog post to track all proposals around artificial intelligence (AI) that have been included in the NDAA. In this rapidly evolving field, these provisions indicate how AI now plays a pivotal role in our defense strategies and national security framework. This tracker will be updated following major updates.

Senate NDAA. This table summarizes the provisions related to AI from the version of the Senate NDAA that advanced out of committee on July 11. Links to the section of the bill describing these provisions can be found in the “section” column. Provisions that have been added in the manager’s package are in red font. Updates from Senate Appropriations committee and the House NDAA are in blue.

House NDAA. This table summarizes the provisions related to AI from the version of the House NDAA that advanced out of committee. Links to the section of the bill describing these provisions can be found in the “section” column.

Funding Comparison. The following tables compare the funding requested in the President’s budget to funds that are authorized in current House and Senate versions of the NDAA. All amounts are in thousands of dollars.

On Senate Approps Provisions

The Senate Appropriations Committee generally provided what was requested in the White House’s budget regarding artificial intelligence (AI) and machine learning (ML), or exceeded it. AI was one of the top-line takeaways from the Committee’s summary of the defense appropriations bill. Particular attention has been paid to initiatives that cut across the Department of Defense, especially the Chief Digital and Artificial Intelligence Office (CDAO) and a new initiative called Alpha-1. The Committee is supportive of Joint All-Domain Command and Control (JADC2) integration and the recommendations of the National Security Commission on Artificial Intelligence (NSCAI).

On House final bill provisions

Like the Senate Appropriations bill, the House of Representatives’ final bill generally provided or exceeded what was requested in the White House budget regarding AI and ML. However, in contrast to the Senate Appropriations bill, AI was not a particularly high-priority takeaway in the House’s summary. The only note about AI in the House Appropriations Committee’s summary of the bill was in the context of digital transformation of business practices. Program increases were spread throughout the branches’ Research, Development, Test, and Evaluation budgets, with a particular concentration of increased funding for the Defense Innovation Unit’s AI-related budget.

How to Replicate the Success of Operation Warp Speed

Summary

Operation Warp Speed (OWS) was a public-private partnership that produced COVID-19 vaccines in the unprecedented timeline of less than one year. This unique success among typical government research and development (R&D) programs is attributed to OWS’s strong public-private partnerships, effective coordination, and command leadership structure. Policy entrepreneurs, leaders of federal agencies, and issue advocates will benefit from understanding what policy interventions were used and how they can be replicated. Those looking to replicate this success should evaluate the stakeholder landscape and state of the fundamental science before designing a portfolio of policy mechanisms.

Challenge and Opportunity

Development of a vaccine to protect against COVID-19 began when China first shared the genetic sequence in January 2020. In May, the Trump Administration announced OWS to dramatically accelerate development and distribution. Through the concerted efforts of federal agencies and private entities, a vaccine was ready for the public in January 2021, beating the previous record for vaccine development by about three years. OWS released over 63 million doses within one year, and to date more than 613 million doses have been administered in the United States. By many accounts, OWS was the most effective government-led R&D effort in a generation.

Policy entrepreneurs, leaders of federal agencies, and issue advocates are interested in replicating similarly rapid R&D to solve problems such as climate change and domestic manufacturing. But not all challenges are suited for the OWS treatment. Replicating its success requires an understanding of the unique factors that made OWS possible, which are addressed in Recommendation 1. With this understanding, the mechanisms described in Recommendation 2 can be valuable interventions when used in a portfolio or individually.

Plan of Action

Recommendation 1. Assess whether (1) the majority of existing stakeholders agree on an urgent and specific goal and (2) the fundamental research is already established.

Criterion 1. The majority of stakeholders (including relevant portions of the public, federal leaders, and private partners) agree on an urgent and specific goal.

The OWS approach is most appropriate for major national challenges that are self-evidently important and urgent. Experts in different aspects of the problem space, including agency leaders, should assess the problem to set ambitious and time-bound goals. For example, OWS was conceptualized in April and announced in May, and had the specific goal of distributing 300 million vaccine doses by January.

Leaders should begin by assessing the stakeholder landscape, including relevant portions of the public, other federal leaders, and private partners. This assessment must include adoption forecasts that consider the political, regulatory, and behavioral contexts. Community engagement—at this stage and throughout the process—should inform goal-setting and program strategy. Achieving ambitious goals will require commitment from multiple federal agencies and the presidential administration. At this stage, understanding the private sector is helpful, but these stakeholders can be motivated further with mechanisms discussed later. Throughout the program, leaders must communicate the timeline and standards for success with expert communities and the public.

| Example Challenge: Building Capability for Domestic Rare Earth Element Extraction and Processing |

| Rare earth elements (REEs) have unique properties that make them valuable across many sectors, including consumer electronics manufacturing, renewable and nonrenewable energy generation, and scientific research. The U.S. relies heavily on China for the extraction and processing of REEs, and the U.S. Geological Survey reports that 78% of U.S. REE imports came from China between 2017 and 2020. A break in this supply chain, particularly if China enacted export controls as a foreign policy tool, would significantly disrupt the production of consumer electronics and energy generation equipment critical to the U.S. economy. Export controls on REEs would create an urgent national problem, making it suitable for an OWS-like effort to build capacity for domestic extraction and processing. |

Criterion 2. Fundamental research is already established, and the goal requires R&D to advance for a specific use case at scale.

Efforts modeled after OWS should require fundamental research to advance or scale into a product. For example, two of the four vaccine platforms selected for development in OWS were mRNA and replication-defective live vector platforms, which had been extensively studied despite never being used in FDA-licensed vaccines. Research was advanced enough to give leaders confidence to bet on these platforms as candidates for a COVID-19 vaccine. To mitigate risk, two more-established platforms were also selected.

Technology readiness levels (TRLs) are maturity level assessments of technologies for government acquisition. This framework can be used to assess whether a candidate technology should be scaled with an OWS-like approach. A TRL of at least five means the technology was successfully demonstrated in a laboratory environment as part of an integrated or partially integrated system. In evaluating and selecting candidate technologies, risk is unavoidable, but decisions should be made based on existing science, data, and demonstrated capabilities.

| Example Challenge: Scaling Desalination to Meet Changing Water Demand |

| Increases in efficiency and conservation efforts have largely kept the U.S.’s total water use flat since the 1980s, but drought and climate variability are challenging our water systems. Desalination, a well-understood process to turn seawater into freshwater, could help address our changing water supply. However, all current desalination technologies applied in the U.S. are energy intensive and may negatively impact coastal ecosystems. Advanced desalination technologies—such as membrane distillation, advanced pretreatment, and advanced membrane cleaning, all of which are at technology readiness levels of 5–6—would reduce the total carbon footprint of a desalination plant. An OWS for desalination could increase the footprint of efficient and low-carbon desalination plants by speeding up development and commercialization of advanced technologies. |

Recommendation 2: Design a program with mechanisms most needed to achieve the goal: (1) establish a leadership team across federal agencies, (2) coordinate federal agencies and the private sector, (3) activate latent private-sector capacities for labor and manufacturing, (4) shape markets with demand-pull mechanisms, and (5) reduce risk with diversity and redundancy.

Design a program using a combination of the mechanisms below, informed by the stakeholder and technology assessment. The organization of R&D, manufacturing, and deployment should follow an agile methodology in which more risk than normal is accepted. The program framework should include criteria for success at the end of each sprint. During OWS, vaccine candidates were advanced to the next stage based on the preclinical or early-stage clinical trial data on efficacy; the potential to meet large-scale clinical trial benchmarks; and criteria for efficient manufacturing.

Mechanism 1: Establish a leadership team across federal agencies

Establish an integrated command structure co-led by a chief scientific or technical advisor and a chief operating officer, a small oversight board, and leadership from federal agencies. The team should commit to operate as a single cohesive unit despite individual affiliations. Since many agencies have limited experience in collaborating on program operations, a chief operating officer with private-sector experience can help coordinate and manage agency biases. Ideally, the team should have decision-making authority and report directly to the president. Leaders should thoughtfully delegate tasks, give appropriate credit for success, hold themselves and others accountable, and empower others to act.

The OWS team was led by personnel from the Department of Health and Human Services (HHS), the Department of Defense (DOD), and the vaccine industry. It included several HHS offices at different stages: the Centers for Disease Control and Prevention (CDC), the Food and Drug Administration (FDA), the National Institutes of Health (NIH), and the Biomedical Advanced Research and Development Authority (BARDA). This structure combined expertise in science and manufacturing with the power and resources of the DOD. The team assigned clear roles to agencies and offices to establish a chain of command.

| Example Challenge: Managing Wildland Fire with Uncrewed Aerial Systems (UAS) |

| Wildland fire is a natural and normal ecological process, but the changing climate and our policy responses are causing more frequent, intense, and destructive fires. Reducing harm requires real-time monitoring of fires with better detection technology and modernized equipment such as UAS. Wildfire management is a complex policy and regulatory landscape with functions spanning multiple federal, state, and local entities. Several interagency coordination bodies exist, including the National Wildfire Coordinating Group, Wildland Fire Leadership Council, and the Wildland Fire Mitigation and Management Commission, but most of these bodies rely on consensus-based coordination models. The status quo and historical biases against agencies have created silos of effort and prevented technology from scaling to the level required. An OWS for wildland fire UAS would establish a public-private partnership led by experienced leaders from federal agencies, state and local agencies, and the private sector to advance this technology development. The team would motivate commitment to the challenge across government, academia, nonprofits, and the private sector to deliver technology that meets ambitious goals. Appropriate teams across agencies would be empowered to refocus their efforts for the duration of the challenge. |

Mechanism 2: Coordinate federal agencies and the private sector

Coordinate agencies and the private sector on R&D, manufacturing, and distribution, and assign responsibilities based on core capabilities rather than political or financial considerations. Identify efficiency improvements by mapping processes across the program. This may include accelerating regulatory approval by facilitating communication between the private sector and regulators or by speeding up agency operations. Certain regulations may be suspended entirely if the risks are considered acceptable relative to the urgency of the goal. Coordinators should identify processes that can occur in parallel rather than sequentially. Leaders can work with industry so that operations proceed under the minimum conditions needed to ensure worker and product safety.

The OWS team worked with the FDA to compress traditional approval timelines by simultaneously running certain steps of the clinical trial process. This allowed manufacturers to begin industrial-scale vaccine production before full demonstration of efficacy and safety. The team continuously sent data to the FDA, which completed regulatory procedures in active communication with vaccine companies. Direct lines of communication permitted parallel work streams that significantly reduced the normal vaccine approval timeline.

| Example Challenge: Public Transportation and Interstate Rail |

| Much of the infrastructure across the United States needs expensive repairs, but the U.S. has some of the highest infrastructure construction costs relative to its GDP and some of the longest construction times. A major contributor to cost and time is the approval process and its extensive documentation, such as preparing an environmental impact statement to comply with the National Environmental Policy Act. An OWS-like coordinating body could identify key pieces of national infrastructure eligible for support, particularly near-end-of-lifespan infrastructure or major transportation arteries. Reducing regulatory burden for selected projects could be achieved by coordinating regulatory approval in close collaboration with the Department of Transportation, the Environmental Protection Agency, and state agencies. The program would need to identify and set a precedent for differentiating between expeditable regulations and key regulations, such as structural reviews, that could serve as bottlenecks. |

Mechanism 3: Activate latent private-sector capacities for labor and manufacturing

Activate private-sector capabilities for production, supply chain management, deployment infrastructure, and workforce. Minimize physical infrastructure requirements, establish contracts with companies that have existing infrastructure, and fund construction to expand facilities where necessary. Coordinate with the Department of State to expedite visa approval for foreign talent and borrow personnel from other agencies to fill key roles temporarily. Train staff quickly with boot camps or accelerators. Efforts to build morale and ensure commitment are critical, as staff may need to work holidays or perform beyond normal expectations. Map supply chains, identify critical components, and coordinate supply. Critical supply chain nodes should be managed by a technical expert in close partnership with suppliers. Use the Defense Production Act sparingly to require providers to prioritize contracts for procurement, import, and delivery of equipment and supplies. Map the distribution chain from the manufacturer to the endpoint, actively coordinate each step, and anticipate points of failure.

During OWS, the Army Corps of Engineers oversaw construction projects to expand vaccine manufacturing capacity. Expedited visa approval brought in key technicians and engineers for installing, testing, and certifying equipment. Sixteen DOD staff also served in temporary quality-control positions at manufacturing sites. The program established partnerships between manufacturers and the government to address supply chain challenges. Experts from BARDA worked with the private sector to create a list of critical supplies. With this supply chain mapping, the DOD placed prioritized ratings on 18 contracts using the Defense Production Act. OWS also coordinated with DOD and U.S. Customs to expedite supply import. OWS leveraged existing clinics at pharmacies across the country and shipped vaccines in packages that included all supplies needed for administration, including masks, syringes, bandages, and paper record cards.

| Example Challenge: EV Charging Network |