Open scientific grant proposals to advance innovation, collaboration, and evidence-based policy

Grant writing is a significant part of a scientist’s work. While time-consuming, this process generates a wealth of innovative ideas and in-depth knowledge. However, much of this valuable intellectual output, particularly from the roughly 70% of proposals that go unfunded, remains unseen and underutilized. The default secrecy of scientific proposals rests on many valid concerns, yet it represents a significant loss of potential progress and a departure from government priorities around openness and transparency in science policy. Making grant proposals publicly accessible could turn them into a rich resource for collaboration, learning, and scientific discovery, enhancing the overall impact and efficiency of scientific research.

We recommend that funding agencies implement a process by which researchers can opt to make their grant proposals publicly available. This would enhance transparency in research, encourage collaboration, and optimize the public-good impacts of the federal funding process.

Details

Scientists spend a great deal of time, energy, and effort writing applications for grant funding. Writing grants has been estimated to take roughly 15% of a researcher’s working hours and involves putting together an extensive assessment of the state of knowledge, identifying key gaps in understanding that the researcher is well-positioned to fill, and producing a detailed roadmap for how they plan to fill that knowledge gap over a span of (typically) two to five years. At major federal funding agencies like the National Institutes of Health (NIH) and National Science Foundation (NSF), the success rate for research grant applications tends to fall in the range of 20%–30%.

The upfront labor required of scientists to pursue funding, together with the low success rates of applications, has led some to estimate that ~10% of scientists’ working hours are “wasted.” Other scholars argue that the act of grant writing is itself a valuable and generative process that produces spillover benefits by incentivizing research effort and informing future scholarship. Under either viewpoint, one approach to reducing the “waste” and greatly increasing the benefits of grant writing is to encourage the release of proposals, both funded and unfunded, as public goods, unlocking the knowledge, frontier ideas, and roadmaps for future research that are currently hidden from view.
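An estimate of that order follows from a simple calculation on the figures above. This is a minimal sketch, assuming (as a simplification the source does not state) that writing time is spread evenly across proposals and that only time spent on unfunded proposals counts as "wasted." With 15% of hours spent writing and success rates of 20%–30%, the unfunded share is 15% × (70%–80%), or about 10.5%–12% of working hours. The function name `unfunded_share` is hypothetical.

```python
def unfunded_share(writing_share: float, success_rate: float) -> float:
    """Fraction of all working hours spent on proposals that go unfunded.

    Simplifying assumption: writing effort is spread evenly across proposals,
    so the unfunded share of writing time equals the rejection rate.
    """
    if not (0.0 <= writing_share <= 1.0 and 0.0 <= success_rate <= 1.0):
        raise ValueError("shares and rates must lie in [0, 1]")
    return writing_share * (1.0 - success_rate)


if __name__ == "__main__":
    # 15% of hours spent writing; NIH/NSF success rates of roughly 20%-30%.
    for rate in (0.20, 0.25, 0.30):
        print(f"success rate {rate:.0%}: {unfunded_share(0.15, rate):.1%} of working hours")
```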

The idea of grant proposals being made public is a sensitive one. Indeed, there are valid reasons for keeping proposals confidential, particularly when they contain intellectual property or proprietary information, or when they are in the early stages of development. However, these reasons do not apply to all proposals, and many potential concerns apply only for a limited time. Therefore, neither full disclosure nor full secrecy is optimal; a more flexible approach that encourages researchers to choose when and how to share their proposals could yield significant benefits with minimal risk.

The potential benefits to the scientific community and science funders include:

- Encouraging collaboration by making promising unfunded ideas and shared interests discoverable by disparate researchers

- Supporting early-career scientists by giving them access to a rich collection of successful and unsuccessful proposals from which to learn

- Facilitating cutting-edge science-of-science research to unlock policy-relevant knowledge about research programs and scientific grantmaking

- Allowing for philanthropic crowd-in by creating a transparent and searchable marketplace of grant proposals that can attract additional or alternative funding

- Promoting efficiency in the research planning and budgeting process by increasing transparency

- Giving scientists, science funders, and the public a view into the whole of early-stage scientific thought, above and beyond the outputs of completed projects.

Recommendations

Federal funding agencies should develop a process to allow and encourage researchers to share their grant proposals publicly, within existing infrastructures for grant reporting (e.g., NIH RePORTER). Sharing should be minimally burdensome and incorporated into existing application frameworks. The process should be flexible, allowing researchers to opt in or out — and to specify other characteristics like embargoes — to ensure applicants’ privacy and intellectual property concerns are mitigated.

The White House Office of Management and Budget (OMB) should develop a framework for publicly sharing grant proposals.

- OMB’s Evidence Team — in partnership with federal funding agencies (e.g., NIH, NSF, NASA, DOE) — should review statutory and regulatory frameworks to determine whether there are legal obstacles to sharing proposal content for extramural grant applications with applicant permission.

- OMB should then issue a memo clarifying the manner in which agencies can make proposals public and directing agencies to develop plans to allow and encourage the public availability of scientific grant proposals, in alignment with the Foundations for Evidence-Based Policymaking Act and the “Memorandum on Restoring Trust in Government Through Scientific Integrity and Evidence-Based Policymaking.”

The NSF should run a focused pilot program to assess opportunities and obstacles for proposal sharing across disciplines.

- NSF’s Division of Institution and Award Support (DIAS) should work with at least three directorates to launch a pilot study assessing applicants’ perspectives on proposal sharing, their perceived risks and concerns, and disciplinary differences in applicants’ views.

- The National Science Board (NSB) should produce a report outlining the findings of the pilot study and the implications for optimal approaches to facilitating public access to grant proposals.

Based on the NSB’s report, the White House Office of Science and Technology Policy (OSTP) and OMB should work with federal funding agencies to refine and implement a proposal-sharing process across agencies.

- OSTP should work with funding agencies to develop a unified application section where researchers can indicate their release preferences. OSTP and the participating agencies should agree on a set of shared parameters to align the request across agencies. For example, the guidelines should establish:

  - A set of embargo options, such that applicants can choose to make their proposal available after, for example, 2, 5, or 10 years

  - Whether the sharing of proposals can be made conditional on acceptance/rejection

  - When in the application process applicants should be asked to opt in or out, and an approach for allowing applicants to revise their decision following submission

- OMB should include public access for grant proposals as a budget priority, emphasizing its potential benefits for bolstering innovation, efficiency, and government data availability. It should also provide guidance and technical assistance to agencies on how to implement the open grants process and require agencies to provide evidence of their plans to do so.
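The release preferences described above could be captured as a small structured record attached to each application. This is a minimal sketch, assuming a hypothetical `ReleasePreference` record in which the embargo runs from the funding decision date and release can be conditioned on the outcome in one of three ways. None of these names or rules come from an existing agency system; the 2-, 5-, and 10-year options simply mirror the examples above.

```python
from dataclasses import dataclass, replace
from datetime import date
from enum import Enum
from typing import Optional


class Condition(Enum):
    """Hypothetical options for making release conditional on the funding outcome."""
    ALWAYS = "always"
    IF_FUNDED = "if_funded"
    IF_NOT_FUNDED = "if_not_funded"


# Illustrative embargo menu (years after the funding decision); 0 means no embargo.
EMBARGO_OPTIONS = (0, 2, 5, 10)


@dataclass(frozen=True)
class ReleasePreference:
    opt_in: bool
    embargo_years: int = 0
    condition: Condition = Condition.ALWAYS

    def __post_init__(self) -> None:
        # Reject embargo lengths outside the agreed cross-agency menu.
        if self.embargo_years not in EMBARGO_OPTIONS:
            raise ValueError(f"embargo must be one of {EMBARGO_OPTIONS}")

    def release_date(self, decision_date: date, funded: bool) -> Optional[date]:
        """Date the proposal becomes public, or None if it is never released."""
        if not self.opt_in:
            return None
        if self.condition is Condition.IF_FUNDED and not funded:
            return None
        if self.condition is Condition.IF_NOT_FUNDED and funded:
            return None
        year = decision_date.year + self.embargo_years
        try:
            return decision_date.replace(year=year)
        except ValueError:
            # A Feb 29 decision date maps to Feb 28 in a non-leap target year.
            return decision_date.replace(year=year, day=28)


# Revising a decision after submission builds a new, re-validated record.
original = ReleasePreference(opt_in=True, embargo_years=2)
revised = replace(original, embargo_years=5)
```

Making the record immutable means a revised decision produces a new record that passes through the same validation, and an agency could keep both to document the applicant's earlier choice.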

Establish data collaboratives to foster meaningful public involvement

Federal agencies are striving to expand the role of the public, including members of marginalized communities, in developing regulatory policy. At the same time, agencies are considering how to mobilize data of increasing size and complexity to ensure that policies are equitable and evidence-based. However, community engagement has rarely been extended to the process of examining and interpreting data. This is a missed opportunity: community members can offer critical context to quantitative data, ground-truth data analyses, and suggest ways of looking at data that could inform policy responses to pressing problems in their lives. Realizing this opportunity requires a structure for public participation in which community members can expect both support from agency staff in accessing and understanding data and genuine openness to new perspectives on quantitative analysis.

To deepen community involvement in developing evidence-based policy, federal agencies should form Data Collaboratives in which staff and members of the public engage in mutual learning about available datasets and their affordances for clarifying policy problems.

Details

Executive Order 14094 and the Office of Management and Budget’s subsequent guidance memo direct federal agencies to broaden public participation and community engagement in the federal regulatory process. Among the aims of this policy are to establish two-way communications and promote trust between government agencies and the public, particularly members of historically underserved communities. Under the Executive Order, the federal government also seeks to involve communities earlier in the policy process. This new attention to community engagement can seem disconnected from the federal government’s long-standing commitment to evidence-based policy and efforts to ensure that data available to agencies support equity in policy-making; assessing data and evidence is usually considered a job for people with highly specialized, quantitative skills. However, lack of transparency about the collection and uses of data can undermine public trust in government decision-making. Further, communities may have vital knowledge that credentialed experts don’t, knowledge that could help put data in context and make analyses more relevant to problems on the ground.

For the federal government to achieve its goals of broadened participation and equitable data, opportunities must be created for members of the public and underserved communities to help shape how data are used to inform public policy. Data Collaboratives would provide such an opportunity. Data Collaboratives would consist of agency staff and individuals affected by the agency’s policies. Each member of a Data Collaborative would be regarded as someone with valuable knowledge and insight; staff members’ role would not be to explain or educate but to learn alongside community participants. To foster mutual learning, Data Collaboratives would meet regularly and frequently (e.g., every other week) for a year or more.

Each Data Collaborative would focus on a policy problem that an agency wishes to address. The Environmental Protection Agency might, for example, form a Data Collaborative on pollution prevention in the oil and gas sector. Depending on the policy problem, staff from multiple agencies may be involved alongside community participants. The Data Collaborative’s goal would be to surface the datasets potentially relevant to the policy problem, understand how they could inform the problem, and identify their limitations. Data Collaboratives would not make formal recommendations or seek consensus; however, ongoing deliberations about the datasets and their affordances can be expected to create a more robust foundation for the use of data in policy development and the development of additional data resources.

Recommendations

The Office of Management and Budget should

- Establish a government-wide Data Collaboratives program in consultation with the Chief Data Officers Council.

- Work with leadership at federal agencies to identify policy problems that would benefit from consideration by a Data Collaborative. It is expected that deputy administrators, heads of equity and diversity offices, and chief data officers would be among those consulted.

- Hire a full-time director of Data Collaboratives to lead such tasks as coordinating with public participants, facilitating meetings, and ensuring that relevant data resources are available to all collaborative members.

- Ensure agencies’ ability to provide the material support necessary to secure the participation of underrepresented community members in Data Collaboratives, such as stipends, childcare, and transportation.

- Support agencies in highlighting the activities and accomplishments of Data Collaboratives through social media, press releases, open houses, and other means.

Conclusion

Data Collaboratives would move public participation and community engagement upstream in the policy process by creating opportunities for community members to contribute their lived experience to the assessment of data and the framing of policy problems. This would in turn foster two-way communication and trusting relationships between government and the public. Data Collaboratives would also help ensure that data and their uses in federal government are equitable, by inviting a broader range of perspectives on how data analysis can promote equity and where relevant data are missing. Finally, Data Collaboratives would be one vehicle for enabling individuals to participate in science, technology, engineering, math, and medicine activities throughout their lives, increasing the quality of American science and the competitiveness of American industry.

Make publishing more efficient and equitable by supporting a “publish, then review” model

Preprinting – a process in which researchers upload manuscripts to online servers prior to the completion of a formal peer review process – has proven to be a valuable tool for disseminating preliminary scientific findings. This model has the potential to speed up the process of discovery, enhance rigor through broad discussion, support equitable access to publishing, and promote transparency of the peer review process. Yet the model’s use and expansion are limited by a lack of explicit recognition within funding agency assessment practices.

The federal government should take action to support preprinting, preprint review, and “no-pay” publishing models in order to make scholarly publishing of federal outputs more rapid, rigorous, and cost-efficient.

Details

In 2022, the Office of Science and Technology Policy (OSTP)’s “Ensuring Free, Immediate, and Equitable Access to Federally Funded Research” memo, written by Dr. Alondra Nelson, directed federal funding agencies to make the results of taxpayer-supported research immediately accessible to readers at no cost. This important development extended John P. Holdren’s 2013 “Increasing Access to the Results of Federally Funded Scientific Research” memo by covering all federal agencies and removing the 12-month embargo on free access, and it mirrored developments such as the open access provisions of Horizon 2020 in Europe.

One of the key provisions of the Nelson memo is that federal agencies should “allow researchers to include reasonable publication costs … as allowable expenses in all research budgets,” signaling support for the article processing charge (APC) model, in which authors pay to publish. Because APCs must be paid from grant budgets or other funds, this approach creates barriers to equitable publishing for researchers with limited access to funds. Furthermore, leaving the definition of “reasonable costs” open to interpretation creates the risk that an increasing proportion of federal research funds will be siphoned off by publishing costs. In 2022, OSTP estimated that American taxpayers are already paying $390 million to $798 million annually to publish federally funded research.

Without further interventions, these costs are likely to rise, since publishers have historically responded to increasing demand for open access publishing by shifting from a subscription model to one in which authors pay APCs. Between 2010 and 2019, for example, APCs increased by 50 percent.
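To put the 50 percent figure in annual terms, the implied compound growth rate over the nine years from 2010 to 2019 is 1.5^(1/9) − 1, or roughly 4.6 percent per year. The sketch below computes this and, purely for illustration, projects a cost forward under the assumption that it keeps growing at a constant rate. That constant-growth assumption, the five-year horizon, and the function names are illustrative choices, not claims from the source.

```python
def implied_annual_growth(total_increase: float, years: int) -> float:
    """Constant yearly growth rate that compounds to `total_increase` over `years`."""
    return (1.0 + total_increase) ** (1.0 / years) - 1.0


def project(cost: float, annual_growth: float, years: int) -> float:
    """Hypothetical projection assuming growth stays constant (an assumption)."""
    return cost * (1.0 + annual_growth) ** years


rate = implied_annual_growth(0.50, 2019 - 2010)
print(f"implied APC growth: {rate:.1%} per year")  # about 4.6% per year

# Illustration only: OSTP's 2022 range ($ millions) if it grew at that same rate.
for estimate in (390, 798):
    print(estimate, "->", round(project(estimate, rate, 5)), "after 5 years")
```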

The “no pay” model

In May 2023, the European Union’s council of ministers called for a “no pay” academic publishing model, in which costs are paid directly by institutions and funders to ensure equitable access to read and publish scholarship. There are several routes to achieve the no pay model, including transitioning journals to ‘Diamond’ Open Access models, in which neither authors nor readers are charged.

In contrast to models that rely on transforming journal publishing, an alternative approach builds on the burgeoning preprint system. Preprints are manuscripts posted online by authors to a repository, without charge to authors or readers. Over the past decade, their use across the scientific enterprise has grown dramatically, offering unique flexibility and speed to scientists and encouraging dynamic conversation. More recently, preprints have been paired with a new system of preprint peer review. In this model, organizations like Peer Community In, Review Commons, and RR\ID organize expert review of preprints by members of the research community. These reviews are posted publicly, independently of any specific publisher’s or journal’s process.

Despite the growing popularity of this approach, its uptake is limited by a lack of support and incorporation into science funding and evaluation models. Federal action to encourage the “publish, then review” model offers several benefits:

- Research is available sooner, and society benefits more rapidly from new scientific findings. With preprints, researchers share their work with the community months or years ahead of journal publication, allowing others to build off their advances.

- Peer review is more efficient and rigorous because the content of the review reports (though not necessarily the identity of the reviewers) is open. Readers are able to understand the level of scrutiny that went into the review process. Furthermore, an open review process enables anyone in the community to join the conversation and bring in perspectives and expertise that are currently excluded. The review process is also less wasteful because reviews are not discarded when a journal rejects a manuscript, making better use of researchers’ time.

- Taxpayer research dollars are used more effectively. Disentangling transparent fees for dissemination and peer reviews from a publishing market driven largely by prestige would result in lower publishing costs, enabling additional funds to be used for research.

Recommendations

To support preprint-based publishing and equitable access to research:

Congress should

- Commission a report from the National Academies of Sciences, Engineering, and Medicine on benefits, risks, and projected costs to American taxpayers of supporting alternative scholarly publishing approaches, including open infrastructure for the “publish, then review” model.

OSTP should

- Coordinate support for key disciplinary infrastructures and peer review service providers with partnerships, discoverability initiatives, and funding.

- Draft a policy in which agencies require that papers resulting from federal funding are preprinted at or before submission to peer review and updated with each subsequent version; then work with agencies to conduct a feasibility study and stakeholder engagement to understand opportunities (including cost savings) and obstacles to implementation.

Science funding agencies should

- Recognize preprints and public peer review. Following the lead of the National Institutes of Health’s 2017 Guide Notice on Reporting Preprints and Other Interim Research Products, revise grant application and report guidelines to allow researchers to cite preprints. Extend this provision to include publicly accessible reviews they have received and authored. Provide guidance to reviewers on evaluating these outputs as scientific contributions within an applicant’s body of work.

Establish grant supplements for open science infrastructure security

Open science infrastructure (OSI), such as platforms for sharing research products or conducting analyses, is vulnerable to security threats and misappropriation. Because these systems are designed to be inclusive and accessible, they often require few credentials of their users. That same openness, however, puts OSI at risk of attack and misuse. Seeking to provide quality tools to their users, OSI builders devote their often scant funding to addressing these security issues, sometimes delaying other important software work.

To support these teams and allow for timely resolution of security problems, science funders should offer security-focused grant supplements to funded OSI projects.

Details

Existing federal policy and funding programs recognize the importance of security to scholarly infrastructure like OSI. For example, in October 2023, President Biden issued an Executive Order to manage the risks of artificial intelligence (AI) and ensure these technologies are safe, secure, and trustworthy. Also, under the Secure and Trustworthy Cyberspace program, the National Science Foundation (NSF) provides grants to ensure the security of cyberinfrastructure and asks scholars who collect data to plan for its secure storage and sharing. Furthermore, agencies like NSF and the National Institutes of Health (NIH) already offer supplements for existing grants. What is still needed is a mechanism for rapidly disbursing funds to address unanticipated security concerns across scientific domains.

Risks like secure shell (SSH) attacks, data poisoning, and the proliferation of mis/disinformation on OSI threaten the utility, sustainability, and reputation of OSI. These concerns are urgent. New access to powerful generative AI tools, for instance, makes it easy to create disinformation that can convincingly mimic the rigorous science shared via OSI. In fact, increased open access to science can accelerate the proliferation of AI-generated scholarly disinformation by improving the accuracy of the models that generate it.

OSI is commonly funded by grants that afford little support for the maintenance work that could stop misappropriation and security threats. Without financial resources and an explicit commitment to a funder, it is difficult for software teams to prioritize these efforts. To ensure uptake of OSI and its continued utility, these teams must have greater access to financial resources and relevant talent to address these security concerns and norm violations.

Recommendations

Security concerns may be unanticipated and urgent, and they may not align with the timing of calls for research proposals. To provide timely support for OSI facing security risks, executive action should be taken through federal agencies funding science infrastructure (NSF, NIH, NASA, DOE, DOD, NOAA). These agencies should offer research supplements to address OSI misappropriation and security threats. Supplement requests would be subject to internal review by funding agencies but not to peer review, allowing teams to bypass the lengthier review process for a full grant proposal. Research supplements, unlike full grant proposals, would allow researchers to respond nimbly to novel security concerns that arise after they receive their initial funding. Additionally, researchers who provide OSI but are less familiar with security issues may not anticipate all relevant threats when the project is conceived and initial funding is distributed (managers of from-scratch science gateways are one possible example). Supplying funds through supplements when the need arises can protect sensitive data and infrastructure.

These research supplements can be made available to principal investigators and co-principal investigators with active awards. Supplements may be used to support additional or existing personnel, allowing OSI builders to bring new expertise to their teams as necessary. To ensure that funds can address unanticipated security issues in OSI from a variety of scholarly domains, supplement recipients need not be funded under an existing program to explicitly support open science infrastructure (e.g., NSF’s POSE program).

To minimize the administrative burden of review, applications for supplements should be kept short (e.g., no more than five pages, excluding budget) and should include the following:

- A description of the security issue to be addressed

- A convincing argument that the infrastructure has goals of increasing the inclusion, accessibility, and/or transparency of science and that those goals are exacerbating the relevant security threat

- A description of the steps to be taken to address the security issue and a well-supported argument that the funded researchers have the expertise and tools necessary to carry those steps out

- A brief description of the original grant’s scope, making evident that the supplemental funding will support work outside of the original scope

- An explanation as to why a grant supplement is more appropriate for their circumstances than a new full grant application

- A budget for the work
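Because the application is short and has a fixed set of parts, an agency could screen submissions for completeness before internal review. This is a minimal sketch, assuming a hypothetical intake format in which each section name maps to its page count; the section keys and the `check_application` helper are illustrative, and the five-page limit is the example figure given above.

```python
from typing import Dict, List

# Hypothetical keys for the six required components listed above.
REQUIRED_SECTIONS = (
    "security_issue",      # description of the security issue
    "openness_rationale",  # how inclusion/accessibility/transparency goals exacerbate the threat
    "remediation_plan",    # steps to be taken and the team's expertise to carry them out
    "original_scope",      # original grant's scope, showing the new work falls outside it
    "why_supplement",      # why a supplement fits better than a new full proposal
    "budget",
)
MAX_PAGES_EXCLUDING_BUDGET = 5.0


def check_application(sections: Dict[str, float]) -> List[str]:
    """Return a list of problems; an empty list means the application passes intake."""
    problems = [f"missing section: {name}" for name in REQUIRED_SECTIONS if name not in sections]
    # The page limit applies to everything except the budget.
    narrative_pages = sum(pages for name, pages in sections.items() if name != "budget")
    if narrative_pages > MAX_PAGES_EXCLUDING_BUDGET:
        problems.append(
            f"narrative is {narrative_pages:g} pages; limit is {MAX_PAGES_EXCLUDING_BUDGET:g}"
        )
    return problems


example = {"security_issue": 1, "openness_rationale": 1, "remediation_plan": 2,
           "original_scope": 0.5, "why_supplement": 0.5, "budget": 3}
print(check_application(example))  # []
```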

An annual appropriation of $3 million across federal science funders would support 40 supplemental awards of $75,000 each. While the budget needed to address each security issue will vary, this estimate illustrates the reach these supplements could have.
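The arithmetic behind this estimate is a simple division, and the same calculation shows how the number of awards would shift if typical award sizes differ. A minimal sketch; the $50,000 and $100,000 award sizes are hypothetical alternatives, not figures from the proposal.

```python
def awards_funded(annual_budget: int, award_size: int) -> int:
    """Number of whole awards of `award_size` that fit within `annual_budget`."""
    if award_size <= 0:
        raise ValueError("award size must be positive")
    return annual_budget // award_size


BUDGET = 3_000_000  # $3 million per year across federal science funders

print(awards_funded(BUDGET, 75_000))  # 40

# Hypothetical alternative award sizes, to show sensitivity.
for size in (50_000, 100_000):
    print(f"${size:,} awards: {awards_funded(BUDGET, size)}")
```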

Research software like OSI often struggles to find funding for maintenance. These much-needed supplemental funds would ensure that OSI developers can quickly prioritize important security-related work without doing so at the expense of other planned software work. Without this funding, we risk compromising the reputation of open science, consuming precious development resources allocated to other tasks, and degrading OSI users’ experience. Grant supplements to address OSI security threats and misappropriation would help ensure the sustainability of OSI going forward.

Expand capacity and coordination to better integrate community data into environmental governance

Frontline communities bear the brunt of harms created by climate change and environmental pollution, but they also increasingly generate their own data, providing critical social and environmental context often not present in research or agency-collected data. However, community data collectors face many obstacles to integrating this data into federal systems: they must navigate complex local and federal policies within dense legal landscapes, and even when there is interest or demonstrated need, agencies and researchers may lack the capacity to find or integrate this data responsibly.

Federal research and regulatory agencies, as well as the White House, are increasingly supporting community-led environmental justice initiatives, presenting an opportunity to better integrate local and contextualized information into more effective and responsive environmental policy.

The Environmental Protection Agency (EPA) should better integrate community data into environmental research and governance by building internal capacity for recognizing and applying such data, facilitating connections between data communities, and addressing misalignments with data standards.

Details

Community science and monitoring are often overlooked yet vital facets of open science. Community science collaborations and their resulting data have led to historic environmental justice victories that underscore the importance of contextualized community-generated data in environmental problem-solving and evidence-informed policy-making.

Momentum around integrating community-generated environmental data has been building at the federal level for the past decade. In 2016, the report “A Vision for Citizen Science at EPA,” produced by the National Advisory Council for Environmental Policy and Technology (NACEPT), thoroughly diagnosed the need for a clear framework for moving community-generated environmental data and information into governance processes. Since then, EPA has developed additional participatory science resources, including a participatory science vision, policy guidelines, and equipment loan programs. More recently, in 2022, the EPA created an Equity Action Plan in alignment with its 2022–2026 Strategic Plan and established an Office of Environmental Justice and External Civil Rights (OEJECR). And in 2023, as part of the cross-agency Year of Open Science, the National Aeronautics and Space Administration (NASA)’s Transform to Open Science (TOPS) program listed “broadening participation by historically excluded communities” as a requisite part of its strategic objectives.

It is evident that the EPA and research funding agencies like NASA have a strategic and mission-driven interest in collaborating with communities bearing the brunt of environmental and climate injustice to unlock the potential of their data. It is also clear that current methods aren’t working. Communities that collect and use environmental data still must navigate disjointed reporting policies and data standards and face a dearth of resources on how to share data with relevant stakeholders within the federal government. There is a critical lack of capacity and coordination directed at cross-agency integration of community data and the infrastructure that could enable the use of this data in regulatory and policy-making processes.

Recommendations

To build government capacity to integrate community-generated data into environmental governance, the EPA should:

- Create a memorandum of understanding between the EPA’s OEJECR, National Environmental Justice Advisory Council (NEJAC), Office of Management and Budget (OMB), United States Digital Service (USDS), and relevant research agencies, including NASA, the National Oceanic and Atmospheric Administration (NOAA), and the National Science Foundation (NSF), to develop a collaborative framework for building internal capacity for generating and applying community-generated data, as well as managing it to enable its broader responsible reuse.

- Develop and distribute guidance on responsible scientific collaboration with communities that prioritizes ethical open science and data-sharing practices that center community and environmental justice priorities.

- Create a capacity-building program, designed by and with environmental justice and data-collecting communities, focused on building translational and intermediary roles within the EPA that can facilitate connections and responsible sharing between data holders and seekers. Incentivize the application of the aforementioned guidance within federally funded research by recruiting and training program staff to act as translational liaisons situated between the OEJECR, regional EPA offices, and relevant research funding agencies, including NASA, NOAA, and NSF.

To facilitate connections between communities generating data, the EPA should:

- Expand the scope of the current Environmental Information Exchange Network (EN) to include facilitation of environmental data sharing by community-based organizations and community science initiatives.

- Initiate a working group including representatives from data-generating community organizations to develop recommendations on how EN might accommodate community data and how its data governance processes can center community and environmental justice priorities.

- Provide grant funding within the EN earmarked for community-based organizations to support data integration with the EN platform. This could include hiring contractors with technical data management expertise to support data uploading within established standards or to build capacity internally within community-based organizations to collect and manage data according to EN standards.

- Expand the resources available for EN partners that support data quality assurance, advocacy, and sharing, for example by providing technical assistance through regional EPA offices trained through the aforementioned capacity-building program.

To address misaligned data standards, the EPA, in partnership with USDS and the OMB, should:

- Update and promote guidance resources for communities and community-based organizations aiming to apply the data standards EPA uses to integrate data in regulatory decisions.

- Initiate a collaborative co-design process for new data standards that can accommodate community-generated data, with representation from communities who collect environmental data. This may require the creation of maps or crosswalks to facilitate translation between standards, including research data standards, as well as internal capacity to maintain these crosswalks.
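A crosswalk of this kind can be as simple as a table mapping each field in a community dataset to a field in the target standard, together with any unit conversion. This is a minimal sketch, assuming hypothetical field names on both sides (they are not drawn from any actual EPA or Exchange Network schema). Unmapped fields are reported rather than silently dropped, so maintainers can see where the crosswalk needs extending.

```python
from typing import Any, Callable, Dict, List, Tuple

Converter = Callable[[Any], Any]

# Hypothetical crosswalk: community field -> (standard field, value converter).
CROSSWALK: Dict[str, Tuple[str, Converter]] = {
    "site_name": ("monitoring_location_id", str),
    "ozone_ppb": ("ozone_ppm", lambda v: float(v) / 1000.0),  # parts per billion -> per million
    "temp_f": ("air_temp_c", lambda v: round((float(v) - 32.0) * 5.0 / 9.0, 2)),
}


def translate(record: Dict[str, Any]) -> Tuple[Dict[str, Any], List[str]]:
    """Map one community record onto the target standard.

    Returns the translated record and a list of fields the crosswalk does not cover.
    """
    translated: Dict[str, Any] = {}
    unmapped: List[str] = []
    for field, value in record.items():
        if field in CROSSWALK:
            target, convert = CROSSWALK[field]
            translated[target] = convert(value)
        else:
            unmapped.append(field)
    return translated, unmapped


row = {"site_name": "Eastside-03", "ozone_ppb": 42, "temp_f": 77, "notes": "near rail yard"}
print(translate(row))
```

Keeping the crosswalk as data rather than logic is what makes ongoing maintenance manageable: updating a mapping means editing one table entry.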

Community-generated data provides contextualized environmental information essential for evidence-based policymaking and regulation, which in turn reduces wasteful spending by enabling the design of more effective programs. Moreover, healthcare costs for the general public will be reduced if better evidence is used to address pollution, and climate adaptation costs could be reduced if we use more localized and granular data to address pressing environmental and climate issues now rather than in the future.

Our recommendations call for the addition of at least 10 full-time employees for each regional EPA office. The additional positions proposed could fill existing vacancies in newly established offices like the OEJECR. Additional budgetary allocations can also be made to the EPA’s EN to support technical infrastructure alterations and grant-making.

While there is substantial momentum and attention around community environmental data, our proposed capacity stimulus can make existing EPA processes more effective at achieving their mission and support efforts to rebuild trust in agencies that are meant to serve the public.

Expected Utility Forecasting for Science Funding

The typical science grantmaker seeks to maximize their (positive) impact with a limited amount of money. Deciding how to allocate that funding requires them to consider the different dimensions of risk and uncertainty involved in science proposals, as described in foundational work by economists Chiara Franzoni and Paula Stephan. The von Neumann–Morgenstern utility theorem implies that, as long as the grantmaker’s preferences satisfy the theorem’s axioms, there exists for the grantmaker (or for the peer reviewers assessing proposals on their behalf) a utility function whose expected value they will seek to maximize.

Common frameworks for evaluating proposals leave this utility function implicit, often evaluating aspects of risk, uncertainty, and potential value independently and qualitatively. Empirical work has suggested that such an approach may lead to biases, resulting in funding decisions that deviate from grantmakers’ ultimate goals. An expected utility approach to reviewing science proposals aims to make that implicit decision-making process explicit, and thus reduce biases, by asking reviewers to directly predict the probability and value of different potential outcomes. Implementing this approach through forecasting brings two added benefits: (1) a resolution and scoring process that could incentivize reviewers to make more accurate predictions over time and (2) empirical estimates of reviewers’ accuracy and of their tendency to over- or underestimate the value and probability of success of proposals.
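To make the scoring idea concrete, here is a minimal Python sketch that scores one reviewer's resolved probability forecasts. It assumes the Brier score (the mean squared difference between a forecast probability and the 0/1 outcome, a standard proper scoring rule) as the accuracy measure, and the mean signed error as a crude measure of over- or underestimation. Neither is necessarily the rule used by Metaculus or in our pilot, and the function names and data are hypothetical.

```python
from statistics import fmean


def brier_score(forecasts: list[float], outcomes: list[int]) -> float:
    """Mean squared error between forecast probabilities and 0/1 outcomes (lower is better)."""
    if len(forecasts) != len(outcomes) or not forecasts:
        raise ValueError("need equal-length, non-empty inputs")
    return fmean((p - o) ** 2 for p, o in zip(forecasts, outcomes))


def mean_bias(forecasts: list[float], outcomes: list[int]) -> float:
    """Average of forecast minus outcome; positive values suggest overestimating success."""
    if len(forecasts) != len(outcomes) or not forecasts:
        raise ValueError("need equal-length, non-empty inputs")
    return fmean(p - o for p, o in zip(forecasts, outcomes))


# Hypothetical reviewer with four resolved milestone forecasts.
forecasts = [0.9, 0.7, 0.6, 0.2]
outcomes = [1, 0, 1, 0]  # 1 = milestone achieved by the resolution date
print(round(brier_score(forecasts, outcomes), 3))
print(round(mean_bias(forecasts, outcomes), 3))
```

Tracking both numbers per reviewer over many resolved questions is what would let a grantmaker see whether someone is generally accurate and whether they lean optimistic or pessimistic.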

At the Federation of American Scientists, we are currently piloting this approach on a series of life sciences proposals that we have collected for Focused Research Organizations (FROs), a new type of non-profit research organization designed to tackle challenges that neither academia nor industry is incentivized to work on. The pilot study was developed in collaboration with Metaculus, a forecasting platform and aggregator, and is hosted on their website. In this paper, we provide the detailed methodology for the approach that we have developed, which builds upon Franzoni and Stephan’s work, so that interested grantmakers may adapt it for their own purposes. The motivation for developing this approach, and how we believe it may help address biases against risk in traditional peer review processes, are discussed in our article “Risk and Reward in Peer Review”.

Defining Outcomes

To illustrate how an expected utility forecasting approach could be applied to scientific proposal evaluation, let us first imagine a research project consisting of multiple possible outcomes or milestones. In the most straightforward case, the outcomes that could arise are mutually exclusive (i.e., only a single one will be observed). Indexing each outcome with the letter $i$, we can define the expected value of each as the product of its value (or utility, $u_i$) and the probability of it occurring, $P(m_i)$. Because the outcomes in this example are mutually exclusive, the total expected utility (TEU) of the proposed project is the sum of the expected values of the individual outcomes¹:

$$\mathrm{TEU} = \sum_i u_i \, P(m_i).$$
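As a minimal sketch (the function name and numbers are hypothetical), the mutually exclusive case is a dot product of utilities and probabilities. The function also checks that the probabilities of mutually exclusive outcomes do not sum to more than 1, which is a cheap way to catch inconsistent reviewer inputs.

```python
def teu_mutually_exclusive(utilities: list[float], probabilities: list[float]) -> float:
    """TEU = sum_i u_i * P(m_i) for outcomes of which at most one can occur."""
    if len(utilities) != len(probabilities):
        raise ValueError("one probability per outcome is required")
    if any(not 0.0 <= p <= 1.0 for p in probabilities):
        raise ValueError("probabilities must lie in [0, 1]")
    # Mutually exclusive events cannot have a combined probability above 1.
    if sum(probabilities) > 1.0 + 1e-9:
        raise ValueError("mutually exclusive outcomes cannot have probabilities summing above 1")
    return sum(u * p for u, p in zip(utilities, probabilities))


# Hypothetical project with three mutually exclusive outcomes.
print(teu_mutually_exclusive([10.0, 4.0, 1.0], [0.2, 0.3, 0.4]))
```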

However, in most cases, it is easier and more accurate to define the range of outcomes of a research project as a set of primary and secondary outcomes or research milestones that are not mutually exclusive, and can instead occur in various combinations.

For instance, science proposals usually highlight the primary outcome(s) that they aim to achieve, but may also involve important secondary outcome(s) that can be achieved in addition to or instead of the primary goals. Secondary outcomes can be a research method, tool, or dataset produced for the purpose of achieving the primary outcome; a discovery made in the process of pursuing the primary outcome; or an outcome that researchers pivot to pursuing as they obtain new information from the research process. As such, primary and secondary outcomes are not necessarily mutually exclusive. In the simplest scenario with just two outcomes (either two primary or one primary and one secondary), the total expected utility becomes

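$$\mathrm{TEU} = u_1 P(m_1 \cap \neg m_2) + u_2 P(m_2 \cap \neg m_1) + (u_1 + u_2) P(m_1 \cap m_2),$$

$$\mathrm{TEU} = u_1 \left[P(m_1) - P(m_1 \cap m_2)\right] + u_2 \left[P(m_2) - P(m_1 \cap m_2)\right] + (u_1 + u_2) P(m_1 \cap m_2),$$

$$\mathrm{TEU} = u_1 P(m_1) + u_2 P(m_2).$$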

As the number of outcomes increases, the number of joint probability terms increases as well. If the outcomes are independent, however, each joint probability reduces to the product of the probabilities of the individual outcomes. For example,

$$P(m_1 \cap m_2) = P(m_1) \cdot P(m_2).$$
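For more than two outcomes, writing out every joint term by hand quickly becomes unwieldy. The sketch below assumes independent outcomes and additive utilities (as in the two-outcome expression, where achieving both outcomes is worth $u_1 + u_2$). It enumerates all $2^n$ combinations of achieved and missed outcomes and weights each combination's total utility by its probability. The function name and example numbers are hypothetical.

```python
from itertools import product
from math import prod


def teu_by_enumeration(utilities: list[float], probabilities: list[float]) -> float:
    """Expected utility summed over every combination of independent outcomes.

    Assumes (1) outcomes are independent, so a combination's probability is a
    product of P(m_i) or 1 - P(m_i), and (2) utilities add when several outcomes
    occur together, matching the (u1 + u2) term for the joint outcome.
    """
    if len(utilities) != len(probabilities):
        raise ValueError("one probability per outcome is required")
    total = 0.0
    # Each combination flags every outcome as achieved (True) or missed (False).
    for combo in product([True, False], repeat=len(utilities)):
        p_combo = prod(p if hit else 1.0 - p for p, hit in zip(probabilities, combo))
        value = sum(u for u, hit in zip(utilities, combo) if hit)
        total += value * p_combo
    return total


u = [8.0, 3.0]  # hypothetical utilities of two outcomes
p = [0.4, 0.7]  # hypothetical probabilities of achieving each outcome
print(teu_by_enumeration(u, p))
print(sum(ui * pi for ui, pi in zip(u, p)))
```

With additive utilities, the two printed values agree (up to floating-point rounding), because expected value is linear. Full enumeration matters mainly when a combination of outcomes is worth more or less than the sum of its parts.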

On the other hand, milestones are typically designed to build upon one another, such that achieving later milestones necessitates the achievement of prior milestones. In these cases, the value of later milestones typically includes the value of prior milestones: for example, the value of demonstrating a complete pilot of a technology is inclusive of the value of demonstrating individual components of that technology. The total expected utility can thus be defined as the sum of the product of the marginal utility of each additional milestone and its probability of success:

$$\mathrm{TEU} = \sum_i (u_i - u_{i-1}) \, P(m_i),$$

where $u_0 = 0$.
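The sequential case can be sketched in a few lines of Python. The function takes cumulative utilities (each milestone's value includes the value of earlier ones) and multiplies each milestone's marginal utility, its utility minus that of the previous milestone, by its probability of success. Because later milestones require earlier ones, the sketch also rejects probabilities that increase along the sequence. The function name and numbers are hypothetical.

```python
def teu_sequential(cumulative_utilities: list[float], probabilities: list[float]) -> float:
    """TEU = sum_i (u_i - u_{i-1}) * P(m_i), with u_0 = 0.

    cumulative_utilities[k] is the value of reaching milestone k+1, including
    all earlier milestones; probabilities[k] is the probability of reaching it.
    """
    if len(cumulative_utilities) != len(probabilities):
        raise ValueError("one probability per milestone is required")
    # A later milestone requires the earlier ones, so it cannot be more likely.
    if any(later > earlier for earlier, later in zip(probabilities, probabilities[1:])):
        raise ValueError("probabilities of sequential milestones must be non-increasing")
    total, previous = 0.0, 0.0  # previous holds u_{i-1}, starting at u_0 = 0
    for u, p in zip(cumulative_utilities, probabilities):
        total += (u - previous) * p
        previous = u
    return total


# Hypothetical project: demonstrate components (utility 4), then a full pilot (utility 10).
print(teu_sequential([4.0, 10.0], [0.8, 0.5]))
```

As a cross-check, the project stops after the first milestone with probability 0.8 − 0.5 = 0.3 and reaches the second with probability 0.5, so the expected utility is 4 × 0.3 + 10 × 0.5 = 6.2, the same value the marginal-utility sum produces.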

Depending on the science proposal, either of these approaches — or a combination — may make the most sense for determining the set of outcomes to evaluate.

In our FRO Forecasting pilot, we worked with proposal authors to define two outcomes for each of their proposals. Depending on what made the most sense for each proposal, the two outcomes reflected either relatively independent primary and secondary goals, or sequential milestone outcomes that directly built upon one another (though for simplicity, we called all of the outcomes milestones).

Defining Probability of Success

Once the set of potential outcomes has been defined, the next step is to estimate, for each outcome, the probability (between 0% and 100%) that it will be achieved if the proposal is funded. A prediction of 50% indicates the highest level of uncertainty about the outcome, whereas the closer the predicted probability is to 0% or 100%, the more certain the forecaster is that the outcome will or will not occur.
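The claim that a 50% forecast expresses the most uncertainty can be checked with Shannon entropy. We use entropy here purely as an illustration; it is not part of the Franzoni and Stephan framework or our pilot, and the function name is hypothetical.

```python
from math import log2


def binary_entropy(p: float) -> float:
    """Uncertainty, in bits, of a yes/no outcome forecast with probability p."""
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must lie in [0, 1]")
    if p in (0.0, 1.0):
        return 0.0  # a forecast of certainty carries no uncertainty
    return -p * log2(p) - (1 - p) * log2(1 - p)


for p in (0.05, 0.25, 0.5, 0.75, 0.95):
    print(p, round(binary_entropy(p), 3))
```

Entropy peaks at one bit for p = 0.5 and falls symmetrically toward zero as the forecast approaches 0% or 100%.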

Furthermore, Franzoni and Stephan decompose probability of success into two components: the probability that the outcome can actually occur in nature or reality and the probability that the proposed methodology will succeed in obtaining the outcome (conditional on it being possible in nature). The total probability is then the product of these two components:

$$P(m_i) = P_{\text{nature}}(m_i) \cdot P_{\text{proposal}}(m_i)$$

Depending on the nature of the proposal (e.g., more technology-driven, or more theoretical or discovery-driven), each component may be more or less relevant. For example, our forecasting pilot includes a proposal to perform knockout validation of renewable antibodies for 10,000 to 15,000 human proteins; for this project, $P_{\text{nature}}(m_i)$ approaches 1 and $P_{\text{proposal}}(m_i)$ drives the overall probability of success.

Defining Utility

Similarly, the value of an outcome can be separated into its impact on the scientific field and its impact on society at large. Scientific impact aims to capture the extent to which a project advances the frontiers of knowledge, enables new discoveries or innovations, or enhances scientific capabilities or methods. Social impact aims to capture the extent to which a project contributes to solving important societal problems, improving well-being, or advancing social goals.

In both of these cases, determining the value of an outcome entails some subjective preferences, so there is no “correct” choice, at least mathematically speaking. However, proxy metrics may be helpful in considering impact. Though each is imperfect, one could consider citations of papers, patents on tools or methods, or users of methods, tools, and datasets as proxies of scientific impact. For social impact, some proxy metrics that one might consider are the value of lives saved, the cost of illness prevented, the number of job-years of employment generated, economic output in terms of GDP, or the social return on investment.

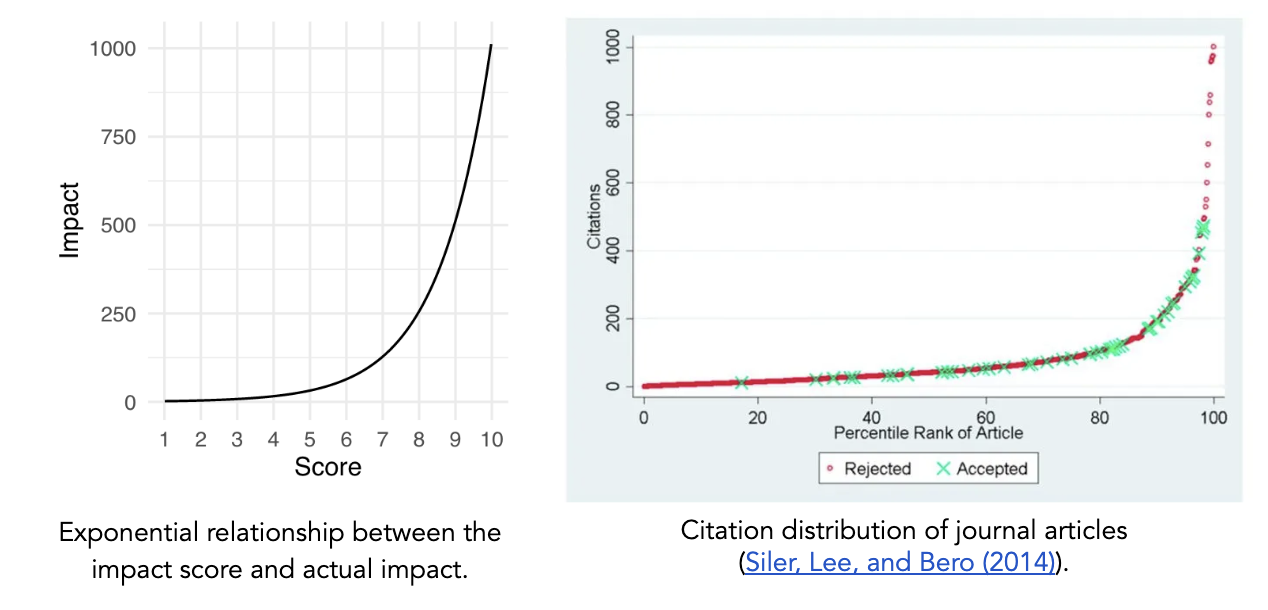

The approach outlined by Franzoni and Stephan asks reviewers to assess scientific and social impact on a linear scale (0-100), after which the values can be averaged to determine the overall impact of an outcome. However, we believe that an exponential scale better captures the tendency in science for a small number of research projects to have an outsized impact and provides more room at the top end of the scale for reviewers to increase the rating of the proposals that they believe will have an exceptional impact.

As such, for our FRO Forecasting pilot, we chose to use a framework in which a simple 1–10 score corresponds to real-world impact via a base 2 exponential scale. In this case, the overall impact score of an outcome can be calculated according to

$$u_i = \log_2\left[2^{\text{science impact of } i} + 2^{\text{social impact of } i}\right] - 1.$$

For an exponential scale with a different base $b$, one would substitute $b$ for 2 in both the exponentials and the logarithm; because the $-1$ term equals $\log_2 2$ and effectively averages the two impact values, it would likewise become $\log_b 2$. Depending on each funder’s specific understanding of impact and the type(s) of proposals they are evaluating, different relationships between scores and utility could be more appropriate.
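The function below is a minimal sketch of this scoring transform as we read it. Each 1–10 score maps to a real-world impact of 2 raised to that score, the two impacts are averaged, and the average is converted back to the score scale with a base-2 logarithm. For base 2, this is algebraically identical to the formula above, since subtracting 1 is the same as dividing by 2 inside the logarithm. The function name and the `base` parameter are our own additions, and the parameter assumes the averaging interpretation for other bases.

```python
from math import log


def outcome_utility(science_score: float, social_score: float, base: float = 2.0) -> float:
    """Combine science and social impact scores on an exponential scale.

    Converts each score to real-world impact (base ** score), averages the two
    impacts, and maps the average back to the score scale. With base 2 this
    equals log2(2**science + 2**social) - 1.
    """
    mean_impact = (base ** science_score + base ** social_score) / 2
    return log(mean_impact, base)


print(outcome_utility(6, 6))  # equal scores map back to (approximately) the same score
print(outcome_utility(9, 3))
```

Under this scale, a science score of 9 and a social score of 3 combine to about 8.02 rather than the linear average of 6, which reflects the rationale that a few exceptional outcomes should dominate.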

To capture reviewers’ uncertainty about their evaluations, we asked them to provide median, 25th percentile, and 75th percentile predictions for impact instead of a single prediction. High uncertainty would be indicated by a wide interval between the 25th and 75th percentiles, while low uncertainty would be indicated by a narrow one.
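Here is a minimal sketch of how such percentile forecasts might be summarized. Each reviewer's stated uncertainty is taken as the width between their 25th and 75th percentiles, and the median of reviewers' medians serves as a simple consensus. Both choices are assumptions made for illustration, not the aggregation used on Metaculus or in our pilot, and the function name and data are hypothetical.

```python
from statistics import median


def summarize_impact_forecasts(forecasts: list[tuple[float, float, float]]) -> tuple[float, list[float]]:
    """Summarize reviewers' (25th percentile, median, 75th percentile) impact scores.

    Returns the median of the reviewers' medians and each reviewer's
    interquartile width (a wider interval means more uncertainty).
    """
    widths = []
    for p25, p50, p75 in forecasts:
        if not p25 <= p50 <= p75:
            raise ValueError("percentiles must be ordered 25th <= median <= 75th")
        widths.append(p75 - p25)
    consensus = median(p50 for _, p50, _ in forecasts)
    return consensus, widths


# Hypothetical scientific-impact forecasts from three reviewers on the 1-10 scale.
reviews = [(5, 6, 7), (4, 6, 9), (6, 7, 7.5)]
print(summarize_impact_forecasts(reviews))
```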

Determining the “But For” Effect of Funding

The above approach aims to identify the highest impact proposals. However, a grantmaker may not want to simply fund the highest impact proposals; rather, they may be most interested in understanding where their funding would make the highest impact, i.e., their “but for” effect. In this case, the grantmaker would want to fund the proposals with the largest difference between the total expected utility of the research proposal if they fund it and if they do not:

$$\text{“But For” Impact} = \mathrm{TEU}(\text{funding}) - \mathrm{TEU}(\text{no funding}).$$

For $\mathrm{TEU}(\text{funding})$, the probability of the outcome occurring with this specific grantmaker’s funding using the proposed approach would still be defined as above:

$$P(m_i \mid \text{funding}) = P_{\text{nature}}(m_i) \cdot P_{\text{proposal}}(m_i),$$

but for $\mathrm{TEU}(\text{no funding})$, reviewers would need to consider the likelihood of the outcome being achieved through other means. This could involve the outcome being realized by other sources of funding, other researchers, other approaches, etc. Here, the probability of success without this specific grantmaker’s funding could be described as

$$P(m_i \mid \text{no funding}) = P_{\text{nature}}(m_i) \cdot P_{\text{other mechanism}}(m_i).$$

In our FRO Forecasting pilot, we assumed that $P_{\text{other mechanism}}(m_i) \approx 0$. The theory of change for FROs is that there exists a set of research problems at the boundary of scientific research and engineering that are not adequately supported by traditional research and development models and are unlikely to be pursued by academia or industry. Thus, in these cases it is plausible to assume that

$$P(m_i \mid \text{no funding}) \approx 0,$$

$$\mathrm{TEU}(\text{no funding}) \approx 0,$$

$$\text{“But For” Impact} \approx \mathrm{TEU}(\text{funding}).$$
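A minimal sketch of the “but for” calculation, assuming the per-outcome utilities are the values to multiply by each probability. For mutually exclusive or additive outcomes, that is each outcome's utility. For sequential milestones, one would pass marginal utilities instead. The function name and numbers are hypothetical.

```python
def but_for_impact(
    utilities: list[float],
    p_nature: list[float],
    p_proposal: list[float],
    p_other: list[float],
) -> float:
    """TEU(funding) - TEU(no funding), summing u_i * P(m_i) over outcomes.

    P(m_i | funding)    = P_nature(m_i) * P_proposal(m_i)
    P(m_i | no funding) = P_nature(m_i) * P_other_mechanism(m_i)
    """
    if not len(utilities) == len(p_nature) == len(p_proposal) == len(p_other):
        raise ValueError("every outcome needs one value in each list")
    teu_funding = sum(u * pn * pp for u, pn, pp in zip(utilities, p_nature, p_proposal))
    teu_no_funding = sum(u * pn * po for u, pn, po in zip(utilities, p_nature, p_other))
    return teu_funding - teu_no_funding


# Hypothetical two-outcome proposal.
utilities, p_nature, p_proposal = [5.0, 8.0], [0.9, 0.6], [0.7, 0.5]
print(but_for_impact(utilities, p_nature, p_proposal, [0.2, 0.1]))  # others might succeed
print(but_for_impact(utilities, p_nature, p_proposal, [0.0, 0.0]))  # FRO-style assumption
```

Setting every entry of `p_other` to zero reproduces the simplification above, where the “but for” impact equals the total expected utility with funding.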

This assumption, while not generalizable to all contexts, can help reduce the number of questions that reviewers have to consider — a dynamic which we explore further in the next section.

Designing Forecasting Questions

Once one has determined the total expected utility equation(s) relevant for the proposal(s) that they are trying to evaluate, the parameters of the equation(s) must be translated into forecasting questions for reviewers to respond to. In general, for each outcome, reviewers will need to answer the following four questions:

- If this proposal is funded, what is the probability that this outcome will occur?

- If this proposal is not funded, what is the probability that this outcome will still occur?

- What will be the scientific impact of this outcome occurring?

- What will be the social impact of this outcome occurring?

For the probability questions, one could alternatively ask reviewers about the different probability components ($P_{\text{nature}}(m_i)$, $P_{\text{proposal}}(m_i)$, $P_{\text{other mechanism}}(m_i)$, etc.), but in most cases it will be sufficient, and simpler for the reviewer, to focus on the top-level probabilities that feed into the TEU calculation.

In order for the above questions to tap into the benefits of the forecasting framework, they must be resolvable. Resolving the forecasting questions means that at a set time in the future, reviewers’ predictions will be compared to a ground truth based on the actual events that have occurred (i.e., was the outcome actually achieved and, if so, what was its actual impact?). Consequently, reviewers will need to be provided with the resolution date and the resolution criteria for their forecasts.

Resolution of the probability-based questions hinges mostly on a careful and objective definition of the potential outcomes, and is otherwise straightforward — though note that only one of the probability questions will be resolved, since they are mutually exclusive. The optimal resolution of the scientific and social impact questions may depend on the context of the project and the chosen approach to defining utility. A widely applicable approach is to resolve the utility forecasts by having either program managers or subject matter experts evaluate the results of the completed project and score its impact at the resolution date.
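One way to keep the question set and its resolution logic explicit is a small data structure. The sketch below is hypothetical (the class, field names, templates, and example values are ours, not Metaculus's). It attaches a shared resolution date and criteria to the four questions for an outcome, then keeps only the probability question whose condition actually happened.

```python
from dataclasses import dataclass
from datetime import date

# Hypothetical templates mirroring the four per-outcome questions listed above.
TEMPLATES = {
    "p_funded": "If this proposal is funded, what is the probability that {o} will occur?",
    "p_unfunded": "If this proposal is not funded, what is the probability that {o} will still occur?",
    "science_impact": "What will be the scientific impact of {o} occurring?",
    "social_impact": "What will be the social impact of {o} occurring?",
}


@dataclass(frozen=True)
class ForecastQuestion:
    outcome: str
    kind: str  # one of the TEMPLATES keys
    text: str
    resolution_date: date
    resolution_criteria: str


def questions_for_outcome(outcome: str, criteria: str, resolves_on: date) -> list[ForecastQuestion]:
    """Build the four questions for one outcome, sharing a resolution date and criteria."""
    return [
        ForecastQuestion(outcome, kind, template.format(o=outcome), resolves_on, criteria)
        for kind, template in TEMPLATES.items()
    ]


def questions_to_resolve(questions: list[ForecastQuestion], funded: bool) -> list[ForecastQuestion]:
    """Drop the probability question whose condition (funded or not) did not happen."""
    unresolvable = "p_unfunded" if funded else "p_funded"
    return [q for q in questions if q.kind != unresolvable]


qs = questions_for_outcome(
    "milestone 1",  # hypothetical outcome label
    "Hypothetical criteria: the milestone is documented in a public report.",
    date(2030, 12, 31),  # hypothetical resolution date
)
for q in questions_to_resolve(qs, funded=True):
    print(q.resolution_date, q.text)
```

In a setup like our pilot, which did not ask the unfunded-probability question, one would simply drop the `p_unfunded` template.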

For our pilot, we asked forecasting questions only about the probability of success given funding (question 1 above) and the scientific and social impact of each outcome (questions 3 and 4); since we assumed that the probability of success without funding was zero, we did not ask question 2. Because outcomes for the FRO proposals were designed to be either independent or sequential, we did not have to ask additional questions on the joint probability of multiple outcomes being achieved. We chose to resolve our impact questions with a post-project panel of subject matter experts.

Additional Considerations

In general, implementing this approach involves tradeoffs between simplicity and thoroughness, and between efficiency and accuracy. Here are some additional considerations on those tradeoffs for those looking to use this approach:

- The responsibility of determining the range of potential outcomes for a proposal could be assigned to three different parties: the proposal author, the proposal reviewers, or the program manager. First, grantmakers could ask proposal authors to comprehensively define within their proposal the potential primary and secondary outcomes and/or project milestones. Alternatively, reviewers could be allowed to individually — or collectively — determine what they see as the full range of potential outcomes. The third option would be for program managers to define the potential outcomes based on each proposal, with or without input from proposal authors. In our pilot, we chose to use the third approach with input from proposal authors, since it simplified the process for reviewers and allowed us to limit the number of outcomes under consideration to a manageable amount.

- In many cases, a “failed” or null outcome may still provide meaningful value by informing other scientists that the research method doesn’t work or that the hypothesis is unlikely to be true. Considering the replication crises in multiple fields, this could be an important and unaddressed aspect of peer review. Grantmakers could choose to ask reviewers to consider the value of these null outcomes alongside other outcomes to obtain a more complete picture of the project’s utility. We chose not to address this consideration in our pilot for the sake of limiting the evaluation burden on reviewers.

- If grant recipients are permitted greater flexibility in their research agendas, this expected value approach could become more difficult to implement, since reviewers would have to consider a wider and more uncertain range of potential outcomes. This was not the case for our FRO Forecasting pilot, since FROs are designed to have specific and well-defined research goals.

Other Similar Efforts

Currently, forecasting is an approach rarely used in grantmaking. Open Philanthropy is the only grantmaking organization we know of that has publicized its use of internal forecasts about grant-related outcomes, though its forecasts do not directly influence funding decisions and are not specifically forecasts of expected value. Franzoni and Stephan are also currently piloting their Subjective Expected Utility approach with Novo Nordisk.

Conclusion

Our goal in publishing this methodology is for interested grantmakers to freely adapt it to their own needs and iterate upon our approach. We hope that this paper will help start a conversation in the science research and funding communities that leads to further experimentation. A follow-up report sharing the results and lessons from the project will be published at the end of the FRO Forecasting pilot.

Acknowledgements

We’d like to thank Peter Mühlbacher, former research scientist at Metaculus, for his meticulous feedback as we developed this approach and for his guidance in designing resolvable forecasting questions. We’d also like to thank the rest of the Metaculus team for being open to our ideas and working with us on piloting this approach, the process of which has helped refine our ideas to their current state. Any mistakes here are of course our own.

ICSSI 2023: Hacking the Science of Science

What are the best approaches for structuring, funding, and conducting innovative scientific research? The importance of this question — long pondered by philosophers, historians, sociologists, and scientists themselves — is motivating the rapid growth of a new, interdisciplinary and empirically minded Science of Science that spans academia, industry, and government. At the 2nd annual International Conference on the Science of Science and Innovation, held June 26-29 at Northwestern University, experts from across this diverse community gathered to build new connections and showcase the cutting edge of the field.

At this year’s conference, the Federation of American Scientists aimed to further these goals by partnering with Northwestern’s Kellogg School of Management to host the first Metascience Hackathon. This event brought together participants from eight different countries — representing 20 universities, two federal agencies, and two non-profits — to stimulate cross-disciplinary collaboration and develop new approaches to impact. Diverging from the traditional hackathon model, we encouraged teams to advance the field along one of three distinct dimensions: Policy, Knowledge, and Tools.

Participants rose to the occasion, producing eight creative and impactful projects. In the Policy track, teams proposed transformative strategies to enhance scientific reproducibility, support immigrant STEM researchers, and foster impactful interdisciplinary research. The Knowledge track saw teams leveraging data and AI to explore the dynamics of peer review, scientific collaboration, and the growth of the science of science community. The Tools track introduced novel platforms for fostering global research collaboration and visualizing academic mobility.

These projects, developed in less than a day (!), underscore the potential of the science of science community to drive impactful change. They represent not only the innovative spirit of the participants but also the broader value of interdisciplinary collaboration in addressing complex challenges and shaping the future of science.

We are excited to showcase the teams’ work below.

Policy

A Funders’ Tithe for Reproducibility Centers

Project Team: Shambhobi Battacharya (Northwestern University), Elena Chechik (European University at St. Petersburg), Alexander Furnas (Northwestern University), & Greg Quigg (University of Massachusetts Amherst)

Background: The responsibility for ensuring scientific reproducibility is primarily on individual researchers and academic institutions. However, reproducibility efforts are often inadequate due to limited resources, publication bias, time constraints, and lack of incentives.

Solution: We propose a policy whereby large science funding bodies earmark a certain percentage of their allocated grants towards establishing and maintaining reproducibility centers. These specialized entities would employ dedicated teams of independent scientists to reproduce or replicate high-impact, high-leverage, or suspicious research. The existence of dedicated reproducibility centers with independent scientists conducting post-hoc, self-directed reproducibility and replication studies will alter the incentives for researchers throughout the scientific community, strengthening the body of scientific knowledge and increasing public trust in scientific findings.

Immigrant STEM Training: Crossing the Valley of Death

Project Team: Sujata Emani (Personal Capacity), Takahiro Miura (University of Tokyo), Mengyi Sun (Northwestern University), & Alice Wu (Federation of American Scientists)

Background: Immigrants significantly contribute to the U.S. economy, particularly in STEM entrepreneurship and innovation. However, they often encounter legal, financial, and interpersonal barriers that lead to high rates of mental health disorders and attrition from scientific research.

Solution: To mitigate these challenges, we propose that science funding agencies expand eligibility for major federal science fellowships (e.g., the NSF GRFP and NIH NRSA) to include international students, providing them with more stable funding sources. We also propose a broader shift in federal research funding towards research fellowships, reducing hierarchical power structures and improving the training environment. Implementing these recommendations can empower all graduate students, foster greater scientific progress, and benefit the American economy.

Increasing Interdisciplinary Research through a More Balanced Research Funding and Evaluation Process

Project Team: Jonathan Coopersmith (Texas A&M University), Jari Kuusisto (University of Vaasa), Ye Sun (University College London), & Hongyu Zhou (University of Antwerp)

Background: Solving local, national, and global challenges will increasingly require interdisciplinary research that spans diverse perspectives and insights. Despite this need, interdisciplinary research has not reached its full potential, because significant obstacles, including a lack of support, persist at many levels of knowledge creation. These obstacles make it challenging to develop and realize the full potential of interdisciplinarity.

Solution: We propose that national funding agencies launch a Balanced Research Funding and Evaluation Initiative to create and implement national standards for interdisciplinary research development, management, promotion, funding, and evidence-based evaluation. We outline the specific mechanisms such a program could use to unlock more impactful research on global challenges.

Knowledge

Identifying Reviewer Disciplines and their Impact on Peer Review

Project Team: Chenyue Jiao (University of Illinois, Urbana Champaign), Erzhuo Shao (Northwestern University), Louis Shekhtman (Northeastern University), & Satyaki Sikdar (Indiana University, Bloomington)

Background: Given the rise in interdisciplinary and multidisciplinary research, there is an increasing need to obtain the perspectives of multiple peer reviewers with unique expertise. In this project, we explore whether reviewers from particular disciplines tend to be more critical of papers applying a different disciplinary approach.

Solution: Using a dataset of open reviews from Nature Communications, we assign concepts to papers and reviews using the OpenAlex concept tagger, and analyze review sentiment using OpenAI’s ChatGPT API. Our results identify network pairs of review and paper concepts; several pairs correspond to expectations, such as engineers’ negativity towards physicists’ work and economists’ criticisms of biology studies. Further study and collection of additional datasets could improve the utility of these results.

Team Formation: Expected or Unexpected

Project Team: Noly Higashide (University of Tokyo), Oh-Hyun Kwon (Pohang University of Science and Technology), Zeijan Lyu (University of Chicago), & Seokkyun Woo (Northwestern University)

Background: This year’s conference highlighted the importance of studying the interaction of scientists to better understand the scientific ecosystem. Here, we explore the dynamics of scientific collaboration and its influence on the success of resulting research.

Solution: Using SciSciNet data, we investigate how the likelihood of team formation affects the impact, disruption, and novelty of papers in the fields of biology, chemistry, psychology, and sociology. Our results suggest that the relationship between team structure and research impact varies across disciplines. Specifically, in chemistry and biology the relationship between proximity and citations has an inverse U-shape, such that papers with moderate proximity have the highest impact. These findings underline the need for further exploration of how collaboration patterns affect scientific discovery.

SciSciPeople: Identifying New Members of the Science of Science Community

Project Team: Sirag Erkol (Northwestern University), Yifan Qian (Northwestern University), & Henry Xu (Carnegie Mellon University)

Background: The growth and diversification of the science of science community is crucial for fostering innovation and broadening perspectives.

Solution: Our project introduces SciSciPeople, a new pipeline designed to identify potential new members for this community. Using data from the ICSSI website, SciSciNet, and Google Scholar, our pipeline identifies individuals who have shown interest in the science of science — either through citing well-known review papers, or noting the field as a research interest on Google Scholar — but are not yet part of the ICSSI community. Applying this pipeline successfully identified hundreds of relevant individuals. This tool not only enriches the science of science community but also has potential applications for various fields aiming to discover new individuals to expand their communities.
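The final filtering step described above amounts to simple set logic. The sketch below assumes each source has already been reduced to a set of matched person identifiers; matching people across ICSSI, SciSciNet, and Google Scholar, the hard part, is not shown. The function name and identifiers are hypothetical and not taken from the team's pipeline.

```python
def find_candidates(
    review_citers: set[str],
    listed_interest: set[str],
    existing_members: set[str],
) -> set[str]:
    """People who cited key review papers or list the field as an interest, minus current members."""
    return (review_citers | listed_interest) - existing_members


# Hypothetical, already-matched identifiers.
citers = {"a.lee", "b.kim", "c.ng"}
interests = {"c.ng", "d.ruiz"}
members = {"b.kim"}
print(sorted(find_candidates(citers, interests, members)))
```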

Tools

ScholarConnect: A Platform for Fostering Knowledge Exchange

Project Team: Sai Koneru (Pennsylvania State University), Xuelai Li (Imperial College London), Casey Meyer (OurResearch), & Mark Tschopp (Army Research Laboratory)

Background: The rapid growth of the scientific community has made it hard for researchers to stay aware of others working on projects similar to their own. As a result, there is a need for new ways to identify researchers doing relevant work in other institutions or fields.

Solution: We created “ScholarConnect”, an open-source tool designed to foster global collaboration among researchers. ScholarConnect recommends potential collaborators based on similarities in research expertise, determined by factors like publication records, concepts, institutions, and countries. The tool offers personalized recommendations and an interactive user interface, allowing users to explore and connect with like-minded researchers from diverse backgrounds and disciplines. We’ve ensured privacy and security by not storing user-entered information and basing recommendations on anonymized, aggregated profiles, and we invite contributions from the wider research community to improve ScholarConnect.
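To illustrate the general idea of similarity-based recommendation, the sketch below ranks researchers by the Jaccard overlap of their research-concept sets. This is a hypothetical simplification, not ScholarConnect's actual method, which the team describes as also drawing on publication records, institutions, and countries. The function names and profiles are made up for illustration.

```python
def jaccard(a: set[str], b: set[str]) -> float:
    """Overlap between two concept sets: 0 means disjoint, 1 means identical."""
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


def recommend(user: str, profiles: dict[str, set[str]], k: int = 3) -> list[tuple[str, float]]:
    """Rank other researchers by concept overlap with `user`, highest first."""
    target = profiles[user]
    scores = [(name, jaccard(target, concepts)) for name, concepts in profiles.items() if name != user]
    scores.sort(key=lambda item: item[1], reverse=True)
    return scores[:k]


profiles = {
    "alice": {"peer review", "bibliometrics", "networks"},
    "bob": {"bibliometrics", "networks", "economics"},
    "carol": {"genomics"},
    "dan": {"peer review", "bibliometrics"},
}
print(recommend("alice", profiles, k=2))
```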

Scientist Map: A Tool for Visualizing Academic Mobility across Institutions

Project Team: Tianji Jiang (University of California Los Angeles), Jesse Tabak (Northwestern University), & Shibo Zhou (Georgia Institute of Technology)

Background: The migration of academic researchers provides a unique window to observe the mobility of knowledge and innovations today, and has been a valuable area of investigation for scholars across various disciplines.

Solution: To study the migration of academic individuals, we introduce a tool that lets users search an academic’s history of affiliations and visualize their historical path on a map. This tool aims to help scientific producers and consumers better understand the migration of experts across institutions, and to support relevant science of science research by providing easy access to researchers’ migration history.

Strengthening Policy by Bringing Evidence to Life

Summary

In a 2021 memorandum, President Biden instructed all federal executive departments and agencies to “make evidence-based decisions guided by the best available science and data.” This policy is sound in theory but increasingly difficult to implement in practice. With millions of new scientific papers published every year, parsing and acting on research insights presents a formidable challenge.

A solution, and one that has proven successful in helping clinicians effectively treat COVID-19, is to take a “living” approach to evidence synthesis. Conventional systematic reviews, meta-analyses, and associated guidelines and standards are published as static products and are updated infrequently (e.g., every four to five years), if at all. This approach is inefficient and produces evidence products that quickly go out of date. It also leads to research waste and poorly allocated research funding.

By contrast, emerging “Living Evidence” models treat knowledge synthesis as an ongoing endeavor. By combining (i) established scientific methods of summarizing science with (ii) continuous workflows and technology-based solutions for information discovery and processing, Living Evidence approaches yield systematic reviews and other evidence and guidance products that are always current.

The recent launch of the White House Year of Evidence for Action provides a pivotal opportunity to harness the Living Evidence model to accelerate research translation and advance evidence-based policymaking. The federal government should consider a two-part strategy to embrace and promote Living Evidence. The first part of this strategy positions the U.S. government to lead by example by embedding Living Evidence within federal agencies. The second part focuses on supporting external actors in launching and maintaining Living Evidence resources for the public good.

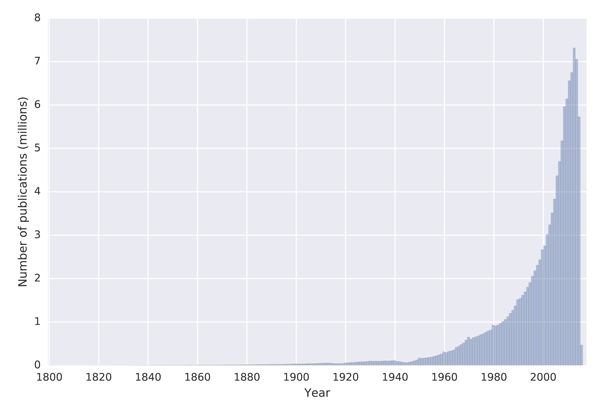

Challenge and Opportunity

We live in a time of veritable “scientific overload”. The number of scientific papers in the world has surged exponentially over the past several decades (Figure 1), and millions of new scientific papers are published every year. Making sense of this deluge of documents presents a formidable challenge. For any given topic, experts have to (i) scour the scientific literature for studies on that topic, (ii) separate out low-quality (or even fraudulent) research, (iii) weigh and reconcile contradictory findings from different studies, and (iv) synthesize study results into a product that can usefully inform both societal decision-making and future scientific inquiry.

This process has evolved over several decades into a scientific method known as “systematic review” or “meta-analysis”. Systematic reviews and meta-analyses are detailed and credible, but often take over a year to produce and rapidly go out of date once published. Experts often compensate by drawing attention to the latest research in blog posts, op-eds, “narrative” reviews, informal memos, and the like. But while such “quick and dirty” scanning of the literature is timely, it lacks scientific rigor. Hence those relying on “the best available science” to make informed decisions must choose between summaries of science that are reliable or current…but not both.

The lack of trustworthy and up-to-date summaries of science constrains efforts, including efforts championed by the White House, to promote evidence-informed policymaking. It also leads to research waste when scientists conduct research that is duplicative and unnecessary, and degrades the efficiency of the scientific ecosystem when funders support research that does not address true knowledge gaps.

Figure 1. Total number of scientific papers published over time, according to the Microsoft Academic Graph (MAG) dataset. (Source: Herrmannova and Knoth, 2016)

The emerging Living Evidence paradigm solves these problems by treating knowledge synthesis as an ongoing rather than static endeavor. By combining (i) established, scientific methods of summarizing science with (ii) continuous workflows and technology-based solutions for information discovery and processing, Living Evidence approaches yield systematic reviews that are always up to date with the latest research. An opinion piece published in The New York Times called this approach “a quiet revolution to surface the best-available research and make it accessible for all.”

To take a Living Evidence approach, multidisciplinary teams of subject-matter experts and methods experts (e.g., information specialists and data scientists) first develop an evidence resource—such as a systematic review—using standard approaches. But the teams then commit to regular updates of the evidence resource at a frequency that makes sense for their end users (e.g., once a month). Using technologies such as natural-language processing and machine learning, the teams continually monitor online databases to identify new research. Any new research is rapidly incorporated into the evidence resource using established methods for high-quality evidence synthesis. Figure 2 illustrates how Living Evidence builds on and improves traditional approaches for evidence-informed development of guidelines, standards, and other policy instruments.

Figure 2. Illustration of how a Living Evidence approach to developing evidence-informed policies (such as clinical guidelines) is more current and reliable than traditional approaches. (Source: Author-developed graphic)

Living Evidence products are more trusted by stakeholders, enjoy greater engagement (up to a 300% increase in access/use, based on internal data from the Australian Stroke Foundation), and support improved translation of research into practice and policy. Living Evidence holds particular value for domains in which research evidence is emerging rapidly, current evidence is uncertain, and new research might change policy or practice. For example, Nature has credited Living Evidence with “help[ing] chart a route out” of the worst stages of the COVID-19 pandemic. The World Health Organization (WHO) has since committed to using the Living Evidence approach as the organization’s “main platform” for knowledge synthesis and guideline development across all health issues.

Yet Living Evidence approaches remain underutilized in most domains. Many scientists are unaware of Living Evidence approaches. The minority who are familiar often lack the tools and incentives to carry out Living Evidence projects directly. The result is an “evidence to action” pipeline far leakier than it needs to be. Entities like government agencies need credible and up-to-date evidence to efficiently and effectively translate knowledge into impact.

It is time to change the status quo. The Foundations for Evidence-Based Policymaking Act of 2018 (“Evidence Act”), signed into law in 2019, advances “a vision for a nation that relies on evidence and data to make decisions at all levels of government.” The Biden Administration’s “Year of Evidence” push has generated significant momentum around evidence-informed policymaking. Demonstrated successes of Living Evidence approaches with respect to COVID-19 have sparked interest in these approaches specifically. The time is ripe for the federal government to position Living Evidence as the “gold standard” of evidence products, and the United States as a leader in knowledge discovery and synthesis.

Plan of Action

The federal government should consider a two-part strategy to embrace and promote Living Evidence. The first part of this strategy positions the U.S. government to lead by example by embedding Living Evidence within federal agencies. The second part focuses on supporting external actors in launching and maintaining Living Evidence resources for the public good.

Part 1. Embedding Living Evidence within federal agencies

Federal science agencies are well positioned to carry out Living Evidence approaches directly. Living Evidence requires “a sustained commitment for the period that the review remains living.” Federal agencies can support the continuous workflows and multidisciplinary project teams needed for excellent Living Evidence products.

In addition, Living Evidence projects can be very powerful mechanisms for building effective, lasting, multi-stakeholder partnerships, which are a key objective for a federal government seeking to bolster the U.S. scientific enterprise. A recent example is the Wellcome Trust’s decision to fund suites of living systematic reviews in mental health as a foundational investment in its new mental-health strategy, recognizing this as an important opportunity to build a global research community around a shared knowledge source.

Greater interagency coordination and external collaboration will facilitate implementation of Living Evidence across government. As such, President Biden should issue an Executive Order establishing a Living Evidence Interagency Policy Committee (LEIPC) modeled on the effective Interagency Arctic Research Policy Committee (IARPC). The LEIPC would be chartered as an Interagency Working Group of the National Science and Technology Council (NSTC) Committee on Science and Technology Enterprise, and chaired by the Director of the White House Office of Science and Technology Policy (OSTP), or their delegate. Membership would comprise representatives from federal science agencies, including agencies that currently create and maintain evidence clearinghouses, other agencies deeply invested in evidence-informed decision making, and non-governmental experts with deep experience in the practice of Living Evidence and/or associated capabilities (e.g., information science, machine learning).

The LEIPC would be tasked with (1) supporting federal implementation of Living Evidence, (2) identifying priority areas[1] and opportunities for federally managed Living Evidence projects, and (3) fostering greater collaboration between government and external stakeholders in the evidence community. More detail on each of these roles is provided below.

Supporting federal implementation of Living Evidence

Widely accepted guidance for living systematic reviews (LSRs), one type of Living Evidence product, has been published. The LEIPC, working closely with OSTP, the White House Office of Management and Budget (OMB), and the federal Evaluation Officer Council (EOC), should adapt this guidance for the U.S. federal context, resulting in an informational resource for federal agencies seeking to launch or fund Living Evidence projects. The guidance should also inform updates to the systematic-review processes used by federal agencies and by organizations contributing to national evidence clearinghouses.[2]

Once the federally tailored guidance has been developed, the White House should direct federal agencies to consider and pursue opportunities to embed Living Evidence within their programs and operations. The policy directive could take the form of a Presidential Memorandum, a joint management memo from the heads of OSTP and OMB, or a similar instrument. This directive would (i) emphasize the national benefits that Living Evidence could deliver, and (ii) provide agencies with high-level justification for using discretionary funding on Living Evidence projects and for making decisions based on Living Evidence insights.

Identifying priority areas and opportunities for federally managed Living Evidence projects

The LEIPC—again working closely with OSTP, OMB, and the EOC—should survey the federal government for opportunities to deploy Living Evidence internally. Box 1 provides examples of opportunities that the LEIPC could consider.

Below are four illustrative examples of existing federal efforts that could be augmented with Living Evidence.

Example 1: National Primary Drinking Water Regulations. The U.S. Environmental Protection Agency (EPA) currently reviews and updates the National Primary Drinking Water Regulations every six years. But society now produces millions of new chemicals each year, including numerous contaminants of emerging concern (CEC) for drinking water. Taking a Living Evidence approach to drinking-water safety could yield drinking-water regulations that are updated continuously as information on new contaminants comes in, rather than periodically (and potentially after new contaminants have already begun to cause harm).

Example 2: Guidelines for entities participating in the National Wastewater Surveillance System. Australia has demonstrated how valuable Living Evidence can be for COVID-19 management and response. Meanwhile, declines in clinical testing and the continued emergence of new SARS-CoV-2 variants are positioning wastewater surveillance as an increasingly important public-health tool. But no agency or organization has yet taken a Living Evidence approach to the practice of testing wastewater for disease monitoring. Living Evidence could inform practitioners in real time on evolving best protocols and practices for wastewater sampling, concentration, testing, and data analysis.

Example 3: Department of Education Best Practices Clearinghouse. The Best Practices Clearinghouse was launched at President Biden’s direction to help a variety of education stakeholders reopen and operate after pandemic-related closures. Applying Living Evidence analysis to the resources that the Clearinghouse has assembled would help ensure that instruction remains safe and effective in a dramatically transformed and evolving educational landscape.

Example 4: National Climate Assessment. The National Climate Assessment (NCA) is a Congressionally mandated review of climate science and impacts on the United States. The NCA is issued quadrennially, but climate change is presenting itself in new and worrying ways every year. Urgent climate action must be backed by up-to-date climate knowledge. While a longer-term goal could be to transition the entire NCA into a frequently updated “living” mode, a near-term effort could focus on transitioning NCA subtopics where the need for new knowledge is especially pressing. For instance, the emergence and intensification of megafires in the West has upended our understanding of fire dynamics. A Living Evidence resource on fire science could give policymakers and program officials critical, up-to-date information on how best to mitigate, respond to, and recover from catastrophic megafires.

The product of this exercise should be a report that describes each of the opportunities identified and recommends priority projects to pursue. In developing its priority list, the LEIPC should account for both the likely impact of a potential Living Evidence project and the near-term feasibility of that project. While the report could outline visions for ambitious Living Evidence undertakings that would require a significant time investment to realize fully (e.g., transitioning the entire National Climate Assessment into a frequently updated “living” mode), it should also scope projects that could be completed within two years and serve as pilots or proofs of concept. Lessons learned from the pilots could ultimately inform a national strategy for incorporating Living Evidence into the federal government more systematically. Successful pilots could continue and grow beyond the end of the two-year period, as appropriate.

Fostering greater collaboration between government and external stakeholders

The LEIPC should create an online “LEIPC Collaborations” platform that connects researchers, practitioners, and other stakeholders both inside and outside government. The platform would emulate IARPC Collaborations, which has built out a community of more than 3,000 members and dozens of communities of practice dedicated to the holistic advancement of Arctic science. As one stakeholder has explained: