Fortifying America’s Future: Pathways for Competitiveness

The Federation of American Scientists (FAS) and Alliance for Learning Innovation (ALI) Coalition, in collaboration with the Aspen Strategy Group and Walton Family Foundation, released a new paper “Fortifying America’s Future: Pathways for Competitiveness,” co-authored and edited by Brienne Bellavita, Dan Correa, Emily Lawrence, Alix Liss, Anja Manuel, and Sara Schapiro. The report delves into the intersection of education, workforce, and national security preparedness in the United States, summarizing key findings from roundtable discussions in early 2024. These roundtable discussions gathered field experts from a variety of organizations to enrich the discourse and provide comprehensive recommendations for addressing this challenge. Additionally, a panel of topical experts discussed the subject matter of this report at the Aspen Security Forum on July 18th, 2024.

The United States faces a critical human talent shortage in industries essential for maintaining technological leadership, including workforce sectors related to artificial intelligence, quantum computing, semiconductors, 5G/6G technologies, fintech, and biotechnology. Without a robust education system that prepares our youth for future careers in these sectors, our national security and competitiveness are at risk. Quoting the report, Dr. Katie Jenner, Secretary of Education for the State of Indiana, reiterated the idea that “we must start treating a strong educational system as a national security issue” during the panel discussion. Addressing these challenges requires a comprehensive approach that bridges the gaps between national security, industry, higher education, and K-12 education while leveraging local innovation. The paper outlines strategies for creating and promoting career pathways from K-12 into high-demand industries to maintain the U.S.’s competitive edge in an increasingly global landscape, including:

- Leveraging the national security community to foster a sense of urgency around improving our education ecosystem.

National security has historically driven educational investment (think Sputnik) and remains a bipartisan priority, providing a strong foundation for new legislation addressing emerging technologies like AI. For example, the CHIPS and Science Act, driven by competition with China, has spurred states to innovate, form public-private partnerships, and establish Tech Hubs.

- Providing federal incentives and highlighting successful state approaches, building coalitions around key industries, supporting states in developing K-12 pathways, and scaling impactful place-based strategies.

Mapping out workforce opportunities in other critical sectors such as aviation, AI, computer science, and biosecurity can ensure that the future workforce is gaining necessary skills to be successful in high-need careers in national security. For example, Ohio created a roadmap for advanced manufacturing with the Governor’s Office of Workforce Transformation and the Ohio Manufacturers’ Association outlining sector-specific competencies.

- Supporting intermediaries to scale connections between K-12 education and the workforce.

Innovative funding streams, employer incentives, and specialized intermediaries promoting career-connected learning can bridge gaps by encouraging stronger cross-sector ties in education and the workforce. For example, Texas allocated incentive funding to Pathways in Technology Early College High Schools (P-TECH) encouraging explicit career-connected learning opportunities that engage young people in relevant career paths.

- Launching a Technical Assistance Center and developing a Career Counseling Corps to assist states in creating pathways to key industries through place-based ecosystem support.

A Technical Assistance (TA) Center would offer tailored support based on each state’s emerging industries, guided by broader economic and national security needs. The center could bring together stakeholders such as community colleges, education leaders, and industry contacts to build partnerships and cross-sector opportunities.

- Highlighting success stories and place-based strategies through a 50 State Bright Spots campaign.

Virginia streamlined all workforce initiatives under a central state department, enhancing coordination and collaboration. The state also regularly convenes representatives and cabinet members with backgrounds in workforce issues to ensure alignment of education from K-12 through postsecondary.

- Strengthening education R&D by creating and funding an ARPA-ED to advance career-connected learning with innovative solutions, improve data systems, and promote evidence-based practices in career-connected learning.

Education R&D lacks sufficient investment and the infrastructure to support innovative solutions addressing defining challenges in education in the U.S. The New Essential Education Discoveries (NEED) Act would establish an agency called the National Center for Advanced Development in Education (NCADE) that would function as an ARPA-ED, developing and disseminating evidence-based practices supporting workforce pathways and skills acquisition for critical industries.

- Encouraging technology careers by introducing K-12 students to technology-related topics early and communicating the opportunities available in key industries.

Giving young students opportunities to learn about different careers in these sectors will inspire interest and early experiences with diverse options in higher education, manufacturing, and jobs from critical industries ensuring American competitiveness.

Implementing these recommendations will require action from a diverse group of stakeholders including the federal government and leadership at the state and local levels. Check out the report to see how these steps will empower our workforce and uphold the United States’ leadership in technology and national security.

22 Organizations Urge Department of Education to Protect Students from Extreme Heat at Schools

Twenty-two organizations and 29 individuals from across 12 states sent a letter calling on the U.S. Department of Education to take urgent action to protect students from the dangers of extreme heat on school campuses

WASHINGTON — With meteorologists predicting a potentially record-breaking hot summer ahead, a coalition of 22 organizations from across 12 states is urgently calling on the Department of Education to use its national platform and coordinating capabilities to help schools prepare for and respond to extreme heat. In a coalition letter sent today, spearheaded by the Federation of American Scientists and UndauntedK12, the groups recommend streamlining funding, enhancing research and data, and integrating heat resilience throughout education policies.

“The heat we’re experiencing today will only get worse. Our nation’s classrooms and campuses were not built to withstand this heat, and students are paying the price when we do not invest in adequate protections. Addressing extreme heat is essential to the Department of Education’s mission of equitable access to healthy, safe, sustainable, 21st century learning environments,” says Grace Wickerson, Health Equity Policy Manager at the Federation of American Scientists, who recently authored a policy memo on addressing heat in schools.

Many schools across the country – especially in communities of color – have aging infrastructure that is unfit for the heat. This infrastructure gap exposes millions of students to temperatures where it’s impossible to learn and unhealthy even to exist. Despite the rapidly growing threat of extreme heat fueled by climate change, no national guidance, research and data programs, or dedicated funding source exists to support U.S. schools in adapting to the heat.

“Many of our nation’s school campuses were designed for a different era – they are simply not equipped to keep children safe and learning with the increasing number of 90- and 100-degree days we are now experiencing due to climate change. Our coalition letter outlines common sense steps the Department of Education can take right now to move the needle on this issue, which is particularly pressing in schools serving communities of color. All students deserve access to healthy and climate-resilient classrooms,” said Jonathan Klein, co-founder and CEO of UndauntedK12.

The coalition’s recommendations include:

- Publish guidance on school heat readiness, heat planning best practices, model programs and artifacts, and strategies to build resilience (such as nature-based solutions) in partnership with the Environmental Protection Agency, the Federal Emergency Management Agency, the National Oceanic and Atmospheric Administration, the National Integrated Heat Health Information System (NIHHIS), and subject-area expert partners.

- Join the Extreme Heat Interagency Working Group led by the National Integrated Heat Health Information System (NIHHIS).

- Use ED’s platform to encourage states to direct funding resources for schools to implement targeted heat mitigation and increase awareness of existing funds (e.g., from the Inflation Reduction Act and Bipartisan Infrastructure Law) that can be leveraged for heat resilience. Further, ED and the IRS should work together to understand the financing gap between tax credit coverage and the true cost of HVAC upgrades in America’s schools.

- Direct research and development funding through the National Center for Education Statistics and the Institute of Education Sciences toward establishing regionally relevant indoor temperature standards for schools to guide decision making based on rigorous assessments of impacts on children’s health and learning.

- Adapt existing federal mapping tools, like the NCES’ American Community Survey Education Tabulation Maps and NIHHIS’ Extreme Heat Vulnerability Mapping Tool, to provide school district-relevant information on heat and other climate hazards. As an example, NCES recently conducted a School Pulse Panel survey on school infrastructure and could, in future iterations, collect data on HVAC coverage and capacity to complete upgrades.

- Evaluate existing priorities and regulatory authority to identify ways that ED can incorporate heat readiness into programs and gaps that would require new statutory authority.

The Federation of American Scientists, UndauntedK12, and our partner organizations welcome the opportunity to meet with the Department of Education to discuss these recommendations and to provide support in developing much-needed guidance as we enter another season of unprecedented heat.

###

About UndauntedK12

UndauntedK12 is a nonprofit organization with a mission to support America’s K-12 public schools to make an equitable transition to zero carbon emissions while preparing youth to build a sustainable future in a rapidly changing climate.

About Federation of American Scientists

FAS envisions a world where cutting-edge science, technology, ideas and talent are deployed to solve the biggest challenges of our time. We embed science, technology, innovation, and experience into government and public discourse in order to build a healthy, safe, prosperous and equitable society.

ALI Task Force Findings to Improve Education R&D

The Alliance for Learning Innovation (ALI) coalition, which includes the Federation of American Scientists, EdCounsel, and InnovateEdu, today celebrates the release of three task force briefs aimed at enhancing education research and development (“ed R&D”). With pressing issues such as declining literacy and math scores, chronic absenteeism, and the rise of technologies like AI, a strong ed R&D infrastructure is vital. In 2023, ALI convened three task forces to recommend ways to bolster ed R&D. The task forces focused on state and local ed R&D infrastructure, inclusive ed R&D, and the critical role of Historically Black Colleges and Universities (HBCUs), Minority-Serving Institutions (MSIs), and Tribal Colleges and Universities (TCUs) in this ecosystem.

State and Local Education R&D Infrastructure

Supporting R&D at the local level encourages an environment of continuous learning, accelerating improvements to educational methods based on new evidence and pioneering research. Therefore, given that over 90% of K-12 education funding comes from state and local sources, the ALI task force recommends that capacity-building, vision alignment, and investment in state and local education agencies (SEAs and LEAs) be prioritized. Preparing these entities to leverage R&D resources within their specific locales, in rural and urban contexts, will enable the infrastructure to best meet the unique needs of communities and students across the country. Additionally, supporting human capacity and development, modernizing data systems, and strengthening collaborative partnerships and fellowships across research institutions and key stakeholders in the ecosystem will set the stage for more context-specific and effective ed R&D infrastructure at the state and local levels.

Inclusive Education R&D

Traditional education R&D is often dominated by privileged institutions and individuals with outsized access to capital and opportunities, sidelining the needs and perspectives of historically marginalized communities. To address this imbalance, intentional efforts are needed to create a more inclusive R&D ecosystem. The task force recommends that government actors implement multidimensional measures of progress and simplify application processes for R&D funding. Continuing dialogue on equity and inclusion will create space for identifying possible biases in approaches and processes. In sum, inclusion is imperative to achieving greater equity in education and supporting all learners of diverse backgrounds and communities.

The Role of HBCUs, MSIs, & TCUs in Education R&D

Achieving collaborative infrastructure and inclusion in ed R&D requires the strong participation of Historically Black Colleges and Universities (HBCUs), Minority-Serving Institutions (MSIs), and Tribal Colleges and Universities (TCUs). An equitable education R&D ecosystem must focus on the representation of these institutions and diverse student populations in research topics, grants, and funding to support learners from all backgrounds, particularly those of disadvantaged circumstances. Actionable steps include establishing diverse peer review panels, incentivizing grant proposals from minority-serving institutions, and creating specialized scholar programs. Additionally, programs should explicitly outline resource accessibility, leadership dynamics, funder relationships, grant processes, and inclusive language to dismantle structural inequalities and make the invisible visible.

Conclusion

Recommendations from the ALI task forces propose that sufficient funding, inclusivity, and diverse representation of higher education institutions are strong first steps in a path toward a more equitable and effective education system. The education R&D ecosystem must be a learning-oriented network committed to the principles of innovation that the system itself strives to promote across best practices in education and learning.

Thinking Big To Solve Chronic Absenteeism

Across the country in small towns and large cities, rural communities and the suburbs, millions of young people are missing school at astounding rates. They’re doing it with such frequency that educators are now tracking “chronic absenteeism.”

It’s an important issue the White House is prioritizing. On May 15, the Biden-Harris Administration will host a summit on addressing chronic absenteeism. You can watch the livestream here, starting at 9:30 am ET.

This brand of truancy – where students are absent more than 10 percent of the time – is a problem in every state: Between 2018 and 2022, rates of chronic absenteeism nearly doubled, meaning an estimated 6.5 million more students are chronically absent today than six years ago. The New York Times recently reported that “something fundamental has shifted in American childhood and the culture of school, in ways that may be long lasting.”

But, like so many other issues in our country, chronic absenteeism hits some places harder than others. According to the non-profit organization Attendance Works, students from low-income and under-served communities are “much more likely to be enrolled in schools facing extreme levels of chronic absence.” When Attendance Works crunched the numbers, it found that in schools where at least 75 percent of students received a free or reduced-price lunch, the rates of chronic absenteeism nearly tripled, increasing from 25 percent to 69 percent between 2017 and 2022.

This alarming trend has educators and policymakers scrambling for solutions, from better bus routes to automated messaging systems for parents to “early warning” attendance tracking. These are important pursuits, but alone they won’t solve the problem.

Why? Because experts and research show that chronic absenteeism is only a symptom of a larger, more complex problem. For too many young people of color, school can be out of touch with the lives they live, so they’ve stopped going, to the point that experts predict that attendance rates won’t return to pre-COVID levels until 2030.

In these schools, the curriculum can lack rigor, and inflexible policies can harm students’ mental health and stifle the inquisitive optimism they might otherwise bring to school each day. Enrichment programs are few and far between, and students lack meaningful relationships with faculty and staff. For many kids, school is irrelevant and unwelcoming.

If schools and policymakers want to solve the problem of chronic absenteeism – particularly in under-served communities – then they must invest in new ideas, research, and tools that will make school a place where kids feel welcomed and engaged, and where learning is relevant. In short, a school needs to be a place where kids want to be. Every. Single. Day.

Teachers, principals, and superintendents know this, and they work to make their schools and classrooms warm, fun, and challenging. But they are swimming against the tide, and they cannot be expected to do this alone. The U.S. must direct and support its brightest minds and boldest innovators to attack this problem. It can do so by making a national investment in research and development efforts to explore new approaches to learning.

The U.S. has already made a big bet on innovation for sectors like defense and health – and in space exploration in the 1960s when JFK challenged the nation to put men on the moon. This kind of “imagine if…” R&D has not yet been applied to education.

Let’s create a National Center for Advanced Development in Education (NCADE), inspired by DARPA, the R&D engine behind the Internet and GPS. This new center would enable informed-risk, high-reward R&D to come up with new approaches and systems that would make learning relevant and fun. It could also produce innovations and creative new ways to increase family engagement – a big factor that contributes to absenteeism – improve access to technology, and even test and assess alternative discipline programs aimed at keeping kids in school rather than suspending them.

As one example, a study shows that texting parents with attendance tips and alerts effectively reduces absenteeism. In another study, researchers worked with a school district to send over 32,000 texts to families and saw attendance increase by 15 percent.

As the nation’s schools face the daunting task of post-COVID recovery, efforts to stem chronic absenteeism that tinker around the edges won’t solve the problem. NCADE could drive the transformative solutions that are needed with a nimble, multidisciplinary approach to advance bold, “what if…” R&D projects based on their potential to transform education.

Consider the possibilities of virtual reality. In partnership with edtech startup Transfr, several Boys & Girls Clubs are leveraging virtual reality to help students plan for their future careers. With VR technology, students can peek into a cell or stand on a planet’s surface. Imagine if NCADE could further develop an early concept for an AI-assisted “make your own song” program for students with speech-language development challenges. Or, it could support the creation of customized, culturally relevant assessments, made possible through machine learning, that make test-taking less intimidating.

Chronic absenteeism is a complex problem caused by a number of factors, but the theme running through all of them is that for too many students, schools don’t offer the types of learning opportunities or supports that make learning engaging, meaningful, and relevant to their lives. It doesn’t have to be this way. Let’s act boldly to harness innovation and make school inviting, accessible, and worthwhile for all students.

How the NEED Act Would Ensure CHIPS Doesn’t Crumble

A year and a half after its passage, money is starting to flow from the CHIPS and Science Act to create high-paying, high-tech jobs. In Phoenix, for example, the chip manufacturer Intel will receive billions to help build two new computer chip manufacturing plants that will transform the area into one of the world’s most important players in modern electronics.

That project was one of several – totaling nearly $20 billion – announced recently with Intel for computer chip plants in Arizona, Ohio, New Mexico and Oregon. The company said the investments will create a combined 30,000 manufacturing and construction jobs.

With numbers like that, it’s easy to see why all of the attention and headlines for the legislation thus far have focused on the “CHIPS” part of the law. But now, it is time for Congress to put its bipartisan support behind the “and Science” or risk the momentum the law has created.

That’s because both the law and the semiconductor industry recognize that the U.S. needs a bigger, more inclusive science, technology, engineering, and math (STEM) workforce to fulfill the needs of a robust high-tech manufacturing industry. While CHIPS sets the conditions for a revitalized domestic semiconductor industry, it also calls for improved “access to education, opportunity, and services” to support and develop the workers needed to fill these new jobs.

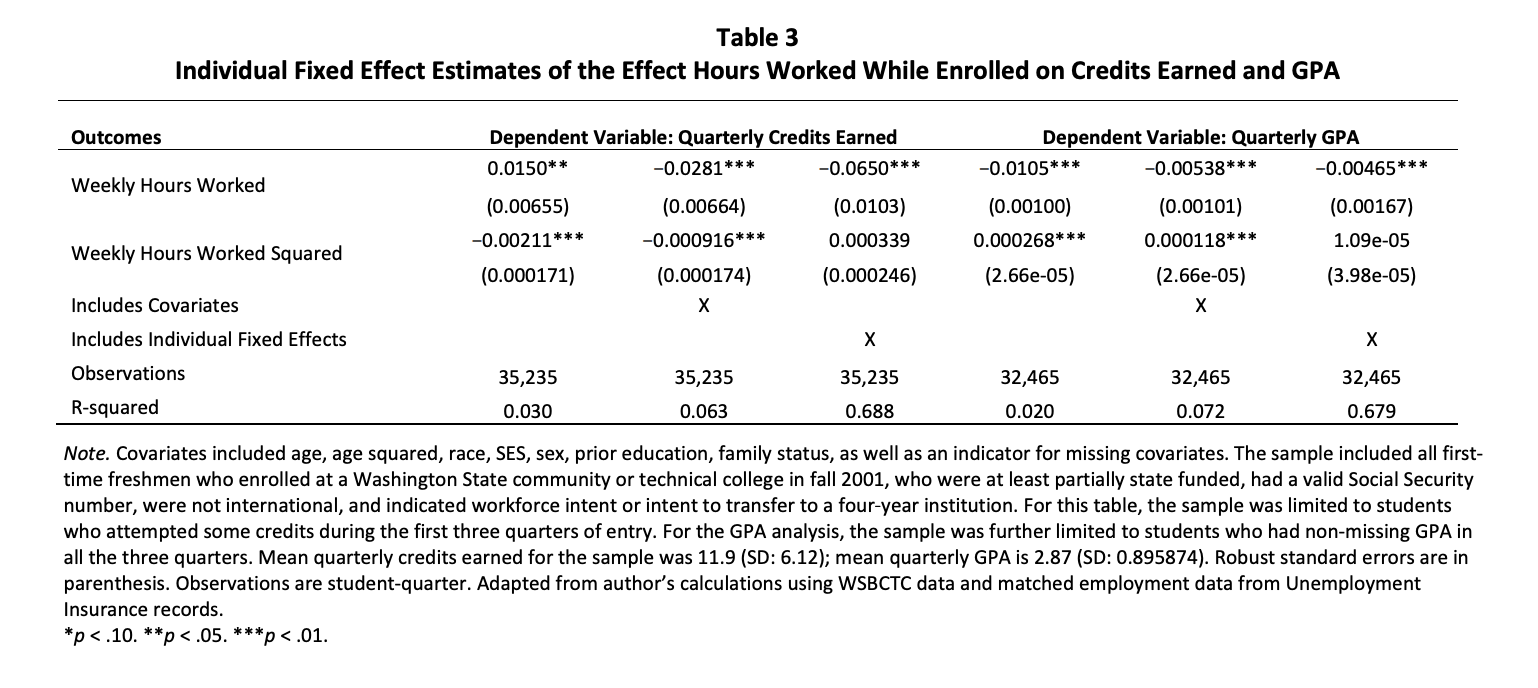

The numbers show the U.S. lags behind its global competitors when it comes to math and science achievement. Middle school math scores are exceptionally low: only 26 percent of all eighth-grade students scored “proficient” on the math portion of the National Assessment of Educational Progress in 2022. This presents big problems down the road for higher education.

To put it more bluntly: at a time when CHIPS is poised to ramp up demand for STEM graduates, the nation’s education system is unprepared to produce them.

So what’s a fix? A good first step would be for Congress to pass the New Essential Education Discoveries (NEED) Act to improve the nation’s capabilities to conduct education research and development. NEED would create the National Center for Advanced Development in Education (NCADE), a new Center within the research arm of the U.S. Department of Education to develop innovative practices, tools, systems, and approaches to boost achievement among young people in the wake of the pandemic.

NCADE would enable an informed-risk, high-reward R&D strategy for education – the kind that’s already taking place in other sectors, like health, agriculture, and energy. It’s akin to the approach that fuels the Defense Advanced Research Projects Agency (DARPA), which has led to innovations like GPS, the Internet, stealth technology, and even the computer mouse. Education needs something like this, and NEED will create it – a flexible, nimble research center pushing transformational education innovations.

The passing of the CHIPS and Science Act was a strong indication that Republicans and Democrats can work together to solve big, complex problems when motivated to do so. Passing the NEED Act will show that the same bipartisan spirit can ensure the long-term success of the law while simultaneously setting the course for vast and fundamental improvements to the nation’s schools and universities through improved R&D in education.

President Looks to Education Innovation in the FY25 Budget Request

On March 11, 2024, the President released his budget for Fiscal Year 2025, and it spells good news for advocates and educators who are concerned about research and development opportunities and infrastructure in the education sector. New funding caps imposed by the Fiscal Responsibility Act have tempered many advocates’ expectations. However, by requesting increases for key federal education R&D programs across multiple agencies, the Biden-Harris administration has signaled that it continues to value investments in education innovation, even in a budget-conscious political climate.

An analysis of the proposal by the Alliance for Learning Innovation (ALI) found a lot to like. The President’s Budget would send $815.5 million to the Institute of Education Sciences (IES) to invest in education research, development, dissemination, and evaluation. This is $22.5 million higher than IES received in Fiscal Year 2024. The request includes $38.5 million for Statewide Longitudinal Data Systems, a 35 percent increase over Fiscal Year 2024.

Notably, the President is asking for $52.7 million to grow the Accelerate, Transform, and Scale (ATS) Initiative at IES. This is 76 percent higher than the $30 million IES originally put into the initiative in 2023 when Congress directed the agency to “use a portion of its fiscal year 2023 appropriation to support a new funding opportunity for quick turnaround, high-reward scalable solutions intended to significantly improve outcomes for students.”

The ATS Initiative, widely regarded as a pilot for a possible National Center for Advanced Development in Education, is inspired by Advanced Research Projects Agencies across the federal government – and around the world – that build on insights from basic research to develop and scale breakthrough innovations. Like ARPAs, ATS invests in big ideas that emerge from interdisciplinary, outside-the-box collaboration. It aims to solve the nation’s steepest challenges in education.

The President’s request for ATS includes $2 million for a new research and development center on how generative artificial intelligence is being used in classrooms across the U.S. According to the Congressional Justification for IES, this new center will “develop and test innovative uses of this technology and will establish best practices for evidence building about generative AI in education that not only address the effectiveness of the technology for learning, but also consider issues of bias, fairness, transparency, trust and safety.”

Outside of IES, the President’s Budget calls for additional investments in education innovation. For example, it requests $269 million for the Education Innovation and Research program, housed at the U.S. Department of Education’s Office of Elementary and Secondary Education. If fulfilled, this would be a $10 million increase over last year. The President also wants Congress to send $100 million to the Fund for the Improvement of Postsecondary Education to expand R&D infrastructure at four-year Historically Black Colleges or Universities, Tribally Controlled Colleges or Universities, and Minority-Serving Institutions.

The Biden-Harris administration’s support for education R&D is also reflected in its requests for the National Science Foundation (NSF). The President’s Budget requests $1.3 billion for the NSF’s Directorate for STEM Education – $128 million above its Fiscal Year 2024 level. Moreover, it includes $900 million to fund the important work of NSF’s newest directorate, authorized in the CHIPS and Science Act: the Technology, Innovation, and Partnerships (TIP) Directorate. TIP runs important R&D initiatives, such as the VITAL Prize Challenge and America’s Seed Fund, that support teaching and learning innovations.

ALI looks forward to advocating for a robust investment in education R&D in Fiscal Year 2025. The President’s Budget provides a solid marker for the coalition’s efforts.

K-12 STEM Education For the Future Workforce: A Wish List for the Next Five Year Plan

This report was prepared in partnership with the Alliance for Learning Innovation (ALI) to advocate for building a better research and development (R&D) infrastructure in education. The Federation of American Scientists believes that evolving STEM education is necessary to prepare today’s students for tomorrow’s in-demand scientific and technological careers, and that it is a matter of national security.

American STEM Education in Context

“This country is in the midst of a STEM and data literacy crisis,” opined Elena Gerstmann and Laura Albert in a recent piece for The Hill. Their sentiment represents a widely held concern that America’s global leadership in scientific and technological innovation, anchored in educational excellence, is being relinquished, thereby jeopardizing our economy and national security. Their message recycles a 65-year-old warning issued to U.S. policy makers, educators, and employers when the USSR seemingly eclipsed our innovation pace with the launch of Sputnik.

Life magazine devoted its March 1958 edition to a scathing comparison of the playful approach to STEM education in U.S. schools versus the no-nonsense rigor of Russian classrooms. The issue’s theme, “Crisis in Education,” was summed up soberly: “The outcomes of the arms race will depend eventually on our schools and those of the Russians.” America answered the bell and came out swinging. Under President Eisenhower, the National Aeronautics and Space Administration (NASA) and the Defense Advanced Research Projects Agency (DARPA) were both established in 1958, as was the National Defense Education Act that channeled billions of dollars into K-12 and collegiate STEM education. By innumerable metrics (the Apollo program, the internet, GPS, and manufacturing dominance, all fueled by an internationally envied higher education system), the United States reclaimed preeminence in STEM innovation.

Over the next four decades, tectonic shifts in demographics, economics, and politics rearranged continental competition such that complacent U.S. education systems were once again called on the carpet. In 2001, shortly before terrorists struck the World Trade Center and Pentagon, a U.S. Senate report on homeland vulnerability echoed that of Life magazine decades prior: “The inadequacies of our systems of research and education pose a greater threat to U.S. national security over the next quarter century than any potential conventional war that we might imagine.” The painfully prescient study, a product of the Hart-Rudman Commission on National Security/21st Century, identified the advancement of information technology, bioscience, energy production, and space science, all overlain by economic and geopolitical destabilization, as the nation’s greatest challenge and our new Sputnik. The Commission called on reformed education systems to quadruple the number of scientists and engineers and to dramatically increase the number and skills of science and mathematics teachers. As in 1958, leaders responded boldly, creating the Department of Homeland Security in 2002 and planting the seeds for the 2007 America Creating Opportunities to Meaningfully Promote Excellence in Technology, Education, and Science (COMPETES) Act.

Funding for research and development across federal agencies significantly increased over the decade, including a budget boost for the National Science Foundation’s grant programs supporting emergent scholars (Faculty Early Career Development Program, or CAREER), the research capacities of targeted jurisdictions (Established Program to Stimulate Competitive Research, or EPSCoR), Graduate Research Fellowships (GRF), the Robert Noyce Teacher Scholarships, the Advanced Technological Education (ATE) program, and others designed to bolster diverse talent pipelines to STEM careers. Despite increases in the number of students studying science and engineering in the U.S., there is still a significant gap in diverse representation and equitable access to opportunities in the STEM field. Ensuring greater inclusion and diversity in the American science and engineering landscape is essential to engaging the “missing millions,” or persistently underrepresented minority groups and women, in the nation’s STEM workforce and education programs.

Nearly a quarter century later, America is once again in a STEM talent crisis. The solutions of Hart-Rudman and of the Eisenhower era need an update. This latest Sputnik moment, unlike the space race that motivated the National Defense Education Act, and the terrorism that spawned Homeland Security, is more pervasive and profound, permeating every aspect of our lives: artificial intelligence and machine learning, CRISPR (clustered regularly interspaced short palindromic repeats), quantum computing, 6G and 7G communications, semiconductors, hydrogen and other energy sources, lithium and other ionic energy storage, robotics, big data, blockchain, biopharmaceuticals, and other emergent technologies.

To relinquish the lead in these arenas would put the U.S. economy, national security, and social fabric in the hands of other nations. Our new USSR is a roulette wheel of friends and foes vying for STEM supremacy including Singapore, Japan, China, Germany, the UK, Taiwan, Saudi Arabia, India, South Korea and many more. Not unlike the education crises that came to a head in 1958 and in 2001, our educational Achilles heel is a lack of exposure to and under-preparedness for STEM career pursuit for the majority of diverse young Americans. Further, the U.S. Bureau of Labor Statistics projects that STEM career opportunities will grow 10.8% by 2032, more than four times faster than non-STEM occupations.

What the United States has going for it in 2024 (and was comparatively lacking in the 1950s and the early 2000s) are STEM-rich local schools, communities, and states. Powered by investments of federal agencies (e.g., Smithsonian, NSF, NASA, DOL, ED and others), state governments (governors in Massachusetts, Iowa, Alabama, for example), nonprofits (Project Lead The Way and the Teaching Institute for Excellence in STEM for example), and industries (Regeneron, Collins Aerospace, John Deere, Google, etc.), STEM is now seen as an imperative field by most Americans.

Today’s STEM education landscape presents significant opportunities and challenges. Existing models of excellence demonstrate readiness to scale. To focus on what works and to channel resources in the direction of broader impacts for maximal benefit is to answer the call of our omnipresent 2024 Sputnik.

The Current State: Future STEM Workforce Cultivation

At its root, STEM education is about workforce cultivation for high-demand and high-skill occupations of fundamental importance to American economic vitality and national security. In the ideal state, STEM education also prepares all learners to be critical thinkers who make evidence-based decisions by equipping them with analytical, computational, and scientific ways of knowing. STEM students should learn effective collaboration and problem-solving skills with an interdisciplinary approach, and feel prepared to apply STEM skills and knowledge to everyday life as voters, consumers, parents, and citizens.1

Target Audiences and Service Providers

The early childhood education community (pre-K-grade 3), both in school and out-of-school (at informal learning centers), has emerged over the last decade as a prime target for boosting STEM education as research findings accumulate around the importance of early exposure to and comfort with STEM concepts and processes. Popular providers of kits and activities, curricula, software platforms, and professional development for educators include Hand2Mind (Numberblocks), Robo Wunderkind, StoryTimeSTEM (Dragonland), NewBoCo (Tiny Techies), BirdBrain Tech (Finch robot), FIRST Lego League (Discover), Museum of Science Boston (Wee Engineer), Iowa Regents’ Center for Early Developmental Education (Light & Shadow), and Mind Research (Spatial-Temporal Math).

The elementary to middle school level of STEM education options both in and out of school enjoys the richest menu of STEM programming on the market, reflecting stronger curricular freedom to integrate content compared to high schools. Popular STEM programs include Blackbird Code, Derivita Math, FUSE Studio, Positive Physics, Micro:bit, Nepris (now Pathful), Project Lead The Way (Launch and Gateway), FIRST Tech Challenge, Code.org (CS Discoveries), Bootstrap Data Science, and many more.

The secondary education STEM landscape differs from pre-K-8 in a significant way: although discrete STEM activities and programs are plentiful for integration into secondary science, mathematics, and other classes, the adoption of packaged courses or after-school enrichment opportunities is more common. Project Lead The Way and Code.org offer an array of stand-alone elective STEM courses2, as do local community colleges and universities. Nonprofits and industry sources offer STEM enrichment programs such as the Society of Women Engineers’ SWEnext Leadership Academy, Google’s CodeNext, the Society of Hispanic Professional Engineers’ Virtual STEM Labs, and Girls Who Code’s Summer Immersion. Finally, a number of federal, state, nonprofit and business organizations conduct future workforce programs for targeted students including the federal TRIO program, Advancement Via Individual Determination (AVID), Jobs for America’s Graduates (JAG), and Jobs For the Future (JFF).

Investment in STEM Education

A modestly conservative estimate of the total American investment in STEM education annually is $12 billion, nearly the equivalent of the entire budget of the National Science Foundation or the Environmental Protection Agency.

For fiscal year 2023 the White House budgeted $4.0424 billion for STEM education across 16 agencies that make up the Subcommittee on Federal Coordination in STEM Education (FC-STEM). Total nonprofit and philanthropic investments are more elusive since there are so many, with origins of their dollars often overlapping with state or local government (grants for example), and wildly variable definitions of STEM investments. That said, U.S. charitable giving to the education sector totaled $64 billion in 2019. A reasonable assumption that two percent made its way to STEM education equates to over $1 billion contributed to the overall funding pie. Business and industry in the United States contribute well over $5 billion annually, a conservative estimated proportion of total annual STEM education market share among ten nations, according to a recent study. K-12 schools spend well over $1 billion on STEM, a minimally modest fraction of the $870 billion total spent on K-12 across the U.S. The same figure would likely be true of America’s annual $700 billion higher education expenditure, minimally $1 billion to STEM. Elusive as definitive figures can be in this space, a glaring reality is that funds are streaming into STEM education at a level where measurable results should be expected. Are resources being distributed for maximal impact? Are measures capturing that impact? Is it enough money?

There are approximately 55.4 million K-12 students across the nation. At $12 billion per year on STEM, that comes to about $217 worth of STEM education annually per young American. Is that enough to move the needle? The answer is a qualified “yes” based on Iowa’s experience. The state launched a legislatively funded STEM education program in 2012, investing on average about $4.2 million annually to provide enrichment opportunities for about one-fifth of all K-12 students, or 100,000 per year. To date, about 1.2 million youth have been served through a total investment of about $50 million. That calculates to $42 per student. The result? Among participants: increased standardized test scores in math and science; increased interest in STEM study and careers; a near doubling of post-secondary STEM majors at community colleges and universities. Thus, from Iowa’s experience, the amount of funding toward American STEM education is adequate to expect systemic gains. The qualifier is that Iowa funds flow toward increased equity (most needy are top priority), school-work alignment (career linked curriculum, professional development), and proof of effectiveness (rigorously vetted and carefully monitored programs). Variance in these three factors can separate ambitions from realities.
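The per-student figures above follow from simple division, and the $12 billion national total is roughly the sum of the component estimates given earlier. As a minimal sketch, the Python below reproduces that arithmetic using only numbers quoted in this section. The variable names are illustrative, and treating the “over $1 billion” and “well over $5 billion” estimates as lower bounds is an assumption made here for the sake of the calculation.

```python
# Component estimates quoted in the text, in billions of U.S. dollars per year.
# The "over" / "well over" figures are treated as lower bounds (an assumption).
components_billion = {
    "federal, FY2023 (FC-STEM agencies)": 4.0424,
    "philanthropy (assumed 2% of $64B)": 0.02 * 64,
    "business and industry": 5.0,
    "K-12 school spending": 1.0,
    "higher education spending": 1.0,
}
total_billion = sum(components_billion.values())

# National per-student figure: $12 billion spread over 55.4 million K-12 students.
k12_students = 55.4e6
national_per_student = 12e9 / k12_students

# Iowa: about $50 million in total for about 1.2 million youth served to date.
iowa_per_student = 50e6 / 1.2e6

# Iowa, annual view: about $4.2 million per year for about 100,000 students per year.
iowa_annual_per_student = 4.2e6 / 100_000

print(f"Sum of component lower bounds: ${total_billion:.2f} billion")
print(f"National STEM spending per K-12 student: ${national_per_student:.0f}")
print(f"Iowa spending per student served (cumulative): ${iowa_per_student:.0f}")
print(f"Iowa spending per student served (annual): ${iowa_annual_per_student:.0f}")
```

On these figures, the national per-student amount is roughly five times what Iowa spends per participant, which is the comparison behind the qualified “yes.”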

Ambitions vs. Realities

The federal STEM education strategic plan, Charting a Course for Success: America’s Strategy for STEM Education, identified three consensus goals for U.S. STEM education: a strong STEM literacy foundation for all Americans; increased diversity, equity, and inclusion in STEM study and work; and preparation of the STEM workforce of the future. Three challenges lie between those goals and reality.

Elusive equity. The provision of quality STEM education opportunities to Americans most in need is universally embraced yet difficult to achieve at the program level. Unequal funding of school STEM programs across urban, rural, and suburban public and private school districts equates to less experienced educators and diminished material resources (laboratories, computers, transportation to enrichment experiences) in socioeconomically disadvantaged communities. The challenge is then compounded by the lack of role models to inspire and support youth of underserved subpopulations by race, ability, ethnicity, gender, and geography. Bias, whether implicit or explicit, fuels stereotype threat and identity doubt for too many individuals in schools, colleges, and workplaces, countering diversity and equity efforts.

School-work misalignment. For most learners, the school experience can seem quite different from the higher education which follows, and the work and life experiences beyond. Employer and learner polls unearth misalignment in priorities: employers value in new hires skills such as relationship building, dealing with complexity and ambiguity, balancing opposing views, collaboration, co-creativity, and cultural sensitivity, in addition to expectations of work-related experiences. Schools typically proclaim missions like “Educating each student to be a lifelong learner and a caring, responsible citizen” omitting the importance of employability. Learners feel that school taught them time management, academic knowledge, and analytical skills, while experiential learning remains limited.

Elusive proof. Evidence of effect can be vexingly evasive. The 2022 progress report of the federal STEM plan clarified the difficulty in verifying reach to those most in need: the identification of participants in STEM programs can be restricted for privacy/legality reasons. The gathering of racial, ethnic, and demographic data on STEM participants may often be unreliable given self-reported or observational identifications as well as the fleeting, often anonymous encounters typical of “STEM Night” or informal experiences at science centers, zoos, and museums.

Participant profiles aside, variability in program assessments – design and objectives – makes meaningful meta-analysis challenging, which creates difficulties in scaling promising STEM programs. “We recommend that states and programs prioritize research and evaluation using a common framework, common language, and common tools,” advised a group of evaluators recently.

Exemplars

Plentiful success stories exist at the local, regional, and national levels. The following six exemplars are each funded in whole or in part by federal and/or state grants. The first examples are local education systems (one in-school, one out-of-school) masterfully aligning learning experiences to career preparation. The second pair of examples profile a regional out-of-school STEM program powerfully documenting effects on participants, and an in-school enrichment course demonstrating success. And the final pair of examples are a nationwide equity program successfully preparing STEM educators to effectively serve students of diversity, and an exciting consortium effort aimed at refocusing the entire educational enterprise on skills that matter most.

1.a. School-work alignment at the local level

The Barrow Community School District (BCSD) in Georgia is strongly committed to work-based learning (WBL). All 15,000 students are required to take a sequence of exploratory STEM career classes beginning in ninth grade. Fifteen career pathways are available ranging from computing to health, manufacturing to engineering. It all culminates in an optional senior year internship serving 400 students annually. Interns earn dual-enrollment credits in partnership with local colleges and are paid by the employer host. Interns spend 7.5 to 15 hours per week at work experiences in a hospital, on a construction site, or in a production plant. The district employs a full-time WBL coordinator to oversee, administer, and evaluate, as well as to cultivate community employer partners. Teachers are expected to spend one week in an industry externship every three to five years. The BCSD commitment to a school experience aligned to future careers is something that every student in any district ought to be able to experience.

1.b. Diverse workforce of the future – local-to-global level

The World Smarts STEM Challenge is a community-based, after-school, real-world problem-solving experience for student workforce development. It is funded by a 2021 National Science Foundation ITEST (Innovative Technology Experiences for Students and Teachers) grant in partnership with North Carolina State University. Students in the Washington, D.C. area are assigned to bi-national groups (arranged through a partnership with the International Research and Exchanges Board) to collaborate in solving local/global STEM issues via virtual communications. Groups are mentored by industry professionals. In the process, students develop skills in innovation, investigation, problem-solving, and global citizenship for careers in STEM. Participant diversity is a primary objective. Learners of underrepresented backgrounds, including Black, Hispanic, economically disadvantaged, and female students, are actively recruited from local schools. Educator-facilitators are treated to professional development opportunities to build mentorship skills that support students. The end-product is a World Smarts STEM Activation Kit for implementing the model elsewhere.

2.a. Proof of effect at the regional level out-of-school

NE STEM 4U is an after-school program serving Omaha, Nebraska regional elementary school youth. Programs are hands-on problem-based challenges relevant to children. The staff were interested in the effect of their activities on the excitement, curiosity, and STEM concept gains of participants. The instrument they chose to use is the Dimensions of Success (DoS) observational tool of the P.E.A.R. Institute (Program in Education, Afterschool & Resiliency). The DoS is conducted by a certified administrator who observes and rates four groups of criteria: the learning environment itself, level of engagement in the activity, STEM knowledge and skills gained, and relevancy. Through multiple cohorts over two years, the DoS findings validated the learning approach at NE STEM 4U across dimensions, though with natural variations in positive effect. The upshot is not only that this after-school model is readily replicable, but that the DoS observation tool is a thoroughly vetted, powerful, and readily available instrument that could become a “common tool” in the STEM education program evaluation community.

2.b. Proof of effect at the regional school level

From a modest New York origin in 1997, Project Lead The Way (PLTW) has blossomed into a nationwide tour de force in STEM education, funded by the Kern Foundation, Chevron, and other philanthropies. PLTW is adopted at the community school level, where trained educators integrate units at the pre-K-5 and middle school levels (Launch and Gateway, respectively) or offer courses at the secondary level (Algebra, Computer Science, Engineering, Biomedical). All offerings share a common focus on developing in-demand, transportable skills like problem solving, critical and creative thinking, collaboration, and communication. Career connections are a mainstay. To that end, PLTW is notable for expecting schools to form advisory boards of local employers for feedback and connections. Attitudinal surveys attest to increased student interest in STEM careers.

3.a. Equity at the national level – diversity and inclusion

The National Alliance for Partnerships in Equity (NAPE) offers a wide array of professional development programs related to STEM equity. One module is called Micromessaging to Reach and Teach Every Student. Educators in and out of school convey micro-messages to students at every encounter. Micro-messages are subtle and typically unconscious. Sometimes they are helpful – a smile or eye contact. Sometimes they can be harmful towards individuals or reveal bias towards a group to which a student may belong – a furrowed brow or a stereotypical comment. Exceedingly rare is micro-message expertise in the teacher preparatory pipeline or in standard professional development. Yet micro-messaging is tremendously influential in the self-perceptions of learners as welcome in STEM.

3.b. Equity at the national level – leveling the playing field

Durable skills – e.g., teamwork, collaboration, negotiation, empathy, critical thinking, initiative, risk-taking, creativity, adaptability, leadership, and problem-solving – define jobs of the future. AI and automation cannot replace durable skills. The nonprofit America Succeeds has championed a list of 100 durable skills grouped into 10 competencies, based on industry input. They studied state standards for college and career readiness against those competencies and prescribe remedies to states whose standards fall short (most U.S. states). Durable Skills, packaged by America Succeeds, is an equity service par excellence – every learner can command these 100 enduring skills, setting them up for success.

The Case for Increased Investment in STEM Education R&D at the Federal and State Level

Billions of dollars pour into American STEM education each year. Millions of learners and employers benefit from the investment. Outstanding programs produce undeniably successful results for individuals and organizations. And yet, “This country is in the midst of a STEM and data literacy crisis.” How can that be? Here are some of the factors in play.

Recent STEM Education/Workforce Investment Trends

The biennial Science and Engineering Indicators compiled by the National Science Board were released in March 2024. Noteworthy findings (necessarily a couple of years old given the retrospective analysis) include:

- When it comes to local K–12 education and STEM workforce outcomes, both are widely variable across regions of the United States.

- The timeframe of 2019 to 2022 was a period of sharp decline in mathematics scores on national tests for U.S. elementary and secondary students.

- Among bachelor’s degree holders in science and engineering fields, Hispanic or Latino, Black or African American, and American Indian or Alaska Native individuals are all underrepresented.

- About one-third of master’s and doctoral degree earners in science and engineering fields at U.S. colleges and universities in 2021 were international students on temporary visas.

- Of all STEM workers, 19% are foreign-born.

- Women accounted for 35% of all STEM workers in 2021, compared with 47% of all workers.

- The U.S. science, technology, engineering, and mathematics (STEM) workforce accounts for 24% of the total U.S. workforce (36.6 million people), up from 21% a decade ago. (Federal STEM does not include medicine/health).

- The federal government funds 52% of all academic research and development taking place at colleges and universities (2021).

Contrasting the findings of the NSB against current federal budgets, FY2024 appropriation for STEM education research and development is a work in progress. In comparison to FY23, the budget presented to Congress by the executive branch called for increases for STEM spending across many agencies but not all. The U.S. House and Senate generally propose reductions in spending. The Defense Department’s STEM education line, the National Defense Education Program, for example, is slated for significant reduction (-7.3 percent to -20 percent). The Department of Energy’s Office of Science, which funds STEM education, is slated for a slight increase (+1.7 percent). The same is true for the NSF’s STEM education programs (+1.6 percent). NASA’s Office of STEM Engagement is on track for a slight decrease (-0.3 percent). The Department of Agriculture’s Research and Education budget is down slightly (-1.7 percent). The U.S. Geological Survey’s Science Support budget, which includes human capital development, is down slightly (-1.2 percent). The Department of Education’s Institute for Education Sciences was slated for significant increase by the executive branch, though it is slated for reduction in both the House and Senate budgets. The Department of Homeland Security’s Science and Technology budget, which includes funding for university-based centers and minority institution programs, is set for reduction (-1.3 percent to -19 percent).

Significant STEM education and workforce development support resides within the CHIPS and Science Act of 2022, which has yet to be fully funded by the Congress. An overall trend in shifting R&D, including education, from federal to private sector support means greater reliance on business and industry to invest in STEM program development. The NSB Indicators report highlights this shift in R&D investment: the federal government's share of R&D funding stood at 19 percent in 2021 (down from 30 percent in 2011), while the business sector now funds 75 percent of U.S. R&D.

A bottom-line interpretation is that federal investment in STEM education/workforce development, though significant, can hardly be described as a generational response to an economic and national security crisis.

Emergent Frontiers

Meanwhile, economic Sputniks are circling the globe, all driven by semiconducting silicon and germanium chips. Yet another testament to American STEM education is the home-grown invention of chips. But they are built mostly elsewhere – Taiwan, South Korea, and Japan. Semiconductors lie at the heart of our communications (e.g. cell phones, satellites), transportation (e.g. planes, trains, automobiles), defense (e.g. guidance systems and risk analytics), health (e.g. pacemakers, insulin pumps), lifestyle (e.g. dishwashers, Siri and Alexa), and virtually every other aspect of life and commerce. The federal government committed $53 billion through the 2022 CHIPS and Science Act to expand semiconductor talent development, research, and manufacturing in the U.S., amplified by $231 billion in commitments to semiconductor development by business and industry. Guidance through the National Strategy on Microelectronics Research was recently released by the White House Office of Science and Technology Policy. When fully realized, the CHIPS Act may come to be a generational response to an international adversarial threat far more profound than Sputnik.

Equally compelling and weighty in terms of life, liberty, and the pursuit of happiness is the need to lead in research and development as well as governance around artificial intelligence. An extraordinary evolution of workplace and home life is underway as a result of applications of this new technology. For example, AI dramatically increases precision and thus reduces error in health care. Machine learning is far superior to human eyes at image analysis – MRI or x-ray – for detecting cancer early. On a lighter note, machine learning can dramatically increase the likely appeal of new movies by compressing millions of historic data points and a sea of YouTube videos into a sure box office hit. On the other side are the misuses of AI, both present and potential. The displacement of radiologists, movie script writers, and countless others whose routine, analytical, or creative skills can be performed by robots and neural networked sensors is troublesome, yes, but a mild effect of AI compared to the vulnerability of our privacy, our democratic systems, business and finance integrity, and national defense structures, for starters.

The White House Blueprint for an AI Bill of Rights plants an important stake in the ground around AI safeguards. But it does not speak to the cultivation of future managers of AI. Similarly, the U.S. Department of Education report Artificial Intelligence and the Future of Teaching and Learning advises on risks of and uses for AI in diagnostics and descriptive statistics. However, guidance for preparing the upcoming generation to manage AI is not included. The National Science Foundation supports several AI-education studies that may prove worthy of scaling.

A potpourri of additional emergent trends fuels the current STEM crisis. Many are technological innovations, unearthing powers of manipulation and control that society is ill-prepared to manage. Quantum computing is one such innovation – using the quantum states of subatomic particles, as qubits, to store information. Computers will become exponentially faster and more powerful, possibly solving climate change while also deciphering everyone’s passwords. Relatedly, revolutions in cybersecurity and data analytics may be out ahead of societal grasp. Many educational programs at the local and national levels have emerged in this space, including eCybermission from the Army Education Outreach Program (AEOP), and Data Science Foundations using sports, finance, and other contexts for sense-making, from EverFi.

Not everyone needs to know how a microwave oven works in order to use it effectively. But U.S. citizens bear the responsibility for weighing ethical, equitable, and legal dimensions of STEM advancements as voters, educators, parents, and consumers. Whether it be CRISPR alterations of individuals’ genetics, socioeconomic dimensions of factory automation, morality aspects of Directed Energy Weaponry (DEW), the cost/benefit balance of climate mitigation technologies such as carbon sequestration, and so on, STEM education and workforce development need to be out front. That requires additional investment.

Supply-Demand Imbalance

Emergent technologies will drive job opportunities in the STEM arena that are expected to grow at four times the rate of jobs in other sectors in the coming decade. While it is encouraging that post-secondary STEM certificates and degrees have increased over the last decade (growing from 982,000 in 2012 to 1,310,000 in 2021), this growth is a ripple when the field needs a wave. Further, significant subpopulations of Americans are underrepresented in STEM majors and jobs. Women make up just about one-third of the science and engineering workforce. While racial and ethnic subgroups including Alaska Native, Black or African American, American Indian, and Hispanic or Latino comprise 30% of the total workforce, they hold just 23% of STEM jobs. Rural residency exacerbates those disparities for all subpopulations regarding the STEM education pipeline. While 40% of urban adults have at least a bachelor’s degree, only 25% of rural residents do.

The commitment to diversify the STEM talent pipeline is a universal consensus across federal, state, local, corporate, nonprofit, and philanthropic investors in STEM education and workforce development. Numerous programs devoted to equity and inclusion are at work today with promising results, ripe for scaling.

Impact on Individuals and Society

Of all the arguments supporting increased investment in STEM education R&D to solve our current STEM crisis – tepid federal spending, ominously powerful inventions, and the dearth of talent for advancing and managing those inventions – a fourth argument eclipses each of them: STEM education improves the lives of individuals irrespective of their occupation. And in so doing, STEM education improves communities and the country at large.

Learners fortunate to enjoy quality STEM education develop creativity through imaginative design, interpretation, and representation of investigations. The tools they use strengthen technology literacy. The mode of discovery is highly social, honing communication and cooperation skills. With no sage-on-the-stage they develop independence of thought. Failure happens, forging perseverance and resilience in its wake. Asking and answering questions nurtures curiosity. Defending and refuting ideas cultivates critical thinking. Truth and facts are evidence-based yet always tentative. Empathy is cultivated through alternative interpretations or points of view. And confidence to pursue STEM as a career comes from doing STEM.

The prospect of an entire population of Americans thus equipped is the most compelling case for strategically increased R&D investment in STEM education.

Policy Recommendations for Increasing the Efficacy of Education R&D to Support STEM Education

Where do federal, state, local, corporate, nonprofit, and philanthropic STEM investors look for guidance in the alignment and leveraging of their dollars to nationwide priorities? The closest we have to a “master plan” is the federal STEM education strategic plan mandated by the America COMPETES Act. Updated every five years by the White House Office of Science and Technology Policy in close collaboration with federal agencies, the 2018-2023 plan is due for an update, and it is likely the next iteration will be released soon.

While the STEM community waits, valuable input on the next iteration was recently provided to the OSTP from the STEM Education Coalition. Coalition members (numbering over 600) represent the spectrum of STEM advocates – business and industry, higher education, formal and informal K-12 education, nonprofits, and national/state policy groups – and collectively hold great sway in matters of STEM education nationally. The expiring federal STEM plan is closely reflective of their input, as its successor likely will be as well.

Six of the following ten recommendations build upon the STEM Education Coalition’s priorities, while the remaining four recommendations address gaps in the pipeline from STEM education to workforce pathways.

In order to maximize research and development to improve STEM education, we have distilled ten recommendations:

- Devote resources (human and financial) to both the scaling of, and continued research and development in, interventions that disrupt the status quo when it comes to rural under-reach and under-service in STEM education.

- Devote resources to both the scaling of, and continued research and development in transdisciplinary (a.k.a. Convergent) STEM teaching and learning, formally and informally.

- STEM teacher recruitment and training to support learning characterized on page 11 is a high-value target for investment in both the scaling of existent models as well as research and development on this essential frontier.

- Expand student authentic career-linked or work-based learning experiences to all, earning credits while acquiring job skills, by improving coordination capacity, and crediting – especially earning core (graduation) credits.

- Devote resources to research and development on coordination across components of the STEM education system – in school and out of school, educator preparation – at the local, state and national levels.

- Devote resources to research and development toward improved awareness/communication systems of Federal STEM education agencies.

- Devote resources to research and development on supporting the training of STEM teachers and professionals for career coaching on a real-time, as-needed basis for all youth.

- Devote resources to research and development on the expansion of local/global challenge-solution learning opportunities and how they influence student self-efficacy and STEM career trajectories.

- Devote resources to research and development of a digital platform readily accessible, easily navigable, and comprehensively thorough, for education-providers to harvest effective, vetted STEM programs from across the entire producer spectrum.

- Devote resources to the design and development of a catalog of STEM/workforce education “discoveries” funded by federal grant agencies (e.g., NSF’s ITEST, DRK-12, INCLUDES, CSforAll, etc.) to be used by STEM educators, developers and practitioners.

Recommendation 1. Devote resources (human and financial) to both the scaling of, and continued research and development in, interventions that disrupt the status quo when it comes to rural under-reach and under-service in STEM education.

Aligning to the STEM Ed Coalition’s priority of “Achieving Equity in STEM Education Must Be a National Priority,” this recommendation is central to the success of STEM education. The economic and moral imperative to broaden access to quality STEM education and to high-demand STEM careers is a national consensus. Lack of access and opportunity across rural America, where half of all school districts serve 20% of all youth and where persistent inequality hits members of racial and ethnic minority groups hardest, creates a high-value target.

STEM Excellence and Leadership Project

Identifying and nurturing STEM talent in rural K-12 settings can be a challenge. The Belin-Blank Center for Gifted Education and Talent Development successfully designed and implemented the “STEM Excellence and Leadership Project” at the middle school level. Funded by the NSF’s Advancing Informal STEM Learning program, the project combined flexible professional development, wide-net casting for students, networking within the community, and career counseling, and resulted in increased creativity, critical thinking, and positive perceptions of mathematics and science.

Recommendation 2. Devote resources to both the scaling of, and continued research and development in transdisciplinary (a.k.a. Convergent) STEM teaching and learning, formally and informally.

Aligning to the STEM Ed Coalition’s priority “Science Education Must Be Elevated as a National Priority within a Transdisciplinary Well-Rounded STEM Education,” we need more investment in R&D to understand the transdisciplinary STEM teaching and learning models that improve student outcomes. America’s formal education model remains largely reflective of the 1894 recommendations of the Committee of Ten: annually teach all students History, English, Mathematics, Physics, Chemistry, etc. This prevailing “layer cake” approach serves transdisciplinary education poorly. Even the Next Generation Science Standards, upon which state and district science standards are largely based, focus on developing… “an in-depth understanding of content and develop key skills…” All modern STEM-related challenges facing Generations Z, Alpha, and Beta require an entirely different brand of education – one of transdisciplinary inquiry.

USPTO Motivates Young Innovators and Entrepreneurs

The United States Patent and Trademark Office (USPTO)’s National Summer Teacher Institute (NSTI) on Innovation, STEM, and Intellectual Property (IP) trains teachers to incorporate concepts of making, inventing, and intellectual property creation and protection into classroom instruction, with the goal of inspiring and motivating young innovators and entrepreneurs. To date, the program claims 22,000 hours of IP and invention education training of 444 teachers in 50 states – 110 of whom have inventions – now equipped to spread the power of invention education and IP to hundreds of thousands of learners across the country and the world. We should better understand the program components that enable this kind of transdisciplinary learning.

Recommendation 3. STEM teacher recruitment and training to support learning is a high-value target for investment in both the scaling of existent models as well as research and development on this essential frontier.

Aligning to the STEM Ed Coalition’s priority “Increase the Number of STEM Teachers in Our Nation’s Classrooms,” we need to deploy more education R&D to address America’s well-documented STEM teacher shortage. But the shortage is only half of the challenge we face. The other half is equipping teachers to authentically teach STEM, not merely a discipline underneath the STEM umbrella. Efforts such as the NSF’s Robert Noyce Teacher Scholarship program and the UTeach model support the production of excellent teachers of mathematics and science, but not STEM overall. Teaching in a convergent (transdisciplinary) fashion, through collaborative community partnerships and on complex local/global issues, is beyond the scope and capacity of traditional teacher preparatory models.

Example Programs

Two means for equipping educators to teach STEM are (1) in their pre-professional preparation, and (2) as in-service professional development for disciplinary instructors. Promising examples are flourishing.

- STEM Teaching Certificate. A few U.S. states and some national organizations have built STEM licenses and endorsements. Georgia State University’s STEM Certificate program trains teachers to bring a convergent STEM approach to whatever course they teach: “[candidates] figure out how to work across their schools, with the arts, with connections to other subjects.”

- Iowa now offers STEM teaching endorsements featuring integrated methodology coursework, and a field experience in a STEM job internship or research.

- The National Institute for STEM Education offers a certificate based on 15 competencies including “argumentation” and “data utilization.”

- In-service STEM Externships. Teachers in industry externships discover workplace connections and durable skills important to build in classrooms. Numerous businesses (e.g., 3M), organizations (e.g., Aerospace/NASA), and states (e.g., Iowa’s NSF ITEST-funded externships) conduct variations on the concept, with compelling results.

Recommendation 4. Expand student authentic career-linked or work-based learning experiences to all, earning credits while acquiring job skills, by improving coordination capacity, and crediting – especially earning core (graduation) credits.

Aligning to the STEM Ed Coalition’s priority to “Support Partnerships with Community Based STEM Organizations, Out of School Providers and Informal Learning Providers,” education R&D needs to better understand career-based learning models that work and deploy these evidence-based practices at scale.

Example Programs

With all 50 U.S. states aggressively pursuing work-based learning (WBL) policies and support, there is an opportunity to study and codify what states are learning in order to improve and iterate faster. According to the Education Commission of the States, 33 states have a definition for WBL, though the definitions vary. Nearly all states report WBL as a state strategy for their Workforce Innovation and Opportunity Act (WIOA) profile. Twenty-eight states legislate funding to support WBL. Fewer than half of all states permit WBL to count for graduation credits. Of all states, Tennessee presents a particularly aggressive WBL profile worthy of scale/replication.

Recommendation 5. Devote resources to research and development on coordination across components of the STEM education system – in school and out of school, educator preparation – at the local, state and national levels.

Aligning to the STEM Ed Coalition’s priority to “Take a Systemic Approach to Future STEM Education Interventions,” more R&D should be deployed to study ecosystem models to understand the components that lead to student outcomes.

The STEM learning that takes place during the K-12 school day may or may not mesh well with the STEM learning that takes place at museum nights or at summer camp. In both instances, it may or may not align well with local, state, or national assessments. The preparation of educators is widely variable. The curricular content classroom-to-classroom and state-to-state varies. To drop novel grant-funded interventions into the mix is a random act of hope.

Example Programs

STEM Learning Ecosystems now number over 100 across the U.S., providing vertebral backbone to a national coordinative skeleton for STEM education. Formally designated by their membership in the STEM Learning Ecosystems Community of Practice supported by the Teaching Institute for Excellence in STEM (TIES), they each unite “…pre-K-16 schools; community-based organizations, such as after-school and summer programs; institutions of higher education; STEM-expert organizations, such as science centers, museums, corporations, intermediary and non-profit organizations and professional associations; businesses; funders; and informal experiences at home and in a variety of environments” to “…spark young people’s engagement, develop their knowledge, strengthen their persistence and nurture their sense of identity and belonging in STEM disciplines.” Every one of America’s 20,000 cities and towns ought to have a STEM Ecosystem. Just 19,900 to go.

Recommendation 6. Devote resources to research and development toward improved awareness/communication systems of Federal STEM education agencies.

Aligning to the STEM Ed Coalition’s priority to “Clarify and Define the Role of Federal Agencies and OSTP in Supporting STEM Education,” we should utilize R&D and inspiration from other fields to ensure we are propagating knowledge and systems in ways that foster increased transparency and evidence-use.

Awareness is the weak link in the chain of federal STEM education outreach to consumers at local levels. Seventeen federal agencies engage in STEM education via 156 programs spanning pre-K-12 formal and informal, higher education, and adult education.

In 2018-19, the OSTP and the Federal Coordination in STEM subcommittee (FC-STEM) pushed strongly to build STEM.gov or STEMeducation.gov in the spirit of AI.gov and Grants.gov: a one-stop clearinghouse through which Americans can explore and discover funding, programs, and expertise in STEM. To date, the closest analog is https://www.ed.gov/stem.

Example Programs

Discrete programs of various federal agencies have employed clever tactics for awareness and communication, as described in the 2022 Progress Report on the Implementation of the Federal STEM Education Strategic Plan. The AmeriCorps program, for example, partnered with Mathematica to build a web-based interactive SCALER tool usable by education professionals, local education agencies, state education agencies, nonprofits, state and local government agencies, universities and colleges, tribal nations, and others to request participants to address local challenges they have identified, including STEM. Similarly, the National Institute of Standards and Technology launched its NIST Educational STEM Resource registry (NEST-R) to provide wide access to NIST educational and workforce development content, including STEM resource records. Can the concept be broadened to a grand unifying collective?

Recommendation 7. Devote resources to research and development on supporting the training of STEM teachers and professionals for career coaching on a real-time, as-needed basis for all youth.

Gen Z and Gen Alpha may end up in jobs like machine learning tech, molecular medical therapist, cryptocurrency auditor, big data distiller, climate change mitigator, or jetpack mechanic. From whom can they expect good career coaching? It is unrealistic to expect that their school counselors can keep up: with an average caseload of 385 students across all disciplines, their hands are full. STEM teachers, both the disciplinary and the integrated type, are best positioned to take on more responsibility for career coaching, with the help of counselors, administrators, and librarians. In fact, it is an all-hands-on-deck challenge.

Example Programs

Meaningful Career Conversations is a program that began in Colorado and is now spreading to other states. It is a light training experience of four hours that equips educators, and others with whom youth come into contact, to conduct conversations that steer students toward reflection, exploration, and consideration of career pathways of interest. Trainings are based upon starters and prompts that get students talking about and reflecting on their strengths and interests, such as “What activities or places make you feel safe and valued? Why?” It is not a silver bullet, but a model of distributed responsibility which, by engaging core teachers and other adults in career guidance, can help more students find their way toward a STEM career.

Recommendation 8. Devote resources to research and development on the expansion of local/global challenge-solution learning opportunities and how they influence student self-efficacy and STEM career trajectories.

The standardization of a vision for STEM in classrooms across America will take time and resources. In the meantime, programs like MIT Solve can fast-track authentic learning experiences in school and after school. It is the ultimate student-centeredness to invite groups of youth to think big – identify challenges for which they are enthused and tap all imaginable resources in dreaming up solutions – to command their own learning.

Example Programs

Common in higher education are capstone projects, applied coursework, even entire college missions (e.g., Olin College) that center the student learning experience around local/global challenges and solutions.

For citizens of all ages there are opportunities like Changemakers Challenges, and the “Reinvent the Toilet” competition of the Gates Foundation.

At the K-12 level, FIRST Lego League teams learn about robotics through humanitarian themes such as adaptive technologies for the disabled. The World Food Prize offers student group projects focused on global food security challenges. Future Cities and Invention Convention follow a similar format. These well-evaluated programs are ripe for expansion or replication.

Recommendation 9. Devote resources to research and development of a digital platform readily accessible, easily navigable, and comprehensively thorough, for education-providers to harvest effective, vetted STEM programs from across the entire producer spectrum.