Saving Billions on the US Nuclear Deterrent

The United States Air Force is replacing its current arsenal of Minuteman III intercontinental ballistic missiles (ICBMs) with an entirely new type of ICBM, known as Sentinel (previously known as the Ground-Based Strategic Deterrent or GBSD). Sentinel’s price tag continues to grow beyond initial expectations, with the program on track to become one of the country’s most expensive nuclear modernization projects ever.

As it stands, the Sentinel program is risky, draws funding away from more urgent priorities, and will exacerbate the Pentagon’s budget crisis. A better approach would be to life-extend a portion of the current ICBM force (the Minuteman III) in the near term in order to spread the costs of nuclear modernization out over the longer term. This approach will ensure that the United States can field a capable ICBM force on a continuous basis without compromising other critical security priorities.

Challenge and Opportunity

The Sentinel ICBM program involves (1) a like-for-like replacement of the 400 Minuteman III ICBMs currently deployed across Colorado, Montana, Nebraska, North Dakota, and Wyoming, (2) the creation of a full set of test-launch missiles, and (3) upgrades to launch facilities, launch control centers, and other supporting infrastructure. Sentinel would keep ICBMs in the United States’ nuclear arsenal until at least 2075.

Unfortunately, the Sentinel program is riddled with challenges and flawed assumptions that have significantly increased both its cost and risk, and that will continue to do so over the coming years, as described below.

Sentinel’s price tag continues to grow beyond initial expectations

The Sentinel program’s ever-increasing price tag indicates that the program is not nearly as cost-effective as initially projected. In 2015, the Air Force issued a preliminary estimate that Sentinel (then “GBSD”) would cost $62.3 billion to acquire. One year later, the Pentagon’s Cost Analysis & Program Evaluation (CAPE) office projected that Sentinel could more realistically cost $85 billion, a 37% increase from the Air Force’s estimate. In August 2020, CAPE’s projected Sentinel acquisition cost jumped again to $95.8 billion, with total life-cycle costs reaching as high as $263.9 billion. In October 2020, the Pentagon reported that CAPE’s latest life-cycle estimate was $1.9 billion greater than its 2016 estimate, but did not explain why the estimate had grown. In January 2024, the Air Force notified Congress that the Sentinel program would cost 37% more than projected and take at least two years longer than estimated, an overrun large enough to constitute a “critical” breach of Congress’ Nunn-McCurdy Act. The overrun put Sentinel’s anticipated cost at approximately $130 billion. In July 2024, upon certifying the Sentinel program to continue after its Nunn-McCurdy breach, the Pentagon announced a new CAPE estimate of $140.9 billion, constituting an 81% increase compared to the 2020 estimate.
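
As a rough consistency check on the figures above, the sketch below recomputes two of the growth steps. Because the text does not say which baseline the January 2024 “37%” refers to, it assumes (an assumption, not a sourced fact) that it applies to CAPE’s $95.8 billion 2020 acquisition estimate; the constant names are illustrative.

```python
def pct_increase(old: float, new: float) -> float:
    """Percentage growth from an earlier cost estimate to a later one."""
    return (new - old) / old * 100


# Acquisition estimates quoted in the text, in billions of dollars.
AIR_FORCE_2015 = 62.3
CAPE_2016 = 85.0
CAPE_2020 = 95.8

# Step 1: 2015 Air Force estimate -> 2016 CAPE estimate.
print(f"2015 -> 2016 growth: {pct_increase(AIR_FORCE_2015, CAPE_2016):.1f}%")

# Step 2 (assumption): the January 2024 "37%" applied to the 2020 CAPE figure.
print(f"2020 estimate x 1.37: ${CAPE_2020 * 1.37:.1f} billion")
```

The first ratio comes out at about 36.4%, which matches the stated 37% to within rounding of the $85 billion figure, and the second lands near the “approximately $130 billion” cited for January 2024.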

As Sentinel matures over the coming years and schedule delays compound these cost issues, it will likely incur further cost increases. Sentinel is on track to become one of the country’s most expensive nuclear-related line items over the next decade.

Sentinel draws funding away from more urgent priorities

By its own admission, the Pentagon cannot afford all the weapons it wants to buy. In July 2020, the then-Air Force Chief of Staff, General Dave Goldfein, remarked that the Sentinel program represents “the first time that the nation has tried to simultaneously modernize the nuclear enterprise while it’s trying to modernize an aging conventional enterprise,” and added that “[t]he current budget does not allow you to do both.”

Funding tradeoffs at the Pentagon have already become apparent. In early 2020, for example, a decision to dramatically increase the budget of the National Nuclear Security Administration directly led to a Virginia-class submarine being cut from the Navy’s budget plan. Compounding the problem is the fact that the Pentagon is currently facing a “bow wave” of major expenditures. The bills for several big-ticket procurement projects, including Sentinel, the Long-Range Standoff Weapon, the F-35 fighter, the B-21 bomber, the Columbia-class ballistic missile submarine, and the KC-46A tanker, will all come due over the next decade. With growing recognition that the Pentagon simply cannot afford to foot so many major bills simultaneously, these large procurement projects have been characterized as “fiscal time bombs.”

The Sentinel program is already impacting funding of other defense programs with its latest batch of cost overruns. The Pentagon admitted in a July 2024 press release that it certified the Sentinel program to continue despite its critical cost and schedule overruns, partly because Sentinel “is a higher priority than programs whose funding must be reduced to accommodate the growth in cost of the program.” In reality, however, the Air Force does not yet know which programs will face funding reductions to offset Sentinel’s increase. General James Slife, Vice Chief of Staff of the Air Force, stated in July 2024 that because Sentinel’s cost growth will be realized several years from now, “it is a decision for down the road to decide what trade-offs we’re going to need to make in order to be able to continue to pursue the Sentinel program.”

With these funding issues in mind, it is imperative to think carefully about whether spending $141 billion to acquire Sentinel right now makes sense. It may well be a better use of funds to focus on pressing security objectives, such as hardening U.S. command-and-control systems against cyber threats.

Life-extending part or all of the Minuteman III ICBM force—instead of moving to acquire Sentinel as quickly as possible—would constitute a cheaper and less risky option for the United States to field a viable ICBM force at New START levels for at least the next two decades. The Pentagon’s primary justification for pursuing the Sentinel program was the assumption that building an entirely new missile force from scratch would be cheaper than life-extending the Minuteman III force. This assumption stands in stark contrast to an Air Force-sponsored analysis that “[a]ny new ICBM alternative will very likely cost almost two times—and perhaps even three times—more than incremental modernization of the current Minuteman III system.”

The Pentagon’s assumption also does not match historical precedent. In 2012, after the completion of a comprehensive round of Minuteman III life-extension programs, the Air Force admitted that it cost only $7 billion to turn the Minuteman III ICBMs into “basically new missiles except for the shell.” There is little public evidence to suggest that a similar round of life-extension programs would cost significantly more. Even if the programs were more expensive, the added expense is unlikely to come anywhere close to Sentinel’s projected $141 billion acquisition cost; tripling the previous $7 billion price tag for Minuteman III upgrades would still amount to less than one-sixth of the acquisition price of Sentinel.
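
The one-sixth comparison can be checked directly. This minimal sketch uses the nominal figures quoted in this memo (the 2012 $7 billion and the July 2024 $140.9 billion CAPE estimate) and makes no inflation adjustment, which a fuller comparison across those years would need.

```python
PREVIOUS_LEP_COST = 7.0        # 2012 Minuteman III life-extension total, $ billions (nominal)
SENTINEL_ACQUISITION = 140.9   # July 2024 CAPE estimate, $ billions (nominal)

tripled_lep = 3 * PREVIOUS_LEP_COST
share_of_sentinel = tripled_lep / SENTINEL_ACQUISITION

print(f"Tripled life-extension cost: ${tripled_lep:.0f} billion")
print(f"Share of Sentinel acquisition cost: {share_of_sentinel:.1%}")
print("Below one-sixth:", share_of_sentinel < 1 / 6)
```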

If a life-extension option were pursued in lieu of Sentinel, it is likely that the Minuteman III’s critical subsystems would eventually need to be replaced. Replacement appears to be technologically feasible. Lieutenant General Richard Clark, the Air Force’s deputy chief of staff for strategic deterrence and nuclear integration, testified to the House Armed Services Committee in March 2019 that it would be possible to extend the lives of the Minuteman III’s propulsion and guidance systems one more time, despite his stated preference for proceeding with the GBSD. Furthermore, a 2014 RAND report commissioned by Air Force Global Strike Command found “no evidence that would necessarily preclude the possibility of long-term sustainment.” In fact, the report noted, “we found many who believed the default approach for the future is incremental modernization, that is, updating the sustainability and capability of the Minuteman III system as needed and in perpetuity.”

Plan of Action

The next administration should revise its nuclear employment guidance to accept a slightly higher threshold for risk with regard to its ICBM force. This action is critical for enabling a life-extended Minuteman III force because the Pentagon’s interest in pursuing Sentinel is largely driven by its own interpretation of presidential nuclear-employment guidance. If the Air Force believes that the Minuteman III might dip below a preset reliability threshold, then the service will push for Sentinel in order to meet the current nuclear-employment guidance.

Revising the guidance to accept a slightly higher threshold for risk would reduce the need to pursue Sentinel immediately. This revision would first be publicly reflected in the next administration’s Nuclear Posture Review, and would then be translated into policy by the Pentagon.

It is important to emphasize that (1) presidential revisions to the nuclear-employment guidance are not unusual, and (2) revising the nuclear-employment guidance would have little bearing on strategic stability. In a nuclear first strike, an adversary would still be forced to target every silo. This means that a life-extended Minuteman III force would theoretically produce the same deterrence effect as a brand-new Sentinel force.

To provide additional support for the guidance revision, the next administration could launch a National Security Council-led review of the role of ICBMs in U.S. nuclear strategy. In particular, this review would assess the feasibility and cost of a Minuteman III life-extension program. The review would also consider whether such a program could be further enabled by reducing the number of deployed ICBMs or the number of annual flight tests, or by pursuing new forms of nondestructive booster reliability testing (see FAQ for more details).

Conclusion

Life-extending the nation’s existing arsenal of Minuteman III missiles instead of immediately pursuing the Sentinel program is the best way to ensure that the United States can continue to field a capable ICBM force without sacrificing funding for other critical national-security priorities.

This course of action could buy the United States as much as twenty years of additional time to decide whether to pursue or cancel a follow-on Sentinel program, thus allowing the United States to further spread out costs and reconsider the future role of ICBMs in U.S. nuclear posture.

This action-ready policy memo is part of Day One 2025 — our effort to bring forward bold policy ideas, grounded in science and evidence, that can tackle the country’s biggest challenges and bring us closer to the prosperous, equitable and safe future that we all hope for, whoever takes office in 2025 and beyond.

PLEASE NOTE (February 2025): Since publication, several government websites have been taken offline. We apologize for any broken links to once-accessible public data.

While accepting a higher threshold for risk with the ICBM force may sound politically difficult, in reality it has little bearing on strategic stability. The Air Force projects that a 30-year-old missile core has an estimated failure probability of 1.3%, which increases exponentially each year. As long as the expected failure rate does not climb too high, though, an adversary conducting a nuclear first strike would still have to target every silo, because there would be no way of knowing which missiles were functional and which were duds. This means that a life-extended Minuteman III force would theoretically produce the same deterrence effect as a brand-new Sentinel force. Additionally, it is extremely unlikely that the United States would ever elect to launch only a small number of ICBMs in a crisis. In a large salvo, even a 10% failure rate across all 400 launched ICBMs would still leave approximately 360 fully functional missiles to reach their targets.
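
The 360-missile figure is the expected value of a simple binomial model. A minimal sketch, assuming (as a simplification the memo does not state) that each missile fails independently with the same probability:

```python
import math


def functional_missiles(launched: int, failure_rate: float) -> tuple[float, float]:
    """Mean and standard deviation of the number of missiles that work, treating
    each launch as an independent trial with the same failure probability."""
    expected = launched * (1 - failure_rate)
    std_dev = math.sqrt(launched * failure_rate * (1 - failure_rate))
    return expected, std_dev


for rate in (0.10, 0.013):
    mean, sd = functional_missiles(400, rate)
    print(f"failure rate {rate:.1%}: about {mean:.0f} functional (sd {sd:.1f})")
```

Under that assumption, a 10% failure rate leaves the count of working missiles typically within about 6 of 360 (one standard deviation), and the Air Force’s 1.3% figure gives an expected value of about 395.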

Testing is critical to ensure that the Minuteman III missiles continue to function as designed if they are life-extended. However, there is a limited quantity of Minuteman III boosters that can be used as test assets. This problem was identified early in the Sentinel acquisition process by both internal and external analysts, who noted that increasing the average ICBM test rate from three to four and a half test firings per year, as was done in 2017, would inevitably exhaust the surplus boosters and lead to a depletion of the currently deployed ICBM force around 2040. There are several ways to overcome this obstacle without building a brand-new missile force.

One option would be to lower the average test rate from four and a half tests per year back down to three. If the Air Force were prepared to accept a slight additional risk of booster failure (which, as discussed above, would have no discernible effect on strategic stability), then the number of tests per year could realistically be decreased. To that end, a 2017 Center for Strategic and International Studies report estimated that if the United States chose to re-core its ICBMs and move the firing rate back to three tests per year, then it would be possible to maintain the Minuteman III force at New START levels (400 deployed ICBMs) until 2050.

Another option would be to reduce the number of deployed ICBMs. Again, doing so would not meaningfully affect deterrence but would make a significant quantity of additional missiles available for testing purposes. For example, if the Pentagon reduced its deployed ICBM force from 400 to 300 missiles, it could maintain the current testing rate of four and a half tests per year without the missile inventory dropping below 300 until approximately 2060. A portion of the missiles used for testing could also be converted into commercial or governmental space launch vehicles, thus eliminating the requirement to eventually “re-core” them to ICBM standards.
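
Both options come down to simple division: spare boosters divided by tests per year gives the years remaining before flight testing eats into the deployed force. The sketch below assumes a constant test rate, no replenishment from re-coring, and a hypothetical pool of 90 spare boosters; the real surplus is not given in this memo, so the outputs illustrate proportions only and do not reproduce the 2050 or 2060 estimates above.

```python
def years_until_shortfall(spare_boosters: float, tests_per_year: float) -> float:
    """Years before destructive flight tests draw the inventory below the deployed
    requirement, assuming a constant test rate and no replenishment."""
    return spare_boosters / tests_per_year


SPARES = 90  # hypothetical surplus pool, NOT a figure from this memo

for rate in (4.5, 3.0):
    print(f"400 deployed, {rate} tests/yr: {years_until_shortfall(SPARES, rate):.1f} years")

# Reducing deployment from 400 to 300 moves 100 missiles into the test pool.
print(f"300 deployed, 4.5 tests/yr: {years_until_shortfall(SPARES + 100, 4.5):.1f} years")
```

Whatever the true spare count, dropping from 4.5 to 3 tests per year stretches the runway by 50%, and each 100 missiles removed from deployment adds roughly 22 years of testing at 4.5 tests per year.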

A third option would be for the Air Force to explore nondestructive methods for testing the reliability of its solid rocket motors. George Perkovich and Pranay Vaddi suggest in their 2021 “Model Nuclear Posture Review” that this could be achieved through technological advances in ultrasound and computed tomography. The Air Force could also consider adapting the Navy’s nondestructive-testing techniques, which involve sending a probe into the bore to measure the elasticity of the propellant, to evaluate the reliability of the Minuteman III force. As Steve Fetter and Kingston Reif noted in 2019, these types of nondestructive testing methodologies “would permit the lifetime of each motor to be estimated on an individual basis. Rather than retire all motors at an age when a small percentage are believed to be no longer reliable, only those particular motors with measurements indicating unacceptable aging could be retired.” Nondestructive testing may be the most effective option, because if successful it would eliminate the attrition problem altogether.
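
To make the Fetter and Reif distinction concrete, here is a toy comparison of the two retirement rules. The motor records, the normalized `measured_margin` health score, and both thresholds are hypothetical placeholders, not real propellant data or Air Force criteria.

```python
from dataclasses import dataclass


@dataclass
class Motor:
    serial: str
    age_years: float
    measured_margin: float  # hypothetical normalized propellant-health score; 1.0 = as new


def retire_by_age(motors: list[Motor], max_age: float) -> list[str]:
    """Fleet-wide rule: retire every motor older than the age limit."""
    return [m.serial for m in motors if m.age_years > max_age]


def retire_by_measurement(motors: list[Motor], min_margin: float) -> list[str]:
    """Individual rule: retire only motors whose own measurement shows unacceptable aging."""
    return [m.serial for m in motors if m.measured_margin < min_margin]


# Hypothetical fleet for illustration only.
fleet = [
    Motor("A", 28, 0.92),
    Motor("B", 31, 0.88),
    Motor("C", 33, 0.61),
    Motor("D", 26, 0.95),
]

print("Age-based:", retire_by_age(fleet, max_age=30))
print("Measurement-based:", retire_by_measurement(fleet, min_margin=0.7))
```

With these made-up numbers, the age rule retires motors B and C, while the measurement rule retires only C, the one whose own reading indicates unacceptable aging; the difference is the attrition that individual testing could avoid.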

Despite the Pentagon’s repeated claims that the Minuteman III ICBM will become “unviable” after 2030, the Minuteman III’s critical subsystems have remained highly reliable as they age. There is little evidence to suggest that this will change within the next decade. The Minuteman III’s guidance and propulsion modules were modernized during the 2000s and continue to perform successfully during tests.

A March 2020 Air Force Nuclear Weapons Center briefing to industry partners also acknowledged that the useful life of the Minuteman III force could be extended with “better NS-50 [guidance module] failure data,” because “current age-out on guidance is an engineering ‘best guess’ with no current data.” This suggests that the Air Force’s prediction about the post-2030 “unviability” of these subsystems is based on little actual evidence.

Importantly, the 2030 benchmark for the Minuteman III’s “unviability” appears to have been selected by Congress, not by the Air Force. A consequential amendment inserted into the FY 2007 National Defense Authorization Act directed the Secretary of the Air Force to “modernize Minuteman III intercontinental ballistic missiles in the United States inventory as required to maintain a sufficient supply of launch test assets and spares to sustain the deployed force of such missiles through 2030.” This amendment ultimately had a significant impact on the timeline of Sentinel because, as Air Force historian David N. Spires describes, “Although Air Force leaders had asserted that incremental upgrades, as prescribed in the analysis of land-based strategic deterrent alternatives, could extend the Minuteman’s life span to 2040, the congressionally mandated target year of 2030 became the new standard.”

It is telling that the Navy is not currently contemplating the purchase of a brand-new missile to replace its current arsenal of Trident submarine-launched ballistic missiles, and instead plans to conduct a second life-extension to keep them in service until 2084. This life-extension is enabled in large part by the Navy’s unique nondestructive method of testing its boosters, described above. In January 2021, Vice Admiral Johnny Wolfe Jr., the Navy’s Director for Strategic Systems Programs, remarked that “solid rocket motors, the age of those we can extend quite a while, we understand that very well.”

To demonstrate this fact, in 2015 the Navy conducted a successful Trident SLBM flight test using the oldest 1st-stage solid rocket motor flown to date (over 26 years old), as well as 2nd- and 3rd-stage motors that were 22 years old. Rather than replace these missiles as they exceed the planned design life of 25 years, the Navy stated in 2015 that they “are carefully monitoring the effects of age on our strategic weapons system and continue to perform life extension and maintenance efforts to ensure reliability.”

Rather than conduct similar life-extension operations, the Air Force has elected to completely replace its Minuteman III force with the brand-new, highly expensive Sentinel.

A 2016 report to Congress reveals that the Air Force baked multiple flawed assumptions into its cost-assessment process, the most influential of which was the presumption that the United States would continue deploying 400 ICBMs until 2075. However, as researchers from the Carnegie Endowment for International Peace explained in a January 2021 report, “Basing analysis on a straight-line requirement projected all the way to 2075 practically predetermines the outcome.” Rather than prematurely selecting these benchmarks, the Pentagon’s analysis could have considered which options were most cost-effective under a variety of circumstances.

In reality, ICBM force posture is neither sacred nor immutable, and there is little security rationale behind the Pentagon’s selections of the number 400 and the year 2075. The year 2075 is a relatively arbitrary timeframe that is not codified in either the Nuclear Posture Review or other key strategic documents. Moreover, a 2013 inter-agency review (featuring the participation of the Department of State, the Department of Defense, the National Security Council, the intelligence community, the Joint Chiefs of Staff, U.S. Strategic Command, and then-Vice President Joe Biden’s office) ultimately found that U.S. deterrence requirements could be met by reducing U.S. nuclear forces by up to one-third.

Yet despite their lack of strategic rationale, these pre-selected force requirements and exaggerated timelines heavily bias the Pentagon’s cost-assessment process in favor of Sentinel. In particular, if the Pentagon had selected a different ICBM retention timeline (2050, for example, or even 2100), then a revised cost assessment would have suggested that life-extending the Minuteman III force would be significantly more cost-effective than building an entirely new Sentinel missile force from scratch.

How to Prompt New Cross-Agency and Cross-Sector Collaboration to Advance Learning Agendas

The 2018 Foundations for Evidence-Based Policymaking Act (Evidence Act) promotes a culture of evidence within federal agencies. A central part of that culture entails new collaboration between decision-makers and those with diverse forms of expertise inside and outside of the federal government. The challenge, however, is that new cross-agency and cross-sector collaborative relationships don’t always arise on their own. To overcome this challenge, federal evaluation staff can use “unmet desire surveys,” an outreach tool that prompts agency staff to reflect on how the success of their programs relates to what is happening in other agencies and outside government, and how engaging with these other programs and organizations would help their work be more effective. It also prompts them to consider the situation from the perspective of potential collaborators: why should they want to engage?

The unmet desire survey is an important data-gathering mechanism that provides actionable information to create new connections between agency staff and people—such as those in other federal agencies, along with researchers, community stakeholders, and others outside the federal government—who have the information they desire. Then, armed with that information, evaluation staff can use the new Evidence Project Portal on Evaluation.gov (to connect with outside researchers) and/or other mechanisms (to connect with other potential collaborators) to conduct matchmaking that will foster new collaborative relationships. Using existing authorities and resources, agencies can pilot unmet desire surveys as a concrete mechanism for advancing federal learning agendas in a way that builds buy-in by directly meeting the needs of agency staff.

Challenge and Opportunity

A core mission of the Evidence Act is to foster a culture of evidence-based decision-making within federal agencies. Since the problems agencies tackle are often complex and multidimensional, new collaborative relationships between decision-makers in the federal government and those in other agencies and in organizations outside the federal government are essential to realizing the Evidence Act’s vision. Along these lines, Office of Management and Budget (OMB) implementation guidance stresses that learning agendas are “an opportunity to align efforts and promote interagency collaboration in areas of joint focus or shared populations or goals” (OMB M-19-23), and that more generally a culture of evidence “cannot happen solely at the top or in isolated analytical offices, but rather must be embedded throughout each agency…and adopted by the hardworking civil servants who serve on behalf of the American people” (OMB M-21-27).

New cross-agency and cross-sector collaborative relationships rarely arise on their own. They are voluntary, and between people who often start off as strangers to one another. Limited resources, lack of explicit permission, poor prior experiences, differing incentives, and stereotypes are all challenges to persuading strangers to engage with each other. In addition, agency staff may not previously have spent much time thinking about how new collaborative relationships could help answer questions posed by their learning agenda, or even that accessible mechanisms exist to form new relationships. This presents an opportunity for new outreach by evaluation staff, to expand a sense of what kinds of collaborative relationships would be both valuable and possible.

For instance, the Department of the Interior’s (DOI’s) 2024 Learning Agenda asks: What are the primary challenges to training a diverse, highly skilled workforce capable of delivering the department’s mission? The DOI itself has vital historical and other contextual information for answering this question. Yet officials from other departments likely have faced (or currently face) a similar challenge, and are in a position to share what they’ve tried so far, what has worked well, and what has fallen short. In addition, researchers who study human resource development could share insights from the literature, as well as possibly partner on a new study to help answer this question in the DOI context.

Each department and agency is different, with its own learning agenda, decision-making processes, capacity constraints, and personnel needs. And so what is needed are new forms of informal collaboration (knowledge exchange) and/or formal collaboration (projects with shared ownership, decision-making authority, and accountability) that foster back-and-forth interaction. The challenge, however, is that agency staff may not consider such possibilities without being prompted to do so or may be uncertain how to communicate the opportunity to potential collaborators in a way that resonates with their goals.

This memo proposes a flexible tool that evaluation staff (e.g., evaluation officers at federal agencies) can use to generate buy-in among agency staff and leadership while also promoting collaboration as emphasized in OMB guidance and in the Evidence Act. The tool, which has already proven valuable in the federal government (see FAQs), in local government, and in the nonprofit sector, is called an “unmet desire survey.” The survey measures unmet desires for collaboration by prompting staff to consider the following types of questions:

- Which learning agenda question(s) are you focused on? Is there information about other programs within the government and/or information that outside researchers and other stakeholders have that would help answer it? What kinds of people would be helpful to connect with?

- Are you looking for informal collaboration (oriented toward knowledge exchange) or formal collaboration (oriented toward projects with shared ownership, decision-making authority, and accountability)?

- What hesitations (perhaps due to prior experiences, lack of explicit permission, stereotypes, and so on) do you have about interacting with other stakeholders? What hesitations do you think they might have about interacting with you?

- Why should they want to connect with you?

- Why do you think these connections don’t already exist?
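
If evaluation staff collect responses in a spreadsheet or database, a consistent record layout makes them easier to sort later for matchmaking. One possible layout, mirroring the questions above, is sketched below; the class and field names are hypothetical and are not drawn from any existing federal template or from the Evidence Project Portal.

```python
from dataclasses import dataclass, field
from enum import Enum


class CollaborationType(Enum):
    INFORMAL = "knowledge exchange"
    FORMAL = "shared ownership, decision-making authority, and accountability"


@dataclass
class UnmetDesireResponse:
    """One respondent's answers, one field per survey question."""
    learning_agenda_question: str            # which learning agenda question they focus on
    desired_contacts: list[str]              # kinds of people worth connecting with
    collaboration_type: CollaborationType    # informal or formal
    own_hesitations: list[str] = field(default_factory=list)            # respondent's side
    others_likely_hesitations: list[str] = field(default_factory=list)  # the other side
    value_to_collaborator: str = ""          # why they should want to connect
    why_missing: str = ""                    # why the connection doesn't exist yet


# Hypothetical example built from the DOI learning-agenda question cited earlier.
example = UnmetDesireResponse(
    learning_agenda_question=(
        "What are the primary challenges to training a diverse, "
        "highly skilled workforce capable of delivering the department's mission?"
    ),
    desired_contacts=[
        "officials in other departments facing similar training challenges",
        "researchers who study human resource development",
    ],
    collaboration_type=CollaborationType.INFORMAL,
    own_hesitations=["unclear whether outreach to other departments is permitted"],
    value_to_collaborator="DOI can share its own historical and contextual information",
)
```

Keeping the hesitation fields separate from the substantive ones preserves the relational information that later matchmaking needs, rather than recording only topics.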

These questions elicit critical insights about why agency staff value new connections, and they are highly flexible. For instance, in the first question posed above, evaluation staff can choose to ask about new information that would be helpful for any program or only about information relevant to programs that are top priorities for their agency. In other words, unmet desire surveys need not add one more thing to the plate; rather, they can be used to accelerate collaboration directly tied to current learning priorities.

Unmet desire surveys also legitimize informal collaborative relationships. Too often, calls for new collaboration in the policy sphere immediately segue into overly structured meetings that fail to uncover promising areas for joint learning and problem-solving. Meetings across government agencies are often scripted presentations about each organization’s activities, providing little insight into ways they could collaborate to achieve better results. Policy discussions with outside research experts tend to focus on formal evaluations and long-term research projects that don’t surface opportunities to accelerate learning in the near term. In contrast, unmet desire surveys explicitly legitimize the idea that diverse thinkers may want to connect only for informal knowledge exchange rather than formal events or partnerships. Indeed, even single conversations can greatly impact decision-makers, and, of course, so can more intensive relationships.

Whether the goal is informal or formal collaboration, the problem that needs to be solved is both factual and relational. In other words, the issue isn’t simply that strangers do not know each other—it’s also that strangers do not always know how to talk to one another. People care about how others relate to them and whether they can successfully relate to others. Uncertainty about relationality prevents people from interacting with others they do not know. This is why unmet desire surveys also include questions that directly measure hesitations about interacting with people from other agencies and organizations, and encourage agency staff to think about interactions from others’ perspectives.

The fact that the barriers to new collaborative relationships are both factual and relational underscores why people may not initiate them on their own. That’s why measuring unmet desire is only half the battle; it’s also important to ensure that evaluation staff have a plan in place to conduct matchmaking using the data gathered from the survey. One way is to create a new posting on the Evidence Project Portal (especially if the goal is to engage with outside researchers). A second way is to field the survey as part of a convening, which already has as one of its goals the development of new collaborative relationships. A third option is to directly broker connections. Regardless of which option is pursued, note that large amounts of extra capacity are likely unnecessary, at least at first. The key point is simply to ensure that matchmaking is a valued part of the process.

In sum, by deliberately inquiring about connections with others who have diverse forms of relevant expertise—and then making those connections anew—evaluation staff can generate greater enthusiasm and ownership among people who may not consider evaluation and evidence-building as part of their core responsibilities.

Plan of Action

Using existing authorities and resources, evaluation staff (such as evaluation officers at federal agencies) can take three steps to position unmet desire surveys as a standard component of the government’s evidence toolbox.

Step 1. Design and implement pilot unmet desire surveys.

Evaluation staff are well positioned to conduct outreach to assess unmet desire for new collaborative relationships within their agencies. While individual staff can work independently to design unmet desire surveys, it may be more fruitful to work together, via the Evaluation Officer Council, to design a baseline survey template. Individuals could then work with their teams to adapt the baseline template as needed for each agency, including identifying which agency staff to prioritize as well as the best way to phrase particular questions (e.g., regarding the types of connections that employees want in order to improve the effectiveness of their work or the types of hesitancies to ask about). Given that the question content is highly flexible, unmet desire surveys can directly accelerate learning agendas and build buy-in at the same time. Thus, they can yield tangible, concrete benefits with very little upfront cost.

Step 2. Meet unmet desires by matchmaking.

After the pilot surveys are administered, evaluation staff should act on their results. There are several ways to do this without new appropriations. One way is to create a posting for the Evidence Project Portal, which is explicitly designed to advertise opportunities for new collaborative relationships, especially with researchers outside the federal government. Another way is to field unmet desire surveys in advance of already-planned convenings, which themselves are natural places for matchmaking (e.g., the Agency for Healthcare Research and Quality experience described in the FAQs). Lastly, for new cross-agency collaborative relationships and other situations, evaluation staff may wish to engage in other low-lift matchmaking on their own. Depending upon the number of people they choose to survey, and the prevalence of unmet desire they uncover, they may also wish to bring on short-term matchmakers through flexible hiring mechanisms (e.g., through the Intergovernmental Personnel Act). Regardless of which option is pursued, the key point is that matchmaking itself must be a valued part of this process. Documenting successes and lessons learned then sets the stage for using agency-specific discretionary funds to hire one or more in-house matchmakers as longer-term or staff appointments.
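
For low-lift matchmaking, a first pass can be a simple shortlist that a person then reviews. A minimal sketch, assuming each survey response has been reduced to a set of expertise tags and that a roster of potential collaborators has been tagged the same way; the tags, names, and overlap-count scoring rule are hypothetical choices, not a feature of the Evidence Project Portal.

```python
from dataclasses import dataclass


@dataclass
class Respondent:
    name: str
    wants: set[str]      # expertise tags drawn from the respondent's survey answers


@dataclass
class Collaborator:
    name: str
    offers: set[str]     # expertise tags this person or organization can share


def propose_matches(respondents: list[Respondent],
                    collaborators: list[Collaborator]) -> dict[str, list[str]]:
    """Shortlist, for each respondent, every collaborator whose tags overlap
    what the respondent asked for, ordered by number of shared tags."""
    shortlist = {}
    for r in respondents:
        scored = [(len(r.wants & c.offers), c.name) for c in collaborators]
        hits = [s for s in scored if s[0] > 0]
        hits.sort(key=lambda s: (-s[0], s[1]))  # most overlap first, then by name
        shortlist[r.name] = [name for _, name in hits]
    return shortlist


# Hypothetical roster and response, for illustration only.
respondents = [Respondent("DOI workforce team",
                          {"workforce training", "hiring", "HR research"})]
collaborators = [
    Collaborator("Other-department HR office", {"hiring", "workforce training"}),
    Collaborator("University HR researcher", {"HR research"}),
    Collaborator("Transportation data office", {"data sharing"}),
]

print(propose_matches(respondents, collaborators))
```

The output only ranks candidates by shared tags; deciding whether and how to introduce people, and attending to the relational hesitations captured in the survey, remains a human judgment.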

Step 3. Collect information on successes and lessons learned from the pilot.

Unmet desire surveys can be tricky to field because they entail asking employees about topics they may not be used to thinking about. It often takes some trial and error to figure out the best ways to ask about employees’ substantive goals and their hesitations about interacting with people they do not know. Piloting unmet desire surveys and follow-on matchmaking can not only demonstrate value (e.g., the impact of new collaborative relationships fostered through these combined efforts) to justify further investment but also suggest how evaluation leads might best structure future unmet desire surveys and subsequent matchmaking.

Conclusion

An unmet desire survey is an adaptable tool that can reveal fruitful pathways for connection and collaboration. Indeed, unmet desire surveys leverage the science of collaboration by ensuring that efforts to broker connections among strangers consider both substantive goals and uncertainty about relationality. Evaluation staff can pilot unmet desire surveys using existing authorities and resources, and then use the information gathered to identify opportunities for productive matchmaking via the Evidence Project Portal or other methods. Ultimately, positioning the survey as a standard component of the government’s evidence toolbox has great potential to support agency staff in advancing federal learning agendas and building a robust culture of evidence across the U.S. government.

This action-ready policy memo is part of Day One 2025 — our effort to bring forward bold policy ideas, grounded in science and evidence, that can tackle the country’s biggest challenges and bring us closer to the prosperous, equitable and safe future that we all hope for whoever takes office in 2025 and beyond.

PLEASE NOTE (February 2025): Since publication several government websites have been taken offline. We apologize for any broken links to once accessible public data.

Yes, the Agency for Healthcare Research and Quality (AHRQ) used unmet desire surveys several times in 2023 and 2024. Part of AHRQ’s mission is to improve the quality and safety of healthcare delivery. It has prioritized scaling and spreading evidence-based approaches to implementing person-centered care planning for people living with or at risk for multiple chronic conditions. This requires fostering new cross-sector collaborative relationships among clinicians, patients, caregivers, researchers, payers, agency staff, other policymakers, and many others. That’s why, in advance of several recent convenings with these diverse stakeholders, AHRQ fielded unmet desire surveys among the participants. The surveys uncovered several avenues for informal and formal collaboration that stakeholders considered necessary, and, importantly, the results informed the meeting agendas. Many convenings consist largely of scripted presentations about participants’ individual activities; by contrast, fielding the surveys in advance and presenting the results during the meeting made these agendas more action-oriented.

AHRQ’s experience demonstrates a way to seamlessly incorporate unmet desire surveys into already-planned convenings, which themselves are natural opportunities for matchmaking. While some evaluation staff may wish to hire separate matchmakers or to matchmake through outside mechanisms like the Evidence Project Portal, the AHRQ experience demonstrates another low-lift yet powerful avenue. Finally, while most of this memo and the FAQs focus on measuring unmet desire among agency staff, the AHRQ experience shows that the idea applies to other stakeholders as well.

The best place to start, especially when resources are limited, is with potential evidence champions. These are people who are already committed to answering questions on their agency’s learning agenda and are likely to have an idea of the kinds of cross-agency or cross-sector collaborative relationships that would be helpful. These potential evidence champions may not self-identify as such; rather, they may see themselves as program managers, customer-experience experts, bureaucracy hackers, process innovators, or policy entrepreneurs. Regardless of terminology, the unmet desire survey gives people who are already motivated to collaborate and connect a clear opportunity to articulate their needs. Evaluation staff can then respond by posting on the Evidence Project Portal or by doing their own matchmaking to stimulate new and productive relationships for those people.

The administrator should be someone with whom agency staff feel comfortable discussing their needs (e.g., a member of an agency evaluation team) and who can effectively facilitate matchmaking, perhaps because of their network, their reputation within the agency, their role in convenings, or their connection to the Evidence Project Portal. The latter criterion helps ensure that staff expect useful follow-up, which in turn motivates survey completion and participation in follow-on activities; it also generates enthusiasm for new collaborative relationships and broader buy-in for the learning agenda. In some cases, it may make the most sense to have multiple people from an evaluation team survey different agency staff, or to co-sponsor the survey with agency innovation offices. Explicit support from agency leadership for the survey and follow-on activities is also crucial for achieving staff buy-in.

Survey content is meant to be tailored and agency-specific, so the sample questions can be adapted as follows:

- Which learning agenda question(s) are you focused on? Is there information about other programs within the government, or information that outside researchers and other stakeholders have, that would help answer it? What kinds of people would be helpful to connect with?

This question can be left entirely open-ended or focused on particular priorities and/or particular potential collaborators (e.g., only researchers, or only other agency staff).

- Are you looking for informal collaboration (oriented toward knowledge exchange) or formal collaboration (oriented toward projects with shared ownership, decision-making authority, and accountability)?

This question may invite responses about either informal or formal collaboration, or it may ask only about knowledge exchange (a relatively lower commitment that may be more palatable to agency leadership).

- What hesitations (perhaps due to prior experiences, lack of explicit permission, stereotypes, and so on) do you have about interacting with other stakeholders? What hesitations do you think they might have about interacting with you?

This question should name the few types of hesitancy that survey administrators believe are most likely to arise (e.g., lack of explicit permission, concerns about saying something inappropriate, or concerns about a lack of trustworthy information).

- Why should they want to connect with you?

- Why do you think these connections don’t already exist?

These last two questions can similarly be left broad or include a few examples to help spark ideas.

Evaluation staff may also choose to only ask a subset of the questions.

Again, the answer is agency-specific. In cases that will use the Evidence Project Portal, agency evaluation staff will take the first stab at crafting postings. In other cases, meeting the unmet desire may occur via already-planned convenings or matchmaking on one’s own. Formalizing this duty as a part of one or more people’s official responsibilities sends a signal about how much this work is valued. Exactly who those people are will depend on the agency’s structure, as well as on whether there are already people in a given agency who see matchmaking as part of their job. The key point is that matchmaking itself should be a valued part of the process.

While unmet desire surveys can be done any time and on a continuous basis, it is best to field them when there is either an upcoming convening (which itself is a natural opportunity for matchmaking) or there is identified staff capacity for follow-on matchmaking and employee willingness to build collaborative relationships.

Many evaluation officers and their staff are already forming collaborative relationships as part of developing and advancing learning agendas. Unmet desire surveys place explicit focus on what kinds of new collaborative relationships agency staff want to have with staff in other programs, either within their agency/department or outside it. These surveys are designed to prompt staff to reflect on how the success of their program relates to what is happening elsewhere and to consider who might have relevant and helpful information, as well as any hesitations they have about interacting with those people. Unmet desire surveys measure both substantive goals and staff uncertainty about interacting with others.

Better Hires Faster: Leveraging Competencies for Classifications and Assessments

Federal agencies take more than 100 days on average to hire a new employee, with significantly longer timelines for some positions, compared with 36 days in the private sector. Factors contributing to these extended federal hiring timelines include (1) difficulty quickly aligning position descriptions with workforce needs and (2) opaque and ineffective processes for screening applicants.

Fortunately, federal hiring managers and HR staffing specialists already have many tools at their disposal to accelerate the hiring process and improve quality outcomes – to achieve better hires faster. Inside and outside their organizations, agencies are already starting to share position descriptions, job opportunity announcements (JOAs), assessment tools, and certificates of eligibles from which they can select candidates. However, these efforts are largely piecemeal and dependent on individual initiative, not a coordinated approach that can overcome the pervasive federal hiring challenges.

The Office of Personnel Management (OPM), Office of Management and Budget (OMB) and the Chief Human Capital Officers (CHCO) Council should integrate these tools into a technology platform that makes it easy to access and implement effective hiring practices. Such a platform would alleviate unnecessary burdens on federal hiring staff, transform the speed and quality of federal hiring, and bring trust back into the federal hiring system.

Challenge and Opportunity

This memo focuses on opportunities to improve two stages in the federal hiring process: (1) developing and posting a position description (PD), and (2) conducting a hiring assessment.

Position Descriptions. Though many agencies require managers to review and revise PDs annually during performance review time, this requirement often goes unheeded. Furthermore, volatile occupations in which job skills change rapidly (think IT, scientific disciplines whose practice changes frequently, such as meteorology, or fields where new technologies upend how analytical work is done, such as data analytics) require even more frequent changes to the job skills and competencies embedded in PDs.

When a hiring manager has an open position, a current PD for that job is necessary to proceed with the Job Opportunity Announcement (JOA)/posting. When the PD is not current, the hiring manager must work with an HR staffing specialist to determine the necessary revisions. If the revisions are significant, an agency classification specialist is engaged. The specialist conducts interviews with hiring managers and subject-matter experts and/or performs deeper desk audits, job task analyses, or other evaluations to determine the additional or changed job duties. Because classifiers may apply standards in different ways and rate the complexity of a position differently, a hiring manager can rarely predict how long the revision process will take or what the outcome will be. All this delays and complicates the rest of the hiring process.

Hiring Assessments. Despite a 2020 Executive Order and other directives requiring agencies to engage in skills-based hiring, agencies too often still use applicant self-certification on job skills as a primary screening method. This frequently results in certification lists of candidates who do not meet the qualifications to do the job in the eyes of hiring managers. Indeed, a federal hiring manager cannot find a qualified candidate from a certified list approximately 50% of the time when only a self-assessment questionnaire is used for screening. There are alternatives to self-certification, such as writing samples, multiple-choice questions, exercises that test for particular problem-solving or decision-making skills, and simulated job tryouts. Yet hiring managers and even some HR staffing specialists often don’t understand how assessment specialists decide what methods are best for which positions – or even what assessment options exist.

Both of these stages involve a foundation of occupation- and grade-level competencies – that is, the knowledge, skills, abilities, behaviors, and experiences it takes to do the job. When a classifier recommends PD updates, they apply pre-set classification standards comprising job duties for each position or grade. These job duties are built in turn around competencies. Similarly, an assessment specialist considers competencies when deciding how to evaluate a candidate for a job.

Each agency, and sometimes each sub-agency unit, has its own authority to determine job competencies. As a result, competency analyses, PDs, and assessment methods have proliferated and diverged across agencies. Though the job of a Grade 9 marine biologist at the National Oceanic and Atmospheric Administration (NOAA) is unlikely to differ much from that of a Grade 9 marine biologist at the Fish and Wildlife Service (FWS), the competencies associated with the two positions are unlikely to be aligned. This fragmentation of competencies across agencies is costly, time-consuming, and duplicative.

Plan of Action

An Intergovernmental Platform for Competencies, PDs, Classifications, and Assessment Tools to Accelerate and Improve Hiring

To address the challenges outlined above, OPM, OMB, and the CHCO Council should create a web platform that makes it easy for federal agencies to align and exchange competencies, position descriptions, and assessment strategies for common occupations. This platform would help federal hiring managers and staffing specialists quickly compile a unified package that they can use from PD development up to candidate selection when hiring for occupations included on the platform.

To build this platform, the next administration should:

- Invest in creating position description libraries, starting with the unitary agencies (e.g., the Environmental Protection Agency) and then broadening out to the larger, disaggregated ones (e.g., the Department of Health and Human Services). Each agency should assign individuals responsible for keeping the PDs in its library current. Agencies and OPM would look for opportunities to merge common PDs. OPM would then aggregate these libraries into a “master” PD library for use within and across agencies. OPM should also share examples of best-in-class JOAs associated with each PD. This effort could be piloted with the most common occupations by agency.

- Adopt competency frameworks and assessment tools already developed by industry associations, professional societies, and unions for their professions. These organizations have already completed job task analyses and developed competency frameworks, definitions, and assessments for the occupations they cover. For example, IEEE has developed competency models and assessment instruments for electrical and computer engineering. Again, this effort could be piloted with the most common occupations by agency and with the occupations for which external organizations have already developed effective competency frameworks and assessment tools.

- Create a clearinghouse for assessments at OPM, indexed to each occupation in the master PD library. Assign the lead agency responsible for each occupation’s PDs to also keep the associated assessments current and its test banks robust enough to meet agencies’ needs. Expand USA Hire, and its funding, to provide open access for agencies, hiring managers, HR professionals, and program leaders.

- Standardize classification determinations for occupations/grade levels included in the master PD library. This will reduce interagency variation in classification changes by occupation and grade level, increase transparency for hiring managers, and reduce burden on staffing specialists and classifiers.

- Delegate authority to CHCOs to mandate use of shared, common PDs, assessments, competencies, and classification determinations. This means cleaning up the many regulatory mandates that do not already give agency-level CHCOs this delegated authority. The workforce policy and oversight agencies (OPM, OMB, the Merit Systems Protection Board (MSPB), and the Equal Employment Opportunity Commission (EEOC)) need to change regulations, policies, and practices to reduce duplication, delegate decision-making, and lower variation. For example, they could allow classifiers and assessment professionals to default to external, standardized occupation- and grade-level competencies instead of creating or re-creating them in each instance.

- Share decision frameworks that determine assessment strategy/tool selection. Clear, public, transparent, and shared decision criteria for determining the best fit assessment strategy will help hiring managers and HR staffing specialists participate more effectively in executing assessments.

- Agree to and implement common data elements for interoperability. Many agencies will need to integrate this platform with their own talent acquisition systems, such as ServiceNow, Monster, and USA Staffing. To transfer data between these systems, agencies will need to accelerate their work on common HR data elements for position descriptions, competencies, and assessments.
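
One way to picture “common data elements” is a shared record format that every talent acquisition system can read and write. The sketch below is a minimal, hypothetical Python illustration that assumes a JSON exchange format; every field name, identifier, and competency code in it is invented and does not reflect any existing OPM, USA Staffing, or ServiceNow schema. It echoes the NOAA and FWS example above: once both agencies reference the same competency codes, a system can check how closely two PDs align.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import List, Set


@dataclass
class Competency:
    code: str  # hypothetical shared competency identifier
    name: str


@dataclass
class PositionDescription:
    pd_id: str          # hypothetical platform-wide PD identifier
    occupation: str
    grade: int
    owning_agency: str
    competencies: List[Competency] = field(default_factory=list)
    assessment_ids: List[str] = field(default_factory=list)  # links into an assessment clearinghouse


def to_json(pd: PositionDescription) -> str:
    """Serialize a PD so another system can ingest it (nested dataclasses become dicts)."""
    return json.dumps(asdict(pd), sort_keys=True)


def from_json(text: str) -> PositionDescription:
    """Rebuild a PD from the shared JSON representation."""
    data = json.loads(text)
    data["competencies"] = [Competency(**c) for c in data["competencies"]]
    return PositionDescription(**data)


def shared_competency_codes(a: PositionDescription, b: PositionDescription) -> Set[str]:
    """Competency codes two PDs have in common: a rough alignment check."""
    return {c.code for c in a.competencies} & {c.code for c in b.competencies}


# Illustrative records only: all identifiers and competencies are invented.
noaa_pd = PositionDescription(
    pd_id="PD-NOAA-0001", occupation="Marine Biologist", grade=9, owning_agency="NOAA",
    competencies=[Competency("C-101", "Field sampling"), Competency("C-102", "Statistical analysis")],
    assessment_ids=["A-7"],
)
fws_pd = PositionDescription(
    pd_id="PD-FWS-0001", occupation="Marine Biologist", grade=9, owning_agency="FWS",
    competencies=[Competency("C-101", "Field sampling"), Competency("C-205", "Permit review")],
    assessment_ids=["A-7"],
)

print(shared_competency_codes(noaa_pd, fws_pd))  # {'C-101'}
print(from_json(to_json(noaa_pd)) == noaa_pd)    # True
```

The round trip matters more than the particular fields: as long as each system can serialize and rebuild the same record without loss, agencies can exchange PDs, competencies, and assessment links regardless of which platform they use internally.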

Data analytics from this platform and other HR talent acquisition systems will provide insight into the quality of competency development and classification determinations and into the effectiveness of common PDs, joint JOAs, assessments, and shared certificates of eligibles. HR leaders and program managers can use these insights to improve how agency staff use common PDs and shared certificates, make classification more consistent, and choose more effective assessment tools.

Finally, hiring managers, HR specialists, and applicants need to collaborate and share information better to implement any of these ideas well. Too often, siloed responsibilities and opaque specialization undermine mutual accountability, effective communication, and trust. These actions entail significant cultural and behavioral change on the part of hiring managers, HR specialists, industrial/organizational psychologists, classifiers, and leaders. OPM and the agencies need to help hiring managers and HR specialists find assessments, ease the processes that support adoption of skills-based assessments, agree on common PDs, and accelerate an effective hiring process.

Conclusion

The Executive Order on skills-based hiring, recent training from OPM, OMB, and the CHCO Council on the federal hiring experience, and potential legislative action (e.g., the Chance to Compete Act) are drivers that can improve the hiring process. Though some agencies are using PD libraries, joint postings, and shared referral certificates to improve hiring, these practices are far from common. A common platform for competencies, classifications, PDs, JOAs, and assessment tools will make it easier for HR specialists, hiring managers, and others to adopt these practices and make hiring better and faster.

Opportunities abound to turn promising hiring practices into habits. Position management, predictive workforce planning, workload modeling, hiring flexibilities and authorities, and engaging candidates before, during, and after the hiring process are just a few. Making these practices everyday habits throughout agency regions, states, and programs, rather than the exception, will improve hiring. Looking to the future, greater delegation of human capital authorities to agencies, streamlined regulations that support merit system principles, and stronger commitments to customer experience in hiring will help remove systemic barriers to an effective, customer- and user-oriented federal hiring process.

Taking the above actions on a common platform for competency development, position descriptions, and assessments will make hiring faster and better. Combined with these other actions, it can change the federal workforce’s relationship to their jobs and how the American people feel about opportunities in their government.

The Medicare Advance Healthcare Directive Enrollment (MAHDE) Initiative: Supporting Advance Care Planning for Older Medicare Beneficiaries

Taking time to plan and document a loved one’s preferences for medical treatment and end-of-life care helps respect and communicate their wishes to doctors while reducing unnecessary costs and anxiety. There is currently no federal policy requiring anyone, including Medicare beneficiaries, to complete an Advance Healthcare Directive (AHCD), which documents an individual’s preferences for medical treatment and end-of-life care. At least 40% of Medicare beneficiaries do not have a documented AHCD. In the absence of one, medical professionals may unknowingly perform major, costly interventions that go against a patient’s wishes.

To address this gap, the Centers for Medicare and Medicaid Services (CMS) should launch the Medicare Advance Healthcare Directive Enrollment (MAHDE) Initiative to support all adults aged 65 and older who are enrolled in Medicare or Medicare Advantage plans in completing and annually renewing, at no extra cost, an electronic AHCD made available and stored on Medicare.gov or an alternative secure digital platform. MAHDE would streamline the process, making it easier for Medicare enrollees to complete and store directives and for healthcare providers to access them when needed. CMS could also work with the National Committee for Quality Assurance (NCQA) to expand the Advance Care Planning (ACP) measures in the Healthcare Effectiveness Data and Information Set (HEDIS) to include all Medicare Advantage plans caring for beneficiaries aged 65 and older.

AHCDs save families unnecessary heartache and confusion at times of great pain and vulnerability. They also aim to improve healthcare decision-making and patient autonomy, and they can function as a long-term cost-saving strategy by limiting undesired medical interventions among older adults.

Challenge and Opportunity

Advance healthcare directives document an individual’s preferences for medical treatment in medical emergencies or at the end of life.

AHCDs typically include two parts:

- Identifying a healthcare proxy or durable power of attorney, who will make decisions about an individual’s health when they are unable to.

- A living will, which describes the treatments an individual wants to receive in emergencies—such as CPR, breathing machines, and dialysis—as well as decisions on organ and tissue donation.

Other documents complement AHCDs and help communicate treatment wishes during emergencies or at the end of life. These include do-not-resuscitate orders, do-not-hospitalize orders, and Physician (or Medical) Orders for Life-Sustaining Treatment forms, along with similar portable medical order forms for seriously ill or frail individuals (e.g., Medical Orders for Scope of Treatment, Physician Orders for Scope of Treatment, and Transportable Physician Orders for Patient Preferences). These forms are all designed to honor an individual’s healthcare preferences in a future medical emergency.

With adults aged 65 and older projected to make up more than 20% of the U.S. population by 2030, addressing end-of-life care challenges is increasingly urgent. As people age, their healthcare needs become more complex and expensive. Notably, 25% of all Medicare spending goes toward treating people in their last 12 months of life. However, although they commonly receive more aggressive treatments, many older adults prefer less intensive medical interventions and prioritize quality of life over prolonging life. This discrepancy between the care patients receive and their wishes is common, highlighting the need for clear, proactive communication and planning around medical preferences. Research shows that patients who have engaged in ACP are less likely to receive unwanted and aggressive treatments in their last weeks of life, more likely to enroll in hospice for comfort-focused care, and less likely to die in hospitals or intensive care units.

Established ACP Policies and Support Mechanisms

Historically, some federal policies have underscored the importance of patient decision-making rights and the role of AHCDs in helping patients receive their desired care. These policies reflect the ongoing effort to empower patients to make informed decisions about their healthcare, particularly in end-of-life situations.

The Patient Self-Determination Act (PSDA), a federal law enacted in 1990 as part of the Omnibus Budget Reconciliation Act, was created to ensure that patients are informed of their rights regarding medical care and their ability to make decisions about that care, especially in situations where they can no longer make decisions for themselves.

The Act requires hospitals, skilled nursing facilities (SNFs), home health agencies, hospice programs, and health maintenance organizations to:

- Inform patients of their rights to make decisions under state law about their medical care, including accepting or refusing treatment.

- Periodically inquire whether a patient has completed a legally valid AHCD and make note in their medical record.

- Not discriminate against patients who do or do not have an advance directive.

- Ensure AHCDs and other documented care wishes are carried out, as permitted by state law.

- Provide education to staff, patients, and the community about AHCDs and the right to make their own medical decisions.

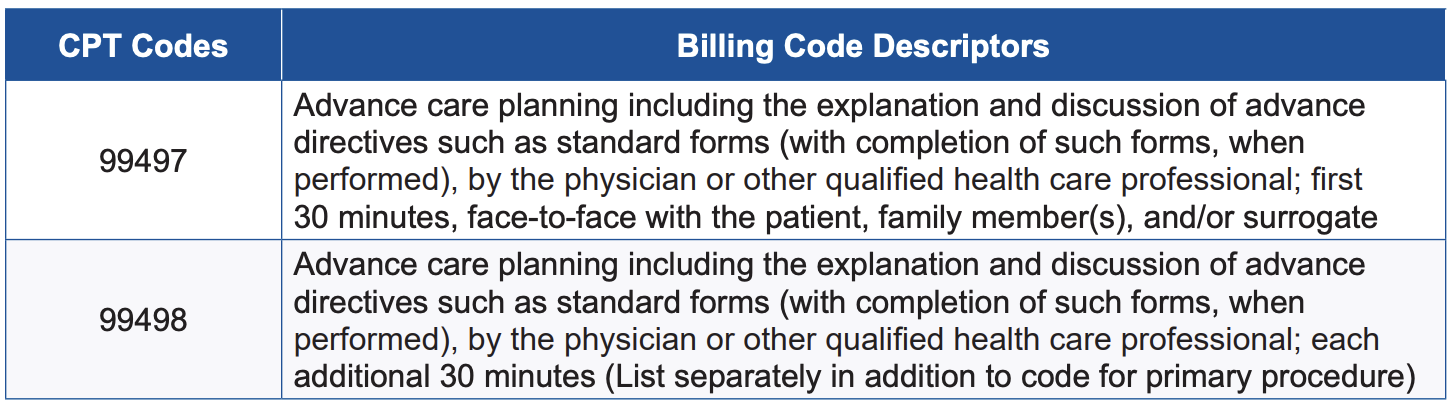

It also directs the Secretary of Health and Human Services to research and assess the implementation of this law and its impact on health decision-making. Additionally, to encourage physicians and qualified health professionals to facilitate ACP conversations and complete AHCDs, CMS introduced and approved two new billing codes in 2016, allowing qualified health providers to bill CMS for advance care planning as a separate service regardless of diagnosis, place of service, or how often services are needed (Figure 1). These codes were expanded in 2017 with the temporary Healthcare Common Procedure Coding System code G0505, followed by CPT code 99483, to offer care planning and cognitive assessment services that include advance care planning for Medicare beneficiaries with cognitive impairment.

Figure 1. Two primary CPT codes and billing descriptors for advance care planning reimbursement. (Source: CMS Medicare Learning Network Fact Sheet)

In 2022, ACP was introduced as one of four key components of the Care for Older Adults (COA) initiative within the Healthcare Effectiveness Data and Information Set measures. HEDIS is a proprietary set of clinical care performance measures developed by the National Committee for Quality Assurance (NCQA), a private, nonprofit accreditation organization that creates standardized measures to help health plans assess and report on the quality of care and services. HEDIS evaluates areas such as chronic disease management, preventive care, and care utilization, enabling reliable comparisons of health plan performance and identifying areas for improvement. It is reported that 235 million Americans are enrolled in plans that report HEDIS results, and reporting HEDIS measures is mandatory for Medicare Advantage plans.

The COA initiative includes the ACP measure as a reporting requirement for Medicare Special Needs Plans (SNPs), which are plans designed for individuals who have complex care needs, are eligible for Medicare and Medicaid, have disabling or chronic conditions, and/or live in an institution. The report includes the percentage of Medicare Advantage members within a specified population who participate in ACP discussions each year. This population currently includes:

- Adults aged 65–80 with advanced illness, signs of frailty, or those receiving palliative care.

- All adults aged 81 and older.
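
The population rule above is simple enough to express as a filter. The Python sketch below is illustrative only: it assumes each member record already carries yes/no flags for advanced illness, frailty, palliative care, and an ACP discussion (all field names hypothetical), whereas the actual NCQA specification defines these conditions through detailed value sets and exclusions that are not modeled here. It contrasts the population as described above with a hypothetical expanded population of every member aged 65 and older.

```python
from dataclasses import dataclass
from typing import Callable, Iterable


@dataclass
class Member:
    age: int
    advanced_illness: bool = False
    frailty: bool = False
    palliative_care: bool = False
    had_acp_discussion: bool = False  # ACP discussion during the measurement year


def in_current_acp_population(m: Member) -> bool:
    """Simplified version of the ACP measure population described in the text."""
    if m.age >= 81:
        return True  # all adults aged 81 and older
    if 65 <= m.age <= 80:
        # Ages 65-80 qualify only with advanced illness, frailty, or palliative care.
        return m.advanced_illness or m.frailty or m.palliative_care
    return False


def in_expanded_acp_population(m: Member) -> bool:
    """Hypothetical expansion: every member aged 65 and older."""
    return m.age >= 65


def acp_rate(members: Iterable[Member], in_population: Callable[[Member], bool]) -> float:
    """Percentage of members in the population who had an ACP discussion."""
    eligible = [m for m in members if in_population(m)]
    if not eligible:
        raise ValueError("no members fall within the measure population")
    return 100 * sum(m.had_acp_discussion for m in eligible) / len(eligible)


# Invented example members.
members = [
    Member(age=70),
    Member(age=72, frailty=True, had_acp_discussion=True),
    Member(age=84),
    Member(age=67, had_acp_discussion=True),
]
print(sum(in_current_acp_population(m) for m in members))   # 2
print(sum(in_expanded_acp_population(m) for m in members))  # 4
```

Under the narrower definition, healthy members aged 65 to 80 never enter the denominator, so a plan's reported rate says nothing about whether they have completed advance care planning.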

HEDIS measures contribute to the overall Star Ratings of Medicare Advantage and other health plans, which help beneficiaries choose high-quality plans, enable highly rated plans to attract more members, and influence the funding and bonuses CMS provides to these plans.

Despite the PSDA, CMS provider reimbursement codes that incentivize physicians and qualified health professionals to facilitate advance care planning, and the recent inclusion of ACP in HEDIS measures for Medicare Special Needs Plans, many barriers to completing AHCDs remain.

Barriers to AHCD Completion

Although Medicare provides health and financial security to nearly all Americans aged 65 and older, completing a comprehensive AHCD is not universally expected within this population. Conversations about treatment decisions in future emergencies and end-of-life care are often avoided for various cultural, religious, financial, and mental health reasons. When they do happen, preferences are more often shared with loved ones but not documented or communicated to healthcare professionals. It is perhaps unsurprising, then, that only about half of Medicare beneficiaries have completed an AHCD. Studies show that of those who have, most do so in conjunction with estate planning, which may explain increasing cultural and socioeconomic disparities in the completion of AHCDs.

For Medicare beneficiaries who wish to complete an AHCD with a physician or qualified health professional, Medicare covers advance care planning without cost sharing only when it is provided as part of the annual wellness visit. If ACP is provided outside of this visit, standard Part B cost sharing applies. If the Medicare Part B deductible ($240 in 2024) has not already been met through other Part B services (such as doctor visits, preventive care, mental health services, or outpatient procedures), the beneficiary must first pay toward it before coverage begins, and the beneficiary also owes a 20% coinsurance payment. Additionally, some states may require attorney services or notarization to legally validate an AHCD, which could incur extra costs.
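
To make this arithmetic concrete, the sketch below estimates a beneficiary’s out-of-pocket cost for ACP visits provided outside the annual wellness visit. It assumes standard Part B cost sharing (the $240 2024 deductible, then 20% coinsurance on the rest of the Medicare-approved amount) and a hypothetical approved amount of $90 per visit; that figure is invented for illustration and is not a Medicare fee schedule rate. The sketch ignores supplemental coverage such as Medigap, Medicare Advantage cost-sharing rules, and any state attorney or notarization fees.

```python
from decimal import ROUND_HALF_UP, Decimal

CENT = Decimal("0.01")
PART_B_DEDUCTIBLE_2024 = Decimal("240")
COINSURANCE_RATE = Decimal("0.20")


def acp_visit_cost(approved: Decimal, deductible_remaining: Decimal,
                   during_awv: bool = False) -> Decimal:
    """Beneficiary's out-of-pocket cost for one ACP visit (simplified)."""
    if during_awv:
        # ACP provided as part of the annual wellness visit: no cost sharing.
        return Decimal("0.00")
    # The beneficiary first pays toward any unmet Part B deductible...
    toward_deductible = min(approved, deductible_remaining)
    # ...then owes 20% coinsurance on the rest of the approved amount.
    coinsurance = COINSURANCE_RATE * (approved - toward_deductible)
    return (toward_deductible + coinsurance).quantize(CENT, rounding=ROUND_HALF_UP)


def acp_total_cost(approved: Decimal, visits: int,
                   deductible_remaining: Decimal = PART_B_DEDUCTIBLE_2024) -> Decimal:
    """Total out-of-pocket cost for several ACP visits outside the wellness visit."""
    total = Decimal("0.00")
    for _ in range(visits):
        total += acp_visit_cost(approved, deductible_remaining)
        # Whatever was applied to the deductible no longer needs to be met.
        deductible_remaining -= min(approved, deductible_remaining)
    return total


HYPOTHETICAL_APPROVED = Decimal("90")  # invented per-visit amount, not a real rate
print(acp_total_cost(HYPOTHETICAL_APPROVED, visits=3))                                     # 246.00
print(acp_total_cost(HYPOTHETICAL_APPROVED, visits=3, deductible_remaining=Decimal("0")))  # 54.00
```

Under these assumptions, a beneficiary who has not yet met the deductible pays the full approved amount for the first two visits and only begins paying coinsurance partway through the third; the same three visits during the annual wellness visit would cost nothing. Money is handled with `Decimal` rather than floats to avoid rounding artifacts in currency amounts.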

These additional costs can make it challenging for many Medicare beneficiaries to complete an AHCD when they want to. Furthermore, depending on the complexity of their situation and their readiness to make decisions, many patients may need more than one visit with their clinical provider to make decisions about critical illness and end-of-life care, creating more out-of-pocket expenses.

AHCDs also vary widely in format and content across states, and they are often stored on paper, where they can be easily lost or damaged, or embedded in bulky, multipage estate plans. State-based AHCD registries have attempted to centralize these documents, but their availability and management vary significantly, and there is limited data on their use and effectiveness. Additionally, the Uniform Health-Care Decisions Act (UHCDA) and private ACP initiatives such as the Five Wishes program, MyDirectives, and the U.S. Advance Care Plan Registry (USACPR), among others, have helped broaden ACP accessibility and awareness. However, data from private ACP programs are not widely published, and the available results have been variable. The UHCDA, first drafted and approved in 1993 by the Uniform Law Commission (a nonprofit organization focused on promoting consistency in state laws), was updated in 2023 and aims to address these variations in state AHCD policies, although with varying degrees of success. The Five Wishes program reports 40 million copies of its paper and digital advance directives in circulation nationwide, and its digital program recently launched a partnership with MyDirectives, a leader in digital advance care planning, to facilitate electronic access to legally recognized ACP documents. Unfortunately, data on the completion and storage of these directives are not consistently reported across all users.

Despite efforts by numerous organizations to improve ACP completion, access, and usability, the lack of updated federal policy supporting advance care planning makes it difficult for patients to complete AHCDs and for healthcare providers to quickly locate and interpret them in critical situations. When AHCDs are unavailable, incomplete, or hard to find, medical professionals may be unaware of patients’ care preferences during urgent moments, leading to treatment decisions that may not align with patients’ wishes.

Plan of Action

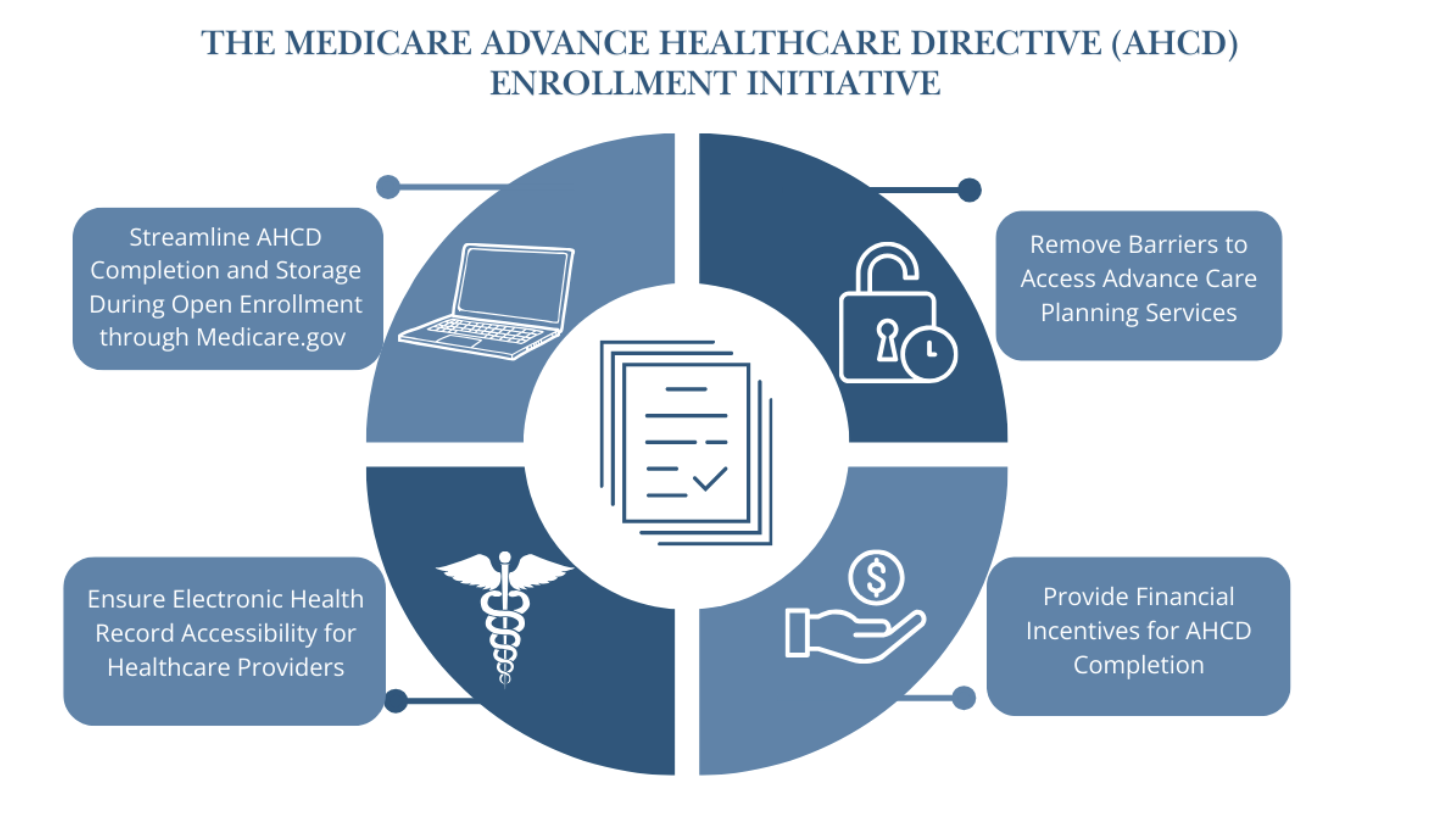

To support all Medicare beneficiaries aged 65 and older in documenting their end-of-life care preferences, encourage the completion of AHCDs, and improve the accessibility of AHCDs for healthcare professionals, CMS should launch the Medicare Advance Healthcare Directive Enrollment (MAHDE) Initiative, built around the following four interventions.

Recommendation 1. Streamline the process of AHCD completion and electronic storage during open enrollment through Medicare.gov or an alternative CMS-approved secure ACP digital platform.

To clarify patients’ end-of-life care wishes and better support their fulfillment, CMS should enable all Medicare and Medicare Advantage enrollees aged 65 and older to complete an electronic AHCD, and to renew it annually at no extra cost, during Medicare’s designated open enrollment period. Although electronic completion is preferred, paper options would remain available and could be submitted for electronic upload and storage.

Supporting the completion of an AHCD during open enrollment presents a strategic opportunity to integrate AHCD completion into overall discussions about healthcare options. New open enrollment tools can be made easily available on Medicare.gov or in partnership with an existing digital ACP platform such as the USACPR, the newly established Five Wishes and MyDirectives partnership, or a centralized repository of state registries, enabling beneficiaries to complete and safely store their directives electronically. User-friendly tools and resources should be tailored to guide beneficiaries through the process and should be age-appropriate and culturally sensitive.

Some states are already taking steps to integrate electronic ACP completion and storage into healthcare enrollment processes. For example, in 2022, Maryland unanimously passed legislation requiring payers to offer ACP options to all members during open enrollment and at regular intervals thereafter. The law also requires payers to receive notifications when ACP documents are completed or updated, and it requires providers to use an electronic platform to create, upload, and store AHCDs.

An annual electronic renewal process during open enrollment would allow Medicare beneficiaries to review their selections and make changes to keep their choices up to date. The annual review would also create opportunities, through the secure Medicare enrollment portal, to educate beneficiaries about the risks and benefits of life-extending interventions, and a yearly interval helps account for the abrupt changes in health status that can occur with age. These electronic enhancements also better fit today’s technology-driven healthcare landscape, and AHCDs could be completed in person or during a telehealth ACP visit with a physician or qualified health professional. Beneficiaries could also update their AHCDs at any time outside of the open enrollment period.

CMS could also work across state lines and in collaboration with private ACP organizations, the UHCDA, and state-appointed AHCD representatives to develop a universal template for advance directives that would be acceptable nationwide. Alternatively, Medicare.gov could provide tailored, state-specific electronic forms to meet state legal requirements, like the downloadable forms provided by organizations such as AARP, a nonprofit, nonpartisan organization for Americans over 50, and CaringInfo, a program of the National Hospice and Palliative Care Organization. Either approach would ensure AHCDs are legally compliant while centralizing access to the correct forms for easy completion and secure electronic storage.

Recommendation 2. Remove barriers to access advance care planning services.

CMS should remove the deductible and 20% coinsurance when beneficiaries engage in voluntary ACP services with a physician or other qualified health professional outside of their yearly wellness visit.

The current deductible and coinsurance requirements may discourage beneficiaries from completing their AHCDs with the guidance of a medical provider, as these costs can be prohibitive. This mirrors how higher cost-sharing and out-of-pocket health expenses often lead to cost-related nonadherence, reducing healthcare engagement and prescription medication adherence. When individuals face higher out-of-pocket costs for care, they are more likely to delay treatment, avoid doctor visits, and fill fewer prescriptions, even when they have insurance coverage. Removing the deductible and coinsurance for ACP visits, as Medicare already does for many preventive services, would allow individuals to complete or update their AHCDs as needed, without financial strain and with support from their clinical team.

Additionally, CMS could consider continuing education for health providers on facilitating ACP, as well as partnerships with organizations such as the Institute for Healthcare Improvement’s The Conversation Project, which encourages open discussions about end-of-life care preferences. Partnering with Evolent (formerly Vital Decisions) could also support ongoing telehealth discussions between behavioral health specialists and older adults about late-life and end-of-life care preferences, encouraging formal AHCD completion. Internal studies of the Evolent program, which serves Medicare Advantage beneficiaries, reported average savings of $13,956 in the final six months of life and projected a potential Medicare spending reduction of up to $8.3 billion.

These enhancements recognize advance care planning as an ongoing process of discussion and documentation that ensures a patient’s care and interventions reflect their values, beliefs, and preferences when they are unable to make decisions for themselves. They also recognize that goals of care are dynamic; as those goals evolve, beneficiaries should feel supported and empowered to update their AHCDs affordably, with guidance from educational tools and trained professionals when needed.

Recommendation 3. Ensure electronic accessibility for healthcare providers.

CMS should also integrate the Medicare.gov AHCD storage system or a CMS-approved alternative with existing electronic health records (EHRs).

EHR systems in the United States currently lack full interoperability, meaning that when patients move through the continuum of care (from preventive services to medical treatment, rehabilitation, and ongoing care maintenance) and between healthcare systems, their medical records, including AHCDs, may not transfer with them. This makes it difficult for healthcare providers to efficiently access these directives and deliver care that aligns with a patient’s wishes when the patient is incapacitated. To address this, CMS could encourage integration of the Medicare.gov AHCD storage system, or an alternative CMS-approved secure ACP digital platform, with all EHRs.

This storage platform could operate as an external add-on feature, allowing AHCDs to be accessible through any EHR, regardless of the healthcare system. Such external add-ons are typically third-party tools or modules that integrate with existing EHR systems to extend functionality, often addressing needs not covered by the core system. These add-ons are commonly used to connect EHRs with tools like clinical decision support systems, telehealth platforms, health information exchanges, and patient communication tools.

Such a universal, electronic system would prevent AHCDs from being misplaced, make them easily accessible across different states and health systems, and allow for easy updates. This would ensure that Medicare beneficiaries’ end-of-life care preferences are consistently honored, regardless of where they receive care.

Recommendation 4. Provide financial incentives for AHCD completion.

CMS should offer financial incentives for completing an AHCD, including options like tax credits, reduced or waived copayments and deductibles, prescription rebates, or other health-related subsidies.

Medicare’s increasing monthly premiums and cost-sharing requirements are often a substantial burden, especially for beneficiaries on fixed incomes. Nearly one in four Medicare enrollees age 65 and older report difficulty affording premiums, and almost 40% of those with incomes below twice the federal poverty level struggle to cover these costs. Additional financial burdens arise from extended care beyond standard coverage limits.

For example, in 2024, Original Medicare requires beneficiaries to pay $408 per day for days 61–90 of an inpatient hospital, rehabilitation, inpatient psychiatric, or long-term acute care stay, totaling $12,240 for those 30 days. Beyond 90 days, beneficiaries pay $816 per day for up to 60 lifetime reserve days, totaling $48,960. Lifetime reserve days are never replenished once used, and after they are exhausted, patients bear all inpatient costs. Although the average hospital length of stay is typically shorter, inpatient days under Medicare do not need to be consecutive: if a patient is discharged and readmitted within 60 days, the days continue to accumulate, and these cost-sharing obligations do not reset until the patient has gone at least 60 consecutive days without inpatient or skilled nursing facility care.

Medicare coverage for skilled nursing facility (SNF) care is similarly limited. Medicare fully covers the first 20 days after a transfer from a qualifying inpatient stay (at least three consecutive inpatient days, excluding the day of discharge), but days 21–100 require a copayment of $204 per day, totaling $16,320 for those 80 days. After 100 days, all SNF costs fall to the beneficiary. These costs are significant, and because Original Medicare has no out-of-pocket maximum, they can create financial hardship.
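The per-day amounts in the two preceding paragraphs become period totals by multiplying each daily rate by the number of days in its range, counting both endpoints (days 61 through 90 are 30 days; days 21 through 100 are 80 days). The Python sketch below shows that arithmetic using the 2024 rates cited in this memo. The constant and function names are hypothetical, and the sketch assumes a single benefit period, full use of all 60 lifetime reserve days, and no Medigap or other secondary coverage.

```python
# 2024 per-day cost-sharing amounts cited in this memo (Original Medicare).
HOSPITAL_DAYS_61_90_RATE = 408
LIFETIME_RESERVE_RATE = 816
SNF_DAYS_21_100_RATE = 204
LIFETIME_RESERVE_DAYS = 60


def days_inclusive(first_day: int, last_day: int) -> int:
    """Count the days in an inclusive range, e.g. day 61 through day 90."""
    return last_day - first_day + 1


def maximum_cost_sharing() -> dict[str, int]:
    """Beneficiary cost-sharing if every day in each range is used (hypothetical helper)."""
    return {
        "hospital, days 61-90": HOSPITAL_DAYS_61_90_RATE * days_inclusive(61, 90),
        "hospital, lifetime reserve days": LIFETIME_RESERVE_RATE * LIFETIME_RESERVE_DAYS,
        "SNF, days 21-100": SNF_DAYS_21_100_RATE * days_inclusive(21, 100),
    }


for label, total in maximum_cost_sharing().items():
    print(f"{label}: ${total:,}")
```

Run as written, this prints totals of $12,240, $48,960, and $16,320. Counting days with an explicit inclusive-range helper avoids the common off-by-one error of subtracting the range endpoints directly.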

Some of these costs can be covered by Medicare Supplement Insurance (Medigap) plans, but these plans carry additional premiums. By regularly educating patients and families about these costs, and by offering tax credits, waived or reduced copayments and deductibles, prescription rebates, or account credits, CMS could provide substantial financial relief while encouraging the completion of AHCDs.

Encourage Expansion of the NCQA’s ACP HEDIS Measure

Finally, the MAHDE Initiative can be coupled with the expansion of the HEDIS measure to establish a comprehensive strategy for advancing proactive healthcare planning among Medicare beneficiaries. By encouraging both the accessibility and completion of AHCDs, while also integrating ACP as a quality measure for all Medicare Advantage enrollees aged 65 and older, CMS would embed ACP into standard patient care. This approach would incentivize health plans to prioritize ACP and help align patients’ care goals with the services they receive, fostering a more patient-centered, value-driven model of care within Medicare.

Figure 2. Four key features of the Medicare Advance Healthcare Directive Enrollment (MAHDE) Initiative. This initiative should also work in tandem with efforts to encourage the NCQA to expand ACP HEDIS measures to include all Medicare Advantage beneficiaries aged 65 and older. (Source: Dr. Tiffany Chioma Anaebere)

Conclusion

When patients and their families are clear on their goals of care, it is much less challenging for medical staff to navigate crises and stressful clinical situations. In unfortunate cases when these decisions have not been discussed and documented before a patient becomes incapacitated, doctors witness families struggle deeply with these choices, often leading to intense disagreements, conflict, and guilt. This uncertainty can also result in care that may not align with the patient’s goals.

Physicians and other qualified health professionals should continue to be trained on best practices to facilitate ACP with patients, and, more importantly, the system should be redesigned to support these conversations early and often for all older Americans. The MAHDE Initiative is feasible, empowers patients to engage in ACP, and will reduce medical costs nationwide by allowing patients to be educated about their options and choose the care they want in future emergencies and at the end of life.

Starting an AHCD enrollment initiative with the Medicare population older than age 65 and achieving success in this group can pave the way for expanding ACP efforts to other high-need groups and, eventually, the general population. This approach fosters a healthcare environment where, as a nation, we become more comfortable discussing and managing healthcare decisions during emergencies and at the end of life.

This action-ready policy memo is part of Day One 2025 — our effort to bring forward bold policy ideas, grounded in science and evidence, that can tackle the country’s biggest challenges and bring us closer to the prosperous, equitable and safe future that we all hope for whoever takes office in 2025 and beyond.

PLEASE NOTE (February 2025): Since publication several government websites have been taken offline. We apologize for any broken links to once accessible public data.