Fueling the Bioeconomy: Clean Energy Policies Driving Biotechnology Innovation

The transition to a clean energy future and diversified sources of energy requires a fundamental shift in how we produce and consume energy across all sectors of the U.S. economy. The transportation sector, which relies heavily on fossil-based energy, stands out not only as the sector that releases the most carbon into the atmosphere but also for its progress in adopting next-generation technologies and alternative fuels.

Over the past several years, the federal government has made concerted efforts to support clean energy innovation in transportation, both on-road and off-road. Hard-to-electrify subsectors such as aviation have received added focus, for example through the Sustainable Aviation Fuel (SAF) Grand Challenge. These efforts have enabled a wave of biotechnology-driven solutions, such as LanzaJet's alcohol-to-jet technology for producing SAF, to move from research labs to commercial markets. From renewable fuels to bio-based feedstocks, biotechnologies are enabling the replacement of fossil-derived energy sources and contributing to a more sustainable, secure, and diversified energy system.

SAF in particular has gained traction, enabled in part by public investment and interagency coordination such as the SAF Grand Challenge Roadmap. This federal attention demonstrates how strategic federal action, paired with government demand signals, targeted incentives, and industry buy-in, can create the conditions needed to accelerate biotechnology adoption.

To better understand the factors driving this progress, FAS conducted a landscape analysis of biotechnology innovation within the clean energy sector at the federal and regional levels, complemented by interviews with key stakeholders. We identified several policy mechanisms, public-private partnerships, and investment strategies that enabled the production and adoption of advanced SAF and similar technologies. By identifying the conditions that supported biotechnology’s uptake and commercialization, we aim to inform future efforts to accelerate other sectors that use biotechnologies and, more broadly, to strengthen the U.S. bioeconomy.

Key Findings & Recommendations

An analysis of the federal clean energy landscape reveals several insights critical to advancing the development and deployment of biotechnologies. Federal and regional strategies are central to driving innovation and facilitating the transition of biotechnologies from research to commercialization. The following key findings and actionable recommendations address the challenges and opportunities in accelerating this transition.

Federal Level Key Findings & Recommendations

The federal government plays a pivotal role in guiding market signals and investment toward national priorities. In the clean energy sector, decarbonizing aviation has emerged as a strategic objective, with SAF serving as a critical lever. Federal initiatives such as the SAF Grand Challenge, the SAF Roadmap, and the SAF Metrics Dashboard have helped elevate SAF within national climate priorities and enabled greater interagency coordination. These mechanisms not only track progress but also communicate federal commitment. Despite these efforts, however, current SAF production remains far below target levels, with capacity concentrated largely in the hydroprocessed esters and fatty acids (HEFA) pathway, which faces constrained feedstock availability and limited scalability.

This production gap reflects deeper structural challenges, many of which parallel broader issues across the clean-energy biotech interface. One of the main challenges is the fragmented, short-duration policy incentives currently in use. Tax credits like 40B and 45Z, while important, lack the longevity and clarity required to unlock large-scale, long-term private investment. The absence of binding fuel mandates further undermines market certainty. These policy gaps limit the ability of the clean energy sector to serve as a sustained demand signal for emerging biotechnologies and slow the transition from pilot to commercial scale.

Importantly, these challenges point to a broader opportunity: SAF as a test case for how the clean energy sector can serve as a driver of biotechnology uptake. Promising biotechnologies, such as alcohol-to-jet and power-to-liquid, are currently stalled by high capital costs, uncertain regulatory pathways, and a lack of coordinated federal support. Addressing these bottlenecks through aligned incentives, technology-neutral mandates, and harmonized accounting frameworks could not only accelerate SAF deployment but also establish a broader policy blueprint for scaling biotechnology across other clean energy applications.

To alleviate some of the challenges identified, the federal government should:

Extend & Clarify Incentives

While tax incentives such as the 45Z Clean Fuel Production Credit offer a promising framework to accelerate low-carbon fuel deployment, current design and implementation challenges limit their impact, particularly for emerging bio-based and synthetic fuels. To fully unlock the climate and market potential of these incentives, Congress and relevant agencies should take the following steps:

- Congress should amend the 45Z tax credit structure to differentiate between fuel types, such as SAF, e-fuels, biofuels, and renewable diesel, based on life cycle carbon intensity (CI) and production pathway. This would better reflect technology-specific costs, accelerate deployment across multiple clean fuel markets, and clarify how producers of each fuel type can earn and use the credits.

- Congress should extend the duration of the 45Z credit and other clean-fuel related incentives to provide long-term policy certainty. Multi-year extensions with a defined minimum value floor would reduce investment risk and enable financing of capital-intensive projects.

- Congress and the Department of the Treasury should clarify eligibility to ensure inclusion of co-processing methods and hybrid production systems, which currently fall into regulatory gray areas. This would ensure broader participation by innovative fuel producers.

- Federal agencies, including the Department of Energy (DOE), Department of Transportation (DOT), and the Department of Defense, should be directed to enter into long-term (more than 10 years) procurement agreements for low-carbon fuels, including electrofuels and SAF. These offtake mechanisms would complement tax incentives and send strong market signals to producers and investors.

Scale Biotech Commercialization Support

The clean energy transition depends in part on the successful commercialization of enabling biotechnologies, including advanced biofuels, SAF, bio-based carbon capture, and biomanufacturing platforms that reduce industrial emissions. Recent or proposed funding cuts to clean energy programs risk stalling this progress and undermining U.S. competitiveness in the bioeconomy.

To accelerate biotechnology deployment and bridge the gap between lab-scale innovation and commercial-scale production, Congress should take the following actions:

- Authorize and appropriate expanded funding to DOE, particularly through the Bioenergy Technologies Office (BETO), and to the U.S. Department of Agriculture (USDA) to support pilot, demonstration, and first-of-a-kind commercial-scale projects that enable biotechnology applications across clean energy sectors.

- Direct and fund the DOE Loan Programs Office to establish a dedicated loan guarantee program focused on biotechnology commercialization, targeting platforms that can be integrated into the energy system, such as bio-based fuels, bioproducts, carbon utilization technologies, and electrification-enabling materials.

- Encourage DOE and USDA to enter into long-term offtake agreements or structured purchasing mechanisms with qualified bioenergy and biomanufacturing companies. These agreements would help de-risk early commercial projects, crowd in private investment, and provide market certainty during the critical scale-up phase.

- Strengthen public-private coordination mechanisms, such as cross-sector working groups or interagency task forces, to align commercialization support with industry needs, improve program targeting, and reduce time-to-market for promising technologies.

Design and Promote Next-Gen Biofuel Policies

To accelerate the deployment of low-carbon fuels and enable innovation in next-generation bioenergy technologies, Congress and relevant agencies should take the following actions:

- Congress should direct the Environmental Protection Agency (EPA) to modernize the Renewable Fuel Standard by incorporating life cycle carbon intensity as a core metric, moving beyond volume-based mandates. Legislative authority could also support the development of a national Low Carbon Fuel Standard, modeled on successful state-level programs to drive demand for fuels with demonstrable climate benefits.

- EPA should update its emissions accounting framework to reflect the latest science on life cycle greenhouse gas (GHG) emissions, enabling more accurate assessment of advanced biofuels and synthetic fuels.

- DOE should expand R&D and demonstration funding for biofuel pathways that meet stringent carbon performance thresholds, with an emphasis on scalability and compatibility with existing fuel infrastructure.

Regional Level Key Findings & Recommendations

Regional strengths continue to serve as foundational drivers of clean energy innovation, with localized assets shaping the pace and direction of technology development. Federal designations, such as the Economic Development Administration's (EDA) Regional Technology and Innovation Hubs (Tech Hubs) program, have been catalytic. These initiatives enable regions to unlock state-level co-investment, attract private capital, and align workforce training programs with local industry needs. Early signs suggest that the Tech Hubs framework is helping to seed innovation ecosystems where they are most needed, but long-term impact will depend on sustained funding and continued regional coordination.

Workforce readiness and enabling infrastructure remain critical differentiators. Regions with deep and committed involvement from major research universities, national labs, or advanced manufacturing clusters are better positioned to scale innovation from prototype to deployment. Real-world testbeds provide environments for stress-testing technologies and accelerating regulatory and market readiness, reinforcing the importance of place-based strategies in federal innovation planning.

At the same time, private investment in clean energy and enabling biotechnologies remains crucial to developing and scaling innovative technologies. High capital costs, regulatory uncertainty, and limited early-stage demand signals continue to inhibit market entry, especially in geographies with less mature innovation ecosystems. Addressing these barriers through coordinated federal procurement, long-term incentives, and regional capacity-building will be essential to help regions with strong assets develop industry clusters that deliver clean energy benefits.

To accomplish this, the federal government and regional governments should:

Strengthen Regional Workforce Pipelines

A skilled and regionally distributed workforce is essential to realizing the full economic and technological potential of clean energy investments, particularly as they intersect with the bioeconomy. While federal funding through initiatives such as the Inflation Reduction Act (IRA) and DOE programs is accelerating deployment, workforce gaps, especially outside major innovation hubs, pose barriers to implementation. Addressing these gaps through targeted education, training, and talent retention efforts will be critical to ensuring that clean energy projects deliver durable, regionally inclusive economic growth. To this end:

- Federal agencies like the Department of Education and National Science Foundation should explore expanding support for STEM programs at community colleges and Minority Serving Institutions, with a focus on biosciences, engineering, and agricultural technologies relevant to the clean energy transition.

- New and existing cross-sector partnerships between state workforce agencies and regional employers could benefit from federally supported training and reskilling programs tailored to regional clean energy and biomanufacturing workforce needs.

- State and local governments should consider implementing talent retention strategies, including local hiring incentives, relocation support, and career placement services, to ensure that skilled workers remain in and contribute to regional clean energy ecosystems.

Strengthen Regional Infrastructure and Foster Cross-Sector Collaboration

Robust regional infrastructure and cross-sector collaboration are essential to accelerating the deployment of clean energy technologies that leverage advancements in biotechnology and manufacturing. Strategic investments in shared facilities, modernized logistics, and coordinated innovation ecosystems will strengthen supply chain resilience and improve technology transfer across sectors. Facilitating access to R&D infrastructure, particularly for small and mid-sized enterprises, will ensure that innovation is not limited to large firms or major metropolitan areas. To support these outcomes:

- Federal support for regional testbeds, prototyping sites, and grid modernization labs, coordinated by agencies such as DOE and EDA, would support the demonstration and scaling of biologically enabled clean energy technologies.

- State and local governments, in coordination with federal agencies including DOT and the Department of Commerce (DOC), should explore investment in logistics infrastructure to enhance supply chain reliability and support distributed manufacturing.

- States should consider creating or expanding the use of innovation voucher programs that allow small and mid-sized enterprises to access national lab facilities, pilot-scale infrastructure, and technical expertise, fostering cross-sector collaboration between clean energy, biotech, and advanced manufacturing firms.

Attract and De-Risk Private Capital

Attracting and de-risking private capital is critical for scaling clean energy and biotechnology innovations. By offering targeted financial mechanisms and leveraging federal visibility, governments can reduce the financial uncertainties that often deter private investment. Effective strategies, such as state-backed loan guarantees and co-investment models, can help bridge funding gaps while strategic partnerships with philanthropic and venture capital entities can unlock additional resources for emerging technologies. To this end:

- State governments, in collaboration with federal agencies such as DOE and Treasury, should consider implementing state-backed loan guarantees and co-investment models to attract private capital into high-risk clean energy and biotech projects.

- Federal agencies like EDA, DOE, and the Small Business Administration (SBA) should explore additional programs and partnerships to attract philanthropic and venture capital to emerging clean energy technologies, particularly in underserved regions.

- Federal agencies should do more to connect early-stage companies with potential investors, using federal initiatives to build investor confidence and reduce perceived risk in the clean energy sector.

Cross-Cutting Key Findings

The successful deployment of federal clean energy and biotechnology initiatives, such as the SAF Grand Challenge, relies heavily on the capacity of regional ecosystems and the private sector to absorb and implement national goals. Many regions, particularly those outside established innovation hubs, lack the infrastructure, resources, and technical expertise to effectively utilize federal funding. As a result, the impact of national policies is often limited, and the full potential of federal investments goes unrealized in certain areas.

Federal programs often take a one-size-fits-all approach, overlooking regional variability in feedstocks, industrial bases and cost structures. Programs like tax credits and life cycle analysis models can unintentionally disadvantage regions with different economic contexts, creating disparities in access to federal incentives. This lack of regional customization prevents certain areas from fully benefiting from national clean energy and biotech initiatives.

The diffusion of innovation in clean energy and biotechnology remains concentrated in a few key regions, leaving others underutilized. Despite robust federal R&D investments, commercialization and scaling of innovations are primarily concentrated in regions with established infrastructure, hindering the broader geographic spread of these technologies. In addition, workforce development efforts across federal and regional programs are fragmented, creating misalignments in talent pipelines and further limiting the ability of local industries to leverage available resources effectively. The absence of a unified system for tracking key metrics, such as SAF production and emissions reductions, makes it difficult to coordinate efforts or assess progress consistently across regions. To address this, the federal and regional governments should:

Create a Federal–Regional Clean Energy Deployment Compact

A Federal–Regional Clean Energy Deployment Compact is critical for aligning federal clean energy initiatives with the unique capabilities and needs of regional ecosystems. By establishing formal mechanisms, such as intergovernmental councils and regional liaisons, federal programs can be more effectively tailored to local conditions. These mechanisms would ensure two-way communication between federal agencies and regional stakeholders, fostering a collaborative approach that adapts to evolving technological, economic, and environmental conditions. In addition, treating regional tech hubs and initiatives as testbeds for new policy tools, such as performance-based incentives or carbon standards, would allow innovative solutions to be tested locally before they are scaled nationally, helping ensure that policies are effective and contextually relevant across diverse regions. To this end:

- The White House Office of Science and Technology Policy (OSTP), in collaboration with the DOE and EPA, should establish formal intergovernmental councils or regional liaisons to facilitate ongoing dialogue between federal agencies and regional stakeholders. These councils would focus on aligning federal clean energy initiatives with regional needs, ensuring that local priorities, such as feedstock availability or infrastructure readiness, are considered in policy design.

- The DOE and EPA should treat tech hubs and regional clean energy initiatives as testbeds for policy innovation. These regions can pilot performance-based incentives, carbon standards, and other policy tools to assess their effectiveness before scaling them nationally. Successful models developed in these testbeds should be expanded to other regions, with lessons learned shared across state and local governments.

- Regional universities and innovation hubs should collaborate with federal and state agencies to develop pilot programs that test new policy tools and technologies. These institutions can serve as incubators for innovative clean energy solutions, providing valuable data on what policies work best in specific regional contexts.

Build a National Innovation-to-Deployment Pipeline

Creating a seamless innovation-to-deployment pipeline is essential for scaling clean energy technologies and ensuring that regional ecosystems can fully participate in national clean energy transitions. By linking DOE national labs, Tech Hubs, and regional consortia into a coordinated network, the U.S. can support the full life cycle of innovation, from early-stage R&D to commercialization and deployment, across diverse geographies. Additionally, co-developing curricula and training programs between federal agencies, regional tech hubs, and industry partners will ensure that talent pipelines are closely aligned with the evolving needs of the clean energy sector, providing the skilled workforce necessary to implement and scale innovations effectively. To accomplish this:

- DOE should facilitate the creation of a national network that connects federal labs, regional tech hubs and innovation consortia. This network would provide a clear pathway for the transition of technologies from research to commercialization, ensuring that innovations can be deployed across different regions based on local needs and capacities.

- Regional Tech Hubs, in partnership with local universities and research institutions, should be integrated into the pipeline to provide localized innovation support and commercialization expertise. These hubs can act as nodes in the broader network, offering the infrastructure and expertise necessary for scaling up clean energy technologies.

Develop a Shared Metrics and Monitoring Platform

A centralized dashboard for tracking key metrics related to clean energy and biotechnology initiatives is crucial for guiding investment and policy decisions. Integrating federal and regional data would provide a comprehensive, real-time view of progress across the country. This shared platform would enable better coordination among federal, state, and local agencies, ensuring that resources are allocated efficiently and that policy decisions are informed by accurate, up-to-date data. Moreover, a unified system would allow more effective tracking of regional performance, enabling solutions tailored to localized needs and challenges. To this end:

- The DOE, in partnership with the EPA, should lead the development of a centralized dashboard that integrates existing federal and regional data on SAF production, emissions reductions, workforce needs, and infrastructure gaps. This platform should be publicly accessible, allowing stakeholders at all levels to monitor progress and identify opportunities for improvement.

- State and local governments should contribute relevant data from regional initiatives, including workforce training programs, infrastructure development projects, and emissions reductions efforts. These contributions would help ensure that the platform reflects the full range of activities across different regions, providing a more accurate picture of national progress.

- The Department of Labor and the DOC should integrate workforce development and industrial capacity data into the platform. This would include information on training programs, regional workforce readiness, and skills gaps, helping policymakers align talent development efforts with regional needs.

- The DOT should ensure that transportation infrastructure data, particularly related to SAF production and distribution networks, is included in the platform. This would provide a comprehensive view of the supply chain and infrastructure readiness necessary to scale clean energy technologies across regions.

The clean energy sector, and specifically SAF, highlights both the promise and the persistent challenges of scaling biotechnologies, reflecting broader issues such as fragmented regulation, limited commercialization support, and misaligned incentives that hinder the deployment of advanced biotechnologies. Overcoming these systemic barriers requires coordinated, long-term policies, including performance-based incentives and procurement mechanisms that reduce investment risk and free up capital. SAF should be seen not as a standalone initiative but as a model for integrating biotechnology into industrial and energy strategy, supported by a robust innovation pipeline, expanded infrastructure, and shared metrics to guide progress. With sustained federal leadership and strategic alignment, the bioeconomy can become a key pillar of a low-carbon, resilient energy future.

Planning for the Unthinkable: The targeting strategies of nuclear-armed states

This report was produced with generous support from Norwegian People’s Aid.

The quantitative and qualitative enhancements to global nuclear arsenals in the past decade—particularly China’s nuclear buildup, Russia’s nuclear saber-rattling, and NATO’s response—have recently reinvigorated debates about how nuclear-armed states intend to use their nuclear weapons, and against which targets, in what some describe as a new Cold War.

Details about who, what, where, when, why, and how countries target with their nuclear weapons are some of states’ most closely held secrets. Targeting information rarely reaches the public, and discussions almost exclusively take place behind closed doors—either in the depths of military headquarters and command posts, or in the halls of defense contractors and think tanks. The general public is, to a significant extent, excluded from those discussions. This is largely because nuclear weapons create unique expectations and requirements about secrecy and privileged access that, at times, can seem borderline undemocratic. Revealing targeting information could open up a country’s nuclear policies and intentions to intense scrutiny by its adversaries, its allies, and—crucially—its citizens.

This presents a significant democratic challenge for nuclear-armed countries and the international community. Despite the profound implications for national and international security, the intense secrecy means that most people have little understanding of how countries make fateful decisions about what to target during wartime, and how. This includes not only the citizens of nuclear-armed countries and others who would bear the consequences of nuclear use, but also the lawmakers in nuclear-armed and nuclear umbrella states who vote on nuclear weapons programs and policies. When lawmakers in nuclear-armed countries approve military spending bills that enhance or increase nuclear and conventional forces, they often do so with little knowledge of how those bills could affect nuclear targeting plans. And people across the globe do not know whether they live in places that are likely to be nuclear targets, or what the consequences of a nuclear war would be.

While it is reasonable for governments to keep the most sensitive aspects of nuclear policies secret, their citizens' right to general knowledge about these issues is equally valid, so that they can understand the consequences for themselves and their country and make informed assessments and decisions about their government’s nuclear policies. Under ideal conditions, individuals should reasonably be able to know whether their cities or nearby military bases are nuclear targets and whether their government’s policies make it more or less likely that nuclear weapons will be used.

As an organization that seeks to empower individuals, lawmakers, and journalists with factual information about critical topics that most affect them, the Federation of American Scientists—through this report—aims to help fill some of these significant knowledge gaps. This report illuminates what we know and do not know about each country’s nuclear targeting policies and practices, and considers how they are formulated, how they have changed in recent decades, whether allies play a role in influencing them, and why some countries are more open about their policies than others. The report does not claim to be comprehensive or complete, but rather should be considered as a primer to help inform the public, policymakers, and other stakeholders. This report may be updated as more information becomes available.

Given the secrecy associated with nuclear targeting information, it is important at the outset to acknowledge the limitations of using exclusively open sources to conduct analysis on this topic. Information in and about different nuclear-armed states varies significantly. For countries like the United States—where nuclear targeting policies have been publicly described and are regularly debated inside and outside of government among subject matter experts—official sources can be used to obtain a basic understanding of how nuclear targets are nominated, vetted, and ultimately selected, as well as how targeting fits into the military strategy. However, there is very little publicly available information about the nuclear strike plans themselves or the specific methodology and assumptions that underpin them. For less transparent countries like Russia and China—where targeting strategy and plans are rarely discussed in public—media sources, third-country intelligence estimates, and nuclear force structure analysis can be used, in conjunction with official statements or statements from retired officials, to make educated assumptions about targeting policies and strategies.

It is important to note that a country’s relative level of transparency regarding its nuclear targeting policies does not necessarily echo its level of transparency regarding other aspects of its governance structure. Ironically, some of the most secretive and authoritarian nuclear-armed states are remarkably vocal about what they would target in a nuclear war. This is typically because those same countries use nuclear rhetoric as a means to communicate deterrence signals to their respective adversaries and to demonstrate to their own population that they are standing up to foreign threats. For example, while North Korea keeps many aspects of its nuclear program secret, it has occasionally stated precisely which high-profile targets in South Korea and across the Indo-Pacific region it would strike with nuclear weapons. In contrast, some other countries might consider that frequently issuing nuclear threats or openly discussing targeting policies could potentially undermine their strategic deterrent and even lower the threshold for nuclear use.

The Two-Hundred Billion Dollar Boondoggle

Nearly one year after the Pentagon certified the Sentinel intercontinental ballistic missile program to continue after it incurred critical cost and schedule overruns, the new nuclear missile could once again be in trouble.1

An April 16th article from Defense Daily broke the news that the Air Force will have to dig new holes for the Sentinel silos.2 The service had been planning to refurbish the existing 450 Minuteman silos but recently discovered, as noted in a follow-up article from Breaking Defense, that the silos will “largely not be reusable after all.”3 Brig. Gen. William Rogers, the Air Force’s director of the ICBM Systems Directorate, cited asbestos, lead paint, and other issues with the existing silos that make refurbishment difficult.4 Air Force officials also stated that an ongoing study into missileer cancer rates played a role in the decision to build new silos.5

This news comes shortly after reports that the Air Force is planning to extend the life of the currently deployed Minuteman III ICBMs until “at least” 2050—roughly 20 years beyond their intended service lives—due to delays in the Sentinel program.6

For those who have been tracking Sentinel's development since the Air Force first conceptualized a new ICBM in the early 2010s, the reports of Minuteman life-extension likely made them pause and recall the common refrain from Sentinel proponents over the years that life-extending Minuteman III missiles would be too expensive or even impossible. “You cannot life-extend Minuteman III,” then-commander of US Strategic Command Adm. Charles Richard told reporters in 2021.7 In 2016, the Air Force told Congress that the Minuteman III was aging out and that the “GBSD solution” was therefore necessary to ensure the future viability of the ICBM force (GBSD is short for Ground-Based Strategic Deterrent, the program's name before Sentinel was chosen in 2022). Air Force officials still maintain that a life-extension program for Minuteman is not possible. In their words, Minuteman will be “sustain[ed] to keep it viable until Sentinel is delivered.”8 Regardless of how the Air Force refers to the effort, it appears that Minuteman III will be made to operate well beyond its planned service life.

For some, like our team at the Federation of American Scientists’ Nuclear Information Project, Sentinel’s newest struggles came as no surprise at all. For years, it has been clear to observers that this program has suffered from chronic unaccountability, overconfidence, poor performance, and mismanagement. Project benchmarks were cherry-picked, viable alternatives were prematurely dismissed, competition was discouraged, and goalposts were continuously moved. Ultimately, U.S. taxpayers will pay the ever-rising costs, and other, more critical priorities will suffer as Sentinel continues to draw money away from other programs.

Unsurprisingly, Sentinel was specifically named in the White House’s recent memo requiring all Major Defense Acquisition Programs more than 15% over budget or behind schedule to be “reviewed for cancellation.” Sentinel is the poster child for the inefficiency that the administration claims to be obsessed with eliminating.9 To prevent this type of mismanagement in future programs, we must first understand how Sentinel went so wrong.

How We Got Here

The Federation of American Scientists has been intensively tracking the progress of the Sentinel program for years. Throughout the acquisition process, the Air Force clung to its fundamental and counterintuitive assumption that building an entirely new ICBM from scratch would be cheaper than life-extending the current system. We now know that this assumption was wildly incorrect, but how did the program reach this point?

Cherry-picked project benchmarks

When seeking to plug a capability gap, the Pentagon is required to consider a range of procurement options before proceeding with its acquisition. This process takes place over several years and culminates in an “Analysis of Alternatives”—a comparative evaluation of the operational effectiveness, suitability, risk, and life-cycle costs of the various options under consideration. This assessment can have tremendous implications for an acquisition program, as it documents the rationale for recommending a particular course of action.

The Air Force’s Analysis of Alternatives for the program that would eventually become Sentinel was conducted between 2013 and 2014, and concluded that the costs of pursuing a Minuteman III life-extension would be nearly the same as those projected for Sentinel.10 Crucially, this cost comparison was pegged to a predetermined requirement to continue deploying the same number of missiles until the year 2075.11

Although no apparent national security imperative made these benchmarks inalterable, they appear to have played a significant role in shaping perceptions of the two options. While it is now clear that Minuteman III could be (and likely will be) life-extended for several more decades, the Air Force does not have enough airframes to keep at least 400 of them in service through 2075 while maintaining the testing campaign needed to ensure reliability. As a result, to sustain the ICBM force through 2075, the Air Force would need to life-extend Minuteman III and then pursue a follow-on system.

This requirement was reportedly reflected in the Air Force’s cost analysis, which explains why the service estimated the cost of the Minuteman III life-extension option to be roughly the same as the cost of building an entirely new ICBM.12 The service was not simply comparing the costs of a life-extension and a brand-new system; it was comparing the cost of pursuing Sentinel immediately, on the one hand, with the combined cost of a Minuteman III life-extension and a follow-on system, on the other.

Of course, policymakers require benchmarks in order to make estimates: it would not be reasonable to analyze the feasibility of a particular system without considering how long and at what level that system needs to perform. However, in the case of the Sentinel, selecting those particular benchmarks at the beginning of the process essentially pre-baked the analysis before it even began in earnest.

Let’s say a different evaluation benchmark had been selected—2050, for example, rather than 2075.

In January 2025, Defense Daily reported that the Air Force would likely have to keep portions of the Minuteman III fleet in service until 2050 or later.13 This may require altering certain aspects of the Minuteman III’s deployment—such as reducing the number of deployed ICBMs or annual test launches in order to preserve airframes. While no final decisions have been made, the Air Force is clearly evaluating continued reliance on Minuteman III as a potential option, despite years of high-ranking military and political officials stating that doing so was impossible.14

Benchmarking the cost analysis at 2050 rather than 2075 would thus have yielded wildly different results. In 2012, the Air Force admitted that it had cost only $7 billion to modernize its Minuteman III ICBMs into “basically new missiles except for the shell.”15 While keeping those same missiles in service past 2050 would certainly add cost and complexity, particularly to replace parts whose manufacturers no longer exist, it is unfathomable that the costs would come anywhere close to those of the Sentinel program. In 2020, before the critical cost overrun, the Pentagon’s Director of Cost Assessment and Program Evaluation (CAPE) estimated Sentinel’s total lifecycle cost at $264 billion in then-year dollars.

It is particularly troubling that very few public or independent government-sponsored analyses examined either the Sentinel program’s flawed assumptions or the realistic possibility of a Minuteman III life-extension. Countless congressional and non-governmental attempts to push for such an analysis were stymied at every turn. In 2019, for example, dozens of lobbyists from the Sentinel contract bidders successfully helped to eliminate a proposed amendment to the National Defense Authorization Act calling for an independent study on a Minuteman III life-extension program.16

The most comprehensive public study on this issue was a 2022 report published by the Carnegie Endowment for International Peace under contract from the Pentagon. However, the study noted that “the iterative process through which we received information, the unclassified nature of our study, and the limited time available for investigating DOD conclusions left us unable to assess the DOD’s position regarding the technical and cost feasibility of an extended Minuteman III alternative to GBSD.” The authors ultimately concluded that a more detailed technical analysis was required to answer these questions.17

While the findings of such a study will never be known, it is likely that they would have supported what was clear to government watchdogs at the time and has been validated in spades since then: the assumptions baked into this program were flawed from the start, and the system’s costs would be significantly larger than initially expected. Given that the Pentagon ultimately went in the opposite direction, taxpayers are now on the hook for both a de facto Minuteman III life-extension program and the substantial costs associated with acquiring Sentinel, with limited further possibilities for near-term cost mitigation.

Failure to predict the true costs and needs of the program

In addition to relying on cherry-picked benchmarks that tipped the scales towards a brand-new ICBM, the Air Force made a key error in its cost comparison: it assumed that Sentinel would be able to reuse much of the original Minuteman launch infrastructure.

Some level of infrastructure modernization for Sentinel was always planned, including building entirely new launch control centers and additional infrastructure for the launch facilities.18 However, the original plans called for reusing the existing copper command and control cabling and for refurbishing, not reconstructing, the 450 silos. Both assumptions have proven incorrect, and together they now represent perhaps the single greatest driver of Sentinel’s skyrocketing costs.

While both the current cabling and launch facilities work fine for the existing Minuteman III and would presumably function similarly following a life-extension, they are apparently incompatible with Sentinel’s increasingly complex design.

The Air Force must now dig up and replace 7,500 miles of cabling with modern fiber optic cable. Much of this cabling is buried beneath private property, meaning that local landowners must lease 100-foot-wide strips of their land to the Pentagon to be dug up for multi-year periods.19

In addition, both the Air Force and Northrop Grumman have now recognized that it will take more than simple refurbishments to make the existing Minuteman III launch facilities compatible with Sentinel. Both the service and the contractor have stated that several of the assumptions regarding the conversion process that went into the 2020 baseline review have now proved to be incorrect.20

As a result, the Air Force is apparently now planning to build entirely new launch facilities to house the Sentinel, most of which will require digging new holes in the ground.21 As one Northrop Grumman official explained, “When you multiply that by 450, if every silo is a little bit bigger or has an extra component, that actually drives a lot of cost because of the sheer number of them that are being updated.”22 It is unclear whether the costs will increase beyond the new estimate released with the Nunn-McCurdy decision, but the program is clearly trending in the wrong direction.

The Air Force had been publicly teasing the prospect of digging new holes for nearly a year. At the Triad Symposium in Washington, D.C., in September 2024, Maj. Gen. Colin Connor, director of ICBM Modernization at Barksdale Air Force Base, responded to an audience question about the new silos rumor by saying, “we’re looking at all of our options.” Despite the noncommittal answer, the decision to dig new silos seems to have already been made by the time of Connor’s statement.

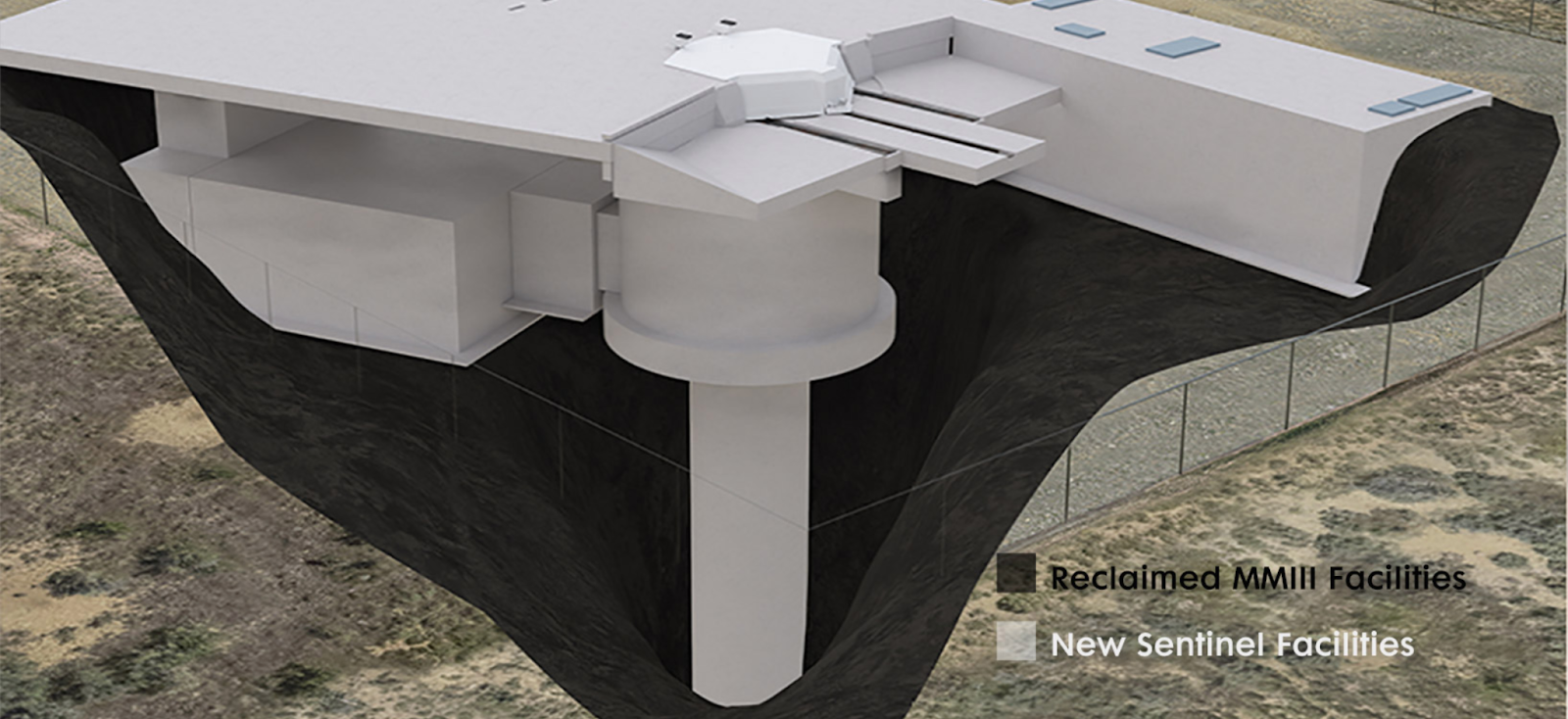

First, it has since been revealed that the estimated costs of the new silos were included in the Nunn-McCurdy review process, which concluded in July 2024. Additionally, although the decision was not made public until the April 16 Defense Daily article, Northrop Grumman may have inadvertently revealed the news much earlier. Northrop’s website includes a gallery of images of the Sentinel program, among them a digital mockup of a Sentinel launch facility. The first version of the image (see Figure A below) illustrates the Air Force’s original plan to refurbish the Minuteman III silos for Sentinel, with a key labeling the silo and silo lid as “Reclaimed MMIII Facilities.” A newer version of the image (see Figure B below), uploaded to the gallery as early as February 2024, shows the entire launch facility, including the silo and silo lid, as “New Sentinel Facilities.”

Figure A. Original rendering of Sentinel launch facility. (Source: Northrop Grumman)

Figure B. New rendering of Sentinel launch facility. (Source: Northrop Grumman)

Unwarranted overconfidence

Despite the clear concerns outlined above, the Pentagon was remarkably confident in its and Northrop Grumman’s abilities to deliver the Sentinel on-time and on-budget.

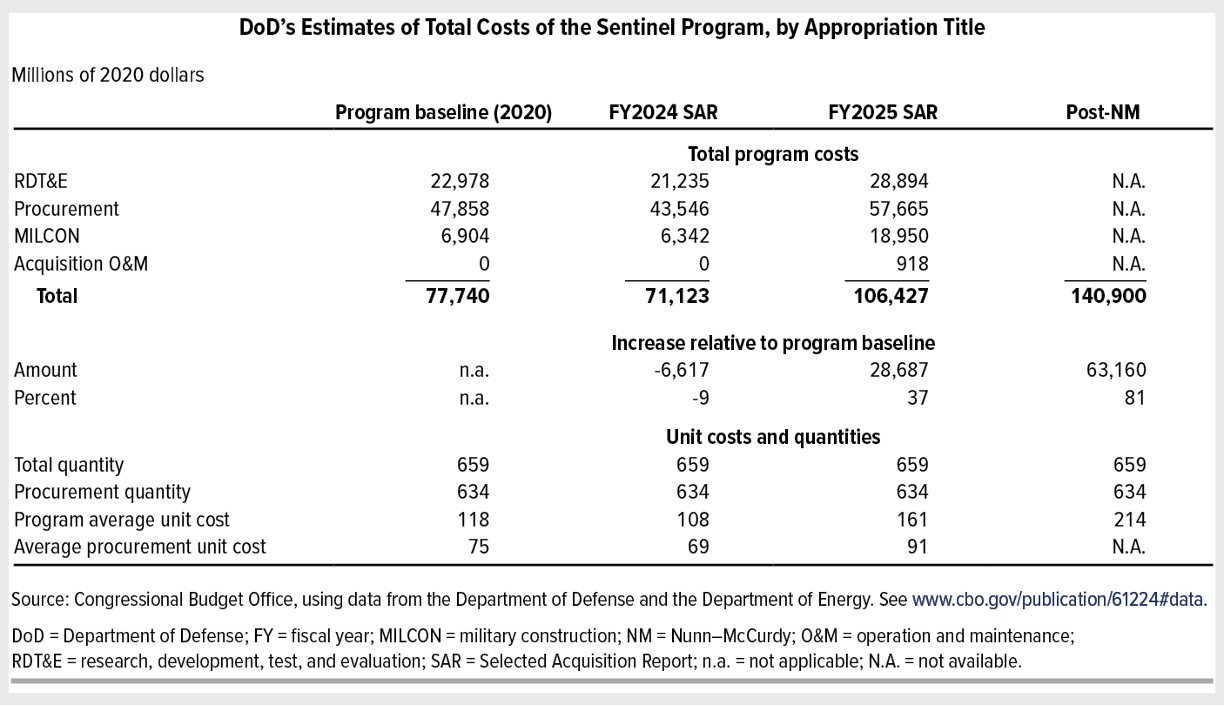

In September 2020, the Pentagon delivered its Milestone B summary report to Congress—a key decision point at which acquisition programs are authorized to enter the Engineering and Manufacturing Development phase, considered to be the official start of a program. The Milestone B report included an estimate of $95.8 billion in then-year dollars to acquire the Sentinel—a significant increase from previous estimates, but not yet the dire situation that we find ourselves in today (Figure C).

Figure C. The above table from the Congressional Budget Office shows the cost growth of Sentinel’s acquisition program between its Milestone B assessment in 2020 and the post-Nunn-McCurdy review process in 2025. All costs are in FY2020 dollars to allow an accurate comparison across years.

We now know, however, based on recent statements from Pentagon and Air Force officials, that there were “some gaps in maturity” in the Milestone B report.23 Specifically, “in September of 2020, the knowledge of the ground-based segment of this program was insufficient in hindsight to have a high-quality cost estimate.” In other words, at the most consequential stage of the program to date, Sentinel was approved without a comprehensive understanding of its likely cost growth.

Furthermore, the Air Force was badly delayed in creating an integrated master schedule for the Sentinel program. An integrated master schedule includes the planned work, the resources necessary to accomplish that work, and the associated budget; from the government’s perspective, it is considered the keystone of program management.24 Although the Under Secretary of Defense for Acquisition and Sustainment testified to Congress that “By the time you’re six months after Milestone B, you should have an integrated master schedule,” the Air Force had not met this mark.25 Even if the Air Force did manage to create such a schedule, it became obsolete when the Nunn-McCurdy Act required the program to be restructured and its Milestone B approval rescinded.

During that same hearing, the Air Force’s Deputy Chief of Staff for Strategic Deterrence and Nuclear Integration also admitted that at that time, the service had been experiencing poor communication with Northrop Grumman, the primary contractor for the ICBM.

Performance issues also appear to have had an impact on the program. In June 2024, the Air Force removed the colonel in charge of its Sentinel program—reportedly for a “failure to follow operational procedures”—and replaced him with a two-star general, with the rank change indicating a need for greater high-level attention.26

Throughout this time, the Air Force remained overconfident in its abilities to deliver the program; in December 2020, the Assistant Secretary of the Air Force for Acquisition, Technology, and Logistics told reporters that the Air Force had “godlike insight into all things GBSD.”27 And in September 2022, the Air Force Major General responsible for Sentinel’s strategic planning and requirements said in a Breaking Defense interview that the program was “on cost, on schedule, and the acquisition program baseline is being met.”28

Given everything we now know about the state of the Sentinel program, these statements were either clear obfuscations or just pure fantasy.

Non-competitive disadvantages

When addressing concerns about the rising projected costs of the Sentinel program, Air Force leaders were confident that a competitive and healthy industrial base would be able to keep the overall price tag down. As Gen. Timothy Ray, then-Commander of Air Force Global Strike Command, told reporters in 2019, “our estimates are in the billions of savings over the lifespan of the weapon.”29

These expected savings clearly never materialized, however, nor did the Pentagon help create the conditions for them to be realized. In March 2018, the Air Force Nuclear Weapons Center submitted a document justifying its intention to restrict competition for the Sentinel contract to just two suppliers, Boeing and Northrop Grumman, stating that this limitation would still constrain costs because the two companies would be in competition with one another.30

However, this prospect of competition evaporated when Boeing withdrew following Northrop Grumman’s acquisition of Orbital ATK, one of two independent producers of large solid rocket motors left in the US market.31 Because these motors are necessary to make ICBMs fly, the merger put Northrop Grumman in the driver’s seat: it could restrict Boeing’s access to those motors, thus tanking its competitor’s chances at the Sentinel bid.

Doing so would not have been allowed under the terms set by the Federal Trade Commission, which permitted the merger in 2018 but later investigated it in 2022 under the Biden administration; that same year, the FTC also blocked a similar attempted merger between Lockheed Martin and Aerojet Rocketdyne.32 However, the Pentagon, which had initially included non-exclusionary and pro-competition language in its requirements for an earlier phase of the Sentinel contract, removed that language from later phases.33 By refusing to wield its own power to preserve competition, initially a key rationale for promoting Sentinel over a Minuteman III life-extension, the Air Force essentially left the state of the competition in Northrop Grumman’s hands. According to Boeing’s CEO, Northrop Grumman subsequently slow-walked negotiations over a competition arrangement with Boeing, apparently leaving Boeing too little time to negotiate a competitive price for solid rocket motors before the Sentinel deadline.34

As a result, Boeing pulled out of the competition altogether, and the Air Force awarded the Sentinel engineering and manufacturing development contract to Northrop Grumman through an unprecedented single-source bidding process. As the Under Secretary of Defense for Acquisition and Sustainment admitted in 2024 testimony to Congress, “effectively there was not, at the end of the day, competition in this program.”35

Reflecting on the Sentinel procurement process, House Armed Services Committee chairman Adam Smith—who has a sizable Boeing presence in his home state of Washington—suggested in October 2019 that the Air Force is “way too close to the contractors they are working with,” and implied that the service was biased towards Northrop Grumman.36

Predictably, the evaporation of competition has coincided with skyrocketing Sentinel acquisition costs. In July 2024, Air Force acquisition chief Andrew Hunter told reporters that the service was considering reopening parts of the Sentinel contract to bids. “I think there are elements of the ground infrastructure where there may be opportunities for competition that we can add to the acquisition strategy for Sentinel,” Hunter said.37

The Nunn-McCurdy Saga

In January 2024, the Air Force notified Congress that the Sentinel program had incurred a critical breach of the Nunn-McCurdy Act, legislation designed to keep expensive programs in check.38 One week after notifying Congress of the breach, the Air Force fired the head of the Sentinel program, but said the move was “not directly related” to the Nunn-McCurdy breach.39

At the time of the notification, the Air Force stated that the program was 37% over budget and two years behind schedule. Six months later, after conducting the cost reassessment mandated by Nunn-McCurdy, the Pentagon announced that the Sentinel program would cost 81% more than projected and be delayed by several years.40 Nevertheless, the Secretary of Defense certified the program to continue.

Per the requirements of the Nunn-McCurdy Act, the Under Secretary of Defense for Acquisition and Sustainment, who serves as the Milestone Decision Authority for the program, rescinded Sentinel’s Milestone B approval, which is needed for a program to enter the engineering and manufacturing development phase.41 The Air Force must restructure the program to address the root cause of the cost growth before receiving a new milestone approval, a process the service has said will take approximately 18 to 24 months.42

Where Sentinel Stands Now

Work on the Sentinel program has continued while the Air Force carries out the restructuring effort, but the government can’t seem to decide whether things are going well or not.

On February 10, the Air Force told Defense One that parts of the Sentinel program had been “suspended.”43 Citing “evolving” requirements related to Sentinel launch facilities, the Air Force instructed Northrop Grumman to halt “design, testing, and construction work related to the Command & Launch Segment.” There has been no indication of when the stop-work order will be lifted. Nevertheless, at an April 10 Air Force town hall on Sentinel in Kimball, Nebraska, Col. Johnny Galbert, wing commander at F.E. Warren AFB, told attendees that Sentinel “is not on hold; it is moving forward.”44

Just under one month after the stop-work order was issued, the Air Force announced that the Sentinel program had achieved a “modernization milestone” with the successful static fire test of Sentinel’s stage-one solid rocket motor.45 Because the second and third stages had been tested in 2024, this test meant that every stage of Sentinel’s rocket motor had now been successfully test fired.

On March 27, the same day Bloomberg reported that the Air Force was considering a life-extension program for Minuteman III missiles, Troy Meink, President Trump’s nominee for Secretary of the Air Force (confirmed by the Senate on May 13), committed in his testimony to pushing Sentinel over the finish line, calling the program “foundational to strategic deterrence and defense of the homeland.”46 During the same hearing, Michael P. Duffey, Trump’s nominee for Under Secretary of Defense for Acquisition and Sustainment, also voiced his support for the Sentinel program, saying that “nuclear modernization is the backbone of our strategic deterrent” and endorsing Sentinel as “critical.” Yet two weeks later, on April 9, President Trump signed an executive order on defense acquisition programs mandating that “any program more than 15% behind schedule or 15% over cost will be scrutinized for cancellation.”47 Sentinel is well beyond that threshold, and the White House fact sheet detailing the order explicitly called out Sentinel’s cost and schedule overruns.

The next day, the Air Force announced that another “key milestone” for the Sentinel program had been met with the stand-up of Detachment 11 at Malmstrom AFB, which will oversee implementation of the Sentinel program at the base.48 But just days later, Sentinel took a major blow with the Air Force’s admission that hundreds of new silos would have to be dug and constructed for the new ICBM.

The Government Accountability Office’s (GAO) latest Weapon Systems Annual Assessment, released June 11, reports that Sentinel’s costs “could swell further” as the Air Force “continues to evaluate its options and develop a new schedule as part of restructuring efforts.” The assessment also notes that the Sentinel program alone accounted for over $36 billion of the $49.3 billion increase from 2024 to 2025 in GAO’s combined total estimate of major defense acquisition program costs, and that the first flight test would now not take place until March 2028.49 In a sweeping criticism of the program, the GAO report notes that the continued immaturity of the program’s critical technologies more than 4 years into its development phase “calls into question the level of work required to mature these technologies and the validity of the cost estimate used to certify the program.”50

450 Money Pits

We will probably never know how much money could have been saved if the Air Force had elected from the beginning to life-extend the existing ICBMs rather than build an entirely new system from scratch. The opportunity to have a proactive, independent cost comparison and corresponding public debate was eliminated through intense rounds of Pentagon and industry lobbying. But we certainly now know that the Air Force’s assertion that Sentinel would be cheaper and easier than a life-extension was wrong, and that the suppression of an independent review contributed to these rising costs.

The Sentinel saga, with its seemingly unending series of setbacks and continued uncertainties, raises a crucial question: what incentives exist for the Air Force to get it right? That the program, along with numerous other nuclear modernization programs, was green-lighted to continue despite ever-increasing cost overruns and schedule delays exposes a major flaw in U.S. nuclear weapons acquisition programs: they are too big to fail. The government, evidently, will always write a bigger check and always move the goalposts, because the alternative is either failing to maintain the U.S. strategic deterrent or admitting that U.S. nuclear strategy and force structure are not as immutable and unquestionable as the public has been made to believe. In such a system of blank checks and industry lobbying, what incentivizes the Pentagon to ensure programs are as cost-efficient as possible? The only mechanism for oversight and accountability is Congress. Congress must increase oversight of nuclear modernization programs like Sentinel to ensure a limit is placed on how much taxpayer money can be spent on failing programs in the name of national security.

De-Risking the Clean Energy Transition: Opportunities and Principles for Subnational Actors

Executive Summary

The clean energy transition is not just about technology — it is about trust, timing, and transaction models. As federal uncertainty grows and climate goals face political headwinds, a new coalition of subnational actors is rising to stabilize markets, accelerate permitting, and finance a more inclusive green economy. This white paper, developed by the Federation of American Scientists (FAS) in collaboration with Climate Group and the Center for Public Enterprise (CPE), outlines a bold vision: one in which state and local governments – working hand-in-hand with mission-aligned investors and other stakeholders – lead a new wave of public-private clean energy deployment.

Drawing on insights from the closed-door session “De-Risking the Clean Energy Transition” and subsequent permitting discussions at the 2025 U.S. Leaders Forum, this paper offers strategic principles and practical pathways to scale subnational climate finance, break down permitting barriers, and protect high-potential projects from political volatility. This paper presents both a roadmap and an invitation for continued collaboration. FAS and its partners will facilitate further development and implementation of approaches and ideas described herein, with the goals of (1) directing bridge funding towards valuable and investable, yet at-risk, clean energy projects, and (2) building and demonstrating the capacity of subnational actors to drive continued growth of an equitable clean economy in the United States.

We invite government agencies, green banks and other financial institutions, philanthropic entities, project developers, and others to formally express interest in learning more and joining this work. To do so, contact Zoe Brouns (zbrouns@fas.org).

The Moment: Opportunity Meets Urgency

We are in the complex middle of a global energy transition. Clean energy and technology are growing around the world, and geopolitical competition to consolidate advantage in these sectors is intensifying. The United States has the potential to lead, but that leadership is being tested by erratic federal environmental policies and economic signals. Meanwhile, efforts to chart a lasting domestic clean energy path that resonates with the full American public have fallen short. Demand is rising, fueled by AI, electrification, and industrial onshoring, yet opposition to clean energy buildout is growing, permitting systems are gridlocked, and legacy regulatory frameworks are failing to keep up. This moment calls for new leadership rooted in local and regional capacity and needs. Subnational governments, green and infrastructure banks, and other funders have a critical opportunity to stabilize clean energy investment and sustain progress amid federal uncertainty. Thanks to underlying market trends favoring clean energy and clean technology, and to concerted efforts over the past several years to spur U.S. growth in these sectors, there is now a pipeline of clean projects across the country that are shovel-ready, relatively de-risked and developed, and investable (Box 1). Subnational actors can work together to identify these projects and to mobilize capital and policy to sustain them in the near term.

Box 1. The New York Power Authority used a simple, quick Request for Information (RFI) to identify readily investable clean energy projects in New York, and was then able to back many of the identified projects financially thanks to its strong bond rating and ability to access capital. As Paul Williams, CEO of the Center for Public Enterprise, noted, this powerful approach allowed the Authority to “essentially [pull] a 3.5-gigawatt pipeline out of thin air in less than a year.”

States, cities, and financial institutions are already beginning to provide the support and sustained leadership that federal agencies can no longer guarantee. They’re developing bond-backed financing, joint procurement schemes, rapid permitting pilot zones, and revolving loan funds — not just to fill gaps, but to reimagine what clean energy governance looks like in an era of fragmentation. One compelling example is the Connecticut Green Bank, which has successfully blended public and private capital to deploy over $2 billion in clean energy investments since its founding. Through programs like its Commercial Property Assessed Clean Energy (C-PACE) financing and Solar for All initiative, the bank has reduced emissions, created jobs, and delivered energy savings to underserved communities.

Indeed, this kind of mission-oriented strategy – one that harnesses finance and policy towards societally beneficial outcomes, and that entrepreneurially blends public and private capacities – is in the best American tradition. Key infrastructure and permitting decisions are made at the state and local levels, after all. And state and local governments have always been central to creating and shaping markets and underwriting innovation that ultimately powers new economic engines. The upshot is clear and striking: subnational climate finance isn’t just a workaround. It may be the most politically durable and economically inclusive way to future-proof the clean energy transition.

The Role of Subnational Finance in the Clean Energy Transition

Recent years saw heavy reliance on technocratic federal rules to spur a clean energy transition. But a new political climate has forced a reevaluation of where and how federal regulation works best. While some level of regulation is important for creating certainty, demand, and market and investment structures, it is undeniable that the efficacy and durability of traditional environmental regulatory approaches have waned. There is an acute need to articulate and test new strategies for actually delivering clean energy progress (and a renewed economic paradigm for the country) in an ever-more complex society and dynamic energy landscape.

Affirmatively wedding finance with larger public goals will be a key component of this more expansive, holistic approach. Finance is a powerful tool for policymakers and others working in the public interest to shape the forward course of the green economy in a fair and effective way. In the near term, opportunities for subnational investment are ripe because the boom in potential firms and projects spurred by recent U.S. industrial policy, now partially paused, has produced a rich set of already underwritten, due-diligenced projects for re-investment. In the longer term, the success of redesigned regulatory schemes will almost certainly depend on creating profitable firms that can carry forward the energy transition. Public entities can assume an entrepreneurial role in ensuring that these new economic entities, to the degree they benefit from public support, advance the public interest. Indeed, financial strategies that connect economic growth to shared prosperity will be important guardrails for an “abundance” approach to environmental policy, an approach that holds significant promise to accelerate necessary societal shifts but also carries the risk that those shifts will further enrich and empower concentrated economic interests.

To be sure, subnational actors generally cannot fund at the scale of the federal government. However, they can mobilize existing revenue and debt resources, including via state green and infrastructure banks, bonding tools, and direct investment financing strategies, to seed capital for key projects and to anchor larger capital stacks. They are also particularly well suited to provide “pre-development” support that helps projects move through start-up phases and into development and construction. Subnational entities can engage sectorally and in coalition to scale up financing, draw in private actors, and support projects along the whole supply and value chain (including, for instance, multi-state transmission and grid projects, multi-state freight and transportation network improvements, and multi-state industrial hubs for key technologies).

Many financing strategies for clean energy projects already exist. For instance:

- Revolving loan funds can help public entities provide lower-cost debt financing to draw in additional private capital.

- Joint procurements or bundled financing can set technological standards, provide pricing power, and reduce the cost of capital for smaller businesses, making it easier for them to break into the clean energy economy.

- Financing programs for projects with public benefits can be designed in ways that allow government investors to take a small equity stake, sharing both risk and revenue over time.

Strategies like these empower states and other subnational actors to de-risk and drive the clean energy transition. The expanding green banking industry in the United States, and similar institutions globally, further augment subnational capacity. What is needed is rapid scaling and ready capitalization.

There is presently tremendous need and opportunity to deploy flexible financing strategies across projects that are shovel-ready or in progress but may need bridge funding or other investments in the wake of federal cuts. The critical path involves quickly identifying valuable, vetted projects in need of support, followed by targeted provision of financing that leverages the superior capital access of public institutions.

Projects could be identified through simple, quick Requests for Information (RFIs) like the one recently used to great effect by the New York Power Authority to build a multi-gigawatt clean energy pipeline (see Box 1, above). This model, which requires no new legislation, could be adopted by other public entities with bonding authority. Projects could also be identified through existing databases, e.g., of projects funded by, or proposed for funding under, the Inflation Reduction Act (IRA) or Infrastructure Investment and Jobs Act (IIJA).

There is even the possibility of establishing a matchmaking platform that connects projects in need of financing with entities prepared to supply it. Projects could be grouped sectorally (e.g., freight or power sector projects) or by potential to address cross-cutting issues (e.g., cutting pollution burdens or managing increasing power grid load and its potential to electrify new economic areas). As economic mobilization around clean energy gains steam and familiarity with flexible financing strategies grows, such strategies can be extended to new projects in ways that are tailored to community interests, capacity, and needs.

Principles for Effective, Equitable Investment

The path outlined above is open now but will substantially narrow in the coming months without concerted, coordinated action. The following principles can help subnational actors capitalize on the moment effectively and equitably. It is worth emphasizing that equitable investment is not only a moral imperative – it is a strategic necessity for maintaining political legitimacy, ensuring community buy-in, and delivering long-term economic resilience across regions.

Funders must clearly state goals and be proactive in pursuing them – starting now to address near-term instability. Rather than waiting for projects to come to them, subnational governments, financial institutions, and other funders should use their platforms and convening power to lay out a “mission” for their investments – with goals like electrifying the industrial sector, modernizing freight terminals and ports, and accelerating transmission infrastructure with storage for renewables. Funders should then use tools like simple RFIs to actively seek out potential participants in that mission.

Public equity is a key part of the capital stack, and targeted investments are needed now. With significant federal climate investments under litigation and Congressional debates on the Inflation Reduction Act ongoing, other participants in the domestic funding ecosystem must step up. Though not all federal capital can (or should) be replaced, targeted near-term investments coupled with multi-year policy and funding roadmaps by these actors can help stabilize projects that might not otherwise proceed and provide reassurance on the long-term direction of travel.

Information is a surprisingly powerful tool. Deep, shared information architectures and clarity on policy goals are key for institutional investors and patient capital. Shared information on costs, barriers, and rates of return would substantially ease the clean energy transition – and could be gathered and released in compiled form by current investors. Sharing transparent goals, needs, and financial targets will be especially critical in the coming months. Simple RFIs targeted at businesses and developers can also serve as dual-purpose information-gathering and outreach tools for these investors. By asking basic questions through these RFIs (which need not be more than a page!), investors can build the knowledge base for shaping their clean technology and energy plans while simultaneously drawing more potential participants into their investment networks.

States should invest to grow long-term businesses. The clean energy transition can only be self-sustaining if it is profitable and generates firms that can stand on their own. Designing state incentive and investment projects for long-term business growth, and aligning complementary policy, is critical – including by designing incentive programs to partner well with other financing tools, and to produce long-term affordability and deployment gains, especially for entities which may otherwise lack capital access. State strategies, like the one New Mexico recently published, that outline energy-transition and economic plans and timelines are crucial to build certainty and align action across the investment and development ecosystem. Metrics for green programs should assess prospects for long-term business sustainability as well as tons of emissions reduced.

States can finance the clean energy transition while securing long-term returns and other benefits. Many clean technology projects may have higher upfront costs that are balanced by long-term savings. Debt financing provided through revolving loan funds can play a large role in accelerating deployment of these technologies by buying down entry costs, with borrowers paying back the public investor over time. Moreover, the superior bond ratings of state institutions substantially reduce borrowing costs; sharing these benefits is an important role for public finance. State financial institutions can explore taking equity stakes in some of the projects they fund that provide substantial public benefits (e.g., mega-charging stations or large-scale battery storage), securing a rate of return over time in exchange for buying down upfront risk. Diversified subnational institutions can use cash flows from higher-return portions of their portfolios to de-risk lower-return or higher-risk projects that are ultimately in the public interest. Finally, states with operating carbon market programs can consider expanding their funding capacity by bonding against a portion of carbon market revenues, pledging that future revenue stream as collateral to raise capital for the green economy today.
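The revolving-loan and borrowing-cost mechanics described above can be made concrete with a small calculation. The Python sketch below is a hypothetical illustration, not a model used by FAS or its partners: the function names, fund size, interest rates, and loan terms are all assumptions chosen for clarity. It shows two things: that a lower borrowing rate (such as one a strong public bond rating might enable) lowers the annual payment on the same loan, and that a fund which re-lends its repayments can lend out more in total than its original seed capital.

```python
# Illustrative sketch only: all names and numbers here are hypothetical
# assumptions, not figures from this report or from any real fund.


def annual_payment(principal: float, rate: float, years: int) -> float:
    """Level annual payment that fully repays `principal` over `years`
    at annual interest `rate` (standard annuity formula)."""
    if rate == 0:
        return principal / years
    return principal * rate / (1 - (1 + rate) ** -years)


def simulate_revolving_fund(capital: float, rate: float, term: int, horizon: int) -> float:
    """Return the cumulative amount lent by a simple revolving loan fund.

    Simplifying assumptions: each year the fund lends out all idle cash as
    one new loan on identical terms; every borrower repays in full (no
    defaults); repayments arrive at year end and are re-lent the next year;
    administrative costs and the fund's own cost of capital are ignored.
    """
    cash = capital
    loans = []  # each entry: [annual payment, years remaining]
    total_lent = 0.0
    for _ in range(horizon):
        # Start of year: lend out everything currently on hand.
        if cash > 0:
            loans.append([annual_payment(cash, rate, term), term])
            total_lent += cash
            cash = 0.0
        # End of year: collect one payment from each loan still outstanding.
        for loan in loans:
            if loan[1] > 0:
                cash += loan[0]
                loan[1] -= 1
    return total_lent


if __name__ == "__main__":
    # Hypothetical $10M, 20-year project loan at two assumed borrowing rates,
    # standing in for a highly rated public lender vs. a costlier alternative.
    for r in (0.04, 0.06):
        print(f"{r:.0%} rate: annual payment ${annual_payment(10_000_000, r, 20):,.0f}")

    # Hypothetical $50M seed capital, 3% loans, 10-year terms, 20-year horizon.
    lent = simulate_revolving_fund(50_000_000, 0.03, 10, 20)
    print(f"Total lent over 20 years from $50M seed capital: ${lent:,.0f}")
```

Re-lending every repayment immediately is the simplest possible policy and is chosen here only to show the recycling effect; an actual fund would likely hold reserves, price in default risk, and account for its own borrowing costs.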

Financing policy can be usefully combined with procurement policy. As electrification reaches individual communities and smaller businesses, many face capital-access problems. Subnational actors should consider packaging similar businesses together to provide financing for multiple projects at once, and can also consider complementary public procurement policies to pull forward market demand for projects and products (Box 2).

Explore contract mechanisms to protect public benefits. Distributive equity is as important as large-scale investment to ensure a durable economic transition. The Biden-Harris Administration conditioned some investments on binding community benefit plans to ensure that project benefits were broadly shared and that possible harms to communities were mitigated. Subnational investors could develop parallel contractual agreements. Contracts could also enable revenue sharing between private and public institutions, partially offsetting the impacts of any changes to the IRA’s current elective pay and transferability provisions by shifting realized income from the private entities that earn revenue from projects to the public entities that currently use those programs.

Joint procurements, whereby two or more purchasers enter into a single contract with a vendor, can bring down prices of emerging clean technologies by increasing purchase volume, and can streamline technology acquisition by sharing the contracting workload across partners. Joint procurement and other innovative procurement policies have been used successfully to drive deployment of zero-emission buses in Europe and, more recently, the United States. Procurement strategies can be coupled with public financing. For instance, the Federal Transit Administration’s Low or No Emission Grant Program for clean buses gives preference to applications that use joint procurement, helping public grant dollars go further.

The rising importance of the electrical grid across sectors creates new financial product opportunities. As the economy decarbonizes, more previously independent sectors are being linked to the electric grid, with load increasing (AI developments exacerbate this trend). That means that project developers in the green economy can offer a broader set of services, such as providing battery storage for renewables at vehicle charging points, distributed generation of power to supply new demand, and potential access to utility rate-making. Financial institutions should closely track rate-making and grid policy and explore avenues to accelerate beneficial electrification. There is a surprising but potent opportunity to market and finance clean energy and grid upgrades as a national security imperative, in response to the growing threat of foreign cyberattacks that are exploiting “seams” in fragile legacy energy systems.

Global markets can provide ballast against domestic volatility. The United States has an innovative financial services sector. Even if federal institutions retreat from clean energy finance globally over the next few years, a substantial opportunity remains for U.S. companies to provide financing and investment to projects worldwide, generate trade credit, and bring some of those revenues back into the U.S. economy.

Financial products and strategies for adaptation and resilience must not be overlooked. Growing climate-linked disasters, and the associated adaptation costs, impose substantial revenue burdens on state and local governments as well as on insurers and businesses. Competition for funds between adaptation and mitigation (not to mention other government services) may intensify with proposed federal cuts. Financial institutions that design products to reduce risk and strengthen resilience (e.g., by helping relocate or strengthen vulnerable buildings and infrastructure) can help ease this competition for revenue and provide long-term benefits by tapping into the $1.4 trillion market for adaptation and resilience solutions. Improved cost-benefit estimates and valuation frameworks for these interacting systems are critical priorities.

Conclusion: A Defining Window for Subnational Leadership

Leaders from across the country agree: clean energy and clean technology are investable, profitable, and vital to community prosperity. And there is a compelling lane for innovative subnational finance as not just a stopgap or replacement for federal action, but as a central area of policy in its own right.

The federal regulatory state is, increasingly, just one component of a larger economic transition that subnational actors can help drive and shape for public benefit. Designing financial strategies that help the United States deftly navigate that transition can buffer against regulatory uncertainty and create a conducive environment for improved regulatory designs going forward. Immediate responses to stabilize climate finance, moreover, can build a foundation for a more engaged and innovative coalition of subnational financial actors working jointly for the public good.

Active state and private planning is the key to moving down these paths, with governments setting a clear direction of travel and marshaling their convening powers, capital access, and complementary policy tools to rapidly stabilize key projects and de-risk future capital choices.

There is much to do and no time to lose as governments and investors across the country seek to maintain clean technology progress. The Federation of American Scientists (FAS) and its partners will facilitate further development and implementation of approaches and ideas described above, with the goals of (1) directing bridge funding towards valuable and investable, yet at-risk, clean energy projects, and (2) building and demonstrating the capacity of subnational actors to drive continued growth of an equitable clean economy in the United States.

We invite government agencies, green banks and other financial institutions, philanthropic entities, project developers, and others to formally express interest in learning more and joining this work. To do so, contact Zoe Brouns (zbrouns@fas.org).

Acknowledgements

Thank you to the many partners who contributed to this report, including: Dr. Jedidah Isler and Zoë Brouns at the Federation of American Scientists, Sydney Snow at Climate Group, Yakov Feigin, Chirag Lala, and Advait Arun at the Center for Public Enterprise, and Jayni Hein at Covington and Burling LLP.