Optimizing $4 Billion of Low-Income Home Energy Assistance Program Funding To Protect the Most Vulnerable Households From Extreme Heat

The federal government needs to maximize existing funds to mitigate heat stress and ensure that these resources are distributed equitably to the most vulnerable households. Agencies could increase Low Income Home Energy Assistance Program (LIHEAP) funding allocations for heat mitigation through incentives, or by mandating that a floor of benefits from these programs be distributed to a well-defined set of vulnerable households. This approach is similar to Justice40, the Biden administration’s signature environmental justice initiative, which requires federal agencies to ensure that at least 40% of the benefits of covered programs reach disadvantaged communities.

Challenge and Opportunity

In addition to LIHEAP, which is administered by the Department of Health and Human Services (HHS), Justice40-covered programs with high potential to mitigate heat stress include:

- American Climate Corps (Corporation for National & Community Service, CNCS)

- Greenhouse Gas Reduction Fund (GGRF; EPA)

- Energy Efficiency and Conservation Block Grants (EECBG; DOE)

- Weatherization Assistance Program (WAP; DOE)

- Building Resilient Infrastructure and Communities (BRIC; FEMA)

LIHEAP and these five priority programs have combined allocations of about $30 billion in 2024 alone. Allocating even 10% of this collective budget (roughly $3 billion) to the most vulnerable communities and households could significantly reduce heat mortality and morbidity.

Since its inception in 1974, LIHEAP has provided more than $100 billion in direct bill payment assistance, more than double the allocation of seven other low-income energy programs combined. LIHEAP provides a formula block grant to all states and territories and more than 150 tribes. The FY24 LIHEAP allocation is $3.6 billion; state allocations vary with each state’s overall population, low-income population, and climate.

LIHEAP is disbursed by the HHS Administration for Children and Families (ACF) to state-level HHS counterparts. These in turn distribute funding to subgrantees, including local HHS offices, national NGOs, and community-based organizations. Furthermore, there is often coordination between state and local LIHEAP administrators and utilities, which provide additional low-income energy efficiency, solar and storage programs, and rate discounts.

HHS recognizes LIHEAP’s potential to reduce heat stress. For instance, in 2021, HHS published a “Heat Stress Flexibilities and Resources” memo, which outlined the disproportionate impacts of future heat conditions on communities of color and recommended using a portion of the state allocation for cooling assistance, providing or loaning air conditioners, targeting vulnerable households, and conducting a range of public education activities. In 2016, the agency designated a national Extreme Heat Week focused on how LIHEAP can be part of the solution.

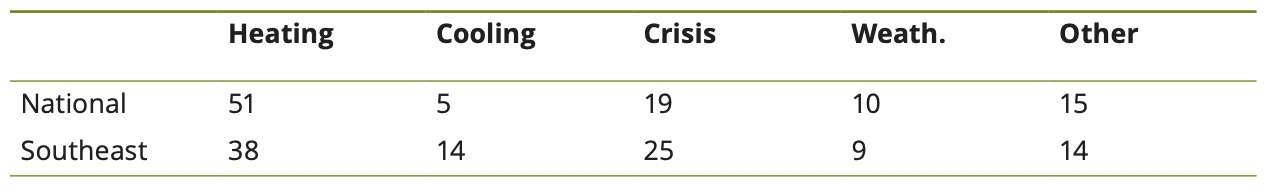

LIHEAP Formula Funding Favors Heating Assistance

However, LIHEAP funds have not been sufficiently used for cooling assistance. Nationally, from 2001 to 2019, just 5% of all LIHEAP funds were used for cooling assistance, with heating receiving ten times more funding than cooling. Even among states in the Southeast, just 14% of the budget was for cooling assistance. In 2019, only 21 states opted to provide cooling assistance, compared to the 49 that allocated funding to weatherization and 26 that used LIHEAP for energy education and supplemental energy efficiency programs.

Some states with the highest heat risk — such as Missouri, Nevada, North Carolina, and Utah — offer no cooling assistance funds from LIHEAP. Despite their warm climates, Arizona, Arkansas, Florida, and Hawai’i all limit LIHEAP cooling assistance per household to less than half the available heating assistance benefit.

Prior efforts to encourage the use of LIHEAP for cooling have not produced a sufficient shift in how this landmark funding source is used. LIHEAP was originally developed to provide home heating assistance at a time when winters were more severe and summers less searing. State LIHEAP administrators have used the vast majority of their budgets during the heating season, and many have viewed cooling assistance as a luxury. That view persists despite the extreme heat events of recent summers, including temperatures topping 115°F in the Pacific Northwest in 2021 and 31 consecutive days of highs of 110°F or more in Phoenix in 2023.

With many households still struggling to pay their winter bills, LIHEAP administrators may be reluctant to shift allocations away from heating assistance, which already cannot serve even 50% of eligible households. States that do not set aside dedicated cooling assistance funds frequently exhaust the LIHEAP allocation they receive in October well before summer arrives. Crisis funds could in theory support families during heat waves, but they are typically depleted by high crisis demand in winter.

The majority of LIHEAP allocations are based on a formula rooted in a state’s low-income population, energy costs, and the severity of its winter climate (its heating degree days). The formula’s residential energy cost component may reflect cooling degree days, but it does not account for variation in electricity prices, and the formula gives no weight to population sensitivity (e.g., age) or to the adaptive capacity measures included in many Heat Vulnerability Indices (HVIs).
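The heating bias can be illustrated with degree days, which sum how far each day’s mean temperature falls below (heating) or rises above (cooling) a base temperature, conventionally 65°F. The sketch below is a minimal illustration under stated assumptions: the two state climates are invented and drastically simplified, and the `cooling_share` blend is a hypothetical alternative weighting, not the statutory LIHEAP formula.

```python
BASE_F = 65.0  # conventional degree-day base temperature, °F

def degree_days(daily_mean_temps_f):
    """Return (heating_degree_days, cooling_degree_days) for daily mean temperatures."""
    hdd = sum(max(0.0, BASE_F - t) for t in daily_mean_temps_f)
    cdd = sum(max(0.0, t - BASE_F) for t in daily_mean_temps_f)
    return hdd, cdd

def climate_weights(states, cooling_share=0.0):
    """Each state's share of a climate-driven pot.

    cooling_share=0 mimics a heating-only weight; larger values give cooling
    degree days more influence (a hypothetical blend, not the statute).
    """
    scores = {}
    for name, temps in states.items():
        hdd, cdd = degree_days(temps)
        scores[name] = (1 - cooling_share) * hdd + cooling_share * cdd
    total = sum(scores.values())
    return {name: score / total for name, score in scores.items()}

# Invented, highly simplified climates: 180 cool-season days, 185 warm-season days.
cold_state = [35.0] * 180 + [72.0] * 185
hot_state = [55.0] * 180 + [90.0] * 185
states = {"cold": cold_state, "hot": hot_state}

print(climate_weights(states))       # {'cold': 0.75, 'hot': 0.25}
print(climate_weights(states, 0.5))  # roughly even split
```

Under the heating-only weight, the hot state receives a quarter of the climate-driven share even though its cooling load is far larger than the cold state’s; shifting half the weight to cooling degree days brings the two close to parity.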

LIHEAP’s website on extreme heat points to the 2021 “heat dome” in the Pacific Northwest, during which daily hospitalizations were 69 times higher than in the same week in 2020. In Washington state alone, 441 people died. The event, previously thought to be a 1-in-1,000-year occurrence, could recur every 5 to 10 years with just 2℃ of global warming (2023 was 1.35℃ warmer than the preindustrial average).

With advance forecasts, LIHEAP could be deployed both to restore disconnected electric service and to pay extreme energy bills, which may climb even higher as demand response pricing spreads. In Michigan, for example, DTE Energy offers a dynamic peak pricing rate with critical peak prices of $1.03 per kWh, eight times its off-peak rate. Peak electricity demand for continuous cooling, combined with time-of-use rate structures, is a recipe for exorbitant bills that low-income customers cannot afford.
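A rough daily bill comparison illustrates the point. This sketch assumes a household that runs air conditioning heavily through the afternoon and evening of a heat-wave day. Only the $1.03 per kWh critical peak price comes from the text; the off-peak price is approximated as one-eighth of that figure, and the load profile, the four-hour peak window, and the flat comparison rate are hypothetical values chosen for illustration, not DTE’s actual tariff terms.

```python
CRITICAL_PEAK_RATE = 1.03               # $/kWh, critical peak price cited in the text
OFF_PEAK_RATE = CRITICAL_PEAK_RATE / 8  # approximation based on "eight times higher"
FLAT_RATE = 0.18                        # hypothetical flat residential rate, $/kWh

def daily_cost(hourly_kwh, peak_hours, peak_rate, off_peak_rate):
    """Cost of one day's usage, billing hours in peak_hours at peak_rate."""
    return sum(
        kwh * (peak_rate if hour in peak_hours else off_peak_rate)
        for hour, kwh in enumerate(hourly_kwh)
    )

# Hypothetical heat-wave load profile: 1 kWh per hour baseline, 3 kWh per hour
# while the air conditioner runs hard from 2 p.m. to 10 p.m. (hours 14-21).
usage = [1.0] * 24
for hour in range(14, 22):
    usage[hour] = 3.0

peak_window = set(range(15, 19))  # hypothetical 3 p.m. to 7 p.m. critical peak event

dynamic = daily_cost(usage, peak_window, CRITICAL_PEAK_RATE, OFF_PEAK_RATE)
flat = sum(usage) * FLAT_RATE
print(f"dynamic peak pricing: ${dynamic:.2f}/day; flat rate: ${flat:.2f}/day")
```

Under these assumptions the dynamic-rate bill for the day is more than twice the flat-rate bill, and more than three-quarters of it comes from the four critical peak hours.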

The risk to vulnerable households from the absence of cooling assistance is compounded by weak disconnection protections during extreme heat. Forty-one states offer disconnection protections during cold weather, compared to just 20 that prevent utilities from disconnecting households during extreme heat. Heat protections often kick in only when a specific temperature is reached (e.g., 95°F) or only when the National Weather Service issues a particular alert, leaving households uncertain about their status.
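Writing the trigger logic down makes the uncertainty concrete. The sketch below encodes the two rule types described above, a fixed temperature threshold and an alert-based trigger, as a hypothetical `HeatProtectionRule` class; the 95°F threshold and the alert names are illustrative assumptions, and real state rules differ in detail (for example, in whether they use forecast or observed temperatures).

```python
from dataclasses import dataclass, field
from typing import FrozenSet, Optional

@dataclass(frozen=True)
class HeatProtectionRule:
    """Hypothetical model of a state's extreme-heat disconnection protection."""
    temp_threshold_f: Optional[float] = None  # e.g., 95.0; None if not temperature-based
    qualifying_alerts: FrozenSet[str] = field(default_factory=frozenset)

    def protects(self, forecast_high_f: float, active_alerts: FrozenSet[str]) -> bool:
        """True if this rule would bar a disconnection on the given day."""
        by_temperature = (
            self.temp_threshold_f is not None
            and forecast_high_f >= self.temp_threshold_f
        )
        by_alert = bool(self.qualifying_alerts & active_alerts)
        return by_temperature or by_alert

threshold_rule = HeatProtectionRule(temp_threshold_f=95.0)
alert_rule = HeatProtectionRule(qualifying_alerts=frozenset({"Excessive Heat Warning"}))

# A 94°F day under a heat advisory: neither hypothetical rule protects the household.
print(threshold_rule.protects(94.0, frozenset({"Heat Advisory"})))  # False
print(alert_rule.protects(94.0, frozenset({"Heat Advisory"})))      # False
```

Under the alert-only rule, even a 120°F day offers no protection unless the specific alert is issued.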

In the U.S. Census Bureau’s biweekly Household Pulse Survey (June 28 to July 10, 2023, the most recent period of extreme heat nationally), 58.5% of respondents reported keeping their home at an uncomfortable or unsafe temperature in at least some months of the last year, with 18.7% reporting such temperatures almost every month. Households that spend more of their income on energy bills let indoor temperatures rise up to 7.5°F higher than higher-income households do before turning on air conditioning, sharply increasing their risk of heat stress.

Plan of Action

Recommendation 1. Maximize LIHEAP funding for cooling assistance

Congressional mandate – Congress could require states to use a specific percentage of their LIHEAP allocations for cooling. This percentage could be derived from FEMA’s National Risk Index (NRI), which accounts for exposure to extreme heat, the vulnerability of the population, and adaptive capacity, such as civic resources for emergency response. First Street’s Heat Factor and the U.S. Census Bureau’s Community Resilience Estimates for Heat offer more granular estimates of heat vulnerability.
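One way to derive such a percentage is to map a state’s heat risk onto a bounded set-aside. The sketch below assumes a 0 to 100 percentile-style heat risk input (using NRI scores this way is an assumption), and the 5% floor, 40% cap, and linear mapping in the hypothetical `cooling_set_aside` function are illustrative parameters, not an existing FEMA or HHS method.

```python
def cooling_set_aside(heat_risk_percentile: float,
                      floor: float = 0.05,
                      cap: float = 0.40) -> float:
    """Map a 0-100 heat risk percentile to a minimum cooling share of LIHEAP.

    Linear interpolation between a hypothetical floor (lowest-risk state) and a
    hypothetical cap (highest-risk state); out-of-range inputs are clamped.
    """
    p = min(max(heat_risk_percentile, 0.0), 100.0) / 100.0
    return floor + p * (cap - floor)

# Example: a hypothetical state with a $50 million allocation at the 80th percentile.
allocation = 50_000_000
share = cooling_set_aside(80)
print(f"minimum cooling set-aside: {share:.1%} (${allocation * share:,.0f})")
# minimum cooling set-aside: 33.0% ($16,500,000)
```

A floor keeps every state offering some cooling assistance, while the cap leaves room for heating needs even in the hottest states.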

Incentives – LIHEAP regularly receives supplemental federal allocations (e.g., through the CARES Act); HHS could use these as a pool of matching funds to encourage states to leverage non-federal dollars for cooling assistance. States also have an important role to play in leveraging both public and utility funds to match and expand the impact of LIHEAP.
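A matching pool could work as a capped match. In the sketch below, a hypothetical `match_awards` function lets each state’s non-federal cooling pledge draw federal supplemental funds at a fixed ratio up to a per-state cap, and scales all awards down proportionally if requests exceed the pool. The 1:1 ratio, the cap, the pool size, and the pledges are assumptions for illustration, not HHS policy.

```python
def match_awards(nonfederal_pledges, pool, match_ratio=1.0, per_state_cap=None):
    """Allocate a federal matching pool against states' non-federal cooling pledges.

    Each state requests match_ratio * pledge, optionally capped per state. If total
    requests exceed the pool, every award is scaled down by the same factor.
    """
    requests = {}
    for state, pledge in nonfederal_pledges.items():
        request = pledge * match_ratio
        if per_state_cap is not None:
            request = min(request, per_state_cap)
        requests[state] = request
    total = sum(requests.values())
    scale = 1.0 if total <= pool else pool / total
    return {state: request * scale for state, request in requests.items()}

# Hypothetical pledges (dollars), a $9 million pool, 1:1 match, $5 million cap.
pledges = {"A": 4_000_000, "B": 2_000_000, "C": 6_000_000}
awards = match_awards(pledges, pool=9_000_000, per_state_cap=5_000_000)
print({state: round(amount) for state, amount in awards.items()})
# {'A': 3272727, 'B': 1636364, 'C': 4090909}
```

Proportional scaling keeps the incentive intact for every participating state when the pool is oversubscribed; a first-come rule would instead reward the fastest applicants.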

Emergency funds – HHS should release Emergency Contingency Funds to address extreme heat. In the 1990s and 2000s, these funds were used regularly, sometimes exceeding $700 million per year, to direct cooling assistance to extreme heat events; they could also be used to provide cooling measures such as fans, air conditioners, and insulation. Although still authorized, these funds have not been appropriated by Congress or disbursed since 2011.

Report back to state LIHEAP administrators – Peer comparisons can be a powerful source of information and motivation. A 1990s study that used a “targeting index” to measure the share of LIHEAP assistance delivered to elderly households revealed stark differences between Arizona, where elderly households were underrepresented among recipients, and Texas, where they were represented above their share of the population. An annual dashboard showing how a state’s cooling allocations compare with those of its close peers (e.g., other states in its EPA region) is a low-effort step that could produce significant shifts in state plans and community outreach.
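A common form of targeting index, and the one assumed here, divides a group’s share of assisted households by its share of eligible households and multiplies by 100, so that 100 means proportional service and lower values mean underrepresentation. The sketch below applies that ratio to elderly households receiving cooling assistance and groups states by EPA region for a peer dashboard; the state names, shares, and region assignments are invented.

```python
from collections import defaultdict

def targeting_index(assisted_share: float, eligible_share: float) -> float:
    """Share among recipients / share among eligible households, times 100."""
    return 100.0 * assisted_share / eligible_share

# Invented inputs: share of cooling-assistance recipients with a member aged 60+,
# and that group's share among LIHEAP-eligible households.
states = {
    "State A": {"epa_region": 9, "assisted": 0.22, "eligible": 0.40},
    "State B": {"epa_region": 9, "assisted": 0.45, "eligible": 0.38},
    "State C": {"epa_region": 9, "assisted": 0.30, "eligible": 0.30},
    "State D": {"epa_region": 6, "assisted": 0.50, "eligible": 0.35},
}

def peer_dashboard(states):
    """Group states by EPA region, sorted from lowest to highest targeting index."""
    by_region = defaultdict(list)
    for name, d in states.items():
        index = targeting_index(d["assisted"], d["eligible"])
        by_region[d["epa_region"]].append((index, name))
    return {region: sorted(rows) for region, rows in by_region.items()}

for region, rows in sorted(peer_dashboard(states).items()):
    print(f"EPA Region {region}")
    for index, name in rows:
        print(f"  {name}: {index:.0f}")
```

In this invented data, State A would see at a glance that it serves elderly households at roughly half their eligible share while a regional peer serves them above parity.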

Expand outreach and education to state LIHEAP administrators and subgrantees – Memos from 2016 and 2021 were insufficient to prompt many state and local budget shifts. Communications should emphasize that extreme heat carries a significantly greater risk of fatalities than extreme cold. One study simulated indoor temperatures in Phoenix during the 2006 heat wave and found that by day two, temperatures in single-story homes would peak at 115°F (46°C). Another study projected that a five-day heat wave on the order of the record July 2023 temperatures, coinciding with a blackout, would produce a fatality rate of roughly 1% in Phoenix; applied to the city’s population of about 1.6 million, that would mean well over 10,000 deaths.

Recommendation 2. Maximize LIHEAP distribution to the most vulnerable households

While LIHEAP providers do collect significant household data through their intake forms, most states do not have firm guidelines on which households to distribute LIHEAP funds to and use a modified first-come, first-served approach. A small number of questions specific to heat risk could be added to LIHEAP applications and used to generate a household heat vulnerability score (raw or percentile). A list of 10 potential sensitivity factors to assess is included in the Frequently Asked Questions.
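A household score could be as simple as a weighted count of yes/no intake answers, converted to a percentile within the applicant pool. The factor names and weights in the hypothetical `WEIGHTS` table below loosely echo factors listed in the Frequently Asked Questions (age, heat-sensitive medical conditions, lack of working air conditioning), but they are placeholders, not a validated index.

```python
from bisect import bisect_right

# Hypothetical intake questions and weights; a real index would need validation.
WEIGHTS = {
    "member_over_70": 2.0,
    "member_under_2": 1.5,
    "heat_sensitive_condition": 2.0,  # e.g., diabetes
    "no_working_ac": 2.5,
    "prior_heat_illness": 1.5,
    "keeps_home_unsafe_temp": 1.0,
}

def raw_score(answers: dict) -> float:
    """Sum the weights of every factor the household answered 'yes' to."""
    return sum((w for factor, w in WEIGHTS.items() if answers.get(factor, False)), 0.0)

def percentile_scores(raw_scores: list) -> list:
    """Percentile rank of each score: share of applicants scoring at or below it."""
    ordered = sorted(raw_scores)
    n = len(ordered)
    return [100.0 * bisect_right(ordered, s) / n for s in raw_scores]

applicants = [
    {"member_over_70": True, "no_working_ac": True},
    {"member_under_2": True},
    {},
    {"heat_sensitive_condition": True, "no_working_ac": True, "prior_heat_illness": True},
]
raws = [raw_score(a) for a in applicants]
print(raws)                     # [4.5, 1.5, 0.0, 6.0]
print(percentile_scores(raws))  # [75.0, 50.0, 25.0, 100.0]
```

Reporting both the raw score and the percentile lets administrators set absolute eligibility thresholds or rank applicants within a funding cycle.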

In partnership with extreme heat experts, the U.S. Digital Service could support the development of an algorithm to assess each household’s likelihood of experiencing acute heat stress and to identify the optimal uses of LIHEAP funding to mitigate these threats, both for individual households and portfolio-wide.
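One simple portfolio-level approach, shown only as a sketch, is a greedy heuristic: estimate how much each candidate intervention would reduce a household’s risk of acute heat stress, then fund interventions in order of risk reduction per dollar until the budget runs out, with at most one intervention per household. The `Option` records, costs, and risk-reduction estimates are invented; producing credible estimates is where heat experts would be needed, and an exact optimizer such as integer programming could replace the greedy rule.

```python
from typing import List, NamedTuple

class Option(NamedTuple):
    household: str
    intervention: str
    cost: float            # dollars
    risk_reduction: float  # hypothetical drop in probability of acute heat stress

def greedy_allocate(options: List[Option], budget: float) -> List[Option]:
    """Fund options by risk reduction per dollar; at most one option per household."""
    funded, served, remaining = [], set(), budget
    for opt in sorted(options, key=lambda o: o.risk_reduction / o.cost, reverse=True):
        if opt.household in served or opt.cost > remaining:
            continue
        funded.append(opt)
        served.add(opt.household)
        remaining -= opt.cost
    return funded

options = [
    Option("H1", "window AC unit", 450.0, 0.09),
    Option("H1", "summer bill credit", 300.0, 0.03),
    Option("H2", "reconnect service", 250.0, 0.08),
    Option("H3", "fan + education", 60.0, 0.01),
    Option("H3", "window AC unit", 450.0, 0.05),
]
for opt in greedy_allocate(options, budget=800.0):
    print(opt.household, opt.intervention, opt.cost)
```

With an $800 budget, this sketch funds reconnection for H2, an air conditioner for H1, and a fan for H3. Greedy selection is fast and transparent but is not guaranteed to find the best possible portfolio.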

Existing data from the Community Resilience Estimates for Heat (CRE) by the U.S. Census shows that more than two-thirds of extreme heat vulnerability is concentrated in just 1.5% of U.S. census tracts across 10 states. Even a small amount of LIHEAP cooling assistance, if effectively targeted, could dramatically reduce the risk of heat stroke and death.

Recommendation 3. Congressional Amendments to the LIHEAP Statute

Congress has revisited the LIHEAP formula in the past and should consider revising it to elevate the role of cooling assistance and disconnection prevention during extreme heat, as Rep. Watson Coleman (D-NJ) proposed in the Stay Cool Act, introduced in 2022. Because the climate has changed dramatically since LIHEAP’s inception and will continue to change in coming decades, Congress could peg an updated formula to an HVI as a national standard so that shifts in climate and population are automatically reflected in the annual LIHEAP formula. It could also update household data collection requirements under Section 2605(c)(1)(G) of the LIHEAP statute.
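A formula pegged to an HVI could, for example, blend each state’s share of low-income households with its share of heat-vulnerable households, so that allocations update automatically as either input changes. The hypothetical `blended_allocation` function, its blend weight, and the state figures below are illustrative assumptions, not the current statutory formula or the text of the Stay Cool Act.

```python
def blended_allocation(total_funds, low_income_hh, heat_vulnerable_hh, heat_weight=0.3):
    """Split total_funds using a weighted mix of two household shares.

    low_income_hh, heat_vulnerable_hh: dicts of state -> household counts.
    heat_weight: hypothetical weight on the HVI-based share (0 = ignore heat).
    """
    li_total = sum(low_income_hh.values())
    hv_total = sum(heat_vulnerable_hh.values())
    return {
        state: total_funds * ((1 - heat_weight) * low_income_hh[state] / li_total
                              + heat_weight * heat_vulnerable_hh[state] / hv_total)
        for state in low_income_hh
    }

low_income = {"North": 600_000, "South": 400_000}
heat_vulnerable = {"North": 50_000, "South": 150_000}
for weight in (0.0, 0.3):
    alloc = blended_allocation(100_000_000, low_income, heat_vulnerable, weight)
    print(weight, {state: round(amount) for state, amount in alloc.items()})
# 0.0 {'North': 60000000, 'South': 40000000}
# 0.3 {'North': 49500000, 'South': 50500000}
```

Because both shares are recomputed from current data each year, a change in either population or heat vulnerability flows into the allocation without further legislation.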

Conclusion

The number of heat-related deaths continues to rise in the U.S. In the long term, a multipronged strategy that increases funding for energy efficiency improvements, distributed generation and storage, and bill assistance is needed. But in the near term, it is critically important to work with existing resources and maximize the value of LIHEAP to mitigate the pressures of extreme heat.

Despite occasional increases, annual LIHEAP allocations have not kept pace with accelerating demand. The number of households eligible for LIHEAP has grown four times faster than available funding, and the share of eligible households served has declined from 36% to 16%. As utility bills outpace inflation, per-household LIHEAP benefits have increased without a corresponding increase in the overall allocation, so the same budget reaches fewer households.

States have broad discretion on how to use LIHEAP. Overcoming the inertia of budgets dominated by heating assistance is likely to require significant advocacy, both top-down from the federal government and bottom-up from grassroots community organizations that share concerns about vulnerability to extreme heat.

This idea of merit originated from our Extreme Heat Ideas Challenge. Scientific and technical experts across disciplines worked with FAS to develop potential solutions in various realms: infrastructure and the built environment, workforce safety and development, public health, food security and resilience, emergency planning and response, and data indices. Review ideas to combat extreme heat here.

The process to develop household heat risk assessments and performance reporting could entail:

1) Design a method for assigning household heat risk scores. Scores might consider the risk of mortality, the risk of heat illness requiring medical attention or hospitalization, and the risk of chronic impacts (e.g., declines in cognition and sleep quality) from sustained low-to-moderate exposure to excessive heat.

Current data: LIHEAP reporting by each state’s lead agency already includes some data that can be used to analyze how funds are prioritized to mitigate extreme heat risks:

- Cooling assistance (dollars; number of households served)

- Weatherization (funded through LIHEAP)

- Assistance distributed to households with a vulnerable person (under age 5, over age 60, or a person with a disability)

- Demographic variables (e.g., race and ethnicity)

- Housing variables, namely occupancy status and, for renters, whether utility bills are included in rent

- Disconnections

Proposed data collection:

- Keeping house at an unsafe temperature Medical conditions associated with heat stress (e.g., diabetes)

- Prior experience of heat stress

- Additional age distinctions (children under 2, adults over 70, over 80, over 90)

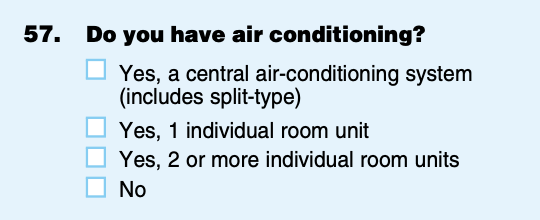

- Presence and adequacy of cooling systems Housing age and type (e.g., masonry, duplex)

- Electricity cost Solar exposure (provided by Google’s Project Sunroof)

- Urban heat island effect

- Employment

- Personal behaviors

2) Design a process so that existing program resources are distributed among the most vulnerable households, as determined by their individual heat risk scores. LIHEAP administrators might decide to spend 25% of cooling assistance funds among the most vulnerable 10% of LIHEAP applicants, for instance.
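The example rule in step 2, reserving 25% of cooling funds for the most vulnerable 10% of applicants, can be sketched as a two-tier split. The hypothetical `tiered_benefits` function divides each tier’s funds equally, which is a simplifying assumption; the applicant scores and budget are invented.

```python
def tiered_benefits(scores: dict, budget: float,
                    top_fraction: float = 0.10,
                    reserved_share: float = 0.25) -> dict:
    """Reserve part of a cooling budget for the highest-scoring applicants.

    scores: applicant id -> heat vulnerability score (higher = more vulnerable).
    The top round(top_fraction * n) applicants (at least one) split
    reserved_share of the budget equally; all others split the remainder equally.
    Ties at the cutoff are broken by input order.
    """
    ranked = sorted(scores, key=scores.get, reverse=True)
    n_top = max(1, round(top_fraction * len(ranked)))
    top, rest = ranked[:n_top], ranked[n_top:]
    benefits = {a: reserved_share * budget / len(top) for a in top}
    if rest:
        benefits.update({a: (1 - reserved_share) * budget / len(rest) for a in rest})
    return benefits

# Hypothetical pool of 20 applicants with scores 0-19 and a $10,000 budget.
scores = {f"A{i}": float(i) for i in range(20)}
benefits = tiered_benefits(scores, 10_000.0)
print(benefits["A19"], round(benefits["A0"], 2))  # 1250.0 416.67
```

Here the two highest-scoring applicants each receive $1,250, about three times the benefit of the other 18, while every applicant still receives some assistance.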

Yes. The Community Resilience Estimates for Heat (CRE) use American Community Survey data to determine the number of vulnerability factors a household has, down to census tract resolution. The tool sorts census tracts by the number of households with zero vulnerabilities, 1–2 vulnerabilities, and 3 or more vulnerabilities. Of 73,060 census tracts, just 1,616 (2.2%) have a majority of households with three or more heat vulnerabilities. The CRE for Heat uses 10 binary risk factors.
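The CRE grouping can be mimicked on household-level records: count each household’s binary risk factors, bin the counts as 0, 1–2, or 3+, and flag tracts where a majority of households fall in the 3+ bin. The records below are invented, and the published CRE estimates involve statistical modeling beyond this simple count, so the sketch only illustrates the binning logic.

```python
from collections import Counter, defaultdict

def risk_bin(n_factors: int) -> str:
    """Bin a household's count of binary risk factors into 0, 1-2, or 3+."""
    if n_factors == 0:
        return "0"
    return "1-2" if n_factors <= 2 else "3+"

def majority_high_risk_tracts(households):
    """households: iterable of (tract_id, list_of_bool_risk_factors).

    Returns tract ids where more than half of households have 3+ risk factors.
    """
    bins_by_tract = defaultdict(Counter)
    for tract, factors in households:
        bins_by_tract[tract][risk_bin(sum(factors))] += 1
    return sorted(
        tract for tract, bins in bins_by_tract.items()
        if bins["3+"] > sum(bins.values()) / 2
    )

# Invented example with 10 binary factors per household.
sample = [
    ("tract-1", [True, True, True] + [False] * 7),
    ("tract-1", [True] * 4 + [False] * 6),
    ("tract-1", [False] * 10),
    ("tract-2", [True] + [False] * 9),
    ("tract-2", [True, True, True] + [False] * 7),
]
print(majority_high_risk_tracts(sample))  # ['tract-1']
print(f"{1616 / 73060:.1%}")              # 2.2%
```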

Enhancing Public Health Preparedness for Climate Change-Related Health Impacts

The escalating frequency and intensity of extreme heat events, exacerbated by climate change, pose a significant and growing threat to public health. This problem is further compounded by the lack of standardized education and preparedness measures within the healthcare system, creating a critical gap in addressing the health impacts of extreme heat. The Department of Health and Human Services (HHS), especially the Centers for Medicare & Medicaid Services (CMS), the Health Resources and Services Administration (HRSA), and the Office of Climate Change and Health Equity (OCCHE) can enhance public health preparedness for the health impacts of climate change. By leveraging funding mechanisms, incentives, and requirements, HHS can strengthen health system preparedness, improve health provider knowledge, and optimize emergency response capabilities.

By focusing on interagency collaboration, medical education, and strategic measures within HHS, the healthcare system can strengthen its resilience against the health impacts of extreme heat events. These steps will improve coding accuracy, enhance healthcare provider knowledge, streamline emergency response efforts, and ultimately mitigate the health disparities arising from climate change-induced extreme heat events. Key recommendations include: establishing dedicated grant programs and incentivizing climate-competent healthcare providers; integrating climate-resilience metrics into quality measurement programs; leveraging the Health Information Technology for Economic and Clinical Health (HITECH) Act to enhance ICD-10 coding education; and collaborating with other federal agencies such as the Department of Veterans Affairs (VA), the Federal Emergency Management Agency (FEMA), and the Department of Defense (DoD) to ensure a coordinated response. The implementation of these recommendations will not only address the evolving health impacts of climate change but also promote a more resilient and prepared healthcare system for the future.

Challenge

As documented in reports from the Intergovernmental Panel on Climate Change (IPCC) and the National Climate Assessment, the scientific consensus is that as extreme heat events grow more frequent and intense, vulnerable populations, such as children, pregnant people, the elderly, and marginalized communities including people of color and Indigenous populations, experience disproportionately higher rates of heat-related illness and mortality. The Lancet Countdown’s 2023 U.S. Brief underscores the escalating threat that fossil fuel pollution and climate change pose to health, highlighting an 88% increase in heat-related mortality among older adults and calling for urgent, equitable climate action to mitigate this public health crisis.

Inadequacies in Current Healthcare System Response

Reports from healthcare institutions and public health agencies highlight how current coding practices contribute to the under-recognition of heat-related health impacts in vulnerable populations, exacerbating existing health disparities. The current inadequacies in ICD-10 coding for extreme heat-related health cases hinder effective healthcare delivery, compromise data accuracy, and impede the development of targeted response strategies. Challenges in coding accuracy are evident in existing studies and reports, emphasizing the difficulties healthcare providers face in accurately documenting extreme heat-related health cases. An analysis of emergency room visits during heat waves further indicates a gap in recognition and coding, pointing to the need for improved medical education and coding practices. Audits of healthcare coding practices reveal inconsistencies and inaccuracies that stem from a lack of standardized medical education and preparedness measures, ultimately leading to underreporting and misclassification of extreme heat cases. Comparative analyses of health data from regions with robust coding practices and those without highlight the disparities in data accuracy, emphasizing the urgent need for standardized coding protocols.

There is a crucial opportunity to enhance public health preparedness by addressing the challenges associated with accurate ICD-10 coding in extreme heat-related health cases. Reports from government agencies and economic research institutions underscore the economic toll of extreme heat events on healthcare systems, including increased healthcare costs, emergency room visits, and lost productivity due to heat-related illnesses. Data from social vulnerability indices and community-level assessments emphasize the disproportionate impact of extreme heat on socially vulnerable populations, highlighting the urgent need for targeted policies to address health disparities.

Opportunity

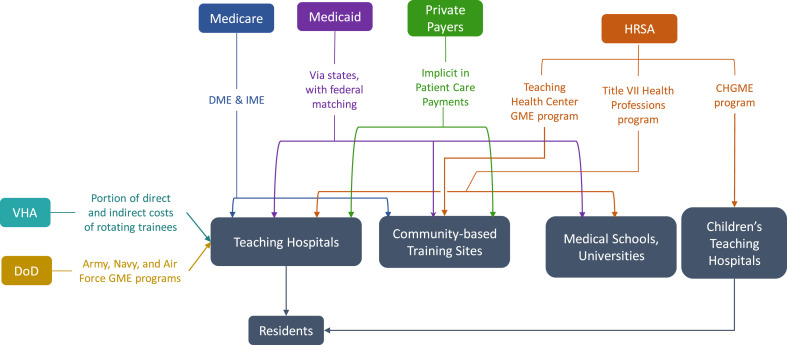

Medicare is the largest federal source of Graduate Medical Education (GME) funding (Figure 1), and two HHS components, the Centers for Medicare & Medicaid Services (CMS) and the National Center for Health Statistics (NCHS), play a critical role in developing ICD-10 coding guidelines. HHS, CMS, and other pertinent coordinating agencies therefore need to be involved in developing climate change-informed graduate medical curricula.

By focusing on medical education enhancement, strategic measures within HHS, and fostering interagency collaboration, the healthcare system can strengthen its resilience against the health impacts of extreme heat events. Improving coding accuracy, enhancing healthcare provider knowledge, streamlining emergency response efforts, and mitigating health disparities related to extreme heat events will ultimately strengthen the healthcare system and foster more effective, inclusive, and equitable climate and health policies. Improving the knowledge and training of healthcare providers empowers them to respond more effectively to extreme heat-related health cases. This immediate response capability contributes to the overarching goal of reducing morbidity and mortality rates associated with extreme heat events and creates a public health system that is more resilient and prepared for emerging challenges.

Incorporating ICD-10 coding education into CMS-funded graduate medical education aligns with the precedent set by the Pandemic and All-Hazards Preparedness Act (PAHPA), which emphasizes preparedness for and response to public health emergencies. Similarly, the Health Information Technology for Economic and Clinical Health (HITECH) Act, which promotes the adoption of electronic health record (EHR) systems, offers a model for modernizing medical education and seamlessly integrating climate-related health considerations. This collaborative, forward-looking approach recognizes the interconnectedness of health and climate and can be applied to other health challenges. Together, the mandates of PAHPA and the HITECH Act serve as policy precedents for guiding the healthcare system toward a more adaptive and proactive stance on the health impacts of climate change.

Conversely, the consequences of inaction on the health impacts of extreme heat extend beyond immediate health concerns. They ripple through society, widening health disparities, compromising the accuracy of health data, and undermining emergency response preparedness. Addressing these challenges requires a proactive and comprehensive approach to ensure the well-being of communities, especially those most vulnerable to extreme heat.

Plan of Action

The following recommendations aim to facilitate public health preparedness for extreme heat events through enhancements in medical education, strategic measures within the Department of Health and Human Services (HHS), and fostering interagency collaboration.

Recommendation 1a. Integrate extreme heat training into the GME curriculum.

Integrating modules on extreme heat-related health impacts and accurate ICD-10 coding into medical education curricula is essential for preparing future healthcare professionals to address the challenges posed by climate change. This initiative will ensure that medical trainees receive comprehensive training in identifying, treating, and documenting extreme heat-related health cases. Section 304 of PAHPA (Core Education and Training) provides a policy precedent for developing foundational health and medical response curricula and training materials by modifying relevant existing programs to improve responses to public health emergencies. Given Medicare’s prominence in funding residency training, changes to Medicare GME policy can shape the future physician supply and can be used to address identified healthcare workforce priorities related to extreme heat (Figure 2).

Figure 2: A model for comprehensive climate and medical education (adapted from Jowell et al. 2023)

Recommendation 1b. Collaborate with Veterans Health Administration Training Programs.

Partnering with the Department of Veterans Affairs (VA) to extend climate-related health coding education to Veterans Health Administration (VHA) training programs will enhance the preparedness of healthcare professionals within the VHA system to manage and document extreme heat-related health cases among veteran populations.

Recommendation 2. Collaborate with the Agency for Healthcare Research and Quality (AHRQ)

Establishing a collaborative research initiative with the Agency for Healthcare Research and Quality (AHRQ) will facilitate the in-depth exploration of accurate ICD-10 coding for extreme heat-related health cases. This should be accomplished through the following measures:

Establish joint task forces. CMS, NCHS, and AHRQ should establish joint research initiatives focused on improving ICD-10 coding accuracy for extreme heat-related health cases. This collaboration will involve identifying key research areas, allocating resources, and coordinating research activities. Personnel from each agency, joined by subject matter experts and researchers from the EPA, NOAA, and FEMA, will work together to conduct studies, analyze data, and publish findings. By conducting systematic reviews, developing standardized coding algorithms, and disseminating findings through AHRQ’s established communication channels, this initiative will improve coding practices and enhance healthcare system preparedness for extreme heat events.

Develop standardized coding algorithms. AHRQ, in collaboration with CMS and NCHS, will lead efforts to develop standardized coding algorithms for extreme heat-related health outcomes. This involves reviewing existing coding practices, identifying gaps and inconsistencies, and developing standardized algorithms to ensure consistent and accurate coding across healthcare settings. AHRQ researchers and coding experts will work closely with personnel from CMS and NCHS to draft, validate, and disseminate these algorithms.
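A standardized coding algorithm would likely start from an explicit case definition. The sketch below assumes one for illustration: an encounter counts as heat-related if any code falls under ICD-10-CM category T67 (effects of heat and light) or external-cause category X30 (exposure to excessive natural heat), while W92 (exposure to excessive heat of man-made origin) is tracked separately so it can be excluded from climate-related counts. The category choices, precedence order, and the hypothetical `classify_encounter` function are assumptions; any adopted definition would come out of the AHRQ, CMS, and NCHS process described above.

```python
from typing import Iterable

# Assumed case definition, for illustration only.
HEAT_DIAGNOSIS_PREFIXES = ("T67",)       # Effects of heat and light
NATURAL_HEAT_CAUSE_PREFIXES = ("X30",)   # Exposure to excessive natural heat
MANMADE_HEAT_CAUSE_PREFIXES = ("W92",)   # Exposure to excessive heat of man-made origin

def normalize(code: str) -> str:
    """Uppercase and drop the dot so 't67.5xxa' and 'T675XXA' compare equal."""
    return code.replace(".", "").strip().upper()

def classify_encounter(codes: Iterable[str]) -> str:
    """Return 'heat_natural', 'heat_manmade', 'heat_unspecified', or 'not_heat'."""
    norm = [normalize(c) for c in codes]
    if any(c.startswith(NATURAL_HEAT_CAUSE_PREFIXES) for c in norm):
        return "heat_natural"
    if any(c.startswith(MANMADE_HEAT_CAUSE_PREFIXES) for c in norm):
        return "heat_manmade"
    if any(c.startswith(HEAT_DIAGNOSIS_PREFIXES) for c in norm):
        return "heat_unspecified"  # heat diagnosis without an external-cause code
    return "not_heat"

print(classify_encounter(["T67.5XXA", "X30.XXXA"]))  # heat_natural
print(classify_encounter(["E86.0", "X30.XXXA"]))     # heat_natural
print(classify_encounter(["T67.5XXA", "W92.XXXA"]))  # heat_manmade
print(classify_encounter(["T67.5XXA"]))              # heat_unspecified
print(classify_encounter(["J45.909"]))               # not_heat
```

Counting the "heat_unspecified" bucket separately shows how often providers document a heat diagnosis without the external-cause code that links it to environmental exposure, which is one of the coding gaps described above.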

Integrate into Continuous Quality Improvement (CQI) programs. Establish collaborative partnerships between the VA and other federal healthcare agencies, including CMS, HRSA, and DoD, to integrate education on ICD-10 coding for extreme heat-related health outcomes into CQI programs. Regularly assess the effectiveness of training initiatives and adjust them based on feedback from healthcare providers. For example, CMS already requires screening for social determinants of health in some of its quality programs and could add climate and/or heat risk to that screening assessment.

Allocate resources. Each agency will allocate financial resources, staff time, and technical expertise to support collaborative activities. Budget allocations will be based on the scope and scale of specific initiatives, with funds earmarked for research, training, data sharing, and evaluation efforts. Additionally, research funding provided through Titles VII and VIII of the Public Health Service Act (PHSA) can support studies evaluating the effectiveness of educational interventions on climate-related health knowledge and practice behaviors among healthcare providers.

Recommendation 3. Leverage the HITECH Act and EHR.

Recommendation 4. Establish climate-resilient health system grants to incentivize state-level climate preparedness initiatives

HHS and OCCHE should create competitive grants for states that demonstrate proactive climate change adaptation efforts in healthcare. These agencies can encourage states to integrate climate considerations into their health plans by providing additional funding to states that prioritize climate resilience.

Within CMS, the Center for Medicare and Medicaid Innovation (CMMI) could help create and administer these grants related to climate preparedness initiatives. Given its focus on innovation and testing new approaches, CMMI could design grant programs aimed at incentivizing state-level climate resilience efforts in healthcare. Given its focus on addressing health disparities and promoting preventive care, the Bureau of Primary Health Care (BPHC) within HRSA could oversee grants aimed at integrating climate considerations into primary care settings and enhancing resilience among vulnerable populations.

Conclusion

These recommendations provide a comprehensive framework for HHS, particularly CMS, HRSA, and OCCHE, to bolster public health preparedness for the health impacts of extreme heat events. By leveraging funding mechanisms, incentives, and requirements, HHS can enhance health system preparedness, improve health provider knowledge, and optimize emergency response capabilities. These strategic measures encompass a range of actions, including establishing dedicated grant programs, incentivizing climate-competent healthcare providers, integrating climate-resilience metrics into quality measurement programs, and leveraging the HITECH Act to enhance ICD-10 coding education. Collaboration with other federal agencies further strengthens the coordinated response to the growing challenges posed by climate change-induced extreme heat events. By implementing these policy recommendations, HHS can effectively address the evolving landscape of climate change impacts on health and promote a more resilient and prepared healthcare system for the future.


- Improved Accuracy in ICD-10 Coding: Healthcare providers consistently apply accurate ICD-10 coding for extreme heat-related health cases.

- Enhanced Healthcare Provider Knowledge: Healthcare professionals possess comprehensive knowledge on extreme heat-related health impacts, improving patient care and response strategies.

- Strengthened Public Health Response: A coordinated effort results in a more effective and equitable public health response to extreme heat events, reducing health disparities.

- Improved Public Health Resilience:

- Short-Term Outcome: Healthcare providers, armed with enhanced knowledge and training, respond more effectively to extreme heat-related health cases.

- Long-Term Outcome: Reduced morbidity and mortality rates associated with extreme heat events lead to a more resilient and prepared public health system.

- Enhanced Data Accuracy and Surveillance:

- Short-Term Outcome: Improved accuracy in ICD-10 coding facilitates more precise tracking and surveillance of extreme heat-related health outcomes.

- Long-Term Outcome: Comprehensive and accurate data contribute to better-informed public health policies, targeted interventions, and long-term trend analysis.

- Reduced Health Disparities:
  - Short-Term Outcome: Incentives and education programs ensure that healthcare providers prioritize accurate coding, reducing disparities in the diagnosis and treatment of extreme heat-related illnesses.
  - Long-Term Outcome: Health outcomes become more equitable across diverse populations, mitigating the disproportionate impact of extreme heat on vulnerable communities.

- Increased Public Awareness and Education:
  - Short-Term Outcome: Public health campaigns and educational initiatives raise awareness about the health risks associated with extreme heat events.
  - Long-Term Outcome: Informed communities adopt preventive measures, reducing the overall burden on healthcare systems and fostering a culture of proactive health management.

- Streamlined Emergency Response and Preparedness:
  - Short-Term Outcome: Integrating extreme heat preparedness into emergency response plans results in more efficient and coordinated efforts during heatwaves.
  - Long-Term Outcome: Improved community resilience, reduced strain on emergency services, and better protection for vulnerable populations during extreme heat events.

- Increased Collaboration Across Agencies:
  - Short-Term Outcome: Collaborative efforts between OCCHE, CMS, HRSA, AHRQ, FEMA, DoD, and the Department of the Interior result in streamlined information sharing and joint initiatives.
  - Long-Term Outcome: Enhanced cross-agency collaboration establishes a model for addressing complex public health challenges, fostering a more integrated and responsive government approach.

- Empowered Healthcare Workforce:
  - Short-Term Outcome: Incentives for accurate coding and targeted education empower healthcare professionals to address the unique challenges posed by extreme heat.
  - Long-Term Outcome: A more resilient and adaptive healthcare workforce is equipped to handle emerging health threats, contributing to overall workforce well-being and satisfaction.

- Informed Policy Decision-Making:
  - Short-Term Outcome: Policymakers utilize accurate data and insights to make informed decisions related to extreme heat adaptation and mitigation strategies.
  - Long-Term Outcome: The integration of health data into broader climate and policy discussions leads to more effective, evidence-based policies at local, regional, and national levels.

A Call for Immediate Public Health and Emergency Response Planning for Widespread Grid Failure Under Extreme Heat

Soaring energy demand and unprecedented heatwaves have brought the U.S. to the brink of a severe threat with the potential to affect millions of lives: widespread grid failure across multiple states. The North American Electric Reliability Corporation (NERC), which oversees grid reliability under the Federal Energy Regulatory Commission (FERC), has warned of a heightened risk of grid failures, yet the nation remains unprepared for the prospect of widespread summer blackouts.

As a proactive measure, the federal government should issue an Executive Order or establish an interagency Memorandum of Understanding (MOU) mandating the expansion of Public Health and Emergency Response Planning for Widespread Grid Failure Under Extreme Heat. This urgently needed action would help mitigate the worst impacts of future grid failures under extreme heat, safeguarding lives, the economy, and national security as the U.S. moves toward a more sustainable, stable, and reliable electric grid system.

When the lights go out, restoring power across America is a complex process requiring seamless collaboration among agencies, levels of government, and power providers, under constraints that extend well beyond the loss of electricity. In a blackout, access to critical services like telecommunications, transportation, and medical assistance is also compromised, which compounds the urgency of a coordinated response. To avert uncontrolled outages, operators frequently implement planned and unplanned rolling blackouts, a form of load shedding that eases strain on the grid. However, these actions may lack transparent protocols and criteria for safeguarding critical medical services. Equally crucial, and also missing, are frameworks for prioritizing regions for power restoration that ensure equitable treatment of low-income and socially vulnerable communities affected by grid failure events.

Thus, given the gravity of these high-risk, increasingly probable scenarios facing the United States, it is imperative for the federal government to take a leadership role in assessing and directing planning and readiness capabilities to respond to this evolving disaster.

Challenge

Grids are facing unprecedented strain from record-high temperatures, which reduce transmission efficiency and drive spikes in air-conditioning demand during the summer. On top of this, new industries are pushing grids to their limits. Reporting by The Washington Post and insights from the utility industry cite the exponential growth of artificial intelligence and of data centers for cloud computing and crypto mining as drivers of a nearly twofold increase in electricity consumption over the past decade.

Projections from NERC paint a dire picture: between 2024 and 2028, an alarming 300 million people across the United States could face power outages. This underscores the pressing need for robust emergency response and public health planning.

The impact of power loss is especially profound for vulnerable populations, including people who rely on electricity-dependent medical equipment or on life-saving medications that require refrigeration. Extreme heat significantly increases public health risks: it exacerbates mental health and behavioral disorders and chronic illnesses such as heart and respiratory conditions, and it increases the likelihood of preterm births and developmental issues in infants and children. Older adults also face heightened risk from excessive temperatures.

Since 2015, national power outages have surged by over 150%, driven by rising demand and by extreme weather amplified by climate change. High temperatures can cause transformers to overheat and explode, sometimes sparking fires and cascading outages. Other severe weather hazards, such as lightning strikes, high winds, and flying debris, further increase the risk of damage to utility infrastructure.

In 2020, 22 extreme weather events, ranging from cyclones and hurricanes to heat and drought, cost the U.S. a combined $95 billion. The following year, the Texas winter storm and the Pacific Northwest heatwave vividly illustrated the severe consequences of extreme weather for grid stability. To put this into perspective:

- The Pacific Northwest heatwave resulted in thousands of heat-related emergency department visits and over 700 deaths.

- During the Texas winter storm, 4.5 million customers went without power, leading to over 240 deaths and economic damages estimated at $130 billion.

These events led to rolling blackouts, thousands of heat-related emergency room visits, numerous deaths, and substantial economic losses. The trend is ongoing: the National Oceanic and Atmospheric Administration’s (NOAA) 2023 billion-dollar disaster report confirmed 28 weather and climate disasters in a single year, surpassing the previous record of 22 set in 2020, at a cost of at least $92.9 billion.

Historical disasters, such as Hurricane Maria in 2017 and the Northeastern blackout in 2003, are stark reminders of the devastating impact of prolonged power outages. The aftermath of such events includes loss of life, disruptions to healthcare access, and extensive economic damages.

- Hurricane Maria affected 1.6 million people, left parts of Puerto Rico without electricity for 328 days, and caused an estimated 4,600 deaths (about 70 times the official toll), roughly a third of them attributed to lack of access to health care. Damages were estimated at $90 billion.

- The Northeastern blackout impacted 30 million customers across the U.S. and Canada, causing at least 11 deaths and estimated economic losses of $6 billion.

A stark 2023 study reported that “If a multi-day blackout in Phoenix coincided with a heat wave, nearly half the population would require emergency department care for heat stroke or other heat-related illnesses.” Under such conditions, the researchers estimate that 12,800 people in Phoenix would die.

During these events, responsibility for restoring power and providing mass care falls on various entities. Utility and power operators are tasked with repairing grid infrastructure, while the Federal Emergency Management Agency (FEMA) coordinates interagency actions through its National Response Framework and Emergency Support Functions (ESFs).

For example, ESF #6 handles mass care missions like sheltering and feeding, while ESF #8 coordinates public health efforts, overseen by the Department of Health and Human Services (HHS). FEMA’s Power Outage Incident Annex (POIA) enables utility operators to request support through ESF #12. While there is some testing of system responses to blackouts, few states have conducted exercises at scale. Such exercises are crucial given the immense complexity of restarting grid infrastructure and coordinating mass care operations simultaneously.

Opportunity

The Department of Energy’s (DOE) Liberty Eclipse Program exemplifies a successful public-private partnership aimed at bolstering energy sector preparedness against cyberattacks on the grid. Similarly, FEMA conducts numerous Incident Command System (ICS) trainings annually, emphasizing collaboration across governments, nongovernmental organizations (NGOs), and the private sector.

By leveraging interagency mechanisms like MOUs, FEMA, DOE, and HHS can integrate and expand exercises addressing heat-induced grid failure into existing training frameworks. Such collaborative efforts would ensure a comprehensive approach to preparedness. Additionally, funds typically earmarked for state and local agency training could cover their participation costs in these exercises, optimizing resource utilization and ensuring widespread preparedness across all government levels.

Several current federal efforts, spanning legislation, executive branch actions, programs, and precedents, also align with this proposal’s objectives. Legislative initiatives such as Rep. Ruben Gallego’s proposal to amend the Stafford Act underscore a growing recognition of the unique threats posed by extreme heat events and the need for proactive federal measures.

Simultaneously, regulatory initiatives, such as those by FERC, signal a proactive stance on enhancing energy infrastructure resilience against extreme weather events. Building on an established precedent, FERC could direct NERC to create extreme heat reliability standards for power sector operators, akin to those established for extreme cold weather in 2024 (E-1 | RD24-1-000), further ensuring the reliable operation of the Bulk Electric System (BES).

A pivotal resource informing our proposal is the 2018 report by the President’s National Infrastructure Advisory Council (NIAC), which emphasizes the importance of addressing catastrophic grid failure and underscores ongoing efforts dedicated to this pressing issue. Tasked with assessing the nation’s preparedness for “catastrophic power outages beyond modern experience,” the report offers invaluable insights and recommendations that are particularly relevant to the recommendations that follow.

Plan of Action

To enhance national resilience, save tens of thousands of lives, and prevent significant economic losses, the National Security Council (NSC) should coordinate collaboration among the relevant agencies (DOE, HHS, and FEMA) on grid resilience under extreme heat and work to establish an interagency MOU that fortifies the nation against extreme heat events, with a specific focus on disaster planning for grid failure. This proposal would have minimal direct impact on the federal budget because it relies on existing frameworks within FEMA, DOE, and HHS. These agencies already allocate resources toward preparedness training and testing, as evidenced by their annual budgets.

Recommendation 1. NSC should initiate a collaboration between DOE, HHS, and FEMA.

The NSC should direct DOE to assess grid resilience under extreme heat and coordinate and prepare for widespread grid failure events in collaboration with FEMA and HHS. This collaboration would involve multi-state, multi-jurisdictional entities, tribal governments, and utilities in scaling planning and preparedness.

Under this coordinated effort, federal agencies, with input from partners in the NSC, should take the following steps:

The DOE Office of Cybersecurity, Energy Security, and Emergency Response (CESER), in collaboration with FERC and NERC, should develop comprehensive extreme heat guidelines for utilities and energy providers. These guidelines should include protocols for monitoring grid performance, implementing proactive maintenance measures, communicating concerns and emerging issues, and establishing transparent and equitable processes for load shedding during extreme heat events. Equitable and transparent load shedding is critical as energy consumption rises, driven in part by new industries like clean tech manufacturing and data centers.
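To make the idea of a transparent, equitable load-shedding protocol more concrete, here is a minimal Python sketch of one possible rotation rule. It assumes hypothetical feeder data (load in MW, a flag for feeders serving critical medical facilities, and a 0 to 1 social vulnerability score); the ordering rule is an illustrative assumption, not a NERC, FERC, or utility standard. Critical medical feeders are never shed, the remaining feeders rotate so outage hours are shared evenly, and ties are broken by shedding less vulnerable areas first.

```python
from dataclasses import dataclass


@dataclass
class Feeder:
    name: str
    load_mw: float
    critical_medical: bool   # hypothetical flag: serves hospitals, dialysis centers, etc.; never shed
    vulnerability: float     # hypothetical 0-1 social vulnerability score
    hours_shed: float = 0.0  # cumulative outage hours during this event


def select_feeders_to_shed(feeders: list[Feeder], target_mw: float) -> list[Feeder]:
    """Choose feeders to de-energize for one rotation block."""
    candidates = [f for f in feeders if not f.critical_medical]
    # Deterministic, publishable ordering: least accumulated outage first,
    # then less vulnerable areas first, then name as a stable tie-breaker.
    candidates.sort(key=lambda f: (f.hours_shed, f.vulnerability, f.name))
    chosen: list[Feeder] = []
    shed_mw = 0.0
    for feeder in candidates:
        if shed_mw >= target_mw:
            break
        chosen.append(feeder)
        shed_mw += feeder.load_mw
    if shed_mw < target_mw:
        raise ValueError("target cannot be met without shedding critical medical feeders")
    return chosen


def rotate(feeders: list[Feeder], target_mw: float, blocks: int,
           block_hours: float = 1.0) -> list[list[str]]:
    """Run several rotation blocks and return the feeders shed in each block."""
    schedule = []
    for _ in range(blocks):
        chosen = select_feeders_to_shed(feeders, target_mw)
        for feeder in chosen:
            feeder.hours_shed += block_hours  # record outage time for fairness in later blocks
        schedule.append([f.name for f in chosen])
    return schedule
```

For example, with three hypothetical 10 MW feeders (vulnerability 0.2, 0.5, and 0.8) and one 15 MW hospital feeder, shedding 10 MW for four one-hour blocks cycles through the three non-critical feeders from least to most vulnerable before repeating, and never touches the hospital feeder. Because the ordering key is explicit, a rule like this could be published in advance, which is one way to meet the transparency goal.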

FEMA should:

- Update the National Incident Management System to include extreme heat-induced grid failure in disaster response scenarios.

- Conduct risk assessments and apply a whole community approach to emergency response and preparedness plans scaled to widespread, multi-state grid failure events. This is in line with FEMA’s goal to increase resilience in the face of more frequent, far-reaching, and widespread natural and manmade disasters.

- Collaborate with state, tribal, and local governments, utilities, NGOs, and other partners to identify critical infrastructure vulnerabilities and prioritize mitigation and response measures, incorporating an equity mapping component to ensure equitable distribution of resources and assistance.

HHS should strengthen functions under ESF #8 to deliver public health services during extreme heat-induced grid failure events, with enhanced coordination between the Centers for Disease Control and Prevention (CDC) and the Assistant Secretary for Preparedness and Response (ASPR).

Recommendation 2. Establish an interagency MOU.

An interagency MOU should streamline coordination and collaboration on extreme heat disaster planning and preparedness for grid failure. Further, these agreements should prepare agencies to facilitate cross-sector collaboration with state and local governments through the establishment of a national task force.

This MOU should outline the following actions:

- Joint task force: Create a task force comprising lead representatives from relevant agencies and partner organizations to develop, advance, and test extreme heat-induced grid failure disaster planning and preparedness. This task force would lead efforts to formulate planning strategies, conduct disaster training exercises, and facilitate the exchange of critical information among emergency managers, public health emergency preparedness (PHEP) teams, utilities, and medical providers.

- Information sharing protocols: Develop standardized protocols for sharing data and intelligence on heatwave forecasts, grid stability alerts, and response capabilities across agencies and jurisdictions.

- Training and exercise programs: Coordinate the development and implementation of training exercises to test and improve response procedures to extreme heat events across sectors.

- Public outreach and education: Collaborate on public outreach campaigns to raise awareness about heat-related risks and preparedness measures. Disseminate existing communication tools and education programs developed for weather disasters through local agencies to enhance family and neighborhood resilience to extreme heat-induced grid failures. Special attention should be given to meeting the needs of vulnerable populations, with a strong emphasis on equity, public health, and social cohesion. Resources such as the HHS emPOWER Program Platform and ArcGIS mapping tools like the National Integrated Heat Health Information System’s (NIHHIS) “Future Heat Events and Social Vulnerability,” FEMA’s Resilience Analysis and Planning Tool (RAPT), or the CDC’s Environmental Justice and Map Dashboard offer data-driven approaches to achieve this objective.

- Community resilience: FEMA and HHS (including CDC and ASPR), in collaboration with NOAA entities such as the National Weather Service and NIHHIS, should work to help communities fortify their resilience against widespread blackouts during extreme heat events. Leveraging existing weather disaster resources for storms, fires, and hurricanes, resilience planning should be expanded and adapted to address the loss of electricity, water, and sanitation systems; communication breakdowns; transportation disruptions; and interruptions in medical services under extreme heat conditions.

Conclusion

This proposal emphasizes planning for blackouts and response readiness when the lights go out across wide swaths of America during extreme heat. Addressing this critical gap in federal disaster response planning would secure the safety of millions of citizens and prevent billions of dollars in potential economic losses.

An Executive Order or interagency MOU would facilitate coordinated planning and preparedness, leveraging existing frameworks and engaging stakeholders beyond traditional boundaries to effectively manage potentially catastrophic, multi-state grid failures during heat waves. Specific steps to advance this initiative include confirming that no similar exercises are already under way, scheduling meetings with pertinent agency leaders, revisiting policy recommendations based on agency feedback, and drafting language to incorporate into interagency MOUs.

Using existing authorities and funding, implementing these recommendations would safeguard lives, protect the economy, and bolster national security, particularly as the U.S. moves toward a more sustainable, stable, and reliable electric grid system.


This proposal is fully aligned with the Biden Administration’s executive actions on climate change, specifically Executive Orders 14008 and 13990, which have led to significant initiatives aimed at addressing climate-related challenges and promoting environmental justice. These actions resulted in the establishment of key entities such as the Office of Climate Change and Health Equity at the Department of Health and Human Services (HHS), as well as in the development of HHS’ national Climate and Health Outlook, the CDC’s Heat and Health Tracker, and heat planning and preparedness guides. Furthermore, Heat.gov and the interagency National Integrated Heat Health Information System (NIHHIS) are significant steps toward providing accessible, science-based information to the public and decision-makers to support equitable heat resilience. Heat.gov serves as a centralized platform offering comprehensive resources, including NIHHIS programs, events, news articles, heat and health program funding opportunities, and information tailored to at-risk communities. These initiatives underscore President Biden’s dedication to tackling the health risks associated with extreme heat, a priority of his National Climate Task Force and its Interagency Working Group on Extreme Heat. This proposal complements these efforts and aligns closely with the administration’s broader climate and health equity agenda. By leveraging existing frameworks and collaborating across agencies, it is possible to further advance the administration’s objectives while effectively addressing the urgent challenges posed by climate change.

This policy memo was written by the Federation of American Scientists in collaboration with the Pima County Department of Health (Dr. Theresa Cullen, Dr. Julie Robinson, Kat Davis), which provided research and information support to the authors. The Pima County Department of Health seeks to advance health equity and environmental justice for the citizens of Arizona and beyond.

Protecting Workers from Extreme Heat through an Energy-efficient Workplace Cooling Transformation

Extreme heat is a growing threat to the health and productivity of U.S. workers and businesses. There is a high-impact opportunity to pioneer innovations in energy-efficient worker-centric cooling to protect workers from the growing heat while reducing the costs to businesses to install protections. With the impending Occupational Safety and Health Administration (OSHA) standard, the federal government should ensure that businesses have the necessary support to establish and maintain the infrastructure needed for existing and upcoming worker heat protection requirements while realizing economic, disaster resilience, and climate co-benefits. To achieve this goal, an Executive Order should form a multiagency working group that coordinates federal government and nongovernment partners to define a new building design approach that integrates both worker health and energy-efficiency considerations. The working group should establish roles and a process for coordinating and identifying leaders and funding approaches to advance a policy roadmap to accelerate, scale up, and evaluate equitable deployment and maintenance of energy-efficient worker-centric cooling. This plan presents a unique and timely opportunity to build upon existing national clean energy, climate, and infrastructure commitments and goals to ensure a healthier, more productive, resilient, and sustainable workforce.

Challenge and Opportunity

U.S. workers and businesses face a growing threat of illness, death, and reduced work productivity from extreme heat exposure. There were 436 work-related heat deaths recorded in the U.S. from 2011 to 2021. Workplace heat exposure is linked to heat illnesses, traumatic injuries, and reduced work productivity among otherwise healthy workers, costing the nation an estimated $100 billion each year in lost economic activity. Workers exposed to high heat include those in outdoor occupations in agriculture and construction and those working in hot manufacturing, transportation and warehousing, and food services environments. Spikes in worker heat illness have occurred during recent extreme heat events, such as the “heat dome” event of 2021, which are more likely to occur with climate change. Disproportionately exposed workers and small businesses often do not have the resources or capacity to implement, improve, or maintain existing workplace cooling infrastructure, thus increasing heat exposure inequities.

An energy-efficient workplace cooling transformation is needed to ensure businesses have the support required to comply with existing state heat rules and upcoming federal workplace heat prevention requirements. Several states (California, Colorado, Oregon, Minnesota, and Washington) have already adopted occupational indoor and/or outdoor heat exposure rules to protect workers from heat stress. OSHA is in the process of developing a national workplace heat standard. In addition to requirements for worker rest breaks, training, and hydration, OSHA is considering requirements for employers to implement protections when the measured heat index is 80°F or higher, including engineering controls such as air-conditioned cool-down areas.
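The 80°F trigger is expressed as a heat index, which combines air temperature and relative humidity, so a reading of 80°F on a thermometer does not by itself mean protections apply. As a rough illustration, the Python sketch below follows the National Weather Service heat index procedure (a simple estimate for mild conditions, otherwise the Rothfusz regression with two humidity adjustments) and checks a reading against the trigger. The function names and the `trigger_f` default are illustrative assumptions; a final OSHA rule may specify measurement and triggers differently.

```python
import math


def heat_index_f(temp_f: float, rh: float) -> float:
    """Heat index in °F from air temperature (°F) and relative humidity (%), per the NWS procedure."""
    # Simple estimate, used when the result averaged with air temperature is below 80°F.
    simple = 0.5 * (temp_f + 61.0 + (temp_f - 68.0) * 1.2 + rh * 0.094)
    if (simple + temp_f) / 2.0 < 80.0:
        return simple

    t, r = temp_f, rh
    # Rothfusz regression.
    hi = (-42.379 + 2.04901523 * t + 10.14333127 * r
          - 0.22475541 * t * r - 0.00683783 * t * t
          - 0.05481717 * r * r + 0.00122874 * t * t * r
          + 0.00085282 * t * r * r - 0.00000199 * t * t * r * r)

    if r < 13.0 and 80.0 <= t <= 112.0:
        # Low-humidity adjustment.
        hi -= ((13.0 - r) / 4.0) * math.sqrt((17.0 - abs(t - 95.0)) / 17.0)
    elif r > 85.0 and 80.0 <= t <= 87.0:
        # High-humidity adjustment.
        hi += ((r - 85.0) / 10.0) * ((87.0 - t) / 5.0)
    return hi


def protections_triggered(temp_f: float, rh: float, trigger_f: float = 80.0) -> bool:
    """True when the computed heat index meets or exceeds the (assumed) trigger."""
    return heat_index_f(temp_f, rh) >= trigger_f
```

For example, 90°F at 50% relative humidity gives a heat index of about 95°F, well above the trigger, while 80°F air at 10% humidity gives about 78°F and would not trigger protections on its own.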

Using energy-efficient active or passive cooling systems and building designs in workplaces has numerous benefits. Cooling the environment is one of the most effective methods for reducing the risk of heat illness. Energy-efficient cooling reduces electricity consumption and greenhouse gas emissions compared to conventional systems. Energy-efficient buildings cost less to operate, which lowers business costs, and their reduced fossil fuel use cuts community air pollution. Energy-efficient cooling also lowers peak electricity demand on the grid, reducing the chances of blackouts during extreme weather events.

We must develop a new approach to building standards, energy-efficient worker-centric cooling, that integrates both worker health and energy-efficiency considerations. Existing building-centric approaches that blanket-cool entire buildings to the same fixed temperature are energy inefficient and can lead to overcooling of unoccupied areas and increased costs. Warehouses illustrate the urgency: in recent years, 300–900 million ft² of U.S. warehouse space has been under construction per quarter, alongside a growing warehouse workforce.

There is a gap in standards that address both civilian worker health and energy-efficient cooling simultaneously. The U.S. Green Building Council (USGBC) has incorporated a worker-centric approach in its Leadership in Energy and Environmental Design (LEED) certification program. This approach includes pilot credits for Prevention through Design (PtD), which aims to minimize risks to workers by integrating safety measures into building design and redesign. One such example is ensuring roof features, such as vegetated roofs and solar panel installations, are arranged to minimize hazards like falls for maintenance personnel. However, there are no specific PtD standards or LEED credits for energy-efficient cooling approaches that address worker heat hazards. For example, there are no specific standards that incorporate the proximity of indoor cool-down areas to hot work areas, targeted cooling of certain work areas, or mobile outdoor cooling stations that leverage solar and electrochemical technology.

Although there are several potential mechanisms of support for energy-efficient cooling infrastructure in commercial buildings and small businesses, there is no program to help employers and small businesses integrate these technologies into worker-centric cooling infrastructure designs. Under the Inflation Reduction Act of 2022 (IRA), tax deductions are available through Internal Revenue Code (IRC) 179D for building owners to install or retrofit equipment aimed at improving energy efficiency, including HVAC systems such as heat pumps and building envelope improvements to “heat-proof” or weatherize structures. However, these tax incentives may be difficult to access and may not provide sufficient immediate support for small business owners struggling with inflation costs. While the Biden-Harris Administration has also launched a $14 billion National Clean Investment Fund that will provide Environmental Protection Agency (EPA) grants to small businesses for deploying clean technology projects, there are no earmarked funds for workplace solutions focused on energy-efficient cooling or resilience to extreme heat events that integrate worker health considerations. Current U.S. Small Business Administration efforts focus primarily on supporting small businesses with disaster recovery rather than resilience.

Effective cross-agency coordination is needed to accomplish an energy-efficient cooling transformation in U.S. workplaces, support small businesses, and contribute to the Healthy People 2030 goal of reducing workplace deaths. Coordination among existing agencies and external partners to address gaps in energy-efficient cooling technology, worker-centric designs, and heat-specific PtD building approaches will support a healthier, more productive, and sustainable U.S. workforce.

Plan of Action

Transforming workplace infrastructure to support a healthy, productive, and sustainable U.S. workforce against extreme heat requires coordination across multiple federal agencies. This plan offers the first steps in developing a structure for coordination, defining the approach, developing a roadmap for future actions, and ultimately catalyzing and piloting innovations and implementing and evaluating solutions.

This plan is guided by the following principles:

- Workplace deaths, illnesses, and injuries from heat exposure are preventable.

- Work equity should be incorporated into decision-making, in alignment with the Justice40 Initiative, to ensure benefit for workers who are most likely to be exposed to dangerous workplace heat and who live and work in communities overburdened by pollution from energy inefficient infrastructure.

- Demand for solutions will be supported by co-benefits of energy-efficient workplace cooling (e.g., reduced workplace costs; increased productivity; reduced greenhouse gas emissions, in alignment with National Climate Task Force Goals and the Department of Energy (DOE) Better Buildings® Better Climate Challenge; and sustainable workplaces that are resilient to extreme events, in alignment with the Biden Administration’s Bipartisan Infrastructure Law).

- High-level sponsorship and clarity of roles are critical to catalyzing the necessary coordination across federal agencies and with public and private partners.

Following an executive order from the President, the Office of Management and Budget should convene a multiagency working group to develop a plan for coordination and to outline a roadmap toward an energy-efficient workplace cooling transformation for a healthy, productive, and sustainable workforce. The working group should:

Recommendation 1. Be chaired by an agency that has experience in convening multisectoral collaborations and advocating for equitable health outcomes, such as the Department of Health and Human Services (HHS) Office of Climate Change and Health Equity. The inclusion of representatives from the following agencies and offices should be considered:

- DOE (e.g., Office of Energy Efficiency and Renewable Energy, Office of State and Community Energy Programs, Office of Small and Disadvantaged Business Utilization)

- Centers for Disease Control and Prevention (CDC) and its National Institute for Occupational Safety and Health (NIOSH) (e.g., Small Business Assistance Program, National Personal Protective Technology Laboratory, Division of Science Integration)

- EPA (e.g., Office of Research and Development, Office of Environmental Justice and External Civil Rights)

- U.S. Small Business Administration (SBA) (e.g., Small Business Innovation Research Program, Office of Disaster Recovery and Resilience)

- OSHA (e.g., Directorate of Standards and Guidance)

- National Oceanic and Atmospheric Administration (NOAA) (e.g., Climate Program Office)

- Internal Revenue Service (IRS)

- Bureau of Labor Statistics (BLS) (e.g., Office of Compensation and Working Conditions)

- White House Climate Policy Office

Recommendation 2. Define roles and develop a plan to enhance coordination with public and private partners in developing and evaluating evidence-based worker-centric cooling infrastructure technologies and building designs. Partners should include those that develop or promote voluntary standards and guidelines for:

- buildings (e.g., USGBC LEED Program PtD initiative; American Society of Heating, Refrigerating and Air-Conditioning Engineers [ASHRAE] energy efficiency Standard 90.1-2022 and thermal environment Standard 55-2023; and ENERGY STAR)

- worker health (e.g., American Conference of Governmental Industrial Hygienists (ACGIH) heat stress and American Industrial Hygiene Association thermal stress working groups)

- and business and labor representatives identified by the working group.

Recommendation 3. Establish a consensus definition of energy-efficient worker-centric cooling using a combination of established metrics, including:

- metrics for energy efficiency and

- metrics for human effects of heat that account for work characteristics such as ambient heat exposure, workload, and clothing.

Recommendation 4. Outline existing pathways to support an energy-efficient workplace cooling transformation, including:

- IRA tax deductions for energy-efficient installations or retrofits by building owners (IRA Section 13303, amending IRC Section 179D)

- National Clean Investment Fund grants through EPA that support small businesses deploying clean technology projects

- SBA Office of Disaster Recovery and Resilience business loans

Then assess gaps, alternative incentive mechanisms for businesses, and the landscape of private and foundation funders to inform Recommendation 5.

Recommendation 5. Articulate follow-on initiatives, identify leaders, and identify potential funding approaches to advance a policy roadmap that accelerates, scales up, and evaluates the equitable deployment and maintenance of worker-centric, energy-efficient cooling infrastructure. Policy considerations include:

- Grant funding mechanisms for research, development, pilot implementation, and evaluation of worker-centric building designs and cooling technologies, such as the EPA's National Clean Investment Fund, the SBA's Small Business Innovation Research (SBIR) program, or NIOSH crowdsourcing mechanisms similar to the Respirator Fit Challenge. Matching federal funds with private and foundation funding should be considered to support rapid development, prototyping, and evaluation of promising worker-centric cooling technologies and building designs.

- Funding for ASHRAE, LEED, and DOE initiatives focused on incorporating worker considerations into standard development and energy code validation processes that ultimately inform local building codes.

- Funding for the IRS to develop and implement alternative incentive mechanisms that reduce barriers and address gaps in support for businesses interested in implementing energy-efficient workplace cooling infrastructure.

- Funding for DOE to:

  - develop a centralized clearinghouse to disseminate information on federal programs, incentives, and mechanisms for financing energy-efficient cooling retrofits and upgrades for businesses, including small businesses (similar to the approach H.R. 4092, 113th Congress, proposed for schools)

  - support coordination between state energy offices and state health and labor departments to conduct outreach on energy-efficient, worker-centric cooling approaches and initiatives, with a focus on small businesses and businesses with disproportionately exposed workers

- Funding for agencies to work together to develop and implement approaches that track progress toward an energy-efficient workplace cooling transformation by combining data sources.

Conclusion

Given the growing threat that increasing heat exposure poses to U.S. workers and businesses through illness, death, and reduced productivity, it is imperative to catalyze an energy-efficient workplace cooling transformation. There is a timely opportunity to build on national clean energy, climate, and infrastructure commitments and goals to address gaps in energy-efficient, worker-centric cooling technology and PtD building standards. The proposed plan will draw on high-level support, provide infrastructure for coordination among government agencies and nongovernmental partners, define the approach, and lay the groundwork for innovation in promising worker-centric cooling technologies and designs. It will produce a roadmap for an energy-efficient workplace cooling transformation that helps businesses build the infrastructure needed to meet existing and upcoming workplace heat prevention requirements. The approach will build on existing occupational health equity initiatives to reduce heat-related health risks for workers disproportionately affected by heat and for workers at small businesses. This initiative will help ensure a healthier, more productive, and sustainable workforce at minimal cost and with a substantial potential return on investment.

This idea of merit originated from our Extreme Heat Ideas Challenge. Scientific and technical experts across disciplines worked with FAS to develop potential solutions in various realms: infrastructure and the built environment, workforce safety and development, public health, food security and resilience, emergency planning and response, and data indices.

In the absence of a federal heat standard, employers are currently required to address workplace heat only through the General Duty Clause of the Occupational Safety and Health Act, which covers recognized hazards that are causing or are likely to cause death or serious physical harm to employees. This requirement is insufficient, as workers experience a range of harms from workplace heat exposure, from heat illness to death. OSHA is in the process of developing a workplace heat standard that considers engineering controls, such as workplace cooling, along with requirements related to worker breaks, training, and hydration. Workplace cooling is an element of the proposed federal rule and is already relevant in U.S. states with indoor workplace heat regulations. Because transitions to energy-efficient workplace cooling infrastructure do not happen overnight, investing now is important both for businesses in states with existing heat rules and for preparing for future state or federal heat rules.

Home cooling only partially addresses extreme heat health risks because many working-age adults spend half of their waking hours during the workweek at work. Further, improving energy efficiency in the industrial sector, which currently accounts for 30% of U.S. greenhouse gas emissions, can reduce both pollution in surrounding communities and the risk of blackouts during extreme weather events.

Existing incentives and grants, such as IRA tax deductions for energy-efficient installations or retrofits by building owners (IRA Section 13303; IRC Section 179D), National Clean Investment Fund grants through EPA that support small businesses deploying clean technology projects, and SBA Office of Disaster Recovery and Resilience loans, do not explicitly incorporate worker-centric designs that achieve climate, energy-efficiency, and worker health goals simultaneously. Further, tax deductions and grant programs provide only short- and medium-term financial support for energy-efficient workplace cooling transitions. Without a roadmap that explicitly addresses coordination, process simplification, and the accessibility of incentives, small business owners may be unable to take advantage of them.

Examples of data sources that could be considered are:

- Records of businesses obtaining incentives, such as IRA tax deductions and National Clean Investment Fund EPA grants, for energy-efficient cooling

- Federal databases to track bills and policies that support or integrate worker-centric energy-efficient cooling strategies or building designs

- Rates of reported workplace cooling and heat illness by industry and occupation, drawn from existing national surveys such as relevant CDC Behavioral Risk Factor Surveillance System (BRFSS) modules and the BLS Survey of Occupational Injuries and Illnesses

- Worker heat deaths, for example, through the existing BLS Census of Fatal Occupational Injuries and NIOSH Fatality Assessment and Control Evaluation programs

A Comprehensive Strategy to Address Extreme Heat in Schools

Requiring children to attend school when classroom temperatures are high is unsafe and reduces learning, yet closing schools for extreme heat has wide-ranging consequences for learning, safety, food access, and the social determinants of health. Children are vulnerable to heat, schooling is compulsory in the U.S., and families rely on schools for food, childcare, and safety. To protect the health and well-being of the nation's children, the federal government must facilitate the data collection needed to drive extreme heat mitigation and build adaptive capacity, invest in more resilient infrastructure, provide guidance on preparedness and response, and establish enforceable temperature thresholds. Federal agencies can pursue these goals along three paths: data collection and collaboration, policy setting, and investment.

Challenge and Opportunity

Schools are on the front lines of heat-related disasters, and the impact extends beyond hot days. Extreme heat threatens students' health and academic achievement and has ripple effects across the social determinants of health, including food access, caregiver employment, and students' future employment and income. Coordinated preparation is necessary to protect children's health and well-being during extreme heat events.

School Infrastructure Failure

Many schools do not have adequate infrastructure to stay cool during extreme heat events. At the start of the 2023–2024 academic year, schools in multiple locations were already experiencing heat-related infrastructure failures and were closing or struggling to hold classes in sweltering classrooms. The Center for Climate Integrity projected a 39% increase, from 1970 to 2025, in the number of school districts with more than 32 school days over 80°F (the temperature above which it considers air-conditioning necessary for schools to function). The Government Accountability Office found in 2020 that 41% of public school districts urgently needed HVAC upgrades in at least half of their buildings, an estimated 36,000 buildings nationally. The National Center for Education Statistics' (NCES) most recent survey of the Condition of America's Public School Facilities (2012–2013 school year) found that 30% of school buildings did not have adequate air-conditioning, and the shortfall tracked student poverty: 34% of schools where at least 75% of students were eligible for free or reduced-price lunch lacked adequate air-conditioning, compared with only 25% of schools where fewer than 35% of students were eligible. NCES's School Pulse Panel, implemented to document schools' response to COVID-19, is expanding to include other topics relevant to federal, state, and local decision-makers. The survey includes heat-adjacent questions on indoor air quality, air filtration, and HVAC upgrades but does not currently document schools' ability to respond to extreme heat. Schools that cannot maintain cool temperatures during extreme heat events directly affect child health and safety and also have upstream effects on health through learning and the social determinants of health.

Impact on Child Health and Safety

When temperatures rise on school days, local districts must decide whether to remain open or close. Both decisions can affect children's health and safety. If schools remain open, students may be exposed to uncomfortable and unsustainably high temperatures in rooms with inadequate ventilation. During the September 2023 heat waves, teachers in New York State reported classroom temperatures as high as 94℉ and children passing out. Spending time in the schoolyard may only compound the problem: unshaded playgrounds and asphalt heat up quickly, can be hotter than surrounding areas, and can reach surface temperatures high enough to cause burns. As with neighborhood tree cover, shade on school playgrounds correlates with income (more income, more shade), placing low-income and historically marginalized students at higher risk of heat exposure. Children are vulnerable to heat and may have trouble cooling down once their body temperatures rise, and returning to a hot classroom gives them no opportunity to do so.

If schools close, children who rely on school meals may go hungry. Procedures exist to continue school food service during unanticipated school closures, but it is not clear how food service would function if the building itself is overheated during an extreme heat event. In New York City, an assessment of public cooling centers found that nearly half were in senior centers that were not open to children. If schools lack sufficient heat mitigation and close for heat, children from low-income households, who are at higher risk of food insecurity and less likely to have air-conditioning at home, may be left hot and hungry.

While some state and local education departments have developed plans for responding to extreme heat on school days, the guidance, topics covered, and level of detail vary across states. Further, while the National Integrated Heat Health Information System (NIHHIS) and the Centers for Disease Control and Prevention (CDC) have identified children as an at-risk group during heat events, they do not offer specific information on how schools can prepare and respond. A comprehensive playbook addressing the many challenges schools may encounter during extreme heat, and how to keep children safe, would strengthen schools' ability to function during heat events.

Impact on Learning and Social Determinants of Health

The cumulative impact on learning, income, and equity is large. When schools remain open, heat reduces student learning (a 1% reduction in learning for each 1℉ increase in average school-year temperature). When schools close, children lose instructional time. The nation experienced the ripple effects of school closures during the COVID-19 pandemic, when extended closures widened the achievement gap, lowered students' projected future earnings, and reduced caregiver employment, particularly among women. Even five snow-day closures in a school year have been found to reduce learning. The projected increase in the number of districts with more than 32 school days a year over 80℉ suggests the impact of heat on learning could be substantial, whether through school closures or learning in overheated classrooms.

The impact on learning falls disproportionately on students in low-income districts, which have fewer funds available for school improvement projects and are more likely to have school buildings that lack sufficient cooling; because of historic redlining, district income is often correlated with race. These disproportionate impacts widen academic and economic inequity between students in low- and high-income school districts.

Existing Response: Infrastructure