Increasing Responsible Data Sharing Capacity throughout Government

Deriving insights from data is essential for effective governance. However, collecting and sharing data—if not managed properly—can pose privacy risks for individuals. Current scientific understanding shows that so-called “anonymization” methods that have been widely used in the past are inadequate for protecting privacy in the era of big data and artificial intelligence. The evolving field of Privacy-Enhancing Technologies (PETs), including differential privacy and secure multiparty computation, offers a way forward for sharing data safely and responsibly.

The administration should prioritize the use of PETs by integrating them into data-sharing processes and strengthening the executive branch’s capacity to deploy PET solutions.

Challenge and Opportunity

A key function of modern government is the collection and dissemination of data. This role of government is enshrined in Article 1, Section 2 of the U.S. Constitution in the form of the decennial census, and it has only grown with recent initiatives to modernize the federal statistical system and expand evidence-based policymaking. The number of datasets has also grown; there are now over 300,000 datasets on data.gov, covering everything from border crossings to healthcare. The release of these datasets not only accomplishes important transparency goals, but also represents an important step toward making American society fairer, as data are a key ingredient in identifying policies that benefit the public.

Unfortunately, the collection and dissemination of data comes with significant privacy risks. Even with access only to aggregated information, motivated attackers can extract information specific to individual data subjects and cause concrete harm. A famous illustration of this risk occurred in 1997, when Latanya Sweeney was able to identify the medical record of then-Governor of Massachusetts, William Weld, from a public, anonymized dataset. Since then, the power of data re-identification techniques, and the incentives for third parties to learn sensitive information about individuals, have only increased, compounding this risk. In a democratic nation that respects civil rights, it is irresponsible for government agencies to continue to collect and disseminate datasets without careful consideration of the privacy implications of data sharing.

While there may appear to be an irreconcilable tension between facilitating data-driven insight and protecting the privacy of individuals’ data, an emerging scientific consensus shows that Privacy-Enhancing Technologies (PETs) offer a path forward. PETs are a collection of techniques that enable data to be used while tightly controlling the risk incurred by individual data subjects. One particular PET, differential privacy (DP), was recently used by the U.S. Census Bureau within its disclosure avoidance system for the 2020 decennial census in order to meet its dual mandates of data release and confidentiality. Other PETs, including variations of secure multiparty computation, have been used experimentally by other agencies, including to link long-term income data to college records and understand mental health outcomes for individuals who have earned doctorates. The National Institute of Standards and Technology (NIST) has produced frameworks and reports on data and information privacy, including PETs topics such as DP (see Q&A section). However, these reports still lack a comprehensive and actionable framework on how organizations should consider, use, and deploy PETs.

As artificial intelligence becomes more prevalent inside and outside government and relies on increasingly large datasets, the need for responsible data sharing is growing more urgent. The federal government is uniquely positioned to foster responsible innovation and set a strong example by promoting the use of PETs. The use of DP in the 2020 decennial census was an extraordinary example of the government’s capacity to lead global innovation in responsible data sharing practices. While the promise of continuing this trend is immense, expanding the use of PETs within government poses twin challenges: (1) sharing data within government comes with unique challenges—both technical and legal—that are only starting to be fully understood and (2) expertise on using PETs within government is limited. In this proposal, we outline a concrete plan to overcome these challenges and unlock the potential of PETs within government.

Plan of Action

Using PETs when sharing data should be a key priority for the executive branch. The new administration should encourage agencies to consider the use of PETs when sharing data and build a United States DOGE Service (USDS) “Responsible Data Sharing Corps” of professionals who can provide in-house guidance around responsible data sharing.

We believe that enabling data sharing with PETs requires (1) gradual, iterative refinement of norms and (2) increased capacity in government. With these in mind, we propose the following recommendations for the executive branch.

Strategy Component 1. Build consideration of PETs into the process of data sharing

Recommendation 1. NIST should produce a decision-making framework for organizations to rely on when evaluating the use of PETs.

NIST should provide a step-by-step decision-making framework for determining the appropriate use of PETs within organizations, including whether PETs should be used, and if so, which PET and how it should be deployed. Specifically, this guidance should be at the same level of granularity as the NIST Risk Management Framework for Cybersecurity. NIST should consult with a range of stakeholders from the broad data sharing ecosystem to create this framework. This includes data curators (i.e., organizations that collect and share data, within and outside the government); data users (i.e., organizations that consume, use and rely on shared data, including government agencies, special interest groups and researchers); data subjects; experts across fields such as information studies, computer science, and statistics; and decision makers within public and private organizations who have prior experience using PETs for data sharing. The framework may build on NIST’s existing related publications and other guides for policymakers considering the use of specific PETs, and should provide actionable guidance on factors to consider when using PETs. The output of applying the framework should be not only a decision, but also a report documenting the execution of the decision-making framework (which will be instrumental for Recommendation 3).

Recommendation 2. The Office of Management and Budget (OMB) should require government agencies interested in data sharing to use the NIST decision-making framework developed in Recommendation 1 to determine whether PETs are appropriate for protecting their data pipelines.

The risks to data subjects associated with data releases can be significantly mitigated with the use of PETs, such as differential privacy. Along with considering other mechanisms of disclosure control (e.g., tiered access, limiting data availability), agencies should investigate the feasibility and tradeoffs around using PETs to protect data subjects while sharing data for policymaking and public use. To that end, OMB should require government agencies to use the decision-making framework produced by NIST (in Recommendation 1) for each instance of data sharing. We emphasize that this decision-making process may lead to a decision not to use PETs, as appropriate. Agencies should compile the produced reports such that they can be accessed by OMB as part of Recommendation 3.

Recommendation 3. OMB should produce a PET Use Case Inventory and annual reports that provide insights on the use of PETs in government data-sharing contexts.

To promote transparency and shared learning, agencies should share the reports produced as part of their PET deployments and associated decision-making processes with OMB. Using these reports, OMB should (1) publish a federal government PET Use Case Inventory (similar to the recently established Federal AI Use Case Inventory) and (2) synthesize these findings into an annual report. These findings should provide high-level insights into the decisions that are being made across agencies regarding responsible data sharing, and highlight the barriers to adoption of PETs within various government data pipelines. These reports can then be used to update the decision-making frameworks we propose that NIST should produce (Recommendation 1) and inspire further technical innovation in academia and the private sector.

Strategy Component 2. Build capacity around responsible data sharing expertise

Increasing in-depth decision-making around responsible data sharing—including the use of PETs—will require specialized expertise. While there are some government agencies with teams well-trained in these topics (e.g., the Census Bureau and its team of DP experts), expertise across government is still lacking. Hence, we propose a capacity-building initiative that increases the number of experts in responsible data sharing across government.

Recommendation 4. Announce the creation of a “Responsible Data Sharing Corps.”

We propose that the USDS create a “Responsible Data Sharing Corps” (RDSC). This team will be composed of experts in responsible data sharing practices and PETs. RDSC experts can be deployed into other government agencies as needed to support decision-making about data sharing. They may also be available for as-needed consultations with agencies to answer questions or provide guidance around PETs or other relevant areas of expertise.

Recommendation 5. Build opportunities for continuing education and training for RDSC members.

Given the evolving nature of responsible data practices, including the rapid development of PETs and other privacy and security best practices, members of the RDSC should have 20% effort reserved for continuing education and training. This may involve taking online courses or attending workshops and conferences that describe state-of-the-art PETs and other relevant technologies and methodologies.

Recommendation 6. Launch a fellowship program to maintain the RDSC’s cutting-edge expertise in deploying PETs.

Finally, to ensure that the RDSC stays at the cutting edge of relevant technologies, we propose an RDSC fellowship program similar to or part of the Presidential Innovation Fellows. Fellows may be selected from academia or industry, but should have expertise in PETs and propose a novel use of PETs in a government data-sharing context. During their one-year terms, fellows will perform their proposed work and bring new knowledge to the RDSC.

Conclusion

Data sharing has become a key priority for the government in recent years, but privacy concerns make it critical to modernize the technology for responsible data use so that data can be leveraged for policymaking and transparency. PETs such as differential privacy, secure multiparty computation, and others offer a promising way forward. However, deploying PETs at a broad scale requires changing norms and increasing capacity in government. The executive branch should lead these efforts by encouraging agencies to consider PETs when making data-sharing decisions and building a “Responsible Data Sharing Corps” that can provide expertise and support for agencies in this effort. By encouraging the deployment of PETs, the government can increase fairness, utility and transparency of data while protecting itself and its data subjects from privacy harms.

This action-ready policy memo is part of Day One 2025 — our effort to bring forward bold policy ideas, grounded in science and evidence, that can tackle the country’s biggest challenges and bring us closer to the prosperous, equitable and safe future that we all hope for whoever takes office in 2025 and beyond.

PLEASE NOTE (February 2025): Since publication several government websites have been taken offline. We apologize for any broken links to once accessible public data.

Data sharing requires a careful balance of multiple factors, with privacy and utility being particularly important.

- Data products released without appropriate and modern privacy protection measures could facilitate abuse, as attackers can weaponize information contained in these data products against individuals, e.g., blackmail, stalking, or publicly harassing those individuals.

- On the other hand, the lack of accessible data can also cause harm due to reduced utility: various actors, such as state and local government entities, may have limited access to accurate or granular data, resulting in the inefficient allocation of resources to small or marginalized communities.

Privacy-Enhancing Technologies is a broad umbrella category that includes many different technical tools. Leading examples of these tools include differential privacy, secure multiparty computation, trusted execution environments, and federated learning. Each one of these technologies is designed to address different privacy threats. For additional information, we suggest the UN Guide on Privacy-Enhancing Technologies for Official Statistics and the ICO’s resources on Privacy-Enhancing Technologies.

NIST has multiple publications related to data privacy, such as the Risk Management Framework for Cybersecurity and the Privacy Framework. The report De-Identifying Government Datasets: Techniques and Governance focuses on responsible data sharing by government organizations, while the Guidelines for Evaluating Differential Privacy Guarantees provides a framework to assess the privacy protection level provided by differential privacy for any organization.

Differential privacy is a framework for controlling the amount of information leaked about individuals during a statistical analysis. Typically, random noise is injected into the results of the analysis to hide individual people’s specific information while maintaining overall statistical patterns in the data. For additional information, we suggest Differential Privacy: A Primer for a Non-technical Audience.
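The noise-injection idea described above can be illustrated with a minimal sketch of the Laplace mechanism, one common way to achieve differential privacy for counting queries. The function names (laplace_noise, dp_count), the toy ages list, and the epsilon value are hypothetical choices made for illustration; they are not taken from any agency's system, and a production deployment would rely on a vetted library rather than plain floating-point sampling, which is known to have subtle weaknesses.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Draw one sample from a Laplace distribution centered at 0."""
    # The difference of two independent exponential draws, each with
    # mean `scale`, follows a Laplace(0, scale) distribution.
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def dp_count(records, predicate, epsilon: float, rng: random.Random) -> float:
    """Return a noisy count of the records that satisfy `predicate`."""
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    true_count = sum(1 for record in records if predicate(record))
    # Adding or removing one person changes a count by at most 1
    # (sensitivity 1), so the noise scale is 1 / epsilon.
    return true_count + laplace_noise(1.0 / epsilon, rng)


if __name__ == "__main__":
    # Hypothetical toy data: ages of eight survey respondents.
    ages = [23, 35, 41, 29, 67, 52, 38, 71]
    rng = random.Random()
    print(dp_count(ages, lambda age: age >= 65, epsilon=0.5, rng=rng))
```

A smaller epsilon adds more noise and gives stronger protection to each individual, while a larger epsilon yields a more accurate count. Choosing that tradeoff is the kind of decision the proposed NIST framework would need to guide.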

Secure multiparty computation is a technique that allows several actors to jointly aggregate information while protecting each actor’s data from disclosure. In other words, it allows parties to jointly perform computations on their data while ensuring that each party learns only the result of the computation. For additional information, we suggest Secure Multiparty Computation FAQ for Non-Experts.
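As a rough illustration of how parties can learn only a joint result, the sketch below uses additive secret sharing, one simple building block of secure multiparty computation, to compute a sum. Everything here is an assumption made for illustration: the names make_shares and secure_sum, the MODULUS value, and the salary figures are hypothetical, all parties are simulated inside one process, and the sketch assumes participants follow the protocol honestly and that inputs are non-negative integers whose total is below the modulus.

```python
import secrets

# Hypothetical modulus; it must be larger than any possible total.
MODULUS = 2**61 - 1


def make_shares(value: int, n_parties: int) -> list[int]:
    """Split `value` into n random-looking shares that sum to it mod MODULUS."""
    if n_parties < 2:
        raise ValueError("secret sharing needs at least two parties")
    shares = [secrets.randbelow(MODULUS) for _ in range(n_parties - 1)]
    # The last share is chosen so that all shares add up to the value.
    shares.append((value - sum(shares)) % MODULUS)
    return shares


def secure_sum(private_values: list[int]) -> int:
    """Simulate parties jointly computing a sum without revealing inputs."""
    n = len(private_values)
    # Party i splits its own value and sends share j to party j.
    all_shares = [make_shares(value, n) for value in private_values]
    # Party j adds up the shares it received and publishes only that total.
    partial_sums = [
        sum(all_shares[i][j] for i in range(n)) % MODULUS for j in range(n)
    ]
    # Combining the published partial sums reveals the overall total only.
    return sum(partial_sums) % MODULUS


if __name__ == "__main__":
    # Hypothetical payroll totals held by three separate employers.
    print(secure_sum([52000, 61000, 48000]))
```

Each individual share is a uniformly random number, so a party that sees only the shares sent to it learns nothing about any other party's input. Real deployments add networking, protection against misbehaving parties, and support for computations beyond sums.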

There are multiple examples of PET deployments at the federal and local levels, both domestically and internationally. We list several examples below, and refer interested readers to the in-depth reports by the Advisory Committee on Data for Evidence Building (report 1 and report 2):

- The Census Bureau used differential privacy in their disclosure avoidance system to release results from the 2020 decennial census data. Using differential privacy allowed the bureau to provide formal disclosure avoidance guarantees as well as precise information about the impact of this system on the accuracy of the data.

- The Boston Women’s Workforce Council (BWWC) measures wage disparities among employers in the greater Boston area using secure multiparty computation (MPC).

- The Israeli Ministry of Health publicly released its National Live Birth Registry using differential privacy.

- Privacy-preserving record linkage, a variant of secure multiparty computation, has been used experimentally by both the U.S. Department of Education and the National Center for Health Statistics. Additionally, it has been used at the county level in Allegheny County, PA.

Additional examples can also be found in the UN’s case-study repository of PET deployments.

Data-sharing projects are not new to the government, and pockets of relevant expertise—particularly in statistics, software engineering, subject matter areas, and law—already exist. Deploying PET solutions requires technical computer science expertise for building and integrating PETs into larger systems, as well as sociotechnical expertise in communicating the use of PETs to relevant parties and facilitating decision-making around critical choices.

Reforming the Federal Advisory Committee Landscape for Improved Evidence-based Decision Making and Increasing Public Trust

Federal Advisory Committees (FACs) are the single point of entry for the American public to provide consensus-based advice and recommendations to the federal government. These Advisory Committees are composed of experts from various fields who serve as Special Government Employees (SGEs), attending committee meetings, writing reports, and voting on potential government actions.

Advisory Committees are needed for the federal decision-making process because they provide additional expertise and in-depth knowledge for the Agency on complex topics, aid the government in gathering information from the public, and allow the public the opportunity to participate in meetings about the Agency’s activities. As currently organized, FACs are not equipped to provide the best evidence-based advice. This is because FACs do not meet transparency requirements set forth by GAO, such as making pertinent decisions during public meetings, reporting accurate cost data, making official meeting documents publicly available online, and more. FACs have also experienced difficulty with recruiting and retaining top talent to assist with decision making. For these reasons, it is critical that FACs are reformed and equipped with the necessary tools to continue providing the government with the best evidence-based advice. Specifically, reform should address 1) decreasing the burden of hiring special government employees; 2) simplifying the financial disclosure process; 3) increasing understanding of reporting requirements and conflict of interest processes; 4) expanding training for Advisory Committee members; 5) broadening the roles of Committee chairs and designated federal officers; 6) increasing public awareness of Advisory Committee roles; 7) engaging the public outside of official meetings; 8) standardizing representation from Committee representatives; 9) ensuring that Advisory Committees are meeting per their charters; and 10) bolstering Agency budgets for critical Advisory Committee issues.

Challenge and Opportunity

Protecting the health and safety of the American public and ensuring that the public has the opportunity to participate in the federal decision-making process is crucial. We must evaluate the operations and activities of federal agencies that require the government to solicit evidence-based advice and feedback from various experts through the use of federal Advisory Committees (FACs). These Committees are instrumental in facilitating transparent and collaborative deliberation between the federal government, the advisory body, and the American public, and this deliberation cannot be achieved through any other mechanism. Advisory Committee recommendations are integral to strengthening public trust and reinforcing the credibility of federal agencies. Nonetheless, public trust in government has been waning, and efforts should be made to restore it. Public trust is known as the pillar of democracy and underpins the relationship between parties, particularly when one party is external to the federal government. Therefore, Advisory Committees, when appropriately used, can assist with increasing public trust and ensuring compliance with the law.

There have also been many success stories demonstrating the benefits of Advisory Committees. When Advisory Committees are appropriately staffed based on their charge, they can decrease the workload of federal employees, assist with developing policies for some of our most challenging issues, involve the public in the decision-making process, and more. However, the state of Advisory Committees and the need for reform have been under question, and even more so as we transition to a new administration. Advisory Committees have contributed to the improvement in the quality of life for some Americans through scientific advice, as well as the monitoring of cybersecurity. For example, an FDA Advisory Committee reviewed data and saw promising results for the treatment of sickle cell disease (SCD) which has been a debilitating disease with limited treatment for years. The Committee voted in favor of gene therapy drugs Casgevy and Lyfgenia which were the first to be approved by the FDA for SCD.

Under the first Trump administration, Executive Order (EO) 13875 resulted in a significant decrease in the number of federal advisory meetings. This limited agencies’ ability to convene external advisors. During this administration, federal science advisory committees met less often than under any prior administration and less often than their charters required, long-standing Advisory Committees were disbanded, and scientists receiving agency grants were barred from serving on Advisory Committees. Federal Advisory Committee membership also decreased by 14%, illustrating the difficulty of recruiting and retaining top talent. The disbandment of Advisory Committees, exclusion of key scientific external experts from Advisory Committees, and burdensome procedures can potentially trigger severe consequences that affect the health and safety of Americans.

Going into a second Trump administration, it is imperative that Advisory Committees have the opportunity to assist federal agencies with the evidence-based advice needed to make critical decisions that affect the American public. The suggested reforms that follow can work to improve the overall operations of Advisory Committees while still providing the government with necessary evidence-based advice. With successful implementation of the following recommendations, the federal government will be able to reduce administrative burden on staff throughout the recruitment, onboarding, and conflict of interest processes.

The U.S. Open Government Initiative encourages the promotion and participation of public and community engagement in governmental affairs. However, individual Agencies can and should do more to engage the public. This policy memo identifies several areas of potential reform for Advisory Committees and aims to provide recommendations for improving the overall process without compromising Agency or Advisory Committee membership integrity.

Plan of Action

The proposed plan of action identifies several policy recommendations to reform the federal Advisory Committee process, improving both operations and efficiency. Successful implementation of these policies will 1) improve the Advisory Committee member experience, 2) increase transparency in federal government decision-making, and 3) bolster trust between the federal government, its Advisory Committees, and the public.

Streamline Joining Advisory Committees

Recommendation 1. Decrease the burden of hiring special government employees in an effort to (1) reduce the administrative burden for the Agency and (2) encourage Advisory Committee members, who are also known as special government employees (SGEs), to continue providing the best evidence-based advice to the federal government by reducing onerous procedures

The Ethics in Government Act of 1978 and Executive Order 12674 list OGE-450 reporting as the required confidential financial disclosure for all executive branch and special government employees. This Act provides the Office of Government Ethics (OGE) the authority to implement and regulate a financial disclosure system for executive branch and special government employees whose duties have “heightened risk of potential or actual conflicts of interest”. Nonetheless, the reporting process becomes onerous when Advisory Committee members have to complete the OGE-450 before every meeting even if their information remains unchanged. This presents a challenge for Advisory Committee members who wish to continue serving, but are burdened by time constraints. The process also burdens federal staff who manage the financial disclosure system.

Policy Pathway 1. Increase funding for enhanced federal staffing capacity to undertake excessive administrative duties for financial reporting.

Policy Pathway 2. All federal agencies that deploy Advisory Committees can conduct a review of the current OGE-450 process, budget support for this process, and work to develop an electronic process that will eliminate the use of forms and allow participants to select dropdown options indicating whether their financial interests have changed.

Recommendation 2. Create and use public platforms such as Open Payments by CMS to (1) aid in simplifying the financial disclosure reporting process and (2) increase transparency for disclosure procedures

Federal agencies should create a financial disclosure platform that streamlines the process and allows Advisory Committee members to submit their disclosures and easily make updates. This system should also be designed to monitor and compare financial conflicts. In addition, agencies that utilize the expertise of Advisory Committees for drugs and devices should identify additional ways in which they can promote financial transparency. These agencies can use Open Payments, a system operated by the Centers for Medicare & Medicaid Services (CMS), to “promote a more financially transparent and accountable healthcare system”. The Open Payments system makes payments from drug and medical device companies to individuals, healthcare providers, and teaching hospitals accessible to the public. If for any reason financial disclosure forms are called into question, the Open Payments platform can act as a check and balance in identifying any potential financial interests of Advisory Committee members. A further step toward simplifying the financial disclosure process would be to utilize conflict of interest software such as Ethico, which is a comprehensive tool that allows for customizable disclosure forms, disclosure analytics for comparisons, and process automation.

Policy Pathway. The Office of Government Ethics should require all federal agencies that operate Advisory Committees to develop their own financial disclosure system and include a second step in the financial disclosure reporting process as due diligence, which includes reviewing the CMS Open Payments system for potential financial conflicts or deploying conflict of interest monitoring software to streamline the process.

Streamline Participation in an Advisory Committee

Recommendation 3. Increase understanding of annual reporting requirements for conflict of interest (COI)

Agencies should develop guidance that explicitly states the roles of Ethics Officers, also known as Designated Agency Ethics Officials (DAEO), within the federal government. Understanding the roles and responsibilities of Advisory Committee members and the public will help reduce the spread of misinformation regarding the purpose of Advisory Committees. In addition, agencies should be encouraged by the Office of Government Ethics to develop guidance that indicates the criteria for inclusion in or exclusion from Committee meetings. Currently, there is no public guidance that states what types of conflicts of interest are granted waivers for participation. Full disclosure of selection and approval criteria will improve transparency with the public and clarify how Agencies determine who is eligible to participate.

Policy Pathway. Develop conflict of interest (COI) and financial disclosure guidance specifically for SGEs that states under what circumstances SGEs are allowed to receive waivers for participation in Advisory Committee meetings.

Recommendation 4. Expand training for Advisory Committee members to include (1) ethics and (2) criteria for making good recommendations to policymakers

Training should be expanded for all federal Advisory Committee members to include ethics training which details the role of Designated Agency Ethics Officials, rules and regulations for financial interest disclosures, and criteria for making evidence-based recommendations to policymakers. Training for incoming Advisory Committee members ensures that all members have the same knowledge base and can effectively contribute to the evidence-based recommendations process.

Policy Pathway. Agencies should collaborate with the OGE and Agency Heads to develop comprehensive training programs for all incoming Advisory Committee members to ensure an understanding of ethics as contributing members, best practices for providing evidence-based recommendations, and other pertinent areas that are deemed essential to the Advisory Committee process.

Leverage Advisory Committee Membership

Recommendation 5. Uplift the roles of Committee Chairs and Designated Federal Officers

Expanding the roles of Committee Chairs and Designated Federal Officers (DFOs) may assist federal Agencies with recruiting and retaining top talent and maximizing the Committee’s ability to stay abreast of critical public concerns. Because the General Services Administration has to be consulted for the formation, renewal, or alteration of Committees, it can be instrumental in this change.

Policy Pathway. The General Services Administration (GSA) should encourage federal Agencies to collaborate with Committee Chairs and DFOs to recruit permanent and ad hoc Committee members who may have broad network reach and community ties that will bolster trust amongst Committees and the public.

Recommendation 6. Clarify intended roles for Advisory Committee members and the public

There are misconceptions among the public and Advisory Committee members about Advisory Committee roles and responsibilities. There is also ambiguity regarding the types of Advisory Committee roles such as ad hoc members, consulting, providing feedback for policies, or making recommendations.

Policy Pathway. GSA should encourage federal Agencies to develop guidance that delineates the differences between permanent and temporary Advisory Committee members, as well as their roles and responsibilities depending on whether they are providing feedback for policies or providing recommendations for policy decision-making.

Recommendation 7. Utilize and engage expertise and the public outside of public meetings

In an effort to continue receiving the best evidence-based advice, federal Agencies should develop alternate ways to receive advice outside of public Committee meetings. Allowing additional opportunities for engagement and feedback from Committee experts or the public will allow Agencies to expand their knowledge base and gather information from the communities that their decisions will affect.

Policy Pathway. The General Services Administration should encourage federal Agencies to create opportunities outside of scheduled Advisory Committee meetings to engage Committee members and the public on areas of concern and interest as one form of engagement.

Recommendation 8. Standardize representation from Committee representatives (i.e., industry), as well as representation limits

The Federal Advisory Committee Act (FACA) does not specify the types of expertise that should be represented on all federal Advisory Committees, but allows for many types of expertise. Incorporating various sets of expertise that are representative of the American public will ensure the government is receiving the most accurate, innovative, and evidence-based recommendations for issues and products that affect Americans.

Policy Pathway. Congress should include standardized language in the FACA that states all federal Advisory Committees should include various sets of expertise depending on their charge. This change should then be enforced by the GSA.

Support a Vibrant and Functioning Advisory Committee System

Recommendation 9. Decrease the burden to creating an Advisory Committee and make sure Advisory Committees are meeting per their charters

The process to establish an Advisory Committee should be simplified to reduce the onerous steps that delay the government’s receipt of evidence-based advice.

Advisory Committee charters state the purpose of Advisory Committees, their duties, and all aspirational aspects. These charters are developed by agency staff or DFOs with consultation from their agency Committee Management Office. Charters are needed to forge the path for all FACs.

Policy Pathway. Designated Federal Officers (DFOs) within federal agencies should work with their Agency head to review and modify the steps for establishing FACs. The requirement that agencies consult with and/or obtain approval from GSA for the formation, renewal, or alteration of Advisory Committees should be eliminated.

Recommendation 10. Bolster agency budgets to support FACs on critical issues where regular engagement and trust building with the public is essential for good policy

Federal Advisory Committees are essential for receiving evidence-based recommendations that help guide decisions at all stages of the policy process. These Advisory Committees are oftentimes the single entry point external experts and the public have to comment on and participate in the decision-making process. However, FACs take considerable resources to operate, depending on the frequency of meetings, the number of Advisory Committee members, and supporting FDA staff. Without proper appropriations, Agencies have a diminished ability to recruit and retain top talent for Advisory Committees. The Government Accountability Office (GAO) reported that in 2019, approximately $373 million was spent to operate a total of 960 federal Advisory Committees. Some Agencies have experienced a decrease in the number of Advisory Committee convenings. Individual Agency heads should conduct a budget review of average operating and projected costs and develop proposals for increased funding to submit to the Appropriations Committee.

Policy Pathway. Congress should consider increasing appropriations to support FACs so they can continue to enhance federal decision-making, improve public policy, and boost public credibility and Agency morale.

Conclusion

Advisory Committees are necessary to the federal evidence-based decision-making ecosystem. Enlisting the advice and recommendations of experts, while also including input from the American public, allows the government to continue making decisions that will truly benefit its constituents. Nonetheless, there are aspects of the FAC system that can be improved to ensure it continues to be a participatory, evidence-based process. Additional funding is needed to compensate the appropriate Agency staff for Committee support, provide potential incentives for experts who are volunteering their time, and finance other expenditures.

With reform of Advisory Committees, the process for receiving evidence-based advice will be streamlined, allowing the government to receive this advice in a faster and less burdensome manner. Reform will be implemented by reducing the administrative burden for federal employees through the streamlining of recruitment, financial disclosure, and reporting processes.

A Federal Center of Excellence to Expand State and Local Government Capacity for AI Procurement and Use

The administration should create a federal center of excellence for state and local artificial intelligence (AI) procurement and use—a hub for expertise and resources on public sector AI procurement and use at the state, local, tribal, and territorial (SLTT) government levels. The center could be created by expanding the General Services Administration’s (GSA) existing Artificial Intelligence Center of Excellence (AI CoE). As new waves of AI technologies enter the market, shifting both practice and policy, such a center of excellence would help bridge the gap between existing federal resources on responsible AI and the specific, grounded challenges that individual agencies face. In the decades ahead, new AI technologies will touch an expanding breadth of government services—including public health, child welfare, and housing—vital to the wellbeing of the American people. An AI CoE federal center would equip public sector agencies with sustainable expertise and set a consistent standard for practicing responsible AI procurement and use. This resource ensures that AI truly enhances services, protects the public interest, and builds public trust in AI-integrated state and local government services.

Challenge and Opportunity

State, local, tribal, and territorial (SLTT) governments provide services that are critical to the welfare of our society, including housing, child support, healthcare, credit lending, and education. SLTT governments are increasingly interested in using AI to assist with providing these services. However, they face immense challenges in responsibly procuring and using new AI technologies. While grappling with limited technical expertise and budget constraints, SLTT government agencies considering or deploying AI must navigate data privacy concerns, anticipate and mitigate biased model outputs, ensure model outputs are interpretable to workers, and comply with sector-specific regulatory requirements, among other responsibilities.

The emergence of foundation models (large AI systems adaptable to many different tasks) for public sector use exacerbates these existing challenges. Technology companies are now rapidly developing new generative AI services tailored towards public sector organizations. For example, earlier this year, Microsoft announced that Azure OpenAI Service would be newly added to Azure Government—a set of AI services that target government customers. These types of services are not specifically created for public sector applications and use contexts, but instead are meant to serve as a foundation for developing specific applications.

For SLTT government agencies, these generative AI services blur the line between procurement and development: Beyond procuring specific AI services, we anticipate that agencies will increasingly be tasked with the responsible use of general AI services to develop specific AI applications. Moreover, recent AI regulations suggest that responsibility and liability for the use and impacts of procured AI technologies will be shared by the public sector agency that deploys them, rather than just resting with the vendor supplying them.

Given these shifts across products, practice, and policy, SLTT agencies must be well equipped with responsible procurement practices and accountability mechanisms. Federal agencies have started to provide guidelines for responsible AI procurement (e.g., Executive Order 13960, OMB-M-21-06, NIST RMF). But research shows that SLTT governments need additional support to apply these resources: Whereas existing federal resources provide high-level, general guidance, SLTT government agencies must navigate a host of challenges that are context-specific (e.g., specific to regional laws, agency practices, etc.). SLTT government agency leaders have voiced a need for individualized support in accounting for these context-specific considerations when navigating procurement decisions.

Today, private companies are promising state and local government agencies that using their AI services can transform the public sector. They describe diverse potential applications, from supporting complex decision-making to automating administrative tasks. However, there is minimal evidence that these new AI technologies can improve the quality and efficiency of public services. There is evidence, on the other hand, that AI in public services can have unintended consequences, and when these technologies go wrong, they often worsen the problems they are aimed at solving, for example by increasing disparities in decision-making when attempting to reduce them.

Challenges to responsible technology procurement follow a historical trend: Government technology has frequently been critiqued for failures in the past decades. Because public services such as healthcare, social work, and credit lending have such high stakes, failures in these areas can have far-reaching consequences. They also entail significant financial costs, with millions of dollars wasted on technologies that ultimately get abandoned. Even when subpar solutions remain in use, agency staff may be forced to work with them for extended periods despite their poor performance.

The new administration is presented with a critical opportunity to redirect these trends. Training each relevant individual within SLTT government agencies, or hiring new experts within each agency, is not cost- or resource-effective. Without appropriate training and support from the federal government, AI adoption is likely to be concentrated in well-resourced SLTT agencies, leaving others with fewer resources (and potentially more low-income communities) behind. This could lead to disparate AI adoption and practices among SLTT agencies, further exacerbating existing inequalities. The administration urgently needs a plan that supports SLTT agencies in learning how to handle responsible AI procurement and use, and in developing sustainable knowledge about how to navigate these processes over time, without requiring that each relevant individual in the public sector be trained. This plan also needs to ensure that, over time, the public sector workforce is transformed in its ability to navigate complicated AI procurement processes and relationships, without requiring constant retraining of new waves of workers.

In the context of federal and SLTT governments, a federal center of excellence for state and local AI procurement would accomplish these goals through a “hub and spoke” model. This center of excellence would serve as the “hub” that houses a small number of selected experts from academia, non-profit organizations, and government. These experts would then train “spokes”—existing state and local public sector agency workers—in navigating responsible procurement practices. To support public sector agencies in learning from each others’ practices and challenges, this federal center of excellence could additionally create communication channels for information- and resource-sharing across the state and local agencies.

Procured AI technologies in government will serve as the backbone of local public services for decades to come. Upskilling government agencies to make smart decisions about which AI technologies to procure (and which are best avoided) would not only protect the public from harmful AI systems but would also save the government money by decreasing the likelihood of adopting expensive AI technologies that end up getting dropped.

Plan of Action

A federal center of excellence for state and local AI procurement would ensure that procured AI technologies are responsibly selected and used to serve as a strong and reliable backbone for public sector services. This federal center of excellence can support both intra-agency and inter-agency capacity-building and learning about AI procurement and use—that is, mechanisms to support expertise development within a given public sector agency and between multiple public sector agencies. This federal center of excellence would not be deliberative (i.e., SLTT governments would receive guidance and support but would not have to seek approval on their practices). Rather, the goal would be to upskill SLTT agencies so they are better equipped to navigate their own AI procurement and use endeavors.

To upskill SLTT agencies through inter-agency capacity-building, the federal center of excellence would house experts in relevant domain areas (e.g., responsible AI, public interest technology, and related topics). Fellows would work with cohorts of public sector agencies to provide training and consultation services. These fellows, who would come from government, academia, and civil society, would build on their existing expertise and experiences with responsible AI procurement, integrating new considerations proposed by federal standards for responsible AI (e.g., Executive Order 13960, OMB-M-21-06, NIST RMF). The fellows would serve as advisors to help operationalize these guidelines into practical steps and strategies, helping to set a consistent bar for responsible AI procurement and use practices along the way.

Cohorts of SLTT government agency workers, including existing agency leaders, data officers, and procurement experts, would work together with an assigned advisor to receive consultation and training support on specific tasks that their agency is currently facing. For example, for agencies or programs with low AI maturity or familiarity (e.g., departments that are beginning to explore the adoption of new AI tools), the center of excellence can help navigate the procurement decision-making process, help them understand their agency-specific technology needs, draft procurement contracts, select amongst proposals, and negotiate plans for maintenance. For agencies and programs with high AI maturity or familiarity, the advisor can train program staff on unexpected AI behaviors and mitigation strategies when these arise. These communication pathways would allow federal agencies to better understand the challenges state and local governments face in AI procurement and maintenance, which can help seed ideas for improving existing resources and creating new resources for AI procurement support.

To scaffold capacity-building across agencies, the center of excellence can build the foundations for cross-agency knowledge-sharing. In particular, it would include a communication platform and an online hub of procurement resources, both shared amongst agencies. The communication platform would allow state and local government agency leaders who are navigating AI procurement to share challenges, lessons learned, and tacit knowledge to support each other. Resources for the online hub can be collected by the center of excellence and SLTT government agencies. Through the online hub, agencies can upload and learn about new responsible AI resources and toolkits (e.g., those created by government and the research community), as well as examples of procurement contracts that agencies themselves used.

To implement this vision, the new administration should expand the U.S. General Services Administration’s (GSA) existing Artificial Intelligence Center of Excellence (AI CoE), which provides resources and infrastructural support for AI adoption across the federal government. We propose expanding this existing AI CoE to include the components of our proposed center of excellence for state and local AI procurement and use. This would direct support towards SLTT government agencies—which are currently unaccounted for in the existing AI CoE—specifically via our proposed capacity-building model.

Over the next 12 months, the goals of expanding the AI CoE would be three-fold:

1. Develop the core components of our proposed center of excellence within the AI CoE.

- Recruit a core set of fellows with expertise in responsible AI, public interest technology, and related topics from government, academia, and civil society for a 1-2 year placement;

- Develop a centralized onboarding and training program for the fellows to set standards for responsible AI procurement and use guidelines and goals;

- Create a research strategy to streamline documentation of SLTT agencies’ on-the-ground practices and challenges for procuring new AI technologies, which could help prepare future fellows.

2. Launch collaborations for the first sample of SLTT government agencies. Focus on building a path for successful collaborations:

- Identify a small set of state and local government agencies who desire federal support in navigating AI procurement and use (e.g., deciding which AI use cases to adopt, how to effectively evaluate AI deployments through time, what organizational policies to create to help govern AI use);

- Ensure there is a clear communication pathway between the agency and their assigned fellow;

- Have each fellow and agency pair create a customized plan of action to ensure the agency is upskilled in their ability to independently navigate AI procurement and use with time.

3. Build a path for our proposed center of excellence to grow and gain experience. If the first few collaborations receive strong reviews, design a scaling strategy that will:

- Incorporate the center of excellence’s core budget into future budget planning;

- Identify additional fellows for the program;

- Roll out the program to additional state and local government agencies.

Conclusion

Expanding the existing AI CoE to include our proposed federal center of excellence for AI procurement and use can help ensure that SLTT governments are equipped to make informed, responsible decisions about integrating AI technologies into public services. This body would provide necessary guidance and training, helping to bridge the gap between high-level federal resources and the context-specific needs of SLTT agencies. By fostering both intra-agency and inter-agency capacity-building for responsible AI procurement and use, this approach builds sustainable expertise, promotes equitable AI adoption, and protects the public interest. This ensures that AI enhances, rather than harms, the efficiency and quality of public services. As new waves of AI technologies continue to enter the public sector, touching a breadth of services critical to the welfare of the American people, this center of excellence will help maintain high standards for responsible public sector AI for decades to come.

This action-ready policy memo is part of Day One 2025 — our effort to bring forward bold policy ideas, grounded in science and evidence, that can tackle the country’s biggest challenges and bring us closer to the prosperous, equitable and safe future that we all hope for whoever takes office in 2025 and beyond.


Federal agencies have published numerous resources to support responsible AI procurement, including Executive Order 13960, OMB-M-21-06, and the NIST RMF. Some of these resources provide guidance on responsible AI development in organizations broadly, across the public, private, and non-profit sectors. For example, the NIST RMF provides organizations with guidelines to identify, assess, and manage risks in AI systems to promote the deployment of more trustworthy and fair AI systems. Others focus on public sector AI applications. For instance, the memorandum published by the Office of Management and Budget describes strategies for federal agencies to follow responsible AI procurement and use practices.

Research describes how these resources often require additional skills and knowledge, which makes it challenging for agencies to use them effectively on their own. A federal center of excellence for state and local AI procurement could help agencies learn to use these resources. Adapting these guidelines to specific SLTT agency contexts requires careful interpretation, which may in turn require specialized expertise or resources. The creation of this federal center of excellence to guide responsible SLTT procurement on the ground can help bridge this critical gap. Fellows in the center of excellence and SLTT procurement agencies can draw on this existing pool of guidance to establish a strong theoretical foundation for their practices.

The hub and spoke model has been used across a range of applications to support efficient management of resources and services. For instance, in healthcare, providers have used the hub and spoke model to organize their network of services; specialized, intensive services would be located in “hub” healthcare establishments whereas secondary services would be provided in “spoke” establishments, allowing for more efficient and accessible healthcare services. Similar organizational networks have been followed in transportation, retail, and cybersecurity. Microsoft follows a hub and spoke model to govern responsible AI practices and disseminate relevant resources. Microsoft has a single centralized “hub” within the company that houses responsible AI experts—those with expertise on the implementation of the company’s responsible AI goals. These responsible AI experts then train “spokes”—workers residing in product and sales teams across the company, who learn about best practices and support their team in implementing them.

During the training, experts would build a stronger understanding of (1) on-the-ground challenges and practices that public sector agencies grapple with when developing, procuring, and using AI technologies and (2) existing AI procurement and use guidelines provided by federal agencies. The content of the training would be taken from syntheses of prior research on public sector AI procurement and use challenges, as well as existing federal resources available to guide responsible AI development. For example, prior research has explored public sector challenges to supporting algorithmic fairness and accountability and responsible AI design and adoption decisions, amongst other topics.

The experts who would serve as fellows for the federal center of excellence would be individuals with expertise and experience studying the impacts of AI technologies and designing interventions to support more responsible AI development, procurement, and use. Given the interdisciplinary nature of the expertise required for the role, individuals should have an applied, socio-technical background on responsible AI practices, ideally (but not necessarily) for the public sector. The individual would be expected to have the skills needed to share emerging responsible AI practices, strategies, and tacit knowledge with public sector employees developing or procuring AI technologies. This covers a broad range of potential backgrounds.

For example, a professor in academia who studies how to develop public sector AI systems that are more fair and aligned with community needs may be a good fit. A socio-technical researcher in civil society with direct experience studying or developing new tools to support more responsible AI development, who has intuition over which tools and practices may be more or less effective, may also be a good candidate. A data officer in a state government agency who has direct experience procuring and governing AI technologies in their department, with an ability to readily anticipate AI-related challenges other agencies may face, may also be a good fit. The cohort of fellows should include a balanced mix of individuals coming from government, academia, and civil society.

Strengthening Information Integrity with Provenance for AI-Generated Text Using ‘Fuzzy Provenance’ Solutions

Synthetic text generated by artificial intelligence (AI) can pose significant threats to information integrity. When users accept deceptive AI-generated content—such as large-scale false social media posts by malign foreign actors—as factual, national security is put at risk. One way to help mitigate this danger is by giving users a clear understanding of the provenance of the information they encounter online.

Here, provenance refers to any verifiable indication of whether text was generated by a human or by AI, for example by using a watermark. However, given the limitations of watermarking AI-generated text, this memo also introduces the concept of fuzzy provenance, which involves identifying exact text matches that appear elsewhere on the internet. As these matches will not always be available, the descriptor “fuzzy” is used. While this information will not always establish authenticity with certainty, it offers users additional clues about the origins of a piece of text.

To ensure platforms can effectively provide this information to users, the National Institute of Standards and Technology (NIST)’s AI Safety Institute should develop guidance on how to display to users both provenance and fuzzy provenance—where available—within no more than one click. To expand the utility of fuzzy provenance, NIST could also issue guidance on how generative AI companies could allow the records of their free AI models to be crawled and indexed by search engines, thereby making potential matches to AI-generated text easier to discover. Tradeoffs surrounding this approach are explored further in the FAQ section.

By creating a reliable, user-friendly framework for surfacing these details, NIST would empower readers to better discern the trustworthiness of the text they encounter, thereby helping to counteract the risks posed by deceptive AI-generated content.

Challenge and Opportunity

Synthetic Text and Information Integrity

In the past two years, generative AI models have become widely accessible, allowing users to produce customized text simply by providing prompts. As a result, there has been a rapid proliferation of “synthetic” text—AI-generated content—across the internet. As NIST’s Generative Artificial Intelligence Profile notes, this means that there is a “[l]owered barrier of entry to generated text that may not distinguish fact from opinion or fiction or acknowledge uncertainties, or could be leveraged for large scale dis- and mis-information campaigns.”

Information integrity risks stemming from synthetic text—particularly when generated for non-creative purposes—can pose a serious threat to national security. For example, in July 2024 the Justice Department disrupted Russian generative-AI-enabled disinformation bot farms. These Russian bots produced synthetic text, including in the form of social media posts by fake personas, meant to promote messages aligned with the interests of the Russian government.

Provenance Methods For Reducing Information Integrity Risks

NIST has an opportunity to provide community guidance to reduce the information integrity risks posed by all types of synthetic content. The main solution currently being considered by NIST for reducing the risks of synthetic content in general is provenance, which refers to whether a piece of content was generated by AI or a human. As described by NIST, provenance is often ascertained by creating a non-fungible watermark, or a cryptographic signature for a piece of content. The watermark is permanently associated with the piece of content. Where available, provenance information is helpful because knowing the origin of text can help a user know whether to rely on the facts it contains. For example, an AI-generated news report may currently be less trustworthy than a human news report because the former is more prone to fabrications.

However, there are currently no methods widely accepted as effective for determining the provenance of synthetic text. As NIST’s report, Reducing Risks Posed by Synthetic Content, details, “[t]he effectiveness of synthetic text detection is subject to ongoing debate” (Sec. 3.2.2.4). Even if a piece of text is originally AI-generated with a watermark (e.g., by generating words with a unique statistical pattern), people can easily copy a piece of text by paraphrasing (especially via AI), without transferring the original watermark. Text watermarks are also vulnerable to adversarial attacks, with malicious actors able to mimic the watermark signature and make text appear watermarked when it is not.

Plan of Action

To capture the benefits of provenance, while mitigating some of its weaknesses, NIST should issue guidance on how platforms can make available to users both provenance and “fuzzy provenance” of text. Fuzzy provenance is coined here to refer to exact text matches on the internet, which can sometimes reflect provenance but not necessarily (thus “fuzzy”). Optionally, NIST could also consider issuing guidance on how generative AI companies can make their free models’ records available to be crawled and indexed by search engines, so that fuzzy provenance information would show text matches with generative AI model records. There are tradeoffs to this recommendation, which is why it is optional; see FAQs for further discussion. Making both provenance and fuzzy provenance information available (in no more than one click) will give users more information to help them evaluate how trustworthy a piece of text is and reduce information integrity risks.

Combined Provenance and Fuzzy Provenance Approach

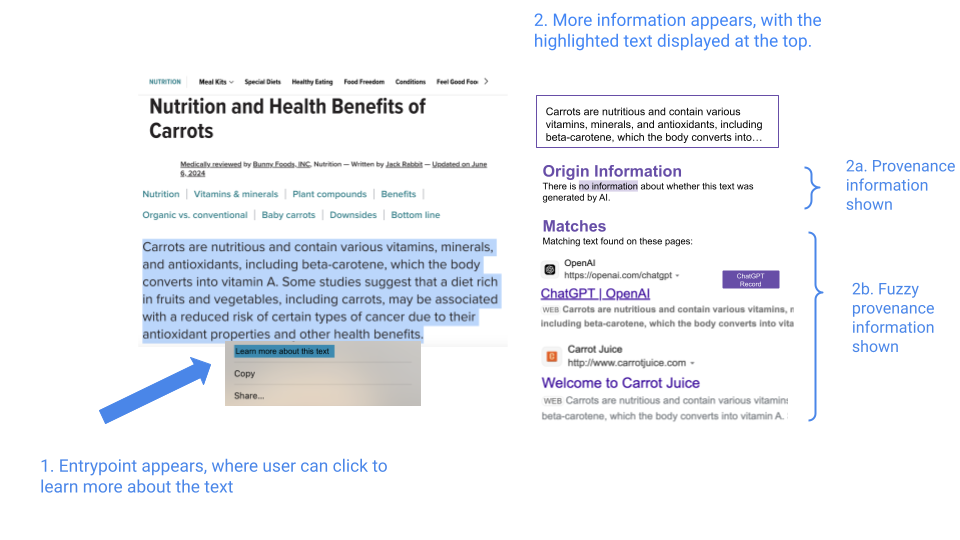

Figure 1. Mock implementation of combined provenance and fuzzy provenance

The above image captures what an implementation of the combined provenance and fuzzy provenance guidance might include. When a user highlights a piece of text that is sufficiently long, they can click “learn more about this text” to find more information.

There are ways to communicate provenance and fuzzy provenance so that they are both useful and easy to understand. In this concept showing the provenance of text, for example:

- Provenance information is shown under the heading “Origin Information”. This includes whether there is conclusive metadata (like watermarks) showing the text was generated by AI, according to C2PA standards, where available.

- Fuzzy provenance information is shown under the heading “Matches,” and includes other websites that have an exact text match to the highlighted text, similar to the results that come up when using a search engine. Furthermore, depending on NIST guidance, generative AI companies could have their free models’ records available to be crawled and indexed by search engines. This would enable these records to appear in the results if they contain an exact text match. These records would be clearly labeled (e.g., “ChatGPT Record”) and ranked at the top.

- See the following video for what this might look like for a user: user journeys.
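The “Matches” behavior described in the list above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not a proposed standard or any platform’s implementation: the in-memory `index` stands in for a search engine’s crawl, and the `Page` type, the `MIN_CHARS` threshold for “sufficiently long” text, the function names, and the example URLs are all hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional

MIN_CHARS = 40  # hypothetical threshold for "sufficiently long" highlighted text


@dataclass
class Page:
    url: str
    text: str
    # Label such as "ChatGPT Record" for crawled AI model records; None for ordinary pages.
    ai_record_label: Optional[str] = None


def normalize(text: str) -> str:
    """Collapse runs of whitespace so line breaks and double spaces do not hide a match."""
    return " ".join(text.split())


def fuzzy_provenance(highlighted: str, index: List[Page]) -> List[Page]:
    """Return pages containing an exact match, with labeled AI model records ranked first."""
    needle = normalize(highlighted)
    if len(needle) < MIN_CHARS:
        return []  # too short to give meaningful matches
    matches = [page for page in index if needle in normalize(page.text)]
    # sorted() is stable: within each group, pages keep their original index order.
    return sorted(matches, key=lambda page: page.ai_record_label is None)


# Hypothetical stand-in for a search index.
index = [
    Page("https://example.org/blog",
         "Some say ginger is 10000x more effective than chemotherapy, but ..."),
    Page("https://example.org/record/1",
         "ginger is 10000x more effective than chemotherapy",
         ai_record_label="ChatGPT Record"),
    Page("https://example.org/news", "Unrelated article about carrots."),
]

results = fuzzy_provenance("ginger is 10000x   more effective than chemotherapy", index)
for page in results:
    print(page.ai_record_label or "Web match", page.url)
```

In this sketch a missing match simply yields an empty list, which mirrors the “fuzzy” character of the approach: the absence of matches is itself a clue for the user rather than a verdict about the text.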

Benefits of the Combined Approach

Showing both provenance and fuzzy provenance information provides users with critical context to evaluate the trustworthiness of a piece of text. Between provenance and fuzzy provenance, users would have access to information about many pieces of high-impact text, especially claims that could be particularly harmful for individuals, groups, or society at large. Making all this information immediately available also reduces friction for users so that they can get this information right where they encounter text.

Provenance information can be helpful to provide to users when it is available. For instance, knowing that a tech support company’s website description was AI-generated may encourage users to check other sources (like reviews) to see if the company is a real entity (and AI was used just to generate the description) or a fake entity entirely, before giving a deposit to hire the company (see user journey 1 in this video for an example).

Where clear provenance information is not available, fuzzy provenance can help fill the gap by providing valuable context to users in several ways:

- First, if there is no exact text match on the internet, it may indicate that the content is original—whether AI-generated or human-created—which can be especially relevant for assessing the trustworthiness of certain documents (see user journey 2 in this video for an example).

- Second, when presented with a misleading claim like “ginger is 10000x more effective than chemotherapy”, seeing that the text matches are from fact-check sites and unreliable sources such as other social media posts can encourage users to further investigate that claim (see user journey 3 in this video for an example).

- Third, absent any text matches from reliable sources, knowing that a claim (e.g., about carrots and cancer) matched an AI model’s record may cause a user to be skeptical, as they may realize that the text has the potential to be false content generated by AI (see user journey 4 in this video for an example).

Fuzzy provenance is also effective because it shows context and gives users autonomy to decide how to interpret that context. Academic studies have found that users tend to be more receptive when presented with further information they can use for their own critical thinking compared to being shown a conclusion directly (like a label), which can even backfire or be misinterpreted. This is why users may trust contextual methods like crowdsourced information more than provenance labels.

Finally, fuzzy provenance methods are generally feasible at scale, since they can be easily implemented with existing search engine capabilities (via an exact text match search). Furthermore, since fuzzy provenance only relies on exact text matching with other sources on the internet, it works without needing coordination among text-producers or compliance from bad actors.
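As a rough illustration of the exact-match lookup described above, the following Python sketch searches a small in-memory list of sources for a highlighted passage. The `Source` type, the `find_matches` function, and the whitespace normalization are assumptions made for this example; a real deployment would query a search engine index rather than scan a list. Listing AI model records first reflects the ranking proposed earlier in this memo.

```python
from dataclasses import dataclass


@dataclass
class Source:
    """Hypothetical record for one indexed page or AI model record."""
    url: str
    text: str
    is_ai_record: bool = False


def normalize(s: str) -> str:
    # Collapse runs of whitespace so line wrapping does not defeat matching.
    return " ".join(s.split())


def find_matches(highlighted: str, sources: list[Source]) -> list[Source]:
    """Return sources containing the highlighted text verbatim."""
    needle = normalize(highlighted)
    if not needle:
        return []
    hits = [s for s in sources if needle in normalize(s.text)]
    # Stable sort: AI model records first, other matches in original order.
    return sorted(hits, key=lambda s: not s.is_ai_record)
```

Because the lookup depends only on the text itself, it needs no cooperation from whoever produced or posted that text.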

Conclusion

To reduce the information integrity risks posed by synthetic text in a scalable and effective way, the National Institute of Standards and Technology (NIST) should develop community guidance on how platforms hosting text-based digital content can make accessible (in no more than one click) the provenance and “fuzzy provenance” of a piece of text, when available. NIST should also consider issuing guidance on how AI companies could make their free generative AI records available to be crawled by search engines, to amplify the effectiveness of “fuzzy provenance”.

This action-ready policy memo is part of Day One 2025 — our effort to bring forward bold policy ideas, grounded in science and evidence, that can tackle the country’s biggest challenges and bring us closer to the prosperous, equitable and safe future that we all hope for whoever takes office in 2025 and beyond.

Making free generative AI records available to be crawled by search engines involves tradeoffs, which is why it is an optional recommendation to consider. Below are some questions about implementation guidance and about tradeoffs, including privacy and proprietary information.

Guidance could instruct AI model companies how to make their free generative AI conversation records available to be crawled and indexed by search engines. Similar to ChatGPT logs or Perplexity threads, a unique URL would be created for each conversation, capturing the date it occurred. The key difference is that all free model conversation records would be made available, but only with the AI outputs of the conversation, after removing personally identifiable information (PII) (see “Privacy considerations” section below). Because users can already choose to share conversations with each other (meaning the conversation logs are retained), and conversation logs for major model providers do not currently appear to have an expiration date, this requirement shouldn’t impose an additional storage burden for AI model companies.

Guidance could instruct search engines how to crawl and index these model logs so that queries with exact text matches to the AI outputs would surface the appropriate model logs. This would not be very different from search engines crawling and indexing other types of new URLs and should be well within existing search engine capabilities. In terms of storage, since only free model logs would be crawled and indexed, and most free models rate-limit the number of user messages allowed, storage should also not be a concern. For instance, even with 200 million weekly active users for ChatGPT, the number of conversations in a year would only be on the order of billions, which is well within the scale at which existing search engines already operate to enable users to “search the web”.
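The scale estimate above can be checked with back-of-the-envelope arithmetic. In the sketch below, the 200 million weekly active users figure comes from the text, while the number of conversations per user per week is purely an assumption (the memo does not state one), so the results only indicate an order of magnitude.

```python
WEEKLY_ACTIVE_USERS = 200_000_000  # figure cited in the text
WEEKS_PER_YEAR = 52


def conversations_per_year(weekly_users: int, per_user_per_week: float) -> float:
    """Rough yearly conversation count under a flat per-user weekly rate."""
    return weekly_users * per_user_per_week * WEEKS_PER_YEAR


# Assumed per-user rates; none of these values comes from the memo.
for rate in (0.5, 1, 5):
    total = conversations_per_year(WEEKLY_ACTIVE_USERS, rate)
    print(f"{rate} conversations/user/week -> {total / 1e9:.1f} billion per year")
```

At one conversation per user per week, the total is 10.4 billion conversations per year; the other assumed rates shift the result by a small multiple in either direction.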

- Output filtering should be applied to the AI outputs to remove any personally identifiable information (PII) present in the model’s responses. However, it might still be possible to infer who the original user was by looking at the AI outputs taken together and reconstructing some of the user prompts. This is a privacy concern that should be further investigated. Some possible mitigations include additionally removing location references below a certain granularity (e.g., removing mentions of neighborhoods but retaining mentions of states) and presenting the AI responses in a conversation in randomized order.

- Removals should be made possible by a user-initiated process demonstrating privacy concerns, similar to existing search engine removal protocols.

- User consent would also be an important consideration here. NIST could propose that free model users must opt in, or that crawling and indexing of free model records be enabled by default with the ability for users to opt out, though either form of consent would reduce coverage and may greatly compromise the reliability of fuzzy provenance.

- Training on AI-generated text: AI companies are concerned about accidentally collecting too much AI-generated text from the web and training on it instead of higher-quality human-generated text, thus degrading the quality of their own generative models. However, because these AI-generated conversation logs would have identifiable domains (e.g., chatgpt.com, perplexity.ai), it would be easy to exclude them during training if desired. Indeed, provenance and fuzzy provenance may help AI companies avoid unintentionally training on AI-generated text.

- Sharing model outputs: On the flip side, AI companies might be concerned that making so many AI-generated outputs available to competitors could help those competitors improve their own models. This is a fair concern, though it is partially mitigated because a) specific user inputs would not be available, only the AI outputs; and b) only free model outputs would be logged, not those of premium models, thus providing some proprietary protection. However, it is still possible that competitors could enhance their own responses by training at scale on the structure of AI outputs from other models.

Tending Tomorrow’s Soil: Investing in Learning Ecosystems

“Tending soil.”

That’s how Fred Rogers described Mister Rogers’ Neighborhood, his beloved television program that aired from 1968 to 2001. Grounded in principles gleaned from top learning scientists, the Neighborhood offered a model for how “learning ecosystems” can work in tandem to tend the soil of learning.

Today, a growing body of evidence suggests that Rogers’ model was not only effective, but that real-life learning ecosystems – networks that include classrooms, living rooms, libraries, museums, and more – may be the most promising approach for preparing learners for tomorrow. As such, cities and regions around the world are constructing thoughtfully designed ecosystems that leverage and connect their communities’ assets, responding to the aptitudes, needs, and dreams of the learners they serve.

Efforts to study and scale these ecosystems at local, state, and federal levels would position the nation’s students as globally competitive, future-ready learners.

The Challenge

For decades, America’s primary tool for “tending soil” has been its public schools, which are (and will continue to be) the country’s best hope for fulfilling its promise of opportunity. At the same time, the nation’s industrial-era soil has shifted. From the way our communities function to the way our economy works, dramatic social and technological upheavals have remade modern society. This incongruity – between the world as it is and the world that schools were designed for – has blunted the effectiveness of education reforms; heaped systemic, society-wide problems on individual teachers; and shortchanged the students who need the most support.

“Public education in the United States is at a crossroads,” notes a report published by the Alliance for Learning Innovation, Education Reimagined, and Transcend: “to ensure future generations’ success in a globally competitive economy, it must move beyond a one-size-fits-all model towards a new paradigm that prioritizes innovation that holds promise to meet the needs, interests, and aspirations of each and every learner.”

What’s needed is the more holistic paradigm epitomized by Mister Rogers’ Neighborhood: a collaborative ecosystem that sparks engaged, motivated learners by providing the tools, resources, and relationships that every young person deserves.

The Opportunity

With components both public and private, virtual and natural, “learning ecosystems” found in communities around the world reflect today’s connected, interdependent society. These ecosystems are not replacements for schools – rather, they embrace and support all that schools can be, while also tending to the vital links between the many places where kids and families learn: parks, libraries, museums, afterschool programs, businesses, and beyond. The best of these ecosystems function as real-life versions of Mister Rogers’ Neighborhood: places where learning happens everywhere, both in and out of school. Where every learner can turn to people and programs that help them become, as Rogers used to say, “the best of whoever you are.”

Nearly every community contains the components of effective learning ecosystems. The partnerships forged within them can – when properly tended – spark and spread high-impact innovations; support collaboration among formal and informal educators; provide opportunities for young people to solve real-world problems; and create pathways to success in a fast-changing modern economy. By studying and investing in the mechanisms that connect these ecosystems, policymakers can build “neighborhoods” of learning that prepare students for citizenship, work, and life.

Plan of Action

Learning ecosystems can be cultivated at every level. Whether local, state, or federal, interested policymakers should:

Establish a commission on learning ecosystems. Tasked with studying learning ecosystems in the U.S. and abroad, the commission would identify best practices and recommend policy that 1) strengthens an area’s existing learning ecosystems and/or 2) nurtures new connections. Launched at the federal, state, or local level and led by someone with a track record of getting things done, the commission should include representatives from various sectors, including early childhood educators, K-12 teachers and administrators, librarians, researchers, CEOs and business leaders, artists, makers, and leaders from philanthropic and community-based organizations. The commission would help identify existing activities, research, and funding for learning ecosystems and would foster coordination and collaboration to maximize the effectiveness of the ecosystem’s resources.

A 2024 report by Knowledge to Power Catalysts notes that these cross-sector commissions are increasingly common at various levels of government, from county councils to city halls. As policymakers establish interagency working groups, departments of children and youth, and networks of human services providers, “such offices at the county or municipal level often play a role in cross-sector collaboratives that engage the nonprofit, faith, philanthropic, and business communities as well.”

Pittsburgh’s Remake Learning ecosystem, for example, is steered by the Remake Learning Council, a blue-ribbon commission of Southwestern Pennsylvania leaders from education, government, business, and the civic sector committed to “working together to support teaching, mentoring, and design – across formal and informal educational settings – that spark creativity in kids, activating them to acquire knowledge and skills necessary for navigating lifelong learning, the workforce, and citizenship.”

Establish a competitive grant program to support pilot projects. These grants could seed new ecosystems and/or support innovation among proven ecosystems. (Several promising ecosystems are operating throughout the country already; however, many are excluded from funding opportunities by narrowly focused RFPs.) This grant program can be administered by the commission to catalyze and strengthen learning ecosystems at the federal, state, or local levels. Such a program could be modeled after:

- The National Science Foundation’s efforts to nurture “an effective and inclusive STEM education ecosystem that prepares PreK-12 students for STEM careers, fosters entrepreneurship, and provides all people, particularly those from under-served and underrepresented populations, with access to excellent STEM education throughout their lifetimes.”

- The Pennsylvania Department of Education’s PAsmart program, a $30 million initiative designed to enhance the state’s education and workforce development efforts. PAsmart’s “Advancing Grants” of up to $500,000 each support cross-sector ecosystems that include educators from different districts and agencies. The South Fayette Township School District near Pittsburgh received an Advancing Grant to expand computer science not only for its own learners, but also for those in seven neighboring districts across four counties. Representing a microcosm of Pennsylvania, educators from these districts work side-by-side to identify crucial skills and design new ways to teach them, enhancing their collective impact.

- Remake Learning’s Moonshot grants, which award funds that encourage people to take risks, try new things, and explore the limits of what’s possible. These grants have been leveraged to strengthen ecosystem connections in cities and regions around the world.

Host a summit on learning ecosystems. Leveraging the gravitas of a government and/or civic institution such as the White House, a governor’s mansion, or a city hall, bring members of the commission together with learning ecosystem leaders and practitioners, along with cross-sector community leaders. A summit would underscore promising practices, share lessons learned, and highlight monetary and in-kind commitments to support ecosystems. The summit could apply to learning ecosystems the philanthropic commitments model that previous presidential administrations developed and used to secure private and philanthropic support. Visit remakelearning.org/forge to see an example of one summit’s schedule, activities, and grantmaking opportunities.