A Safe Harbor for AI Researchers: Promoting Safety and Trustworthiness Through Good-Faith Research

Artificial intelligence (AI) companies disincentivize safety research by implicitly threatening to ban independent researchers who demonstrate safety flaws in their systems. While Congress encourages companies to provide bug bounties and protections for security research, this is not yet the case for AI safety research. Without independent research, we do not know if the AI systems that are being deployed today are safe or if they pose widespread risks that have yet to be discovered, including risks to U.S. national security. While companies conduct adversarial testing in advance of deploying generative AI models, they fail to adequately test their models after they are deployed as part of an evolving product or service. Therefore, Congress should promote the safety and trustworthiness of AI systems by establishing bug bounties for AI safety via the Chief Digital and Artificial Intelligence Office and creating a safe harbor for research on generative AI platforms as part of the Platform Accountability and Transparency Act.

Challenge and Opportunity

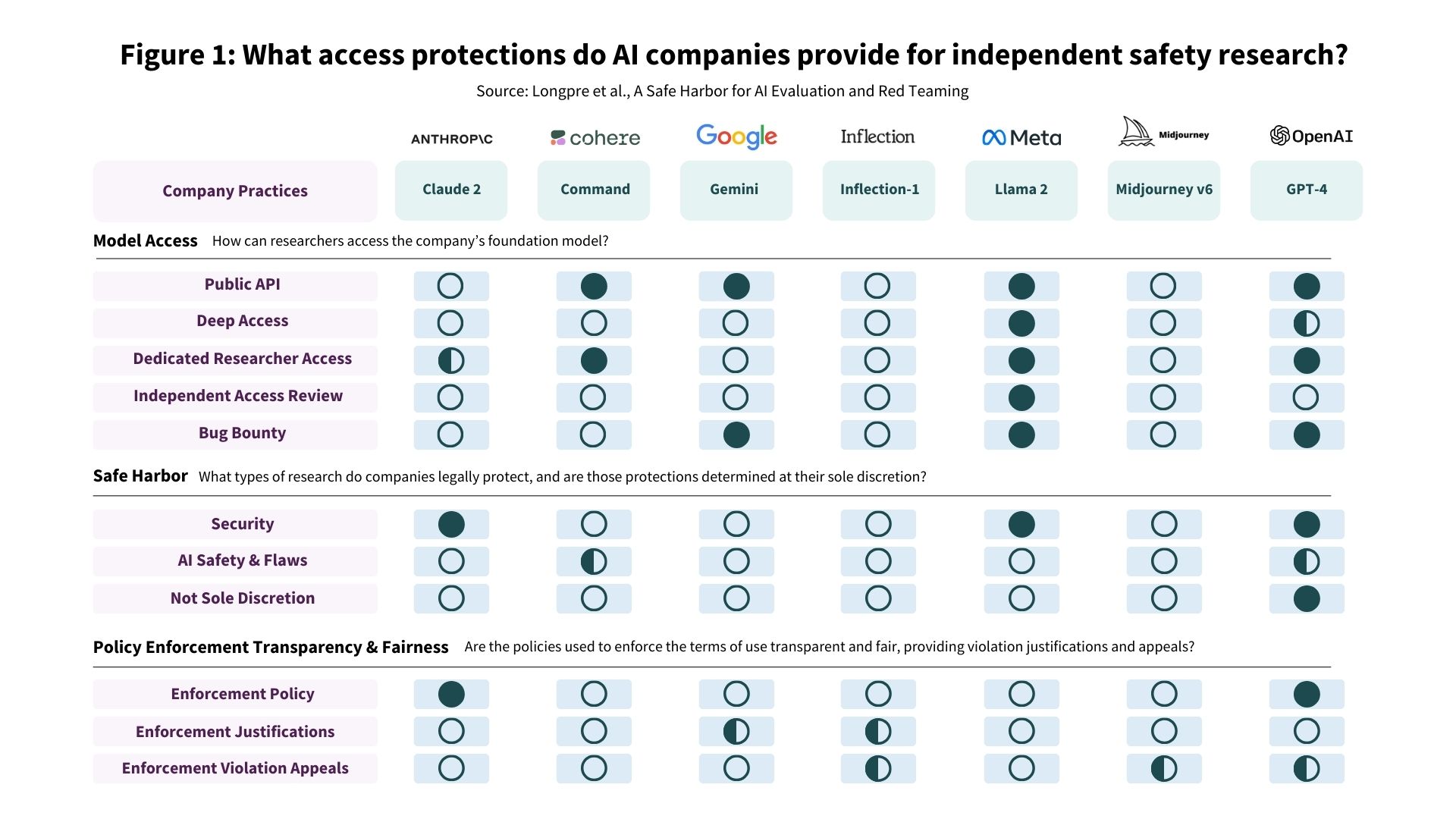

In July 2023, the world’s top AI companies signed voluntary commitments at the White House, pledging to “incent third-party discovery and reporting of issues and vulnerabilities.” Almost a year later, few of the signatories have lived up to this commitment. While some companies do reward researchers for finding security flaws in their AI systems, few companies strongly encourage research on safety or provide concrete protections for good-faith research practices. Instead, leading generative AI companies’ Terms of Service legally prohibit safety and trustworthiness research, in effect threatening anyone who conducts such research with bans from their platforms or even legal action.

In March 2024, over 350 leading AI researchers and advocates signed an open letter calling for “a safe harbor for independent AI evaluation.” The researchers noted that generative AI companies offer no legal protections for independent safety researchers, even though this research is critical to identifying safety issues in AI models and systems. The letter stated: “whereas security research on traditional software has established voluntary protections from companies (‘safe harbors’), clear norms from vulnerability disclosure policies, and legal protections from the DOJ, trustworthiness and safety research on AI systems has few such protections.”

In the months since the letter was released, companies have continued to be opaque about key aspects of their most powerful AI systems, such as the data used to build their models. If a researcher wants to test whether AI systems like ChatGPT, Claude, or Gemini can be jailbroken such that they pose a threat to U.S. national security, they are not allowed to do so as companies proscribe such research. Developers of generative AI models tout the safety of their systems based on internal red-teaming, but there is no way for the federal government or independent researchers to validate these results, as companies do not release reproducible evaluations.

Generative AI companies also impose barriers on their platforms that limit good-faith research. Unlike much of the web, the content on generative AI platforms is not publicly available, meaning that users need accounts to access AI-generated content and these accounts can be restricted by the company that owns the platform. In addition, companies like Google, Amazon, Microsoft, and OpenAI block certain requests that users might make of their AI models and limit the functionality of their models to prevent researchers from unearthing issues related to safety or trustworthiness.

Similar issues plague social media, as companies take steps to prevent researchers and journalists from conducting investigations on their platforms. Social media researchers face liability under the Computer Fraud and Abuse Act and Section 1201 of the Digital Millennium Copyright Act among other laws, which has had a chilling effect on such research and worsened the spread of misinformation online. The stakes are even higher for AI, which has the potential not only to turbocharge misinformation but also to provide U.S. adversaries like China and Russia with material strategic advantages. While legislation like the Platform Accountability and Transparency Act would enable research on recommendation algorithms, proposals that grant researchers access to platform data do not consider generative AI platforms to be in scope.

Congress can safeguard U.S. national security by promoting independent AI safety research. Conducting pre-deployment risk assessments is insufficient in a world where tens of millions of Americans are using generative AI—we need real-time assessments of the risks posed by AI systems after they are deployed as well. Big Tech should not be taken at its word when it says that its AI systems cannot be used by malicious actors to generate malware or spy on Americans. The best way to ensure the safety of generative AI systems is to empower the thousands of cutting-edge researchers at U.S. universities who are eager to stress test these systems. Especially for general-purpose technologies, small corporate safety teams are not sufficient to evaluate the full range of potential risks, whereas the independent research community can do so thoroughly.

Figure 1. What access protections do AI companies provide for independent safety research? Source: Longpre et al., “A Safe Harbor for AI Evaluation and Red Teaming.”

Plan of Action

Congress should enable independent AI safety and trustworthiness researchers by adopting two new policies. First, Congress should incentivize AI safety research by creating algorithmic bug bounties for this kind of work. AI companies often do not incentivize research that could reveal safety flaws in their systems, even though the government will be a major client for these systems. Even small incentives can go a long way, as there are thousands of AI researchers capable of demonstrating such flaws. A bug bounty program would also entail establishing mechanisms through which safety flaws or vulnerabilities in AI models can be disclosed, in effect a kind of help line for AI systems.

Second, Congress should require AI platform companies, such as Google, Amazon, Microsoft, and OpenAI, to share data with researchers regarding their AI systems. As with social media platforms, generative AI platforms mediate the behavior of millions of people through the algorithms they produce and the decisions they enable. Companies that operate application programming interfaces used by tens of thousands of enterprises should share basic information about their platforms with researchers to facilitate external oversight of these consequential technologies.

Taken together, vulnerability disclosure incentivized through algorithmic bug bounties and protections for researchers enabled by safe harbors would substantially improve the safety and trustworthiness of generative AI systems. Congress should prioritize mitigating the risks of generative AI systems and protecting the researchers who expose them.

Recommendation 1. Establish algorithmic bug bounties for AI safety.

As part of the FY2024 National Defense Authorization Act (NDAA), Congress established “Artificial Intelligence Bug Bounty Programs” requiring that within 180 days “the Chief Digital and Artificial Intelligence Officer of the Department of Defense shall develop a bug bounty program for foundational artificial intelligence models being integrated into the missions and operations of the Department of Defense.” However, these bug bounties extend only to security vulnerabilities. In the FY2025 NDAA, this bug bounty program should be expanded to include AI safety. See below for draft legislative language to this effect.

Recommendation 2. Create legal protections for AI researchers.

Section 9 of the proposed Platform Accountability and Transparency Act (PATA) would establish a “safe harbor for research on social media platforms.” This likely excludes major generative AI platforms such as Google Cloud, Amazon Web Services, Microsoft Azure, and OpenAI’s API, meaning that researchers have no legal protections when conducting safety research on generative AI models via these platforms. PATA and other legislative proposals related to AI should incorporate a safe harbor for research on generative AI platforms.

Conclusion

The need for independent AI evaluation has garnered significant support from academics, journalists, and civil society. Safe harbor for AI safety and trustworthiness researchers is a minimum fundamental protection against the risks posed by generative AI systems, including risks to national security. Congress has an important opportunity to act before it’s too late.

This idea is part of our AI Legislation Policy Sprint. To see all of the policy ideas spanning innovation, education, healthcare, and trust, safety, and privacy, head to our sprint landing page.

The authors of this memorandum as well as the academic paper underlying it submitted a comment to the Copyright Office in support of an exemption to DMCA for AI safety and trustworthiness research. The Computer Crime and Intellectual Property Section of the U.S. Department of Justice’s Criminal Division and Senator Mark Warner have also endorsed such an exemption. However, a DMCA exemption regarding research on AI bias, trustworthiness, and safety alone would not be sufficient to assuage the concerns of AI researchers, as they may still face liability under other statutes such as the Computer Fraud and Abuse Act.

Much of this research is currently conducted by research labs with direct connections to the AI companies they are assessing. Researchers who are less well connected, of which there are thousands, may be unwilling to take the legal or personal risk of violating companies’ Terms of Service. See our academic paper on this topic for further details on this and other questions.

See draft legislative language below, building on Sec. 1542 of the FY2024 NDAA:

SEC. X. EXPANSION OF ARTIFICIAL INTELLIGENCE BUG BOUNTY PROGRAMS.

(a) Update to Program for Foundational Artificial Intelligence Products Being Integrated Within Department of Defense.—

(1) Development required.—Not later than 180 days after the date of the enactment of this Act and subject to the availability of appropriations, the Chief Digital and Artificial Intelligence Officer of the Department of Defense shall expand its bug bounty program for foundational artificial intelligence models being integrated into the missions and operations of the Department of Defense to include unsafe model behaviors in addition to security vulnerabilities.

(2) Collaboration.—In expanding the program under paragraph (1), the Chief Digital and Artificial Intelligence Officer may collaborate with the heads of other Federal departments and agencies with expertise in cybersecurity and artificial intelligence.

(3) Implementation authorized.—The Chief Digital and Artificial Intelligence Officer may carry out the program in subsection (a).

(4) Contracts.—The Secretary of Defense shall ensure, as may be appropriate, that whenever the Secretary enters into any contract, such contract allows for participation in the bug bounty program under paragraph (1).

(5) Rule of construction.—Nothing in this subsection shall be construed to require—

(A) the use of any foundational artificial intelligence model; or

(B) the implementation of the program developed under paragraph (1) for the purpose of the integration of a foundational artificial intelligence model into the missions or operations of the Department of Defense.
