Establish Data-Sharing Standards for the Development of AI Models in Healthcare

The National Institute of Standards and Technology (NIST) should lead an interagency coalition to produce standards that enable third-party research and development on healthcare data. These standards, governing data anonymization, sharing, and use, have the potential to dramatically expedite development and adoption of medical AI technologies across the healthcare sector.

Challenge and Opportunity

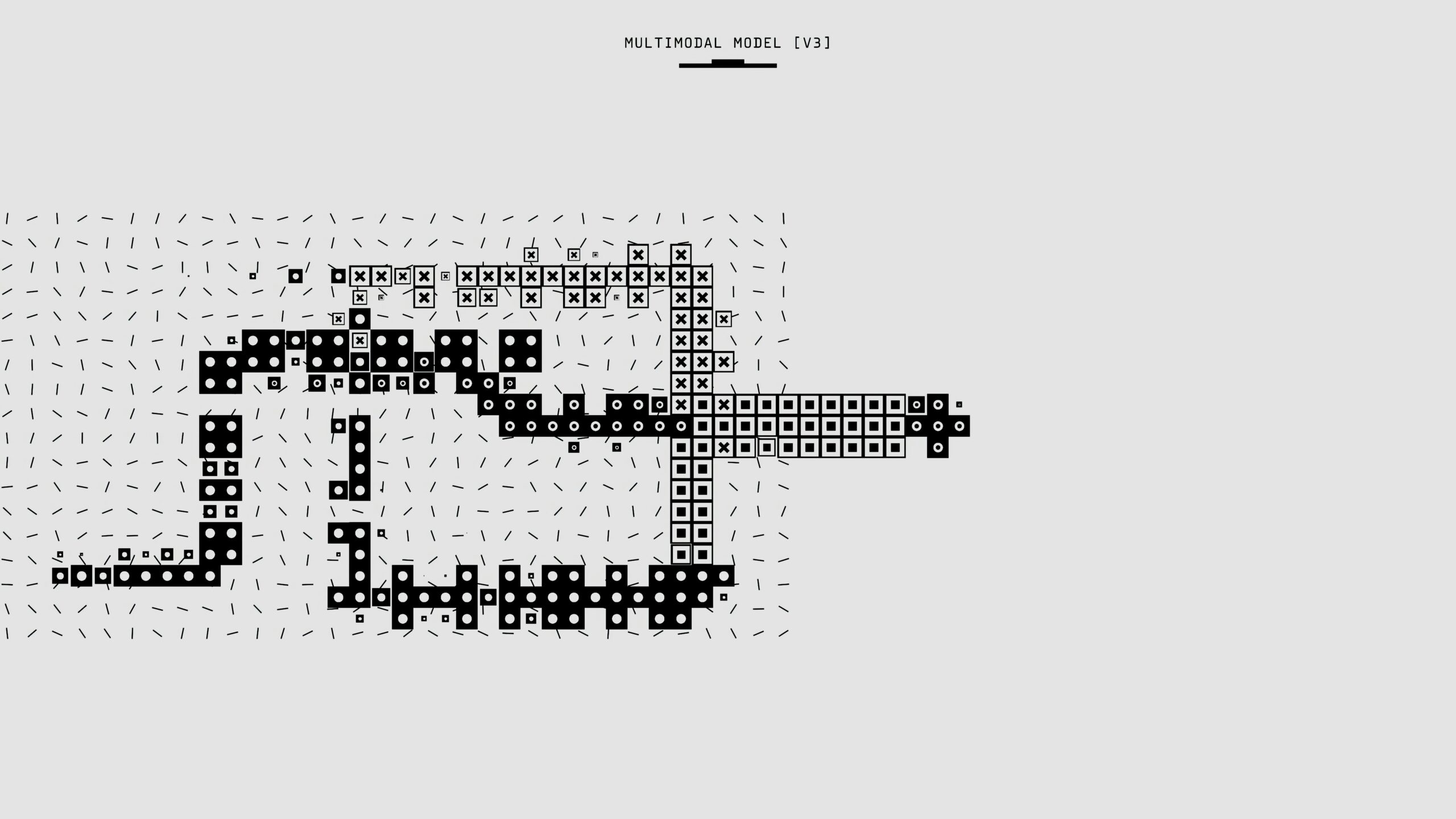

The rise of large language models (LLMs) has demonstrated the predictive power and nuanced understanding that come from large datasets. Recent work in multimodal learning and natural language understanding has made complex problems, such as predicting patient treatment pathways from unstructured health records, feasible. A Harvard study estimated that wider adoption of AI automation would reduce U.S. healthcare spending by $200 billion to $360 billion annually and reduce spending by public payers, such as Medicare, Medicaid, and the VA, by five to seven percent, across both administrative and medical costs.

However, the practice of healthcare, while information-rich, is incredibly data-poor. There is not nearly enough usable medical data for large-scale learning, particularly when focusing on the continuum of care. We generate terabytes of medical data daily, but this data is fragmented and hidden, held captive by a lack of interoperability.

Currently, privacy concerns and legacy data infrastructure create significant friction for researchers working to develop medical AI. Each research project must build custom infrastructure to access data from each and every healthcare system. Even absent infrastructural issues, hospitals and health systems face liability risks when they share data, because there are no clear guidelines for deidentifying data sufficiently to enable safe use by third parties.

There is an urgent need for federal action to unlock data for AI development in healthcare. AI models trained on larger and more diverse datasets improve substantially in accuracy, safety, and generalizability. These tools can transform medical diagnosis, treatment planning, drug development, and health systems management.

New NIST standards governing the anonymization, secure transfer, and approved use of healthcare data could spur collaboration. AI companies, startups, academics, and others could responsibly access large datasets to train more advanced models.

Other nations are already creating such data-sharing frameworks, and the United States risks falling behind. The United Kingdom has facilitated a significant volume of public-private collaborations through its establishment of Trusted Research Environments. Australia has a similar offering in its SURE (Secure Unified Research Environment). Finland has the Finnish Social and Health Data Permit Authority (Findata), which houses and grants access to a centralized repository of health data. But the United States lacks a single federally sponsored protocol and research sandbox. Instead, we have a hodgepodge of offerings, ranging from the federal National COVID Cohort Collaborative Data Enclave to private initiatives like the ENACT Network.

Without federal guidance, many providers will remain reluctant to participate or will provide data in haphazard ways. Researchers and AI companies will lack the data required to push boundaries. By defining clear technical and governance standards for third-party data sharing, NIST, in collaboration with other government agencies, can drive transformative impact in healthcare.

Plan of Action

The effort to establish this set of guidelines will be structurally similar to previous NIST standard-setting projects, such as its Cryptographic Standards and Biometric Standards programs. Using those programs as examples, we expect the effort to require around 24 months and $5 million in funding.

Assemble a Task Force

This standards initiative could be established under NIST’s Information Technology Laboratory, which has expertise in creating data standards. However, to gather domain knowledge, partnerships with agencies such as the Office of the National Coordinator for Health Information Technology (ONCHIT), the Department of Health and Human Services (HHS), the National Institutes of Health (NIH), the Centers for Medicare & Medicaid Services (CMS), and the Agency for Healthcare Research and Quality (AHRQ) would be invaluable.

Draft the Standards

Data sharing would require standards at three levels:

- Syntactic: How exactly shall data be shared? In what file types, over which endpoints, at what frequency?

- Semantic: What format shall the data be in? What do the names and categories within the data schema represent?

- Governance: What data will and won’t be shared? Who will use the data, and in what ways may this data be used?

Syntactic standards already exist, such as HL7 FHIR. Semantic formats exist as well, such as the Observational Medical Outcomes Partnership (OMOP) Common Data Model. We propose to develop the final class of standards, governing fair, privacy-preserving, and effective use.
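To make the difference between the syntactic and semantic layers concrete, here is a minimal Python sketch that maps a FHIR Patient resource (syntactic layer: a JSON payload with FHIR field names) onto a simplified row of the OMOP Common Data Model `person` table (semantic layer: fixed columns and shared concept IDs). It is a sketch under stated assumptions, not a conformant converter: it handles only gender and birth date, it assumes the OMOP standard gender concept IDs 8507 (male) and 8532 (female), and the function name `fhir_patient_to_omop_person` is hypothetical.

```python
import json

# Assumed OMOP standard concept IDs for gender; 0 is OMOP's "no matching concept".
GENDER_CONCEPTS = {"male": 8507, "female": 8532}


def fhir_patient_to_omop_person(resource_json: str, person_id: int) -> dict:
    """Map a minimal FHIR Patient resource onto a simplified OMOP `person` row.

    Syntactic layer: the JSON payload and its FHIR field names.
    Semantic layer: OMOP's fixed columns and concept IDs.
    """
    resource = json.loads(resource_json)
    if resource.get("resourceType") != "Patient":
        raise ValueError("expected a FHIR Patient resource")
    # FHIR birthDate may be partial ("1974", "1974-12") or full ("1974-12-25").
    parts = [int(p) for p in resource["birthDate"].split("-")]
    return {
        "person_id": person_id,  # a pseudonymous ID supplied by the caller, not the source id
        "gender_concept_id": GENDER_CONCEPTS.get(resource.get("gender"), 0),
        "year_of_birth": parts[0],
        "month_of_birth": parts[1] if len(parts) > 1 else None,
        "day_of_birth": parts[2] if len(parts) > 2 else None,
    }


patient = '{"resourceType": "Patient", "id": "example", "gender": "female", "birthDate": "1974-12-25"}'
print(fhir_patient_to_omop_person(patient, person_id=1))
```

A real mapping would also cover race, ethnicity, and location and would look codes up in the OMOP vocabulary tables rather than a hard-coded dictionary. The point is only that once a syntactic standard has delivered a field, a semantic standard fixes what that field means.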

The governance standards could cover:

- Data Anonymization
  - Define technical specifications for deidentification and anonymization of patient data.
  - Specify data transformations, differential privacy techniques, and acceptable models and algorithms.
  - Augment existing Health Insurance Portability and Accountability Act (HIPAA) standards by defining what qualifies someone as an “expert” under HIPAA’s Expert Determination method, giving data holders a clearer path to compliance alongside the existing Safe Harbor method.
  - Develop risk quantification methods to measure the downstream impact of anonymization procedures.
  - Provide guidelines on managing data with different sensitivity levels (e.g., demographics vs. diagnoses).
- Secure Data Transfer Protocols
  - Standardize secure transfer mechanisms for anonymized data between providers, data facilities, and approved third parties.
  - Specify storage, encryption, access control, auditing, and monitoring requirements.
- Approved Usage
  - Establish policies governing authorized uses of anonymized data by third parties (e.g., noncommercial research, model validation, or specific types of AI development for healthcare applications). These policies could draw on the Five Safes framework used by the United Kingdom, Canada, Australia, and New Zealand.
  - Develop an accreditation program for data recipients and facilities to enable accountability.
- Public-Private Coordination
  - Define roles for collaboration among governmental, academic, and private actors.
  - Determine risks and responsibilities for each actor to delineate clear accountability and foster trust.
  - Create an oversight mechanism to ensure compliance and address disputes, fostering an ecosystem of responsible data use.
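The anonymization items above can be illustrated with a minimal Python sketch of three techniques a governance standard might specify: Safe Harbor-style generalization (drop direct identifiers, truncate ZIP codes to three digits, keep only the birth year, and group ages over 89 as “90+”), a k-anonymity score as one simple risk quantification measure, and the Laplace mechanism from differential privacy for releasing a noisy count. Everything here is assumed for illustration and is not a compliance tool: the field names, the made-up records, the `DIRECT_IDENTIFIERS` subset (HIPAA Safe Harbor lists 18 identifier types), and the hypothetical `restricted_zip3` set, which in practice would come from census data on three-digit ZIP areas with 20,000 or fewer residents.

```python
import random
from collections import Counter

# Illustrative subset only; HIPAA Safe Harbor enumerates 18 identifier types.
DIRECT_IDENTIFIERS = {"name", "mrn", "phone", "email", "ssn"}


def generalize(record: dict, restricted_zip3: set) -> dict:
    """Apply Safe Harbor-style transformations to one record (a sketch, not a compliance tool)."""
    out = {k: v for k, v in record.items()
           if k not in DIRECT_IDENTIFIERS and k != "birth_date"}
    zip3 = record["zip"][:3]
    # Hypothetical restricted_zip3: 3-digit prefixes covering <= 20,000 people become "000".
    out["zip"] = "000" if zip3 in restricted_zip3 else zip3
    if record["age"] > 89:
        # Ages over 89, and birth years that would reveal them, collapse into one category.
        out["age"] = "90+"
        out["birth_year"] = None
    else:
        out["birth_year"] = record["birth_date"][:4]  # keep the year, drop month and day
    return out


def k_anonymity(records: list, quasi_identifiers: list) -> int:
    """Size of the smallest group of records sharing the same quasi-identifier values."""
    groups = Counter(tuple(r[q] for q in quasi_identifiers) for r in records)
    return min(groups.values())


def noisy_count(true_count: int, epsilon: float, rng: random.Random) -> float:
    """Laplace mechanism for a counting query (sensitivity 1).

    The difference of two Exponential(rate=epsilon) draws is Laplace(0, 1/epsilon).
    """
    return true_count + rng.expovariate(epsilon) - rng.expovariate(epsilon)


# Made-up example records for illustration only.
records = [
    {"name": "A", "mrn": "1", "zip": "02139", "birth_date": "1950-03-01", "age": 74, "dx": "E11"},
    {"name": "B", "mrn": "2", "zip": "02138", "birth_date": "1950-07-15", "age": 74, "dx": "I10"},
    {"name": "C", "mrn": "3", "zip": "10001", "birth_date": "1931-01-02", "age": 93, "dx": "I10"},
    {"name": "D", "mrn": "4", "zip": "10003", "birth_date": "1932-05-05", "age": 92, "dx": "E11"},
]
deidentified = [generalize(r, restricted_zip3=set()) for r in records]
print(deidentified)
print("k =", k_anonymity(deidentified, ["zip", "birth_year", "age"]))
count_e11 = sum(r["dx"] == "E11" for r in deidentified)
print("noisy E11 count:", noisy_count(count_e11, epsilon=1.0, rng=random.Random(42)))
```

With these four made-up records, every combination of ZIP prefix, birth year, and age appears twice, so k is 2. Smaller values of epsilon add more noise and give stronger privacy; choosing acceptable epsilon and k thresholds is exactly the kind of parameter a governance standard would need to pin down.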

Revise with Public Comment

After releasing the first draft of the standards, the task force should seek input from stakeholders and the public. The following groups are especially likely to offer constructive input:

- Provider groups and health systems

- Technology companies and AI startups

- Patient advocacy groups

- Academic labs and medical centers

- Health information exchanges

- Healthcare insurers

- Bioethicists and review boards

Implement and Incentivize

After publishing the final standards, the task force should promote their adoption and incentivize public-private partnerships. The HHS Office for Civil Rights must issue guidance under HIPAA so that compliance with these standards can serve as a recognized way to meet the relevant regulatory requirements. Public health data sources, such as CMS, could be early adopters, and NIH could mandate participation for its grantees as part of its recently launched data management and sharing requirements.

Conclusion

Developing standards for collaboration on health AI is essential for the next generation of healthcare technologies.

All the pieces are already in place. Under the HITECH Act, the Office of the National Coordinator for Health Information Technology gives grants to regional health information exchanges precisely to enable this exchange. This effort directly aligns with the administration’s priority of leveraging AI and data for the national good and with the White House’s recent statement on advancing healthcare AI. Collaborative protocols like these also move us toward the vision of an interoperable health system and toward better outcomes for all Americans.

This idea is part of our AI Legislation Policy Sprint. To see all of the policy ideas spanning innovation, education, healthcare, and trust, safety, and privacy, head to our sprint landing page.

Collaboration among several agencies is essential to the design and implementation of these standards. We envision NIST working closely with counterparts at HHS and other agencies. However, we think that NIST is the best agency to lead this coalition due to its rich technical expertise in emerging technologies.

NIST has been responsible for several landmark technical standards, such as the NIST Cloud Computing Reference Architecture. It has also done related work in its report on the deidentification of personal information and has worked extensively to support adoption of the HL7 data interoperability standard.

NIST has the necessary expertise to draft and develop data anonymization and exchange protocols and, in collaboration with HHS, ONCHIT, NIH, AHRQ, and industry stakeholders, will have the domain knowledge to create useful and practical standards.
