Use of Attribution and Forensic Science in Addressing Biological Weapon Threats: A Multi-Faceted Study

The threat posed by the manufacture, proliferation, and use of biological weapons (BW) is a high-priority concern for the U.S. Government. As reflected in U.S. Government policy statements and budget allocations, deterrence through attribution (“determining who is responsible and culpable”) is the primary policy tool for dealing with these threats. According to those policy statements, one of the foundational elements of an attribution determination is the use of forensic science techniques, namely microbial forensics. In this report, Christopher Bidwell, FAS Senior Fellow for Nonproliferation Law and Policy, and Kishan Bhatt, an FAS summer research intern and undergraduate student studying public policy and global health at Princeton University, look beyond the scientific aspect of forensics and examine how the legal, policy, law enforcement, medical response, business, and media communities interact in a bioweapons attribution environment. The report further examines why scientifically based conclusions must be credible to these communities in order to be relevant to decisions about how to handle threats.


Creating a Community for Global Security

Imagine thousands and potentially millions of scientists committed to making the world safer and more secure. This was the vision of the dedicated group of “atomic scientists” who founded the Federation of Atomic Scientists (the original FAS) in November 1945. As we approach the 70th anniversary, let’s reflect on the meaning of FAS and, most especially, look forward to the next 70 years. The next issue of the Public Interest Report will feature many articles assessing the accomplishments of the organization and its affiliated scientists and policy experts over the past 70 years. This PIR issue features many outstanding experts who care deeply about global security.

Before discussing the content of this PIR, I am pleased to introduce to our readers the new Managing Editor: Allison Feldman. Allison started working at FAS in early August as the Communications and Community Outreach Officer. With an undergraduate degree in environmental science and biology from Binghamton University, Allison has a passion for science. She also brings to FAS experience from previous jobs in which she worked with the scientific community and educated the public about science. I am happy to have her working at FAS because she will help FAS continue to revitalize itself as an organization dedicated to involving scientists, engineers, and other technically trained people in advising policymakers and informing the public about practical ways to make the world more secure against dangers such as the use of nuclear weapons and pandemic outbreaks. For example, Allison recently launched the Scientist Spotlight series, which each month features a prominent FAS-affiliated scientist or engineer on FAS.org.

Because of the transition period before Allison started in this position, she and I decided to make this PIR a larger issue, with about twice the number of articles typically found in the PIR. This combined summer-fall issue showcases several articles by seasoned practitioners in the fields of science, policy, and arms control. It also includes articles by younger engineers who are seeking to apply their technical training to stopping the further proliferation of nuclear weapons.

This PIR has thought-provoking pieces on nuclear nonproliferation, nuclear winter, preventing nuclear terrorism, the vital importance of intercultural understanding, and several other critical issues. Notably, Steven Starr, Director of the Clinical Laboratory Science Program at the University of Missouri Hospital and Clinics, writes on a core mission issue for FAS: the survival of humanity in the event of a nuclear war that could trigger a massive cooling of the earth. Also addressing a dreaded but preventable event, Edward Friedman, Emeritus Professor at the Stevens Institute of Technology and an FAS member for more than 50 years, has contributed an in-depth review article about the threat of nuclear terrorism and efforts that can reduce this risk.

FAS has a long tradition of featuring the work of prominent “hybrid” scientists: those who have distinguished careers in scientific research while also devoting a significant portion of their professional efforts to societal issues. In this PIR, we feature two such scientists: Professor Rob Goldston and Professor Frank Settle. Dr. Goldston has done path-breaking research on nuclear fusion for more than 30 years. More recently, he has helped develop innovative methods to confirm that nuclear warheads slated for dismantlement are genuine without revealing classified military information. The Q&A with him explores both of these topics as well as his other interests in science and society. Dr. Settle has straddled the worlds of chemistry, teaching, and nuclear policy for decades. He has received international recognition for his work in analytical chemistry and for creating ALSOS, an online annotated database on nuclear issues. In his article, he delves into the history of the nuclear age by examining General George Marshall’s many leadership roles in the development of the first atomic bombs and in the first initiatives in arms control.

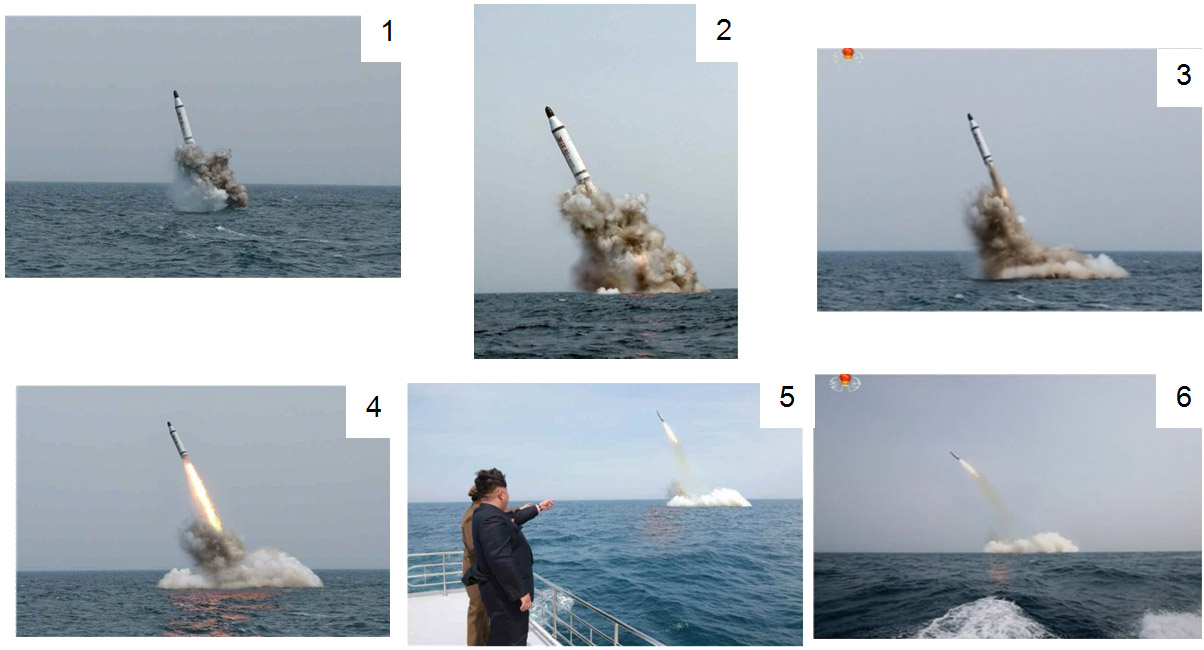

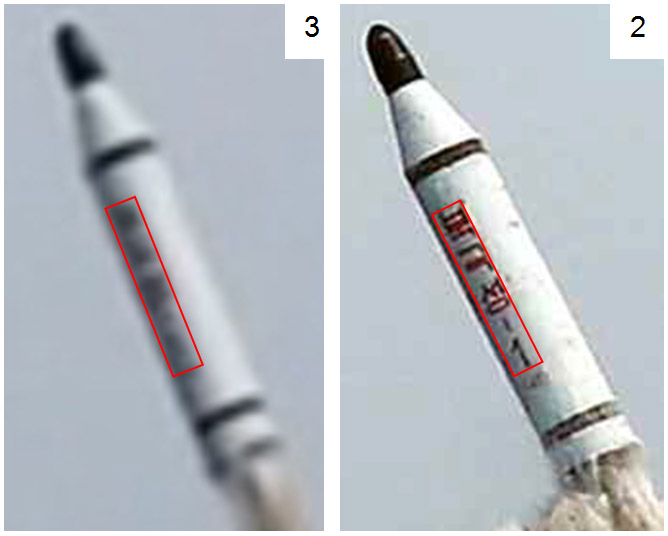

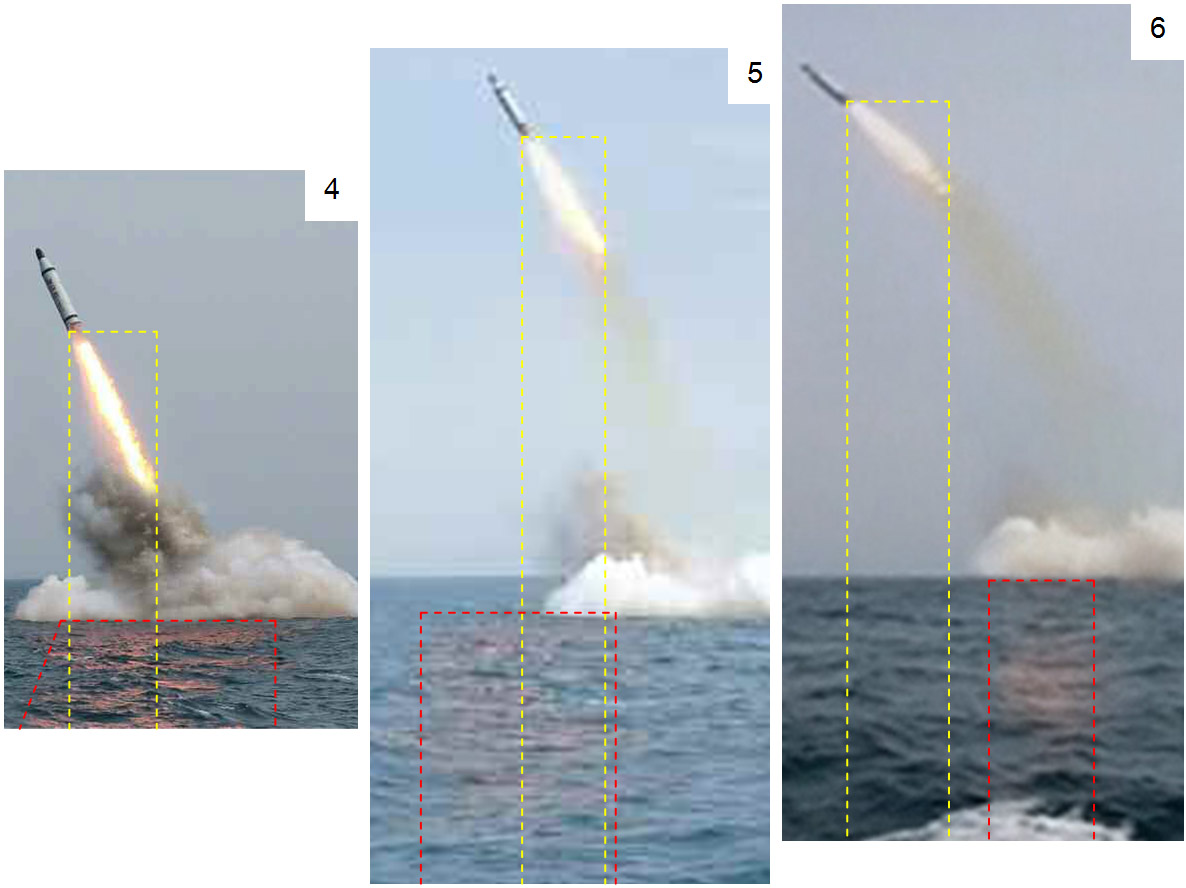

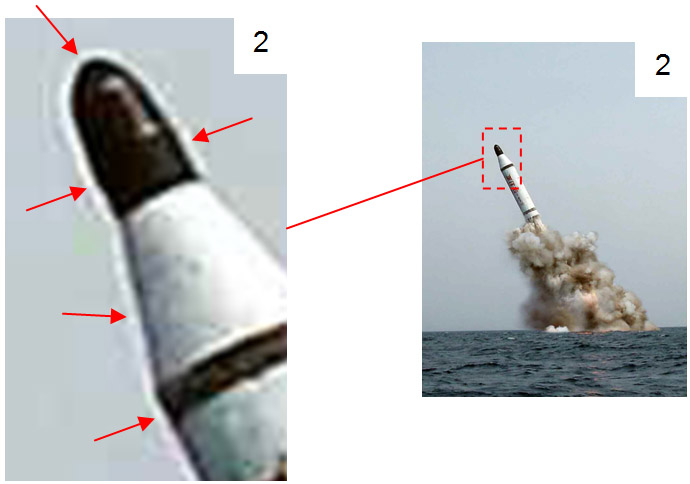

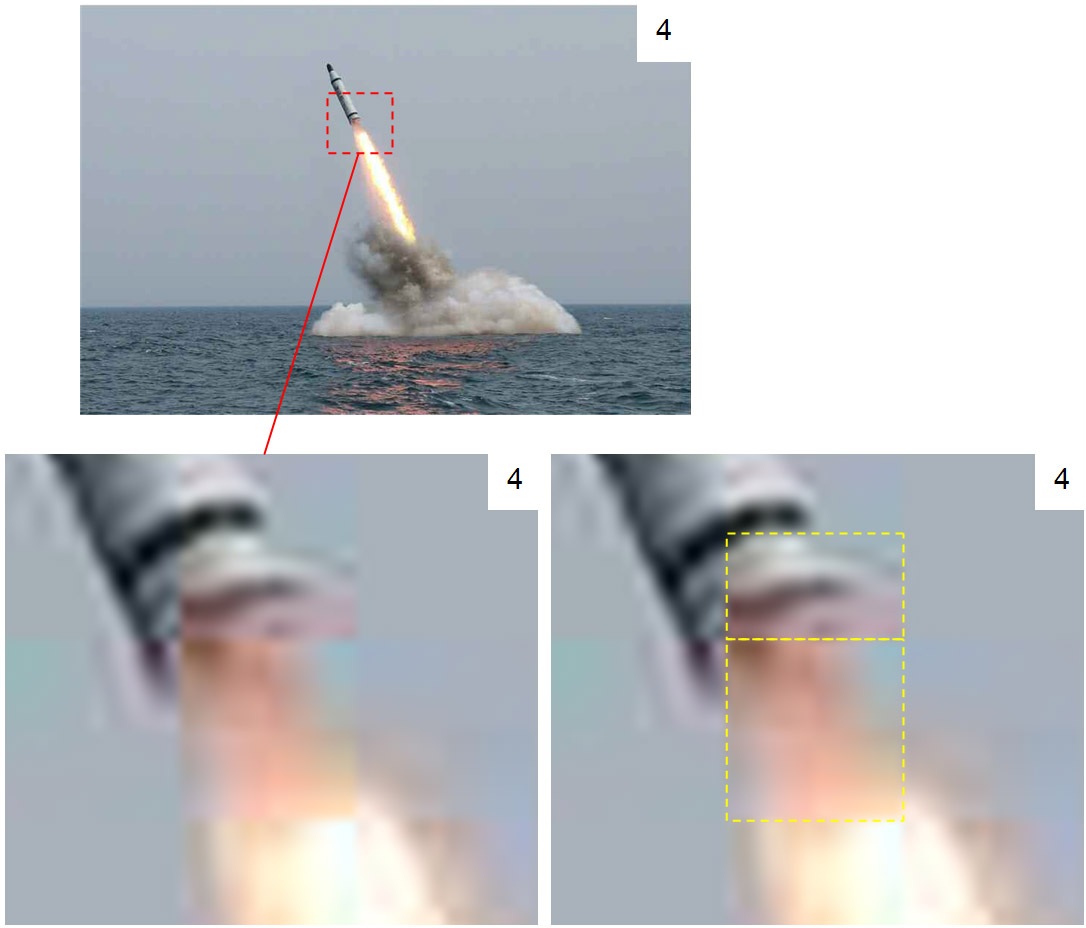

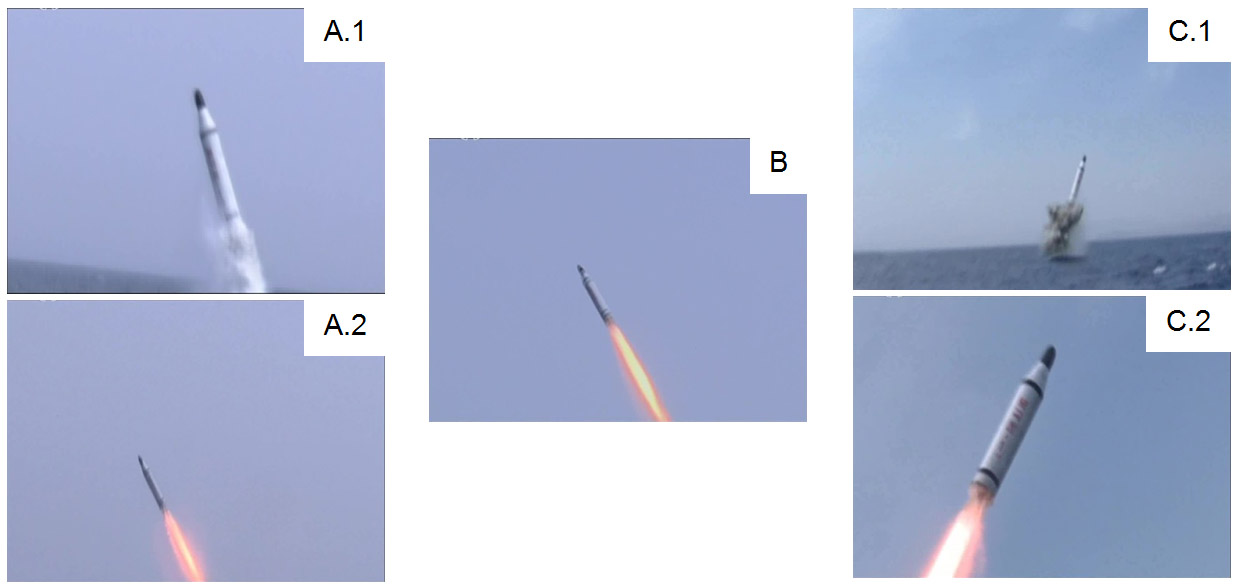

We are also pleased to present the work of early- to mid-career engineers and scientists. In this issue, mid-career stars Markus Schiller and James Kim, who have done excellent technical and policy work in Germany, South Korea, and the United States, reveal that North Korea’s alleged missile-launching submarine appears to be “an emperor with no clothes.” They apply their skills in photographic analysis, missile technology, and political assessment to expose North Korea’s latest purported “super” weapon. This is not to suggest that North Korea is not an international security threat. Still, we would be remiss not to offer a possible pathway for resolving this threat. To that end, Texas A&M University graduate students Manit Shah and José Trevino propose adapting the model of the agreement limiting Iran’s nuclear program to the problem of curtailing North Korea’s. Of course, North Korea is a greater challenge than Iran, given that North Korea has developed and tested nuclear weapons and Iran has not. But this underscores the need for creative thinking to prevent North Korea from further building up its nuclear arsenal.

As an organization that supports having all scientific disciplines contribute to improving global security, we are excited to feature an insightful article by Nasser bin Nasser, the head of the Middle East Scientific Institute for Security (MESIS), headquartered in Amman, Jordan. Nasser discusses the urgent need to understand the role of social science and cultural studies in effectively addressing international security. Among several issues, he highlights the misunderstandings that arose during the inspections in Iraq after the 1991 Gulf War. Unfortunately, cultural misreadings exacerbated an already tense situation between the Iraqis and the inspectors looking for weapons of mass destruction or the programs to make such weapons.

In other news from FAS headquarters, we are happy to welcome Dr. David Hafemeister, an emeritus professor from California Polytechnic State University (Cal Poly), who will work as a visiting scientist at FAS for the next year. An FAS member since the mid-1960s, Dave has had a distinguished career, during which he has served as a scientist in the executive and legislative branches of the U.S. government and has been an award-winning educator. During his visiting fellowship at FAS, he will study the science policy advisory process and seek opportunities to educate policymakers.

To further our outreach, FAS organized a salon dinner in Berkeley in June of this year. The participants were prominent scientists, engineers, and community leaders in energy, air pollution, climate change, and national security. They considered two thematic questions:
(1) If you had only three minutes with the president of the United States, what one important issue should he or she know about and act on?
(2) Which person at the dinner, whom you had not met before, would you want to collaborate with in your work?
This was a not-for-attribution event, and the discussion was very lively. Many participants offered practical advice on how FAS could help advance energy security. We also believe that FAS played a useful convening role in bringing together a diverse group of experts and in fostering interdisciplinary collaboration. We will seek to continue these conversations across the United States in the coming years.

We are thankful for the generous financial support from donors like you that enables FAS to carry out these outreach programs and to sustain its core projects in nuclear security and government secrecy. We are also very appreciative of the advice from several FAS-affiliated scientists about energy and security policy and about how FAS can play an effective role in this complex set of issues. Moreover, we welcome and encourage you to send us your ideas about how to get scientists and engineers more involved in societal issues.

The Iran Deal: A Pathway for North Korea?

Most nuclear experts and diplomats, as well as aspiring nuclear and policy students, have their eyes on two developments: North Korea’s slowly but steadily expanding nuclear weapons program, and the recent developments in the Joint Comprehensive Plan of Action (JCPOA) with Iran. North Korea has disregarded all warnings against carrying out nuclear tests and claims to have nuclear weapons capable of striking the United States. Other nations have regarded North Korea’s actions as hostile but have still shown a willingness to restart nuclear talks. Iran, under President Hassan Rouhani, was able to come to terms with the P5+1, a group of six world powers: the United States, Russia, China, Great Britain, France, and Germany. After almost a decade of diplomatic efforts, they successfully negotiated the JCPOA to limit Iran’s nuclear program to one with only peaceful purposes. The JCPOA is also significant because of its effect on the Iranian economy: following its implementation, billions of dollars will be unfrozen. The deal promotes objectives central to the Treaty on the Non-Proliferation of Nuclear Weapons (NPT) and promises to stimulate democracy, potentially bringing stability to the region. The JCPOA could also open up opportunities for nations such as North Korea to stabilize their regions in exchange for assistance in developing a peaceful nuclear program. This article reviews the key elements of the JCPOA, along with the issues that a deal with North Korea would need to address. We hope that the information provided will serve as a reference and stepping stone for the international nuclear community to resume discussions with North Korea.

The JCPOA

The so-called “Iran Deal,” an international agreement on Iran’s nuclear program, was signed in Vienna on July 14, 2015 by Iran, the P5+1 group of nations, and the European Union. The deal helps to promote the three objectives of the NPT: preventing the spread of nuclear weapons, promoting peaceful uses of nuclear energy, and furthering the goal of nuclear disarmament. Groundwork for the agreement was laid by the Joint Plan of Action, an interim agreement between Iran and the P5+1 signed in late 2013. The nuclear talks became most productive after Hassan Rouhani came to power in 2013 as President of the Islamic Republic of Iran. It took almost twenty months for the negotiating parties to reach the “Framework Agreement” of April 2015.

Beginning in 2006, Iran was subjected to heavy sanctions that contributed to the decline of its economy over the last decade. Yet by 2013, Iran had about 20,000 centrifuges that could be used to enrich uranium, up from only a few hundred in 2002. (A uranium enrichment facility can be used to make either of two products. Low-enriched uranium typically contains 3 to 5 percent of the fissile isotope uranium-235. Highly-enriched uranium contains 20 percent U-235 or more and could be used for nuclear weapons.) Iran had also developed several other nuclear facilities:
- a heavy water reactor in Arak that, once operational, could produce plutonium;
- a uranium conversion plant in Isfahan;
- a uranium enrichment plant in Natanz;
- a military site in Parchin; and
- an underground enrichment plant in Fordow.
Iran therefore has the latent capability to pursue either of two routes to a nuclear weapon: uranium enrichment or plutonium production. Plutonium is one of the most sought-after materials from which it is realistic to fabricate a weapon. The agreement addresses both routes, which gives it major significance for a global community that seeks to promote the peaceful use of nuclear energy.

Under the agreement, Iran has agreed to curtail its nuclear program in exchange for the lifting of sanctions, which would help to revive its economy. These restrictions require verification: Iran must cooperate with inquiries and meet monitoring requirements. In addition, Iran’s past nuclear activities would be investigated (various sites could be inspected and environmental samples could be taken). Following these assessments, continuous monitoring would be required to provide ongoing assurance that no clandestine activities are taking place. The agreement will also draw on the assistance of the Nuclear Suppliers Group to watch for any import or export of dual-use technology. In particular, the agreement calls for a so-called “white” procurement channel to be created to monitor Iran’s acquisition of technologies for its nuclear program.

Key elements of the Iran deal are:
a. The number of centrifuges will be reduced to 6,104 for the next 10 years, and only 5,060 of them will be allowed to enrich uranium;
b. Centrifuges will enrich uranium only to 3.67 percent (useful for fueling the commercial nuclear power plant at Bushehr) for 15 years;
c. No new uranium enrichment facilities will be built;
d. The stockpile of 20 percent enriched uranium will be either blended down or sold;
e. The low-enriched uranium stockpile will be limited to 300 kg for 15 years;
f. For 10 years, the breakout time will be extended to about one year from its current two to three months;
g. The Fordow facility, located about 200 feet underground, will stop enriching uranium for at least 15 years;
h. Existing facilities, such as the heavy water reactor in Arak, will be maintained but modified to ensure a breakout time of about one year;
i. The International Atomic Energy Agency (IAEA), the nuclear “watchdog” for the United Nations, will gain access to all of Iran’s facilities, including the military site in Parchin, to verify the absence of weapons-related activities; and
j. The sanctions will be lifted in phases as the listed requirements are met. However, if Iran is found to be violating any of its obligations, the sanctions will be reinstated immediately.

The requirements of the Iran deal are designed to limit Iran’s nuclear program to peaceful purposes and to increase the breakout time to about one year for the next 10 years. This would help other nations, since the deal will keep Iran from producing a nuclear weapon and bring stability and security to the region. It would also help Iran, which seeks to revive its economy and continue its peaceful nuclear program while maintaining its national sovereignty.

North Korea

North Korea’s nuclear ambitions, by contrast, date back to the early 1950s, soon after the atomic bombings of Hiroshima and Nagasaki. North Korea’s close rapport with the Soviet Union led to a nuclear cooperation agreement, signed in 1959. Under this agreement, the Soviet Union supplied North Korea’s first research reactor, the IRT-2000. The reactor site became the Yongbyon Nuclear Scientific Research Center, North Korea’s major nuclear site, which has several facilities supporting the North Korean nuclear program. In 1974, the IRT-2000 reactor was upgraded to a power level of 8 MWth (megawatt-thermal). 1 2 A year later, in 1975, North Korea installed the Isotope Production Laboratory (“Radiochemistry Laboratory”) to carry out small-scale reprocessing operations. During the 1970s, North Korea also undertook the following activities:
- indigenous construction of Yongbyon’s second research reactor;
- uranium mining operations at various locations near Sunchon and Pyongsan; and
- installation of ore-processing and fuel rod-fabrication plants in Yongbyon.
In 1985, North Korea began construction of its first electricity-producing reactor. It was based on declassified information about the United Kingdom’s Calder Hall 50 MWe (megawatt-electric) reactor design.

North Korea was a party to the NPT for about two decades, from its accession in 1985 until its withdrawal in 2003. (North Korea first announced its withdrawal in 1993. After direct dialogue with the United States began, it suspended that action with only one day left before the withdrawal would have taken effect.) Due in part to diplomacy between former U.S. President Jimmy Carter and North Korean leader Kim Il-sung, North Korea signed the Agreed Framework with the United States in 1994. However, the Agreed Framework dissolved in 2002 after President George W. Bush named the country as part of the “axis of evil.” Even after its withdrawal from the NPT, North Korea still showed readiness to freeze its nuclear program in exchange for various concessions. Nuclear talks between North Korea and world powers recurred, but the parties never found common ground. These talks included the Six-Party Talks, in which South Korea, Japan, China, Russia, the United States, and North Korea were involved. The Six-Party Talks were last held six years ago, in 2009, despite numerous efforts to resume them.

“On October 9, 2006, North Korea conducted an underground nuclear test, despite warnings by the country’s principal economic benefactors, China and South Korea, not to proceed,” states Marcus Noland, an economist at the Peterson Institute for International Economics. 3 According to Noland, “the pre-test conventional wisdom was that a North Korean nuclear test would result in sanctions with dramatic economic consequences.” Five days later, the UN Security Council imposed economic sanctions on North Korea by passing Resolution 1718. What is striking, according to Noland, is that “there is no statistical evidence that the nuclear test and subsequent sanctions had any impact on North Korean trade.” Noland’s analysis of the trade data suggests that “for better or worse, North Korea correctly calculated that the penalties for nuclear action, at least in this primary sphere, would be trivial to the point of being undetectable – potentially establishing a very unwelcome precedent with respect both to the country’s future behavior and to the behavior of potential emulators.” After its first nuclear test in 2006, North Korea carried out two more tests, in 2009 and 2013. “Sanctions won’t bring North Korea to its knees,” said Kim Keun-sik, a specialist on North Korea at Kyungnam University in Seoul. “The North knows this very well, from having lived with economic sanctions of one sort or another for the past 60 years.” 4 Does this mean the sanctions have not been firm enough? The answer may be debatable, but the continued nuclear tests demonstrate that sanctions have failed to halt the program. According to recent reports, activities at the Yongbyon reactor and Radiochemistry Laboratory are proceeding swiftly, and it is widely suspected that the country is gearing up for a fourth nuclear test. This suggests one of two possibilities. Either sanctions need to be more robust to pave the way for serious nuclear talks, or North Korea is simply not interested in nuclear talks.

The Across-the-Board Treaty

The Iran Deal has been a hot topic in nonproliferation for various and obvious reasons, but two key questions remain: (1) Will the deal restrain Iran from advancing its nuclear weapons technology? (2) Will the world see the deal through to successful implementation? The short answer is that the world powers will know almost immediately whether the restraints are taking effect, because important milestones fall within the next six months. Long-term implementation is more complicated. Nonetheless, according to various experts, the JCPOA is the best that world powers could achieve given the competing interests among the negotiating parties. Moreover, we argue that this deal can serve as a benchmark for other countries, such as India, Israel, North Korea, and Pakistan, that seek to expand or preserve their nuclear weapons capabilities. The challenge is how to craft deals with these nuclear-armed states that will not lead to further proliferation or buildup of their nuclear arsenals.

One of the main reasons North Korea has been able to act in such a hostile manner in the past is the failure of sanctions. UN Security Council Resolution 1718 imposed an embargo on exports of heavy weapons, dual-use items, and luxury goods to North Korea, as well as on exports of heavy weapons systems from North Korea. However, the administration of the sanctions was left to each individual sanctioning country. “Russia, for example, defined luxury goods so narrowly (e.g., fur coats costing more than $9,637 and watches costing nearly $2,000) that the effect of the sanctions was questionable,” says Noland. Sanctions can be the first step in bringing a country to the bargaining table; offering some concessions can then lead to meaningful and significant decisions. In North Korea’s case, however, the sanctions never appear to have cornered the regime into yielding. Most analysts, including Kim Keun-sik, suggest that the most effective measures are “those that target the lifestyle of North Korean leaders: financial sanctions aimed at ending all banking transactions related to North Korea’s weapons trade, and halting most grants and loans. This would effectively freeze many of the North’s overseas bank accounts, cutting off the funds that the North Korean leader has used to secure the cognac, Swiss watches, and other luxury items needed to buy the loyalty of his country’s elite.”

Another dimension of the sanctions issue is the support North Korea has received from China. China has been North Korea’s primary trading partner and has provided it with food and energy. China supported Resolution 1718 only after the sanctions were made less severe, because it fears a collapse of the regime and a subsequent influx of refugees across its border. This is the key reason why China has played an important role in the Six-Party Talks. However, following the third nuclear test in 2013, China’s patience with North Korea appeared to run out, as it imposed new sanctions and called for nuclear talks. A forum is now being planned by the China Institute of International Studies, a think tank backed by the Chinese government. 5 Academics and experts from the six parties (the United States, Russia, China, South Korea, Japan, and North Korea) are expected to attend, with the intent of restarting talks on North Korea’s nuclear weapons program. According to a recent report, the United States and China have also discussed ways to strengthen the sanctions.

A deal with North Korea could potentially be realized once sanctions are applied effectively, closing the loopholes that have previously allowed North Korea to circumvent them. Specifically, sanctions will be effective only if China is on board with the other major powers. China has been lenient with North Korea in the past because it fears the ripple effects that sanctions could trigger. China could take part in enforcement if other world powers offer financial and personnel support. That support would help China maintain law and order in its border regions in the event of the refugee influx it fears. Furthermore, China has its own interest in reforming North Korea. Thus, an assurance from other world powers that they will help reestablish governance in North Korea could strongly sway China.

Once firm sanctions are in force, the following factors will be of utmost importance to address in any deal. We list them here for the consideration of diplomats and nuclear experts:
a. The IRT-2000 reactor was upgraded to use weapons-usable, highly-enriched uranium fuel containing 80% U-235 by weight. Its original fuel was low-enriched uranium containing only 10% U-235 by weight;
b. The reactor modeled after the UK’s Calder Hall is a gas-graphite design of proliferation concern. It uses natural uranium fuel, so it can run on North Korea’s indigenous uranium, and it can produce weapons-grade plutonium;
c. In the 1970s, the Radiochemistry Laboratory was used to separate 300 mg of plutonium from irradiated IRT-2000 fuel. This was not disclosed to the IAEA until 1992 and requires significant attention;
d. North Korea had begun construction of a second 50 MWe reactor, but its origin is unclear and needs to be examined;
e. According to the IAEA, the activities at the Yongbyon site suggest that the country houses uranium enrichment centrifuges that could help produce a uranium-based bomb;
f. North Korea was constructing another light-water reactor in the vicinity of Yongbyon that may have become operational; and
g. Recent reports indicate a large amount of activity at the Yongbyon and Pyongsan sites, possibly indicating preparations for another nuclear test. 6 7

At present, the Iran deal has been finalized and the hard task of implementation is underway, yet North Korea’s activities demand serious attention as well. The issues listed above will be vital points of discussion for the world powers during their negotiations with North Korea to curtail its nuclear activities. However, sanctions must be effective beforehand for North Korea to bargain in good faith. Here, China plays an important role in the implementation of sanctions. So far, China has been reluctant to press North Korea because it fears destabilizing ripple effects. Other world powers could offer an assurance, indeed a firm commitment, that their personnel and funds are available to mitigate any ripple effects of harsh sanctions. Such a commitment would ensure China’s full backing for efforts against North Korea.

Summary

In this article, we have outlined Iran’s and North Korea’s nuclear programs, as well as the central elements of the Joint Comprehensive Plan of Action. We have also detailed North Korea’s nuclear capabilities and the obstacles that will need to be overcome. We have presented a possible pathway for negotiating with North Korea, with the caveat that it will be extremely challenging to implement effective controls on the North Korean nuclear program given the hermetic and hostile behavior of the North Korean government. In the near future, one can anticipate the implementation of the Iran deal, which will have a great impact on the global community and especially on the greater Middle East. In return for its compliance, Iran will have tens of billions of dollars unfrozen and ready to be spent. Meanwhile, the deal will promote NPT objectives and bring stability to the region. In many ways, the Iran deal could act as a stepping stone toward establishing similar arrangements with countries such as India, Israel, North Korea, and Pakistan. Because these nations are already nuclear-armed, however, creating agreements with them will be much tougher than it was for Iran. Such agreements have the potential to further bolster the pillars of the NPT regime: safeguards and verification, safety and security, and science and technology.

Manit Shah is a Ph.D. candidate in the Department of Nuclear Engineering at Texas A&M University and is part of the Nuclear Security Science and Policy Institute (NSSPI). His fields of interest are nuclear safeguards and security, and radiation detectors. He plans to graduate by May 2016 and is currently on the job market.

Jose Trevino is a Ph.D. student in the Department of Nuclear Engineering at Texas A&M University and is also part of the NSSPI. His interests are health physics and emergency response. He plans to graduate by May 2016 and hopes to join the Nuclear Regulatory Commission.

A Social Science Perspective on International Science Engagement

In the previous issue of the Public Interest Report (Spring 2015), Dr. Charles Ferguson’s President’s Message focused on the importance of empathy in science and security engagements. This was a most welcome surprise, as concepts such as empathy do not typically make it onto the pages of technical scientific publications. Yet the social and behavioral sciences play an increasingly critical part in issues as far-ranging as arms control negotiations, inspection and verification missions, and cooperative security projects.

The Middle East Scientific Institute for Security (MESIS), the organization that I have headed for five years now, has developed a particular niche in looking at the role of culture in these science and security issues. MESIS works to reduce chemical, biological, radiological and nuclear threats across the region by creating partnerships within the region, and between the region and the international community, with culture as a major component of this work.

As with empathy, culture is a concept often misunderstood and misapplied by policymakers. Admittedly, it is not easy to capture, describe, or measure, which may explain why it is not a popular topic. Nevertheless, there is growing evidence that cultural awareness can make a crucial difference to the success of negotiations, inspections, and cooperative endeavors. In 2006, the Central Intelligence Agency produced a report 1 on Iraq’s inspection regime in the mid-1990s. It examined how a lack of cultural awareness among those involved led them to misinterpret the behavior of Iraqi officials as denial and deception. The report described a wide range of incidents that were misread by those overseeing the inspection regime. These included:
(1) Iraqi scientists understood the limitations of their weapons programs but feared reporting these limitations to senior leaders, which created two conflicting accounts of how advanced the programs were; and
(2) Iraqi leaders were intent on maintaining an illusion of WMD possession to deter Iran, regardless of the implications for the inspection regime.
The report even cites cases in which the customary (read: obligatory) tea served to inspectors at sites under investigation was misinterpreted as a delaying tactic. These incidents demonstrate that local cultural factors, at both the societal and state levels, were major determinants of nonproliferation performance. Yet these factors were poorly understood by inspection officials who lacked sufficient cultural awareness.

It has become equally important to consider intercultural awareness in cooperative endeavors under non-adversarial circumstances. The sustainability of cooperative programmatic efforts, such as capacity building, cannot be achieved without solid cultural awareness. Though terms such as “local ownership” and “partnerships” have become commonplace in the world of scientific cooperative engagements, it is rare to see them successfully translated into policy. As a local organization, MESIS cannot compete with the large U.S. scientific organizations on a technical level. Yet by virtue of its knowledge of the regional context, it has numerous advantages over any organization from outside the region. Try getting a U.S. expert to discuss the role that cultural fatalism can play in improving chemical safety and security standards among Middle Eastern laboratory personnel, and this becomes all the more apparent. For example, a Jordanian expert looking to promote best practices among laboratory personnel once made an excellent argument by referring to a Hadith of the Prophet Mohammad (PBUH). The Hadith calls for being safe and reasonable before, and in conjunction with, placing one’s faith in God. Several studies have examined the relationship between cultural fatalism in Arab and Muslim societies and their perceptions of safety culture, especially in road safety. There is no ethnographic evidence that this relationship applies to laboratory safety, but anecdotal experience strongly suggests that it does.

Language is another critical area for cultural awareness, as exemplified by the success of a cooperative endeavor between the Chinese Scientists Group on Arms Control (CSGAC) of the Chinese People’s Association for Peace and Disarmament and the Committee on International Security and Arms Control (CISAC) of the U.S. National Academy of Sciences. These groups have been meeting for almost 20 years to discuss nuclear arms control, nuclear nonproliferation, nuclear energy, and regional security issues, with the goal of reducing the possibility of nuclear weapons use and reducing nuclear proliferation in the world at large. Throughout the exchanges, it was often evident that, beyond the never-simple translation of one language into the other, the two sides also interpreted terms differently. Accordingly, they jointly developed a glossary of about 1,000 terms so that future misunderstandings, for example among new members or speakers who are not bilingual, could be avoided. 2 In a similar vein, the World Institute for Nuclear Security (WINS) has partnered with MESIS to develop Arabic versions of its Best Practice Guides. This is certainly not due to any shortage of Arabic-language translators in Vienna; rather, WINS rightly distinguishes between translation and indigenization. Typically, a translator with limited understanding of nuclear security cannot indigenize a text the way a local expert can. In the case of the Guides, the use of local experts went a long way toward ensuring that Arabic-language speakers understood the concepts themselves (a case not very different from the U.S.-Chinese example).

The sustainability of the international community’s programmatic efforts in the Middle East and elsewhere is strongly tied to this notion of cultural context. MESIS manages the Radiation Measurement Cross Calibration (RMCC) network, a project that seeks to raise radiation measurement standards across the Arab world. It has always been difficult to secure funding for this network from budgets dedicated to nonproliferation and nuclear security, because the project’s relevance and utility are not readily apparent to decision makers. More creative thinking is needed here. A project like RMCC does in fact build the infrastructure and capacity needed for areas such as nuclear forensics and Additional Protocol compliance 3, but it also addresses more local concerns such as environmental monitoring and improved laboratory management. Win-win endeavors of this sort require a strong degree of cultural awareness. Had a network of nuclear forensics laboratories been established instead, funding would probably have been easier to secure, but sustainability would certainly have been threatened, because nuclear forensics is not currently a priority area for the region.

At a time of tremendous skepticism about international science engagement, increased cultural awareness may lead to more meaningful and, in turn, more sustainable outcomes. One would expect this to be readily apparent to members of a scientific community. There may be some merit in taking a page from the book of another community: commercial product developers. They are keenly aware of cultural paradigms when developing products for different markets, and this awareness often yields better returns.

Nasser Bin Nasser is the Managing Director of the Middle East Scientific Institute for Security (MESIS) based in Amman, Jordan. He is also the Head of the Amman Regional Secretariat under the European Union’s “Centres of Excellence” initiative on CBRN issues.

Review of Benjamin E. Schwartz’s Right of Boom: The Aftermath of Nuclear Terrorism (Overlook Press, 2015)

Roadside bombs were devastating to American troops in both Iraq and Afghanistan. The press has labeled the moment before such an explosion “left of boom,” and the period following it “right of boom.” Defense Department analyst Benjamin E. Schwartz has chosen to title his book about nuclear terrorism Right of Boom. While the title captures the mystery of the weapon’s origin, it does little to convey the enormity or complexity of the issue being addressed.

This obscure reference adds to a list of euphemisms that shield readers from the shock of confronting nuclear terrorism head on. Homeland Security refers to a nuclear bomb fabricated by a terrorist as an IND (Improvised Nuclear Device). President Obama has named a series of world summits on nuclear terrorism “Nuclear Security Summits.” International affairs analysts and commentators refer to potential perpetrators of nuclear terrorism as non-state actors. The “T-word” is too often hidden in obfuscation and awkward verbal constructs. It is difficult to come to grips with what is perhaps the world’s most serious threat when a verbal veil shields us from its apocalyptic implications.

For more than forty years, serious commentators have drawn public attention to the possibility that terrorists, a.k.a. non-state actors, might detonate a nuclear weapon in a major American city, but few have grappled with the question of what action America’s President should take in response to such an attack by a perpetrator whose identity may not be known. Schwartz shares his thoughts on the forces that might drive the President to take dramatic action, knowing that it rests on a web of conjectures and guesses rather than on hard intelligence and evidence. He also explores possible unilateral and multilateral actions that might prevent further attacks, as well as new international initiatives for the control of atomic materials. By introducing these hypothetical situations of extreme complexity, Schwartz has made a valuable contribution to civil discourse. He lifts the rock under which security specialists and government agencies have addressed these issues, out of view of the general public. However, he provides only a peek under the rock, rather than a robust examination of the issues.

Schwartz does grapple with the implications of an existential threat to the nation coming from a non-state entity. The norms of international relations go out the window when it is impossible for a government to protect itself through government-to-government relations. Even when dealing with the drug cartels of Colombia and of Mexico, the United States coordinates its efforts through the governments of those countries; but given the extreme threat of a nuclear weapon, if rogue gangs of nuclear terrorists were operating in Mexico, it is likely that the U.S. government would not hesitate to take unilateral action across international borders, much like the drone attacks in the frontier areas of Pakistan or the military operation that captured and killed Osama bin Laden. Furthermore, alliances needed to confront nuclear terrorism might take the form of collaboration with militias that have only a loose affiliation with nation states. Such new forms of international security liaison are emerging as the United States increasingly relies on the efforts of Kurdish and Shiite militias in combat against ISIS.

Schwartz is strongest when he explores logical, non-traditional opportunities for action and weakest when he seeks to draw wisdom from nineteenth-century accounts of dealing with the likes of Comanche warriors of the Great Plains and Pashtun tribes of the Khyber Pass. His attempts to draw guidance for dealing with unprecedented terrorist groups from historical encounters with guerrilla warfare lack credibility.

Right of Boom makes a particularly valuable contribution to discourse about the threat of nuclear terrorism by reviewing a key section of the 2004 book1 by Graham Allison, Nuclear Terrorism: The Ultimate Preventable Catastrophe. Dr. Allison was the founding Dean of the John F. Kennedy School of Government and a former assistant secretary of defense under President Clinton. Allison ably summarized the dangers and potential policy initiatives in 2004, when he wrote:

The centerpiece of a strategy to prevent nuclear terrorism must be to deny terrorists access to nuclear weapons or materials. To do this we must shape a new international security order according to a doctrine of “Three No’s”:

- No Loose Nukes;

- No New Nascent Nukes; and

- No New Nuclear Weapons States.

The first “No” refers to insecure weapons or materials that could be detonated in a weapon. The second refers to capacity to develop new nuclear weapons material such as enriched uranium or purified plutonium. The third goes beyond the development of fissile materials to the design and development of operational new weapons. Schwartz details how each of these three barriers has been breached within the past decade. This road to instability has been paved by North Korea, Pakistan, and Iran. Schwartz makes it resoundingly clear that the mechanisms for preventing the catastrophe described by Allison need to be reviewed and recast.

Schwartz frames his discussion in the hypothetical context of a Hiroshima-type bomb, known as Little Boy, being detonated on the ground by terrorists in Washington, D.C., with the executive branch of government out of harm’s way. The President is thus in a position to direct the needed response and restructuring. Schwartz argues that the President must take military action, even without knowing the origin of the nuclear attack. While not completely convincing, his exposition is engaging.

Schwartz speculates about other outcomes following a nuclear terrorist attack that echo post-World War II ideas about international control, including the Acheson-Lilienthal Plan of 1946. While thought-provoking, those ideas, which did not gain traction then, are still not compelling today.

In order for readers to take the threat of nuclear terrorism seriously, they need to understand how such a cataclysmic event could occur in the first place. For the vast majority of readers, nuclear realities are remote and unfamiliar. Most people implicitly assume that the many layers of security that have evolved since 9/11 adequately protect society from the development of rogue nuclear weapons. Even without full clarity on the issue, most people probably understand, at least vaguely, that the atomic bomb that destroyed Hiroshima required an enormous enterprise, the Manhattan Project, which was perhaps the greatest scientific, military, and industrial undertaking in human history. How, then, could an equivalent of that Hiroshima bomb arrive in a truck at the corner of 18th and K Streets in Washington, DC, delivered by a team of perpetrators perhaps no larger than the nineteen jihadists who attacked the World Trade Center and the Pentagon on 9/11?

Schwartz does a poor job of explaining to the layperson why nuclear terrorism is plausible. He provides some history of the development of nuclear weapons, the subsequent declassification of the designs and knowledge needed for weapons production, and the 1966 case study of how three young scientists without nuclear backgrounds successfully designed a Nagasaki-type weapon at Lawrence Livermore National Laboratory as an exercise to demonstrate national vulnerability.

His only reference to the Hiroshima bomb design, which would be the likely objective of a terrorist plot, is inserted as a passing phrase in the commentary about the Lawrence Livermore exercise. He states that the three young scientists “… quickly rejected designing a gun-type bomb like Little Boy, which would have used a sawed-off howitzer to crash two pieces of fissile material together, judging it to be too easy and unworthy of their time.” (P.42-43)

It is precisely the ease of both designing and building a Little Boy model that makes nuclear terrorism so feasible! The trio of young scientists succeeded in designing a Nagasaki bomb, known as Fat Man, but did not attempt to actually build one. Schwartz neglects to mention that the Little Boy design uses enriched uranium for its explosive power (which is only mildly radioactive and easy to fabricate into a weapon) while Fat Man uses plutonium (that is quite radioactive and difficult to fabricate into a weapon).

Schwartz identifies uranium 235 as the form of uranium that undergoes fission, and he notes that uranium 238, which has three more neutrons in its nucleus, is a much more common form of the element. In mined ore, there are roughly 140 atoms of uranium 238 for every atom of uranium 235, so uranium 235 makes up only about 0.7 percent of the total. Schwartz does not clearly state that bomb fabrication requires enriching the uranium, raising the share of uranium 235 from under 1% to about 90%. Uranium in which uranium 235 makes up 20% or more is classed as “Highly Enriched Uranium” (HEU); weapons-grade material is about 90% uranium 235. One way of producing this bomb-grade material is with centrifuges, and the quality and quantity of Iran’s centrifuges have been a key issue in negotiations with that country.

Graham Allison, in Nuclear Terrorism, provides a clear and concise explanation of the Little Boy design:

If enough Highly Enriched Uranium (HEU) is at hand (approximately 140 pounds), a gun-type design is simple to plan, build, and detonate. In its basic form, a “bullet” (about 56 pounds) of HEU is fired down a gun barrel into a hollowed HEU “target” (about 85 pounds) fastened to the other end of the barrel. Fused together, the two pieces of HEU form a supercritical mass and detonate. The gun in the Hiroshima bomb was a 76.2-millimeter antiaircraft barrel, 6.5 inches wide, 6 feet long, and weighing about 1,000 pounds. A smokeless powder called cordite, normally found in conventional artillery pieces, was used to propel the 56-pound HEU bullet into the 85-pound HEU target. The main attractions of the gun-type weapon are simplicity and reliability. Manhattan Project scientists were so confident about this design that they persuaded military authorities to drop the bomb, untested, on Hiroshima. South Africa also used this model in building its covert nuclear arsenal (in 1977) without even conducting a test. If terrorists develop an elementary nuclear weapon of their own, they will almost certainly use this design. (P85-86)

The general public also needs to understand that U235 is only mildly radioactive. It can be handled safely and is hard to detect. In 2002, ABC News smuggled bars of uranium into ports on both the West Coast and East Coast without being discovered. Furthermore, the amount needed for a weapon can be carried in a container no larger than a soccer ball. Uranium is one of the most dense elements (about 70% more dense than lead). Therefore, 140 pounds can easily be hidden in an automobile that is entering the country or in a shipment of plumbing supplies. While an improvised terrorist bomb could probably be smuggled into the country disguised as an electric generator or embedded in a shipment of granite or other building material stones, its weight of more than a thousand pounds presents challenges. It would be much easier to bring in said soccer ball volume, distributed into smaller packages, and then assemble the weapon in a nondescript machine shop. ABC News transported 15 pounds of depleted uranium in a 12-ounce soda can. Depleted uranium, by definition, contains less U-235 proportionally than natural uranium but has a similar radiation signature.

The largest hurdle for nuclear terrorists is obtaining enriched uranium. Graham Allison does an excellent job of detailing opportunities for terrorists to obtain highly enriched uranium. His book identifies potential sources of highly enriched uranium in the many research reactors around the world that were once promoted by President Eisenhower’s Atoms for Peace Program. Other sources include the inadequately guarded storage sites found throughout the former Soviet Union. These sites attracted agents from rogue states and terrorist organizations in the 1990s. How much material from unsecured facilities entered the black market at that time is unknown; however, many black-market transactions have been discovered, and they continue to pose challenges for international inspectors today.

There is a colossal amount of HEU present in various forms around the world. At the end of 2012, an authoritative study2 estimated that there was as much as 1500 tons (3 million pounds). However, great uncertainty exists about the quantity located in Russia. That ambiguity translates directly into possible vulnerability for theft or diversion of HEU. The estimated total supply of HEU could provide fuel for twenty thousand Hiroshima-type gun nuclear weapons. If only a tenth of one percent of this material went missing, it could be used to fabricate 20 improvised nuclear weapons.

Allison describes a particularly egregious case from Kazakhstan, where 1,278 pounds of highly enriched uranium were discovered in an abandoned warehouse secured only with a single padlock. That material had been collected for shipment to Russia as fuel for nuclear submarines. During the break-up of the Soviet Union, its existence was overlooked (or so it would appear). It is possible that some material was removed and sold to agents from Iraq, Iran, or elsewhere, but there is no public knowledge of that happening. The United States arranged to purchase the material for use as fuel in power reactors. In 1994, its removal was accomplished in a secret operation known as Project Sapphire, in which teams of U.S. experts packed and transported the material to the Y-12 facility in Oak Ridge, Tennessee. In 2014, the twentieth anniversary of Project Sapphire was celebrated, but the task of securing highly enriched uranium in the former Soviet Union has yet to be completed.

Allison further writes that Pakistan (in 2004) was probably producing enough HEU to fuel five to ten new bombs each year. Allison was concerned that some of that material might be diverted, and in 2010 that possibility was revealed to be a major U.S. concern. On November 30 of that year, The Guardian reported that cables released by WikiLeaks showed that in early 2010 the American Ambassador in Islamabad, Anne Patterson, had cabled Washington: “Our major concern is not having an Islamic militant steal an entire weapon but rather the chance someone working in government of Pakistan facilities could gradually smuggle enough material out to eventually make a weapon.”

Theft or diversion of HEU from production facilities is not unprecedented. Allison describes a theft from a Russian enrichment plant in 1992 that was discovered during an unrelated police action. A famous case, discussed in an excellent article by Eric Schlosser in the March 9, 2014 issue of The New Yorker, involved the suspected diversion to Israel, in the 1960s, of hundreds of pounds of HEU from a commercial enrichment facility in Pennsylvania.

Given that large amounts of material that could fuel a Hiroshima-equivalent gun-type weapon are within reach of potential terrorists, and that successful acquisition of the material is quite plausible, the question remains as to who might take such an action. Schwartz refers to al-Qaeda and to terrorists in general, but does not try to be specific about potential nuclear perpetrators.

Allison devotes a chapter of his book to the identification of potential nuclear terrorists, some of whom have actively explored acquisition of fissile material. Included in his overview are al-Qaeda, Chechen separatists, and Aum Shinrikyo. The Aum group, after failing in its attempts to purchase nuclear warheads, initiated a deadly sarin nerve gas attack in the Tokyo subway on March 20, 1995.

Another excellent, comprehensive book3 dealing with nuclear terrorism is The Four Faces of Nuclear Terrorism (2005), by Charles D. Ferguson and William C. Potter with contributing authors Amy Sands, Leonard Spector, and Fred Wehling. Ferguson and Potter explore a number of these issues in great detail. Their discussion of potential perpetrators includes a prescient section on apocalyptic groups. They refer to “…certain Jewish or Islamic extremists or factions of the Christian identity movement, whose faith entails a deep belief in the need to cleanse and purify the world via violent upheaval to eliminate non believers.” Given the success of ISIS in gaining control over large cities and vast financial resources, its potential for producing a gun-type Hiroshima bomb exceeds any prior threat from a terrorist organization. While attacks on Europe or the United States by ISIS do not appear to be imminent, the use of nuclear weapons to attack Shiites in Iran or Jews in Israel could easily become a priority on its agenda.

In recent years, scant attention has been paid to the possibility that apocalyptic groups or other potential terrorists based in the United States might engage in nuclear terrorism. The most horrific bombing by an American was Timothy McVeigh’s detonation of explosives at the Murrah Federal Building in Oklahoma City on April 19, 1995, which killed 168 people. McVeigh was driven not by religious belief but by a passion to avenge actions by the federal government at Waco, Texas, and Ruby Ridge, Idaho. These confrontations between armed citizens and federal agencies fueled the militia movement to which McVeigh adhered.

Well before McVeigh, nuclear weapons designer Ted Taylor had become obsessed with the possibility of nuclear terrorism being initiated by an American terrorist. Taylor was the quintessential obsessed inventor-scientist. Those around him tolerated his idiosyncrasies because of his exceptional brilliance. After receiving an undergraduate degree in physics from Caltech, he studied for a PhD at the University of California, Berkeley, where J. Robert Oppenheimer had established the first American theoretical physics research group of international prominence. Taylor was unable to complete his PhD there because he refused to take required coursework in fields of physics that did not interest him. However, Oppenheimer recognized his genius for creative thought and facilitated his appointment to the post-war theoretical physics staff at Los Alamos in 1948, where he became the leading designer of nuclear weapons. His accomplishments included the creation of the largest fission bomb ever assembled and tested, the 500-kiloton Super Oralloy Bomb, which was thirty-five times more powerful than the Hiroshima bomb.

The area in which Taylor confounded the experts was the design of small nuclear weapons. His ability to model very small nuclear weapons led to the production in 1961, for use by the U.S. Army, of a tripod-mounted recoilless rifle known as the Davy Crockett, which fired a warhead with an explosive yield of only 250 tons of TNT (one sixtieth of the Hiroshima bomb). This weapon, which could be deployed and fired by two soldiers on foot, was produced for use against Soviet armored units, but saw only limited distribution.

A leading 20th Century theoretical physicist, Freeman Dyson, is quoted as saying, “Ted (Taylor) taught me everything I know about bombs. He was the man who had made bombs small and cheap.”

Taylor’s deep insight into the ease with which nuclear weapons could be assembled led him to resign from Los Alamos in 1956 and focus his energy on alerting society to the threat of nuclear terrorism. He became acutely aware of how the U.S. Government had contracted out the development, handling, and storage of highly enriched uranium to commercial suppliers. He observed firsthand that security and the procedures for handling and shipping material at these facilities were extremely lax. After trying to promote safeguards from within the nuclear establishment, he decided in the late 1960s that he should alert the public to these dangers and promote public policy initiatives. In 1972, he obtained a grant from the Ford Foundation for a thorough study of existing materials that might be diverted to fabricate a clandestine nuclear bomb. With Mason Willrich, a social scientist, he published a book in 1974 entitled Nuclear Theft: Risks and Safeguards (Ballinger). During this same period, he travelled throughout the United States speaking about the issue. Taylor’s efforts attracted the attention of the writer John McPhee, who asked to accompany him. In 1973, McPhee wrote a book4 about Taylor and his efforts to minimize the risks of nuclear terrorism, entitled The Curve of Binding Energy, from which Schwartz quotes a particularly startling prediction:

“‘I think we have to live with the expectation,’ remarked a Los Alamos atomic engineer in 1973, ‘that once every four or five years a nuclear explosion will take place and kill a lot of people.’ This statement is cited in John McPhee’s The Curve of Binding Energy, which detailed concerns about the proliferation of nuclear weapons to non-state actors over forty years ago.”

Schwartz then continues with: “While exaggeration may mislead the credulous and offend the perceptive, neither the absence of a precedent for nuclear terrorism nor the intelligence failure regarding Saddam Hussein’s WMD program changes the growing threat.”

While Schwartz gives lip service to the “growing threat” of nuclear terrorism, his book does little to assuage the credulous or to convince the perceptive of the seriousness of such a threat. That he has engaged in this serious analysis of government policy for the aftermath of a nuclear terrorist attack is testimony that he does not think the issue is merely Chicken Little’s exaggerated concern. Certainly, his work as a Defense Department analyst lends gravitas to his position on this subject.

It is worth reflecting on how much traction the effort to call attention to nuclear terrorism has gained over the past 40-plus years. The most direct example of serious official concern about Schwartz’s scenario of a terrorist nuclear weapon detonated in Washington, DC, is a 120-page report5 from the Federal Emergency Management Agency (FEMA) and Homeland Security entitled Key Response Planning Factors for the Aftermath of Nuclear Terrorism – the National Capital Region. The report summarizes studies conducted in 2011 by Lawrence Livermore National Laboratory, Sandia National Laboratories, and Applied Research Associates on the civil defense response to the detonation of a terrorist nuclear device. Unlike the bombs at Hiroshima and Nagasaki, which were detonated in the air at about 1,900 feet, the improvised nuclear weapon hypothesized in this study would explode at ground level. A ground-level explosion would blast out a crater and carry large amounts of deadly radioactive debris aloft, which would then be dispersed over an area perhaps 20 miles long and a mile or two wide. Hiroshima and Nagasaki did not experience this characteristic “fallout” of radioactive debris.

The model considers a 10-kiloton explosion (Hiroshima was about 15 kilotons) at ground level at the intersection of K Street NW and 16th Street NW, using the actual weather observed at that location on February 14, 2009. This in-depth analysis summarizes the effects of the explosion on the city’s infrastructure as well as on its population, including blast, fire, and radiation damage. There are detailed recommendations on how, where, and when to shelter from radiation, and assessments of evacuation scenarios. Public health issues are evaluated, including the anticipated post-explosion capacity of hospitals and health care workers to meet the needs of the population. Such a blast would produce nearly total death and destruction within a radius of about one mile around ground zero, and heavy destruction out to a radius of about three miles. Fallout with serious radiation consequences could affect regions as far as twenty miles from ground zero.

Homeland Security is conducting studies of major metropolitan areas in the United States and shares these analyses and recommendations with police, firefighters, and other first responders, including emergency medical teams. In this literature, the word “terrorist” is rarely used, and the information and advice provided to the public are minimal. The weapon is almost always referred to as an “Improvised Nuclear Device,” and its size seems to be standardized at 10 kilotons.

It appears that government agencies are concerned enough about nuclear terrorism to study its impact on physical environments and on human populations. However, Right of Boom is unique in addressing the political impact and possible retaliatory action. But Schwartz addresses only the simplest of potential scenarios. What if an explosion in Washington, DC, were accompanied by a blackmail threat that other bombs already in place would be detonated unless the United States took certain actions?

Another possibility would be that bombs were detonated simultaneously in several cities, possibly Washington, New York, and Los Angeles. The challenge of trying to anticipate such a catastrophe is mind-boggling, yet if one bomb were possible, three would be almost as feasible. It may be that such studies are taking place out of public view. Even the Homeland Security studies, which are readily available on the Internet, are not proactively disseminated to the public.

During the height of the Cold War, the threat of nuclear war led to Civil Defense exercises being held throughout the country. While these might not have been entirely realistic, they did prepare civilian populations for the possibility of nuclear conflict. Yet today, while nuclear terrorism may be just as likely, little is shared with the public – regarding either policy considerations or physical realities.

There is at least one piece of important advice that could potentially save many thousands of lives that is known to Homeland Security and FEMA but is not distributed to the public: in the event of a terrorist nuclear detonation, the affected population should stay in whatever building they are in, positioned away from exterior windows, walls, and ceilings. Homeland Security refers to this action as “Sheltering in Place.” Almost any building structure would shield against the type of radiation most likely to be present, and this radiation would dissipate significantly after a few days. By staying indoors for several days, people would greatly increase their chances of survival. A practical consequence of this approach is that, during the first days after an attack, parents and children should not seek to be reunited if the children are in school and the parents are elsewhere. A strong concern about this issue was expressed in the 2004 report on terrorism planning after a “dirty bomb” attack issued by the New York Academy of Medicine6.
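The point that fallout radiation “would dissipate significantly after a few days” can be made concrete with a rule of thumb long used in civil defense literature: the dose rate from fresh fallout falls off roughly as t^-1.2, where t is the time since detonation in hours (the so-called “7-10 rule”: every sevenfold increase in time cuts the dose rate about tenfold). The sketch below assumes that approximation; actual decay depends on the weapon and the mix of fission products, and the function name is hypothetical, chosen for illustration only.

```python
def relative_dose_rate(hours_after_detonation: float) -> float:
    """Fallout dose rate relative to the rate 1 hour after detonation.

    Assumes the t**-1.2 rule of thumb, which is only a rough approximation
    and applies from roughly half an hour to a few months after detonation.
    """
    if hours_after_detonation <= 0:
        raise ValueError("time since detonation must be positive")
    return hours_after_detonation ** -1.2


if __name__ == "__main__":
    # Compare the 1-hour dose rate with later times: 7 h, 1 day, about 2 days, 4 days.
    for hours in (1, 7, 24, 49, 96):
        fraction = relative_dose_rate(hours)
        print(f"{hours:>3} h after detonation: {fraction:.3f} of the 1-hour rate")
```

Under this approximation, the dose rate seven hours after detonation is roughly a tenth of the one-hour rate, and after about two days it is roughly a hundredth, which is why several days of sheltering indoors matter so much.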

The lack of public dissemination of practical information such as this is partly attributable to fear of alarming the general population, as well as to deep skepticism, among many, that such an event could even happen. Government policy keeps nuclear terrorism an invisible topic, lying outside conscious consideration.

While Homeland Security and FEMA are actively preparing for an act of nuclear terrorism, the scope of their planning is limited to responding to the physical, medical, and radiological impact of an IND. Right of Boom comes close to exploring the larger social and political consequences but ultimately fails to do so. Questions that remain unexplored, here and elsewhere, concern the impact on the nation’s economic, transportation, communications, and other fundamental systems that underpin the functioning of society. When one considers how 9/11, with the deaths of approximately 3,000 civilians, transformed society, it is difficult to imagine how the deaths of 30,000 or 300,000 civilians might alter the basic framework of civil order. It is difficult even to frame the questions. The enormity of this threat may be a significant factor in keeping it out of public discourse. Examples of the issue being ducked are all too frequent.

Recently, both The Economist and Foreign Policy magazines featured cover stories focused on nuclear weapons (March 7th-13th, 2015 and March-April 2015, respectively). The Economist sums things up with, “But for now the best that can be achieved is to search for ways to restore effective deterrence, bear down on proliferation, and get back to the dogged grind of arms-control negotiations between the main nuclear powers.” Foreign Policy deals more with the active nuclear weapons refurbishing programs that are taking place in the United States, Russia, and China, and with how these activities might prompt countries that now adhere to the Non-Proliferation Treaty to withdraw. Neither of these overview reports mentions the threat of nuclear terrorism by non-state actors.

Even a long-time analyst of nuclear weapons issues, Professor Paul Bracken of Yale, eschews reference to nuclear terrorism in his otherwise insightful book7, The Second Nuclear Age: Strategy, Danger, and the New Power Politics (Macmillan, 2012). He bemoans the failure of U.S. strategists to move their thinking beyond a Cold War framework and grapple with a much more complex, multipolar world. Yet he limits his consideration of terrorists to their role as agents of nuclear powers, rather than as independent non-state operatives.

It is striking that the people most worried about an improvised nuclear device exploding in an American city are the noteworthy individuals who know the most about the subject: Theodore Taylor, the most capable of the post-WWII nuclear weapons designers; Graham Allison, a former assistant secretary of defense; Charles Ferguson, the current president of the Federation of American Scientists; and Benjamin Schwartz, an analyst for the U.S. Department of Defense. Following the knowledge trail to the deepest level of national intelligence, we find that the President of the United States is perhaps the most concerned individual of all. Michael Crowley wrote8 in Time Magazine on March 26, 2014, in an article titled “Yes, Obama Really Is Worried About a Manhattan Nuke,” quoting the president as saying, “I continue to be much more concerned, when it comes to our security, with the prospect of a nuclear weapon going off in Manhattan.”

One might wonder whether this statement by Obama is an isolated comment or a deeply ingrained belief that underlies his thinking and strategic approach to governance. His record of policy statements and executive actions over the past six years shows that it is a core belief.

Obama most likely became educated about nuclear issues during his time in the Senate. He rubbed shoulders with Senator Sam Nunn, who, prior to the emergence of Barack Obama, had probably been the most influential publicly elected official concerned with nuclear issues in general and nuclear terrorism in particular. Less than three months after his first inauguration in 2009, Obama delivered a historic speech9 on nuclear weapons in Hradčany Square in Prague, the capital of the Czech Republic.

The speech comprehensively addressed the stockpiles of the major nuclear nations, the need to prevent proliferation to additional states, and the need to curb developments in Iran and North Korea. Notably, he also dealt at length with nuclear terrorism. He stated, “…we must ensure that terrorists never acquire a nuclear weapon. This is the most immediate and extreme threat to global security. One terrorist with one nuclear weapon could unleash massive destruction. Al Qaeda has said it seeks a bomb and that it would have no problem with using it. And we know that there is unsecured nuclear material across the globe. To protect our people we must act with a sense of purpose without delay.”

President Obama renders the threat explicit: “One nuclear weapon exploded in one city – be it New York or Moscow, Islamabad or Mumbai, Tokyo or Tel Aviv, Paris or Prague – could kill hundreds of thousands of people. And no matter where it happens, there is no end to what the consequences might be — for our global safety, our security, our society, our economy, to our ultimate survival.”

He also does not minimize the chances of such an event taking place: “Black market trade in nuclear secrets and nuclear materials abound. The technology to build a bomb has spread. Terrorists are determined to buy, build, or steal one.”

It is amazing that this Paul Revere-style alert and call to action, delivered by the President of the United States on the world stage, has been received as though it were an oration by Chicken Little. Had the President himself failed to follow up, that might explain the lack of attention from commentators, think tanks, talking heads, and loquacious pundits. Certainly, the Right of Boom fails to build on the solid case made by President Obama.

But the President has not neglected this topic; far from it. While in Prague, he laid out an agenda and has assiduously adhered to it ever since. His Prague address called for efforts to expand cooperation with Russia and to seek new partnerships to lock down the fissile materials that enable nuclear weapons. He identified comprehensive areas of concern:

We must also build on our efforts to break up black markets, detect and intercept materials in transit, and use financial tools to disrupt this dangerous trade. Because this threat will be lasting, we should come together to turn efforts such as the Proliferation Security Initiative and the Global Initiative to Combat Nuclear Terrorism into durable international institutions. And we should start by having a Global Summit on Nuclear Security that the United States will host within the next year.

President Obama organized a summit meeting in Washington, DC, in 2010 that was attended by 38 heads of state. This was the largest gathering of heads of state called by a U.S. president since the organizational meeting for the United Nations in 1945. He then held follow-up summits in 2012 in Seoul, South Korea, and in 2014 in The Hague, the Netherlands. A fourth summit will be held March 31 to April 1, 2016, at the Walter E. Washington Convention Center in Washington, DC. These historic gatherings of large numbers of heads of state have taken place with remarkably little publicity or comment from politicians or the public. Typically, the news media have covered the meetings while they were in session, but there has been virtually no mention of the activities that these summits have generated. Because the meetings were billed as “Nuclear Security Summits,” they probably generated much less interest than if they had been headlined as “Nuclear Terrorism Summits,” which would in fact have been a far more accurate title.

These summit meetings have spawned numerous working groups that pursue targeted goals during the intervals between meetings. The working groups operate under an innovative approach to international diplomacy that seems to be grounded in a philosophy of achieving what is possible rather than being stymied by the usual impediments to negotiated agreements. They bring together countries with mutual concerns, which work to create implementable policy statements, starting with no predetermined format, structure, or reporting mechanism. In an attempt to stimulate creativity and new leadership, participants are chosen not on the basis of their governmental titles or rank but for their relevant expertise. They are given the titles of “Sherpa” and “Sous-Sherpa.” The very title, associated with providing assistance to mountain climbers, sets a positive tone. Another innovative break with tradition and creative use of language is to refer to the statements that are produced as “gift baskets.” These gift baskets have resulted in many countries pledging to take further action and applying peer pressure on other countries to do the same.

As of April 2015, there are 15 groups10 working to create these gift baskets. Group size ranges from four countries, in the group focused on reducing the use of HEU for the production of medical isotopes, to thirty-five, in the group seeking to strengthen nuclear security implementation. The latter group has been working to integrate IAEA nuclear security policies into national rules and regulations.

Some of the other topics being addressed include the security of fissile material transportation, the security of radiological materials, forensics in nuclear security, and the promotion of countries becoming free of HEU. The elimination, since 2009, of all HEU from 12 countries has been a major accomplishment, particularly the removal of all HEU from Ukraine, which was announced in March 2012.

While Schwartz mentions the Nuclear Security Summits in passing, he fails to recognize the innovative approach pursued by “gift basket” diplomacy or the successes that have resulted from it. Furthermore, the Nuclear Security Summit initiative has created a framework for approaching nuclear terrorism that would have applications following a terrorist nuclear detonation in an American city. Schwartz does not include that framework in his analysis of potential “right of boom” government actions.

More significant than the limited vision of Schwartz’s scenario regarding retaliation and new international security norms is his complete neglect of the horrific domestic situation that the President and his or her advisors would need to confront. Certainly the President would need to explain to the American public how he or she would respond to the perpetrators, but it could be argued that the American public’s main concern would be the maintenance of civil society. Schwartz presents a hypothetical transcript of an address by the President to the American people in which the President notes that he is speaking on his own authority, enhanced by the advice of the cabinet and the consent of Congress. However, that address makes no mention of the deaths, the devastation, the interruption of commerce, the breakdowns in communications, the overwhelming strains on transportation systems and medical infrastructure, the outbreaks of civil disorder, or the general fear and hysteria that would surely be sweeping the country.

Perhaps it is asking too much for The Right of Boom to carry that load in addition to introducing the challenges of international actions, plans, and policy. Yet its scenario, which may already leave many readers incredulous about the actions it does address, is rendered even less believable by its neglect of these obvious civil society considerations.

All of these issues were addressed in the article “The Day After: Action Following a Nuclear Blast in a U.S. City,”11 by Ashton B. Carter, Michael M. May, and William J. Perry, published in the Autumn 2007 issue of The Washington Quarterly (p. 19). This trio of authors has deep knowledge of how nuclear terrorism might manifest itself and what the resulting consequences would be. Ashton B. Carter is currently the U.S. Secretary of Defense, Michael M. May is a former director of the Lawrence Livermore nuclear weapons development laboratory, and William J. Perry served as Secretary of Defense during the Clinton administration. These heavyweights wrote:

As grim a prospect as this scenario (a terrorist nuclear explosion in a U.S. city) is for policymakers to contemplate, a failure to develop a comprehensive contingency plan and inform the American public, where appropriate, about its particulars will only serve to amplify the devastating impact of a nuclear attack on a U.S. city…

In considering the actions that need to be taken on the “Day After,” they take more seriously than Schwartz does both the possibility of actual follow-on attacks and the threat of such attacks. Their short article addresses the physical impact of blast and radiation, problems of evacuation, medical care, civil unrest, and related issues. There is also a brief section dealing with retaliation and deterrence. It is surprising that Schwartz does not reference this earlier article by such authoritative authors.

A direct extension of the “Day After” article is an essay12 by Richard L. Garwin entitled “A Nuclear Explosion in a City or an Attack on a Nuclear Reactor,” which was included in the Summer 2010 issue of The Bridge, a publication of the National Academy of Engineering, within a special installment, “Nuclear Dangers.” Garwin has been a senior advisor for many years to the highest levels of the U.S. government on nuclear weapons policy and other technologies that are relevant to U.S. military and security affairs. In 1951, when Garwin was 23 years old, he turned the concepts developed by Edward Teller and Stanislaw Ulam for the hydrogen bomb into engineering and assembly specifications that produced the first manmade thermonuclear explosion at Enewetak Atoll in the Pacific Ocean in 1952.

Garwin’s essay parallels that of Carter, May, and Perry, from which he quotes at length. Garwin is explicit that he is hypothesizing a terrorist improvised nuclear device that uses highly enriched uranium and the Hiroshima gun design. This IND, like the other hypothetical weapons discussed here, has a yield of between 10 and 15 kilotons. It is worth noting that everyone who addresses the issue of a terrorist nuclear weapon and who has knowledge of the underlying technology chooses to focus on a device of about 10 kilotons. Garwin also notes that the scenario he addresses “…was the focus of President Obama’s Nuclear Security Summit in Washington on April 12-13, 2010 (White House 2010).”

Garwin also emphasizes a point of great concern, made by the trio, with the following quote from the “Day After” article: