Speed Grid Connection Using ‘Smart AI Fast Lanes’ and Competitive Prizes

Innovation in artificial intelligence (AI) and computing capacity is essential for U.S. competitiveness and national security. However, AI data center electricity use is growing rapidly. Data centers already consume more than 4% of U.S. electricity annually, and that share could rise to between 6% and 12% by 2028. At the same time, electricity rates are rising for consumers across the country, with transmission and distribution infrastructure costs a major driver of these increases. For the first time in fifteen years, the U.S. is experiencing a meaningful increase in electricity demand. Data centers already consume more than 25% of electricity in Virginia, which leads the world in data center installations. Data center electricity load growth results in real economic and environmental impacts for local communities. It also represents a national policy trial of how the U.S. responds to rising power demand from the electrification of homes, transportation, and manufacturing, which are important technology transitions for cutting carbon emissions and air pollution.

Federal and state governments need to ensure that the development of new AI and data center infrastructure does not increase costs for consumers, harm the environment, or exacerbate existing inequalities. “Smart AI Fast Lanes” is a policy and infrastructure investment framework that ensures the U.S. leads the world in AI while building an electricity system that is clean, affordable, reliable, and equitable. By leveraging innovation prizes that pay for performance, coupled with public-private partnerships, data center providers can work with the Department of Energy, the Foundation for Energy Security and Innovation (FESI), the Department of Commerce, National Labs, state energy offices, utilities, and the Department of Defense to drive innovation that increases energy security while lowering costs.

Challenge and Opportunity

Targeted policies can ensure that the development of new AI and data center infrastructure does not increase costs for consumers, harm the environment, or exacerbate existing energy burdens. Allowing new clean power sources co-located or contracted with AI computing facilities to connect to the grid quickly, and then managing any infrastructure costs associated with that new interconnection, would accelerate the addition of new clean generation for AI while lowering electricity costs for homes and businesses.

One of the biggest bottlenecks in adding much-needed capacity to the electricity grid in many regions of the U.S. is the so-called “interconnection queue”. Regional requirements differ, but power plants must typically complete a number of studies on how a project affects grid infrastructure before they are allowed to connect. Solar, wind, and battery projects represented 95% of the capacity waiting in interconnection queues in 2023. The operator of Texas’ power grid, the Electric Reliability Council of Texas (ERCOT), uses a “connect and manage” interconnection process that results in faster interconnections of new energy supplies than in the rest of the country. Instead of requiring each power plant to complete lengthy studies of needed system-wide infrastructure investments before connecting to the grid, the “connect and manage” approach in Texas gets power plants online quicker than a “studies first” approach. Texas manages any risks that arise using the power markets and system-wide planning efforts. The results are clear: the median time from an interconnection request to commercial operations in Texas was four years, compared to five years in New York and more than six and a half years in California.

“Smart AI Fast Lanes” expands the spirit of the Texas “connect and manage” approach nationwide for data centers and clean energy, and adds to it investment and innovation prizes to speed up the process, ensure grid reliability, and lower costs.

Data center providers would work with the Department of Energy, the Foundation for Energy Security and Innovation (FESI), the Department of Commerce, National Laboratories, state energy offices, utilities, and the Department of Defense to speed up interconnection queues, spur innovation in efficiency, and re-invest in infrastructure, to increase energy security and lower costs.

Why FESI Should Lead ‘Smart AI Fast Lanes’

With FESI managing this effort, the process can move faster than the government acting alone. FESI is an independent, non-profit, agency-related foundation that was created by Congress in the CHIPS and Science Act of 2022 to help the Department of Energy achieve its mission and accelerate “the development and commercialization of critical energy technologies, foster public-private partnerships, and provide additional resources to partners and communities across the country supporting solutions-driven research and innovation that strengthens America’s energy and national security goals”. Congress has created many other agency-related foundations, such as the Foundation for NIH, the National Fish and Wildlife Foundation, and the National Park Foundation, which was created in 1935. These agency-related foundations have a demonstrated record of raising external funding to leverage federal resources and enabling efficient public-private partnerships. As a foundation supporting the mission of the Department of Energy, FESI has a unique opportunity to quickly respond to emergent priorities and create partnerships to help solve energy challenges.

As an independent organization, FESI can leverage the capabilities of the private sector, academia, philanthropies, and other organizations to enable collaboration with federal and state governments. FESI can also serve as an access point for additional external investment, because shared risk structures and clear rules of engagement make emerging energy technologies more attractive to institutional capital. For example, the National Fish and Wildlife Foundation awards grants that are matched with non-federal private, philanthropic, or local funding sources that multiply the impact of any federal investments. In addition, the National Fish and Wildlife Foundation has partnered with the Department of Defense and external funding sources to enhance coastal resilience near military installations. Both AI compute capabilities and energy resilience are of strategic importance to the Department of Defense, Department of Energy, and other agencies, and leveraging public-private partnerships is a key pathway to enhance capabilities and security. FESI leading a Smart AI Fast Lanes initiative could be a force multiplier to enable rapid deployment of clean AI compute capabilities that are good for communities, companies, and national security.

Use Prizes to Lessen Cost and Maximize Return

The Department of Energy has long used prize competitions to spur innovation and accelerate access to funding and resources. Prize competitions with focused objectives but unstructured pathways for success enable the private sector to compete and advance innovation without requiring significant federal capacity and involvement. Federal prize programs pay for performance and results, while also providing a mechanism to crowd in additional philanthropic and private sector investment. In the Smart AI Fast Lane framework, FESI could use prizes to support energy innovation from AI data centers while working with the Department of Energy’s Office of Cybersecurity, Energy Security, and Emergency Response (CESER) to enable a repeatable and scalable public-private partnership program. These prizes would be structured so that FESI itself carries a low administrative and operational burden, with other groups such as American-Made, National Laboratories, or organizations like FAS helping to provide technical expertise to review and administer prize applications. This can ensure quality while enabling scalable growth.

Plan of Action

Here’s how “Smart AI Fast Lanes” would work. For any proposed data center investment of more than 250 MW, companies could apply to work with FESI. Successful applications would leverage public, private, and philanthropic funds and technical assistance. Projects would be required to increase clean energy supplies, achieve world-leading data center energy efficiency, invest in transmission and distribution infrastructure, and/or deploy virtual power plants for grid flexibility.

Recommendation 1. Use a “Smart AI Fast Lane” Connection Fee to Quickly Connect to the Grid, Further Incentivized by a “Bring Your Own Power” Prize

New large AI data center loads choosing the “Smart AI Fast Lane” would pay a fee to connect to the grid without first completing lengthy pre-connection cost studies. Those payments would go into a fund, managed and overseen by FESI, that would be used to cover any infrastructure costs incurred by regional grids for the first three years after project completion. The fee could be a flat fee based on data center size, or structured as an auction, enabling the data centers bidding the highest in a region to be at the front of the line. This enables the market to incentivize the highest priority additions. Alternatively, large load projects could choose to do the studies first and remain in the regular – and likely slower – interconnection queue to avoid the fee.
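The auction variant of the connection fee can be illustrated with a small Python sketch. It is only an illustration under stated assumptions: the memo does not specify an auction design, so the highest-bid-first rule, the tie-break, the project names, the regions, and the dollar amounts below are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FastLaneBid:
    project: str    # hypothetical project name
    region: str     # hypothetical grid region label
    load_mw: float  # proposed data center load in MW
    bid_usd: float  # connection fee offered (made-up figure)


def order_queue(bids, region):
    """Return the bids for one region, highest bid first.

    Ties are broken alphabetically by project name; this tie-break is an
    assumption made only so the ordering is deterministic.
    """
    regional = [b for b in bids if b.region == region]
    return sorted(regional, key=lambda b: (-b.bid_usd, b.project))


def fund_balance(bids):
    """Total paid into the fund, assuming every bidder pays its own bid."""
    return sum(b.bid_usd for b in bids)


bids = [
    FastLaneBid("alpha", "region-a", 300, 4_000_000),
    FastLaneBid("beta", "region-a", 500, 9_000_000),
    FastLaneBid("gamma", "region-b", 260, 2_500_000),
]

queue = order_queue(bids, "region-a")
print([b.project for b in queue])  # front of the line comes first
print(fund_balance(bids))          # fees available to cover grid costs
```

A flat fee based on data center size would replace `bid_usd` with a schedule keyed on `load_mw`; the queue would then need a different ordering rule, such as application date.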

In addition, FESI could facilitate a “Bring Your Own Power” prize award that is a combination of public, private, and philanthropic funds that data center developers can match to contract for new, additional zero-emission electricity generated locally that covers twice as much as the data center uses annually. For data centers committing to this “Smart AI Fast Lane” process, both the data center and the clean energy supply would receive accelerated priority in the interconnection queue and technical assistance from National Laboratories. This leverages economies of scale for projects, lowers the cost of locally-generated clean electricity, and gets clean energy connected to the grid quicker. Prize resources would support a “connect and manage” interconnection approach to cover 75% of the costs of any required infrastructure for local clean power projects resulting from the project. FESI prize resources could further supplement these payments to upgrade electrical infrastructure in areas of national need for new electricity supplies to maintain electricity reliability. These include areas assessed by the North American Electric Reliability Corporation (NERC) to have a high risk of an electricity shortfall in the coming years, such as the Upper Midwest or Gulf Coast, or areas with an elevated risk such as California, the Great Plains, Texas, the Mid-Atlantic, or the Northeast.
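The two quantities in the “Bring Your Own Power” proposal, clean generation equal to twice the data center's annual use and prize coverage of 75% of required infrastructure costs, reduce to simple arithmetic. The sketch below assumes a constant average load over an 8,760-hour year; the 300 MW load and the $40 million upgrade cost are hypothetical inputs, not figures from this memo.

```python
HOURS_PER_YEAR = 8760  # non-leap year


def annual_use_mwh(average_load_mw):
    """Annual electricity use, assuming a constant average load."""
    return average_load_mw * HOURS_PER_YEAR


def required_clean_mwh(average_load_mw, multiple=2.0):
    """New zero-emission generation to contract: twice annual use."""
    return multiple * annual_use_mwh(average_load_mw)


def prize_share_usd(infrastructure_cost_usd, share=0.75):
    """Portion of required infrastructure cost covered by prize resources."""
    return share * infrastructure_cost_usd


load_mw = 300                 # hypothetical average data center load
upgrade_cost = 40_000_000     # hypothetical interconnection upgrade cost

print(annual_use_mwh(load_mw))        # 2628000 MWh used per year
print(required_clean_mwh(load_mw))    # 5256000.0 MWh of new clean supply
print(prize_share_usd(upgrade_cost))  # 30000000.0 USD covered by the prize
```

Real facilities do not run at a constant load, so a program would more likely use metered annual consumption than a nameplate or average-load estimate.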

Recommendation 2. Create an Efficiency Prize To Establish World-Leading Energy and Water Efficiency at AI Data Centers

Data centers have different design configurations that affect how much energy and water are needed to operate. Data centers use electricity for computing, but also for the cooling systems needed for computing equipment, and there are innovation opportunities to increase the efficiency of both. One historical measure of AI data center energy efficiency is Power Use Effectiveness (PUE), which is the total facility annual energy use, divided by the computing equipment annual energy use, with values closer to 1.0 being more efficient. Similarly, Water Use Effectiveness (WUE) is measured as total annual water use divided by the computing equipment annual energy use, with closer to zero being more efficient. We should continue to push for improvement in PUE and WUE, but these metrics are incomplete for driving deep innovation because they do not reflect how much computing power is provided and do not assess impacts on the broader energy infrastructure system. While multiple metrics for data center energy efficiency have been proposed over the past several years, what is important for innovation is to improve how much AI computing work we get for the amount of energy and water used. Just like efficiency in a car is measured in miles per gallon (MPG), we need to measure the “MPG” of how AI data centers perform work and then create incentives and competition for continuous improvements. There could be different metrics for different types of AI training and inference workloads, but a starting point could be the tokens per kilowatt-hour of electricity used. A token is a word or portion of a word that AI foundation models use for analysis. Another way could be to measure the efficiency of computing performance, or FLOPS, per kilowatt-hour. The more analysis an AI model or data center can perform using the same amount of energy, the more energy efficient it is.
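The metrics described above can be written out as a short Python sketch. PUE and WUE follow the definitions in the text. The tokens-per-kilowatt-hour function divides by total facility energy, which is an assumption: the memo does not say whether total or computing-equipment energy should be the denominator. Expressing WUE in liters per kilowatt-hour is also an assumption, and all input figures are hypothetical.

```python
def pue(total_facility_kwh, it_equipment_kwh):
    """Power Use Effectiveness: total facility energy / computing energy."""
    if it_equipment_kwh <= 0:
        raise ValueError("computing equipment energy must be positive")
    return total_facility_kwh / it_equipment_kwh


def wue(total_water_liters, it_equipment_kwh):
    """Water Use Effectiveness: total water use / computing energy (L/kWh)."""
    if it_equipment_kwh <= 0:
        raise ValueError("computing equipment energy must be positive")
    return total_water_liters / it_equipment_kwh


def tokens_per_kwh(tokens_processed, total_facility_kwh):
    """An 'MPG'-style metric: AI work delivered per unit of energy.

    Uses total facility energy as the denominator (an assumption).
    """
    if total_facility_kwh <= 0:
        raise ValueError("facility energy must be positive")
    return tokens_processed / total_facility_kwh


# Hypothetical annual figures for one facility.
total_kwh = 120_000_000
it_kwh = 100_000_000
water_liters = 30_000_000
tokens = 6_000_000_000_000

print(pue(total_kwh, it_kwh))             # closer to 1.0 is better
print(wue(water_liters, it_kwh))          # closer to 0 is better
print(tokens_per_kwh(tokens, total_kwh))  # higher is better
```

Two facilities with the same PUE can differ widely in tokens per kilowatt-hour, which is the gap the “MPG” framing is meant to expose.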

FESI could deploy sliding-scale innovation prizes based on data center size for new facilities that demonstrate leading-edge AI data center MPG. These could be based on efficiency targets for tokens per kilowatt-hour, FLOPS per kilowatt-hour, top-performing PUE, or other metrics of energy efficiency. Similar prizes could be provided for water use efficiency, within different classes of cooling technologies, for facilities that exceed best-in-class performance. These prizes could be modeled after the Egg-Tech Prize run by the Foundation for Food & Agriculture Research (FFAR), the USDA’s agency-related foundation, a program that was easy to administer and has had great success. A secondary benefit of an efficiency innovation prize is continuous competition for improvement, and open information about best-in-class data center facilities.

Fig. 1. Power Use Effectiveness (PUE) and Water Use Effectiveness (WUE) values for data centers. Source: LBNL 2024

Recommendation 3. Create Prizes to Maximize Transmission Throughput and Upgrade Grid Infrastructure

FESI could award prizes for rapid deployment of reconductoring, new transmission, or grid-enhancing technologies to increase the transmission capacity for any project in DOE’s Coordinated Interagency Transmission Authorizations and Permits Program. Similarly, FESI could award prizes for utilities to upgrade local distribution infrastructure beyond the direct needs for the project to reduce future electricity rate cases, which will keep electricity costs affordable for residential customers. The Department of Energy already has authority to finance up to $2.5 billion in the Transmission Facilitation Program, a revolving fund administered by the Grid Deployment Office (GDO) that helps support transmission infrastructure. These funds could be used for public-private partnerships that are located in a national interest electric transmission corridor and are necessary to accommodate an increase in electricity demand across more than one state or transmission planning region.

Recommendation 4. Develop Prizes That Reward Flexibility and End-Use Efficiency Investments

Flexibility in how and when data centers use electricity can meaningfully reduce the stress on the grid. FESI should award prizes to data centers that demonstrate best-in-class flexibility through smart controls and operational improvements. Prizes could also be awarded to utilities hosting data centers that reduce summer and winter peak loads in the local service territory. Prizes for utilities that meet home weatherization targets and deploy virtual power plants could help reduce costs and grid stress in local communities hosting AI data centers.

Conclusion

The U.S. is facing the risk of electricity demand outstripping supplies in many parts of the country, which would be severely detrimental to people’s lives, to the economy, to the environment, and to national security. “Smart AI Fast Lanes” is a policy and investment framework that can rapidly increase clean energy supply, infrastructure, and demand management capabilities.

It is imperative that the U.S. addresses the growing demand from AI and data centers, so that the U.S. remains on the cutting edge of innovation in this important sector. How the U.S. approaches and solves the challenge of new demand from AI is a broader test of how the country prepares its infrastructure for increased electrification of vehicles, buildings, and manufacturing, as well as how the country addresses both carbon pollution and the impacts from climate change. The “Smart AI Fast Lanes” framework and FESI-run prizes will enable U.S. competitiveness in AI, keep energy costs affordable, reduce pollution, and prepare the country for new opportunities.

This memo is part of our AI & Energy Policy Sprint, a policy project to shape U.S. policy at the critical intersection of AI and energy.

A Holistic Framework for Measuring and Reporting AI’s Impacts to Build Public Trust and Advance AI

As AI becomes more capable and integrated throughout the United States economy, its growing demand for energy, water, land, and raw materials is driving significant economic and environmental costs, from increased air pollution to higher costs for ratepayers. A recent report projects that data centers could consume up to 12% of U.S. electricity by 2028, underscoring the urgent need to assess the tradeoffs of continued expansion. To craft effective, sustainable resource policies, we need clear standards for estimating data centers’ true energy needs and for measuring and reporting the specific AI applications driving their resource consumption. Local and state-level bills calling for more oversight of utility rates and impacts to ratepayers have received bipartisan support, and this proposal builds on that momentum.

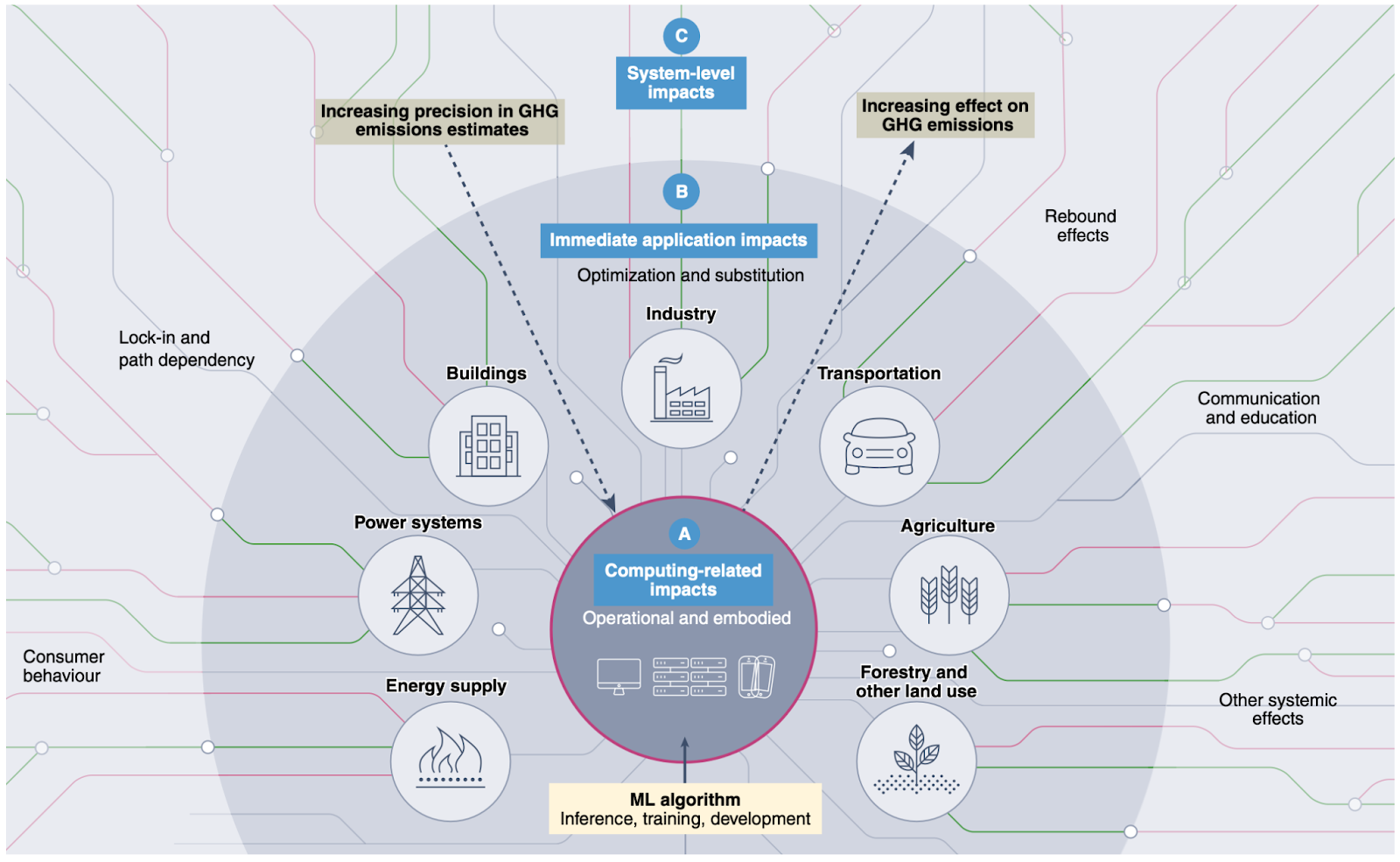

In this memo, we draw on research proposing a holistic evaluation framework for characterizing AI’s environmental impacts, which establishes three categories of impacts arising from AI: (1) computing-related impacts; (2) immediate application impacts; and (3) system-level impacts. Concerns around AI’s computing-related impacts, e.g. energy and water use due to AI data centers and hardware manufacturing, have become widely known, and corresponding policy is starting to be put into place. However, AI’s immediate application and system-level impacts, which arise from the specific use cases to which AI is applied and the broader socio-economic shifts resulting from its use, remain poorly understood, despite their greater potential for societal benefit or harm.

To ensure that policymakers have visibility into the full range of AI’s environmental impacts, we recommend that the National Institute of Standards and Technology (NIST) oversee the creation of frameworks to measure them. Frameworks should rely on quantitative measurements of the computing and application related impacts of AI and qualitative data based on engagements with the stakeholders most affected by the construction of data centers. NIST should produce these frameworks based on convenings that include academic researchers, corporate governance personnel, developers, utility companies, vendors, and data center owners in addition to civil society organizations. Participatory workshops will yield new guidelines, tools, methods, protocols and best practices to facilitate the evolution of industry standards for the measurement of the social costs of AI’s energy infrastructures.

Challenge and Opportunity

Resource consumption associated with AI infrastructures is expanding quickly, and this has negative impacts, including asthma from air pollution associated with diesel backup generators, noise pollution, light pollution, excessive water and land use, and financial impacts to ratepayers. A lack of transparency regarding these outcomes, and of public participation to minimize them, risks losing the public’s trust, which in turn will inhibit the beneficial uses of AI. While there is a huge amount of capital expenditure and a massive forecasted growth in power consumption, there remains a lack of transparency and scientific consensus around the measurement of AI’s environmental impacts with respect to data centers and their related negative externalities.

A holistic evaluation framework for assessing AI’s broader impacts requires empirical evidence, both qualitative and quantitative, to influence future policy decisions and establish more responsible, strategic technology development. Focusing narrowly on carbon emissions or energy consumption arising from AI’s computing-related impacts is not sufficient. Measuring AI’s application and system-level impacts will help policymakers consider multiple data streams, including electricity transmission, water systems, and land use, in tandem with downstream economic and health impacts.

Regulatory and technical attempts so far to develop scientific consensus and international standards around the measurement of AI’s environmental impacts have focused on documenting AI’s computing-related impacts, such as the energy use, water consumption, and carbon emissions required to build and use AI. Measuring and mitigating AI’s computing-related impacts is necessary, and has received attention from policymakers (e.g. the introduction of the AI Environmental Impacts Act of 2024 in the U.S., provisions for environmental impacts of general-purpose AI in the EU AI Act, and data center sustainability targets in the German Energy Efficiency Act). However, research by Kaack et al. (2022) highlights that impacts extend beyond computing. AI’s application impacts, which arise from the specific use cases for which AI is deployed (e.g. AI’s enabled emissions, such as those from applying AI to oil and gas drilling), have much greater potential scope for positive or negative impacts than AI’s computing impacts alone, depending on how AI is used in practice. Finally, AI’s system-level impacts, which include even broader, cascading social and economic impacts associated with AI energy infrastructures, such as increased pressure on local utility infrastructure leading to increased costs to ratepayers, or health impacts to local communities due to increased air pollution, have the greatest potential for positive or negative impacts, while being the most challenging to measure and predict. See Figure 1 for an overview.

Figure 1. Overview of AI’s computing-related, immediate application, and system-level impacts, from Kaack et al. (2022). Effectively understanding and shaping AI’s impacts will require going beyond impacts arising from computing alone, and requires consideration and measurement of impacts arising from AI’s uses (e.g. in optimizing power systems or agriculture) and how AI’s deployment throughout the economy leads to broader systemic shifts, such as changes in consumer behavior.

Effective policy recommendations require more standardized measurement practices, a point raised by the Government Accountability Office’s recent report on AI’s human and environmental effects, which explicitly calls for increasing corporate transparency and innovation around technical methods for improved data collection and reporting. But data should also include multi-stakeholder engagement to ensure there are more holistic evaluation frameworks that meet the needs of specific localities, including state and local government officials, businesses, utilities, and ratepayers. Furthermore, while states and municipalities are creating bills calling for more data transparency and responsibility, including in California, Indiana, Oregon, and Virginia, the lack of federal policy means that data center owners may move their operations to states that have fewer protections in place and similar levels of existing energy and data transmission infrastructure.

States are also grappling with the potential economic costs of data center expansion. Policy Matters Ohio found that tax breaks for data center owners are hurting tax revenue streams that should be used to fund public services. In Michigan, tax breaks for data centers are increasing the cost of water and power for the public while undermining the state’s climate goals. Some Georgia Republicans have stated that data center companies should “pay their way.” While there are arguments that data centers can provide useful infrastructure, connectivity, and even revenue for localities, a recent report shows that at least ten states each lost over $100 million a year in revenue to data centers because of tax breaks. The federal government can help create standards that allow stakeholders to balance the potential costs and benefits of data centers and related energy infrastructures. There is an urgent need to increase transparency and accountability through multi-stakeholder engagement, maximizing economic benefits while reducing waste.

Despite the high economic and policy stakes, critical data needed to assess the full impacts—both costs and benefits—of AI and data center expansion remains fragmented, inconsistent, or entirely unavailable. For example, researchers have found that state-level subsidies for data center expansion may have negative impacts on state and local budgets, but this data has not been collected and analyzed across states because not all states publicly release data about data center subsidies. Other impacts, such as the use of agricultural land or public parks for transmission lines and data center siting, must be studied at a local and state level, and the various social repercussions require engagement with the communities who are likely to be affected. Similarly, estimates on the economic upsides of AI vary widely, e.g. the estimated increase in U.S. labor productivity due to AI adoption ranges from 0.9% to 15% due in large part to lack of relevant data on AI uses and their economic outcomes, which can be used to inform modeling assumptions.

Data centers are highly geographically clustered in the United States, more so than other industrial facilities such as steel plants, coal mines, factories, and power plants (Fig. 4.12, IEA World Energy Outlook 2024). This means that certain states and counties are experiencing disproportionate burdens associated with data center expansion. These burdens have led to calls for data center moratoriums or for the cessation of other energy development, including in states like Indiana. Improved measurement and transparency can help planners avoid overly burdensome concentrations of data center infrastructure, reducing local opposition.

With a rush to build new data center infrastructure, states and localities must also face another concern: overbuilding. For example, Microsoft recently put a hold on parts of its data center contract in Wisconsin and paused another in central Ohio, along with contracts in several other locations across the United States and internationally. These situations often stem from inaccurate demand forecasting, prompting utilities to undertake costly planning and infrastructure development that ultimately goes unused. With better measurement and transparency, policymakers will have more tools to prepare for future demands, avoiding the negative social and economic impacts of infrastructure projects that are started but never completed.

While there have been significant developments in measuring the direct, computing-related impacts of AI data centers, public participation is needed to fully capture many of their indirect impacts. Data centers can be constructed so they are more beneficial to communities while mitigating their negative impacts, e.g. by recycling data center heat, and they can also be constructed to be more flexible by not using grid power during peak times. However, this requires collaborative innovation and cross-sector translation, informed by relevant data.

Plan of Action

Recommendation 1. Develop a database of AI uses and framework for reporting AI’s immediate applications in order to understand the drivers of environmental impacts.

The first step towards informed decision-making around AI’s social and environmental impacts is understanding what AI applications are actually driving data center resource consumption. This will allow specific deployments of AI systems to be linked upstream to compute-related impacts arising from their resource intensity, and downstream to impacts arising from their application, enabling estimation of immediate application impacts.

The AI company Anthropic demonstrated a proof-of-concept categorizing queries to their Claude language model under the O*NET database of occupations. However, O*NET was developed in order to categorize job types and tasks with respect to human workers, which does not exactly align with current and potential uses of AI. To address this, we recommend that NIST works with relevant collaborators such as the U.S. Department of Labor (responsible for developing and maintaining the O*NET database) to develop a database of AI uses and applications, similar to and building off of O*NET, along with guidelines and infrastructure for reporting data center resource consumption corresponding to those uses. This data could then be used to understand particular AI tasks that are key drivers of resource consumption.

Any entity deploying a public-facing AI model (that is, one that can produce outputs and/or receive inputs from outside its local network) should be able to easily document and report its use case(s) within the NIST framework. A centralized database will allow for collation of relevant data across multiple stakeholders including government entities, private firms, and nonprofit organizations.

Gathering data of this nature may require the reporting entity to perform analyses of sensitive user data, such as categorizing individual user queries to an AI model. However, data is to be reported in aggregate percentages with respect to use categories without attribution to or listing of individual users or queries. This type of analysis and data reporting is well within the scope of existing, commonplace data analysis practices. As with existing AI products that rely on such analyses, reporting entities are responsible for performing that analysis in a way that appropriately safeguards user privacy and data protection in accordance with existing regulations and norms.
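The aggregate reporting described above can be sketched in Python. This is an illustration only: the category names are hypothetical placeholders rather than entries from O*NET or any NIST framework, and the classification step that assigns each query a category is assumed to have already happened inside the reporting entity.

```python
from collections import Counter


def aggregate_use_shares(query_categories):
    """Convert per-query category labels into aggregate percentages.

    Only category counts are used, so the output contains no user
    identifiers and no query text.
    """
    counts = Counter(query_categories)
    total = sum(counts.values())
    if total == 0:
        return {}
    return {
        category: round(100 * n / total, 1)
        for category, n in sorted(counts.items())
    }


# Hypothetical labels produced by an upstream, privacy-preserving classifier.
labels = [
    "coding", "writing", "coding", "analysis",
    "coding", "writing", "coding", "analysis",
]

report = aggregate_use_shares(labels)
print(report)  # {'analysis': 25.0, 'coding': 50.0, 'writing': 25.0}
```

A production pipeline would likely also suppress very small categories, since a category with only a handful of queries could still reveal information about individual users.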

Recommendation 2. NIST should create an independent consortium to develop a system-level evaluation framework for AI’s environmental impacts, while embedding robust public participation in every stage of the work.

Currently, the social costs of AI’s system-level impacts—the broader social and economic implications arising from AI’s development and deployment—are not being measured or reported in any systematic way. These impacts fall heaviest on the local communities that host the data centers powering AI: the financial burden on ratepayers who share utility infrastructure, the health effects of pollutants from backup generators, the water and land consumed by new facilities, and the wider economic costs or benefits of data-center siting. Without transparent metrics and genuine community input, policymakers cannot balance the benefits of AI innovation against its local and regional burdens. Building public trust through public participation is key when it comes to ensuring United States energy dominance and national security interests in AI innovation, themes emphasized in policy documents produced by the first and second Trump administrations.

To develop evaluation frameworks in a way that is both scientifically rigorous and broadly trusted, NIST should stand up an independent consortium via a Cooperative Research and Development Agreement (CRADA). A CRADA allows NIST to collaborate rapidly with non-federal partners while remaining outside the scope of the Federal Advisory Committee Act (FACA), and has been used, for example, to convene the NIST AI Safety Institute Consortium. Membership will include academic researchers, utility companies and grid operators, data-center owners and vendors, state, local, Tribal, and territorial officials, technologists, civil-society organizations, and frontline community groups.

To ensure robust public engagement, the consortium should consult closely with FERC’s Office of Public Participation (OPP)—drawing on OPP’s expertise in plain-language outreach and community listening sessions—and with other federal entities that have deep experience in community engagement on energy and environmental issues. Drawing on these partners’ methods, the consortium will convene participatory workshops and listening sessions in regions with high data-center concentration—Northern Virginia, Silicon Valley, Eastern Oregon, and the Dallas–Fort Worth metroplex—while also making use of online comment portals to gather nationwide feedback.

Guided by the insights from these engagements, the consortium will produce a comprehensive evaluation framework that captures metrics falling outside the scope of direct emissions alone. These system-level metrics could encompass (1) the number, type, and duration of jobs created; (2) the effects of tax subsidies on local economies and public services; (3) the placement of transmission lines and associated repercussions for housing, public parks, and agriculture; (4) the use of eminent domain for data-center construction; (5) water-use intensity and competing local demands; and (6) public-health impacts from air, light, and noise pollution. NIST will integrate these metrics into standardized benchmarks and guidance.

Consortium members will attend public meetings, engage directly with community organizations, deliver accessible presentations, and create plain-language explainers so that non-experts can meaningfully influence the framework’s design and application. The group will also develop new guidelines, tools, methods, protocols, and best practices to facilitate industry uptake and to evolve measurement standards as technology and infrastructure grow.

We estimate a cost of approximately $5 million over two years to complete the work outlined in Recommendations 1 and 2, covering staff time, travel to at least twelve data-center or energy-infrastructure sites across the United States, participant honoraria, and research materials.

Recommendation 3. Mandate regular measurement and reporting on relevant metrics by data center operators.

Voluntary reporting is the status quo, via e.g. corporate Environmental, Social, and Governance (ESG) reports, but voluntary reporting has so far been insufficient for gathering necessary data. For example, while the technology firm OpenAI, best known for its highly popular ChatGPT generative AI model, holds a significant share of the search market and likely a corresponding share of the environmental and social impacts arising from the data centers powering its products, OpenAI chooses not to publish ESG reports or data in any other format regarding its energy consumption or greenhouse gas (GHG) emissions. In order to collect sufficient data at the appropriate level of detail, reporting must be mandated at the local, state, or federal level. At the state level, California’s Climate Corporate Data Accountability Act (SB-253, SB-219) requires that large companies operating within the state report their GHG emissions in accordance with the GHG Protocol; the program is administered by the California Air Resources Board (CARB).

At the federal level, the EU’s Corporate Sustainability Reporting Directive (CSRD), which requires firms operating within the EU to report a wide variety of data related to environmental sustainability and social governance, could serve as a model for regulating companies operating within the U.S. The Environmental Protection Agency’s (EPA) GHG Reporting Program already requires emissions reporting by operators and suppliers associated with large GHG emissions sources, and the Energy Information Administration (EIA) collects detailed data on electricity generation and fuel consumption through Forms 860 and 923. With respect to data centers specifically, the Department of Energy (DOE) could require that developers who are granted rights to build AI data center infrastructure on public lands perform the relevant measurement and reporting. More broadly, reporting could be a requirement to qualify for any local, state, or federal funding or assistance provided to support buildout of U.S. AI infrastructure.

Recommendation 4. Incorporate measurements of social cost into AI energy and infrastructure forecasting and planning.

Estimates of future data center energy use vary widely, largely because of uncertainty around the nature of demands from AI. This uncertainty stems in part from a lack of historical and current data on which AI use cases are most energy intensive and how those workloads are evolving over time. The extent to which challenges in bringing new resources online, such as hardware production limits or bottlenecks in permitting, will influence growth rates also remains unclear. These uncertainties are even more significant when it comes to the holistic impacts (i.e. those beyond direct energy consumption) described above, making it challenging to balance costs and benefits when planning for future demands from AI.

To address these issues, accurate forecasting of demand for energy, water, and other limited resources must incorporate data gathered through the holistic measurement frameworks described above. Further, the forecasting of broader system-level impacts must be incorporated into decision-making around investment in AI infrastructure. Forecasting needs to go beyond just energy use. Models should also predict energy and related infrastructure needs for transmission, the social cost of carbon in terms of pollution, the effects on ratepayers, and the energy demands from chip production.

We recommend that agencies already responsible for energy-demand forecasting—such as the Energy Information Administration at the Department of Energy—integrate, in line with the NIST frameworks developed above, data on the AI workloads driving data-center electricity use into their forecasting models. Agencies specializing in social impacts, such as the Department of Health and Human Services in the case of health impacts, should model social impacts and communicate those to EIA and DOE for planning purposes. In parallel, the Federal Energy Regulatory Commission (FERC) should update its new rule on long-term regional transmission planning, to explicitly include consideration of the social costs corresponding to energy supply, demand and infrastructure retirement/buildout across different scenarios.

Recommendation 5. Transparently use federal, state, and local incentive programs to reward data-center projects that deliver concrete community benefits.

Incentive programs should be tied to holistic estimates of the costs and benefits collected under the frameworks above, not based purely on promises. When considering using incentive programs, policymakers should ask questions such as: How many jobs are created by data centers, for how long do those jobs exist, and do they go to local residents? What tax revenue for municipalities or states is created by data centers versus what subsidies are data center owners receiving? What are the social impacts of using agricultural land or public parks for data center construction or transmission lines? What are the impacts to air quality and other public health issues? Do data centers deliver benefits like load flexibility and sharing of waste heat?

Grid operators (Regional Transmission Organizations [RTOs] and Independent System Operators [ISOs]) can leverage interconnection queues to incentivize data center operators to justify that they have sufficiently considered the impacts to local communities when proposing a new site. FERC recently approved reforms to processing the interconnect request queue, allowing RTOs to implement a “first-ready first-served” approach rather than a first-come first-served approach, wherein proposed projects can be fast-tracked based on their readiness. A similar approach could be used by RTOs to fast-track proposals that include a clear plan for how they will benefit local communities (e.g. through load flexibility, heat reuse, and clean energy commitments), grounded in careful impact assessment.
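The difference between the two queue disciplines can be sketched in a few lines of Python. The `InterconnectionRequest` fields, including the `community_benefit_plan` flag, are hypothetical names introduced for illustration; actual readiness criteria are set by each grid operator, and this is only one way such a prioritization might be expressed.

```python
from dataclasses import dataclass


@dataclass
class InterconnectionRequest:
    project: str
    arrival_order: int            # position under first-come first-served
    ready: bool                   # meets the operator's readiness criteria
    community_benefit_plan: bool  # hypothetical flag for a vetted benefit plan


def first_ready_first_served(requests):
    """Order requests by readiness, then benefit plan, then arrival."""
    return sorted(
        requests,
        key=lambda r: (not r.ready, not r.community_benefit_plan, r.arrival_order),
    )


# Placeholder projects, for illustration only.
queue = [
    InterconnectionRequest("A", 1, ready=False, community_benefit_plan=False),
    InterconnectionRequest("B", 2, ready=True, community_benefit_plan=False),
    InterconnectionRequest("C", 3, ready=True, community_benefit_plan=True),
]
ordered = [r.project for r in first_ready_first_served(queue)]
print(ordered)
```

Under first-come first-served the order would simply be A, B, C; in this sketch, ready projects move ahead, and among ready projects those with a community benefit plan are processed first.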

State-level incentives could also be introduced in states with significant existing infrastructure. Such incentives could be determined in collaboration with the National Governors Association, which has been balancing AI-driven energy needs with state climate goals.

Conclusion

Data centers have an undeniable impact on energy infrastructures and the communities living close to them. This impact will continue to grow alongside AI infrastructure investment, which is expected to skyrocket. It is possible to shape a future where AI infrastructure can be developed sustainably, and in a way that responds to the needs of local communities. But more work is needed to collect the necessary data to inform government decision-making. We have described a framework for holistically evaluating the potential costs and benefits of AI data centers, and shaping AI infrastructure buildout based on those tradeoffs. This framework includes: establishing standards for measuring and reporting AI’s impacts, eliciting public participation from impacted communities, and putting gathered data into action to enable sustainable AI development.

This memo is part of our AI & Energy Policy Sprint, a policy project to shape U.S. policy at the critical intersection of AI and energy. Read more about the Policy Sprint and check out the other memos here.

Data centers are highly spatially concentrated, largely due to reliance on existing energy and data transmission infrastructure; it is more cost-effective to continue building where infrastructure already exists than to start fresh in a new region. As long as the cost of performing the proposed impact assessment and reporting in established regions is less than the additional overhead of moving to a new region, data center operators are likely to comply with regulations in order to stay in regions where the sector is established.

Spatial concentration of data centers also arises because data center workloads with high data transmission requirements, such as media streaming and online gaming, need close physical proximity to users in order to reduce data transmission latency. In order for AI to be integrated into these real-time services, data center operators will continue to need a presence in existing geographic regions, barring significant advances in data transmission efficiency and infrastructure.

bad for national security and economic growth. So is infrastructure growth that harms the local communities in which it occurs.

Researchers from Good Jobs First have found that many states are in fact losing tax revenue to data center expansion: “At least 10 states already lose more than $100 million per year in tax revenue to data centers…” More data is needed to determine if data center construction projects coupled with tax incentives are economically advantageous investments on the parts of local and state governments.

The DOE is opening up federal lands in 16 locations to data center construction projects in the name of strengthening America’s energy dominance and ensuring America’s role in AI innovation. But national security concerns around data center expansion should also consider the impacts to communities who live close to data centers and related infrastructures.

Data centers themselves do not automatically ensure greater national security, especially because the critical minerals and hardware components of data centers depend on international trade and manufacturing. At present, the United States is not equipped to contribute the critical minerals and other materials needed to produce data centers, including GPUs and other components.

Federal policy ensures that states or counties do not become overburdened by data center growth and will help different regions benefit from the potential economic and social rewards of data center construction.

Developing federal standards around transparency helps individual states plan for data center construction, allowing for a high-level, comparative look at the energy demand associated with specific AI use cases. Federal intervention is also important because data centers in one state might have transmission lines running through a neighboring state, with resulting impacts that cross jurisdictions. There is a need for a national-level standard.

Producing reliable cost-benefit estimates is currently often extremely challenging. For example, while municipalities often expect there will be economic benefits attached to data centers and that data center construction will yield more jobs in the area, subsidies and short-term jobs in construction do not necessarily translate into economic gains.

To improve the ability of decision makers to do quality cost-benefit analysis, the independent consortium described in Recommendation 2 will examine both qualitative and quantitative data, including permitting histories, transmission plans, land use and eminent domain cases, subsidies, jobs numbers, and health or quality of life impacts in various sites over time. NIST will help develop standards in accordance with this data collection, which can then be used in future planning processes.

Further, there is customer interest in knowing their AI is being sourced from firms implementing sustainable and socially responsible practices. These efforts can be used in marketing communications and reported as a socially and environmentally responsible practice in ESG reports. This serves as an additional incentive for some data center operators to participate in voluntary reporting and maintain operations in locations with increased regulation.

Advance AI with Cleaner Air and Healthier Outcomes

Artificial intelligence (AI) is transforming industries, driving innovation, and tackling some of the world’s most pressing challenges. Yet while AI has tremendous potential to advance public health, such as supporting epidemiological research and optimizing healthcare resource allocation, the public health burden of AI due to its contribution to air pollutant emissions has been under-examined. Energy-intensive data centers, often paired with diesel backup generators, are rapidly expanding and degrading air quality through emissions of air pollutants. These emissions exacerbate or cause various adverse health outcomes, from asthma to heart attacks and lung cancer, especially among young children and the elderly. Without sufficient clean and stable energy sources, the annual public health burden from data centers in the United States is projected to reach up to $20 billion by 2030, with households in some communities located near power plants supplying data centers, such as those in Mason County, WV, facing over 200 times greater burdens than others.

Federal, state, and local policymakers should act to accelerate the adoption of cleaner and more stable energy sources and guide AI’s expansion in a way that aligns innovation with human well-being, advancing the United States’ leadership in AI while ensuring clean air and healthy communities.

Challenge and Opportunity

Forty-six percent of people in the United States breathe unhealthy levels of air pollution. Ambient air pollution, especially fine particulate matter (PM2.5), is linked to 200,000 deaths each year in the United States. Poor air quality remains the nation’s fifth highest mortality risk factor, resulting in a wide range of immediate and severe health issues that include respiratory diseases, cardiovascular conditions, and premature deaths.

Data centers consume vast amounts of electricity to power and cool the servers running AI models and other computing workloads. According to the Lawrence Berkeley National Laboratory, the growing demand for AI is projected to increase the data centers’ share of the nation’s total electricity consumption to as much as 12% by 2028, up from 4.4% in 2023. Without enough sustainable energy sources like nuclear power, the rapid growth of energy-intensive data centers is likely to exacerbate ambient air pollution and its associated public health impacts.

Data centers typically rely on diesel backup generators for uninterrupted operation during power outages. While the total operation time for routine maintenance of backup generators is limited, these generators can create short-term spikes in PM2.5, NOx, and SO2 that go beyond the baseline environmental and health impacts associated with data center electricity consumption. For example, diesel generators emit 200–600 times more NOx than natural gas-fired power plants per unit of electricity produced. Even brief exposure to high levels of NOx can aggravate respiratory symptoms and increase hospitalizations. A recent report to the Governor and General Assembly of Virginia found that backup generators at data centers emitted approximately 7% of the total permitted pollution levels for these generators in 2023. Based on the Environmental Protection Agency’s COBRA modeling tool, the public health cost of these emissions in Virginia is estimated at approximately $200 million, with health impacts extending to neighboring states and reaching as far as Florida. In Memphis, Tennessee, a set of temporary gas turbines powering a large AI data center, which has not undergone a complete permitting process, is estimated to emit up to 2,000 tons of NOx annually. This has raised significant health concerns among local residents and could result in a total public health burden of $160 million annually. These public health concerns coincide with a paradigm shift that favors dirty energy and potentially delays sustainability goals.

In 2023 alone, air pollution attributed to data centers in the United States resulted in an estimated $5 billion in health-related damages, a figure projected to rise up to $20 billion annually by 2030. This projected cost reflects an estimated 1,300 premature deaths in the United States per year by the end of the decade. While communities near data centers and power plants bear the greatest burden, with some households facing over 200 times greater impacts than others, the health impacts of these facilities extend to communities across the nation. The widespread health impacts of data centers further compound the already uneven distribution of environmental costs and water resource stresses imposed by AI data centers across the country.

While essential for mitigating air pollution and public health risks, transitioning AI data centers to cleaner backup fuels and stable energy sources such as nuclear power presents significant implementation hurdles, including lengthy permitting processes. Clean backup generators that match the reliability of diesel remain limited in real-world applications, and multiple key issues must be addressed to fully transition to cleaner and more stable energy.

While it is clear that data centers pose public health risks, comprehensive evaluations of data center air pollution and related public health impacts are essential to grasp the full extent of the harms these centers pose, yet often remain absent from current practices. Washington State conducted a health risk assessment of diesel particulate pollution from multiple data centers in the Quincy area in 2020. However, most states lack similar evaluations for either existing or newly proposed data centers. To safeguard public health, it is essential to establish transparency frameworks, reporting standards, and compliance requirements for data centers, enabling the assessment of PM2.5, NOₓ, SO₂, and other harmful air pollutants, as well as their short- and long-term health impacts. These mechanisms would also equip state and local governments to make informed decisions about where to site AI data center facilities, balancing technological progress with the protection of community health nationwide.

Finally, limited public awareness, insufficient educational outreach, and a lack of comprehensive decision-making processes further obscure the health risks data centers pose. Without robust transparency and community engagement mechanisms, communities housing data center facilities are left with little influence or recourse over developments that may significantly affect their health and environment.

Plan of Action

The United States can build AI systems that not only drive innovation but also promote human well-being, delivering lasting health benefits for generations to come. Federal, state, and local policymakers should adopt a multi-pronged approach to address data center expansion with minimal air pollution and public health impacts, as outlined below.

Federal-level Action

Federal agencies play a crucial role in establishing national standards, coordinating cross-state efforts, and leveraging federal resources to model responsible public health stewardship.

Recommendation 1. Incorporate Public Health Benefits to Accelerate Clean and Stable Energy Adoption for AI Data Centers

Congress should direct relevant federal agencies, including the Department of Energy (DOE), the Nuclear Regulatory Commission (NRC), and the Environmental Protection Agency (EPA), to integrate air pollution reduction and the associated public health benefits into efforts to streamline the permitting process for more sustainable energy sources, such as nuclear power, for AI data centers. Simultaneously, federal resources should be expanded to support research, development, and pilot deployment of alternative low-emission fuels for backup generators while ensuring high reliability.

- Public Health Benefit Quantification. Direct the EPA, in coordination with DOE and public health agencies, to develop standardized methods for estimating the public health benefits (e.g., avoided premature deaths, hospital visits, and economic burden) of using cleaner and more stable energy sources for AI data centers. Require lifecycle emissions modeling of energy sources and translate avoided emissions into quantitative health benefits using established tools such as the EPA’s BenMAP. This should:

  - Include modeling of air pollution exposure and health outcomes (e.g., using tools like EPA’s COBRA)

  - Incorporate cumulative risks from regional electricity generation and local backup generator emissions

  - Account for spatial disparities and vulnerable populations (e.g., children, the elderly, and disadvantaged communities)

  - Evaluate both short-term (e.g., generator spikes) and long-term (e.g., chronic exposure) health impacts

- Preferential Permitting. Instruct the DOE to prioritize and streamline permitting for cleaner energy projects (e.g., small modular reactors, advanced geothermal) that demonstrate significant air pollution reduction and health benefits in supporting AI data center infrastructures. Develop a Clean AI Permitting Framework that allows project applicants to submit health benefit assessments as part of the permitting package to justify accelerated review timelines.

- Support for Cleaner Backup Systems. Expand DOE and EPA R&D programs to support pilot projects and commercialization pathways for alternative backup generator technologies, including hydrogen combustion systems and long-duration battery storage. Provide tax credits or grants for early adopters of non-diesel backup technologies in AI-related data center facilities.

- Federal Guidance & Training. Provide technical assistance to state and local agencies to implement the health benefit quantification methods described above, and fund capacity-building efforts in environmental health departments.

Recommendation 2. Establish a Standardized Emissions Reporting Framework for AI Data Centers

Congress should direct the EPA, in coordination with the National Institute of Standards and Technology (NIST), to develop and implement a standardized reporting framework requiring data centers to publicly disclose their emissions of air pollutants, including PM₂.₅, NOₓ, SO₂, and other hazardous air pollutants associated with backup generators and electricity use.

- Multi-Stakeholder Working Group. Task EPA with convening a multi-stakeholder working group, including representatives from NIST, DOE, state regulators, industry, and public health experts, to define the scope, metrics, and methodologies for emissions reporting.

- Standardization. Develop a federal technical standard that specifies:

  - Types of air pollutants that should be reported

  - Frequency of reporting (e.g., quarterly or annually)

  - Facility-specific disclosures (including generator use and power source profiles)

  - Geographic resolution of emissions data

  - Public access and data transparency protocols
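To make the elements of such a standard concrete, the Python sketch below shows one possible shape for a facility-level report record. Every field name, the `EmissionsReport` class, and the validation rules are assumptions made for illustration; the actual schema would be defined by the working group, and the values shown are placeholders rather than real data.

```python
from dataclasses import dataclass, asdict
import json


@dataclass
class EmissionsReport:
    facility_id: str            # hypothetical identifier
    reporting_period: str       # e.g. a quarter or a year
    county_fips: str            # one possible geographic resolution
    backup_generator_hours: float
    power_source_profile: dict  # share of electricity by source, summing to 1
    emissions_tons: dict        # pollutant name -> tons emitted in the period

    def validate(self):
        """Check that required pollutants are present and shares sum to 1."""
        required = {"PM2.5", "NOx", "SO2"}
        missing = required - set(self.emissions_tons)
        if missing:
            raise ValueError(f"missing pollutants: {sorted(missing)}")
        if abs(sum(self.power_source_profile.values()) - 1.0) > 1e-6:
            raise ValueError("power source shares must sum to 1")
        return True


# Placeholder values only; not measurements from any real facility.
report = EmissionsReport(
    facility_id="EXAMPLE-001",
    reporting_period="2025-Q1",
    county_fips="00000",
    backup_generator_hours=0.0,
    power_source_profile={"grid": 0.9, "onsite_solar": 0.1},
    emissions_tons={"PM2.5": 0.0, "NOx": 0.0, "SO2": 0.0},
)
report.validate()
record_json = json.dumps(asdict(report), indent=2)  # machine-readable for public access
print(record_json)
```

A machine-readable record of this kind would let regulators and the public compare facilities on a consistent basis, whatever fields the final standard adopts.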

State-level Action

Recommendation 1. State environmental and public health departments should conduct a health impact assessment (HIA) before and after data center construction to evaluate discrepancies between anticipated and actual health impacts for existing and planned data center operations. To maintain and build trust, HIA findings, methodologies, and limitations should be publicly available and accessible to non-technical audiences (including policymakers, local health departments, and community leaders representing impacted residents), thereby enhancing community-informed action and participation. Reports should focus on the disparate impact between rural and urban communities, with particular attention to overburdened communities that have under-resourced health infrastructure. In addition, states should coordinate HIAs and share findings to address cross-boundary pollution risks. This includes accounting for nearby communities across state lines, since public health impacts do not stop at jurisdictional borders and the analysis should not either.

Recommendation 2. State public health departments should establish a state-funded program that offers community education forums for affected residents to express their concerns about how data centers impact them. These programs should emphasize leading outreach, engaging communities, and contributing to qualitative analysis for HIAs. Health impact assessments should be used as a basis for informed community engagement.

Recommendation 3. States should incorporate air pollutant emissions related to data centers into their implementation of the National Ambient Air Quality Standards (NAAQS) and the development of State Implementation Plans (SIPs). This ensures that affected areas can meet standards and maintain their attainment statuses. To support this, states should evaluate the adequacy of existing regulatory monitors in capturing emissions related to data centers and determine whether additional monitoring infrastructure is required.

Local-level Action

Recommendation 1. Local governments should revise zoning regulations to include stricter and more explicit health-based protections to prevent data center clustering in already overburdened communities. Additionally, zoning ordinances should address colocation factors and evaluate potential cumulative health impacts. A prominent example is Fairfax County, Virginia, which updated its zoning ordinance in September 2024 to regulate the proximity of data centers to residential areas, require noise pollution studies prior to construction, and establish size thresholds. These updates were shaped through community engagement and input.

Recommendation 2. Local governments should appoint public health experts to zoning boards so that data center placement decisions reflect community health priorities.

Conclusion

While AI can revolutionize industries and improve lives, its energy-intensive nature is also degrading air quality through emissions of air pollutants. Mitigating AI’s growing air pollution and public health risks requires both a comprehensive assessment of AI’s health impacts and a transition of AI data centers to cleaner backup fuels and stable energy sources, such as nuclear power. By adopting more informed and cleaner AI strategies at the federal, state, and local levels, policymakers can mitigate these harms, promote healthier communities, and ensure AI’s expansion aligns with clean air priorities.


Unlocking AI’s Grid Modernization Potential

Surging energy demand and increasingly frequent extreme weather events are bringing new challenges to the forefront of electric grid planning, permitting, operations, and resilience. These hurdles are pushing our already fragile grid to the limit, highlighting decades of underinvestment, stagnant growth, and the pressing need to modernize our system.

While these challenges aren’t new, they are newly urgent. The society-wide emergence of artificial intelligence (AI) is bringing many of these challenges into sharper focus, pushing the already increasing electricity demand to new heights and cementing the need for deployable, scalable, and impactful solutions. Fortunately, many transformational and mature AI tools provide near-term pathways for significant grid modernization.

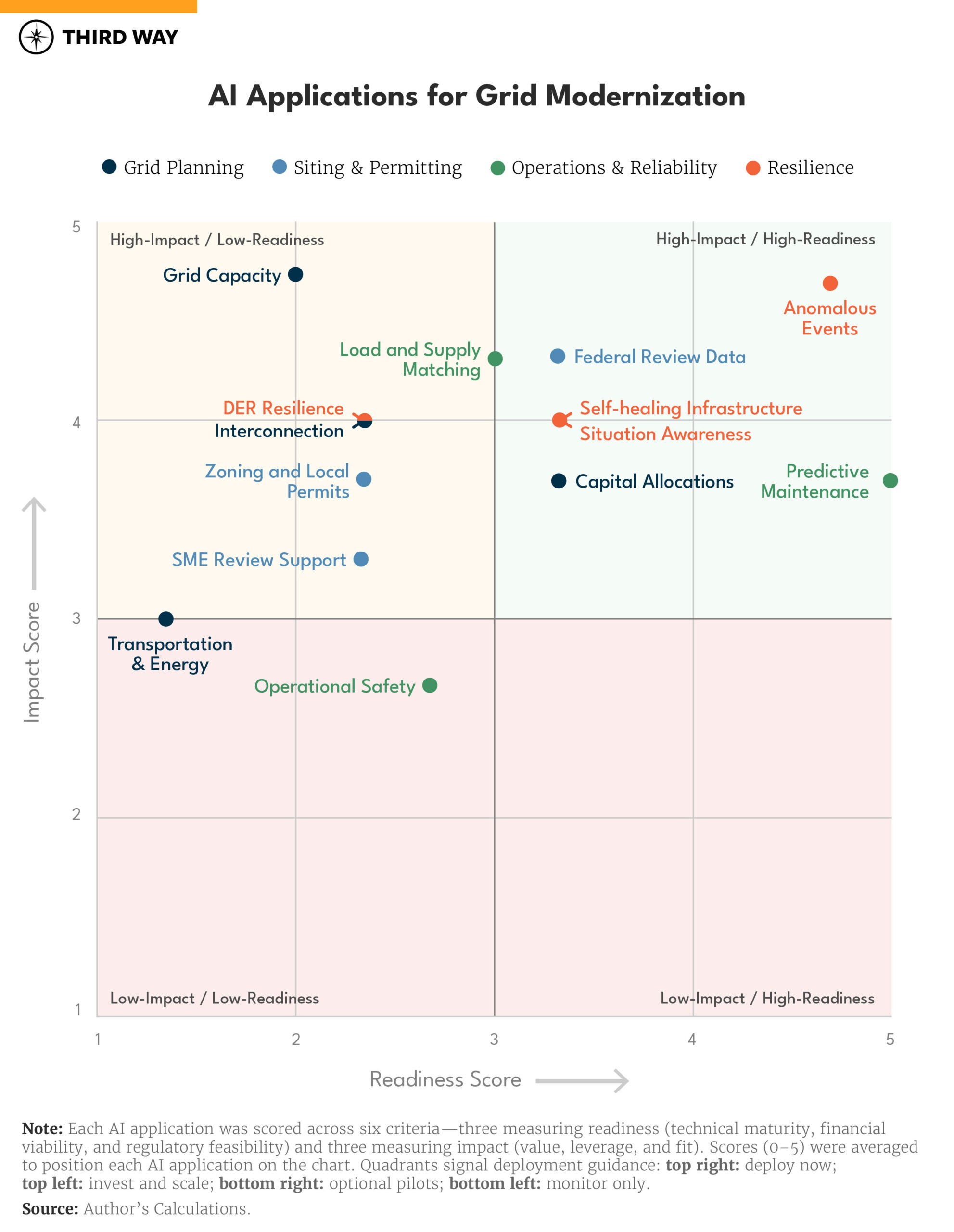

This policy memo builds on foundational research from the US Department of Energy’s (DOE) AI for Energy (2024) report to present a new matrix that maps these unique AI applications onto an “impact-readiness” scale. Nearly half of the applications identified by DOE are high impact and ready to deploy today. An additional ~40% have high impact potential but require further investment and research to move up the readiness scale. Only 2 of 14 use cases analyzed here fall into the “low-impact / low-readiness” quadrant.

Unlike other emerging technologies, AI’s potential in grid modernization is not simply an R&D story, but a deployment one. However, with limited resources, the federal government should invest in use cases that show high-impact potential and demonstrate feasible levels of deployment readiness. The recommendations in this memo target regulatory actions across the Federal Energy Regulatory Commission (FERC) and the Department of Energy (DOE), data modernization programs at the Federal Permitting Improvement Steering Council (FPISC), and funding opportunities and pilot projects at the DOE and the Federal Emergency Management Agency (FEMA).

Thoughtful policy coordination, targeted investments, and continued federal support will be needed to realize the potential of these applications and pave the way for further innovation.

Challenge and Opportunity

Surging Load Growth, Extreme Events, and a Fragmented Federal Response

Surging energy demand and more frequent extreme weather events are bringing new challenges to the forefront of grid planning and operations. Not only is electric load growing at rates not seen in decades, but extreme weather events and cybersecurity threats are becoming more common and costly. All the while, our grid is becoming more complex to operate as new sources of generation and grid management tools evolve. Underlying these complexities is the fragmented nature of our energy system: a patchwork of regional grids, localized standards, and often conflicting regulations.

The emergence of artificial intelligence (AI) has brought many of these challenges into sharper focus. However, the potential of AI to mitigate, sidestep, or solve these challenges is also vast. From more efficient permitting processes to more reliable grid operations, many unique AI use cases for grid modernization are ready to deploy today and have high-impact potential.

The federal government has a unique role to play in both meeting these challenges and catalyzing these opportunities by implementing AI solutions. However, the current federal landscape is fragmented, unaligned, and missing critical opportunities for impact. Nearly a dozen federal agencies and offices are engaged across the AI grid modernization ecosystem (see FAQ #2), with few coordinating in the absence of a defined federal strategy.

To prioritize effective and efficient deployment of resources, recommendations for increased investments (both in time and capital) should be based on a solid understanding of where the gaps and opportunities lie. Historically, program offices across DOE and other agencies have focused efforts on early-stage R&D and foundational science activities for emerging technology. For AI, however, the federal government is well-positioned to support further deployment of the technology into grid modernization efforts, rather than just traditional R&D activities.

AI Applications for Grid Modernization

AI’s potential in grid modernization is significant, expansive, and deployable. Across four distinct categories—grid planning, siting and permitting, operations and reliability, and resilience—AI can improve existing processes or enable entirely new ones. Indeed, the use of AI in the power sector is not a new phenomenon. Industry and government alike have long utilized machine learning (ML) models across a range of power sector applications, and the recent introduction of “foundation” models (such as large language models, or LLMs) has opened up a new suite of transformational use cases. While LLMs and other foundation models can be used in various use cases, AI’s potential to accelerate grid modernization will span both traditional and novel approaches, with many applications requiring custom-built models tailored to specific operational, regulatory, and data environments.

The following 14 use cases are drawn from DOE’s AI for Energy (2024) report and form the foundation of this memo’s analytical framework.

Grid Planning

- Capital Allocations and Planned Upgrades. Use AI to optimize utility investment decisions by forecasting asset risk, load growth, and grid needs to guide substation upgrades, reconductoring, or distributed energy resource (DER)-related capacity expansions.

- Improved Information on Grid Capacity. Use AI to generate more granular and dynamic hosting capacity, load forecast, and congestion data to guide DER siting, interconnection acceleration, and non-wires alternatives.

- Improved Transportation and Energy Planning Alignment. Use AI-enabled joint forecasting tools to align EV infrastructure rollout with utility grid planning by integrating traffic, land use, and load growth data.

- Interconnection Issues and Power Systems Models. Use AI-accelerated power flow models and queue screening tools to reduce delays and improve transparency in interconnection studies.

Siting and Permitting

- Zoning and Local Permitting Analysis. Use AI to analyze zoning ordinances, land use restrictions, and local permitting codes to identify siting barriers or opportunities earlier in the project development process.

- Federal Environmental Review Accelerations. Use AI tools to extract, organize, and summarize unstructured and disparate datasets to support more efficient and consistent reviews.

- AI Models to Assist Subject Matter Experts in Reviews. Use AI and document analysis tools to support expert reviewers by checking for completeness, inconsistencies, or precedent in technical applications and environmental documents.

Grid Operations and Reliability

- Load and Supply Matching. Use AI to improve short-term load forecasting and optimize generation dispatch, reducing imbalance costs and improving integration of variable resources.

- Predictive and Risk-Informed Maintenance. Use AI to predict asset degradation or failure and inform maintenance schedules based on equipment health, environmental stressors, and historical failure data.

- Operational Safety and Issues Reporting and Analysis. Apply AI to analyze safety incident logs, compliance records, and operator reports to identify patterns of human error, procedural risks, or training needs.

Grid Resilience

- Self-healing Infrastructure for Reliability and Resilience. Use AI to autonomously isolate faults, reconfigure power flows, and restore service in real time through intelligent switching and local control systems.

- Detection and Diagnosis of Anomalous Events. Use AI to identify and localize grid disturbances such as faults, voltage anomalies, or cyber intrusions using high-frequency telemetry and system behavior data.

- AI-enabled Situational Awareness and Actions for Resilience. Leverage AI to synthesize grid, weather, and asset data to support operator awareness and guide event response during extreme weather or grid stress events.

- Resilience with Distributed Energy Resources. Coordinate DERs during grid disruptions using AI for forecasting, dispatch, and microgrid formation, enabling system flexibility and backup power during emergencies.

However, not all applications are created equal. With limited resources, the federal government should prioritize use cases that show high-impact potential and demonstrate feasible levels of deployment readiness. Additional investments should also be allocated to high-impact / low-readiness use cases to help unlock and scale these applications.

Unlocking the potential of these use cases requires a better understanding of which ones hit specific benchmarks. The matrix below provides a framework for thinking through these questions.

Using the use cases identified above, we’ve mapped AI’s applications in grid modernization onto a “readiness-impact” chart based on six unique scoring scales (see appendix for full methodological and scoring breakdown).

Readiness Scale Questions

- Technical Readiness. Is the AI solution mature, validated, and performant?

- Financial Readiness. Is it cost-effective and fundable (via CapEx, OpEx, or rate recovery)?

- Regulatory Readiness. Can it be deployed under existing rules, with institutional buy-in?

Impact Scale Questions

- Value. Does this AI solution reduce costs, outages, emissions, or delays in a measurable way?

- Leverage. Does it enable or unlock broader grid modernization (e.g., DERs, grid enhancing technologies (GETs), and/or virtual power plant (VPP) integration)?

- Fit. Is AI the right or necessary tool to solve this compared to conventional tools (i.e., traditional transmission planning, interconnection study, and/or compliance software)?

Each AI application receives a score of 0-5 in each of the six categories. The three readiness scores and the three impact scores are then averaged separately to determine the application’s overall readiness and impact scores. To score each application, a detailed rubric was designed with a scoring scale for each of the six categories. Scores were then assigned using industry examples and experience, existing literature, and consultation with outside experts.
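As a rough illustration of the averaging step, the sketch below computes the two overall scores from six sub-scores. It assumes a simple unweighted mean within each scale; the class name, field names, and example values are hypothetical and are not taken from the memo's appendix or rubric.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass(frozen=True)
class UseCaseScores:
    """Six 0-5 sub-scores for one AI use case (hypothetical structure)."""
    technical: float
    financial: float
    regulatory: float
    value: float
    leverage: float
    fit: float

    def __post_init__(self) -> None:
        # Reject any sub-score that falls outside the 0-5 scale.
        for name, score in vars(self).items():
            if not 0 <= score <= 5:
                raise ValueError(f"{name} must be between 0 and 5, got {score}")

    @property
    def readiness(self) -> float:
        # Assumption: unweighted mean of the three readiness sub-scores.
        return mean([self.technical, self.financial, self.regulatory])

    @property
    def impact(self) -> float:
        # Assumption: unweighted mean of the three impact sub-scores.
        return mean([self.value, self.leverage, self.fit])


# Illustrative values only; these are not scores from the memo.
example = UseCaseScores(technical=4, financial=3, regulatory=2,
                        value=5, leverage=4, fit=3)
print(example.readiness, example.impact)
```

An unweighted mean treats the three questions within each scale as equally important. A weighted variant would be a different design choice and would need its own justification.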

When plotted on a coordinate plane, each application falls into one of four quadrants, helping us easily identify key insights about each use case.

- High-Impact / High-Readiness use cases → Deploy now

- High-Impact / Low-Readiness → Invest, unlock, and scale

- Low-Impact / High-Readiness → Optional pilots, but deprioritize federal effort

- Low-Impact / Low-Readiness → Monitor private sector action
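The quadrant logic above can be sketched as a small function. The cutoff between "high" and "low" is not stated in this section, so the sketch assumes the midpoint of the 0-5 scale (2.5) and exposes it as a parameter. The function name and the example inputs are likewise hypothetical.

```python
def quadrant(readiness: float, impact: float, threshold: float = 2.5) -> str:
    """Map a (readiness, impact) pair to the recommended federal posture.

    Assumption: a score at or above `threshold` counts as "high". The 2.5
    default is the midpoint of the 0-5 scale, not a value from the memo.
    """
    high_readiness = readiness >= threshold
    high_impact = impact >= threshold
    if high_impact and high_readiness:
        return "Deploy now"
    if high_impact:
        return "Invest, unlock, and scale"
    if high_readiness:
        return "Optional pilots, but deprioritize federal effort"
    return "Monitor private sector action"


# Illustrative inputs only.
for scores in [(4.0, 4.5), (2.0, 4.0), (4.0, 2.0), (1.5, 1.0)]:
    print(scores, "->", quadrant(*scores))
```

Because the result depends entirely on where the cutoff sits, use cases that score near the threshold are worth reviewing by hand rather than relying on the quadrant label alone.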

Once the applications are plotted, we can identify additional insights, such as where they cluster, what barriers are holding back the highest-impact applications, and whether there are recurring challenges (or opportunities) across the four categories of grid modernization efforts.

Plan of Action

Grid Planning

Average Readiness Score: 2.3 | Average Impact Score: 3.8

- AI use cases in grid planning face the highest financial and regulatory hurdles of any category. Reducing these barriers can unlock high-impact potential.

- These tools are high-leverage use cases. Deploying them unlocks deeper grid modernization activities system-wide, such as the integration of grid-enhancing technologies (GETs).

- While many of these AI tools are technically mature, adoption is not yet mainstream.

Recommendation 1. The Federal Energy Regulatory Commission (FERC) should clarify the regulatory pathway for AI use cases in grid planning.

Regional Transmission Organizations (RTOs), utilities, and Public Utility Commissions (PUCs) require confidence that AI tools are approved and supported before they deploy them at scale. They also need financial clarity on viable pathways to rate-basing significant up-front costs. Building on Commissioner Rosner’s Letters Regarding Interconnection Automation, FERC should establish a FERC-DOE-RTO technical working group on “Next-Gen Planning Tools” that informs FERC-compliant AI-enabled planning, modeling, and reporting standards. Current regulations (and traditional planning approaches) leave uncertainty around the explainability, validation, and auditability of AI-driven tools.

Thus, the working group should identify where AI tools can be incorporated into planning processes without undermining existing reliability, transparency, or stakeholder-participation standards. The group should develop voluntary technical guidance on model validation standards, transparency requirements, and procedural integration to provide a clear pathway for compliant adoption across FERC-regulated jurisdictions.

Siting and Permitting

Average Readiness Score: 2.7 | Average Impact Score: 3.8

- Zoning and local permitting tools are promising, but adoption is fragmented across state, local, and regional jurisdictions.

- Federal permitting acceleration tools score high on technical readiness but face institutional distrust and a complicated regulatory environment.

- In general, tools in this category have high value but limited transferability beyond highly specific scenarios (low leverage). Even if unlocked at scale, they have narrower application potential than other tools analyzed in this memo.

Recommendation 2. The Federal Permitting Improvement Steering Council (FPISC) should establish a federal siting and permitting data modernization initiative.

AI tools can increase speed and consistency in siting and permitting processes by automating the review of complex datasets, but without structured data, standardized workflows, and agency buy-in, their adoption will remain fragmented and niche. Furthermore, most grid infrastructure data (including siting and permitting documentation) is confidential and protected, leading to industry skepticism about the ability of AI to maintain important security measures alongside transparent workflows. To address these concerns, FPISC should launch a coordinated initiative that creates structured templates for federal permitting documents, pilots AI integration at select agencies, and develops a public validation database that allows AI developers to test their models (with anonymized data) against real agency workflows. Having launched a $30 million effort in 2024 to improve IT systems across multiple agencies, FPISC is well-positioned to apply those lessons learned and align deeper AI integration across the federal government’s permitting processes. Coordination with the Council on Environmental Quality (CEQ), which was recently called on to develop a Permitting Technology Action Plan, is also encouraged. Additional Congressional appropriations to FPISC can unlock further innovation.

Operations and Reliability

Average Readiness Score: 3.6 | Average Impact Score: 3.6

- Overall, this category has the highest average readiness across technical, financial, and regulatory scales. These use cases are clear “ready-now” wins.

- They also have the highest fit component of impact, representing unique opportunities for AI tools to improve on existing systems and processes in ways that traditional tools cannot.

Recommendation 3. Launch an AI Deployment Challenge at DOE to scale high-readiness tools across the sector.

From the SunShot Initiative (2011) through the Energy Storage Grand Challenge (2020) to the Energy Earthshots (2021), DOE has a long history of catalyzing the deployment of new technology in the power sector. A dedicated grand challenge – funded with new Congressional appropriations at the Grid Deployment Office – could deploy matching grants or performance-based incentives to utilities, co-ops, and municipal providers to accelerate adoption of proven AI tools.

Grid Resilience

Average Readiness Score: 3.4 | Average Impact Score: 4.2

- As a category, resilience applications have the highest overall impact score, including a perfect value score across all four use cases. There is significant potential in deploying AI tools to solve these challenges.

- Alongside operations and reliability use cases, these tools also exhibit the highest technical readiness, demonstrating technical maturity alongside high value potential.

- Anomalous event detection is the highest-scoring use case across all 14 applications, on both readiness and impact scales. It has already been deployed and is ready to scale.

Recommendation 4. DOE, the Federal Emergency Management Agency (FEMA), and FERC should create an AI for Resilience Program that funds and validates AI tools that support cross-jurisdictional grid resilience.

AI for resilience applications often require coordination across traditional system boundaries, from utilities to DERs, microgrids to emergency managers, as well as high levels of institutional trust. Federal coordination can catalyze system integration by funding demo projects, developing integration playbooks, and clarifying regulatory pathways for AI-automated resilience actions.

Congress should direct DOE and FEMA, in consultation with FERC, to establish a new program (or carve out existing grid resilience funds) to: (1) support demonstration projects where AI tools are already being deployed during real-world resilience events; (2) develop standardized playbooks for integrating AI into utility and emergency management operations; and (3) clarify regulatory pathways for actions like DER islanding, fault rerouting, and AI-assisted load restoration.

Conclusion

Managing surging electric load growth while improving the grid’s ability to weather more frequent and extreme events is a once-in-a-generation challenge. Fortunately, new technological innovations combined with a thoughtful approach from the federal government can actualize the potential of AI and unlock a new set of solutions, ready for this era.

Rather than technological limitations, many of the outstanding roadblocks identified here are institutional and operational, highlighting the need for better federal coordination and regulatory clarity. The readiness-impact framework detailed in this memo provides a new way to understand these challenges while laying the groundwork for a timely and topical plan of action.