Breaking Ground on Next-Generation Geothermal Energy

This report is part one of a series on underinvested clean energy technologies, the challenges they face, and how the Department of Energy can use its Other Transaction Authority to implement programs custom tailored to those challenges.

The United States has been gifted with an abundance of clean, firm geothermal energy lying below our feet – tens of thousands of times more than the country has in untapped fossil fuels. Geothermal technology is entering a new era, with innovative approaches on their way to commercialization that will unlock access to more types of geothermal resources. However, the development of commercial-scale geothermal projects is an expensive affair, and the U.S. government has severely underinvested in this technology. The Inflation Reduction Act and the Bipartisan Infrastructure Law concentrated clean energy investments in solar and wind, which are great near-term solutions for decarbonization, but neglected to invest sufficiently in solutions like geothermal energy, which are necessary to reach full decarbonization in the long term. With new funding from Congress or potentially the creative (re)allocation of existing funding, the Department of Energy (DOE) could take a number of different approaches to accelerating progress in next-generation geothermal energy, from leasing agency land for project development to providing milestone payments for the costly drilling phases of development.

Introduction

As the United States power grid transitions towards clean energy, the increasing mix of intermittent renewable energy sources like solar and wind must be balanced by sources of clean firm power that are available around the clock in order to ensure grid reliability and reduce the need to overbuild solar, wind, and battery capacity. Geothermal power is a leading contender for addressing this issue.

Conventional geothermal (also known as hydrothermal) power plants tap into existing hot underground aquifers and circulate the hot water to the surface to generate electricity. Thanks to an abundance of geothermal resources close to the earth’s surface in the western part of the country, the United States currently leads the world in geothermal power generation. Conventional geothermal power plants are typically located near geysers and steam vents, which indicate the presence of hydrothermal resources belowground. However, these hydrothermal sites represent just a small fraction of the total untapped geothermal potential beneath our feet — more than the potential of fossil fuel and nuclear fuel reserves combined.

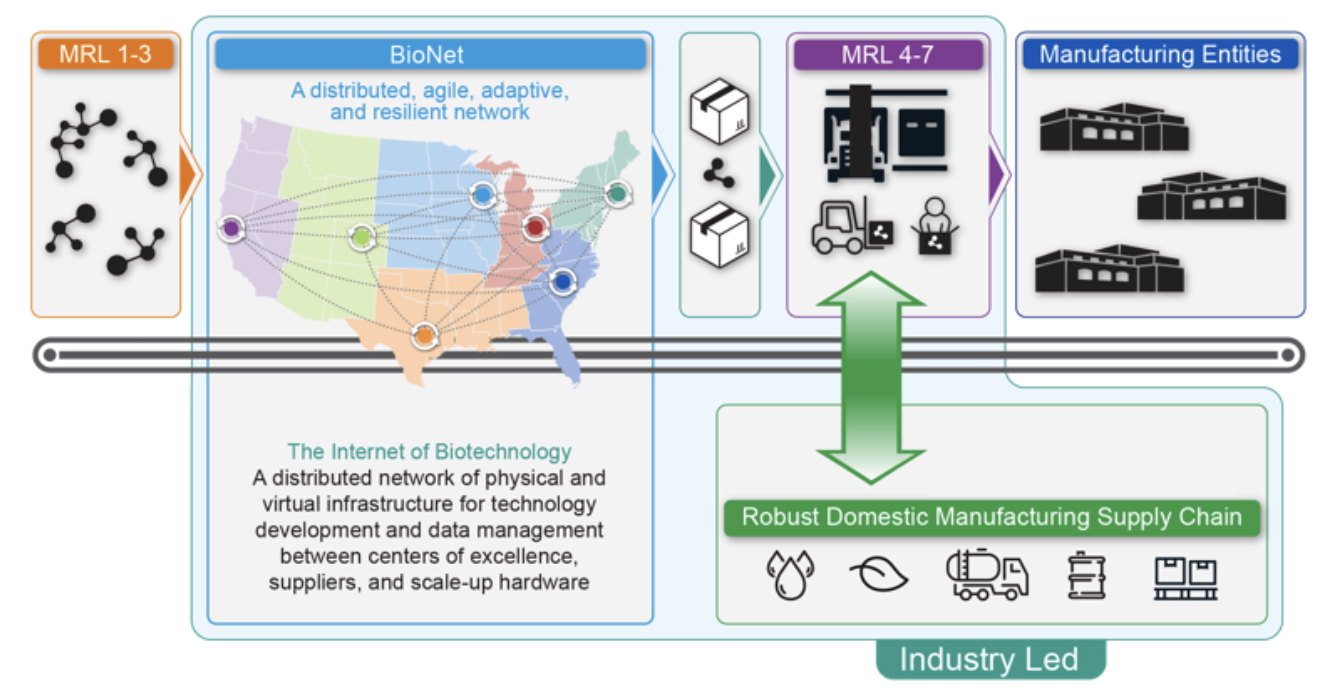

Next-generation geothermal technologies, such as enhanced geothermal systems (EGS), closed-loop or advanced geothermal systems (AGS), and other novel designs, promise to allow access to a wider range of geothermal resources. Some designs can potentially also serve double duty as long-duration energy storage. Rather than tapping into existing hydrothermal reservoirs underground, these technologies drill into hot dry rock, engineer independent reservoirs using either hydraulic stimulation or extensive horizontal drilling, and then introduce new fluids to bring geothermal energy to the surface. These new technologies have benefited from advances in the oil and gas industry, resulting in lower drilling costs and higher success rates. Furthermore, some companies have been developing designs for retrofitting abandoned oil and gas wells to convert them into geothermal power plants. The commonalities between these two sectors present an opportunity not only to leverage the existing workforce, engineering expertise, and supply chain from the oil and gas industry to grow the geothermal industry but also to support a just transition such that current workers employed by the oil and gas industry have an opportunity to help build our clean energy future.

Over the past few years, a number of next-generation geothermal companies have had successful pilot demonstrations, and some are now developing commercial-scale projects. As a result of these successes and the growing demand for clean firm power, power purchase agreements (PPAs) for an unprecedented 1 GW of geothermal power have been signed with utilities, community choice aggregators (CCAs), and commercial customers in the United States in 2022 and 2023 combined. In 2023, PPAs for next-generation geothermal projects surpassed those for conventional geothermal projects in terms of capacity. While this is promising, barriers remain to the development of commercial-scale geothermal projects. To meet its goal of net-zero emissions by 2050, the United States will need to invest in overcoming these barriers for next-generation geothermal energy now, lest the technology fail to scale to the level necessary for a fully decarbonized grid.

Meanwhile, conventional hydrothermal still has a role to play in the clean energy transition. The United States needs all the clean firm power that it can get, whether that comes from conventional or next-generation geothermal, in order to retire baseload coal and natural gas plants. The construction of conventional hydrothermal power plants is less expensive and cheaper to finance, since it’s a tried and tested technology, and there are still plenty of untapped hydrothermal resources in the western part of the country.

Challenges Facing Geothermal Projects

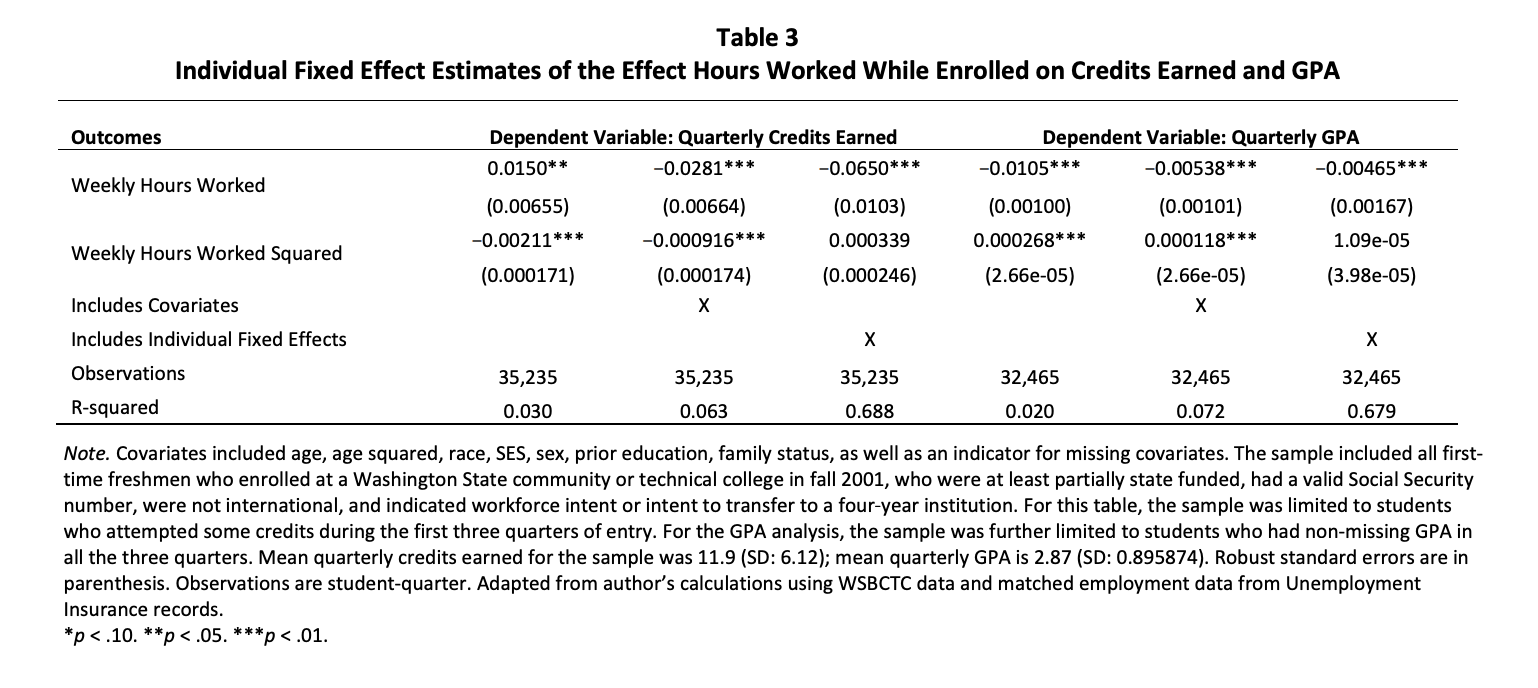

Funding is the biggest barrier to commercial development of next-generation geothermal projects. There are two types of private financing: equity financing and debt financing. Equity financing is more risk tolerant and is typically the source of funding for start-ups as they move from the R&D to demonstration phases of their technology. But because equity financing has a dilutive effect on the company, when it comes to the construction of commercial-scale projects, debt financing is preferred. However, first-of-a-kind commercial projects are almost always precluded from accessing debt financing. It is commonly understood within industry that private lenders will not take on technology risk, meaning that technologies must be at a Technology Readiness Level (TRL) of 9, where they have been proven to operate at commercial scale, and government lenders like the DOE Loan Programs Office (LPO) generally will not take on any risk that private lenders won’t. Manifestations of technology risk in next-generation geothermal include the possibility that a plant will underproduce, which would hurt its profitability, or that its capacity will decline faster than expected, shortening its operating lifetime. Moving next-generation technologies from the current TRL-7 level to TRL-9 will be key to establishing the reliability of these emerging technologies and unlocking debt financing for future commercial-scale projects.

Underproduction will likely remain a risk, though to a lesser extent, for next-generation projects even after technologies reach TRL-9. This is because uncertainty in the exploration and subsurface characterization process makes it possible for developers to overestimate the temperature gradient and thus the production capacity of a project. Hydrothermal projects also share this risk: the factors determining the production capacity for hydrothermal projects include not only the temperature gradient but also the flow rate and enthalpy of the natural reservoir. In the worst-case scenario, drilling can result in a dry hole that produces no hot fluids at all. This becomes a financial issue if the project is unable to generate as much revenue as expected due to underproduction or additional wells must be drilled to compensate, driving up the total project cost. Thus, underproduction is a risk shared by both next-generation and conventional geothermal projects. Research into improvements to the accuracy and cost of geothermal exploration and subsurface characterization can help mitigate this risk but may not eliminate it entirely, since there is a risk-cost trade-off in how much time is spent on exploration and subsurface characterization.

Another challenge for both next-generation and conventional geothermal projects is that they are more expensive to develop than solar or wind projects. Drilling requires significant upfront capital expenditures, making up about half of the total capital costs of developing a geothermal project, if not more. For example, in EGS projects, the first few wells can cost around $10 million each, while conventional hydrothermal wells, which are shallower, can cost around $3–7 million each. While conventional hydrothermal plants consist of only two to six wells on average, designs for commercial EGS projects can require several times that number of wells. Luckily, EGS projects benefit from the fact that wells can be drilled identically, so projects expect to move down the learning curve as they drill more wells, resulting in faster and cheaper drilling. Initial data from commercial-scale projects currently being developed suggest that the learning curves may be even steeper than expected. Nevertheless, this will need to be proven at scale across different locations. Some companies have managed to forgo expensive drilling costs by focusing on developing technologies that can be installed within idle hydrothermal wells or abandoned oil and gas wells to convert them into productive geothermal wells.
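To make the learning-curve argument concrete, the sketch below applies Wright's law, a common way of modeling how unit costs fall as cumulative output grows. It is an illustration only: the $10 million first-well cost is the figure quoted above, while the 15% learning rate and the 12-well field size are assumptions chosen for the example, not data from any project.

```python
import math


def nth_well_cost(n: int, first_well_cost: float, learning_rate: float) -> float:
    """Cost of the n-th well under Wright's law.

    Each doubling of the cumulative number of wells drilled lowers the
    per-well cost by `learning_rate` (0.15 means 15% cheaper per doubling).
    """
    if n < 1:
        raise ValueError("well index starts at 1")
    if not 0.0 <= learning_rate < 1.0:
        raise ValueError("learning_rate must be in [0, 1)")
    exponent = math.log2(1.0 - learning_rate)  # zero or negative
    return first_well_cost * n ** exponent


# Roughly $10 million per early EGS well is the figure quoted in the text.
FIRST_WELL_COST = 10e6
# ASSUMPTIONS: illustrative values only, not measurements from a real project.
ASSUMED_LEARNING_RATE = 0.15
ASSUMED_WELL_COUNT = 12

costs = [
    nth_well_cost(n, FIRST_WELL_COST, ASSUMED_LEARNING_RATE)
    for n in range(1, ASSUMED_WELL_COUNT + 1)
]
flat_total = FIRST_WELL_COST * ASSUMED_WELL_COUNT  # no learning at all

print(f"Cost of well {ASSUMED_WELL_COUNT}: ${costs[-1] / 1e6:.2f}M")
print(f"Total with learning: ${sum(costs) / 1e6:.1f}M "
      f"vs ${flat_total / 1e6:.1f}M without")
```

Changing the assumed learning rate shows how sensitive total drilling cost is to the steepness of the curve, which is why proving the curve at scale and across locations matters for financing.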

Beyond funding, geothermal projects need to obtain land where there are suitable geothermal resources and permits for each stage of project development. The best geothermal resources in the United States are concentrated in the West, where the federal government owns most of the land. The Bureau of Land Management (BLM) manages a lot of that land, in addition to all subsurface resources on federal land. However, there is inconsistency in how the BLM leases its land, depending on the state. While Nevada BLM has been very consistent about holding regular lease sales each year, California BLM has not held a lease sale since 2016. Adding to the complexity is the fact that although BLM manages all subsurface resources on federal land, surface land may sometimes be managed by a different agency, in which case both agencies will need to be involved in the leasing and permitting process.

Last, next-generation geothermal companies face a green premium on electricity produced using their technology, though the green premium does not appear to be as significant a challenge for next-generation geothermal as it is for other green technologies. In states with high renewables penetration, utilities and their regulators are beginning to recognize the extra value that clean firm power provides in terms of grid reliability. For example, the California Public Utilities Commission has issued an order for utilities to procure 1 GW of clean, firm power by 2026, motivating a wave of new demand from utilities and community choice aggregators. As a result of this demand and California’s high electricity prices in general, geothermal projects have successfully signed a flurry of PPAs over the past year. These have included projects located in Nevada and Utah that can transmit electricity to California customers. In most other western states, however, electricity prices are much lower, so utility companies can be reluctant to sign PPAs for next-generation geothermal projects if they aren’t required to, due to the high cost and technology risk. As a result, next-generation geothermal projects in those states have turned to commercial customers, like those operating data centers, who are willing to pay more to meet their sustainability goals.

Federal Support

The federal government is beginning to recognize the important role of next-generation geothermal power for the clean energy transition. For the first time in 2023, geothermal energy became eligible for the renewable energy investment and production tax credits, thanks to technology-neutral language introduced in the Inflation Reduction Act (IRA). The DOE launched the Enhanced Geothermal Shot in 2022, led by the Geothermal Technologies Office (GTO), to reduce the cost of EGS by 90% to $45/MWh by 2035 and make geothermal widely available. In 2020, the Frontier Observatory for Research in Geothermal Energy (FORGE), a dedicated underground field laboratory for EGS research, drilling, and technology testing established by GTO in 2014, drilled its first well using new approaches and tools the lab had developed. This year, GTO announced funding for seven EGS pilot demonstrations from the Bipartisan Infrastructure Law (BIL), for which GTO is currently reviewing the first round of applications. GTO also awarded the Geothermal Energy from Oil and gas Demonstrated Engineering (GEODE) grant to a consortium formed by Project Innerspace, the Society of Petroleum Engineers International, and Geothermal Rising, with over 100 partner entities, to transfer best practices from the oil and gas industry to geothermal, support demonstrations and deployments, identify barriers to growth in the industry, and encourage workforce adoption.

While these initiatives are a good start, significantly more funding from Congress is necessary to support the development of pilot demonstrations and commercial-scale projects and enable wider adoption of geothermal energy. The BIL notably expanded the DOE’s mission area in supporting the deployment of clean energy technologies, including establishing the Office of Clean Energy Demonstrations (OCED) and funding demonstration programs from the Energy Division of BIL and the Energy Act of 2020. However, the $84 million in funding authorized for geothermal pilot demonstrations was only a fraction of the funding that other programs received from BIL and not commensurate to the actual cost of next-generation geothermal projects. Congress should be investing an order of magnitude more into next-generation geothermal projects, in order to maintain U.S. leadership in geothermal energy and reap the many benefits to the grid, the climate, and the economy.

Another key issue is that DOE has, both currently and in the past, limited all of its funding for next-generation geothermal to EGS technologies. As a result, companies pursuing closed-loop/AGS and other next-generation technologies cannot qualify, leading some projects to be moved abroad. Given GTO’s historically limited budget, it’s possible that this was the result of a strategic decision to focus its funding on one technology rather than diluting it across multiple technologies. However, given that none of these technologies has been successfully commercialized at a wide scale yet, DOE may be missing the opportunity to invest in the full range of viable approaches. DOE appears to be aware of this, as the agency currently has a working group on AGS. New funding from Congress would allow DOE to diversify its investments to support the demonstration and commercial application of other next-generation geothermal technologies.

Alternatively, there are a number of OCED programs with funding from BIL that have not yet been fully spent (Table 1). Congress could reallocate some of that funding towards a new program supporting next-generation geothermal projects within OCED. Though not ideal, this may be a more palatable near-term solution for the current Congress than appropriating new funding.

A third option is that DOE could use some of the funding for the Energy Improvements in Rural and Remote Areas program, of which $635 million remains unallocated, to support geothermal projects. Though the program’s authorization does not explicitly mention geothermal energy, geothermal is a good candidate given the abundance of geothermal production potential in rural and remote areas in the West. Moreover, as a clean firm power source, geothermal has a comparative advantage over other renewable energy sources in improving energy reliability.

Other Transaction Authority

BIL and IRA gave DOE an expanded mandate to support innovative technologies from early stage research through commercialization. To do so, DOE will need to be just as innovative in its use of its available authorities and resources. Tackling the challenge of scaling technologies from pilot to commercialization will require DOE to look beyond traditional grant, loan, and procurement mechanisms. Previously, we identified the DOE’s Other Transaction Authority (OTA) as an underleveraged tool for accelerating clean energy technologies.

OTA is defined in legislation as the authority to enter into any transaction that is not a government grant or contract. This negative definition provides DOE with significant freedom to design and implement flexible financial agreements that can be tailored to the unique challenges that different technologies face. OT agreements allow DOE to be more creative, and potentially more cost-effective, in how it supports the commercialization of new technologies, such as facilitating the development of new markets, mitigating risks and market failures, and providing innovative new types of demand-side “pull” funding and supply-side “push” funding. The DOE’s new Guide to Other Transactions provides official guidance on how DOE personnel can use the flexibilities provided by OTA.

With additional funding from Congress, the DOE could use OT agreements to address the unique barriers that geothermal projects face in ways that may not be possible through other mechanisms. Below are four proposals for how the DOE can do so. We chose to focus on supporting next-generation geothermal projects, since the young industry currently requires more governmental support to grow, but we included ideas that would benefit conventional hydrothermal projects as well.

Program Proposals

Geothermal Development on Agency Land

This year, the Defense Innovation Unit issued its first funding opportunity specifically for geothermal energy. The four winning projects will aim to develop innovative geothermal power projects on Department of Defense (DoD) bases for both direct consumption by the base and sale to the local grid. OT agreements were used for this program to develop mutually beneficial custom terms. For project developers, DoD provided funding for surveying, design, and proposal development in addition to land for the actual project development. The agreement terms also gave companies permission to use the technology and information gained from the project for other commercial use. For DoD, these projects are an opportunity to improve the energy resilience and independence of its bases while also reducing emissions. By implementing the prototype agreement using OTA, DoD will have the option to enter into a follow-on OT agreement with project developers without further competition, expediting future processes.

DOE could implement a similar program for its 2.4 million acres of land. In particular, the DOE’s land in Idaho and other western states has favorable geothermal resources, which the DOE has considered leasing. By providing some funding for surveying and proposal development, as the DoD did, the DOE can increase the odds of successful project development, compared to simply leasing the land without funding support. The DOE could also offer technical support to projects from its national labs.

With such a program, a lot of the value that the DOE would be providing is the land itself, which the DOE currently has more of than actual funding for geothermal energy. The funding needed for surveying and proposal development is much less than would be needed to support the actual construction of demonstration projects, so GTO could feasibly request funding for such a program through the annual appropriations process. Depending on the program outcomes and the resulting proposals, the DOE could then go back to Congress to request follow-on funding to support actual project construction.

Drilling Cost-Share Program

To help defray the high cost of drilling, the DOE could implement a milestone-based cost-share program. There is precedent for government cost-share programs for geothermal: in 1973, before the DOE was even established, Congress passed the Geothermal Loan Guarantee Program to provide “investment security to the public and private sectors to exploit geothermal resources” in the early days of the industry. Later, the DOE funded the Cascades I and II Cost Shared Programs. Then, from 2000 to 2007, the DOE ran the Geothermal Resource Exploration and Definitions (GRED) I, II, and III Cost-Share Programs. This year, the DOE launched its EGS Pilot Demonstrations program.

A milestone payment structure could be favorable for supporting expensive, next-generation geothermal projects because the government takes on less risk compared to providing all of the funding upfront. Initial funding could be provided for drilling the first few wells. Successful and on-time completion of drilling could then unlock additional funding to drill more wells, and so on. In the past, both the DoD and the National Aeronautics and Space Administration (NASA) have structured their OT agreements using milestone payments, most famously between NASA and SpaceX for the development of the Falcon 9 space launch vehicle. The NASA and SpaceX agreement included not just technical but also financial milestones for the investment of additional private capital into the project. The DOE could do the same and include both technical and financial milestones in a geothermal cost-share program.
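The mechanics of such a structure can be sketched in a few lines of code. This is a hypothetical illustration, not the terms of any actual DOE, DoD, or NASA agreement: the tranche names, dollar amounts, and the rule that tranches unlock strictly in order are all assumptions made for the example.

```python
from dataclasses import dataclass
from typing import Iterable, List, Optional


@dataclass(frozen=True)
class Tranche:
    unlocked_by: Optional[str]  # milestone that releases it; None = paid upfront
    kind: str                   # "initial", "technical", or "financial"
    amount: float               # government cost-share released, in USD


def released_funding(schedule: List[Tranche], met: Iterable[str]) -> float:
    """Total funding released so far.

    Tranches are released in order; the first unmet milestone blocks
    every later tranche, which caps the government's exposure.
    """
    met_set = set(met)
    total = 0.0
    for tranche in schedule:
        if tranche.unlocked_by is not None and tranche.unlocked_by not in met_set:
            break
        total += tranche.amount
    return total


# HYPOTHETICAL schedule: names and amounts are placeholders.
SCHEDULE = [
    Tranche(None, "initial", 15e6),                          # first few wells
    Tranche("wells_1_to_3_on_time", "technical", 10e6),
    Tranche("private_capital_raised", "financial", 10e6),
    Tranche("wells_4_to_8_on_time", "technical", 5e6),
]

for done in ([], ["wells_1_to_3_on_time"],
             ["wells_1_to_3_on_time", "private_capital_raised"]):
    print(f"{len(done)} milestone(s) met: "
          f"${released_funding(SCHEDULE, done) / 1e6:.0f}M released")
```

Mixing technical and financial milestones in one ordered schedule means public money keeps flowing only while the project is both hitting its drilling targets and attracting private capital.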

Risk Insurance Program

Longer term, the DOE could implement a risk insurance program for conventional hydrothermal and next-generation geothermal projects. Insuring against underproduction could make it easier and cheaper for projects to be financed, since the potential downside for investors would be capped. The DOE could initially offer insurance just for conventional hydrothermal, since there is already extensive data on past commercial projects that can inform how the insurance is designed. In order to design insurance for next-generation technologies, more commercial-scale projects will first need to be built to collect the data necessary to assess the underproduction risk of different approaches.

France has administered a successful Geothermal Public Risk Insurance Fund for conventional hydrothermal projects since 1982. The insurance originally consisted of two parts: a Short-Term Fund to cover the risk of underproduction and a Long-Term Fund to cover uncertain long-term behavior over the operating lifetime of the geothermal plant. The Short-Term Fund asked project owners to pay a premium of 1.5% of the maximum guaranteed amount. In return, the Short-Term Fund provided a 20% subsidy for the cost of drilling the first well and, in the case of reduced output or a dry hole, a compensation between 20% and 90% of the maximum guaranteed amount (inclusive of the subsidy that had already been paid). The exact compensation was determined based on a formula for the amount necessary to restore the project’s profitability with its reduced output. The Short-Term Fund relied on a high success rate, especially in the Paris Basin where there are known to be good hydrothermal resources, to fund the costs of failures. Geothermal developers that chose to get coverage from the Short-Term Fund were required to also get coverage from the Long-Term Fund, which was designed to hedge against the possibility of unexpected geological or geothermal changes within the wells, such as if their output declined faster than expected or severe corrosion or scaling occurred, over the geothermal plant’s operating lifetime. The Long-Term Fund ended in 2015, but a new iteration of the Short-Term Fund was approved in 2023.
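The Short-Term Fund's percentages are easier to follow with a worked example. The sketch below uses only the rates quoted above (1.5% premium, 20% first-well subsidy, compensation of 20% to 90% of the maximum guaranteed amount inclusive of the subsidy). The fund's actual compensation formula is not given here, so the compensation share is passed in as an input, and the $5 million figures are illustrative assumptions.

```python
from typing import Dict, Optional

PREMIUM_RATE = 0.015           # share of the maximum guaranteed amount
SUBSIDY_RATE = 0.20            # share of the first well's drilling cost
MIN_SHARE, MAX_SHARE = 0.20, 0.90  # compensation bounds, inclusive of subsidy


def short_term_fund_cashflows(
    first_well_cost: float,
    max_guaranteed: float,
    compensation_share: Optional[float] = None,
) -> Dict[str, float]:
    """Cash flows between a project and the fund.

    `compensation_share` is None for a successful well. For reduced output
    or a dry hole it is the share of `max_guaranteed` awarded; how the fund
    derived that share is NOT modeled here.
    """
    premium = PREMIUM_RATE * max_guaranteed
    subsidy = SUBSIDY_RATE * first_well_cost
    additional = 0.0
    if compensation_share is not None:
        if not MIN_SHARE <= compensation_share <= MAX_SHARE:
            raise ValueError("compensation share must be between 20% and 90%")
        # Compensation is inclusive of the subsidy already paid out.
        additional = max(0.0, compensation_share * max_guaranteed - subsidy)
    return {
        "premium": premium,
        "subsidy": subsidy,
        "additional_payout": additional,
        "net_to_project": subsidy + additional - premium,
    }


# ASSUMPTION: a $5M first well with a $5M maximum guaranteed amount.
success = short_term_fund_cashflows(5e6, 5e6)
dry_hole = short_term_fund_cashflows(5e6, 5e6, compensation_share=0.90)
print(f"Success:  net to project ${success['net_to_project']:,.0f}")
print(f"Dry hole: net to project ${dry_hole['net_to_project']:,.0f}")
```

Because the premium is small relative to the payout on a failure, a fund built this way stays solvent only if most insured wells succeed, which is the dependence on a high success rate described above.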

The Netherlands has successfully run a program similar to the Short-Term Fund since the 2000s. Private-sector attempts at setting up geothermal risk insurance packages in Europe and around the world have mostly failed, though. The premiums were often too high, costing up to 25–30% of the cost of drilling, and the packages were established in developing markets where not enough projects were being developed to mutualize the risk.

To implement such a program at the DOE, projects seeking coverage would first submit an application consisting of the technical plan, timeline, expected costs, and expected output. The DOE would then conduct rigorous due diligence to ensure that the project’s proposal is reasonable. Once accepted, projects would pay a small premium upfront; the DOE should keep in mind the failed attempts at private-sector insurance packages and ensure that the premium is affordable. In the case that either the installed capacity is much lower than expected or the output capacity declines significantly over the course of the first year of operations, the Fund would compensate the project based on the level of underproduction and the amount necessary to restore the project’s profitability with a reduced output. The French Short-Term Fund calculated compensation based on characteristics of the hydrothermal wells; the DOE would need to develop its own formulas reflective of the costs and characteristics of different next-generation geothermal technologies once commercial data actually exists.

Before setting up a geothermal insurance fund, the DOE should investigate whether there are enough geothermal projects being developed across the country to ensure the mutualization of risk and whether there is enough commercial data to properly evaluate the risk. Another concern for next-generation geothermal is that a high failure rate could cause the fund to run out. To mitigate this, the DOE will need to analyze future commercial data for different next-generation technologies to assess whether each technology is mature enough for a sustainable insurance program. Last, poor state capacity could impede the feasibility of implementing such a program. The DOE will need personnel on staff who are sufficiently knowledgeable about the range of emerging technologies in order to properly evaluate technical plans, understand their risks, and design an appropriate insurance package.

Production Subsidy

While the green premium for next-generation geothermal has not been an issue in California, it may be slowing down project development in other states with lower electricity prices. The Inflation Reduction Act introduced a new clean energy Production Tax Credit that included geothermal energy for the first time. However, due to the higher development costs of next-generation geothermal projects compared to other renewable energy projects, that subsidy is insufficient to fully bridge the green premium. DOE could use OTA to introduce a production subsidy for next-generation geothermal energy with varied rates depending on the state that the electricity is sold to and its average baseload electricity price (e.g., the production subsidy likely would not apply to California). This would help address variations in the green premium across different states and expand the number of states in which it is financially viable to develop next-generation geothermal projects.
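One way to picture a state-varying rate is as a capped gap-filling payment. The sketch below is a hypothetical design, not a DOE proposal or an existing program: the rule itself (subsidy equals project cost minus tax credit minus the state's baseload price, floored at zero and capped) and every number in it are assumptions for illustration.

```python
def production_subsidy(
    baseload_price: float,
    project_cost: float,
    tax_credit: float,
    cap: float,
) -> float:
    """Subsidy in $/MWh that closes the remaining green premium.

    All arguments are in $/MWh. The subsidy is zero where the state's
    baseload price already covers the project's cost net of tax credits.
    """
    remaining_gap = project_cost - tax_credit - baseload_price
    return min(cap, max(0.0, remaining_gap))


# PLACEHOLDER inputs in $/MWh; none of these are real market figures.
ASSUMED_PROJECT_COST = 80.0
ASSUMED_TAX_CREDIT = 10.0
ASSUMED_CAP = 20.0
ASSUMED_STATE_PRICES = {
    "high-price state": 90.0,
    "mid-price state": 55.0,
    "low-price state": 40.0,
}

for state, price in ASSUMED_STATE_PRICES.items():
    rate = production_subsidy(
        price, ASSUMED_PROJECT_COST, ASSUMED_TAX_CREDIT, ASSUMED_CAP
    )
    print(f"{state}: ${rate:.0f}/MWh")
```

Under this rule a high-price market such as California would receive no subsidy, consistent with the suggestion above, while the cap limits federal exposure in the lowest-price states.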

Conclusion

The United States is well-positioned to lead the next-generation geothermal industry, with its abundance of geothermal resources and opportunities to leverage the knowledge and workforce of the domestic oil and gas industry. The responsibility is on Congress to ensure that DOE has the necessary funding to support the full range of innovative technologies being pursued by this young industry. With more funding, DOE can take advantage of the flexibility offered by OTA to create agreements tailored to the unique challenges that the geothermal industry faces as it begins to scale. Successful commercialization would pave the way to unlocking access to 24/7 clean energy almost anywhere in the country and help future-proof the transition to a fully decarbonized power grid.

Bio x AI: Policy Recommendations for a New Frontier

Artificial intelligence (AI) is likely to yield tremendous advances in our basic understanding of biological systems, as well as significant benefits for health, agriculture, and the broader bioeconomy. However, AI tools, if misused or developed irresponsibly, can also pose risks to biosecurity. The landscape of biosecurity risks related to AI is complex and rapidly changing, and understanding the range of issues requires diverse perspectives and expertise. To better understand and address these challenges, FAS initiated the Bio x AI Policy Development Sprint to solicit creative recommendations from subject matter experts in the life sciences, biosecurity, and governance of emerging technologies. Through a competitive selection process, FAS identified six promising ideas and, over the course of seven weeks, worked closely with the authors to develop them into the recommendations included here. These recommendations cover a diverse range of topics to match the diversity of challenges that AI poses in the life sciences. We believe that these will help inform policy development on these topics, including the work of the National Security Commission on Emerging Biotechnologies.

AI tool developers and others have put significant effort into establishing frameworks to evaluate and reduce risks, including biological risks, that might arise from “foundation” models (i.e., large models designed to be used for many different purposes). These include voluntary commitments from major industry stakeholders and several efforts to develop methods for evaluating these models. The Biden Administration’s recent Executive Order on the Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence (AI EO) furthers this work and establishes a framework for evaluating and reducing risks related to AI.

However, the U.S. government will need creative solutions to establish oversight for biodesign tools (i.e., more specialized AI models that are trained on biological data and provide insight into biological systems). Although there are differing perspectives among experts, including those who participated in this Policy Sprint, about the magnitude of risks that these tools pose, they undoubtedly are an important part of the landscape of biosecurity risks that may arise from AI. Three of the submissions to this Policy Sprint address the need for oversight of these tools. Oliver Crook, a postdoctoral researcher at the University of Oxford and a machine learning expert, calls on the U.S. government to ensure responsible development of biodesign tools by instituting a framework for checklist-based, institutional oversight for these tools. Richard Moulange, AI-Biosecurity Fellow at the Centre for Long-Term Resilience, and Sophie Rose, Senior Biosecurity Policy Advisor at the Centre for Long-Term Resilience, expand on the Executive Order on AI with recommendations for establishing standards for evaluating the risks of these tools. In his submission, Samuel Curtis, an AI Governance Associate at The Future Society, takes a more open-science approach, with a recommendation to expand infrastructure for cloud-based computational resources internationally to promote critical advances in biodesign tools while establishing norms for responsible development.

Two of the submissions to this Policy Sprint work to improve biosecurity at the interface where digital designs might become biological reality. Shrestha Rath, a scientist and biosecurity researcher, focuses on biosecurity screening of synthetic DNA, which the Executive Order on AI highlights as a key safeguard, and offers recommendations for how to improve screening methods to better prepare for designs produced using AI. Tessa Alexanian, a biosecurity and bioweapons expert, calls for the U.S. government to issue guidance on biosecurity practices for automated laboratories, sometimes called “cloud labs,” that can generate organisms and other biological agents.

This Policy Sprint highlights the diversity of perspectives and expertise that will be needed to fully explore the intersections of AI with the life sciences, and the wide range of approaches that will be required to address their biosecurity risks. Each of these recommendations represents an opportunity for the U.S. government to reduce risks related to AI, solidify the U.S. as a global leader in AI governance, and ensure a safer and more secure future.

Recommendations

- Develop a Screening Framework Guidance for AI-Enabled Automated Labs by Tessa Alexanian

- An Evidence-Based Approach to Identifying and Mitigating Biological Risks From AI-Enabled Biological Tools by Richard Moulange & Sophie Rose

- A Path to Self-governance of AI-Enabled Biology by Oliver Crook

- A Global Compute Cloud to Advance Safe Science and Innovation by Samuel Curtis

- Establish Collaboration Between Developers of Gene Synthesis Screening Tools and AI Tools Trained on Biological Data by Shrestha Rath

- Responsible and Secure AI in Production Agriculture by Jennifer Clarke

Develop a Screening Framework Guidance for AI-Enabled Automated Labs

Tessa Alexanian

Protecting against the risk that AI is used to engineer dangerous biological materials is a key priority in the Executive Order on Safe, Secure, and Trustworthy Artificial Intelligence (AI EO). AI-engineered biological materials only become dangerous after digital designs are converted into physical biological agents, and biosecurity organizations have recommended safeguarding this digital-to-physical transition. In Section 4.4(b), the AI EO targets this transition by calling for standards and incentives that ensure appropriate screening of synthetic nucleic acids. This should be complemented by screening at another digital-to-physical interface: AI-enabled automated labs, such as cloud labs and self-driving labs.1

Laboratory biorisk management does not need to be reinvented for AI-enabled labs; existing U.S. biosafety practices and Dual Use Research of Concern (DURC) oversight can be adapted and applied, as can emerging best practices for AI safety. However, the U.S. government should develop guidance that addresses two unique aspects of AI-enabled automated labs:

- Remote access to laboratory equipment may allow equipment to be misused by actors who would find it difficult to purchase or program it themselves.

- Unsupervised engineering of biological materials could produce dangerous agents without appropriate safeguards (e.g., if a viral vector regains transmissibility during autonomous experiments).

In short, guidance should ensure that providers of labs are aware of who is using the lab (customer screening), and what it is being used for (experiment screening).

It’s unclear precisely if and when automated labs will become broadly accessible to biologists, though a 2021 WHO horizon scan described them as posing dual-use concerns within five years. Because these concerns are dual-use, policymakers must also weigh the benefits that remotely accessible, high-throughput, AI-driven labs offer for scientific discovery and biomedical innovation. The Australia Group discussed potential policy responses to cloud labs in 2019, including customer screening, experiment screening, and cybersecurity, though no guidance has been released. This is the right moment to develop screening guidance because automated labs are not yet widely used but are attracting increasing investment and attention.

Recommendations

The evolution of U.S. policy on nucleic acid synthesis screening shows how the government can proactively identify best practices, issue voluntary guidance, allow stakeholders to test the guidance, and eventually require that federally-funded researchers procure from providers that follow a framework derived from the guidance.

Recommendation 1. Convene stakeholders to identify screening best practices

Major cloud lab companies already implement some screening and monitoring, and developers of self-driving labs recognize risks associated with them, but security practices are not standardized. The government should bring together industry and academic stakeholders to assess which capabilities of AI-enabled automated labs pose the most risk and share best practices for appropriate management of these risks.

As a starting point, aspects of the Administration for Strategic Preparedness and Response’s (ASPR) Screening Framework Guidance for synthetic nucleic acids can be adapted for AI-enabled automated labs. Labs that offer remote access could follow a similar process for customer screening, including verifying identity for all customers and verifying legitimacy for work that poses elevated dual-use concerns. If an AI system operating an autonomous or self-driving lab places a synthesis order for a sequence of concern, this could trigger a layer of human-in-the-loop approval.
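The screening flow described above can be sketched in code. The following is a minimal illustration under stated assumptions, not part of the ASPR guidance: the `Request` fields, the `Decision` values, and the `screen_request` function are all hypothetical names, and a real system would depend on vetted identity verification and sequence-of-concern databases rather than simple boolean flags.

```python
from dataclasses import dataclass
from enum import Enum


class Decision(Enum):
    APPROVE = "approve"
    HUMAN_REVIEW = "human_review"
    REJECT = "reject"


@dataclass
class Request:
    """Hypothetical record of one request submitted to an automated lab."""
    identity_verified: bool    # customer screening: identity check passed
    legitimacy_verified: bool  # customer screening: legitimate-use check passed
    elevated_dual_use: bool    # experiment screening: elevated dual-use concern
    sequence_of_concern: bool  # experiment screening: order matches a sequence of concern


def screen_request(req: Request) -> Decision:
    """Apply customer screening first, then experiment screening."""
    # Identity is verified for all customers.
    if not req.identity_verified:
        return Decision.REJECT
    # Legitimacy is verified only for work that poses elevated dual-use concerns.
    if req.elevated_dual_use and not req.legitimacy_verified:
        return Decision.REJECT
    # Assumption: any order for a sequence of concern, including one placed by
    # an AI system operating the lab, is escalated to a human approver.
    if req.sequence_of_concern:
        return Decision.HUMAN_REVIEW
    return Decision.APPROVE
```

In this sketch, escalation to a human is the default for sequences of concern rather than outright rejection, which mirrors the human-in-the-loop approval layer suggested in the text.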

Best practices will require cross-domain collaboration between experts in machine learning, laboratory automation, autonomous science, biosafety, and biosecurity. Consortia such as the International Gene Synthesis Consortium and Global Biofoundries Alliance already have U.S. cloud labs among their members and may be a useful starting point for stakeholder identification.

Recommendation 2. Develop guidance based on these best practices

The Director of the Office of Science and Technology Policy (OSTP) should lead an interagency policy development process to create screening guidance for AI-enabled automated labs. The guidance will build upon stakeholder consultations conducted under Recommendation 1, as well as the recent ASPR-led update to the Screening Framework Guidance, ongoing OSTP-led consultations on DURC oversight, and OSTP-led development of a nucleic acid synthesis screening framework under Section 4.4(b) of the AI EO.

The guidance should describe processes for customer screening and experiment screening. It should address biosafety and biosecurity risks associated with unsupervised engineering of biological materials, including recommended practices for:

- Dual-use review for automated protocols. Automated protocols typically undergo human review because operators of automated labs don’t want to run experiments that fail. Guidance should outline when protocols should undergo additional review for dual-use; the categories of experiments in the DURC policy provide a starting point.

- Identifying biological agents in automated labs. When agents are received from customers, their DNA should be sequenced to ensure they have been labeled correctly. Agents engineered through unsupervised experiments should also be screened after some number of closed-loop experimental cycles.

Recommendation 3. Invest in predictive biology for risk mitigation

The Department of Homeland Security (DHS) and Department of Defense (DOD), building off the evaluation they will conduct under Section 4.4(a)(i) of the AI EO, should fund programs to develop predictive models to improve biorisk management in AI-enabled automated labs.

It is presently difficult to predict the behavior of biological systems, and there is little focus specifically on predictive biology for risk mitigation. AI could perform real-time risk evaluations and anomaly detection in self-driving labs; for example, autonomous science researchers have highlighted the need to develop models that can recognize novel compounds with potentially harmful properties. The government can actively contribute to innovation in this area; the IARPA Fun GCAT program, which developed methods to assess whether DNA sequences pose a threat, is an example of relevant government-funded AI capability development.

An Evidence-Based Approach to Identifying and Mitigating Biological Risks From AI-Enabled Biological Tools

Richard Moulange & Sophie Rose

Both AI-enabled biological tools and large language models (LLMs) have advanced rapidly in a short time. While these tools have immense potential to drive innovation, they could also threaten the United States’ national security.

AI-enabled biological tools refer to AI tools trained on biological data using machine learning techniques, such as deep neural networks. They can already design novel proteins, viral vectors and other biological agents, and may in the future be able to fully automate parts of the biomedical research and development process.

Sophisticated state and non-state actors could potentially use AI-enabled tools to more easily develop biological weapons (BW) or design them to evade existing countermeasures. As the accessibility and ease of use of these tools improve, a broader pool of actors could gain these capabilities.

This threat was recognized by the recent Executive Order on Safe AI, which calls for evaluation of all AI models (not just LLMs) for capabilities enabling chemical, biological, radiological and nuclear (CBRN) threats, and recommendations for how to mitigate identified risks.

Developing novel evaluation systems for AI-enabled biological tools within 270 days, as directed by the Executive Order §4.1(b), will be incredibly challenging, because:

- There appears to have been little progress on developing benchmarks or evaluations for AI-enabled biological tools in academia or industry, and government capacity (in the U.S. and the UK) has so far focused on model evaluations for LLMs, not AI-enabled biological tools.

- Capabilities are entirely dual-use: for example, tools that can predict which viral mutations improve vaccine targeting can very likely identify mutations that increase vaccine evasion.

To meet this directive, it will be important to identify and prioritize those AI-enabled biological tools that pose the most urgent risks, and balance these against the potential benefits. However, government agencies and tool developers currently seem to struggle to:

- Specify which AI–bio capabilities are the most concerning;

- Determine the scope of AI-enabled tools that pose significant biosecurity risks; and

- Anticipate how these risks might evolve as more tools are developed and integrated.

Some frontier AI labs have assessed the biological risks associated with LLMs, but there is no public evidence of AI-enabled biological tool evaluation or red-teaming, nor are there currently standards for developing such evaluations or requirements to implement them. The White House Executive Order will build upon industry evaluation efforts for frontier models, addressing the risk posed by LLMs, but analogous efforts are needed for AI-enabled biological tools.

Given the lack of research on AI-enabled biological tool evaluation, the U.S. Government must urgently stand up a specific program to address this gap and meet the Executive Order directives. Without evaluation capabilities, the United States will be unable to scope regulations around the deployment of these tools, and will be vulnerable to strategic surprise. Doing so now is essential to capitalize on the momentum generated by the Executive Order, and comprehensively address the relevant directives within 270 days.

Recommendations

The U.S. Government should urgently acquire the ability to evaluate biological capabilities of AI-enabled biological tools via a specific joint program at the Departments of Energy (DOE) and Homeland Security (DHS), in collaboration with other relevant agencies.

Strengthening the U.S. Government’s ability to evaluate models prior to their deployment is analogous to responsible drug or medical device development: we must ensure novel products do not cause significant harm, before making them available for widespread public use.

The objectives of this program would be to:

- Develop state-of-the-art evaluations for dangerous biological capabilities

- Establish a Department of Energy (DOE) sandbox for testing evaluations on a variety of AI-enabled biological tools

- Produce standards for performance, structure and securitization of capability evaluations

- Use evaluations of the maturity and capabilities of AI-enabled biological tools to inform U.S. Intelligence Community assessments of potential adversaries’ current bio-weapon capabilities

Implementation

- Standing up and sustaining DOE and DHS’s ‘Bio Capability Evaluations’ program will require an initial investment of $2 million USD and $2 million/year until 2030 to sustain. Funding should draw on existing National Intelligence Program appropriations.

- Supporting DOE to establish a sandbox for conducting ongoing evaluations of AI-enabled biological tools will require investment of $10 million annually. This could be appropriated to DOE under the National Defense Authorization Act (Title II: Research, Development, Test and Evaluation), which establishes funding for AI defense programs.

Lead agencies and organizations

- U.S. Department of Energy (DOE) can draw on expertise from National Labs, which often evaluate—and develop risk mitigation measures for—technologies with CBRN implications.

- U.S. Department of Homeland Security (DHS) can inform threat assessments and biological risk mitigation strategy and policy.

- National Institute of Standards and Technology (NIST) can develop the standards for the performance, structure and securitization of dangerous capability evaluations.

- U.S. Department of Health and Human Services (HHS) can leverage its AI Community of Practice (CoP) as an avenue for communicating with developers and researchers of AI-enabled biological tools. The National Institutes of Health (NIH) funds relevant research and will therefore need to be involved in evaluations.

They should coordinate with other relevant agencies, including but not limited to the Department of Defense, and the National Counterproliferation and Biosecurity Center.

The benefits of implementing this program include:

Leveraging public-private expertise. Public-private partnerships (involving both academia and industry) will produce comprehensive evaluations that incorporate technical nuances and national security considerations. This allows the U.S. Government to retain access to diverse expertise whilst safeguarding the sensitive nature of dangerous capability evaluations contents and output—which is harder to guarantee with third-party evaluators.

Enabling evidence-based regulatory decision-making. Evaluating AI tools allows the U.S. Government to identify the models and capabilities that pose the greatest biosecurity risks, enabling effective and appropriately-scoped regulations. Avoiding blanket regulations results in a better balance of the considerations of innovation and economic growth with those of risk mitigation and security.

Broad scope of evaluation application. AI-enabled biological tools vary widely in their application and current state of maturity. Consequently, what constitutes a concerning, or dangerous, capability may vary widely across tools, necessitating the development of tailored evaluations.

A Path to Self-governance of AI-Enabled Biology

Oliver Crook

Artificial intelligence (AI) and machine learning (ML) are being increasingly employed for the design of proteins with specific functions. By adopting these tools, researchers have been able to achieve high success rates designing and generating proteins with certain properties. This will accelerate the design of new medical therapies such as antibodies, vaccines and biotechnologies such as nanopores. However, AI-enabled biology could also be used for malicious – rather than benevolent – purposes. Despite this potential for misuse, there is little to no oversight over what tools can be developed, the data they can be trained on, and how the developed tool can be deployed. While more robust guardrails are needed, any proposed regulation must also be balanced, so that it encourages responsible innovation.

AI-enabled biology is still a specialized methodology that requires significant technical expertise, access to powerful computational resources, and sufficient quantities of data. As the performance of these models increases, their potential for generating significantly harmful agents grows as well. With AI-enabled biology becoming more accessible, the value of guardrails early on in the development of this technology is paramount before widespread technology proliferation makes it challenging – or impossible – to govern. Furthermore, smart policies implemented now can allow us to better monitor the pace of development, and guide reasonable and measured policy in the future.

Here, we propose that fostering self-governance and self-reporting is a scalable approach to this policy challenge. During the research, development and deployment (RDD) phases, practitioners report on a pre-decided checklist and make an ethics declaration. While advancing knowledge is an academic imperative, funders, editors, and institutions need to be fully aware of the risks of some research and have opportunities to adjust the RDD plan, as needed, to ensure that AI models are developed responsibly. Whilst similar policies have already been introduced by some machine learning venues (1, 2, 3), the proposal here seeks to strengthen, formalize and broaden the scope of those proposals. Ultimately, the checklist and ethics declarations seek confirmation from multiple parties during each of the RDD phases that the research is of fundamental public good. We recommend that the National Institutes of Health (NIH) leads on this policy challenge and builds upon decades of experience on related issues.

The recent executive order framework for safe AI provides an opportunity to build upon initial recommendations on reporting but with greater specificity on AI-enabled biology. The proposal fits squarely into the desire under section 4.4 for the executive order to reduce the misuse of AI to assist in the development and design of biological weapons.

Recommendations

We propose the following recommendations:

Recommendation 1. With leadership from the NIH Office of Science Policy, life sciences funding agencies should coordinate development of a checklist in consultation with AI-enabled biology model developers, non-government funders, publishers, and nonprofit organizations that evaluates risks and benefits of the model.

The checklist should take the form of a list of pre-specified questions and guided free-form text. The questions should gather basic information about the models employed: their size, their compute usage and the data they were trained on. This will allow them to be characterized in comparison with existing models. The intended use of the model should be stated along with any dual-use behavior of the model that has already been identified. The document should also reveal whether any strategies have been employed to mitigate the harmful capabilities that the model might demonstrate.
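One way to picture such a checklist is as a structured record. The sketch below is illustrative only: the `ModelChecklist` class, its field names, and the `unmitigated_concerns` helper are hypothetical and are not drawn from any existing NIH form, and the example values are placeholders rather than data about a real model.

```python
from dataclasses import dataclass, field

# The three RDD phases named in the proposal.
RDD_PHASES = {"research", "development", "deployment"}


@dataclass
class ModelChecklist:
    """Hypothetical checklist entry completed at one RDD phase."""
    phase: str                    # one of RDD_PHASES
    parameter_count: int          # model size
    training_compute_flop: float  # compute usage
    training_data: list[str]      # data the model was trained on
    intended_use: str             # guided free-form text
    known_dual_use: list[str] = field(default_factory=list)  # dual-use behavior already identified
    mitigations: list[str] = field(default_factory=list)     # strategies employed to reduce harm

    def __post_init__(self) -> None:
        if self.phase not in RDD_PHASES:
            raise ValueError(f"unknown RDD phase: {self.phase!r}")

    def unmitigated_concerns(self) -> bool:
        """True when dual-use behavior is declared but no mitigation is listed."""
        return bool(self.known_dual_use) and not self.mitigations


# Illustrative placeholder values only.
entry = ModelChecklist(
    phase="development",
    parameter_count=1_000_000,
    training_compute_flop=1e18,
    training_data=["public protein sequence database"],
    intended_use="Design of antibody candidates",
    known_dual_use=["could propose toxin variants"],
)
print(entry.unmitigated_concerns())
```

Recording size, compute, and data as structured fields is what would allow a model to be characterized in comparison with existing models, while the free-form fields leave room for the guided text the proposal calls for.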

At each stage of the RDD, the predefined checklist for that stage is completed and submitted to the institution.

Recommendation 2. Each institute employing AI-enabled biology across RDD should elect a small internally-led, cross-disciplinary committee to examine and evaluate, at each phase, the submitted checklists. To reduce workload, only models that fall under the executive order specifications or dual use research of concern (DURC) should be considered. The committee makes recommendations based on the value of the work. The committee then posts the proceedings of its meetings publicly (as for Institutional Biosafety Committees), except for publicly sensitive intellectual property. If the benefit of the work cannot be evaluated or the outcomes are largely unpredictable, the committee should work with the model developer to adjust the RDD plan, as needed. The checklist and institutional signature are then made available to NIH and funding agencies and, upon completion of the project, such as at publication, the checklists are made publicly available.

By following these recommendations, high-risk research will be caught at an institutional level and internal recommendations can facilitate timely mitigation of harms. Public release of committee deliberations and ethics checklists will enable third parties to scrutinize model development and raise concerns. This approach ensures a hierarchy of oversight that allows individuals, institutes, funders and governments to identify and address risks before AI models are developed rather than after the work has been completed.

We recommend that $5 million be provided to the NIH Office of Science Policy to implement this policy. This money would cover hiring a ‘Director of Ethics of AI-enabled Biology’ to oversee this research and several full-time researchers/administrators ($1.5 million). These employees should conduct outreach to the institutes to ensure that the policy is understood, to answer any questions, and to facilitate community efforts to develop and update the checklist ($1 million). Additional grants should be made available to allow researchers and non-profit organizations to audit the checklists and committees, evaluate the checklists, and research the socio-technological implications of the checklists ($1.5 million). The rapid pace of development of AI means that the checklists will need to be reevaluated on a yearly basis, with $1 million of funding available to evaluate the impact of these grants. Funding should grow in line with the pace of technological development. Specific subareas of AI-enabled biology may need specific checklists depending on their risk profile.

This recommendation is scalable; once the checklists have been made, the majority of the work is placed in the hands of practitioners rather than government. In addition, these checklists provide valuable information to inform future governance agendas. For example, limiting computational resources to curtail dangerous applications (compute governance) cannot proceed without detailed understanding of how much compute is required to achieve certain goals. Furthermore, it places responsibility on practitioners requiring them to engage with the risk that could arise from their work, with institutes having the ability to make recommendations on how to reduce the risks from models. This approach draws on similar frameworks that support self-governance, such as oversight by Institutional Biosafety Committees (IBCs). This self-governance proposal is well complemented by alternative policies around open access of AI-enabled biology tools, as well as policies strengthening DNA synthesis screening protocols to catch misuse at different places along a broadly-defined value chain.

A Global Compute Cloud to Advance Safe Science and Innovation

Samuel Curtis

Advancements in deep learning have ushered in significant progress in the predictive accuracy and design capabilities of biological design tools (BDTs), opening new frontiers in science and medicine through the design of novel functional molecules. However, these same technologies may be misused to create dangerous biological materials. Mitigating the risks of misuse of BDTs is complicated by the need to maintain openness and accessibility among globally-distributed research and development communities. One approach toward balancing both risks of misuse and the accessibility requirements of development communities would be to establish a federally-funded and globally-accessible compute cloud through which developers could provide secure access to their BDTs.

The term “biological design tools” (or “BDTs”) is a neologism referring to “systems trained on biological data that can help design new proteins or other biological agents.” Computational biological design is, in essence, a data-driven optimization problem. Consequently, over the past decade, breakthroughs in deep learning have propelled progress in computational biology. Today, many of the most advanced BDTs incorporate deep learning techniques and are used and developed by networks of academic researchers distributed across the globe. For example, the Rosetta Software Suite, one of the most popular BDT software packages, is used and developed by Rosetta Commons—an academic consortium of over 100 principal investigators spanning five continents.

Contributions of BDTs to science and medicine are difficult to overstate. There are already several AI-designed molecules in early-stage clinical trials. BDTs are now used to identify new drug targets, design new therapeutics, and construct faster and less expensive drug synthesis techniques.

Unfortunately, these same BDTs can be used for harm. They may be used to create pathogens that are more transmissible or virulent than known agents, target specific sub-populations, or evade existing DNA synthesis screening mechanisms. Moreover, developments in other classes of AI systems portend reduced barriers to BDT misuse. One group at RAND Corporation found that language models could provide guidance that could assist in planning and executing a biological attack, and another group from MIT demonstrated how language models could be used to elicit instructions for synthesizing a potentially pandemic pathogen. Similarly, language models could accelerate the acquisition or interpretation of information required to misuse BDTs. Technologies on the horizon, such as multimodal “action transformers,” could help individuals navigate BDT software, further lowering barriers to misuse.

Research points to several measures BDT developers could employ to reduce risks of misuse, such as securing machine learning model weights (the numerical values representing the learned patterns and information that the model has acquired during training), implementing structured access controls, and adopting Know Your Customer (KYC) processes. However, precaution would have to be taken to not unduly limit access to these tools, which could, in aggregate, impede scientific and medical advancement. For any given tool, access limitations risk diminishing its competitiveness (its available features and performance relative to other tools). These tradeoffs extend to their developers’ interests, whereby stifling the development of tools may jeopardize research, funding, and even career stability. The difficulties of striking a balance in managing risk are compounded by the decentralized, globally-distributed nature of BDT development communities. To suit their needs, risk-mitigation measures should involve minimal, if any, geographic or political restrictions placed on access while simultaneously expanding the ability to monitor for and respond to indicators of risk or patterns of misuse.

One approach that would balance the simultaneous needs for accessibility and security would be for the federal government to establish a global compute cloud for academic research, bearing the costs of running servers and maintaining the security of the cloud infrastructure in the shared interests of advancing public safety and medicine. A compute cloud would enable developers to provide access to their tools through computing infrastructure managed—and held to specific security standards—by U.S. public servants. Such infrastructure could even expand access for researchers, including underserved communities, through fast-tracked grants in the form of computational resources.

However, if computing infrastructure is not designed to reflect the needs of the development community—namely, its global research community—it is unlikely to be adopted in practice. Thus, to fully realize the potential of a compute cloud among BDT development communities, access to the infrastructure should extend beyond U.S. borders. At the same time, the efforts should ensure the cloud has requisite monitoring capabilities to identify risk indicators or patterns of misuse and impose access restrictions flexibly. By balancing oversight with accessibility, a thoughtfully-designed compute cloud could enable transparency and collaboration while mitigating the risks of these emerging technologies.

Recommendations

The U.S. government should establish a federally-funded, globally-accessible compute cloud through which developers could securely provide access to BDTs. In fact, the Biden Administration’s October 2023 “Executive Order on the Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence” (the “AI EO”) lays groundwork by establishing a pilot program of a National AI Research Resource (NAIRR)—a shared research infrastructure providing AI researchers and students with expanded access to computational resources, high-quality data, educational tools, and user support. Moving forward, to increase the pilot program’s potential for adoption by BDT developers and users, relevant federal departments and agencies should take concerted action in the timelines circumscribed by the AI EO to address the practical requirements of BDT development communities: the simultaneous need to expand access outside U.S. borders while bolstering the capacity to monitor for misuse.

It is important to note that a federally-funded compute cloud has been years in the making. The National AI Initiative Act of 2020 directed the National Science Foundation (NSF), in consultation with the Office of Science and Technology Policy (OSTP), to establish a task force to create a roadmap for the NAIRR. In January 2023, the NAIRR Task Force released its final report, “Strengthening and Democratizing the U.S. Artificial Intelligence Innovation Ecosystem,” which presented a detailed implementation plan for establishing the NAIRR. The Biden Administration’s AI EO then directed the Director of NSF, in coordination with the heads of agencies deemed appropriate by the Director, to launch a pilot program “consistent with past recommendations of the NAIRR Task Force.”

However, the Task Force’s past recommendations are likely to fall short of the needs of BDT development communities (not to mention other AI development communities). In its report, the Task Force described NAIRR’s primary user groups as “U.S.-based AI researchers and students at U.S. academic institutions, non-profit organizations, Federal agencies or FFRDCs, or startups and small businesses awarded [Small Business Innovation Research] or [Small Business Technology Transfer] funding,” and its resource allocation process is oriented toward this user base. Separately, Stanford University’s Institute for Human-centered AI (HAI) and the National Security Commission on Artificial Intelligence (NSCAI) have proposed institutions, building upon or complementing NAIRR, that would support international research consortiums (a Multilateral AI Research Institute and an International Digital Democracy Initiative, respectively), but the NAIRR Task Force’s report—upon which the AI EO’s pilot program is based—does not substantively address this user base.

In launching the NAIRR pilot program under Sec. 5.2(a)(i), the NSF should put the access and security needs of international research consortiums front and center, conferring with heads of departments and agencies with relevant scope and expertise, such as the Department of State, U.S. Agency for International Development (USAID), Department of Education, the National Institutes of Health, and the Department of Energy. The NAIRR Operating Entity (as defined in the Task Force’s report) should investigate how funding, resource allocation, and cybersecurity could be adapted to accommodate researchers outside of U.S. borders. In implementing the NAIRR pilot program, the NSF should incorporate BDTs in its development of guidelines, standards, and best practices for AI safety and security, per Sec. 4.1, which could serve as standards with which NAIRR users should be required to comply. Furthermore, the NSF Regional Innovation Engine launched through Sec. 5.2(a)(ii) should consider focusing on international research collaborations, such as those in the realm of biological design.

Besides the NSF, which is charged with piloting NAIRR, relevant departments and agencies should take concerted action in implementing the AI EO to address issues of accessibility and security that are intertwined with international research collaborations. This includes but is not limited to:

- In accordance with Sec. 5.2(a)(i), the departments and agencies listed above should be tasked with investigating the access and security needs of international research collaborations and include these in the reports they are required to submit to the NSF. This should be done in concert with the development of guidelines, standards, and best practices for AI safety and security required by Sec. 4.1.

- In fulfilling the requirements of Sec. 5.2(c-d), the Under Secretary of Commerce for Intellectual Property and Director of the United States Patent and Trademark Office, and the Secretary of Homeland Security, should, in the reports and guidance on matters related to intellectual property that they are required to develop, clarify ambiguities and preemptively address challenges that might arise in cross-border data use agreements.

- Under the terms of Sec. 5.2(h), the President’s Council of Advisors on Science and Technology should, in its development of “a report on the potential role of AI […] in research aimed at tackling major societal and global challenges,” focus on the nature of decentralized, international collaboration on AI systems used for biological design.

- Pursuant to Sec. 11(a-d), the Secretary of State, the Assistant to the President for National Security Affairs, the Assistant to the President for Economic Policy, and the Director of OSTP should focus on AI used for biological design as a use case for expanding engagements with international allies and partners, and establish a robust international framework for managing the risks and harnessing the benefits of AI. Furthermore, the Secretary of Commerce should make this use case a key feature of the plan for global engagement in promoting and developing AI standards.

The AI EO provides a window of opportunity for the U.S. to take steps toward mitigating the risks posed by BDT misuse. In doing so, it will be necessary for regulatory agencies to proactively seek to understand and attend to the needs of BDT development communities, which will increase the likelihood that government-supported solutions, such as the NAIRR pilot program—and potentially future fully-fledged iterations enacted via Congress—are adopted by these communities. By making progress toward reducing BDT misuse risk while promoting safe, secure access to cutting-edge tools, the U.S. could affirm its role as a vanguard of responsible innovation in 21st-century science and medicine.

Establish collaboration between developers of gene synthesis screening tools and AI tools trained on biological data

Shrestha Rath

Biological Design Tools (BDTs) are a subset of AI models, trained on genetic and/or protein data, that are developed for use in the life sciences. These tools have recently seen major performance gains, enabling breakthroughs such as AlphaFold2’s accurate protein structure predictions, which addressed a longstanding challenge in the life sciences.

While promising for legitimate research, BDTs risk misuse without oversight. Because universal screening of gene synthesis is currently lacking, potential threat agents could be digitally designed with assistance from BDTs and then physically made using gene synthesis. BDTs pose particular challenges because:

- A growing number of gene synthesis orders evade current screening capabilities. Industry experts in gene synthesis companies report that a small but concerning portion of orders for synthetic nucleic acid sequences show little or no homology with known sequences in widely used genetic databases and so are not captured by current screening techniques. Advances in BDTs are likely to make such a scenario more common, thus exacerbating the risk of misuse of synthetic DNA. The combined use of BDTs and gene synthesis has the potential to aid the “design” and “build” steps for malicious misuse. Strengthening screening capabilities to keep up with advances in BDTs is an attractive early intervention point to prevent this misuse.

- Potential for substantial breakthroughs in BDTs. While BDTs for applications beyond protein design face significant challenges, and most are not yet mature, companies are likely to invest in generating data to improve these tools because they see significant economic value in doing so. Moreover, some AI experts speculate that training protein language models with the same amount of computational resources used for LLMs could significantly improve their performance. Thus there is significant uncertainty about how rapidly BDTs will advance and how those advances may affect the potential for misuse.

BDT development is currently concentrated among a few actors, which makes policy implementation tractable, but risks will decentralize over time away from a handful of well-resourced academic labs and private AI companies. The U.S. government should take advantage of this unique window of opportunity to implement policy guardrails while the next generation of advanced BDTs is in development.

In short, it is important that developers of BDTs work together with developers and users of gene synthesis screening tools. This will promote shared understanding of the risks around potential misuse of synthetic nucleic acids, which may be exacerbated by advances in AI.

By bringing together key stakeholders to share information and align on safety standards, the U.S. government can steer these technologies to maximize benefits and minimize widespread harms. Section 4.4 (b) of the Executive Order on the Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence (henceforth referred to as the “Executive Order”) also emphasizes mitigating risks from the “misuse of synthetic nucleic acids, which could be substantially increased by AI’s capabilities”.

Gene synthesis companies and/or organizations involved in developing gene screening mechanisms are henceforth referred to as “DNA Screeners”. Academic and for-profit stakeholders developing BDTs are henceforth referred to as “BDT Developers”. Gene synthesis companies providing synthetic DNA as a service, irrespective of their screening capabilities, are referred to as “DNA Providers”.

Recommendations

By bringing together key stakeholders (BDT developers, DNA screeners, and security experts) to share information and align on safety standards, the U.S. government can steer these technologies to maximize benefits and minimize widespread harms. Implementing these recommendations requires allocating financial resources and coordinating interagency work.

There are near-term and long-term opportunities to improve coordination between DNA Screeners, DNA Providers (that use in-house screening mechanisms), and BDT Developers, so as to avoid potential future backlash, over-regulation, and legal liability. As part of the implementation of Section 4.4 of the Executive Order, the Department of Energy and the National Institute of Standards and Technology should:

Recommendation 1. Convene BDT Developers and DNA Screeners, along with ethics, security, and legal experts, to a) share information on AI model capabilities and their implications for DNA sequence screening; and b) facilitate discussion on shared safeguards and security standards. Technical security standards may include adversarial training to make the AI models robust against purposeful misuse, BDTs refusing to follow user requests when the requested action may be harmful (refusals and blacklisting), and maintaining user logs.

Recommendation 2. Create an advisory group to investigate metrics that measure performance of protein BDTs for DNA Screeners, in line with Section 4.4 (a)(ii)(A) of the Executive Order. Metrics that capture BDT performance and thus risk posed by advanced BDTs would give DNA Screeners helpful context while screening orders. For example, some current methods for benchmarking AI-enabled protein design methods focus on sequence recovery, where the backbones of natural proteins with known amino-acid sequences are passed as the input and the accuracy of the method is measured by identity between the predicted sequence and the true sequence.

Recommendation 3. Support and fund development of AI-enabled DNA screening mechanisms that will keep pace with BDTs. U.S. national laboratories should support these types of efforts because commercial incentives for such tools are lacking. IARPA’s Fun GCAT is an exemplary case in this regard.

Recommendation 4. Conduct structured red teaming for current DNA screening methods to ensure they account for functional variants of Sequences of Concern (SOCs) that may be developed with the help of BDTs and other AI tools. Such red teaming exercises should include expert stakeholders involved in the development of screening mechanisms and the national security community.

Recommendation 5. Establish both policy frameworks and technical safeguards for identification of certifiable origins. Designs produced by BDTs could require a cryptographically signed certificate detailing the inputs used in the design process of their synthetic nucleic acid order, ultimately providing useful contextual information that helps DNA Screeners check for harmful intent captured in the requests made to the model.

Recommendation 6. Fund third-party evaluations of BDTs to determine how their use might affect DNA sequence screening and provide this information to those performing screening. Having these evaluations would be helpful for new, small and growing DNA Providers and alleviate the burden on established DNA Providers as screening capabilities become more sophisticated. A similar system exists in the automobile industry where insurance providers conduct their own car safety and crash tests to inform premium-related decisions.
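To make the sequence-recovery benchmark mentioned under Recommendation 2 more concrete, the following is a minimal sketch, not the method of any particular benchmark suite. It assumes a fixed-backbone design setting in which each predicted sequence has the same length as the natural sequence it is compared against. The function names (`sequence_recovery`, `mean_recovery`) and the toy sequences are illustrative assumptions and do not come from the Executive Order or any existing tool.

```python
from typing import Iterable, Tuple


def sequence_recovery(native: str, predicted: str) -> float:
    """Fraction of positions where the predicted residue matches the native one.

    Assumes both sequences are non-empty and of equal length (fixed-backbone
    design). This is a simple per-position identity, not an alignment score.
    """
    if not native:
        raise ValueError("native sequence must be non-empty")
    if len(native) != len(predicted):
        raise ValueError("sequences must have the same length")
    matches = sum(a == b for a, b in zip(native, predicted))
    return matches / len(native)


def mean_recovery(pairs: Iterable[Tuple[str, str]]) -> float:
    """Average sequence recovery over (native, predicted) pairs."""
    scores = [sequence_recovery(n, p) for n, p in pairs]
    if not scores:
        raise ValueError("at least one pair is required")
    return sum(scores) / len(scores)


if __name__ == "__main__":
    # Toy amino-acid sequences, purely hypothetical.
    benchmark = [
        ("ACDEFGHIKL", "ACDEFGHIKV"),  # one mismatch in ten positions
        ("MKTAYIAK", "MKTAYIAK"),      # perfect recovery
    ]
    for native, predicted in benchmark:
        print(native, predicted, sequence_recovery(native, predicted))
    print("mean:", mean_recovery(benchmark))
```

A metric like this only measures how well a tool reproduces natural sequences. An advisory group would still need to decide whether and how such scores relate to the risk that a given BDT poses for screening.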

Proliferation of open-source tools accelerates innovation and democratization of AI, but it is also a growing concern in the context of biological misuse. The recommendations here strengthen biosecurity screening at a key point on the path to realizing these risks. This framework could be implemented alongside other approaches to reduce the risks that arise from BDTs. These include: introducing Know Your Customer (KYC) frameworks that monitor buyers/users of AI tools; requiring BDT developers to undergo training on assessing and mitigating dual-use risks of their work; and encouraging voluntary guidelines to reduce misuse risks, for instance, by employing model evaluations prior to release and refraining from publishing preprints or releasing model weights until such evaluations are complete. This multi-pronged approach can help ensure that AI tools are developed responsibly and that biosecurity risks are managed.

Responsible and Secure AI in Production Agriculture

Jennifer Clarke

Agriculture, food, and related industries represent over 5% of domestic GDP. The health of these industries has a direct impact on domestic food security, which equates to a direct impact on national security. In other words, food security is biosecurity is national security. As the world population continues to increase and climate change brings challenges to agricultural production, we need an efficiency and productivity revolution in agriculture. This means using less land and natural resources to produce more food and feed. For decision-makers in agriculture, the lack of human resources and narrow economic margins are driving interest in automation and properly utilizing AI to help increase productivity while decreasing waste amid increasing costs.

Congress should provide funding to support the establishment of a new office within the USDA to coordinate, enable, and oversee the use of AI in production agriculture and agricultural research.

The agriculture, food, and related industries are turning to AI technologies to enable automation and drive the adoption of precision agriculture technologies. The use of AI in agriculture often depends on proprietary approaches that have not been validated by an independent, open process. In addition, it is unclear whether AI tools aimed at the agricultural sector will address critical needs as identified by the producer community. This leads to the potential for detrimental recommendations and loss of trust across producer communities. Both will impede adoption of precision agriculture technologies, which is necessary for sustainable domestic food security.

The industry is promoting AI technologies to help yield healthier crops, control pests, monitor soil and growing conditions, organize data for farmers, help with workload, and improve a wide range of agriculture-related tasks in the entire food supply chain.

However, the use of networked technologies in agriculture poses risks, and AI use could add to this problem if not implemented carefully. For example, the use of biased or irrelevant data in AI development can result in poor performance, which erodes producer trust in both extension services and expert systems, hindering adoption. As adoption increases, it is likely that farmers will use a small number of available platforms; this creates centralized points of failure where a limited attack can cause disproportionate harm. The 2021 cyberattack on JBS, the world’s largest meat processor, and a 2021 ransomware attack on NEW Cooperative, which provides feed grains for 11 million farm animals in the United States, demonstrate the potential risks from agricultural cybersystems. Without established cybersecurity standards for AI systems, those systems with broad adoption across agricultural sectors will represent targets of opportunity.

As evidenced by the recent Executive Order on the Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence and the AI Safety Summit held at Bletchley Park, both national and international regulatory bodies are giving considerable attention to AI governance and policy. There is a recognition that the risks of AI require more attention and investment in both technical and policy research.

This recognition dovetails with an increase in emphasis on the use of automation and AI in agriculture to enable adoption of new agricultural practices. Increased adoption in the short term is required to reduce greenhouse gas emissions and ensure sustainability of domestic food production. Unfortunately, trust in commercial and governmental entities among agricultural producers is low and has been eroded by corporate data policies. Fortunately, this erosion can be reversed by prompt action on regulation and policy that respects the role of the producer in food and national security. Now is the time to promote the adoption of best practices and responsible development to establish security as a habit among agricultural stakeholders.

Recommendations