Creating A Vision and Setting Course for the Science and Technology Ecosystem of 2050

The science and technology (S&T) ecosystem is a complex network that enables innovation, scientific research, and technology development. The researchers, technologists, investors, educators, policymakers, and businesses that make up this ecosystem have changed and evolved over centuries. Now, we find ourselves at an inflection point. We face long-standing crises such as climate change and inequities in healthcare and education, as well as new ones, including the defunding of federal and private sector efforts to foster diverse, inclusive, and accessible communities, learning, and work environments.

As a Senior Fellow at the Federation of American Scientists (FAS), I am focused on setting a vision for the future of the S&T ecosystem. This is not about making predictions; rather, it is about articulating and moving toward our collective preferred future. It includes being clear about how discoveries from the S&T ecosystem can be quickly and equitably distributed, and why the ecosystem matters.

The future I’m focused on isn’t next year, or the next presidential election – or even the one after that; many others are already having those discussions. I have my sights set on the year 2050, a future so far out that none of us can predict or forecast its details with much confidence.

This project presents an opportunity to bring together stakeholders across different backgrounds to work towards a common future state of the S&T ecosystem.

To better understand what might drive the way we live, learn, and work in 2050, I’m asking the community to share their expertise and thoughts about how key factors like research and development infrastructure and automation will shape the trajectory of the ecosystem. Specifically, we are looking at the role of automation, including robotics, computing, and artificial intelligence, in shaping how we live, learn, and work. We are examining both the transformative potential and the ethical, social, and economic implications of increasingly automated systems. We are also looking at the future of research and development infrastructure, which includes the physical and digital systems that support innovation: state-of-the-art facilities, specialized equipment, a skilled workforce, and data that enables discovery and collaboration.

To date, we’ve talked to dozens of experts in workforce development, national security, R&D facilities, forecasting, AI policy, automation, climate policy, and S&T policy to better understand what their hopes are, and what it might take to realize our preferred future. They have shared perspectives on what excites and worries them, trends they are watching, and thoughts on why science and technology matter to the U.S. My work is just beginning, and I want your help.

So, I invite you to share your vision for science and technology in 2050 through our survey.

The information shared will be used to develop a report with answers to questions like:

- What’s the closest we can get to a shared “north star” to guide the S&T ecosystem?

- What are the best mechanisms to unite S&T ecosystem stakeholders towards that “north star”?

- What is a potential roadmap for the policy, education, and workforce strategies that will move us forward together?

We know that the S&T epicenter moves around the world as empires, dynasties, and governments rise and fall. The United States has enjoyed the privilege of being the engine of this global ecosystem, fueled by public and private investments and directed by aspirational visions to address our nation’s pressing issues. As a nation, we’ve always challenged ourselves to aspire to greater heights. We must re-commit to this ambition in the face of global competition with clarity, confidence, and speed.

As we stand at this inflection point, it is imperative we ask ourselves – as scientists, and as a nation – what is the purpose of the S&T ecosystem today? Who, or what, should benefit from the risks, capital, and effort poured into this work? Whether you are deeply steeped in the science and technology community, or a concerned citizen who recognizes how your life can be improved by ongoing innovation, please share your thoughts by August 31.

In addition to the survey, we’ll be exploring these questions with subject matter experts, and there will be other ways to engage – to learn more, reach out to me at QBrown@fas.org.

It’s become acutely clear to me that the ecosystem we live in will be shaped by those who speak up, whether it be few or many, and I welcome you to make your voice heard.

Bringing Transparency to Federal R&D Infrastructure Costs

There is an urgent need to manage the escalating costs of federal R&D infrastructure and the increasing risk that failing facilities pose to the scientific missions of the federal research enterprise. Many of the laboratories and research support facilities operating under the federal research umbrella are near or beyond their life expectancy, creating significant safety hazards for federal workers and local communities. Unfortunately, the nature of the federal budget process forces agencies into a position where the actual cost of operations is not transparent in agency budget requests to OMB, and it becomes further obscured by the time those requests reach appropriators. The result can be appropriations disasters (including an approximately 60% cut to National Institute of Standards and Technology (NIST) facilities in 2024 after the agency’s challenges became newsworthy). Providing both Congress and OMB with a complete accounting of the actual costs of agency facilities may end the gamification of budget requests and help the government prioritize infrastructure investments.

Challenge and Opportunity

Recent reports by the National Research Council and the National Science and Technology Council, including the congressionally mandated Quadrennial Science and Technology Review, have highlighted the dire state of federal facilities. Maintenance backlogs have ballooned in recent years, forcing some agencies to shut down research activities in strategic R&D domains, including Antarctic research and standards development. At NIST, outages caused by failing steam pipes, electrical systems, and black mold have reduced research productivity by 10 to 40 percent. NASA and NIST have both reported maintenance backlogs that now exceed $3 billion. The Department of Defense forecasts that bringing its buildings up to modern standards would cost approximately $7 billion, “putting the military at risk of losing its technological superiority.” The shutdown of many Antarctic science operations and the collapse of the Arecibo Observatory stand in stark contrast to the People’s Republic of China opening rival, more capable facilities in both research domains. In the late 2010s, Senate staffers were often forced to call national laboratories directly to ask what it would actually cost for the country to fully fund a particular large science activity.

This memo does not suggest that the government should continue to fund old or outdated facilities; merely that there is a significant opportunity for appropriators to understand the actual cost of our legacy research and development ecosystem, initially ramped up during the Cold War. Agencies should be able to provide a straight answer to Congress about what it would cost to operate their inventory of facilities. Likewise, Congress should be able to decide which facilities should be kept open, where placing a facility on life support is acceptable, and which facilities should be shut down. The cost of maintaining facilities should also be transparent to the Office of Management and Budget so examiners can help the President make prudent decisions about the direction of the federal budget.

The National Science and Technology Council’s mandated research and development infrastructure report to Congress is a poor vehicle for delivering this information. As coauthors of the 2024 research infrastructure report, we can attest to the pressure within the White House to provide a positive narrative about the current state of play, as well as OMB’s reluctance to suggest that additional funding is needed to maintain our inventory of facilities outside the budget process. It would be much easier for agencies that already have a sense of what it costs to maintain their operations to provide that information directly to appropriators, as opposed to relying on a sanitized White House report to an authorizing committee that may or may not have jurisdiction over all the agencies covered in the report. That report also depends on an Assistant Director for Research Infrastructure serving in OSTP to complete the America COMPETES mandate; according to current government employees, the Trump Administration intends to discontinue the Research and Development Infrastructure Subcommittee.

Agencies may be concerned that providing such cost transparency to Congress could result in greater micromanagement over which facilities receive which investments. Given the relevance of these facilities to their localities (including both economic benefits and environmental and safety concerns) and the role that legacy facilities can play in training new generations of scientists, this is a matter that deserves public debate. In our experience, the wider range of factors that appropriations staff consider is relevant to investment decisions. Further, accountability for macro-level budget decisions should ultimately fall on decisionmakers who choose whether or not to prioritize investments in both our scientific leadership and the health and safety of the federal workforce and nearby communities. Facilities managers who are forced to make agonizing choices in extremely resource-constrained environments currently bear most of that burden.

Plan of Action

Recommendation 1: Appropriations committees should require agencies to submit annual reports on the actual cost of completed facilities modernization, operations, and maintenance, including utility distribution systems.

Transparency is the only way that Congress and OMB can get a grip on the actual cost of running our legacy research infrastructure. Agencies should report the actual cost of facilities operations and maintenance to the relevant appropriators each year. Other costs that should be accounted for include obligations to international facilities (such as ITER) and facilities and collections that are paid for by grants (such as scientific collections which support the bioeconomy). Transparent accounting of facilities costs against what an administration chooses to prioritize in the annual President’s Budget Request may help foster meaningful dialogue between agencies, examiners, and appropriations staff.

The reports from agencies should describe the work done in each building and the impact of a disruption. Using NIST as an example, the Radiation Physics Building (still without the funding to complete its renovation) is crucial to national security and the medical community. If it were to go down (or away), every medical device in the United States that uses radiation would be decertified within 6 months, creating a significant single point of failure that cannot be quickly mitigated. Identifying such functions may also reveal duplicate efforts across agencies.

The costs of utility systems should be included because of the broad impacts that supporting infrastructure failures can have on facility operations. At NIST’s headquarters campus in Maryland, the entire underground utility distribution system is beyond its designed lifespan and suffers constant problems. The Central Utility Plant (CUP), which creates steam and chilled water for the campus, is in a similar state. The CUP’s steam distribution system will be at the complete end of life (per forensic testing of failed pipes and components) in less than a decade and potentially as soon as 2030. If work doesn’t start within the next year (by early 2026), the system is likely to fail. This would result in a complete loss of heat and temperature control on the campus, which is particularly concerning given the sensitivity of modern experiments and calibrations to changes in heat and humidity. Less than a decade ago, NASA was forced to delay the launch of a satellite after NIST’s steam system was down for a few weeks and calibrations required for the satellite couldn’t be completed.

Given the varying business models for infrastructure around the Federal government, standardization of accounting and costs may be too great a lift–particularly for agencies that own and operate their own facilities (government owned, government operated, or GOGOs) compared with federally funded research and development centers (FFRDCs) operated by companies and universities (government owned, contractor operated, or GOCOs).

These reports should privilege modernization efforts, which, according to former federal facilities managers, could account for 80 to 90 percent of facility revitalization while also delivering new capabilities that help our national labs maintain (and often re-establish) their world-leading status. The reports would also serve as a facilities inventory, allowing appropriators to de-conflict investments as necessary.

It would be far easier for agencies to simply provide an itemized list of each of their facilities, the current maintenance backlog, and projected costs for the next fiscal year to both Congress and OMB at the time of the annual budget submission to OMB. This list should include the total cost of operating facilities, projected maintenance costs, and any costs needed to bring a federal facility up to relevant safety and environmental codes (many facilities do not currently meet them). In order to foster public trust, these reports should include an assessment of systems that are particularly at risk of failure, the risk to the agency’s operations, and their impact on surrounding communities, federal workers, and organizations that use those laboratories. Fatalities and incidents that affect local communities, particularly in laboratories intended to improve public safety, are not an acceptable cost of doing business. These reports should be made public (except for those details necessary to preserve classified activities).

Recommendation 2: Congress should revisit the idea of a special building fund from the General Services Administration (GSA) from which agencies can draw loans for revitalization.

During the first Trump Administration, Congress considered establishing a special building fund from the GSA from which agencies could draw loans at very low interest (enough to cover the staff time of GSA officials managing the program). Such a fund would allow agencies to address urgent or emergency needs that arise outside the regular appropriations cycle. The Government Accountability Office has already validated this approach for certain facilities, finding that “Access to full, upfront funding for large federal capital projects—whether acquisition, construction, or renovation—could save time and money.” Major international scientific organizations that operate large facilities, including CERN (the European Organization for Nuclear Research), have a similar ability to take out loans to pay for repairs, maintenance, or budget shortfalls, which helps them maintain financial stability and reduce the risk of escalating costs from deferred maintenance.

Up-front funding for major projects, enabled by access to GSA loans, can also reduce expenditures in the long run. In the current budget environment, it is not uncommon for the cost of major investments to double due to inflation and piecemeal execution. In 2010, NIST proposed a renovation of its facilities in Boulder with an expected cost of $76 million. The project, which is still not completed today, is now estimated to cost more than $450 million due to a phased approach unsupported by appropriations. Productivity losses resulting from delayed construction (or from waiting for appropriations) may have compounding effects on industries that depend on access to certain capabilities, harming American competitiveness as described in the previous recommendation.

Conclusion

As the 2024 RDI Report points out, “Being a science superpower carries the burden of supporting and maintaining the advanced underlying infrastructure that supports the research and development enterprise.” Without a transparent accounting of costs, it is impossible for Congress to make prudent decisions about the future of that enterprise. Requiring agencies to provide complete information to both Congress and OMB at the beginning of each year’s budget process likely provides the best chance of allowing us to address this challenge.

Use Artificial Intelligence to Analyze Government Grant Data to Reveal Science Frontiers and Opportunities

President Trump challenged the Director of the Office of Science and Technology Policy (OSTP), Michael Kratsios, to “ensure that scientific progress and technological innovation fuel economic growth and better the lives of all Americans”. Much of this progress and innovation arises from federal research grants. Federal research grant applications include detailed plans for cutting-edge scientific research. They describe the hypothesis, data collection, experiments, and methods that will ultimately produce discoveries, inventions, knowledge, data, patents, and advances. They collectively represent a blueprint for future innovations.

AI now makes it possible to use these resources to create extraordinary tools for refining how we award research dollars. Further, AI can provide unprecedented insight into future discoveries and needs, shaping both public and private investment into new research and speeding the application of federal research results.

We recommend that the Office of Science and Technology Policy (OSTP) oversee a multiagency development effort to fully subject grant applications to AI analysis to predict the future of science, enhance peer review, and encourage better research investment decisions by both the public and the private sector. The federal agencies involved should include all the member agencies of the National Science and Technology Council (NSTC).

Challenge and Opportunity

The federal government funds approximately 100,000 research awards each year across all areas of science. The sheer human effort required to analyze this volume of records remains a barrier, and thus, agencies have not mined applications for deep future insight. If agencies spent just 10 minutes of employee time on each funded award, it would take 16,667 hours in total—or more than eight years of full-time work—to simply review the projects funded in one year. For each funded award, there are usually 4–12 additional applications that were reviewed and rejected. Analyzing all these applications for trends is untenable. Fortunately, emerging AI can analyze these documents at scale. Furthermore, AI systems can work with confidential data and provide summaries that conform to standards that protect confidentiality and trade secrets. In the course of developing these public-facing data summaries, the same AI tools could be used to support a research funder’s review process.
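As a rough check on the arithmetic above, the sketch below recomputes the staff-time estimate and extends it to the full pool of reviewed applications. It assumes a 2,080-hour full-time work year (40 hours a week for 52 weeks), which the memo does not specify, and treats the 4 to 12 rejected applications per funded award as a simple multiplier.

```python
# Back-of-the-envelope check of the staff-time estimate in the text.
# Assumption (not from the memo): a full-time work year is 2,080 hours.
AWARDS_PER_YEAR = 100_000
MINUTES_PER_AWARD = 10
HOURS_PER_WORK_YEAR = 40 * 52


def review_burden(records: int, minutes_each: float) -> tuple[float, float]:
    """Return (total hours, full-time work-years) needed to review `records` documents."""
    hours = records * minutes_each / 60
    return hours, hours / HOURS_PER_WORK_YEAR


hours, years = review_burden(AWARDS_PER_YEAR, MINUTES_PER_AWARD)
print(f"Funded awards only: {hours:,.0f} hours, about {years:.1f} work-years")

# Each funded award has roughly 4 to 12 reviewed-and-rejected applications,
# so the full pool is 5x to 13x the number of funded awards.
for extra in (4, 12):
    h, y = review_burden(AWARDS_PER_YEAR * (1 + extra), MINUTES_PER_AWARD)
    print(f"With {extra} rejected per award: {h:,.0f} hours, about {y:.0f} work-years")
```

Under these assumptions, the funded awards alone come to roughly 16,667 hours (about eight work-years), and including rejected applications pushes the total to somewhere between about 40 and 104 work-years, which is why manual trend analysis is untenable.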

There is a long precedent for this approach. In 2009, the National Institutes of Health (NIH) debuted its Research, Condition, and Disease Categorization (RCDC) system, a program that automatically and reproducibly assigns NIH-funded projects to their appropriate spending categories. The automated RCDC system replaced a manual data call, which resulted in savings of approximately $30 million per year in staff time, and has been evolving ever since. To create the RCDC system, the NIH pioneered digital fingerprints of every scientific grant application using sophisticated text-mining software that assembled a list of terms and their frequencies found in the title, abstract, and specific aims of an application. Applications for which the fingerprints match the list of scientific terms used to describe a category are included in that category; once an application is funded, it is assigned to categorical spending reports.
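The fingerprint-and-match idea described above can be sketched in a few lines of Python. This is a simplified illustration, not NIH's RCDC implementation: the tokenizer, the stop-word list, the `fingerprint` and `matches_category` names, the sample application text, and the "at least two category terms" matching rule are all hypothetical.

```python
import re
from collections import Counter

# Hypothetical stop-word list; a real system would use a curated scientific thesaurus.
STOP_WORDS = {"the", "of", "and", "a", "an", "in", "to", "for", "with", "on", "we", "will", "this"}


def fingerprint(*sections: str) -> Counter:
    """Build a term-frequency 'fingerprint' from free-text sections
    (e.g., title, abstract, and specific aims)."""
    terms: Counter = Counter()
    for text in sections:
        # Lowercase words of 2+ characters that start with a letter; hyphens kept.
        for word in re.findall(r"[a-z][a-z0-9-]+", text.lower()):
            if word not in STOP_WORDS:
                terms[word] += 1
    return terms


def matches_category(fp: Counter, category_terms: set[str], min_hits: int = 2) -> bool:
    """Assign an application to a category when at least `min_hits` distinct
    category terms occur in its fingerprint (a hypothetical threshold rule)."""
    return sum(1 for term in category_terms if fp[term] > 0) >= min_hits


app = fingerprint(
    "Insulin signaling in pancreatic beta cells",
    "We study insulin secretion and beta-cell failure in type 2 diabetes.",
    "Aim 1: Measure insulin secretion in diabetic mouse islets.",
)
DIABETES = {"diabetes", "diabetic", "insulin", "islets"}
print(matches_category(app, DIABETES))  # True
```

Because the fingerprint is just a bag of term counts, the same function can be pointed at any text, which is what makes the later reuse for publications and people straightforward.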

NIH staff soon found it easy to construct digital fingerprints for other things, such as research products or even scientists, either by scanning the title and abstract of a public document (such as a research paper) or by combining all the terms found in the existing grant application fingerprints associated with a person.

NIH review staff can now match the digital fingerprints of peer reviewers to the fingerprints of the applications to be reviewed and ensure there is sufficient reviewer expertise. For NIH applicants, the RePORTER webpage provides the Matchmaker tool to create digital fingerprints of title, abstract, and specific aims sections, and match them to funded grant applications and the study sections in which they were reviewed. We advocate that all agencies work together to take the next logical step and use all the data at their disposal for deeper and broader analyses.
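To illustrate reviewer matching, here is a minimal sketch that compares fingerprints using cosine similarity. Cosine similarity over term-frequency vectors is a common, generic technique that we are assuming here; the text does not say which similarity measure NIH uses, and the function names and sample reviewer profiles are hypothetical.

```python
import math
import re
from collections import Counter


def fingerprint(text: str) -> Counter:
    """Term-frequency vector of a document (simplified: no stop-word removal)."""
    return Counter(re.findall(r"[a-z][a-z0-9-]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-frequency vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def rank_reviewers(application: str, reviewers: dict[str, str]) -> list[tuple[str, float]]:
    """Rank reviewers by how closely their fingerprint (built from, e.g., their
    prior abstracts) matches the application's fingerprint, best match first."""
    app_fp = fingerprint(application)
    scores = [(name, cosine(app_fp, fingerprint(text))) for name, text in reviewers.items()]
    return sorted(scores, key=lambda s: s[1], reverse=True)


reviewers = {
    "Reviewer A": "cardiac arrhythmia ion channels heart failure",
    "Reviewer B": "insulin secretion pancreatic islets diabetes",
}
ranking = rank_reviewers("insulin resistance and islet beta-cell function in diabetes", reviewers)
print(ranking[0][0])  # Reviewer B
```

In practice, an agency would also screen for conflicts of interest and set a minimum similarity score before treating a reviewer as having sufficient expertise; neither step is shown here.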

We offer five specific use cases below:

Use Case 1: Funder support. Federal staff could use AI analytics to identify areas of opportunity and support administrative pushes for funding.

When making a funding decision, agencies need to consider not only the absolute merit of an application but also how it complements the existing funded awards and agency goals. There are some common challenges in managing portfolios. One is that an underlying scientific question can be common to multiple problems that are addressed in different portfolios. For example, one protein may have a role in multiple organ systems. Staff are rarely aware of all the studies and methods related to that protein if their research portfolio is restricted to a single organ system or disease. Another challenge is to ensure proper distribution of investments across a research pipeline, so that science progresses efficiently. Tools that can rapidly and consistently contextualize applications across a variety of measures, including topic, methodology, agency priorities, etc., can identify underserved areas and support agencies in making final funding decisions. They can also help funders deliberately replicate some studies while reducing the risk of unintentional duplication.

Use Case 2: Reviewer support. Application reviewers could use AI analytics to understand how an application is similar to or different from currently funded federal research projects, providing reviewers with contextualization for the applications they are rating.

Reviewers are selected in part for their knowledge of the field, but when they compare applications with existing projects, they do so based on their subjective memory. AI tools can provide more objective, accurate, and consistent contextualization to ensure that the most promising ideas receive funding.

Use Case 3: Grant applicant support. Research funding applicants could be offered contextualization of their ideas among funded projects and failed applications in ways that protect the confidentiality of federal data.

NIH has already made admirable progress in this direction with their Matchmaker tool—one can enter many lines of text describing a proposal (such as an abstract), and the tool will provide lists of similar funded projects, with links to their abstracts. New AI tools can build on this model in two important ways. First, they can help provide summary text and visualization to guide the user to the most useful information. Second, they can broaden the contextual data being viewed. Currently, the results are only based on funded applications, making it impossible to tell if an idea is excluded from a funded portfolio because it is novel or because the agency consistently rejects it. Private sector attempts to analyze award information (e.g., Dimensions) are similarly limited by their inability to access full applications, including those that are not funded. AI tools could provide high-level summaries of failed or ‘in process’ grant applications that protect confidentiality but provide context about the likelihood of funding for an applicant’s project.

Use Case 4: Trend mapping. AI analyses could help everyone (scientists, biotech, pharma, investors) understand emerging funding trends in their innovation space in ways that protect the confidentiality of federal data.

The federal science agencies have made remarkable progress in making their funding decisions transparent, even to the point of offering lay summaries of funded awards. However, the sheer volume of individual awards makes summarizing these funding decisions a daunting task that will always be out of date by the time it is completed. Thoughtful application of AI could make practical, easy-to-digest summaries of U.S. federal grants in close to real time, and could help to identify areas of overlap, redundancy, and opportunity. By including projects that were unfunded, the public would get a sense of the direction in which federal funders are moving and where the government might be underinvested. This could herald a new era of transparency and effectiveness in science investment.

Use Case 5: Results prediction tools. Analytical AI tools could help everyone—scientists, biotech, pharma, investors—predict the topics and timing of future research results and neglected areas of science in ways that protect the confidentiality of federal data.

It is standard practice in pharmaceutical development to predict the timing of clinical trial results based on public information. This approach can work in other research areas, but it is labor-intensive. AI analytics could be applied at scale to specific scientific areas, such as predictions about the timing of results for materials being tested for solar cells or of new technologies in disease diagnosis. AI approaches are especially well suited to technologies that cross disciplines, such as applications of one health technology to multiple organ systems, or one material applied to multiple engineering applications. These models would be even richer if the negative cases—the unfunded research applications—were included in analyses in ways that protect the confidentiality of the failed application. Failed applications may signal where the science is struggling and where definitive results are less likely to appear, or where there are underinvested opportunities.

Plan of Action

Leadership

We recommend that OSTP oversee a multiagency development effort to achieve the overarching goal of fully subjecting grant applications to AI analysis to predict the future of science, enhance peer review, and encourage better research investment decisions by both the public and the private sector. The federal agencies involved should include all the member agencies of the NSTC. A broad array of stakeholders should be engaged because much of the AI expertise exists in the private sector, the data are owned and protected by the government, and the beneficiaries of the tools would be both public and private. We anticipate four stages to this effort.

Recommendation 1. Agency Development

Pilot: Each agency should develop pilots of one or more use cases to test and optimize training sets and output tools for each user group. We recommend this initial approach because each funding agency has different baseline capabilities to make application data available to AI tools and may also have different scientific considerations. Despite these differences, all federal science funding agencies have large archives of applications in digital formats, along with records of the publications and research data attributed to those awards.

These use cases are relatively new applications for AI and should be empirically tested before broad implementation. Trend mapping and predictive models can be built with a subset of historical data and validated with the remaining data. Decision support tools for funders, applicants, and reviewers need to be tested not only for their accuracy but also for their impact on users. Therefore, these decision support tools should be considered as a part of larger empirical efforts to improve the peer review process.
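The "build on a subset, validate on the rest" step can be illustrated with a temporal holdout. The sketch below is only an illustration under stated assumptions: the yearly topic counts are invented, and a straight-line trend stands in for whatever trend-mapping or predictive model an agency would actually pilot.

```python
# Hypothetical yearly counts of funded applications mentioning one topic.
# These numbers are invented for illustration; they are not agency data.
counts = {2015: 40, 2016: 46, 2017: 51, 2018: 58, 2019: 63, 2020: 70, 2021: 74, 2022: 81}


def fit_linear_trend(points: list[tuple[int, float]]) -> tuple[float, float]:
    """Ordinary least-squares fit of y = intercept + slope * x."""
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    sxx = sum((x - mean_x) ** 2 for x, _ in points)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in points)
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope


def temporal_holdout(data: dict[int, float], cutoff: int) -> float:
    """Train on years <= cutoff, then return the mean absolute error on later years."""
    train = [(yr, c) for yr, c in sorted(data.items()) if yr <= cutoff]
    test = [(yr, c) for yr, c in sorted(data.items()) if yr > cutoff]
    intercept, slope = fit_linear_trend(train)
    errors = [abs(c - (intercept + slope * yr)) for yr, c in test]
    return sum(errors) / len(errors)


mae = temporal_holdout(counts, cutoff=2019)
print(f"Mean absolute error on held-out years 2020-2022: {mae:.2f}")
```

A time-based split is used rather than a random one so that the model is never trained on years that come after the ones it is asked to predict; a random split would leak future information into training and overstate accuracy.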

Solidify source data: Agencies may need to enhance their data systems to support the new functions for full implementation. OSTP would need to coordinate the development of data standards to ensure all agencies can combine data sets for related fields of research. Agencies may need to make changes to the structure and processing of applications, such as ensuring that sections to be used by the AI are machine-readable.

Recommendation 2. Prizes and Public–Private Partnerships

OSTP should coordinate the convening of private sector organizations to develop a clear vision for the profound implications of opening funded and failed research award applications to AI, including predicting the topics and timing of future research outputs. How will this technology support innovation and more effective investments?

Research agencies should collaborate with private sector partners to sponsor prizes for developing the most useful and accurate tools and user interfaces for each use case refined through agency development work. Prize submissions could use test data drawn from existing full-text applications and the research outputs arising from those applications. Top candidates would be subject to standard selection criteria.

Conclusion

Research applications are an untapped and tremendously valuable resource. They describe work plans and are clearly linked to specific research products, many of which, like research articles, are already rigorously indexed and machine-readable. These applications are data that can be used for optimizing research funding decisions and for developing insight into future innovations. With these data and emerging AI technologies, we will be able to understand the trajectory of our science with unprecedented breadth and insight, perhaps even with the accuracy that human experts achieve when foreseeing changes within a narrow area of study. However, maximizing the benefit of this information is not inevitable, because the source data are currently closed to AI innovation. It will take vision and resources to build effectively from these closed systems; our federal science agencies have both, and with some leadership, they can realize the full potential of these applications.

This memo was produced as part of the Federation of American Scientists and Good Science Project sprint. Find more ideas at Good Science Project x FAS.

Improving Research Transparency and Efficiency through Mandatory Publication of Study Results

Scientists are incentivized to produce positive results that journals want to publish, which improves their chances of receiving more funding and of being hired or promoted. This hypercompetitive system encourages questionable research practices and limits disclosure of all research results. Meanwhile, the results of many funded research studies never see the light of day, and the absence of any written description of failed research leads to systemic waste, as others go down the same wrong path. The Office of Science and Technology Policy (OSTP) should mandate that all grants must lead to at least one of two outputs: 1) publication in a journal that accepts null results (e.g., Public Library of Science (PLOS) One, PeerJ, and F1000Research), or 2) public disclosure of the hypothesis, methodology, and results to the funding agency. Linking grants to results creates a more complete picture of what has been tried in any given field of research, improving transparency and reducing duplication of effort.

Challenge and Opportunity

There is ample evidence that null results are rarely published. Mandated publication would ensure all federal grants have outputs, whether hypotheses were supported or not, reducing repetition of ideas in future grant applications. More transparent scientific literature would expedite new breakthroughs and reduce wasted effort, money, and time across all scientific fields. Mandating that all recipients of federal research grants publish results would create transparency about what exactly is being done with public dollars and what the results of all studies were. It would also enable learning about which hypotheses/research programs are succeeding and which are not, as well as the clinical and pre-clinical study designs that are producing positive versus null findings.

Better knowledge of research results could be applied to myriad funding and research contexts. For example, an application for a grant could state that, in a previous grant, an experiment was not conducted because earlier experiments did not support it, or alternatively, that the experiment was conducted but produced a null result. In both scenarios, the outcome should be reported, either in a publication indexed in PubMed or as a disclosure to federal science funding agencies. In another context, an experiment might be funded across multiple labs, but only the labs that obtain positive results end up publishing. Mandatory publication would reveal how robust the result is across different laboratory contexts and nuances in study design, and why it was positive in some contexts and null in others.
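The multi-lab scenario can be illustrated with a small simulation. In this minimal sketch, every parameter (a true effect of 0.2, unit noise, 30 samples per lab, 200 labs) is an assumption chosen for illustration, not data from any study cited here, and "publication" is modeled crudely as passing a one-sided z-test at roughly p < 0.05. Averaging only the significant labs overstates the effect, while averaging every lab, as mandatory publication would allow, recovers it.

```python
import random
import statistics

def simulate_labs(n_labs=200, true_effect=0.2, noise_sd=1.0, n_per_lab=30, seed=1):
    """Simulate labs that each run the same experiment on a small true effect.

    Returns a list of (estimated_effect, is_significant) tuples, one per lab.
    All parameter values are illustrative assumptions.
    """
    rng = random.Random(seed)
    results = []
    for _ in range(n_labs):
        samples = [rng.gauss(true_effect, noise_sd) for _ in range(n_per_lab)]
        estimate = statistics.fmean(samples)
        std_err = statistics.stdev(samples) / n_per_lab ** 0.5
        # One-sided z-test at alpha = 0.05 (critical value ~1.645) = a "positive" result.
        is_significant = estimate / std_err > 1.645
        results.append((estimate, is_significant))
    return results

def summarize(results):
    """Mean effect across all labs vs. across 'published' (significant) labs only."""
    all_mean = statistics.fmean(est for est, _ in results)
    published = [est for est, sig in results if sig]
    published_mean = statistics.fmean(published) if published else None
    return all_mean, published_mean, len(published)

all_mean, published_mean, n_pub = summarize(simulate_labs())
print(f"all labs: {all_mean:.3f}; published only ({n_pub} labs): {published_mean:.3f}")
```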

Pressure to produce novel and statistically significant results often leads to questionable research practices, such as not reporting null results (a form of publication bias), p-hacking (a statistical practice where researchers manipulate analytical or experimental procedures to find significant results that support their hypothesis, even if the results are not meaningful), hypothesizing after results are known (HARKing), outcome switching (changes to outcome measures), and many others. The replication and reproducibility crisis in science presents a major challenge for the scientific community—questionable results undermine public trust in science and create tremendous waste as the scientific community slowly course-corrects for results that ultimately prove unreliable. Studies have shown that a substantial portion of published research findings cannot be replicated, raising concerns about the validity of the scientific evidence base.
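The inflation caused by measuring several outcomes and reporting whichever turns out significant (one form of p-hacking and outcome switching) can be checked with a short simulation. A minimal sketch, assuming k independent outcomes and a true null effect for all of them, so that each p-value is uniform on [0, 1]: the chance of at least one p < 0.05 is 1 - (1 - 0.05)^k, about 23% for five outcomes rather than the nominal 5%. Real outcome measures are usually correlated, which reduces the inflation but does not remove it.

```python
import random

def family_wise_rate(k, alpha=0.05):
    """Analytic chance of at least one p < alpha among k independent null outcomes."""
    return 1 - (1 - alpha) ** k

def simulate_outcome_switching(k, alpha=0.05, n_studies=100_000, seed=0):
    """Simulate null studies that measure k outcomes and report any significant one.

    Under a true null with a continuous test statistic, each p-value is uniform
    on [0, 1], so rng.random() stands in for running the actual test.
    """
    rng = random.Random(seed)
    hits = 0
    for _ in range(n_studies):
        smallest_p = min(rng.random() for _ in range(k))
        if smallest_p < alpha:
            hits += 1
    return hits / n_studies

for k in (1, 5, 10):
    print(k, round(family_wise_rate(k), 3), round(simulate_outcome_switching(k), 3))
```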

In preclinical research, one survey of 454 animal researchers estimated that 50% of animal experiments are not published, and that one of the most important causes of non-publication was a lack of statistical significance (“negative” findings). The prevalence of these issues in preclinical research undoubtedly plays a role in poor translation to the clinic as well as duplicative efforts. In clinical trials, a recent study found that 19.2% of cancer phase 3 randomized controlled trials (RCTs) had primary end point changes (i.e., outcome switching), and 70.3% of these did not report the changes in their resulting manuscripts. These changes had a statistically significant relationship with trial positivity, indicating that they may have been carried out to present positive results. Other work examining RCTs more broadly found one-third with clear inconsistencies between registered and published primary outcomes. Beyond outcome switching, many trials include “false” data. Among 526 trials submitted to the journal Anaesthesia from February 2017 to March 2020, 73 (14%) had false data, including “the duplication of figures, tables and other data from published work; the duplication of data in the rows and columns of spreadsheets; impossible values; and incorrect calculations.”

Mandatory publication for all grants would help change the incentives that drive the behavior in these examples by fundamentally altering the research and publication processes. At the conclusion of a study that obtained null results, this scientific knowledge would be publicly available to scientists, the public, and funders. All grant funding would have outputs. Scientists could not then repeatedly apply for grants based on failed previous experiments, and they would be less likely to receive funding for research projects that have already been tried, and failed, by others. The cumulative, self-correcting nature of science cannot be fully realized without transparency around what worked and what did not work.
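Once results are disclosed, reviewers or applicants could check a proposal against earlier outcomes before funding is decided. Below is a minimal sketch, assuming a hypothetical in-memory registry: `PriorResult`, `prior_attempts`, and the grant IDs are illustrative, not an existing agency system. Exact matching after normalization is deliberately naive; a real registry would need controlled vocabularies or semantic search to catch reworded hypotheses.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class PriorResult:
    grant_id: str     # hypothetical identifier, not a real agency format
    hypothesis: str
    outcome: str      # "supported", "null", or "not_conducted"

def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so trivially reworded entries still match."""
    return " ".join(text.lower().split())

def prior_attempts(registry, proposed_hypothesis):
    """Return earlier disclosed results whose hypothesis matches the proposal."""
    key = normalize(proposed_hypothesis)
    return [r for r in registry if normalize(r.hypothesis) == key]

registry = [
    PriorResult("G-001", "Compound X reduces tumor growth in mice", "null"),
    PriorResult("G-002", "Compound Y improves memory in rats", "supported"),
]
hits = prior_attempts(registry, "compound x  reduces tumor growth in MICE")
print([(r.grant_id, r.outcome) for r in hits])  # [('G-001', 'null')]
```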

Adopting mandatory publication of results from federally funded grants would also position the U.S. as a global leader in research integrity, alongside international initiatives such as the UK Reproducibility Network and the European Open Science Cloud, which promote similar reforms. By embracing mandatory publication, the U.S. would enhance its own research enterprise and set a standard for other nations to follow.

Plan of Action

Recommendation 1. The White House should issue a directive to federal research funding agencies that mandates public disclosure of research results from all federal grants, including null results, unless they reveal intellectual property or trade secrets. To ensure lasting reform to America’s research enterprise, Congress could pass a law requiring such disclosures.

Recommendation 2. The National Science and Technology Council (NSTC) should develop guidelines for agencies to implement mandatory reporting. Successful implementation requires that researchers be well informed and equipped to navigate this process. NSTC should coordinate with agencies to establish common guidelines that reduce confusion and create a uniform policy. In addition, agencies should create and disseminate detailed guidance documents that outline best practices for reporting null results, including step-by-step instructions on how to prepare and submit null studies to journals (which have differing guidelines) or to federal databases.
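To make such guidance concrete, here is a minimal sketch of a structured disclosure record covering the elements this memo names (hypothesis, methodology, results) and the intellectual property and trade-secret exemption in Recommendation 1. The field names, the `OutputRoute` values, and the validation rules are assumptions for illustration, not an existing agency schema.

```python
from dataclasses import dataclass
from enum import Enum
from typing import List, Optional

class OutputRoute(Enum):
    NULL_FRIENDLY_JOURNAL = "journal"   # e.g., PLOS One, PeerJ, F1000Research
    AGENCY_DISCLOSURE = "agency"        # public disclosure to the funding agency

@dataclass
class GrantOutput:
    grant_id: str
    route: OutputRoute
    hypothesis: str
    methodology: str
    results: str
    hypothesis_supported: Optional[bool]   # None when the study was inconclusive
    withheld_for_ip: bool = False          # hypothetical IP / trade-secret flag
    withholding_reason: str = ""

def validate(output: GrantOutput) -> List[str]:
    """Return a list of problems; an empty list means the record is complete."""
    problems = []
    for name in ("grant_id", "hypothesis", "methodology", "results"):
        if not getattr(output, name).strip():
            problems.append(f"missing {name}")
    if output.withheld_for_ip and not output.withholding_reason.strip():
        problems.append("IP exemption claimed without a stated reason")
    return problems

null_study = GrantOutput(
    grant_id="G-123",
    route=OutputRoute.AGENCY_DISCLOSURE,
    hypothesis="Drug A lowers biomarker B",
    methodology="Randomized, blinded, n=40 per arm",
    results="No difference between arms",
    hypothesis_supported=False,
)
print(validate(null_study))  # []
```

Note that a null result (`hypothesis_supported=False`) validates exactly like a positive one; only completeness is checked.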

Conclusion

Most published research is not replicated because the research system incentivizes the publication of novel, positive results. A tremendous amount of research goes unpublished because of null results, representing an enormous amount of wasted effort, money, and time, and compromising the progress and transparency of our scientific institutions. OSTP should mandate the publication of null results through existing agency authority and funding, and Congress should consider legislation to ensure its longevity.


It is well understood that most scientific findings cannot be taken at face value until they are replicated or reproduced. To make science more trustworthy, transparent, and replicable, we must change the incentives that lead researchers to publish only positive results. Publication of null results will accelerate the advancement of science.

Scientific discovery is often unplanned and serendipitous, but it is abundantly clear that we can reduce the amount of waste it currently generates. By mandating outputs for all grants, we build a cumulative record of research in which the results of all studies are known. That record lets us see why an experiment might be valid in one context but not another, and so assess the robustness of findings across experimental contexts and labs.

While many agencies prioritize hypothesis-driven research, even exploratory research will produce an output, and these outputs should be publicly available, either as an article or by public disclosure.

Studies that produce null results can still easily share data and code, which the community can evaluate after publication to see whether the code can be refactored, refined, and improved.

The “Cadillac” version of mandatory publication would be the registered reports model, where a study has its methodology peer reviewed before data are collected (Stage 1 Review). Authors are given in-principle acceptance, whereby, as long as the scientist follows the agreed-upon methodology, their study is guaranteed publication regardless of the results. When a study is completed, it is peer reviewed again (Stage 2 Review) simply to confirm the agreed-upon methodology was followed. In the absence of this registered reports model, we should at least mandate transparent publication via journals that publish null results, or via public federal disclosure.
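The two-stage registered reports workflow is essentially a small state machine. A minimal sketch, assuming hypothetical stage names and treating the protocol as a simple dictionary of agreed methods: Stage 2 compares what was done with what was agreed and deliberately ignores whether the hypothesis was supported. Real journals handle justified deviations editorially; routing them to a `DEVIATION_REVIEW` state is a simplification.

```python
from enum import Enum, auto

class Stage(Enum):
    STAGE1_PENDING = auto()
    IN_PRINCIPLE_ACCEPTED = auto()
    STAGE1_REJECTED = auto()
    DEVIATION_REVIEW = auto()   # simplification: deviations go back to editors
    PUBLISHED = auto()

class RegisteredReport:
    def __init__(self, protocol: dict):
        self.protocol = dict(protocol)   # methodology fixed before data collection
        self.stage = Stage.STAGE1_PENDING

    def stage1_review(self, methodology_sound: bool) -> None:
        """Peer review of the methodology before any data are collected."""
        if self.stage is not Stage.STAGE1_PENDING:
            raise ValueError("Stage 1 review already completed")
        self.stage = Stage.IN_PRINCIPLE_ACCEPTED if methodology_sound else Stage.STAGE1_REJECTED

    def stage2_review(self, methods_used: dict, hypothesis_supported: bool) -> None:
        """Confirm the agreed methodology was followed after the study is complete."""
        if self.stage is not Stage.IN_PRINCIPLE_ACCEPTED:
            raise ValueError("Stage 2 requires in-principle acceptance")
        # hypothesis_supported is intentionally ignored: only adherence to the
        # agreed protocol decides publication.
        if methods_used == self.protocol:
            self.stage = Stage.PUBLISHED
        else:
            self.stage = Stage.DEVIATION_REVIEW

agreed = {"n_per_arm": 40, "primary_outcome": "biomarker B"}
report = RegisteredReport(agreed)
report.stage1_review(methodology_sound=True)
report.stage2_review(dict(agreed), hypothesis_supported=False)   # a null result
print(report.stage)  # Stage.PUBLISHED
```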

Automating Scientific Discovery: A Research Agenda for Advancing Self-Driving Labs

Despite significant advances in scientific tools and methods, the traditional, labor-intensive model of scientific research in materials discovery has seen little innovation. The reliance on highly skilled but underpaid graduate students as labor to run experiments hinders the labor productivity of our scientific ecosystem. An emerging technology platform known as Self-Driving Labs (SDLs), which use commoditized robotics and artificial intelligence for automated experimentation, presents a potential solution to these challenges.

SDLs are not just theoretical constructs but have already been implemented at small scales in a few labs. An ARPA-E-funded Grand Challenge could drive funding, innovation, and development of SDLs, accelerating their integration into the scientific process. A Focused Research Organization (FRO) can also help create more modular and open-source components for SDLs and can be funded by philanthropies or the Department of Energy’s (DOE) new foundation. With additional funding, DOE national labs can also establish user facilities for scientists across the country to gain more experience working with autonomous scientific discovery platforms. In an era of strategic competition, funding emerging technology platforms like SDLs is all the more important to help the United States maintain its lead in materials innovation.

Challenge and Opportunity

New scientific ideas are critical for technological progress. These ideas often form the seed insight for new technologies: lighter, more energy-efficient cars; stronger submarines to support national security; and more efficient clean energy such as solar panels and offshore wind. While the past several centuries have seen incredible progress in scientific understanding, the fundamental labor structure of how we do science has not changed. Our microscopes have become far more sophisticated, yet the actual synthesis and testing of new materials is still laboriously done in university laboratories by highly knowledgeable graduate students. The lack of innovation in how we use scientific labor may account for the stagnation of research labor productivity, a primary cause of concerns about the slowing of scientific progress. Indeed, analysis of scientific literature suggests that scientific papers are becoming less disruptive over time and that new ideas are getting harder to find. The slowing rate of new scientific ideas, particularly in the discovery of new materials or advances in materials efficiency, poses a substantial risk, potentially costing billions of dollars in economic value and jeopardizing global competitiveness. However, incredible advances in artificial intelligence (AI), coupled with the rise of cheap but robust robot arms, are leading to a promising new paradigm of materials discovery and innovation: Self-Driving Labs. An SDL is a platform where material synthesis and characterization are done by robots, with AI models intelligently selecting new material designs to test based on previous experimental results. These platforms enable researchers to rapidly explore and optimize designs within search spaces that would otherwise be infeasibly large.
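The closed loop described above (propose a design, synthesize and characterize it, update, repeat) can be sketched as follows. This is a minimal illustration, assuming a hypothetical `run_experiment` function that stands in for robotic synthesis and characterization (here a toy two-parameter property with a known optimum at (0.3, 0.7)) and a deliberately simple selector that randomly perturbs the best design found so far. A real SDL would replace that selector with an AI model such as a Bayesian optimizer; the structure of the loop is the point.

```python
import random

def run_experiment(design, rng):
    """Hypothetical stand-in for robotic synthesis + characterization.

    Returns a noisy measurement of a toy property whose true optimum is (0.3, 0.7).
    """
    x, y = design
    true_value = -((x - 0.3) ** 2 + (y - 0.7) ** 2)
    return true_value + rng.gauss(0, 1e-4)   # small measurement noise

def propose_next(history, rng, step=0.1):
    """Model-free selector: randomly perturb the best design measured so far."""
    best_design, _ = max(history, key=lambda record: record[1])
    # Clamp each parameter to the allowed range [0, 1].
    return tuple(min(1.0, max(0.0, v + rng.gauss(0, step))) for v in best_design)

def closed_loop(budget=200, seed=0):
    rng = random.Random(seed)
    first = (rng.random(), rng.random())
    history = [(first, run_experiment(first, rng))]
    while len(history) < budget:
        design = propose_next(history, rng)
        # Every run is logged, including ones that did not improve on the best.
        history.append((design, run_experiment(design, rng)))
    best_design, best_value = max(history, key=lambda record: record[1])
    return best_design, best_value, history

best_design, best_value, history = closed_loop()
print(len(history), [round(v, 2) for v in best_design])
```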

Today, most materials science labs are organized around a faculty member or principal investigator (PI), who manages a team of graduate students. Each graduate student designs experiments and hypotheses in collaboration with the PI, and then executes the experiment, synthesizing the material and characterizing its properties. Unfortunately, that last step is often the most laborious and time-consuming. This sequential approach to materials discovery, in which highly knowledgeable graduate students spend large portions of their time doing manual wet lab work, limits the number of experiments, and therefore potential discoveries, that a given lab group can pursue. SDLs can significantly improve the labor productivity of our scientific enterprise, freeing highly skilled graduate students from menial experimental labor to craft new theories or distill novel insights from autonomously collected data. Additionally, they yield more reproducible outcomes because experiments are run by code-driven motors rather than by humans, who may forget to record certain experimental details or vary naturally from one procedure to the next.
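One reason code-driven experiments are more reproducible is that the full procedure exists as data rather than as a lab-notebook description. A minimal sketch, assuming a hypothetical `SynthesisRecipe` record with made-up parameter names and units: serializing every parameter canonically and hashing it lets a later run show that it used exactly the same recipe, and any changed parameter produces a different fingerprint.

```python
import hashlib
import json
from dataclasses import asdict, dataclass

@dataclass(frozen=True)
class SynthesisRecipe:
    # Hypothetical parameters; a real platform would define its own schema.
    precursor_a_ml: float
    precursor_b_ml: float
    anneal_temp_c: float
    anneal_minutes: int
    stir_rpm: int

def fingerprint(recipe: SynthesisRecipe) -> str:
    """Stable SHA-256 hash of every parameter (keys sorted for a canonical form)."""
    canonical = json.dumps(asdict(recipe), sort_keys=True)
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()

def load(text: str) -> SynthesisRecipe:
    """Rebuild a recipe from its saved JSON form."""
    return SynthesisRecipe(**json.loads(text))

original = SynthesisRecipe(1.5, 0.5, 350.0, 30, 600)
saved = json.dumps(asdict(original), sort_keys=True)   # what the robot logs
rerun = load(saved)
print(fingerprint(rerun) == fingerprint(original), fingerprint(original)[:12])
```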

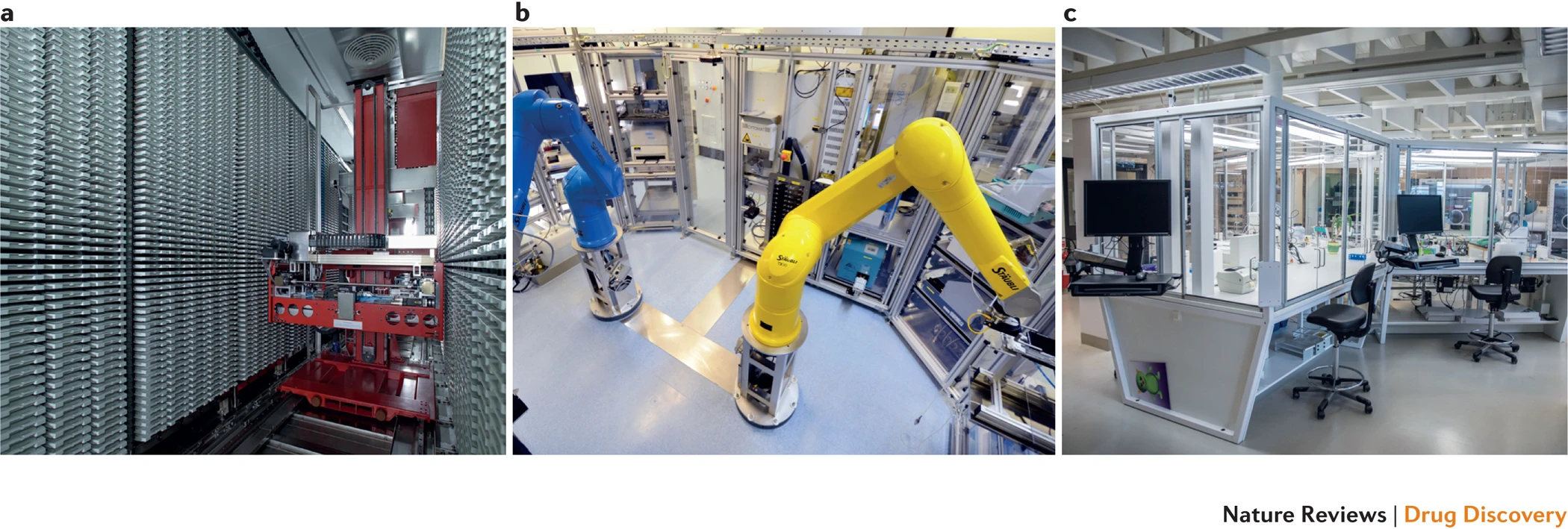

Self-Driving Labs are not a pipe dream. The biotech industry has spent decades developing advanced high-throughput synthesis and automation. For instance, while in the 1970s statins (one of the most successful cholesterol-lowering drug families) were discovered in part by a researcher testing 3,800 cultures manually over a year, today companies like AstraZeneca invest millions of dollars in automation and high-throughput research equipment (see figure 1). While drug and materials discovery share some characteristics (e.g., combinatorially large search spaces and high impact of discovery), materials R&D has historically seen fewer capital investments in automation, primarily because it sits further upstream from where private investors anticipate predictable returns. There are, however, a few notable examples of SDLs being developed today. For instance, researchers at Boston University used a robot arm to test 3D-printed designs for uniaxial compression energy absorption, an important mechanical property for designing stronger structures in civil engineering and aerospace. A Bayesian optimizer was then used to iterate over 25,000 designs in a search space with trillions of possible candidates, which led to an optimized structure with the highest recorded mechanical energy absorption to date. Researchers at North Carolina State University used a microfluidic platform to autonomously synthesize more than 100 quantum dots, discovering formulations that were better than the previous state of the art in that material family.
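The Boston University example relied on a Bayesian optimizer. As an illustration of that general technique (not the researchers' actual code), here is a minimal one-dimensional sketch: a Gaussian-process surrogate with a radial basis function kernel, plus an upper-confidence-bound (UCB) acquisition rule that picks which untested candidate to "synthesize" next. The objective `hypothetical_property`, the kernel length scale, the candidate grid, and the budget are all assumptions; real campaigns search many dimensions and typically use an established library rather than a hand-written solver.

```python
import math

def rbf(a, b, lengthscale=0.15):
    """Squared-exponential (RBF) kernel between two 1-D inputs."""
    return math.exp(-((a - b) ** 2) / (2 * lengthscale ** 2))

def cholesky(m):
    """Lower-triangular L with L @ L.T == m, for a symmetric positive-definite m."""
    n = len(m)
    L = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = sum(L[i][k] * L[j][k] for k in range(j))
            if i == j:
                L[i][j] = math.sqrt(m[i][i] - s)
            else:
                L[i][j] = (m[i][j] - s) / L[j][j]
    return L

def solve_lower(L, b):
    """Forward substitution: solve L z = b."""
    z = []
    for i in range(len(b)):
        z.append((b[i] - sum(L[i][k] * z[k] for k in range(i))) / L[i][i])
    return z

def solve_upper_t(L, z):
    """Back substitution: solve L.T x = z."""
    n = len(z)
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (z[i] - sum(L[k][i] * x[k] for k in range(i + 1, n))) / L[i][i]
    return x

class GP:
    """Zero-mean Gaussian-process regression with a small noise/jitter term."""
    def __init__(self, xs, ys, noise=1e-5):
        self.xs = list(xs)
        K = [[rbf(a, b) + (noise if i == j else 0.0) for j, b in enumerate(self.xs)]
             for i, a in enumerate(self.xs)]
        self.L = cholesky(K)
        self.alpha = solve_upper_t(self.L, solve_lower(self.L, list(ys)))  # K^-1 y

    def predict(self, x):
        """Posterior mean and variance at x."""
        k_star = [rbf(x, xi) for xi in self.xs]
        mean = sum(k * a for k, a in zip(k_star, self.alpha))
        v = solve_lower(self.L, k_star)
        var = max(rbf(x, x) - sum(vi * vi for vi in v), 0.0)
        return mean, var

def hypothetical_property(x):
    """Toy objective: local peak near x = 0.2, global peak at x = 0.75."""
    return 0.6 * math.exp(-((x - 0.2) ** 2) / 0.02) + math.exp(-((x - 0.75) ** 2) / 0.02)

def bayes_opt(objective, budget=25, beta=2.0):
    candidates = [i / 100 for i in range(101)]
    xs = [0.0, 0.5, 1.0]                  # initial designs
    ys = [objective(x) for x in xs]
    while len(xs) < budget:
        gp = GP(xs, ys)
        untested = [c for c in candidates if c not in xs]

        def ucb(c):
            mean, var = gp.predict(c)
            return mean + beta * math.sqrt(var)   # exploit (mean) + explore (uncertainty)

        nxt = max(untested, key=ucb)
        xs.append(nxt)
        ys.append(objective(nxt))
    best = max(range(len(xs)), key=lambda i: ys[i])
    return xs[best], ys[best], xs, ys

best_x, best_y, xs, ys = bayes_opt(hypothetical_property)
print(f"best x = {best_x:.2f}, value = {best_y:.3f} after {len(xs)} experiments")
```

With 25 evaluations out of 101 candidates, the uncertainty term first spreads experiments across the range, and the loop then concentrates near the global peak around 0.75 rather than settling on the smaller peak near 0.2.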

These first-of-a-kind SDLs have shown exciting initial results, demonstrating their ability to find new material designs in a haystack of thousands to trillions of possible designs, a space far too large for any human researcher to search. However, SDLs are still an emerging technology platform. To scale up and realize their full potential, the federal government will need to make significant and coordinated research investments to derisk this materials innovation platform and demonstrate the return on capital before the private sector is willing to invest.

Other nations are beginning to recognize the importance of a structured approach to funding SDLs: University of Toronto’s Alan Aspuru-Guzik, a former Harvard professor who left the United States in 2018, has created an Acceleration Consortium to deploy these SDLs and recently received $200 million in research funding, Canada’s largest ever research grant. In an era of strategic competition and climate challenges, maintaining U.S. competitiveness in materials innovation is more important than ever. Building a strong research program to fund, build, and deploy SDLs in research labs should be a part of the U.S. innovation portfolio.

Plan of Action

While several labs in the United States are working on SDLs, they have all received small, ad hoc grants that are not coordinated in any way. A federal government funding program dedicated to self-driving labs does not currently exist. As a result, existing SDLs are constrained to low-hanging-fruit material systems (e.g., microfluidics), and the lack of patient capital hinders labs’ ability to scale these systems and realize their true potential. A coordinated U.S. research program for Self-Driving Labs should:

Initiate an ARPA-E SDL Grand Challenge: Drawing inspiration from DARPA’s previous grand challenges that catalyzed advancements in self-driving vehicles, ARPA-E should establish a Grand Challenge to catalyze state-of-the-art advancements in SDLs for scientific research. This challenge would involve an open call for teams to submit proposals for SDL projects, with a transparent set of performance metrics and benchmarks. Successful applicants would then receive funding to develop SDLs that demonstrate breakthroughs in automated scientific research. A projected budget for this initiative is $30 million¹, divided among six selected teams, each receiving $5 million over a four-year period to build and validate their SDL concepts. While ARPA-E is best positioned in terms of authority and funding flexibility, other institutions such as the National Science Foundation (NSF) or DARPA itself could also fund similar programs.

Establish a Focused Research Organization to open-source SDL components: This FRO would be responsible for developing modular, open-source hardware and software specifically designed for SDL applications. Creating common standards for both the hardware and software needed for SDLs will make such technology more accessible and encourage wider adoption. The FRO would also study how automation via SDLs is likely to reshape labor roles within scientific research and provide best practices for incorporating SDLs into scientific workflows. A proposed operational timeframe for this organization is five years, with an estimated budget of $18 million over that period. The organization would prototype SDL-specific hardware solutions and make them available on an open-source basis to foster wider community participation and iterative improvement. An FRO could be spun out of the DOE’s new Foundation for Energy Security and Innovation (FESI), which would further establish the DOE’s role as an innovative science funder and be an exciting opportunity for FESI to work with nontraditional technical organizations. Using FESI would not require any new authorities and could leverage philanthropic funding, rather than requiring congressional appropriations.
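To illustrate what common software standards for SDLs might look like, here is a minimal sketch using Python's `typing.Protocol` to define hypothetical `Synthesizer` and `Characterizer` interfaces. The interface names, the mock devices, and the spectrometer's response model are all assumptions; the point is that orchestration code written against shared interfaces can swap one lab's hardware driver for another's without changes.

```python
from dataclasses import dataclass
from typing import Dict, Protocol, Tuple

@dataclass(frozen=True)
class Sample:
    sample_id: str
    recipe: Dict[str, float]

class Synthesizer(Protocol):
    def synthesize(self, recipe: Dict[str, float]) -> Sample: ...

class Characterizer(Protocol):
    def measure(self, sample: Sample) -> Dict[str, float]: ...

class MockDispenser:
    """Hypothetical simulated synthesizer; a real driver would command hardware."""
    def __init__(self) -> None:
        self._count = 0

    def synthesize(self, recipe: Dict[str, float]) -> Sample:
        self._count += 1
        return Sample(f"S{self._count:04d}", dict(recipe))

class MockSpectrometer:
    """Hypothetical simulated characterizer with a made-up response model."""
    def measure(self, sample: Sample) -> Dict[str, float]:
        total = sum(sample.recipe.values())
        return {"peak_wavelength_nm": 400.0 + 10.0 * total}

def run_step(synth: Synthesizer, char: Characterizer,
             recipe: Dict[str, float]) -> Tuple[Sample, Dict[str, float]]:
    """Orchestration code depends only on the interfaces, not on vendor drivers."""
    sample = synth.synthesize(recipe)
    return sample, char.measure(sample)

sample, reading = run_step(MockDispenser(), MockSpectrometer(), {"A": 1.0, "B": 2.0})
print(sample.sample_id, reading)  # S0001 {'peak_wavelength_nm': 430.0}
```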

Provide dedicated funding for the DOE national labs to build self-driving lab user facilities, so the United States can build institutional expertise in SDL operations and other U.S. scientists can familiarize themselves with these platforms. This funding can be specifically set aside by the DOE Office of Science or provided through line-item appropriations from Congress. Existing prototype SDLs that have emerged in the past several years, like the Argonne National Lab Rapid Prototyping Lab or Berkeley Lab’s A-Lab, lack sustained DOE funding but could be scaled up and supported with only $50 million in total funding over the next five years. SDLs are also one of the primary applications identified by the national labs in the “AI for Science, Energy, and Security” report, demonstrating the labs’ willingness to build out this infrastructure and underscoring the strategic importance the scientific research community places on SDLs.

As with any new laboratory technique, SDLs are not necessarily an appropriate tool for everything. Given that their main benefit lies in automation and the ability to rapidly iterate through designs experimentally, SDLs are likely best suited for:

- Material families with combinatorially large design spaces that lack clear design theories or numerical models (e.g., metal organic frameworks, perovskites)

- Experiments where synthesis and characterization are relatively quick or cheap and are amenable to automated handling (e.g., UV-vis spectroscopy is a relatively simple, in-situ characterization technique)

- Scientific fields where numerical models are not accurate enough to use for training surrogate models or where there is a lack of experimental data repositories (e.g., the challenges of using density functional theory in material science as a reliable surrogate model)

While these heuristics are suggested as guidelines, it will take a full-fledged program with actual results to determine which systems are most amenable to SDL disruption.

When it comes to exciting new technologies, there can be incentives to misuse terms. Self-Driving Labs can be precisely defined as the automation of both material synthesis and characterization that includes some degree of intelligent, automated decision-making in-the-loop. Based on this definition, here are common classes of experiments that are not SDLs:

- High-throughput synthesis, where synthesis automation allows for the rapid synthesis of many different material formulations in parallel (lacks characterization and AI-in-the-loop)

- Using AI as a surrogate trained over numerical models, which is based on software-only results. Using an AI surrogate model to make material predictions and then synthesizing an optimal material is also not an SDL, though certainly still quite the accomplishment for AI in science (lacks discovery of synthesis procedures and requires numerical models or prior existing data, neither of which is always readily available in materials science).
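The definition above amounts to a three-part test. A minimal sketch, assuming a hypothetical `ExperimentSetup` record with one flag per requirement, shows how the excluded classes of experiments fail it and which component each one lacks.

```python
from dataclasses import dataclass
from typing import List

@dataclass(frozen=True)
class ExperimentSetup:
    automated_synthesis: bool
    automated_characterization: bool
    ai_selects_next_experiment: bool   # intelligent, automated decision-making in the loop

def missing_sdl_components(setup: ExperimentSetup) -> List[str]:
    """List the parts of the SDL definition that a setup lacks."""
    missing = []
    if not setup.automated_synthesis:
        missing.append("automated synthesis")
    if not setup.automated_characterization:
        missing.append("automated characterization")
    if not setup.ai_selects_next_experiment:
        missing.append("AI decision-making in the loop")
    return missing

def is_self_driving_lab(setup: ExperimentSetup) -> bool:
    return not missing_sdl_components(setup)

high_throughput = ExperimentSetup(True, False, False)   # parallel synthesis only
surrogate_only = ExperimentSetup(False, False, True)    # AI predictions, no automated lab work
sdl = ExperimentSetup(True, True, True)
for name, s in [("high-throughput", high_throughput), ("surrogate-only", surrogate_only), ("SDL", sdl)]:
    print(name, is_self_driving_lab(s), missing_sdl_components(s))
```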

SDLs, like many technologies adopted before them, eliminate routine tasks that scientists must currently spend their time on. They will allow scientists to spend more time understanding scientific data, validating theories, and developing models for further experiments. They can automate routine tasks, but not the job of being a scientist.

However, because SDLs require more firmware and software, they may favor larger facilities that can maintain long-term technicians and engineers who maintain and customize SDL platforms for various applications. An FRO could help address this asymmetry by developing open-source and modular software that smaller labs can adopt more easily upfront.