A Generational Model for Inclusive Clusters in St. Louis’ Tech Triangle

The St. Louis Tech Triangle coalition will receive approximately $25 million to support the region’s advanced manufacturing cluster – which will support and grow the existing biosciences and geospatial clusters. One of their primary projects is the Advanced Manufacturing Innovation Center (AMIC), which will be the hub of an inclusive ecosystem and bring investment to a historically excluded area of STL.

Tracy Henke is the Chief Policy Officer/President of ChamberSTL with Greater St. Louis Inc., lead of the region’s Build Back Better Coalition. GSL was founded on January 1, 2021 as a result of combining the strengths of five legacy civic organizations into one with a unified focus around a common vision and strategy for fostering inclusive economic growth. Ben Johnson is Senior Vice President, Programs at BioSTL. Since 2001, BioSTL has laid the foundation for St. Louis’ biosciences innovation economy with a comprehensive set of transformational programs leveraging the region’s medical and plant science strengths.

This interview is part of an FAS series on Unleashing Regional Innovation where we talk to leaders building the next wave of innovative clusters and ecosystems in communities across the United States. We aim to spotlight their work and help other communities learn by example. Our first round of interviews is with finalists of the Build Back Better Regional Challenge run by the Economic Development Administration as part of the American Rescue Plan.

Ryan Buscaglia: Could you tell us how your coalition came together and the history of your partnership between organizations? Were you working on elements of this project before the EDA even announced their process?

Tracy Henke: The whole concept for the hub of our proposal originated back in 2015. Some workforce training and items related to innovation and entrepreneurship were already going on. But they were going on separately. The St. Louis Tech Triangle and the partners came together through identifying synergies that existed that could be built upon and leveraged. This [Build Back Better Regional Challenge] was an opportunity to identify those synergies and bring them together more cohesively with the support of federal funding to accelerate that work to make certain that we could move together instead of in isolation.

So, Ben, on the Bio side that you work on, had you been working on individual projects related to advancing the bioeconomy in St. Louis, which maybe hadn’t had as much connection to the other two legs of the triangle before this partnership came up?

Ben Johnson: I would echo Tracy in that this was an opportunity to grow existing, and find new, synergies across the industry cluster. For the biosciences, over 20 years in St. Louis, we’ve been working as a coalition of academic institutions, corporate partners, entrepreneurs, support organizations, philanthropy and civic organizations, to build our bioscience economy. With the success of that collaboration in building innovation infrastructure, we now feel like we’re just at the starting line of realizing the economic potential in some respects. These were existing partnerships and projects and collaborations that were in the works. In some ways, Tracy and her organization gave us a bit of a framework to knit that together with other emerging clusters in a way that some of the other clusters hadn’t really been positioned to organize in the past. I think a critical piece there is that we didn’t come together exclusively for the purposes of ‘here’s Build Back Better, let’s react to it.’ We had a foundation, we had coalition partnerships. We were able to come together around an organized thesis that really drives inclusive growth for the region. From there, projects knit together well. It wasn’t like, ‘Okay, here’s the NOFO. Let’s figure out what exists in the world.’ There was an existing framework for that discussion, including the STL 2030 Jobs Plan, stewarded through Tracy and Greater St. Louis Inc.

Could you talk about why you decided to focus on three distinct fields (advanced manufacturing, geospatial, and biosciences) in your project?

Tracy: Understand that while it is the St. Louis Tech Triangle, the driver of our submission is advanced manufacturing. Advanced manufacturing supports biosciences, it supports geospatial and it leverages the history and existing strength of our region. Metro St. Louis has always been a leader in manufacturing and so that question, ‘why focus on all three?’ It’s a focus on advanced manufacturing that supports our biosciences, energy and our geospatial, and more. Also, we call it the tech triangle because when you look at an overview map of the region, you can literally create a triangle that links the placemaking hubs of the three clusters.

Growing the advanced manufacturing cluster supports our existing bioscience and geospatial activities, but also helps further grow, for instance, our aerospace and other opportunities that might exist, and that’s leveraging our past and present history in the region.

With all of these different projects ongoing, which coalition actually took the lead on writing and submitting the application?

Tracy: Greater St. Louis, Inc. did. Why was it Greater St. Louis? Partially because we’re not an implementer of any of these programs in particular. We are a convener, we are an entity that works with all of them. Hopefully they all see GSL as a partner in this effort. And because you can’t have 20 writers, it doesn’t work well. If you have 20 people doing these interviews and you try to put it together in one cohesive document, you have 20 different voices. And so for the overarching narrative it needs to be in one voice to make sense for those reviewing. We shared it, and we ensured that partners offered feedback. We incorporated as able, but of course, had the limitations put on us by EDA on page numbers, numerous requirements, etc. Individual partners wrote their individual submissions; it doesn’t mean partners didn’t provide edits and guidance, and I did as well to ensure linkage with the overarching narrative, but they all wrote their own. We met on a regular basis, but there always has to be one person with the pen.

I’m glad you took the pen for St. Louis! Could you talk a bit about the Advanced Manufacturing Innovation Center (AMIC-STL) and why that is the hub of this proposal?

Tracy: The Advanced Manufacturing Innovation Center, which is referred to as AMIC-STL, was identified in 2015. The St. Louis Economic Development Partnership is a partner in our specific submission, not just the overarching narrative, but our specific submission on cluster growth. They received a grant back in 2014, I believe, when it looked like there was going to be significant downsizing in the aerospace industry here in the St. Louis metro, specifically Boeing. And so that’s when a defense readjustment grant was provided to help the region think about what does this region need to do, how do all those people get trained for other employment, and they looked at once again, this region’s strength in manufacturing.

AMIC-STL is modeled off of the AMRC in Sheffield, England. The Advanced Manufacturing Research Center in Sheffield, England, was initially a Boeing-funded entity. There are other manufacturing centers in Europe, I believe there’s also one in Japan now, they’re all a little different. But in our opinion, and from what we have studied, there isn’t anything like what we’re talking about here in the United States. The Advanced Manufacturing Innovation Center will be an opportunity to do the research and development. It will be an opportunity to do prototyping and small batch manufacturing, but also an opportunity to create what we call verticals in different spaces. So an aerospace vertical, a bioscience vertical, an energy storage vertical. Something that brings not just a prime entity like, hypothetically, aerospace and Boeing, but then all their other suppliers, all the entities that feed into Boeing and what Boeing does and the research that goes along with it. By co-locating, it allows cross-pollination of ideas and also cross-pollination of how to use things in other ways.

So something that might be used as relates to technology on a drone to look under the canopy of trees might have some technology applicable to the healthcare space. Or researchers might be able to take something that they learned from the development of a specific technology and apply it in a completely different field. Having these researchers and developers and the ability to prototype etc, allows for cross pollination of ideas and that growth, which then spurs additional development and additional growth.

If you look at what happened at AMRC in Sheffield, they started with a handful of R&D individuals; they now have over 500. And they now have over 400 units of housing built. They started with one building; they now have multiple buildings.

Ben: Another point I think that was critical, particularly for our regional strategy and commitments but also for Build Back Better and EDA, is the physical geographic location in a significantly disinvested, historically excluded community. It really gives an opportunity to bring some of this new manufacturing innovation, bridging the biosciences and geospatial, as sort of a beachhead into a neighborhood and community where that type of investment has not occurred in a significant number of years.

Tracy: When you look at the history of St. Louis, and you look at how communities were built up, a lot of them were built up within the urban core around manufacturing. Unfortunately, we lost a lot of that and then saw out-flight. Even early in 2015-2016 with the conversation about the establishment of this Advanced Manufacturing Innovation Center, they talked then about how its location should be in a historically disinvested community. And so this is a strategic location in the heart of the city where the land currently exists, right next to a technical school and close to multiple education institutions as well that can help on the workforce side, strategically located near NGA West, near our Cortex and biosciences partners, as well as surrounded by other workforce partners.

Recognizing that this is a tough challenge, how are you making sure that AMIC-STL has a constructive and growth minded and equitable result for the community rather than continuing a trend of either pushing people out of homes / businesses or making it difficult for existing residents and existing community members to stay there?

Tracy: Almost every week GSL brings our AMIC-STL partner and our city partners and our neighborhood partners where AMIC-STL will be located to the table.

Once again, this is purposeful on its location. There is nothing currently on the site. Most of the surrounding community, quite honestly, has suffered from disinvestment. There are vacant homes and boarded up buildings because of the out-flight and more. And so we are working to ensure AMIC-STL, being that centerpiece, sort of like AMRC in Sheffield, will create this domino effect. But to do that, we also have to make sure that partners are at the table. In addition, we must have community engagement and will use BBBRC funding to help with this. We’re also drafting an RFP on establishing the baseline of metrics as it relates to the manufacturing ecosystem in the region, as well as the spokes of that and how far they reach.

Also, our workforce partners are looking at what gaps exist in workforce training, and not creating new workforce programs necessarily, but how do our existing workforce entities fill those gaps. We’re looking at this holistically. We’re not just looking at building a building. I think that’s the important thing.

How will St. Louis look different in ten years if you’re successful in doing what you proposed?

Tracy: In ten years: AMIC is built and the dominoes have started resulting in growth. Our workforce training, our innovation entrepreneurship, our community engagement, our cluster growth is working. Can I say this is going to be overnight? No, I cannot. Is this going to be easy? No, it is not. In ten years is it our hope that we’ve seen progress? Yes. Can we say that it is our hope to continue seeing AMIC growing with verticals with benefits to different areas and lines? Yes. We are doing this deliberately and with intention to ensure a higher probability of success.

Ben: Ten years from now, I believe what we are building through Build Back Better and the collaboration behind it, with AMIC as its embodiment, will be a longitudinal and generational model, even if it is a model still in progress. It will be a model for how we do intentional, inclusive economic growth with increased permeability between innovation districts and underserved neighborhoods. A new model for STL and beyond for lessons learned and successes. In ten years, we will still be getting better and continuously improving in that work, but AMIC will be a significant milepost in that journey of St. Louis doing things differently.

(Mathematically) Predicting the Future with Professional Forecaster Philipp Schoenegger

Right now, working alongside the Federation of American Scientists, the forecasting platform Metaculus is hosting its first ever Climate Tipping Points Tournament. The aim is to show just how powerful a tool forecasting can be for policymakers trying to effect change.

Part of what makes the Metaculus forecasting community exciting is that anyone with even a passing interest in data science can jump in and begin making predictions; the wisdom of the growing crowd of forecasters helps make the platform more and more accurate over time.

Metaculus has recruited about two dozen of the very best forecasters in its community to become Pro Forecasters. To become a Pro for Metaculus, a forecaster has to have scores in the top 2% of all Metaculus users. The Metaculus scoring system is complex, but for the mathematically-disinclined, you earn points on accuracy, how your prediction compares to those of the rest of the Metaculus community, and how early you make your predictions (in relation to the resolution of the question). Pro Forecasters also have experience forecasting for at least a year, and have a history of providing commentary explaining their methodology.

Philipp Schoenegger became one of the first members of the pro-forecasting team last year – and says he’s been forecasting for about a year and a half in total.

“Right now I have about 6,000 forecasts on the Metaculus platform,” he says. “I think what goes into forecasting questions, time-wise, can vary widely between professional forecasting and hobbyist forecasting.”

Schoenegger, who recently defended his PhD thesis in behavioral economics and experimental philosophy at the University of St. Andrews, says over the past couple of years he found himself spending what he calls an “excessive” amount of time on forecasting – at times to the detriment of his studies or other work. Luckily, the people at Metaculus recognized his talent, and invited him to join the pro forecasting team for its Forecasting Our World in Data project.

Schoenegger says part of what he enjoys about forecasting on the pro team is the incentive to explain and share methodology. In public tournaments, he says, there’s always a tension between collaboration with the community – and winning.

“The cool thing about the professional forecasting tournaments is the sharing of information and writing out rationale is part of what we are incentivized to do. I think that’s really fun,” he says. He also loves reading about how other pro forecasters think. “I find that very useful and helpful, especially when they do things very differently from me. The Pro Forecasters vary widely in our backgrounds and approaches. Some people are 50-plus and have a long history of doing this professionally. Other people are just out of their PhDs. Some people forecast just for Metaculus, but also have another very unrelated job.”

But how does he do things? How does he feel confident offering up predictions on everything from when Queen Elizabeth II’s reign would end, to whether a major nuclear power plant will be operational in Germany by this summer?

“The way I do it is with just very basic simple models – but those models are never more than 50% of what my actual forecast looks like,” he says. The other 50%, he says, is all the background research and extra information he can find on the given subject – additional factors that could influence an outcome. “The very simple models then take a backseat to the background reading, and understanding certain types of risks.”

He returns to the example of Queen Elizabeth II. If you were trying to predict when her reign would end [the queen died in September of 2022, but Metaculus first posed its question in January of 2020], Schoenegger says you would start with base rates on basic questions. “How often do British monarchs abdicate? Or I think the most basic question would be, ‘What’s average life expectancy?’ And looking at her age you might say there’s a 20% chance she dies each year after 2018. But then you might look at her track record of very good health, and update your numbers. And then you might read some article or argument in the other direction, and update depending on how credible you think it is.”
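For readers who want to try the arithmetic behind that kind of reasoning, here is a minimal Python sketch. It is not Schoenegger's or Metaculus' actual method. It assumes, purely for illustration, that the 20% annual figure he mentions stays constant from year to year, and it treats new evidence as a hypothetical likelihood ratio applied in odds form. The function names and the 0.5 ratio are invented for this example.

```python
def prob_by_year(annual_rate: float, years: int) -> float:
    """Chance the event has happened within `years` years,
    assuming the same annual rate every year (a simplification)."""
    return 1 - (1 - annual_rate) ** years


def update_odds(prob: float, likelihood_ratio: float) -> float:
    """Bayesian update in odds form.
    A likelihood_ratio below 1 lowers the probability, above 1 raises it."""
    odds = prob / (1 - prob)
    new_odds = odds * likelihood_ratio
    return new_odds / (1 + new_odds)


base_rate = 0.20  # the illustrative annual figure from the interview

# Cumulative chance after 1, 2, 3 and 5 years at a constant base rate.
for n in (1, 2, 3, 5):
    print(n, round(prob_by_year(base_rate, n), 3))

# Hypothetical evidence: a record of good health, judged here to be
# half as likely if the event were going to happen this year.
adjusted = update_odds(base_rate, 0.5)
print("after update:", round(adjusted, 3))
```

Each further article or argument would be another call to `update_odds`, with a ratio closer to 1 the less credible the source seems.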

For the Climate Tipping Points tournament, some of the questions center on the availability of certain resources for electric vehicle technology. “It has to do with resources, and scarcity, and when those might be alleviated. The way I approached this was to just try to read a bunch of different [articles and reports] that try to look at those specific sub-questions – like precious metals or lithium supply, or stuff like that.”

And while sharing his methodology is part of the job now – at the outset of a question or tournament, Schoenegger says he guards against his forecasts being influenced by others in the Metaculus forecasting community. “On this tournament, I made an effort to be done with all my work on day one. I wanted to make sure that I get all my numbers into the system first, so I’m not then swayed by other people.”

Though Schoenegger obviously has a good track record as a forecaster now – he’s also very open to the possibility that things could change.

“I think the honest answer – and there are other people and forecasters who might give different, more optimistic answers – but I just think we don’t know yet if my track record over the past 18 months is actually at all predictive of me actually being better [than the average forecaster] over the next 40 years. I honestly think we don’t know yet,” he says.

But that uncertainty doesn’t mean Schoenegger is shy about getting more people to try their hands at predicting the future in an evidence-based way.

He says he’s twisted the figurative arms of ‘half of my family and most of my friends’ into making at least one official forecast. He tells people new to forecasting to start with a question or topic that they already have some interest or expertise in. “Take a look and try to flesh out your thinking and feelings in actual numbers and distributions and dates, because that’s something that most people don’t do. ‘It is very likely.’ What exactly do you mean by that? And I think just that exercise of putting fuzzy feelings into actual numbers – I think that can be very illuminating for oneself.”

And what of the future of forecasting more generally? Schoenegger believes that while specialized research labs with a group of experts investigating a single issue area will likely always have an advantage over crowdsourced platforms like Metaculus – it will be true only in their specific areas of study.

“If you have your proprietary super special model, and ten research scientists work on it – it’s very good for some parametric insurance about floods in Turkey or something like that,” he says. “But [for Metaculus] I think it’s the speed and agility with which you can forecast. The war in Ukraine, climate, presidential elections, something about some king or queen – it’s very broad. And the hope is that once you hit a certain number of dedicated users who continually forecast on those things, with the wisdom of the crowds, you can actually have forecasts on all sorts of stuff. And I think they might underperform the hyper-specialized $10 million model on its specific thing. But [the crowdsourced forecasting] will win on pretty much everything else.”

Schoenegger says he’s not positive he wants professional forecasting to be his main focus forever, but he’s also loath to give it up. “I’m still undecided on going the route of forecasting as my main job, or academics as my main job,” he says. “I think what I end up doing is basically both – because I like forecasting way too much. It’s way more fun than writing papers, to be honest.”

Empowering First Generation Scientists with Gabriel Reyes

For many students in the U.S. a career in science is out of reach. Too often young people interested in science never get the chance to pursue their dreams simply because they come from low-income families or live in parts of the country where opportunities to engage in scientific research are limited. This leads to a critical lack of diversity in the scientific community that stifles creativity, innovation and progress.

FLi Sci, short for first-generation/low-income scientists, is an education nonprofit that addresses the root causes of lack of diversity in the scientific profession. The organization provides financial support for high school and college students in poverty to access, pursue, and engage in scientific opportunities. The flagship program is a two-year paid fellowship during which students are able to conduct their own independent research projects.

FLi Sci recently became a member of FAS’s Fiscal Sponsorship Program which identifies burgeoning entrepreneurs in science and technology policy and supports their philanthropic endeavors. Gabriel Reyes, FLi Sci’s founder and Executive Director, sat down with us to discuss his organization’s pioneering work and how his own experiences as a scientist inspired him to make a difference.

FAS: Before we start talking about FLi Sci – tell us a little bit about your science story. How did you first discover your love for science – was it one class or one teacher?

Gabriel Reyes: I loved math a lot – to the point where when my parents were grocery shopping and they would take me, I would go to the book section, and try to find one of those books that had math problems and I would try to finish as many math problems as I could before my parents picked me up. And I would be really mad at myself if I didn’t finish all the problems. But the real point of discovery was probably in my rising sophomore year of high school, when I took an intro to psychology course at the University of New Mexico. I really took that course because there was a condition at the summer program at the University that if I wanted to have a free lunch, I needed to take a morning class. And so I was like, “Ugh, fine, I’ll take this psych class.” I ended up falling in love with the material.

FAS: And from there was it just a logical transition into neuroscience?

GR: I went to college and became more mature about what science is and what research in neuroscience was; I thought I was interested in psychology, but I realized I was actually interested in neuroscience – because the parts of psychology that I was most excited about were the brain, and the neurons, and topics like brain development.

FAS: Your organization, FLi Sci, focuses on first-generation, low-income, budding scientists. You describe yourself as proud to have come from a first-generation, low-income background. Tell us more about your childhood and upbringing.

GR: My parents were Mexican immigrants. Now they have visas, so they have a residency status in the U.S., but for so long, we grew up in a mixed-status household. As a result of that, it was very difficult for my parents to access careers that offered affordable wages. And so all my life I lived in economic scarcity. That was one of the parts that contributed to us moving constantly when I was a child – my dad kept getting fired from his job, or he wasn’t getting paid enough to make rent. So we had to move.

FAS: You have said that your parents came to the U.S. in part because they had seen such a lack of educational opportunity in Mexico, and didn’t want the same for their children. But New Mexico presented its own challenges, at least compared to other parts of the U.S, right?

GR: Yes, I am the first in my family to go to college. And yes, another unique aspect about me is growing up in a place like New Mexico. Other places like New York or the Bay Area have a myriad of pre-college programs; like teaching low income students how to code, or having them do research with a professor from NYU or Stanford or other big universities nearby. I’m a little envious of high schoolers that grow up in those places. But there are a lot more places like New Mexico, or like Alabama or Kentucky that similarly do not have the same sort of abundance of nonprofits or educational opportunities. It’s important that people like myself and others can engage in science early enough to know science, and careers in science, exist.

This is a focus of the work that my organization does and I hope other organizations in the future do more of this sort of landscape analysis. Which schools and cities already have resources? How do we maybe amplify those existing resources, or strategically work with them to target groups that may not be accessing them, and extend opportunities in areas that don’t already have them?

FAS: The aim of your organization, FLi Sci, is to make science more accessible to help low-income, first-generation American high school students who attend high schools with severely limited resources. You put a specific emphasis on research – why is that?

GR: I say ‘research’ specifically because there are already many pre-college science programs that put a big focus on ‘industry.’ They might teach students, for example, how to code, so that they can pursue careers in tech at Google or Amazon, or to become engineers so that they work in the field of engineering. But FLi Sci specifically is hoping to get a group of students interested in pursuing a PhD or a medical degree.

I think that focus is because along my journey I’ve seen a lot less first generation and low income students and students of color following my path. Every year we talk about the lack of diversity in science, but I don’t see enough action to actually combat the root causes of why there’s not a lot more diversity in the profession. We want to provide students early exposure to these career pathways, and set them up for success by having them do their own research projects. That way they’ll be able to access further science opportunities the minute they get to college.

I want to move away from a model where low-income students are sort of given these science kits; you know, it’s like, ‘Here’s a chemistry kit!’ and that’s supposed to be the thing that inspires them to do science. Instead, I really want to see what people produce – like what are these young people chasing? Because for me, I became a scientist because I got to decide what questions I wanted to ask. But many students in high school that I’ve been interacting with don’t have that same privilege.

FAS: FAS’ Fiscal Sponsorship program basically allows you to fundraise in a way you couldn’t on your own. Are there specific ambitions or initiatives you are aiming for with more funding?

GR: Our flagship program is the FLi Sci Scholars Program, which is right now a two-year fellowship. We’re in the process of recruiting the next cohort, but our true goal is for this to be a longer multi-year fellowship program where we get students at the high school level and support them until they apply to medical school or a PhD program. We know that it can be very easy to fall off the path to a career in science; because access to science isn’t just about getting opportunities, it’s about when you get those opportunities.

The other thing is we are a virtual program primarily because we started during the pandemic, but also because, again, one of our goals is to target and reach students that don’t have access to science, and virtual outreach has been great for that. But with more funding, it would be great to be able to provide some in-person activities. So for example, one of the things that we’re thinking about is a summer conference, either for all of our FLi Sci scholars or a summer conference for people before their first year of college, so that we can do some preparation and support before they start their very first day in higher education.

Then I think the last thing that is a long-term goal that we’re trying to achieve once we’re at a more financially stable place is to start training teachers. Our curriculum is designed to help students pursue their own independent capstone project – but ultimately, I don’t want to be the sole keeper of that curriculum. If we can train astute teachers, we can reach more individuals. Maybe we could even help high school teachers who want to pursue their own scientific research – teachers who never had that opportunity themselves because a program like FLi Sci didn’t exist.

FAS: That gets to our last question: what does it feel like to be helping students who remind you of yourself in high school – and trying to give them opportunities that you didn’t have yourself at their age? Rewarding? More challenging than you thought?

GR: It’s a range of emotions, and it varies from day to day. It’s very humbling because one of the things that I try to tell myself is that I worked very hard and I am good at my craft and I’ve created this organization, but there were people before me who did the hard work to provide that access that I’ve been given. Not too long ago the Ivy League schools I attended did not have generous financial aid programs for people like myself to be able to go to school for free. Other people had to advocate for that. They may have taken out loans that they may still be paying on, but I was able to go and graduate debt free because of the work others did. So in those moments where I feel sad or angry that there wasn’t a program like FLi Sci when I was in high school, I have to remember that.

I also still get questions about whether I think children from low-income backgrounds have what it takes to excel in science. These students always have to justify, you know, having a morsel of opportunity, whereas people who are wealthier – they just have to write a check or swipe their credit card.

I have some friends who do not share my identity, either race or my gender or my sexuality or my class background. For them, sometimes as little as proposing an idea is enough for them to get a substantial amount of funding that I haven’t been able to obtain. Whereas in my case, the scrutiny is a lot more intense. Like if I don’t have a perfect model, then I’m not ready for funding. Or if I haven’t tried this with at least 100 students, I’m not ready for funding. So it’s very fascinating to see, as an entrepreneur of color, just how different it is for me to get traction in the organization that I’m building. So I imagine that when I was a student, the lack of programming wasn’t because no one cared; I’m sure it was because someone was blocking the emergence of such an opportunity.

Prepping for the CLIMATE TIPPING POINTS TOURNAMENT with Metaculus’ Gaia Dempsey

The concept of forecasting is pretty familiar to anyone who’s flipped on their local news to get a sense of the week’s weather. But the broader science of forecasting, which is being applied to policy-relevant topics such as epidemiology, energy, technology progress, and many more, has never been in a more exciting place. In just a few weeks, FAS, along with Metaculus, will hold a forecasting tournament (The Climate Tipping Points Tournament) aimed at demonstrating just how powerful a tool forecasting can be for policymakers trying to effect change.

Metaculus is a forecasting platform – their unique system aggregates and scores forecasts and forecasters. Metaculus’ global forecasting community correctly predicted the outbreak of Russia’s Ukraine invasion, and its models have also helped state governments make better real-time decisions regarding COVID-19 response.

Metaculus’ CEO, Gaia Dempsey, sat down with us recently to discuss her organization’s work, and why forecasting holds such promise for better public policy.

FAS: Gaia, thank you for making the time for this. To start, could you talk about why you think forecasting, which at first blush can seem like peering into a crystal ball – not scientific at all – is actually very aligned with public policy based on scientific evidence?

Gaia Dempsey: At Metaculus we care a lot about ‘epistemics’ and epistemology. Epistemology is a branch of philosophy that deals with knowledge itself, and how we form beliefs – how we come to believe something to be true. Forecasting is a way of continuously improving our epistemics. It’s connected to the fundamental basis of the scientific method itself, which is this essential idea that our trust in any given theory about the world should increase when it’s able to predict the result of an experiment or the future state of the given system or environment that’s being studied. So this agreement between the theory and the experiment – that’s predictive accuracy. That’s the gold standard for what we trust as a valid explanation for anything in the world – any given phenomenon.

What we do on our platform is bring that mindset into more complex environments — outside of the laboratory and into society, into public policy.

FAS: You discuss Metaculus as both a forecasting platform and a community. What makes your platform unique?

GD: You can think of it as a network of citizen scientists, or a decentralization of the role of the analyst in a way that rewards and gives credence to analysis on the basis of its accuracy rather than the person’s position or title. Everyone has the ability to be an astute observer of the world. Metaculus is kind of like infrastructure that facilitates the collaboration of thousands of people and aggregates their insights. Our platform has a set of scoring rules – every time you make a forecast, you get rigorous, quantitative feedback. When you’re making a prediction, you have to take into account all of the factors that affect the outcome. If you haven’t, your score is going to let you know that you’ve missed something.

FAS: In your community you’ve said there are casual forecasters and hardcore forecasters – and recently you’ve even assembled a team of 30 Pro Forecasters – people with a track record of accuracy and prescience in their forecasts. Aside from a familiarity with and facility with data science, you’ve also said that humility is a good trait for a forecaster. Can you elaborate?

GD: I’d say it’s ‘epistemic humility’: you need to be able to update on new evidence. If you hold on really tightly to a belief, you’re going to be biased by that desire to believe that the world works one way, while the evidence is actually telling you something else.

FAS: That makes sense. In terms of why forecasting is such an exciting field right now, it seems as if we’re reaching this point in forecasting science because of the confluence of human learning coupled with new technologies that allow us to aggregate information at these incredible scales. Is that a fair way to think about it?

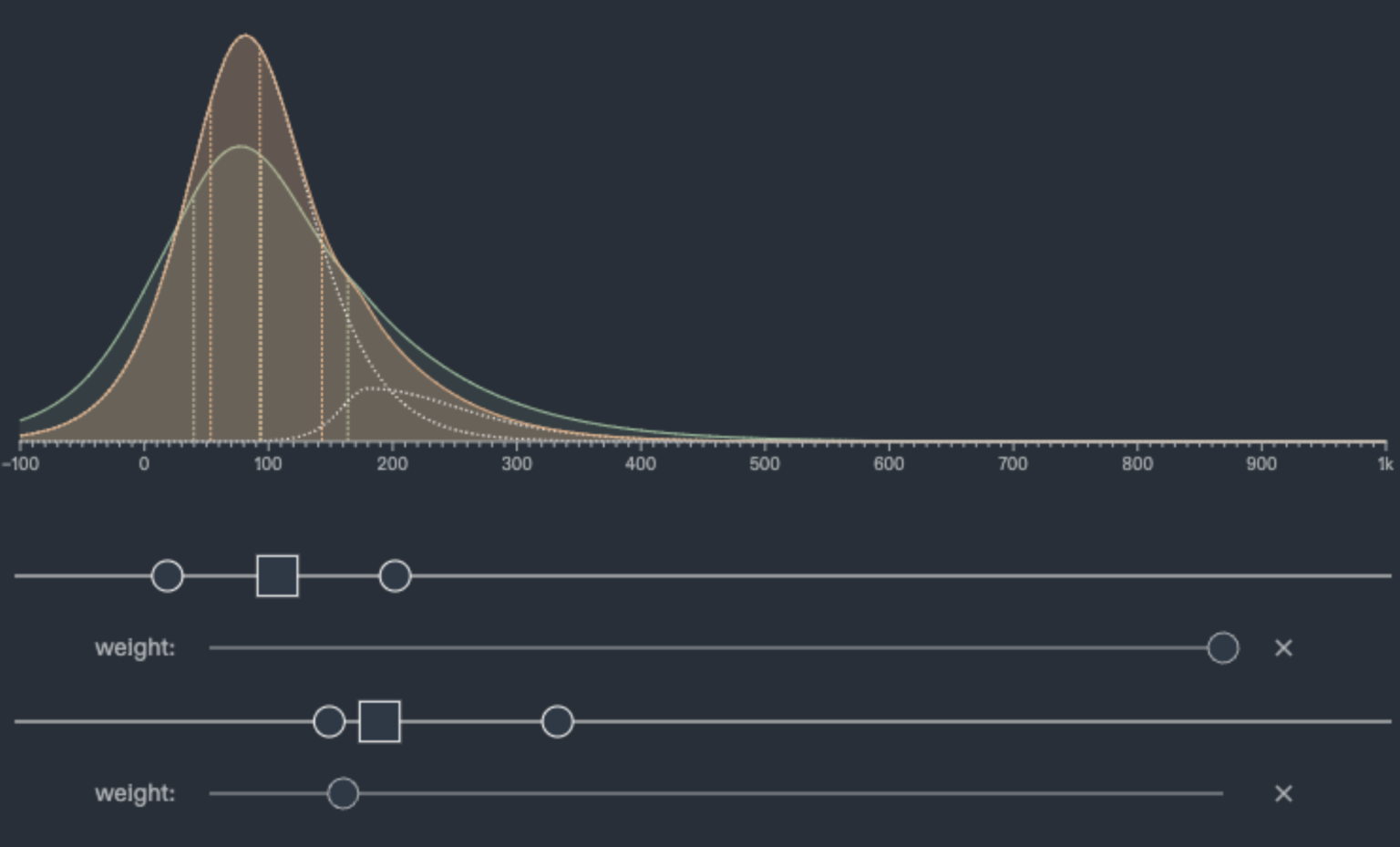

GD: Yes, I would say so. Metaculus mimics the structure of a neural network. We aggregate statistically independent forecasts, and our scores and tournament prizes serve as reward functions. It really is sort of like a hive mind. Within this system, accurate signals are cross-validated, while errors cancel each other out, and since we’re in effect running hundreds of trials at any given time, the system is designed to get more and more accurate over time. Our aggregation algorithm gives more weight to forecasters who have a track record of accuracy. We are basically weighting the probability of a forecaster being more accurate based on their past performance, so in a way it’s actually quantitatively controlling for cognitive biases across a population of forecasters.
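To make the idea of weighting forecasters by track record concrete, here is a minimal sketch in Python. It is not Metaculus' actual aggregation algorithm, which the interview does not spell out. The weighting rule (one minus each forecaster's past Brier score), the function names, and the sample data are all assumptions made up for illustration.

```python
def brier_score(forecasts, outcomes):
    """Mean squared error between probability forecasts and 0/1 outcomes.

    Lower is better; 0.0 is a perfect record.
    """
    return sum((p - o) ** 2 for p, o in zip(forecasts, outcomes)) / len(forecasts)


def track_record_weight(past_forecasts, past_outcomes):
    """Hypothetical weighting rule: 1 minus the Brier score, floored at 0.01."""
    return max(1.0 - brier_score(past_forecasts, past_outcomes), 0.01)


def aggregate(current_forecasts, weights):
    """Weighted average of the current probability forecasts."""
    return sum(p * w for p, w in zip(current_forecasts, weights)) / sum(weights)


# Made-up history: (past probability forecasts, what actually happened)
history = {
    "alice": ([0.9, 0.2, 0.8], [1, 0, 1]),  # well calibrated in the past
    "bob": ([0.6, 0.5, 0.4], [1, 0, 1]),    # less accurate in the past
}
# Made-up forecasts on a new question
current = {"alice": 0.7, "bob": 0.3}

names = sorted(current)
weights = [track_record_weight(*history[name]) for name in names]
community = aggregate([current[name] for name in names], weights)
print(f"weights: {dict(zip(names, weights))}")
print(f"weighted community forecast: {community:.3f}")
```

In this toy example the aggregate lands closer to the forecaster with the better record than a plain average of 0.5 would, which is the effect described above.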

FAS: Our upcoming Climate Tipping Points Tournament is aimed at showing how useful forecasting can be for policymakers, looking at questions like “What will the Zero-Emission Vehicle Adoption Rate be in X years?” or “If X policy is implemented, what will the charging infrastructure look like in year X?” It’s focusing on something known as the “conditional approach” to forecasting. How is this different from what Metaculus has done in the past?

GD: Conditional forecasts give you the delta – the difference – between taking an action, or not taking it. It’s going to be the first time we’re really doing this at scale like this. The thought process behind this particular project was: how can we leverage the talent and the analytical capacity of forecasters to actually be as useful as possible to the policy community? We want to help answer questions like, “Which policy actions are really feasible?” or “What may actually happen in the time period of interest that policymakers are concerned about?” If you’re asking, “What would happen if we just outlawed all cars tomorrow?” Yes, we could produce an estimate that could tell you what would happen to global CO2 emissions, but it wouldn’t be very useful. So it’s really about considering the policy interventions that are realistic, and developing a quantitative analysis that can tell us what the likely impact will be of these policy actions on the outcomes we really care about, such as the reduction of CO2 emissions. Our conditional forecasting methodology will make the relative expected value of various policy actions unmistakably clear, using an empirically grounded methodology.
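The "delta" in a conditional forecast can be sketched as simple arithmetic: collect forecasts of an outcome with and without a policy, summarize each set, and subtract. The Python below is an illustrative sketch only. The policy names, the use of the median as the summary, and every number are assumptions, not tournament questions or results.

```python
from statistics import median

# Made-up forecasts of the zero-emission vehicle share of new sales (percent)
# in some target year, assuming NO new policy is adopted.
baseline = [27.0, 30.0, 29.0, 31.0, 28.0]

# Made-up forecasts of the same quantity, conditional on each hypothetical policy.
conditional = {
    "purchase_rebate": [34.0, 38.0, 41.0, 36.0, 40.0],
    "charger_buildout": [31.0, 33.0, 30.0, 35.0, 32.0],
}

# Delta = median forecast if the policy is adopted minus median forecast if not.
deltas = {
    policy: median(forecasts) - median(baseline)
    for policy, forecasts in conditional.items()
}
best = max(deltas, key=deltas.get)

for policy, delta in deltas.items():
    print(f"{policy}: {delta:+.1f} percentage points vs. no policy")
print(f"largest forecast effect: {best}")
```

Comparing deltas across candidate policies is one simple way to read the relative expected value of each action off a set of conditional forecasts.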

FAS: And as the title of the tournament suggests, it will also highlight a fairly new idea to U.S. climate policy: positive tipping points. FAS’ own Erica Goldman is very excited about this area of study, but how does it relate to forecasting and the work that Metaculus does?

GD: It’s something I find really exciting about this particular project; it brings together research from the University of Exeter on positive tipping points, FAS’ policy expertise, and our forecasting methodology. The goal of this tournament is to assess what climate policies may present positive tipping points toward decarbonization. We know that tipping points are a real phenomenon in lots of different complex systems. The work that our partners at Exeter University did to pinpoint and really identify positive tipping points in our current understanding of climate science is so exciting. But we want to look at how we can then leverage those tipping points to achieve goals in terms of reducing the impact of climate change—and maybe even reversing it.

FAS: Before we let you go, could you talk about how to participate in a forecasting tournament? Once the questions and topics are released, how much expertise is required to engage with this?

GD: Laymen can totally participate – it just requires intellectual curiosity and a willingness to engage in the forecasting process. Most forecasters benefit from building some kind of model or by explicitly implementing Bayes’ rule. People who are already familiar with data science techniques, people who are familiar with modeling, people who have some kind of science background – they tend to be able to jump right in and feel comfortable, but you don’t necessarily need to have that background at the start. It’s something where you become a part of a community. If you comment on the public forecasting questions, people will engage with you very sincerely. It’s just like if you’re a new software developer: People don’t expect you to get everything right away. But if you’re genuinely putting in the effort, they will come and support you.
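For readers who have not applied Bayes' rule explicitly, a single update can be written in a few lines of Python. This is a generic sketch of the rule itself, not a Metaculus tool, and the probabilities below are made up for illustration.

```python
def bayes_update(prior, p_evidence_if_true, p_evidence_if_false):
    """Return P(hypothesis | evidence) using Bayes' rule.

    prior: P(hypothesis) before seeing the evidence
    p_evidence_if_true: P(evidence | hypothesis is true)
    p_evidence_if_false: P(evidence | hypothesis is false)
    """
    numerator = p_evidence_if_true * prior
    denominator = numerator + p_evidence_if_false * (1.0 - prior)
    if denominator == 0:
        raise ValueError("evidence has zero probability under both hypotheses")
    return numerator / denominator


# Made-up example: a forecaster starts at 30% that an event will happen,
# then sees a news report that is four times as likely if the event is
# coming (80%) as if it is not (20%).
prior = 0.30
posterior = bayes_update(prior, p_evidence_if_true=0.80, p_evidence_if_false=0.20)
print(f"prior: {prior:.2f} -> posterior: {posterior:.2f}")
```

The same function can be applied repeatedly, using each posterior as the next prior, as new evidence arrives.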

FAS: On that inviting note – we’ll let you go prepare those tournament questions! Thanks.

GD: Thank you!

Burning Questions: Wildfire Policy with Erica Goldman

Americans now get all-too-regular reminders of the dangers posed by wildfires: just this week California’s McKinney Fire provided a tragic example.

All would agree that limiting the risks posed to lives and property from wildfires is critical – but what are the best ways to do that? Erica Goldman and her science policy team at FAS are working hard to ensure that science has a key role in shaping a “whole-of-government” framework for wildland fire policy. We spoke to her about where things stand, and why this year could be a turning point for how government agencies and leaders take on this problem…

FAS: It certainly seems like wildfires and fears about what wildfires can do are in the national consciousness more than ever, but you’re actually working with policymakers and experts studying these problems. So how big is the problem?

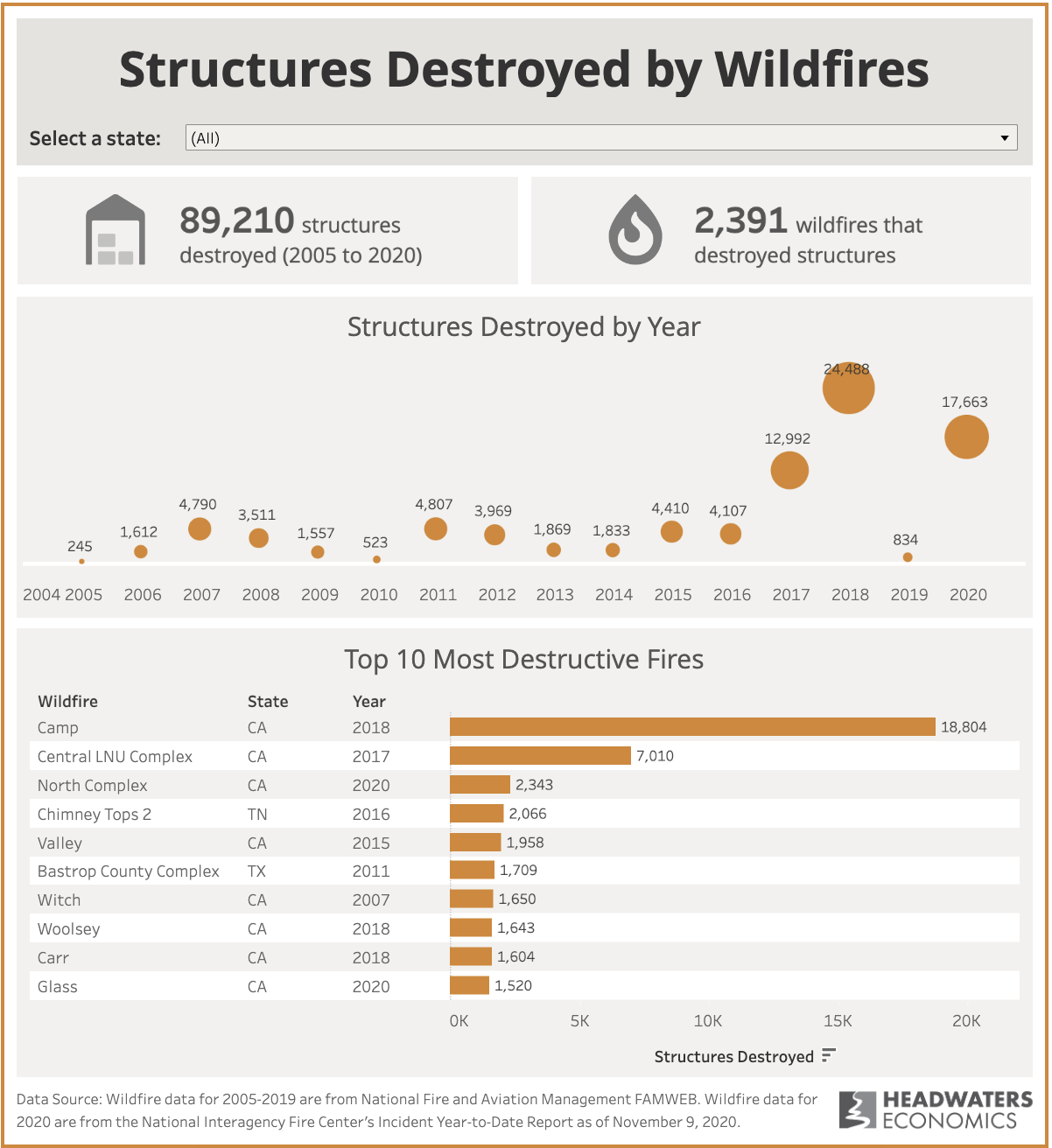

Erica Goldman: It’s important to look back specifically at the past 5 or 6 years. We have seen an average of 8 million acres burned each year, which is double the average from 1987 to 1991, and if you look at the number of structures destroyed by fire in recent years, it’s even clearer that the wildfire regime has changed. We’re seeing more uncontrolled wildfires, bigger wildfires. They’re going through populated areas, and we’re seeing more people at risk and more property at risk. Climate change is clearly having an impact on the wildfire regime and things are getting more dangerous and more complex.

Source: National Fire and Aviation Management FAMWEB, presented by Headwaters Economics.

FAS: What groups and agencies are working on these issues – and what’s different about this particular moment?

EG: The constellation of state, local, nonprofit, and federal bodies that deal with wildfires is almost too large to comprehend. But there is a lot of momentum within the government – in this case, at the federal level – to address challenges associated with uncontrolled wildfire, while optimizing “beneficial fire” on ecosystems. There are currently three different federal interagency groups working on this issue: the Wildland Fire Leadership Council (WFLC), the White House Interagency Working Group (IWG), and the Wildland Fire Mitigation and Management Commission. It’s that last one, the Commission, that is likely to be a “fast-moving train,” because it was created by Congress and has a deadline to do its work – its statute says it has to deliver recommendations to Congress in the next 12 months.

FAS: So that commission will be looking closely at many different aspects of wildfire science and land management over the next year, and then delivering some ideas to lawmakers. But Congress has already passed many wildfire-related laws, right?

EG: Congress has been pretty active in this space over the past several years. Wildfire is a close-to-home issue for constituents in many of the western states, so I think we’ve seen a lot of legislative attempts to address aspects of wildfire. There have been several bills focused on science, and there are other bills focused on supporting the firefighting workforce. So Congress ultimately has a big role to play. But one of the missing ingredients right now is how to approach this in a holistic way in a legislative sense. I think there have been over 35 bills introduced just in the past year, but, in my opinion, we haven’t yet seen a comprehensive approach to solving this problem.

FAS: There also seem to be some new voices being brought into the search for wildfire solutions. What do these different groups bring to the table?

EG: We have a lot to learn by understanding and listening to and observing how Indigenous people who’ve lived on this land for centuries have managed fire. In particular with respect to prescribed burns – there is growing evidence that Indigenous practices have successfully managed to avoid prescribed burns turning into uncontrolled megafires. So it feels like there’s a real opportunity to not just learn from the practices but incorporate Indigenous decision-makers and knowledge holders into the policy development process.

FAS: You’ve also highlighted more involvement from the tech sector – which makes a lot of sense.

EG: Yes – we have a lot of existing capabilities with satellites in orbit to use that technology for early detection of wildfire. We’re also seeing the growth of tech sector efforts around drone capabilities for wildfire detection. It’s an emerging focus: how can tech innovation help, especially on the early detection and suppression side of things? But we are also still figuring out how technological innovation integrates with the way the federal government does its business when it comes to fire management.

FAS: One of the ways FAS works is by making sure policymakers have access to the best scientific experts and research. What do you see as the challenge over the next year when it comes to putting science at the forefront of these talks?

EG: I see a gap in the narrative right now: We know that living with fire is critical, and that if we can’t burn the landscape, we’re going to put ourselves at even greater risk for catastrophic megafires. However, the science community is not adequately communicating how prescribed and managed fires fit within our heating world in a way that policymakers can use. The result is that policy leans toward blanket moratoria on prescribed fire. The science community agrees that we must include climate change in the way we think about policy, but they’re not getting granular on how to do that. I’ve seen a few ideas suggesting creative solutions such as geospatial mapping of dynamic burnings – this would help communities and land managers make targeted decisions like, ‘Burn now, it’s going to rain – don’t burn now because it’s getting dry.’ The key is getting the science community to serve that up in a way that’s useful to policymakers. You need to speak in a way that policymakers can understand, and actually think not just about the phenomenon of climate change, but about how to offer some solutions for managing the land in spite of it.

FAS also has an Impact Fellow working on wildfire solutions at USDA:

Jenna Knobloch serves as Wildfire Resilience Impact Fellow at the Office of Natural Resources and Environment. Jenna is the most senior expert on wildfire resilience at the USDA, providing policy guidance on megafire suppression, mitigation, and community-based solutions. She has advised senior officials within the Biden Administration on implementation steps for the Executive Order to strengthen the nation’s old-growth forests, and has researched the key role these forests play in sustaining ecosystems.

Interview with Erika DeBenedictis

2022 Bioautomation Challenge: Investing in Automating Protein Engineering

Thomas Kalil, Chief Innovation Officer of Schmidt Futures, interviews biomedical engineer Erika DeBenedictis

Schmidt Futures is supporting an initiative – the 2022 Bioautomation Challenge – to accelerate the adoption of automation by leading researchers in protein engineering. The Federation of American Scientists will act as the fiscal sponsor for this challenge.

This initiative was designed by Erika DeBenedictis, who will also serve as the program director. Erika holds a PhD in biological engineering from MIT, and has also worked in biochemist David Baker’s lab on machine learning for protein design at the University of Washington in Seattle.

Recently, I caught up with Erika to understand why she’s excited about the opportunity to automate protein engineering.

Why is it important to encourage widespread use of automation in life science research?

Automation improves reproducibility and scalability of life science. Today, it is difficult to transfer experiments between labs. This slows progress in the entire field, both amongst academics and also from academia to industry. Automation allows new techniques to be shared frictionlessly, accelerating broader availability of new techniques. It also allows us to make better use of our scientific workforce. Widespread automation in life science would shift the time spent away from repetitive experiments and toward more creative, conceptual work, including designing experiments and carefully selecting the most important problems.

How did you get interested in the role that automation can play in the life sciences?

I started graduate school in biological engineering directly after working as a software engineer at Dropbox. I was shocked to learn that people use a drag-and-drop GUI to control laboratory automation rather than an actual programming language. It was clear to me that automation has the potential to massively accelerate life science research, and there’s a lot of low-hanging fruit.

Why is this the right time to encourage the adoption of automation?

The industrial revolution was 200 years ago, and yet people are still using hand pipettes. It’s insane! The hardware for doing life science robotically is quite mature at this point, and there are quite a few groups (Ginkgo, Strateos, Emerald Cloud Lab, Arctoris) that have automated robotic setups. Two barriers to widespread automation remain: the development of robust protocols that are well adapted to robotic execution and overcoming cultural and institutional inertia.

What role could automation play in generating the data we need for machine learning? What are the limitations of today’s publicly available data sets?

There are plenty of life science datasets available online, but unfortunately most of them are unusable for machine learning purposes. Datasets collected by individual labs are usually too small, and combining datasets between labs, or even amongst different experimentalists, is often a nightmare. Today, when two different people run the ‘same’ experiment they will often get subtly different results. That’s a problem we need to systematically fix before we can collect big datasets. Automating and standardizing measurements is one promising strategy to address this challenge.

Why protein engineering?

The success of AlphaFold has highlighted to everyone the value of using machine learning to understand molecular biology. Methods for machine-learning guided closed-loop protein engineering are increasingly well developed, and automation makes it that much easier for scientists to benefit from these techniques. Protein engineering also benefits from “robotic brute force.” When you engineer any protein, it is always valuable to test more variants, so this discipline benefits uniquely from automation.

If it’s such a good idea, why haven’t academics done it in the past?

Cost and risk are the main barriers. What sort of methods are valuable to automate and run remotely? Will automation be as valuable as expected? It’s a totally different research paradigm; what will it be like? Even assuming that an academic wants to go ahead and spend $300k for a year of access to a cloud laboratory, it is difficult to find a funding source. Very few labs have enough discretionary funds to cover this cost, equipment grants are unlikely to pay for cloud lab access, and it is not obvious whether or not the NIH or other traditional funders would look favorably on this sort of expense in the budget for an R01 or equivalent. Additionally, it is difficult to seek out funding without already having data demonstrating the utility of automation for a particular application. All together, there are just a lot of barriers to entry.

You’re starting this new program called the 2022 Bioautomation Challenge. How does the program eliminate those barriers?

This program is designed to allow academic labs to test out automation with little risk and at no cost. Groups are invited to submit proposals for methods they would like to automate. Selected proposals will be granted three months of cloud lab development time, plus a generous reagent budget. Groups that successfully automate their method will also be given transition funding so that they can continue to use their cloud lab method while applying for grants with their brand-new preliminary data. This way, labs don’t need to put in any money up-front, and are able to decide whether they like the workflow and results of automation before finding long-term funding.

Historically, some investments that have been made in automation have been disappointing, like GM in the 1980s, or Tesla in the 2010s. What can we learn from the experiences of other industries? Are there any risks?

For sure. I would say even “life science in the 2010s” is an example of disappointing automation: academic labs started buying automation robots, but it didn’t end up being the right paradigm to see the benefits. I see the 2022 Bioautomation Challenge as an experiment itself: we’re going to empower labs across the country to test out many different use cases for cloud labs to see what works and what doesn’t.

Where will funding for cloud lab access come from in the future?

Currently there’s a question as to whether traditional funding sources like the NIH would look favorably on cloud lab access in a budget. One of the goals of this program is to demonstrate the benefits of cloud science, which I hope will encourage traditional funders to support this research paradigm. In addition, the natural place to house cloud lab access in the academic ecosystem is at the university level. I expect that many universities may create cloud lab access programs, or upgrade their existing core facilities into cloud labs. In fact, it’s already happening: Carnegie Mellon recently announced they’re opening a local robotic facility that runs Emerald Cloud Lab’s software.

What role will biofabs and core facilities play?

In 10 years, I think the terms “biofab,” “core facility,” and “cloud lab” will all be synonymous. Today the only important difference is how experiments are specified: many core facilities still take orders through bespoke Google forms, whereas Emerald Cloud Lab has figured out how to expose a single programming interface for all their instruments. We’re implementing this program at Emerald because it’s important that all the labs that participate can talk to one another and share protocols, rather than each developing methods that can only run in their local biofab. Eventually, I think we’ll see standardization, and all the facilities will be capable of running any protocol for which they have the necessary instruments.

In addition to protein engineering, are there other areas in the life sciences that would benefit from cloud labs and large-scale, reliable data collection for machine learning?

I think there are many areas that would benefit. Areas that struggle with reproducibility, are manually repetitive and time intensive, or that benefit from closely integrating computational analysis with data are all good targets for automation. Microscopy and mammalian tissue culture might be another two candidates. But there’s a lot of intellectual work for the community to do in order to articulate problems that can be solved with machine learning approaches, if given the opportunity to collect the data.

An interview with Martin Borch Jensen, Co-founder of Gordian Biotechnology

Recently, I caught up with Martin Borch Jensen, the Chief Science Officer of the biotech company Gordian Biotechnology. Gordian is a therapeutics company focused on the diseases of aging.

Martin did his Ph.D. in the biology of aging, received a prestigious NIH award to jumpstart an academic career, but decided to return the grant to launch Gordian. Recently, he designed and launched a $26 million competition called Longevity Impetus Grants. This program has already funded 98 grants to help scientists address what they consider to be the most important problems in aging biology (also known as geroscience). There is a growing body of research which suggests that there are underlying biological mechanisms of aging, and that it may be possible to delay the onset of multiple chronic diseases of aging, allowing people to live longer, healthier lives.

I interviewed Martin not only because I think that the field of geroscience is important, but also because I think the role that Martin is playing has significant benefits for science and society, and should be replicated in other fields. With this work, essentially, you could say that Martin is serving as a strategist for the field of geroscience as a whole, and designing a process for the competitive, merit-based allocation of funding that philanthropists such as Juan Benet, James Fickel, Jed McCaleb, Karl Pfleger, Fred Ehrsam, and Vitalik Buterin have confidence in, and have been willing to support. Martin’s role has a number of potential benefits:

- Many philanthropists are interested in supporting scientific research, but don’t have the professional staff capable of identifying areas of research that we are under-investing in. If more leading researchers were willing to identify areas where there is a strong case for additional philanthropic support, and design a process for the allocation of funding that inspires confidence, philanthropists would find it easier to support scientific research. Currently, there are almost 2,000 families in the U.S. alone that have $500 million in assets, and their current level of philanthropy is only 1.2 percent of their assets.

- Researchers could propose funding mechanisms that are designed to address shortcomings associated with the status quo. For example, at the beginning of the pandemic, Tyler Cowen and Patrick Collison launched Fast Grants, which provided grants for COVID-19 related projects in under 14 days. Other philanthropists have designed funding mechanisms that are specifically designed to support high-risk, high-return ideas by empowering reviewers to back non-consensus ideas. Schmidt Futures and the Astera Institute are supporting Focused Research Organizations, projects that address key bottlenecks in a given field, and are difficult to address using traditional funding mechanisms.

- Early philanthropic support can catalyze additional support from federal science agencies such as NIH. For example, peer reviewers in NIH study sections often want to see “preliminary data” before they recommend funding for a particular project. Philanthropic support could generate evidence not only for specific scientific projects, but for novel approaches to funding and organizing scientific research.

- Physicist Max Planck observed that science progresses one funeral at a time. Early career scientists are likely to have new ideas, and philanthropic support for these ideas could accelerate scientific progress.

Below is a copy of the Q&A conducted over email between me and Martin Borch Jensen.

Tom Kalil: What motivated you to launch Impetus Grants?

Martin Borch Jensen: Hearing Patrick Collison describe the outcomes of the COVID-19 Fast Grants. Coming from the world of NIH funding, it seemed to me that the results of this super-fast program were very similar to the year-ish cycle of applying for and receiving a grant from the NIH. If the paperwork and delays could be greatly reduced, while supporting an underfunded field, that seemed unambiguously good.

My time in academia had also taught me that a number of ideas exist that have great potential impact but fall outside of the most common topics or viewpoints, and thus have trouble getting funding. And within aging biology, several ‘unfundable’ ideas turned out to shape the field (for example, DNA methylation ‘clocks’, rejuvenating factors in young blood, and the recent focus on partial epigenetic reprogramming). So what if we focused funding on ideas with the potential to shape thinking in the field, even if there’s a big risk that the idea is wrong? Averaged across a lot of projects, it seemed like that could result in more progress overall.

TK: What enabled you to do this, given that you also have a full-time job as CSO of Gordian?

MBJ: I was lucky (or prescient?) in having recently started a mentoring program for talented individuals who want to enter the field of aging biology. This Longevity Apprenticeship program is centered on contributing to real-life projects, so Impetus was a perfect fit. The first apprentices, mainly Lada Nuzhna and Kush Sharma, with some help from Edmar Ferreira and Tara Mei, helped set up a non-profit to host the program, designed the website and user interface for reviewers, communicated with universities, and did a ton of operational work.

TK: What are some of the most important design decisions you made with respect to the competition, and how did it shape the outcome of the competition?

MBJ: A big one was to remain blind to the applicant while evaluating the impact of the idea. The reviewer discussion was very much focused on ‘will this change things, if true’. We don’t have a counterfactual, but based on the number of awards that went to grad students and postdocs (almost a quarter) I think we made decisions differently than most funders.

Another innovation was to team up with one of the top geroscience journals to organize a special issue where Impetus awardees would be able to publish negative results – the experiments showing that their hypothesis is incorrect. In doing so, we both wanted to empower researchers to take risks and go for their boldest ideas (since you’re expected to publish steadily, risky projects are disincentivized for career reasons), and at the same time take a step towards more sharing of negative results so that the whole field can learn from every project.

TK: What are some possible future directions for Impetus? What advice do you have for philanthropists that are interested in supporting geroscience?

MBJ: I’m excited that Lada (one of the Apprentices) will be taking over to run the Impetus Grants as a recurring funding source. She’s already started fundraising, and we have a lot of ideas for focused topics to support (for example, biomarkers of aging that could be used in clinical trials). We’re also planning a symposium where the awardees can meet, to foster a community of people with bold ideas and different areas of expertise.

One thing that I think could greatly benefit the geroscience field is to fund more tools and methods development, including and especially by people who aren’t pureblooded ‘aging biologists’. Our field is very limited in what we’re able to measure within aging organisms, as well as in measuring the relationships between different areas of aging biology. Determining causal relationships between two mechanisms, e.g. DNA damage and senescence, requires an extensive study when we can’t simultaneously measure both with high time resolution. And tool-building is not a common focus within geroscience. So I think there’d be great benefit to steering talented researchers who are focused on that towards applications in geroscience. If done early in their careers, this could also serve to pull people into a long-term focus on geroscience, which would be a terrific return on investment. The main challenges to this approach are to make sure the people are sincerely interested in aging biology (or at least properly incentivized to solve important problems there), and that they’re solving real problems for the field. The latter might be accomplished by pairing them up with geroscience labs.

TK: If you were going to try to find other people who could play a similar role for another scientific field, what would you look for?

MBJ: I think the hardest part of making Impetus go well was finding the right reviewers. You want people who are knowledgeable, but open to embracing new ideas. Optimistic, but also critical. And not biased towards their own, or their friends’, research topics. So first, look for a ringleader who possesses these traits, and who has spent a long time in the field so that they know the tendencies and reputations of other researchers. In my case, I spent a long time in academia but have now jumped to startups, so I no longer have a dog in the fight. I think this might well be a benefit for avoiding bias.

TK: What have you learned from the process that you think is important for both philanthropists considering this model and scientists that might want to lead an initiative in their field?

MBJ: One thing is that there’s room to improve the basic user interface of how reviews are done. We designed a UI based on what I would have wanted while reviewing papers and grants. Multiple reviewers wrote to us unprompted that this was the smoothest experience they’d had. And we only spent a few weeks building this. So I’d say, it’s worth putting a bit of effort into making things work smoothly at each step.

As noted above, getting the right reviewers is key. Our process ran smoothly in large part because the reviewers were all aligned on wanting projects that move the needle, and not biased towards specific topics.

But the most important thing we learned, or validated, is that this rapid model works just fine. We’ll see how things work out, but I think that it is highly likely that Impetus will support more breakthroughs than the same amount of money distributed through a traditional mechanism, although there may be more failures. I think that’s a tradeoff that philanthropists should be willing to embrace.

TK: What other novel approaches to funding and organizing research should we be considering?

MBJ: Hmmm, that’s a tough one. So many interesting experiments are happening already.

One idea we’ve been throwing around in the Longevity Apprenticeship is ‘Impetus for clinical trials’. Fast Grants funded several trials of off-patent drugs, and at least one (fluvoxamine) now looks very promising. Impetus funded some trials as well, but within geroscience in particular, there are several compounds with enough evidence that human trials are warranted, but which are off-patent and thus unlikely to be pursued by biopharma.

One challenge for ‘alternative funding sources’ is that most work is still funded by the NIH. So there has to be a possibility of continuity of research funded by the two mechanisms. Given the amount of funding we had for Impetus (4-7% of the NIA’s budget for basic aging biology), what we had in mind was funding bold ideas to the point where sufficient proof of concept data could be collected so that the NIH would be willing to provide additional funding. Whatever you do, keeping in mind how the projects will garner continued support is important.

A Conversation with Nobel Laureate Dr. Jack Steinberger

On January 27, 2014, I had the privilege and pleasure of meeting with Dr. Jack Steinberger at CERN, the European Organization for Nuclear Research, in Geneva, Switzerland. In a wide-ranging conversation, we discussed nuclear disarmament, nonproliferation, particle physics, great scientific achievements, and solar thermal power plants. Here, I give a summary of the discussion with Dr. Steinberger, a Nobel physics laureate, who serves on the FAS Board of Sponsors and has been an FAS member for decades.

Dr. Steinberger shared the Nobel Prize in 1988 with Leon Lederman and Melvin Schwartz “for the neutrino beam method and the demonstration of the doublet structure of the leptons through the discovery of the muon neutrino.” Dr. Steinberger has worked at CERN since 1968. CERN is celebrating its 60th anniversary this year and is an outstanding exemplar of multinational cooperation in science. Thousands of researchers from around the world have made use of CERN’s particle accelerators and detectors. Notably, in 2012, two teams of scientists at CERN found evidence for the Higgs boson, which helps explain the origin of mass for many subatomic particles. While Dr. Steinberger was not part of these teams, he helped pioneer the use of many innovative particle detection methods such as the bubble chamber in the 1950s. Soon after he arrived at CERN, he led efforts to use methods that recorded much larger samples of events of particle interactions; this was a necessary step along the way to allow discovery of elusive particles such as the Higgs boson.

In addition to his significant path-breaking contributions to physics, he has worked on issues of nuclear disarmament, which are discussed in the book A Nuclear-Weapon-Free World: Desirable? Feasible? (edited by him, Bhalchandra Udgaonkar, and Joseph Rotblat). The book had recently reached its 20th anniversary when I talked to Dr. Steinberger. I had bought a copy soon after it was published in 1993, when I was a graduate student in physics and was considering a possible career in nuclear arms control. That book contains chapters by many of the major thinkers on nuclear issues including Richard Garwin, Carl Kaysen, Robert McNamara, Marvin Miller, Jack Ruina, Theodore Taylor, and several others. Some of these thinkers were or are affiliated with FAS.

While I do not intend to review the book here, let me highlight two ideas out of several insightful ones. First, the chapter by Joseph Rotblat, the long-serving head of the Pugwash Conferences, on “Societal Verification,” outlined a program for citizen reporting about attempted violations of a nuclear disarmament treaty. He believed that it was “the right and duty” for citizens to play this role. Asserting that technological verification alone is not sufficient to provide confidence that nuclear arms production is not happening, he urged that the active involvement of citizens become a central pillar of any future disarmament treaty. (Please see the article on Citizen Sensor in this issue of the PIR that explores a method of how to apply societal verification.)

The second concept that is worth highlighting is minimal deterrence. Nuclear deterrence, whether minimal or otherwise, has held back the cause of nuclear disarmament, as argued in a chapter by Dr. Steinberger, Essam Galal, and Mikhail Milstein. They point out, “Proponents of the policy of nuclear deterrence habitually proclaim its inherent stability… But the recent political changes in the Soviet Union [the authors were writing in 1993] have brought the problem of long-term stability in the control of nuclear arsenals sharply into focus. … This development demonstrates a fundamental flaw in present nuclear thinking: the premise that the world will forever be controllable by a small, static, group of powers. The world has not been, is not, and will not be static.”

Having laid bare this instability, they explain that proponents of a nuclear-weapon-free world also foresee that minimal deterrence will “encourage proliferation” because nations without nuclear weapons would argue that if minimal deterrence appears to strengthen security for the nuclear-armed nations, then why shouldn’t the non-nuclear weapon states have these weapons. After examining various levels for minimum nuclear deterrence, the authors conclude that any level poses a catastrophic risk because it would not “eliminate the nuclear threat.”

While Dr. Steinberger demurred that he has not actively researched nuclear arms control issues for almost 20 years, he is following the current nuclear political debates. He expressed concern that President Barack Obama “has said that he would lead toward nuclear disarmament but he hasn’t.” Dr. Steinberger emphasized that clinging to nuclear deterrence is “a roadblock” to disarmament and that “New START is too slow.”

On Iran, he said that if he were an Iranian nuclear scientist, he would want Iran to develop nuclear bombs given the threats that Iran faces. He underscored that if the United States stopped threatening Iran and pushed for global nuclear disarmament, real progress could be made in halting Iran’s drive for the latent capability to make nuclear weapons. He also believes that European governments need to decide to “get rid of U.S. nuclear weapons based in Europe.”

Another major interest of his is renewable energy that can provide reliable, around-the-clock electrical power. In particular, he has repeatedly spoken out in favor of solar thermal power. A few solar thermal plants have recently begun to show that they can generate electricity reliably even during the night or when clouds block the sun. Thus, they would provide “baseload” electricity. For example, the Gemasolar Thermosolar Plant in Fuentes de Andalucia, Spain, has an electrical power of 19.9 MW and uses a “battery” to generate power. The battery is a molten salt energy storage system that consists of a mixture of 60 percent potassium nitrate and 40 percent sodium nitrate. This mixture can retain 99 percent of the thermal energy for up to 24 hours. More than 2,000 specially designed mirrors, or “heliostats,” arrayed 360 degrees around a central tower, reflect sunlight onto the top part of the tower where the molten salt is heated up. The heated salt is then directed to a heat exchanger that turns liquid water into steam, which then spins a turbine coupled to an electrical generator.

Dr. Steinberger urges much faster development and deployment of these types of solar thermal plants because he is concerned that within the next 60 years the world will run out of relatively easy access to fossil fuels. He is not opposed to nuclear energy, but believes that the world will need greater use of true renewable energy sources.

Turning to the future of the Federation of American Scientists, Dr. Steinberger supports FAS because he values “getting scientists working together,” but he realizes that this is “hard to do” because it is difficult “to make progress in understanding issues” that involve complex politics. Many scientists can be turned off by messy politics and prefer to stick within their comfort zones of scientific research. Nonetheless, Dr. Steinberger urges FAS to get scientists to perform the research and analysis necessary to advance progress toward nuclear disarmament and to solve other challenging problems such as providing reliable renewable energy to the world.

Q&A Session on Recent Developments in U.S. and NATO Missile Defense

Researchers from the Federation of American Scientists (FAS) asked two physicists who are experts in missile defense issues, Dr. Yousaf Butt and Dr. George Lewis, to weigh in on the announcement on March 15, 2013 regarding missile defense by the Obama administration.

Before exploring their reactions and insights, it is useful to identify salient elements of U.S. missile defense and place the issue in context. There are two main strategic missile defense systems fielded by the United States: one is based on large high-speed interceptors called Ground-Based Interceptors or “GBIs” located in Alaska and California, and the other is the mostly ship-based NATO/European system. The latter, the European Phased Adaptive Approach (EPAA) to missile defense, is designed to deal with the threat posed by possible future Iranian intermediate- and long-range ballistic missiles to U.S. assets, personnel, and allies in Europe – and eventually attempt to protect the U.S. homeland.

The EPAA uses ground-based and mobile ship-borne radars; the interceptors themselves are mounted on Ticonderoga class cruisers and Arleigh Burke class destroyers. Two land-based interceptor sites in Poland and Romania are also envisioned – the so-called “Aegis-ashore” sites. The United States and NATO have stated that the EPAA is not directed at Russia and poses no threat to its nuclear deterrent forces, but as outlined in a 2011 study by Dr. Theodore Postol and Dr. Yousaf Butt, this is not completely accurate: because the system is ship-based, and thus mobile, it could be reconfigured to have a theoretical capability to engage Russian warheads.

Indeed, General James Cartwright has explicitly mentioned this possible reconfiguration – or global surge capability – as an attribute of the planned system: “Part of what’s in the budget is to get us a sufficient number of ships to allow us to have a global deployment of this capability on a constant basis, with a surge capacity to any one theater at a time.”

In the 2011 study, the authors focused on what would be the main concern of cautious Russian military planners – the capability of the missile defense interceptors to simply reach, or “engage,” Russian strategic warheads – rather than whether any particular engagement results in an actual interception, or “kill.” Interceptors with a kinematic capability to simply reach Russian ICBM warheads would be sufficient to raise concerns in Russian national security circles – regardless of the possibility that Russian decoys and other countermeasures might defeat the system in actual engagements. In short, even a missile defense system that could be rendered ineffective could still elicit serious concern from cautious Russian planners. The last two phases of the EPAA – when the higher burnout velocity “Block II” SM-3 interceptors come on-line in 2018 – could raise legitimate concerns for Russian military analysts.

A Russian news report sums up the Russian concerns: “[Russian foreign minister] Lavrov said Russia’s agreement to discuss cooperation on missile defense in the NATO Russia Council does not mean that Moscow agrees to the NATO projects which are being developed without Russia’s participation. The minister said the fulfillment of the third and fourth phases of the U.S. ‘adaptive approach’ will enter a strategic level threatening the efficiency of Russia’s nuclear containment forces.” [emphasis added]

With this background in mind, FAS’ Senior Fellow on State and Non-State Threat, Charles P. Blair (CB), asked Dr. Yousaf Butt (YB) and Dr. George Lewis (GL) for their input on recent developments on missile defense with eight questions.

___________________________________________________________________________________________

Q: (CB) On March 15, Secretary of Defense Hagel announced that the U.S. will cancel the last phase – Phase 4 – of the European Phased Adaptive Approach (EPAA) to missile defense, which was to happen around 2021. This was the phase with the faster SM-3 “Block IIB” interceptors. Will this cancellation hurt the United States’ ability to protect itself and Europe?