Improve Extreme Heat Monitoring by Launching Cross-Agency Temperature Network

Year after year, record-breaking air temperatures and heat waves are reported nationwide. In 2023, Death Valley, California experienced temperatures as high as 129°F, the highest recorded temperature on Earth for the month of June, and in July, Southwest states experienced prolonged heat waves where temperatures did not drop below 90°F. This is especially worrisome because the frequency, intensity, and duration of extreme heat events are projected to increase, and heat is already the leading weather-related cause of death in the United States. To address this growing threat, the Environmental Protection Agency (EPA) and the National Oceanic and Atmospheric Administration (NOAA) should combine and leverage their existing resources to develop extreme-heat monitoring networks that can capture spatiotemporal trends of heat and protect communities from heat-related hazards.

Urban areas are particularly vulnerable to the effects of extreme heat due to the urban heat island (UHI) effect. However, UHIs are not uniform throughout a city, with some neighborhoods experiencing higher air temperatures than others. Further, communities with higher populations of People of Color and lower socioeconomic status disproportionately experience higher temperatures and are reported to have the highest increase in heat-related mortality. It is imperative for local government officials and city planners to understand who is most vulnerable to the impacts of extreme heat and how temperatures vary throughout a city to develop effective heat mitigation and response strategies. While the NOAA’s National Weather Service (NWS) stations provide hourly, standardized air temperature measurements, their data do not capture intraurban variability.

Challenge and Opportunity

Heat has killed more than 11,000 Americans since 1979, yet an extreme heat monitoring network does not exist in the country. While NOAA NWS stations capture air temperatures at a central location within a city, they do not reveal how temperatures within a city vary. This missing information is necessary to create targeted, location-specific heat mitigation and response efforts.

Synergistic Environmental Hazards and Health Impacts

UHIs are metropolitan areas that experience higher temperatures than surrounding rural regions. The temperature differences can be attributed to many factors, including high impervious surface coverage, lack of vegetation and tree canopy, tall buildings, air pollution, and anthropogenic heat. UHIs are of significant concern as they contribute to higher daytime temperatures and reduce nighttime cooling, which in turn exacerbates heat-related deaths and illnesses in densely populated areas. Heat-related illnesses include heat exhaustion, cramps, edema, syncope, and stroke, among others. However, heat is not uniform throughout a city, and some neighborhoods experience warmer temperatures than others in part due to structural inequalities. Further, it has been found that, on average, People of Color and those living below the poverty line are disproportionately exposed to higher air temperatures and experience the highest increase in heat-related mortality. As temperatures continue to rise, it becomes more imperative for the federal government to protect vulnerable populations and communities from the impacts of extreme heat. This requires tools that can help guide heat mitigation strategies, such as the proposed interagency monitoring network.

High air temperatures and extreme heat are also associated with poor air quality. As common pavement surfacing materials, like asphalt and concrete, absorb heat and energy from the sun during the day, the warm air at the surface rises with present air pollutants. High air temperatures and sunlight are also known to help catalyze the production of air pollutants such as ozone in the atmosphere and impact the movement of air and, therefore, the movement of air pollution. As a result, during extreme heat events, individuals are exposed to increased levels of harmful pollutants. Because poor air quality and extreme heat are directly related, the EPA should expand its air quality networks, which currently only detect pollutants and their sources, to include air temperature. Projections have determined extreme heat events and poor air quality days will increase due to climate change, with compounding detriments to human health.

Furthermore, extreme heat is linked not only to poor air quality but also to wildfire smoke—and they are becoming increasingly concomitant. Projections report with very high confidence that warmer temperatures will lengthen the wildfire season and thus increase areas burned. Similar to extreme heat’s relationship with poor air quality, extreme heat and wildfire smoke have a synergistic effect in negatively impacting human health. Extreme heat and wildfire smoke can lead to cardiovascular and respiratory complications as well as dehydration and death. These climatic hazards have an even larger impact on environmental and human health when they occur together.

As the UHI effect is localized and its causes are well understood, cities are ideal locations to implement heat mitigation and adaptation strategies. To execute these plans equitably, it is critical to identify the areas and communities that are most vulnerable to and impacted by extreme heat events through an extreme heat monitoring network. The information collected from this network will also be valuable when planning strategies targeting poor air quality and wildfire smoke. The launch of an extreme heat monitoring network will have a considerable impact on protecting lives.

Urban Heat Mapping Efforts

Both NOAA and EPA have existing programs that aim to map, reduce, or monitor UHIs throughout the country. These efforts may have the capacity to also implement the proposed heat monitoring network.

Since 2017, NOAA has worked with the National Integrated Heat Health Information System (NIHHIS) and CAPA Strategies LLC to fund yearly UHI mapping campaign programs, which have been instrumental in highlighting the uneven distribution of heat throughout U.S. cities. These programs rely on community science volunteers who attach NOAA-funded sensors to their cars to collect air temperature, humidity, and time data. These campaigns, however, are currently run only during summer months, and not all major cities are mapped each year. NOAA’s NIHHIS has also created a Heat Vulnerability Mapping Tool, which impressively illustrates the relationship between social vulnerability and heat exposure. These maps, however, are not updated in real time and do not display air temperature data. Another critical tool in mapping UHIs is the NWS’s recently created HeatRisk prototype, which identifies risks of heat-related impacts in numerous parts of the country. This prototype also forecasts levels of heat concerns up to seven days into the future. However, HeatRisk does not yet provide forecasts for the entire country and uses NWS air temperature products, which do not capture intraurban variability. The EPA has a Heat Island Reduction program dedicated to working with community groups and local officials to find opportunities to mitigate UHIs and adopt projects to build heat-resilient communities. While this program aims to reduce and monitor UHIs, there are no explicit monitoring or mapping strategies in place.

While the products and services of each agency have been instrumental in mapping UHIs throughout the country and in heat communication and mitigation efforts, consistent and real-time monitoring is required to execute extreme heat response plans in a timely fashion. Merging the resources of both agencies would provide the necessary foundation to design and implement a nationwide extreme heat monitoring network.

Plan of Action

Heat mitigation strategies are often city-wide. However, there are significant differences in heat exposure between neighborhoods. To create effective heat adaptation and mitigation strategies, it is critical to understand how and where temperatures vary throughout a city. Achieving this requires a cross-agency extreme heat monitoring network.

The EPA and NOAA should sign a memorandum of agreement to improve air temperature monitoring nationwide. Following this, agencies should collaborate to create an extreme heat monitoring network that can capture the intraurban variability of air temperatures in major cities throughout the country.

Implementation and continued success require a number of actions from the EPA and NOAA.

- EPA should expand its Heat Island Reduction program to include monitoring urban heat. The Inflation Reduction Act (IRA) provided the agency with $41.5 billion to fund new and existing programs, with $11 billion going toward clean air efforts. Currently, its noncompetitive and competitive air grants do not address extreme heat efforts. These funds could be used to place air temperature sensors in each census tract within cities to map real-time air temperatures with high spatial resolution.

- EPA should include air temperature monitoring in its monitoring deployments. Due to air quality tracking efforts mandated by the Clean Air Act, there are existing EPA air quality monitoring sites in cities throughout the country. Heat monitoring efforts could be tested by placing temperature sensors in the same locations.

- EPA and NOAA should help determine vulnerable communities most impacted by extreme heat. Utilizing EPA’s Environmental Justice Screening and Mapping (EJScreen) Tool and NIHHIS’s Heat Vulnerability Mapping Tool, EPA and NOAA could determine where to place air temperature monitors, as the largest burden due to extreme heat tends to occur in neighborhoods with the lowest economic status.

- NOAA should develop additional air temperature sensors. NOAA’s summer UHI campaign programs highlight the agency’s ability to create sensors that capture temperature data. Given their expertise in capturing meteorological conditions, NOAA should develop national air temperature sensors that can withstand various weather conditions.

- NOAA should build data infrastructure capable of supporting real-time monitoring. Through NIHHIS, the data obtained from the monitoring network could be updated in real time and made publicly available. These data could also be merged with the current vulnerability mapping tool and HeatRisk to examine extreme heat impacts at finer spatial scales.

Successful implementation of these recommendations would result in a wealth of air temperature data, making it possible to monitor extreme heat at the neighborhood level in cities throughout the United States. These data can serve as a foundation for developing extreme heat forecasting models, which would enable governing bodies to develop and execute response plans in a timely fashion. In addition, the publicly available data from these monitoring networks will allow local, state, and tribal officials, as well as academic and non-academic researchers, to better understand the disproportionate impacts of extreme heat. This insight can support the development of targeted, location-specific mitigation and response efforts.
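As a rough illustration of how tract-level sensor data could expose the intraurban variability that a single central station misses, the sketch below averages readings per census tract and compares each tract with a central station value. It is only a minimal example under stated assumptions: the function names, tract identifiers, and temperature values are hypothetical and are not drawn from any EPA or NOAA system.

```python
from collections import defaultdict
from statistics import mean


def tract_heat_summary(readings, central_station_temp_f):
    """Summarize hypothetical sensor readings by census tract.

    readings: iterable of (tract_id, temp_f) pairs (assumed format).
    central_station_temp_f: a single central-station temperature in °F.
    Returns {tract_id: {"mean_f": ..., "offset_f": ...}}, where offset_f is
    the tract mean minus the central-station temperature.
    """
    by_tract = defaultdict(list)
    for tract_id, temp_f in readings:
        by_tract[tract_id].append(temp_f)

    summary = {}
    for tract_id, temps in by_tract.items():
        tract_mean = mean(temps)
        summary[tract_id] = {
            "mean_f": tract_mean,
            "offset_f": tract_mean - central_station_temp_f,
        }
    return summary


def hottest_tracts(summary, n=1):
    """Return the n tract IDs with the largest positive offset."""
    ranked = sorted(summary, key=lambda t: summary[t]["offset_f"], reverse=True)
    return ranked[:n]


# Made-up readings for illustration only.
readings = [
    ("tract_A", 98.0), ("tract_A", 100.0),
    ("tract_B", 93.0), ("tract_B", 95.0),
    ("tract_C", 96.0),
]
summary = tract_heat_summary(readings, central_station_temp_f=95.0)
print(hottest_tracts(summary, n=2))
```

In practice, a ranking like this would likely be combined with vulnerability indicators, such as those in EJScreen or the Heat Vulnerability Mapping Tool, before deciding where to target mitigation efforts.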

Conclusion

As temperatures continue to rise in the United States, so do the risks of heat-related hazards, morbidity, and mortality. This is especially true for cities, where the effects of extreme heat are most prevalent. A cross-agency extreme-heat monitoring network can support the development of equitable heat mitigation and disaster preparedness efforts in major cities throughout the country.

This idea of merit originated from our Extreme Heat Ideas Challenge. Scientific and technical experts across disciplines worked with FAS to develop potential solutions in various realms: infrastructure and the built environment, workforce safety and development, public health, food security and resilience, emergency planning and response, and data indices.

Urban heat islands are urbanized regions experiencing higher temperatures compared to nearby rural areas. Heat waves—also known as extreme heat events—are persistent periods of unusually hot weather lasting more than two days. Research has found, however, that urban heat islands and heat waves have a synergistic relationship.

Nationwide, more than 1,300 annual deaths are estimated to be attributable to extreme heat. This number is likely an undercount, as medical records do not regularly include the impact of heat when describing the cause of death.

The Missing Data for Systemic Improvements to U.S. Public School Facilities

Peter Drucker famously said, “You can’t improve what you don’t measure.” Data on facilities helps public schools to make equitable decisions, prevent environmental health risks, ensure regular maintenance, and conduct long-term planning. Publicly available data increases transparency and accountability, resulting in more informed decision making and quality analysis. Across the U.S., public schools lack the resources to track their facilities and operations, resulting in missed opportunities to ensure equitable access to high quality learning environments. As public schools face increasing challenges to infrastructure, such as climate change, this data gap becomes more pronounced.

Why do we need data on school facilities?

School facilities affect student health and learning. The conditions of a school building directly impact the health and learning outcomes of students. The COVID-19 pandemic brought the importance of indoor air quality into the public consciousness. Many other chronic diseases are exacerbated by inadequate facilities, causing absenteeism and learning loss. From asthma to obesity to lead poisoning, the condition of the places where children spend their time impacts their health, wellbeing, and ability to learn. Better data on the physical environment helps us understand the conditions that hinder student learning.

School buildings are a source of emissions and environmental impacts. The U.S. Energy Information Administration reports that schools annually spend $8 billion on energy and emit an estimated 72 million metric tons of carbon dioxide. While the energy use intensity of school buildings is not itself that high when compared with other sectors, there are notable trends, such as education being the largest consumer of natural gas. The public school bus fleet is the largest mass transit system in the U.S. As of 2023, only 1-2% of the country’s estimated nearly 500,000 school buses are electric.

Data provides accountability for public investment. After highways, elementary and secondary education infrastructure is the leading public capital outlay expenditure nationwide (2021 Census). Most funds to maintain school facilities come from local and state tax sources. Considering the sizable taxpayer investment, relatively little is known about the condition of these facilities. Some state governments have no school facilities staff or funding to help manage or improve school facilities. The 2021 State of Our Schools Report, the leading resource on school facilities data, uses fiscal data to highlight the issues in school facilities. This report found that there is an $85 billion annual school facilities infrastructure funding gap, meaning that, according to industry standards for both capital investment and maintenance, schools are funded $85 billion less than what is required for upkeep. Consistent with these findings, the U.S. Government Accountability Office conducted research on common school facilities issues and found that, in 2020, 50% of districts needed to replace or update multiple essential building systems such as HVAC or plumbing.

What data do we need?

Despite the clear connections between students’ health, learning, and the condition of school buildings, there are no standardized national data sets that assist school leaders and policy makers in making informed and strategic decisions to systematically improve facilities to support health and learning.

Some examples of data points school facilities advocates want more of include:

- Long-term planning

  - The number of school districts that have adopted Facilities Master Plans or similar long-range planning tools, and their funding allocations

  - Emergency planning and standards for declaring school closures, especially for climate-related emergencies such as extreme heat and wildfire smoke

- Facilities footprint and condition

  - Landscape and scoping datasets such as total number of facilities, square footage of buildings, acres of land managed, number and type of leased facilities

  - Condition reports, including dates of last construction, repairs, and maintenance compliance checks

  - Prioritization of repairs, resource usage (especially energy and water), construction practices and costs, waste from construction and/or ongoing operations

- Industry standards

  - Indoor environmental quality information such as the percent of classrooms meeting industry ventilation standards

  - Adoption rates of climate resiliency strategies

  - Board policies, greenhouse gas emissions reductions, funds dedicated to sustainability or energy staff positions, certifications and awards, and more.

Getting strategic and accessible with facilities data

Gathering this type of data represents a significant challenge for schools that are already overburdened and lack the administrative support for facilities maintenance and operations. By supporting the best available facilities research methods and facilities conditions standards, and by dedicating resources to long-term planning, we can ensure that data collection is undertaken equitably. Some strategies that bear these challenges in mind are:

Incorporate facilities into existing data collection and increase data linkages in integrated, high-quality data centers like the National Center for Education Statistics. School leaders should provide key facilities metrics through the same mechanisms by which they report other education statistics. Creating data linkages allows users to make connections using existing data.

Building capacity ensures that there are staff and support systems in place to effectively gather and process school facilities data. There are more federal funds than ever before offered for building the capacity of schools to improve facilities conditions. For instance, the U.S. Department of Education recently launched the Supporting America’s School Infrastructure grant program, aimed at developing the ability of state departments of education to address facilities matters.

Research how school facilities are connected to environmental justice to better understand how resources could be most equitably distributed. Ten Strands and UndauntedK12 are piloting a framework which looks at pollution burden indicators and school adoption of environmental and climate action. We can support policies and fund research that looks at this intersection and makes these connections more transparent.

The connection between school facilities and student health and learning outcomes is clear. What we need now are the resources to effectively collect more data on school facilities that can be used by policy makers and school leaders to plan, improve learning conditions, and provide accountability to the public.


It’s Time to Move Towards Movement as Medicine

For over 10 years, physical inactivity has been recognized as a global pandemic with widespread health, economic, and social impacts. Despite the wealth of research support for movement as medicine, financial and environmental barriers limit the implementation of physical activity intervention and prevention efforts. The need to translate research findings into policies that promote physical activity has never been higher, as the aging population in the U.S. and worldwide is expected to increase the prevalence of chronic medical conditions, many of which can be prevented or treated with physical activity. Action at the federal and local level is needed to promote health across the lifespan through movement.

Research Clearly Shows the Benefits of Movement for Health

Movement is one of the most important keys to health. Exercise benefits heart health and physical functioning, such as muscle strength, flexibility, and balance. But many people are unaware that physical activity is closely tied to the health conditions they fear most. Of the top five health conditions that people reported being afraid of in a recent survey conducted by the Centers for Disease Control and Prevention (CDC), the risk for four—cancer, Alzheimer’s disease, heart disease, and stroke—is increased by physical inactivity. It’s not only physical health that is impacted by movement, but also mental health and other aspects of brain health. Research shows exercise is effective in treating and preventing mental health conditions such as depression and anxiety, rates of which have skyrocketed in recent years, now impacting nearly one-third of adults in the U.S. Physical fitness also directly impacts the brain itself, for example, by boosting its ability to regenerate after injury and improving memory and cognitive functioning. The scientific evidence is clear: Movement, whether through structured exercise or general physical activity in everyday life, has a major impact on the health of individuals and as a result, on the health of societies.

Movement Is Not Just about Weight, It’s about Overall Lifelong Health

There is increasing recognition that movement is important for more than weight loss, which was the primary focus in the past. Overall health and stress relief are often cited as motivations for exercise, in addition to weight loss and physical appearance. This shift in perspective reflects the growing scientific evidence that physical activity is essential for overall physical and mental health. Research also shows that physical activity is not only an important component of physical and mental health treatment, but it can also help prevent disease, injury, and disability and lower the risk for premature death. The focus on prevention is particularly important for conditions such as Alzheimer’s disease and other types of dementia that have no known cure. A prevention mindset requires a lifespan perspective, as physical activity and other healthy lifestyle behaviors such as good nutrition earlier in life impact health later in life.

Despite the Research, Americans Are Not Moving Enough

Even with so much data linking movement to better health outcomes, the U.S. is part of what has been described as a global pandemic of physical inactivity. Results of a national survey by the CDC published in 2022 found that 25.3% of Americans reported that outside of their regular job, they had not participated in any physical activity in the previous month, such as walking, golfing, or gardening. Rates of physical inactivity were even higher in Black and Hispanic adults, at 30% and 32%, respectively. Another survey highlighted rural-urban differences in the number of Americans who meet CDC physical activity guidelines that recommend ≥ 150 minutes per week of moderate-intensity aerobic exercise and ≥ 2 days per week of muscle-strengthening exercise. Respondents in large metropolitan areas were most active, yet only 27.8% met both aerobic and muscle strengthening guidelines. Even fewer people (16.1%) in non-metropolitan areas met the guidelines.

Why are so many Americans sedentary? The COVID-19 pandemic certainly exacerbated the problem; however, data from 2010 showed similar rates of physical inactivity, suggesting long-standing patterns of sedentary behavior in the country. Some of the barriers to physical activity are internal to the individual, such as lack of time, motivation, or energy. But other barriers are societal, at both the community and federal level. At the community level, barriers include transportation, affordability, lack of available programs, and limited access to high-quality facilities. Many of these barriers disproportionately impact communities of color and people with low income, who are more likely to live in environments that limit physical activity due to factors such as accessibility of parks, sidewalks, and recreation facilities; traffic; crime; and pollution. Action at the state and federal government level could address many of these environmental barriers, as well as financial barriers that limit access to exercise facilities and programs.

Physical Inactivity Takes a Toll on the Healthcare System and the Economy

Aside from a moral responsibility to promote the health of its citizens, the government has a financial stake in promoting movement in American society. According to recent analyses, inactive lifestyles cost the U.S. economy an estimated $28 billion each year due to medical expenses and lost productivity. Physical inactivity is directly related to the non-communicable diseases that place the highest burden on the economy, such as hypertension, heart disease, and obesity. In 2016, these types of modifiable risk factors comprised 27% of total healthcare spending. These costs are mostly driven by older adults, which highlights the increasing urgency to address physical inactivity as the population ages. Physical activity is also related to healthcare costs at an individual level, with savings ranging from 9% to 26.6% for physically active people, even after accounting for increased costs due to longevity and injuries related to physical activity. Analysis of 2012 data from the Agency for Healthcare Research and Quality’s Medical Expenditure Panel Survey (MEPS) found that each year, people who met World Health Organization aerobic exercise guidelines, which correspond with CDC guidelines, paid on average $2,500 less in healthcare expenses related to heart disease alone compared to people who did not meet the recommended activity levels. Changes are needed at the federal, state, and local levels to promote movement as medicine. If changes are not made in physical activity patterns by 2030, it is estimated that an additional $301.8 billion of direct healthcare costs will be incurred.

Government Agencies Can Play a Role in Better Promoting Physical Activity Programs

Promoting physical activity in the community requires education, resources, and removal of barriers in order for programs to have a broad reach to all citizens, including communities that are disproportionately impacted by the pandemic of physical inactivity. Integrated efforts from multiple agencies within the federal government are essential.

Past initiatives have met with varying levels of success. For example, Let’s Move!, a campaign initiated by First Lady Michelle Obama in 2010, sought to address the problem of childhood obesity by increasing physical activity and healthy eating, among other strategies. The Food and Drug Administration, Department of Agriculture, Department of Health and Human Services including the Centers for Disease Control and Prevention, and Department of the Interior were among the federal agencies that collaborated with state and local government, schools, advocacy groups, community-based organizations, and private sector companies. The program helped improve the healthy food landscape, increased opportunities for children to be more physically active, and supported healthier lifestyles at the community level. However, overall rates of childhood obesity have remained constant or even increased in some age brackets since the program started, and there is no evidence of an overall increase in physical activity level in children and adolescents since that time.

More recently, the U.S. Office of Disease Prevention and Health Promotion’s Healthy People 2030 campaign established data-driven national objectives to improve the health and well-being of Americans. The campaign was led by the Federal Interagency Workgroup, which includes representatives across several federal agencies including the U.S. Department of Health and Human Services, the U.S. Department of Agriculture, and the U.S. Department of Education. One of the campaign’s leading health indicators—a small subset of high-priority objectives—is increasing the number of adults who meet current minimum guidelines for aerobic physical activity and muscle-strengthening activity from 25.2% in 2020 to 29.7% by 2030. There are also movement-related objectives focused on children and adolescents as well as older adults, for example:

- Reducing the proportion of adults who do no physical activity in their free time

- Increasing the proportion of children, adolescents, and adults who do enough aerobic physical activity, muscle-strengthening activity, or both

- Increasing the proportion of child care centers where children aged 3 to 5 years do at least 60 minutes of physical activity a day

- Increasing the proportion of adolescents and adults who walk or bike to get places

- Increasing the proportion of children and adolescents who play sports

- Increasing the proportion of older adults with physical or cognitive health problems who get physical activity

- Increasing the proportion of worksites that offer an employee physical activity program

Unfortunately, there is currently no evidence of improvement in any of these objectives. All of the objectives related to physical activity with available follow-up data either show little or no detectable change, or they are getting worse.

To make progress towards the physical activity goals established by the Healthy People 2030 campaign, it will be important to identify where breakdowns in communication and implementation may have occurred, whether it be between federal agencies, between federal and local organizations, or between local organizations and citizens. Challenges brought on by the COVID-19 pandemic (e.g., less movement outside of the house for people who now work from home) will also need to be addressed, with the recognition that many of these challenges will likely persist for years to come. Critically, financial barriers should be reduced in a variety of ways, including more expansive coverage by the Centers for Medicare & Medicaid Services for exercise interventions as well as exercise for prevention. Policies that reflect a recognition of movement as medicine have the potential to improve the physical and mental health of Americans and address health inequities, all while boosting the health of the economy.

Opening Up Scientific Enterprise to Public Participation

This article was written as part of the Future of Open Science Policy project, a partnership between the Federation of American Scientists, the Center for Open Science, and the Wilson Center. This project aims to crowdsource innovative policy proposals that chart a course for the next decade of federal open science. To read the other articles in the series, and to submit a policy idea of your own, please visit the project page.

For decades, communities have had little access to scientific information despite paying for it with their tax dollars. The August 2022 Office of Science and Technology Policy (OSTP) memorandum thus catalyzed transformative change by requiring all federally funded research to be made publicly available by the end of 2025. Implementation of the memo has been supported by OSTP’s “Year of Open Science”, which is coordinating actions across the federal government to advance open access research. Access, though, is only the first step to building a more responsive, equitable research ecosystem. A more recent memorandum from the Office of Management and Budget (OMB) and OSTP outlining research and development (R&D) policy priorities for fiscal year (FY) 2025 called on federal agencies to address long-standing inequities by broadening public participation in R&D. This is a critical demand signal for solutions that ensure that federally funded research delivers for the American people.

Public engagement researchers have long been documenting the importance of partnerships with key local stakeholders — such as local government and community-based organizations — in realizing the full breadth of participation with a given community. The lived experience of community members can be an invaluable asset to the scientific process, informing and even shaping research questions, data collection, and interpretation of results. Public participation can also benefit the scientific enterprise by realizing active translation and implementation of research findings, helping to return essential public benefits from the $170 billion invested in R&D each year.

The current reality is that many local governments and community-based organizations do not have the opportunities, incentives, or capacity to engage effectively in federally-funded scientific research. For example, Headwaters Economics found that a significant proportion of communities in the United States do not have the staffing, resources, or expertise to apply to receive and manage federal funding. Additionally, community-based organizations (CBOs) — the groups that are most connected to people facing problems that science could be activated to solve, such as health inequities and environmental injustices — face similar capacity barriers, especially around compliance with federal grants regulations and reporting obligations. Few research funds exist to facilitate the building and maintenance of strong relationships with CBOs and communities, or to provide capacity-building financing to ensure their full participation. Thus, relationships between communities and academia, companies, and the federal government often consume those communities’ time and resources without much return on their investment.

Great participatory science exists, if we know where to look

Place-based investments in regional innovation and research and development (R&D) unlocked by the CHIPS and Science Act (i.e., the Economic Development Administration’s (EDA) Tech Hubs and the National Science Foundation’s (NSF) Regional Innovation Engines and Convergence Accelerator) are starting to provide transformative opportunities to build local research capacity in an equitable manner. What these programs will need are the incentives, standards, requirements, and programmatic ideas to institutionalize equitable research partnerships.

Models of partnership have been established between community organizations, academic institutions, and/or the federal government focused on equitable relationships to generate evidence and innovations that advance community needs.

An example of an academic-community partnership is the Healthy Flint Research Coordinating Center (HFRCC). The HFRCC evaluates and must approve all research conducted in Flint, Michigan. HFRCC designs proposed studies that would align better with community concerns and context and ensures that benefits flow directly back to the community. Health equity is assessed holistically: considering the economic, environmental, behavioral, and physical health of residents. Finally, all work done in Flint is made open access through this organization. From these efforts we learn that communities can play a vital role in defining problems to solve and ensuring the research will be done with equity in mind.

An example of a federal agency-community partnership is the Environmental Protection Agency’s (EPA) Participatory Science Initiative. Through citizen science processes, the EPA has enabled data collection of under-monitored areas to identify climate-related and environmental issues that require both technical and policy solutions. The EPA helps to facilitate these citizen-science initiatives through providing resources on the best air monitoring equipment and how to then visualize field data. These initiatives specifically empower low-income and minority communities who face greater environmental hazards, but often lack power and agency to vocalize concerns.

Finally, communities themselves can be the generators of research projects, initially without a partner organization. In response to the lack of innovation in diabetic care management, Type 1 diabetic patients founded openAPS. This open source effort spurred the creation of an overnight, closed loop artificial pancreas system to reduce disease burden and save lives. Decentralized deployment to over 2,700 individuals has generated 63 million hours of real-world “closed-loop” data, with the results of prospective trials and randomized controlled trials (RCTs) showing fewer highs and less severe lows, i.e., greater quality of life. Thus, this innovation is now ripe for federal investment and partnership for it to reach a further critical scale.

Scaling participatory science requires infrastructure

Participatory science and innovation is still an emerging field. Yet effective models for building participation infrastructure within scientific research enterprises have emerged over the past 20 years, strengthening the community engagement capacity of research institutions. Participatory research infrastructure (PRI) could take the form of the following:

- Offices that develop tools for interfacing with communities, like citizen’s juries, online platforms, deliberative forums, and future-thinking workshops.

- Ongoing technology assessment projects to holistically evaluate innovation and research along dimensions of equity, trust, access, etc.

- Infrastructure (physical and digital) for research, design experimentation, and open innovation led by community members.

- Organized stakeholder networks for co-creation and community-driven citizen science.

- Funding resources to build CBO capacity to meaningfully engage (examples including the RADx-UP program from the NIH and Civic Innovation Challenge from NSF).

- Governance structures with community members in decision-making roles and requirements that CBOs help to shape the direction of the research proposals.

- Peer-review committees staffed by members of the public, demonstrated recently by NSF’s Regional Innovation Engines.

- Coalitions that utilize research as an input for collective action and making policy and governance decisions to advance communities’ goals.

Call to action

The responsibility of federally-funded scientific research is to serve the public good. And yet, because there are so few interventions that have been scaled, participatory science will remain a “nice to have” versus an imperative for the scientific enterprise. To bring participatory science into the mainstream, there will need to be creative policy solutions that create incentive mechanisms, standards, funding streams, training ecosystems, assessment mechanisms, and organizational capacity for participatory science. To meet this moment, we need a broader set of voices contributing ideas on this aspect of open science and countless others. That is why we recently launched an Open Science Policy Sprint, in partnership with the Center for Open Science and the Wilson Center. If you have ideas for federal actions that can help the U.S. meet and exceed its open science goals, we encourage you to submit your proposals here.

Towards a Well-Being Economy: Establishing an American Mental Wealth Observatory

Summary

Countries are facing dynamic, multidimensional, and interconnected crises. The pandemic, climate change, rising economic inequalities, food and energy insecurity, political polarization, increasing prevalence of youth mental and substance use disorders, and misinformation are converging, with enormous sociopolitical and economic consequences that are weakening democracies, corroding the social fabric of communities, and threatening social stability and national security. Globalization and digitalization are synchronizing, amplifying, and accelerating these crises globally by facilitating the rapid spread of disinformation through social media platforms, enabling the swift transmission of infectious diseases across borders, exacerbating environmental degradation through increased consumption and production, and intensifying economic inequalities as digital advancements reshape job markets and access to opportunities.

Systemic action is needed to address these interconnected threats to American well-being.

A pathway to addressing these issues lies in transitioning to a Well-Being Economy, one that better aligns and balances the interests of collective well-being and social prosperity with traditional economic and commercial interests. This paradigm shift encompasses a ‘Mental Wealth’ approach to national progress, recognizing that sustainable national prosperity encompasses more than just economic growth and instead elevates and integrates social prosperity and inclusivity with economic prosperity. To embark on this transformative journey, we propose establishing an American Mental Wealth Observatory, a translational research entity that will provide the capacity to quantify and track the nation’s Mental Wealth and generate the transdisciplinary science needed to empower decision makers to achieve multisystem resilience, social and economic stability, and sustainable, inclusive national prosperity.

Challenge and Opportunity

America is facing challenges that pose significant threats to economic security and social stability. Income and wealth inequalities have risen significantly over the last 40 years, with the top 10% of the population capturing 45.5% of the total income and 70.7% of the total wealth of the nation in 2020. Loneliness, isolation, and lack of connection are a public health crisis affecting nearly half of adults in the U.S. In addition to increasing the risk of premature mortality, loneliness is associated with a three-fold greater risk of dementia.

Gun-related suicides and homicides have risen sharply over the last decade. Mental disorders are highly prevalent. Currently, more than 32% of adults and 47% of young people (18–29 years) report experiencing symptoms of anxiety and depression. The COVID-19 pandemic compounded the burden, with a 25–30% upsurge in the prevalence of depressive and anxiety disorders. America is experiencing a social deterioration that threatens its continued prosperity, as evidenced by escalating hate crimes, racial tensions, conflicts, and deepening political polarization.

To reverse these alarming trends in America and globally, policymakers must first acknowledge that these problems are interconnected and cannot effectively be tackled in isolation. For example, despite the tireless efforts of prominent stakeholder groups and policymakers, the burden of mental disorders persists, with no substantial reduction in global burden since the 1990s. This lack of progress is evident even in high-income countries where investments in and access to mental health care have increased.

Strengthening or reforming mental health systems, developing more effective models of care, addressing workforce capacity challenges, leveraging technology for scalability, and advancing pharmaceuticals are all vital for enhancing recovery rates among individuals grappling with mental health and substance use issues. However, policymakers must also better understand the root causes of these challenges so we can reshape the economic and social environments that give rise to common mental disorders.

Understanding and Addressing the Root Causes

Prevention research and action often focus on understanding and addressing the social determinants of health and well-being. However, this approach lacks focus. For example, traditional analytic approaches have delivered an extensive array of social determinants of mental health and well-being, which are presented to policymakers as imperatives for investment. These include (but are not limited to):

- Adverse early life exposures (abuse and neglect)

- Substance misuse

- Domestic, family, and community violence

- Unemployment

- Poverty and inequality

- Poor education quality

- Homelessness

- Social disconnection

- Food insecurity

- Pollution

- Natural disasters and climate change

This practice is replicated across other public health and social challenges, such as obesity, child health and development, and specific infectious and chronic diseases. Long lists of social determinants lobbied for investment lead policymakers to conclude that nations simply can’t afford to invest sufficiently to solve these health and social challenges.

However, it is likely that many of these determinants and challenges are merely symptoms of a more systemic problem. Therefore, treating the ongoing symptoms only perpetuates a cycle of temporary relief, diverts resources away from nurturing innovation, and impedes genuine progress.

To create environments that foster mental health and well-being, where children can thrive and fulfill their potential, where people can pursue meaningful vocation and feel connected and supported to give back to communities, and where Americans can live a healthy, active, and purposeful life, policymakers must recognize that human flourishing and the prosperity of nations depend on a delicate balance of interconnected systems.

The Rise of Gross Domestic Product: An Imperfect Measure for Assessing the Success and Wealth of Nations

To understand the roots of our current challenges, we need to look at the history of the foundational economic metric, gross domestic product (GDP). While the concept of GDP had been established decades earlier, it was during a 1960 meeting of the Organization for Economic Co-operation and Development that economic growth became a primary ambition of nations. In the shadow of two world wars and the Great Depression, member countries pledged to achieve the highest sustainable economic growth, employment, efficiency, and development of the world economy as their top priority (Articles 1 & 2).

GDP growth became the definitive measure of a government’s economic management and its people’s welfare. Over subsequent decades, economists and governments worldwide designed policies and implemented reforms aimed at maximizing economic efficiency and optimizing macroeconomic structures to ensure consistent GDP growth. The belief was that by optimizing the economic system, prosperity could be achieved for all, allowing governments to afford investments in other crucial areas. However, prioritizing the optimization of one system above all others can have unintended consequences, destabilizing interconnected systems and leading to a host of symptoms we currently recognize as the social determinants of health.

As a result of the relentless focus on optimizing processes, streamlining resources, and maximizing worker productivity and output, our health, social, political, and environmental systems are fragile and deteriorating. By neglecting the necessary buffers, redundancies, and adaptive capacities that foster resilience, organizations and nations have unwittingly left themselves exposed to shocks and disruptions. Americans face a multitude of interconnected crises, which will profoundly impact life expectancy, healthy development and aging, social stability, individual and collective well-being, and our very ability to respond resiliently to global threats. Prioritizing economic growth has led to neglect and destabilization of other vital systems critical to human flourishing.

Shifting Paradigms: Building the Nation’s Mental Wealth

The system of national accounts that underpins the calculation of GDP is a significant human achievement, providing a global standard for measuring economic activity. It has evolved over time to encompass a wider range of activities based on what is considered productive to an economy. As recently as 1993, finance was deemed “explicitly productive” and included in GDP. More recently, Biden-Harris Administration leaders have advanced guidance for accounting for ecosystem services in benefit-cost analyses for regulatory decision-making and a roadmap for natural capital inclusion in the nation’s economic accounting services. This shows the potential to expand what counts as beneficial to the American economy—and what should be measured as a part of economic growth.

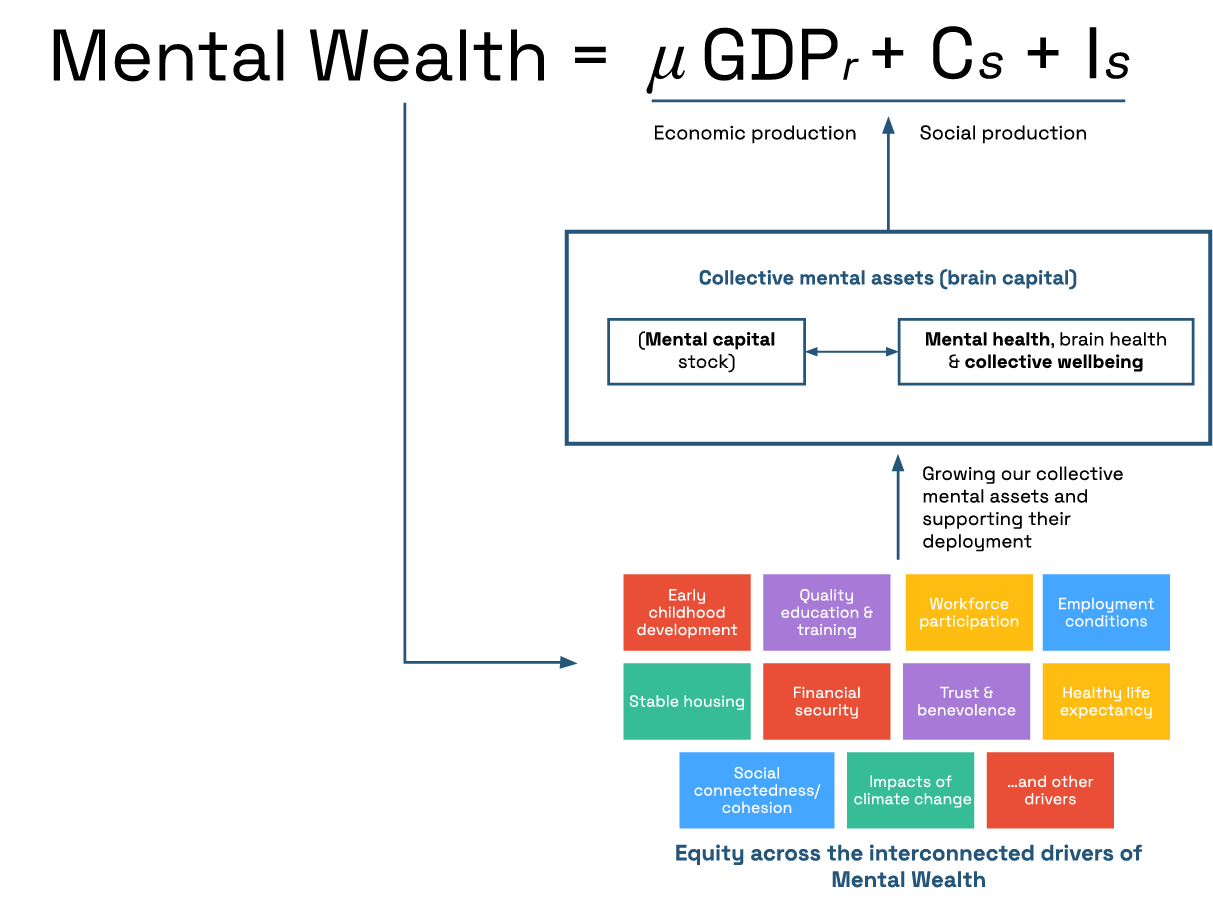

While many alternative indices and indicators of well-being and national prosperity have been proposed, such as the genuine progress indicator, the vast majority of policy decisions and priorities remain focused on growing GDP. Further, these metrics often fail to recognize the inherent value of the system of national accounts that GDP is based on. To account for this, Mental Wealth is a measure that expands the inputs of GDP to include well-being indicators. In addition to economic production metrics, Mental Wealth includes both unpaid activities that contribute to the social fabric of nations and social investments that build community resilience. These unpaid activities (Figure 1, social contributions, Cs) include volunteering, caregiving, civic participation, environmental restoration, and stewardship, and are collectively called social production. Mental Wealth also includes the sum of investment in community infrastructure that enables engagement in socially productive activities (Figure 1, social investment, Is). This more holistic indicator of national prosperity provides an opportunity to shift policy priorities towards greater balance between the economy and broader societal goals and is a measure of the strength of a Well-Being Economy.

Mental Wealth is a more comprehensive measure of national prosperity that monetizes the value generated by a nation’s economic and social productivity.

Valuing social production also promotes a more inclusive narrative of a contributing life, and it helps to rebalance societal focus from individual self-interest to collective responsibilities. A recent report suggests that, in 2021, Americans contributed more than $2.293 trillion in social production, equating to 9.8% of GDP that year. Yet social production is significantly underestimated due to data gaps. More data collection is needed to analyze the extent and trends of social production, estimate the nation’s Mental Wealth, and assess the impact of policies on the balance between social and economic production.
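The arithmetic behind these figures can be sketched in a few lines. The sketch below is illustrative only: it uses the two numbers quoted above ($2.293 trillion and 9.8% of GDP), backs out the GDP figure they imply, and adds the two together under the simplifying assumption that Mental Wealth is GDP plus social production. The function names are hypothetical, and the social investment term (Is in Figure 1) is left out because no value for it is given here.

```python
# Figures quoted in the text for 2021.
SOCIAL_PRODUCTION_USD = 2.293e12  # estimated value of unpaid social production
SHARE_OF_GDP = 0.098              # social production as a share of GDP


def implied_gdp(social_production: float, share_of_gdp: float) -> float:
    """Back out the GDP figure implied by the two quoted numbers."""
    return social_production / share_of_gdp


def mental_wealth(gdp: float, social_production: float) -> float:
    """Assumed simplification: Mental Wealth = GDP + social production.

    Social investment (Is in Figure 1) is omitted because the text
    gives no dollar figure for it.
    """
    return gdp + social_production


gdp = implied_gdp(SOCIAL_PRODUCTION_USD, SHARE_OF_GDP)
mw = mental_wealth(gdp, SOCIAL_PRODUCTION_USD)

print(f"Implied 2021 GDP: ${gdp / 1e12:.1f} trillion")
print(f"Illustrative Mental Wealth: ${mw / 1e12:.1f} trillion")
```

Because social production is described as significantly underestimated, any total computed this way should be read as a lower bound rather than an official estimate.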

Unlocking Policy Insights through Systems Modeling and Simulation

Systems modeling plays a vital role in the transition to a Well-Being Economy by providing an understanding of the complex interdependencies between economic, social, environmental, and health systems, and guiding policy actions. Systems modeling brings together expertise in mathematics, biostatistics, social science, psychology, economics, and more, with disparate datasets and best available evidence across multiple disciplines, to better understand which policies across which sectors will deliver the greatest benefits to the economy and society in balance. Simulation allows policymakers to anticipate the impacts of different policies, identify strategic leverage points, assess trade-offs and synergies, and make more informed decisions in pursuit of a Well-Being Economy. Forecasting and future projections are a long-standing staple activity of infectious disease epidemiologists, business and economic strategists, and government agencies such as the National Oceanic and Atmospheric Administration, geared towards preparing the nation for the economic realities of climate change.

Plan of Action

An American Mental Wealth Observatory to Support Transition to a Well-Being Economy

Given the social deterioration that is threatening America’s resilience, stability, and sustainable economic prosperity, the federal government must systemically redress the imbalance by establishing a framework that privileges an inclusive, holistic, and balanced approach to development. The government should invest in an American Mental Wealth Observatory (Table 1) as critical infrastructure to guide this transition. The Observatory will report regularly on the strength of the Well-Being Economy as a part of economic reporting (see Table 1, Stream 1); generate the transdisciplinary science needed to inform systemic reforms and coordinated policies that optimize economic, environmental, health and social sectors in balance such as adding Mental Wealth to the system of national accounts (Streams 2–4); and engage in the communication and diplomacy needed to achieve national and international cooperation in transitioning to a Well-Being Economy (Streams 5–6).

This transformative endeavor demands the combined instruments of science, policy, politics, public resolve, social legislation, and international cooperation. It recognizes the interconnectedness of systems and the importance of a systemic and balanced approach to social and economic development in order to build equitable long-term resilience, a current federal interagency priority. The Observatory will make better use of available data from across multiple sectors to provide evidence-based analysis, guidance, and advice. The Observatory will bring together leading scientists (across disciplines of economics, social science, implementation science, psychology, mathematics, biostatistics, business, and complex systems science), policy experts, and industry partners through public-private partnerships to rapidly develop tools, technologies, and insights to inform policy and planning at national, state, and local levels. Importantly, the Observatory will also build coalitions between key cross-sectoral stakeholders and seek mandates for change at national and international levels.

The American Mental Wealth Observatory should be chartered by the National Science and Technology Council, building off the work of the White House Report on Mental Health Research Priorities. Federal partners should include, at a minimum, the Department of Health and Human Services (HHS) Office of the Assistant Secretary for Health (OASH), specifically the Office of the Surgeon General (OSG) and Office of Disease Prevention and Health Promotion (ODPHP); the Substance Abuse and Mental Health Services Administration (SAMHSA); the Office of Management and Budget; the Council of Economic Advisers (CEA); and the Department of Commerce (DOC), alongside strong research capacity provided by the National Science Foundation (NSF) and the National Institutes of Health (NIH).

Operationalizing the American Mental Wealth Observatory will require an annual investment of $12 million from diverse sources, including government appropriations, private foundations, and philanthropy. This funding would be used to implement a comprehensive range of priority initiatives spanning the six streams of activity (Table 2) coordinated by the American Mental Wealth Observatory leadership. Acknowledging the critical role of brain capital in upholding America’s prosperity and security, this investment offers considerable returns for the American people.

Conclusion

America stands at a pivotal moment, facing the aftermath of a pandemic, a pressing crisis in youth mental and substance use disorders, and a growing sense of disconnection and loneliness. The fragility of our health, social, environmental, and political systems has come into sharp focus, and global threats of climate change and generative AI loom large. There is a growing sense that the current path is unsustainable.

After six decades of optimizing the economic system for growth in GDP, Americans are reaching a tipping point where losses due to systemic fragility, disruption, instability, and civil unrest will outweigh the benefits. The United States government and private sector leaders must forge a new path. The models and approaches that guided us through the 20th century are ill-equipped to guide us through the challenges and threats of the 21st century.

This realization presents an extraordinary opportunity to transition to a Well-Being Economy and rebuild the Mental Wealth of the nation. An American Mental Wealth Observatory will provide the data and science capacity to help shape a new generation grounded in enlightened global citizenship, civic-mindedness, and human understanding and equipped with the cognitive, emotional, and social resources to address global challenges with unity, creativity, and resilience.

The University of Sydney’s Mental Wealth Initiative thanks the following organizations for their support in drafting this memo: FAS, OECD, Rice University’s Baker Institute for Public Policy, Boston University School of Public Health, the Brain Capital Alliance, and CSART.

Brain capital is a collective term for brain skills and brain health, which are fundamental drivers of economic and social prosperity. Brain capital comprises (1) brain skills, which includes the ability to think, feel, work together, be creative, and solve complex problems, and (2) brain health, which includes mental health, well-being, and neurological disorders that critically impact the ability to use brain skills effectively, for building and maintaining positive relationships with others, and for resilience against challenges and uncertainties.

Social production is the glue that holds society together. These unpaid social contributions foster community well-being, support our economic productivity, improve environmental wellbeing, and help make us more prosperous and resilient as a nation.

Social production includes volunteering and charity work, educating and caring for children, participating in community groups, and environmental restoration—basically any activity that contributes to the social fabric and community well-being.

Making the value of social production visible helps us track how economic policies are affecting social prosperity and allows governments to act to prevent an erosion of our social fabric. So instead of just measuring our economic well-being through GDP, measuring and reporting social production as well gives us a more holistic picture of our national welfare. The two combined (GDP plus social production) is what we call the overall Mental Wealth of the nation, which is a measure of the strength of a Well-Being Economy.

The Mental Wealth metric extends GDP to include not only the value generated by our economic productivity but also the value of this social productivity. In essence, it is a single measure of the strength of a Well-Being Economy. Without a Mental Wealth assessment, we won’t know how we are tracking overall in transitioning to such an economy.

Furthermore, GDP only includes the value created by those in the labor market. The exclusion of socially productive activities sends a signal that society does not value the contributions made by those not in the formal labor market. Privileging employment as a legitimate social role and indicator of societal integration leads to the structural and social marginalization of the unemployed, older adults, and the disabled, which in turn leads to lower social participation, intergenerational dependence, and the erosion of mental health and well-being.

Well-being frameworks are an important evolution in our journey to understand national prosperity and progress in more holistic terms. Dashboards of 50-80 indicators like those proposed in Australia, Scotland, New Zealand, Iceland, Wales, and Finland include things like health, education, housing, income and wealth distribution, life satisfaction, and more, which help track some important contributors to social well-being.

However, these sorts of dashboards are unlikely to compete with topline economic measures like GDP as a policy focus. Some indicators will go up, some will go down, some will remain steady, so dashboards lack the ability to provide a clear statement of overall progress to drive policy change.

We need an overarching measure. Measurement of the value of social production can be integrated into the system of national accounts so that we can regularly report on the nation’s overall economic and social well-being (or Mental Wealth). Mental Wealth provides a dynamic measure of the strength (and good management) of a Well-Being Economy. By adopting Mental Wealth as an overarching indicator, we also gain an improved understanding of the interdependence of a healthy economy and a healthy society.

Tilling the Federal SOIL for Transformative R&D: The Solution Oriented Innovation Liaison

Summary

The federal government is increasingly embracing Advanced Research Projects Agencies (ARPAs) and other transformative research and engagement enterprises (TREEs) to connect innovators and create the breakthroughs needed to solve complex problems. Our innovation ecosystem needs more of these TREEs, especially for societal challenges that have not historically benefited from solution-oriented research and development. And because the challenges we face are so interwoven, we want them to work and grow together in a solution-oriented mode.

The National Science Foundation (NSF)’s new Directorate for Technology, Innovation and Partnerships should establish a new Office of the Solution-Oriented Innovation Liaison (SOIL) to help TREEs share knowledge about complementary initiatives, establish a community of practice among breakthrough innovators, and seed a culture for exploring new models of research and development within the federal government. The SOIL would have two primary goals: (1) provide data, information, and knowledge-sharing services across existing TREEs; and (2) explore opportunities to pilot R&D models of the future and embed breakthrough innovation models in underleveraged agencies.

Challenge and Opportunity

Climate change. Food security. Social justice. There is no shortage of complex challenges before us—all intersecting, all demanding civil action, and all waiting for us to share knowledge. Such challenges remain intractable because they are broader than the particular mental models that any one individual or organization holds. To develop solutions, we need science that is more connected to social needs and to other ways of knowing. Our problem is not a deficit of scientific capital. It is a deficit of connection.

Connectivity is what defines a growing number of approaches to the public administration of science and technology, alternatively labeled as transformative innovation, mission-oriented innovation, or solutions R&D. Connectivity is what makes DARPA, IARPA, and ARPA-E work, and it is why new ARPAs are being created for health and proposed for infrastructure, labor, and education. Connectivity is also a common element among an explosion of emerging R&D models, including Focused Research Organizations (FROs) and Distributed Autonomous Organizations (DAOs). And connectivity is the purpose of NSF’s new Directorate for Technology, Innovation and Partnerships (TIP), which includes “fostering innovation ecosystems” in its mission. New transformative research and engagement enterprises (TREEs) could be especially valuable in research domains at the margins, where “the benefits of innovation do not simply trickle down.”

The history of ARPAs and other TREEs shows that solutions R&D is successfully conducted by entities that combine both research and engagement. If grown carefully, such organisms bear fruit. So why just plant one here or there when we could grow an entire forest? The metaphor is apt. To grow an innovation ecosystem, we must intentionally sow the seeds of TREEs, nurture their growth, and cultivate symbiotic relationships—all while giving each the space to thrive.

Plan of Action

NSF’s TIP directorate should create a new Office of the Solution-Oriented Innovation Liaison (SOIL) to foster a thriving community of TREEs. SOIL would have two primary goals: (1) nurture more TREEs of more varieties in more mission spaces; and (2) facilitate more symbiosis among TREEs of increasing number and variety.

Goal 1: More TREEs of more varieties in more mission spaces

SOIL would shepherd the creation of TREEs wherever they are needed, whether in a federal department, a state or local agency, or in the private, nonprofit, or academic sectors. Key to this is codifying the lessons of successful TREEs and translating them to new contexts. Not all such knowledge is codifiable; much is tacit. As such, SOIL would draw upon a cadre of research-management specialists who have a deep familiarity with different organizational forms (e.g., ARPAs, FROs, DAOs) and could work with the leaders of departments, businesses, universities, consortia, etc. to determine which form best suits the need of the entity in question and provide technical assistance in establishment.

An essential part of this work would be helping institutions create mission-appropriate governance models and cultures. Administering TREEs is neither easy nor typical. Indeed, the very fact that they are managed differently from normal R&D programs makes them special. Former DARPA Director Arati Prabhakar has emphasized the importance of such tailored structures to the success of TREEs. To this end, SOIL would also create a Community of Cultivators comprising former TREE leaders, principal investigators (PIs), and staff. Members of this community would provide those seeking to establish new TREEs with guidance during the scoping, launch, and management phases.

SOIL would also provide opportunities for staff at different TREEs to connect with each other and with collective resources. It could, for example, host dedicated liaison officers at agencies (as DARPA has with its service lines) to coordinate access to SOIL resources and other TREEs and support the documentation of lessons learned for broader use. SOIL could also organize periodic TREE conventions for affiliates to discuss strategic directions and possibly set cross-cutting goals. Similar to the SBIR office at the Small Business Administration, SOIL would also report annually to Congress on the state of the TREE system, as well as make policy recommendations.

Goal 2: More symbiosis among TREEs of increasing number and variety

Success for SOIL would be a community of TREEs that is more than the sum of its parts. It is already clear how the defense and intelligence missions of DARPA and IARPA intersect. There are also energy programs at DARPA that might benefit from deeper engagement with programs at ARPA-E. In the future, transportation-infrastructure programs at ARPA-E could work alongside similar programs at an ARPA for infrastructure. Fostering stronger connections between entities with overlapping missions would minimize redundant efforts and yield shared platform technologies that enable sector-specific advances.

Indeed, symbiotic relationships could spawn untold possibilities. What if researchers across different TREEs could build knowledge together? Exchange findings, data, algorithms, and ideas? Co-create shared models of complex phenomena and put competing models to the test against evidence? Collaborate across projects, and with stakeholders, to develop and apply digital technologies as well as practices to govern their use? A common digital infrastructure and virtual research commons would enable faster, more reliable production (and reproduction) of research across domains. This is the logic underlying the Center for Open Science and the National Secure Data Service.

To this end, SOIL should build a digital Mycelial Network (MyNet), a common virtual space that would harness the cognitive diversity across TREEs for more robust knowledge and tools. MyNet would offer a set of digital services and resources that could be accessed by TREE managers, staff, and PIs. Its most basic function could be to depict the ecosystem of challenges and solutions, search for partners, and deconflict programs. Once partnerships are made, higher-level functions would include secure data sharing, co-creation of solutions, and semantic interconnection. MyNet could replace the current multitude of ad hoc, sector-specific systems for sharing research resources, giving more researchers access to more knowledge about complex systems and fewer obstacles from paywalls. And the larger the network, the bigger the network effects. If the MyNet infrastructure proves successful for TREEs, it could ultimately be expanded more broadly to all research institutions—just as ARPAnet expanded into the public internet.

For users, MyNet would have three layers:

- A data layer for archive and access

- An information layer for analysis and synthesis

- A knowledge layer for creating meaning in terms of problems and solutions

These functions would collectively require:

- Physical structures: The facilities, equipment, and workforce for data storage, routing, and cloud computing

- Virtual structures: The applications and digital environments for sharing data, algorithms, text, and other media, as well as for remote collaboration in virtual laboratories and discourse across professional networks

- Institutional structures: The practices and conventions to promote a robust research enterprise, prohibit dangerous behavior, and enforce community data and information standards.

How might MyNet be applied? Consider three hypothetical programs, all focused on microplastics: a medical program that maps how microplastics are metabolized and impact health; a food-security program that maps how microplastics flow through food webs and supply chains; and a social justice program that maps which communities produce and consume microplastics. In the data layer, researchers at the three programs could combine data on health records, supply logistics, food inspections, municipal records, and demographics. In the information layer, they might collaborate on coding and evaluating quantitative models. Finally, in the knowledge layer, they could work together to validate claims regarding who is impacted, how much, and by what means.
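To make the three layers concrete, the microplastics scenario can be sketched in a few lines of Python. This is only an illustrative sketch, not a design for MyNet: the class and function names (`DataLayer`, `exposure_index`, `supports_claim`), the field names, and the numeric values are all hypothetical placeholders invented for the example, and the "model" is a deliberately trivial stand-in for the quantitative models the programs would actually build.

```python
from dataclasses import dataclass, field
from statistics import mean


@dataclass
class DataLayer:
    """Data layer (archive and access): holds rows deposited by each program."""
    records: dict[str, list[dict]] = field(default_factory=dict)

    def deposit(self, program: str, rows: list[dict]) -> None:
        # Each program contributes its own rows under its own name.
        self.records.setdefault(program, []).extend(rows)

    def combined(self, key: str) -> dict[str, dict]:
        # Merge rows from all programs that share the same value for `key`.
        merged: dict[str, dict] = {}
        for rows in self.records.values():
            for row in rows:
                merged.setdefault(row[key], {}).update(row)
        return merged


def exposure_index(row: dict) -> float:
    """Information layer: a toy model that combines two programs' variables."""
    return row["dietary_intake"] * row["local_production"]


def supports_claim(scores: dict[str, float], community: str) -> bool:
    """Knowledge layer: test the claim 'this community scores above the mean'."""
    return scores[community] > mean(scores.values())


# Hypothetical placeholder values, not real measurements.
layer = DataLayer()
layer.deposit("food_security", [
    {"community": "A", "dietary_intake": 2.0},
    {"community": "B", "dietary_intake": 1.0},
])
layer.deposit("social_justice", [
    {"community": "A", "local_production": 3.0},
    {"community": "B", "local_production": 1.0},
])

merged = layer.combined("community")
scores = {name: exposure_index(row) for name, row in merged.items()}
for name in scores:
    print(name, scores[name], supports_claim(scores, name))
```

The point of the sketch is the division of labor: programs deposit data independently, the combined view is built on a shared key, and a claim is checked against evidence that no single program holds by itself.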

Initial Steps

First, Congress should authorize and appropriate $500 million over four years to the NSF TIP Directorate for a new Office of the Solution-Oriented Innovation Liaison. Congress should view SOIL as an opportunity to create a shared service among emergent, transformative federal R&D efforts that will empower, rather than bureaucratically stifle, the scientific and technological advances we need most. This mission fits squarely under the NSF TIP Directorate’s mandate to “mobilize the collective power of the nation” by serving as “a crosscutting platform that collaboratively integrates with NSF’s existing directorates and fosters partnerships—with government, industry, nonprofits, civil society and communities of practice—to leverage, energize and rapidly bring to society use-inspired research and innovation.”

Once funding is authorized and appropriated to begin intentionally growing a network of TREEs, NSF’s TIP Directorate should focus on a four-year plan for SOIL. TIP should begin by choosing an appropriate leader for SOIL, such as a former director or directorate manager of an ARPA (or other TREE). SOIL would be tasked with first engaging the management of existing ARPAs in the federal government, such as those at the Departments of Defense and Energy, to form an advisory board. The advisory board would in turn guide the creation of experience-informed operating procedures for SOIL to use to establish and aid new TREEs. These might include discussions geared toward arriving at best practices and mechanisms to operate rapid solutions-focused R&D programs for the following functions:

- Hiring services for temporary employees and program managers, pipelines to technical expertise, and consensus on out-of-government pay scales

- Rapid contracting toolkits to acquire key technology inputs from foreign and domestic suppliers

- Research funding structures that enable program managers to make use of multiple kinds of research dollars in the same project, in a coordinated fashion, managed by one entity, and without needing to engage different parts of different agencies

- Early procurement for demonstration, such that mature technologies and systems can transition smoothly into operational use in the home agency or other application space

- The right vehicles (e.g., FFRDCs) for SOIL to subcontract with to pursue support structures on each of these functions

- The ability to define multiyear programs, portfolios, and governance structures, and execute them at their own pace, off-cycle from the budget of their home agencies

Beyond these structural aspects, the board must also incorporate important cultural aspects of TREEs into best practices. In my own research into the managerial heuristics that guide TREEs, I found that managers must be encouraged to “drive change” (critique the status quo, dream big, take action), “be better” (embrace difference, attract excellence, stand out from the crowd), “herd nerds” (focus the creative talent of scientists and engineers), “gather support” (forge relationships with research conductors and potential adversaries), “try and err” (take diverse approaches, expect to fail, learn from failure), and “make it matter” (direct activities to realize outcomes for society, not for science).

The board would also recommend a governance structure and implementation strategy for MyNet. In its first year, SOIL could also start to grow the Community of Cultivators, potentially starting with members of the advisory board. The board chair, in partnership with the White House Office of Science and Technology Policy, would also convene an initial series of interagency working groups (IWGs) focused on establishing a community of practice around TREEs, including but not limited to representatives from the following R&D agencies, offices, and programs:

- DARPA

- ARPA-E

- IARPA

- NASA

- National Institutes of Health

- National Institute of Standards and Technology

In years two and three, SOIL would focus on growing three to five new TREEs at organizations that have not had solutions-oriented innovation programs before but need them.

- If a potential TREE opportunity is found at another agency, SOIL should collaborate with the agency’s R&D teams to identify how the TREE might be pursued and consult the advisory board on the new mission space and its potential similarities and differences to existing TREEs. If there is a clear analogue to an existing TREE, SOIL should use programmatic dollars to detail one or two technical experts for a one-year appointment to the new agency’s R&D teams to explore how to build the new TREE.

- If a potential TREE opportunity is found at a government-adjacent or external organization such as a new Focused Research Organization created around a priority NSF domain, SOIL should leverage programmatic dollars to provide needed seed funding for the organization to pursue near-term milestones. SOIL should then recommend to the TIP Directorate leadership the outcomes of these near-term pilot supports and whether the newly created organization should receive funds to scale. SOIL may also consider convening a round of aligned philanthropic and private funders interested in funding new TREEs.

- If the opportunity concerns an existing TREE, there should be a memorandum of understanding (MOU) and/or request-for-funding process by which the TREE may apply for off-cycle funding with approval from the host agency.

SOIL would also start to build a pilot version of MyNet as a resource for these new TREEs, with a goal of including existing ARPAs and other TREEs as quickly as possible. In establishing MyNet, SOIL should focus on implementing the most appropriate system of data governance by first understanding the nature of the collaborative activities intended. Digital research collaborations can apply and mix a range of different governance patterns, with different amounts of availability and freedoms with respect to digital resources. MyNet should be flexible enough to meet a range of needs for openness and security. To this end, SOIL should coordinate with the recently created National Secure Data Service and apply lessons forward in creating an accessible, secure, and ethical information-sharing environment.

Year four and beyond would be characterized by scaling up. Building on the lessons learned in the prior two years of pilot programs, SOIL would coordinate with new and legacy TREEs to refresh operating procedures and governance structures. It would then work with an even broader set of organizations to increase the number of TREEs beyond the three to five pilots and continue to build out MyNet as well as the Community of Cultivators. Periodic evaluations of SOIL’s programmatic success would shape its evolution after this point. These should be framed in terms of its capacity to create and support programs that yield meaningful technological and socioeconomic outcomes, not just produce traditional research metrics. As such, in its creation of new TREEs, SOIL should apply a major lesson of the National Academies’ evaluation of ARPA-E: explicitly align the (necessarily) robust performance management systems at the project level with strategy and evaluation systems at the program, portfolio, and agency levels. The long-term viability of SOIL and TREEs will depend on their ability to demonstrate value to the public.

The transformative research model typically works like this:

- Engage with stakeholders to understand their needs and set audacious goals for addressing them.

- Establish lean projects run by teams of diverse experts assembled just long enough to succeed or fail in one approach.

- Continuously evaluate projects, build on what works, kill what doesn’t, and repeat as necessary.

In a nutshell, transformative research enterprises exist solely to solve a particular problem, rather than to grow a program or amass a stock of scientific capital.