Using Targeted Industrial Policy to Address National Security Implications of Chinese Chips

Last year the Federation of American Scientists (FAS), Jordan Schneider (of ChinaTalk), Chris Miller (author of Chip War), and Noah Smith (of Noahpinion) hosted a call for ideas to address the U.S. chip shortage and Chinese competition. A handful of ideas were selected based on their feasibility and bipartisan nature. This memo is one of them.

In recent years, China has heavily subsidized its legacy chip manufacturing capabilities. Although U.S. sanctions have restricted China’s access to and ability to develop advanced AI chips, they have done nothing to undermine China’s production of “legacy chips,” which are semiconductors built on process nodes 28nm or larger. It is important to clarify that a “20nm,” “22nm,” “28nm,” or “32nm” lithography process is simply a commercial name for a technology generation and does not correspond to any actual manufacturing dimension, such as the gate length or half pitch. Furthermore, specifications differ from firm to firm. For instance, Intel’s 22nm lithography process uses a 193nm wavelength argon fluoride (ArF) laser with a 90nm gate pitch and a 34nm fin height. These specifications vary between manufacturers such as Intel and TSMC. The prominence of legacy chips makes them a critical technological component in applications as diverse as medical devices, fighter jets, computers, and industrial equipment. Since 2014, state-run funds in China have invested more than $40 billion into legacy chip production to meet the country’s goal of 70% chip self-sufficiency by 2030. Chinese legacy chip dominance, made possible only through the government’s extensive and unfair support, will undermine the position of Western firms and render them less competitive against distorted market dynamics.

Challenge and Opportunity

Growing Chinese capacity and “dumping” will deprive non-Chinese chipmakers of substantial revenue, making it more difficult for these firms to maintain a comparative advantage. China’s profligate industrial policy has damaged global trade equity and threatens to create an asymmetrical market. The ramifications of this economic problem will be felt most in America’s national security rather than by consumers, who will benefit from the low costs of Chinese dumping programs until a hostile monopoly is established. Investors, anticipating an impending global supply glut, are already encouraging U.S. firms to reduce capital expenditures by canceling semiconductor fabs, undermining the nation’s supply chain and self-sufficiency. In some cases, firms have decided to cease manufacturing particular types of chips outright due to profitability concerns and pricing pressures. Granted, chip markets are opaque by design, so pricing data is insufficient to fully define the extent of this phenomenon; however, instances such as Taiwan’s withdrawal from certain chip segments shortly after a price war between China and its competitors in late 2023 indicate the severity of this issue. If they continue, similar price disputes are capable of severely subverting the competitiveness of non-Chinese firms, especially considering that Chinese firms are not subject to the same fiscal constraints as their unsubsidized counterparts. In an industry with such high fixed costs, the Chinese state’s subsidization gives such firms a great advantage and imperils U.S. competitiveness and national security.

Were the U.S. to engage in armed conflict with China, reduced industrial capacity could quickly impede the military’s ability to manufacture weapons and other materials. Critical supply chain disruptions during the COVID-19 pandemic illustrate how the absence of a single chip can hold hostage entire manufacturing processes; if China gains absolute legacy chip manufacturing dominance, these concerns would be further amplified as Chinese firms become able to outright deny American access to critical chips, impose harsh costs through price hikes, or extract diplomatic compromises and quid pro quos. Furthermore, decreased Chinese reliance on Taiwanese semiconductors reduces China’s economic incentive to pursue a diplomatic solution in the Taiwan Strait, making armed conflict in the region more likely. This weakened posture endangers global norms and the balance of power in Asia, undermining American economic and military hegemony in the region.

China’s legacy chip manufacturing is fundamentally an economic problem with national security consequences. The state ought to interfere in the economy only when markets do not operate efficiently and in cases where the conduct of foreign adversaries creates market distortion. While the authors of this brief do not support carte blanche industrial policy to advance the position of American firms, we believe that the Chinese government’s efforts to promote legacy chip manufacturing warrant American intervention to ameliorate the harms those efforts have created. U.S. regulators have forced American companies to grapple with the sourcing problems surrounding Chinese chips; however, the issue with chip control is largely epistemic. It is not clear which firms do and do not use Chinese chips, and even if U.S. regulators knew, there is little political appetite to ban them, as corporations would then have to pass higher costs on to consumers and exacerbate headline inflation. Traditional policy tools for achieving economic objectives, such as sanctions, are therefore largely ineffectual in this circumstance. More innovative solutions are required.

If its government fully commits to the policy, there is little U.S. domestic or foreign policy can do to prevent China from developing chip independence. While American firms can be incentivized to outcompete their Chinese counterparts, America cannot override Chinese political directives to source chips locally. This is true because China lacks the political restraints of Western countries in financially incentivizing production, but also because in the past, under lighter sanctions regimes, China’s Semiconductor Manufacturing International Corporation (SMIC) acquired multiple Advanced Semiconductor Materials Lithography (ASML) deep ultraviolet (DUV) machines. Consequently, any policy that seeks to mitigate the perverse impact of Chinese dominance of the legacy chip market must a) boost the competitiveness of American and allied firms in “third markets” such as Indonesia, Vietnam, and Brazil and b) de-risk America’s supply chain from the market distortions and overreliance that Chinese policies have created. China’s growing global share of legacy chip manufacturing threatens to reshape the global chip landscape in a way that will displace U.S. commercial and security interests. The United States must therefore undertake both defensive and offensive measures to ensure a coordinated response to Chinese disruption.

Plan of Action

Considering the above, we propose the United States enact a policy mutually predicated on innovative technological reform and targeted industrial policy to onshore manufacturing capabilities.

Recommendation 1. Weaponizing electronic design automation

Policymakers must understand that from a lithography perspective, the United States controls all essential technologies when it comes to the design and manufacturing of integrated circuits. This is a critically overlooked dimension in contemporary policy debates because electronic design automation (EDA) software closes the gap between high-level chip design in software and the lithography system itself. Good EDA software simulates a proposed circuit before manufacturing, plans large integrated circuits (ICs) by “bundling” small subcomponents together, and verifies that the design is connected correctly and will deliver the required performance. Although often overlooked, the photolithography process, together with the steps required before it, is as complex as coming up with the design of the chip itself.

No profit-maximizing manufacturer would print a chip “as designed” because it would suffer certain distortions and degradations throughout the printing process; therefore, EDA software is imperative to mitigate imperfections throughout the photolithography process. In much the same way that software within a home-use printer automatically screens for paper material (printer paper vs glossy photo paper) and automatically adjusts the mixture of solvent, resins, and additives to display properly, EDA software learns design kinks and responds dynamically. In the absence of such software, the yield of usable chips would be much lower, making these products less commercially viable. Contemporary public policy discourse focuses only on chips as a commodified product, without recognizing the software ecosystem that is imperative in their design and use.
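The link between yield and commercial viability can be made concrete with simple arithmetic. The sketch below is illustrative only: the function name, the wafer cost, the die count, and the yield figures are all hypothetical values chosen to show the shape of the relationship, not data from any fab.

```python
def cost_per_good_die(wafer_cost: float, dies_per_wafer: int, yield_fraction: float) -> float:
    """Spread the cost of one wafer over only the dies that actually work."""
    if not 0.0 < yield_fraction <= 1.0:
        raise ValueError("yield_fraction must be in (0, 1]")
    good_dies = dies_per_wafer * yield_fraction
    return wafer_cost / good_dies


# Hypothetical inputs, not real fab data.
WAFER_COST = 3000.0
DIES_PER_WAFER = 500

for yield_fraction in (0.9, 0.5):
    cost = cost_per_good_die(WAFER_COST, DIES_PER_WAFER, yield_fraction)
    print(f"yield {yield_fraction:.0%}: {cost:.2f} per good die")
```

Under these assumed numbers, a drop in yield from 90% to 50% raises the cost of each usable chip by 80%, which is the sense in which lower yield makes a product less commercially viable.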

Today, there exist only two major suppliers of EDA software for semiconductor manufacturing: Synopsys and Cadence Design Systems. This reality presents a great opportunity for the United States to assert dominance in the legacy chips space. By hosting all EDA in a U.S.-based cloud (for instance, a data center located in Las Vegas or another secure location), America can force China to purchase the computing power needed for simulation and verification of each chip it designs. This policy would mandate Chinese reliance on U.S. cloud services to run electromagnetic simulations and validate chip design. Under this proposal, China would only be able to use the latest EDA software if such software is hosted in the U.S., allowing American firms to a) cut off access at will, rendering the technology useless, and b) gain insight into homegrown Chinese designs built on this platform. Since such software would be hosted on a U.S.-based cloud, Chinese users would not download the software, which would greatly mitigate the risk of foreign hacking or intellectual property theft. While the United States cannot control chips outright considering Chinese production, it can control where they are integrated. A machine without instructions is inoperable, and the United States can make China’s semiconductors obsolete.

The emergence of machine learning has introduced substantial design innovation in older lithography technologies. For instance, Synopsys has used new technologies to discern the optimal route for wires that link chip circuits, which can factor in all the environmental variables to simulate the patterns a photomask design would project throughout the lithography process. While the 22nm process is not cutting edge, it is legacy only in the sense of its architecture. Advancements in hardware design and software illustrate the dynamism of this facet of the semiconductor supply chain. In extraordinary circumstances, such as a total trade war, the United States could also curtail usage of such software. Weaponizing this proprietary software could compel China to divulge all source code for auditing purposes, since hardware cannot work without a software element.

The United States must also use its allied partnerships to keep critical replacement components from enabling injurious Chinese competition. Software notwithstanding, China currently has the capability to produce 14nm nodes because SMIC acquired multiple ASML DUV machines under lighter Department of Commerce restrictions; however, SMIC relies heavily on chip-making equipment imported from the Netherlands and Japan. While the United States cannot alter the fact of possession, it has the capacity to take limited action against the realization of these tools’ potential by restricting China’s ability to import replacement parts to service these machines, such as the lenses they require to operate. Only the German firm Zeiss has the capability to produce the lenses that ArF lasers require to focus, which illustrates the importance of adopting a regulatory outlook that encompasses all verticals within the supply chain. The utility of controlling critical components is further amplified by the fact that American and European firms have limited ability to enforce copyright laws against Chinese entities. For instance, while different ICs are manufactured on the 22nm process, not all run on a common instruction set such as ARM. However, even if such designs run on a copyrighted instruction set, the United States has no power to enforce domestic copyright law in a Chinese jurisdiction. China’s capability to reverse engineer and replicate Western-designed chips further underscores the importance of controlling 1) the EDA landscape and 2) ancillary components in the chip manufacturing process. This reality presents a tremendous yet overlooked opportunity for the United States to reassert control over China’s legacy chip market.

Recommendation 2. Targeted industrial policy

In the policy discourse surrounding semiconductor manufacturing, this paper contends that too much emphasis has been placed on the chips themselves. It is important to note that there are some areas in which the United States is not commercially competitive with China, such as in the NAND flash memory space. China’s Yangtze Memory Technologies has become a world leader in flash storage and can now manufacture a 232-layer 3D NAND on par with the most sophisticated American and Korean firms, such as Western Digital and Samsung, at a lower cost. However, these shortcomings do not preclude America from asserting dominance over the semiconductor market as a whole by leveraging its dynamic random-access memory (DRAM) dominance, bolstering nearshore NAND manufacturing, and developing critical mineral processing capabilities. Both DRAM and NAND are essential components for any computationally integrated technology.

While the U.S. cannot compete on rote manufacturing prowess because of high labor costs, it would be strategically beneficial to build supply chain redundancies with regard to NAND and rare earth metal processing. China currently processes upwards of 90% of the world’s rare earth metals, which are critical to any type of semiconductor chip. While the U.S. possesses strategic reserves for commodities such as oil, it does not have any meaningful reserve when it comes to rare earth metals, making this a critical national security threat. Should China stop processing rare earth metals for the U.S., the price of any type of semiconductor, in any form factor, would increase dramatically. Furthermore, as a strategic matter, the United States would not have accomplished its national security objectives if it built manufacturing capabilities yet lacked the critical inputs to supply this potential. Therefore, any legacy chips proposal must first establish sufficient rare earth metal processing capabilities or a strategic reserve of these critical resources.

Furthermore, given the advanced status of U.S. technological manufacturing prowess, it makes little sense to outright onshore legacy chip manufacturing capabilities—especially considering high U.S. costs and the substantial diversion of intellectual capital that such efforts would require. Each manufacturer must develop their own manufacturing process from scratch. A modern fab runs 24×7 and has a complicated workflow, with its own technique and software when it comes to lithography. For instance, since their technicians and scientists are highly skilled, TSMC no longer focuses on older generation lithography (i.e., 22nm) because it would be unprofitable for them to do so when they cannot fulfill their demand for 3nm or 4nm. The United States is better off developing its comparative advantage by specializing in cutting-edge chip manufacturing capabilities, as well as research and development initiatives; however, while American expertise remains expensive, America has wholly neglected the potential utility of its southern neighbors in shoring up rare earth metals processing. Developing Latin American metals processing—and legacy chip production—capabilities can mitigate national security threats. Hard drive manufacturers have employed a similar nearshoring approach with great success.

To address both rare earth metals and onshoring concerns, the United States should pursue an economic integration framework with nations in Latin America’s Southern Cone, targeting a partial (or multi-sectoral) free trade agreement with the Southern Common Market (MERCOSUR) bloc. The United States should pursue this policy along two industry fronts: 1) waiving the Common External Tariff for United States petroleum and other fuel exports, which currently represent the largest import group for Latin American members of the bloc, and 2) simultaneously eliminating all trade barriers on American importation of critical minerals, namely arsenic, germanium, and gallium, which are necessary for legacy chip manufacturing. Enacting such an agreement and committing substantial capital to the project over a long-term time horizon would radically increase semiconductor manufacturing capabilities across all verticals of the supply chain. Two mutually inclusive premises underpin this policy’s efficacy:

Firstly, building economic interdependence with a bloc of Latin American states (as opposed to a single nation) serves to diversify risk for the United States; each nation provides different sets and volumes of critical minerals and has competing foreign policy agendas. This reduces the capacity of states to exert meaningful and organized diplomatic pressure on the United States, as supply lines can be swiftly readjusted within the bloc. Moreover, MERCOSUR countries are major energy importers, specifically with regard to Bolivian natural gas and American petroleum. Under an energy-friendly U.S. administration, the effects of this policy would be especially pronounced: low petroleum costs enable the U.S. to subtly reassert its geopolitical sway within its regional sphere of influence, notably in light of newly politically friendly Argentinian and Paraguayan governments. China has been struggling to ratify its own trade accords with the bloc given industry vulnerability; this initiative would further undermine its geopolitical influence in the region. Refocusing critical mineral production within this regional geography would decrease American reliance on Chinese production.

Secondly, nearshoring the semiconductor supply chain would reduce transport costs, decrease American vulnerability to intercontinental disruptions, and mitigate geopolitical reliance on China. Reduced extraction costs in Latin America, minimized transportation expenses, and reduced labor costs, especially in Midwestern and Southern U.S. states, enable America to maintain export competitiveness as a supplier to ASEAN’s booming technology industry in adjacent sectors, which indicates that China will not automatically fill market distortions. Furthermore, the establishment of transcontinental commerce initiatives should be accompanied by investment arbitration procedures compliant with the General Agreement on Tariffs and Trade’s Dispute Settlement Understanding, which would designate the World Trade Organization as the exclusive forum for dispute settlement.

This policy is necessary to guard against corrupt states’ backpedaling on established systems, which has historically impeded corporate involvement in the Southern Cone. This international legal security mechanism serves to assure entrepreneurial inputs that will render cooperation with American enterprises mutually attractive. However, partial free trade accords for primary sector materials are not sufficient to revitalize American industry and shift supply lines. To address the demand side, the exertion of downward pressure on pricing, alongside the reduction of geopolitical risk, should be accompanied by the institution of a state-subsidized low-interest loan, with an available rate reset for approved legacy chip manufacturers, and a special-tier visa for hired personnel working in legacy chip manufacturing. Considering the sensitive national security interests at stake, the U.S. Federal Contractor Registration ought to employ the same awarding mechanisms and security filtering criteria used for federal arms contracts in its company auditing mechanisms. Under this scheme, only vetted and historically capable legacy chip manufacturing firms will be able to take advantage of significant subventions and exceptional “wartime” loans. Two reasons underpin the need for this martial, yet market-oriented industrial policy.

Firstly, legacy chip production requires highly specialized labor and immensely expensive fixed costs given the nature of the accompanying machinery. Without targeted low-interest loans, the significant capital investment required for upgrading and expanding chip manufacturing facilities would be prohibitively high, potentially eroding the competitiveness of American and allied industries in markets that are heavily saturated with Chinese subsidies. Such mechanisms for increased and cheap liquidity also render it easier to import highly specialized talent from China, Taiwan, Germany, the Netherlands, etc., by offering more competitive compensation packages and playing on the attractiveness of the United States lifestyle. This approach would mimic the post-Second World War “Operation Paperclip,” executed on a piecemeal basis at the purview of approved legacy chip suppliers.

Secondly, the investment fluidity that accompanies significant amounts of accessible capital serves to reduce stasis in the research and development of sixth-generation automotive, multi-use legacy chips (in both autonomous and semi-autonomous systems). Much of this improvement a priori occurs through trial-and-error processes within state-of-the-art facilities under the long-term commitment of manufacturing, research, and operations teams.

Acknowledging the strategic importance of centralizing, de-risking, and reducing reliance on foreign suppliers will safeguard the economic stability, national defense capabilities, and innovative flair of the United States, restoring the national will and capacity to produce on its own shores. The national security ramifications of Chinese legacy chip manufacturing are predominantly downstream of their economic consequences, particularly vis-à-vis the integrity of American defense manufacturing supply chains. By implementing the aforementioned solutions and moving chip manufacturing to closer and friendlier locales, American firms can be well positioned to compete globally against Chinese counterparts and supply the U.S. military with ample chips in the event of armed conflict.

In 2023, the Wall Street Journal exposed the fragility of American supply chain resilience when it profiled how one manufacturing accident took offline 100% of the United States’ production capability for black powder, a critical component of mortar shells, artillery rounds, and Tomahawk missiles. This incident illustrates how much risk a consolidated supply chain can pose to national security and the importance of mitigating overreliance on China for critical components. As firms desire lower prices for their chips, ensuring adequate capacity is a significant component of a successful strategy to address China’s growing global share of legacy chip manufacturing. However, there are additional national security concerns for legacy chip manufacturing that supersede their economic significance; mitigating supply chain vulnerabilities is among the most consequential of these considerations.

Lastly, when there are substantial national security objectives at stake, the state is justified in acting independently of economic considerations; markets are sustained only by the imposition of binding and common rules. Some have argued that the possibility of cyber sabotage and espionage through military applications of Chinese chip technology warrants accelerating the timeline of procurement restrictions. Section 5949 of the National Defense Authorization Act for Fiscal Year 2023 prohibits the procurement of China-sourced chips from 2027 onwards. Furthermore, the Federal Communications Commission has the power to restrict China-linked semiconductors in U.S. critical infrastructure under the Secure Networks Act, and the U.S. Department of Commerce reserves the right to restrict China-sourced semiconductors if they pose a threat to critical communications and information technology infrastructure.

However, Matt Blaze’s 1994 article “Protocol Failure in the Escrowed Encryption Standard” exposed the shortcomings of supposed hardware backdoors, such as the “Clipper chip” that the NSA designed in the 1990s to surveil users. In the absence of functional software, a Chinese-designed hardware backdoor into sensitive applications could not function. This scenario would be much like a printer trying to operate without an ink cartridge. Therefore, instead of outright banning inexpensive Chinese chips and putting American firms at a competitive disadvantage, the federal government should require Chinese firms to release the source code of the firmware and supporting software for the chips they sell to Western companies. This would allow these technologies to be independently built and verified without undermining the competitive position of American industry. The U.S. imposed sanctions against Huawei in 2019 on suspicion of the espionage risks that reliance on Chinese hardware poses. While tighter regulation of Chinese semiconductors in sensitive areas seems to be a natural and pragmatic extension of this logic, it is unnecessary and undermines American dynamism.

Conclusion

Considering China’s growing global share of legacy chip manufacturing as a predominantly economic problem with substantial national security consequences, the American foreign policy establishment ought to pursue 1) a new technological outlook that exploits all facets of the integrated chip supply chain—including EDA software and allied replacement component suppliers—and 2) a partial free-trade agreement with MERCOSUR to further industrial policy objectives.

To curtail Chinese legacy chip dominance, the United States should weaponize its monopoly on electronic design automation software. By effectively forcing Chinese firms to purchase computing services from a U.S.-based cloud, American EDA software firms can audit and monitor Chinese innovations while reserving the ability to deny them service during armed conflict. Restricting allied firms’ ability to supply Chinese manufacturers with ancillary components can likewise slow the pace of Chinese legacy chip ascendance.

Furthermore, although China no longer relies on the United States or allied countries for NAND manufacturing, the United States and its allies maintain DRAM superiority. The United States must leverage these capabilities to maintain Chinese reliance on its DRAM prowess and sustain its competitive edge, while considering restricting the export of this technology for Chinese defense applications under extraordinary circumstances. Simultaneously, efforts to nearshore NAND technologies in South America can delay the pace of Chinese legacy chip ascendance, especially if implemented alongside a strategic decision to reduce reliance on Chinese rare earth metals processing.

In nearshoring critical mineral inputs to preserve national security and reduce costs, the United States should adopt a market-oriented industrial policy of rate-reset, state-subsidized low-interest loans for vetted legacy chip manufacturing firms. Synergy between greater competitiveness, capital solvency, and de-risked supply chains would enable U.S. firms to compete against Chinese counterparts in critical “third markets” and reduce the supply chain vulnerabilities that undermine national security. As subsidy-induced Chinese market distortions weigh less on the commercial landscape, the integrity of American defense capabilities will simultaneously improve, especially if bureaucratic agencies move to further insulate critical U.S. infrastructure against potential cyber espionage.

An “Open Foundational” Chip Design Standard and Buyers’ Group to Create a Strategic Microelectronics Reserve

Last year the Federation of American Scientists (FAS), Jordan Schneider (of ChinaTalk), Chris Miller (author of Chip War), and Noah Smith (of Noahpinion) hosted a call for ideas to address the U.S. chip shortage and Chinese competition. A handful of ideas were selected based on their feasibility and bipartisan nature. This memo is one of them.

Semiconductors are not one industry, but thousands. They range from ultra-high value advanced logic chips like H100s to bulk discrete electronic components and basic integrated circuits (IC). Leading-edge chips are advanced logic, memory, and interconnect devices manufactured in cutting-edge facilities requiring production processes at awe-inspiring precision. Leading-edge chips confer differential capabilities, and “advanced process nodes” enable the highest performance computation and the most compact and energy-efficient devices. This bleeding edge performance is derived from the efficiencies enabled by more densely packed circuit elements in a computer chip. Smaller transistors require lower voltages and more finely packed ones can compute faster.

Devices manufactured with older process nodes, 65nm and above, form the bulk by volume of devices we use. These include discrete electrical components like diodes or transistors, power semiconductors, and low-value integrated circuits such as bulk microcontrollers (MCU). These inexpensive logic chips like MCUs, memory controllers, and clock setters I term “commodity ICs”. While the keystone components in advanced applications are manufactured at the leading edge, older nodes are the table stakes of electrical systems. These devices supply power, switch voltages, transform currents, command actuators, and sense the environment. These devices we’ll collectively term foundational chips, as they provide the platform upon which all electronics rest. And their supply can be a point of failure. The automotive MCU shortage provides a bitter lesson that even the humblest device can throttle production.

Foundational devices themselves do not typically enable differentiating capabilities. In many applications, such as computing or automotive, they simply enable basic functions. These devices are low-cost, low-margin goods, made with a comparatively simpler production process. Unfortunately, a straightforward supply does not equate to a secure one. Foundational chips are manufactured by a small number of firms concentrated in China. This is in part due to long-running industrial policy efforts by the Chinese government, with significant production subsidies. The Chips and Science Act was mainly about innovation and international competitiveness: it aimed to reshore a significant fraction of leading-edge production to the United States in the hope of returning valuable communities of engineering practice (Fuchs & Kirchain, 2010). While these policy goals are vital, foundational chip supply represents a different challenge and must be addressed by other interventions.

The main problem posed by the existing foundational chip supply is resilience. They are manufactured by a few geographically clustered firms and are thus vulnerable to disruption, from geopolitical conflicts (e.g. export controls on these devices) or more quotidian outages such as natural disasters or shipping disruptions.

There is also concern that foreign governments may install hardware backdoors in chips manufactured within their borders, enabling them to deactivate the deployed stock of chips. While this is a meaningful security consideration, it is less applicable to foundational devices, as their low complexity makes such backdoors more challenging. A DoD analysis found mask and wafer production to be the manufacturing process steps most resilient to adversarial interference (Coleman, 2023, p. 36). There already exist “trusted foundry” electronics manufacturers for critical U.S. defense applications concerned about confidentiality; the policy interventions proposed here instead seek to address the vulnerability to a conventional supply disruption. This report will first outline the technical and economic features of foundational chip supply that are barriers to a resilient supply, and then propose policy to address these barriers.

Challenge and Opportunity

Technical characteristics of the manufacture and end-use of foundational microelectronics make supply especially vulnerable to disruption. Commodity logic ICs such as MCUs or memory controllers vary in their clock speed, architecture, number of pins, number of inputs/outputs (I/O), mapping of I/O to pins, package material, circuit board connection, and other design features. Some of these features, like operating temperature range, are key drivers of performance in particular applications. However, most custom features in commodity ICs do not confer differential capability or performance advantages on the final product; the pin count of a microcontroller does not determine the safety or performance of a vehicle. Design lock-in combined with this feature variability results in dramatically reduced short-run substitutability of these devices; while MCUs exist in a commodity-style market, they are not interchangeable without significant redesign efforts. This phenomenon, in which designs are based on specialized components that are not required in the application, is known as over-specification (Smith & Eggert, 2018). As a result, while there are numerous semiconductor manufacturing firms, in practice there may be only a single supplier for a specified foundational component.
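The over-specification effect can be illustrated with a minimal sketch. Everything below is hypothetical: the supplier names, the feature values, and the choice of which features count as functionally required are invented for illustration and are not drawn from any real catalog. The sketch only shows how matching a locked-in design on every feature can leave a single supplier, while matching on the features that drive performance leaves several.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Mcu:
    """A hypothetical microcontroller listing (all values invented)."""
    supplier: str
    pins: int
    clock_mhz: int
    max_temp_c: int


# Hypothetical catalog of commodity MCUs from four suppliers.
CATALOG = [
    Mcu("Supplier A", pins=8, clock_mhz=16, max_temp_c=125),
    Mcu("Supplier B", pins=12, clock_mhz=16, max_temp_c=125),
    Mcu("Supplier C", pins=10, clock_mhz=20, max_temp_c=125),
    Mcu("Supplier D", pins=8, clock_mhz=16, max_temp_c=85),
]


def locked_in_matches(catalog, design):
    """Parts that drop into an existing board: every feature must match."""
    return [
        part for part in catalog
        if (part.pins, part.clock_mhz, part.max_temp_c)
        == (design.pins, design.clock_mhz, design.max_temp_c)
    ]


def functional_matches(catalog, min_clock_mhz, min_temp_c):
    """Parts that meet only the requirements assumed to drive performance."""
    return [
        part for part in catalog
        if part.clock_mhz >= min_clock_mhz and part.max_temp_c >= min_temp_c
    ]


design = CATALOG[0]  # the board was designed around Supplier A's part
exact = locked_in_matches(CATALOG, design)
functional = functional_matches(CATALOG, min_clock_mhz=16, min_temp_c=125)

print("Drop-in suppliers:", [p.supplier for p in exact])
print("Functionally adequate suppliers:", [p.supplier for p in functional])
```

In this toy catalog the locked-in design has one drop-in supplier, while three suppliers meet the assumed functional requirements. A real analysis would need actual part data and a defensible judgment about which features matter in each application.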

These over-specification risks are exacerbated by a lack of value chain visibility. Firms possess little knowledge of their tier 2+ suppliers. The fractal symmetry of this knowledge gap means that even if an individual firm secures robust access to the components they directly use, they may still be exposed to disruption through their suppliers. Value chains are only as strong as their weakest link. Physical characteristics of foundational devices also uncouple them from the leading edge. Many commodity ICs just don’t benefit from classical feature shrinkage; bulk MCUs or low-end PMICs don’t improve in performance with transistor density as their outputs are essentially fixed. Analog devices experience performance penalties at too small a feature scale, with physically larger transistors able to process higher voltages and produce lower sympathetic capacitance. Manufacturing commodity logic ICs using leading-edge logic fabs would be prohibitively expensive and would be actively detrimental to analog device performance. These factors, design over-specification, supply chain opacity, and insulation from leading-edge production, combine to functionally decrease the already narrow supply of legacy chips.

Industrial dynamics impede this supply from becoming more robust without policy intervention. Foundational chips, whether power devices or memory controllers, are low-margin commodity-style products sold in volume. The extreme capital intensity of the industry combined with the low margins on these devices makes supply expansion unattractive for producers, with short-term capital discipline a common argument against supply buildout (Connatser, 2024). The premium firms pay for performance results in significant investment in leading-edge design and production capacity as firms compete for this demand. The commodity environment of foundational devices, in contrast, is challenging to pencil out, as even trailing-edge fabs are highly capital-intensive (Reinhardt, 2022). Chinese production subsidies also impede the expansion of foundational fabs, as they further narrow already low margins. Semiconductor demand is historically cyclical, and producers don’t make investment decisions based on short-run demand signals. These factors make foundational device supply challenging to expand: firms make commodity-style products in capital-intensive facilities, competing with subsidized producers, to meet widely varying demand. Finally, foundational chip supply resilience is a classic positive externality good. No individual firm captures all or even most of the benefit of a more robust supply ecosystem.

Plan of Action

To secure the supply of foundational chips, this memo recommends the development of an “Open Foundational” design standard and buyers’ group. One participant in that buyers’ group will be the U.S. federal government, which would establish a strategic microelectronics reserve to ensure access to critical chips. This reserve would be initially stocked through a multi-year advance market commitment for Open Foundational devices.

The foundational standard would be developed by a voluntary consortium of microelectronics users in critical sectors, inspired by the Open Compute Project. The consortium would ideally contain firms from critical sectors such as enterprise computation, automotive manufacturing, communications infrastructure, and others. The group would initially convene to identify a set of foundational devices that are necessary to their sectors (e.g., system architecture commodity ICs and power devices for computing) and identify design features that don’t significantly impact performance, and thus could be standardized. From these, a design standard could be developed. Firms are typically locked to existing devices for their current designs; one can’t place a 12-pin MCU into a board built for 8. Steering committee firms will thus be asked to commit some fraction of future designs to use Open Foundational microelectronics, ideally on a ramping-up basis. The goal of the standard is not to mandate away valuable features; unique application needs should still be met by specialized devices, such as rad-hardened components in satellites. By adopting a standard platform of commodity chips in future designs, the buyers’ group would represent demand of sufficient scale to motivate investment, and supply would be more robust to disruptions once mature.

Government should adopt the standard where feasible, to build greater resilience in critical systems if nothing else. This should be accompanied by a diplomatic effort for key democratic allies to partner in adopting these design practices in their defense applications. The foundational standard should seek geographic diversity in suppliers, as manufacturing concentrated anywhere represents a point of failure. The foundational standard also allows firms to de-risk their suppliers as well as themselves. They can stipulate in contracts that their tier-one suppliers need to adopt Foundational Standards in their designs, and OEMs who do so can market the associated resilience advantage.

Once the open standard has been developed through the buyers’ group, Congress should authorize the purchase, through the Department of Commerce, of a strategic microelectronics reserve (SMR). Inspired by the strategic petroleum reserve, the microelectronics reserve is intended to provide the backstop foundational hardware for key government and societal operations during a crisis. The composition of the SMR will likely evolve as technologies and applications develop, but at launch, the purchasing authority should commit to a long-term, high-volume purchase of Foundational Standard devices, a policy structure known as an advance market commitment.

Advance market commitments are effective tools to develop supply when there is initial demand uncertainty, clear product specification, and a requirement for market demand to mature (Ransohoff, 2024). The foundational standard provides the product specification, and the advance government commitment provides demand at a duration that should exceed both the product development and fab construction lifecycle, on the order of 5 years or more. This demand should be steady, with regular annual purchases at scale, ensuring producers consistent demand through the ebbs and flows of a volatile industry. If these efforts are successful, the U.S. government will cultivate a more robust and resilient supply ecosystem both for its own core services and for firms and citizens. The SMR could also serve as a backstop when supply fluctuations do occur, as with the strategic petroleum reserve.

The goal of the SMR is not to fully substitute for existing stockpiling efforts, either by firms or by the government for defense applications. Through the expanded supply base for foundational chips enabled by the SMR, and through the increase in substitutability driven by the Foundational Standard, users can concentrate their stockpiling efforts on the chips which confer differentiated capabilities. As resources can be concentrated in more application-specific chips, stockpiling becomes more efficient, enabling more production for the same investment. In the long run, the SMR should likely diversify to include more advanced components such as high-capacity memory, and field-programmable processors. This would ensure government access to core computational capabilities in a disaster or conflict scenario. But as all systems are built on a foundation, the SMR should begin with Foundational Standard devices.

There are potential risks to this approach. The most significant is that this model of foundational chips does not accurately reflect physical reality. Interfirm cooperation in setting and adhering to the standards is conditional on these devices not determining performance. If firms perceive foundational chips as providing a competitive advantage to their system or products, they shall not crucify capability on a cross of standards. Alternatively, each sector may have a basket of foundational devices as we describe, but there may be little to no overlap sector-to-sector. In this case, the sectors representing the largest demand, such as enterprise computing, may be able to develop their own standard, but without resilience spillovers into other applications. These scenarios should be identifiable early in the standard-setting process, before significant physical investment is made. In such cases, the government should explore using fab lines in the national prototyping facility to flexibly manufacture a variety of foundational chips when needed, by developing adaptive production lines and processes. This functionally shifts the policy goal up the value chain, achieving resilience through flexible manufacture of devices rather than flexible end-use.

Value chains may be so opaque that the buyers’ group might fail to identify a vulnerable chip. Potential mitigation strategies include establishing an office of supply mapping within the Department of Commerce and applying a tax penalty to firms that fail to report component flows. Existing subsidized foundational chip supply by China may make virtually any greenfield production uncompetitive. In this case, trade restrictions or a counter-subsidy may be required until the network effects of the Foundational Standard enable long-term viability. We do not want the Foundational Standard to lock in technological stagnation; in fact, we want the opposite. Accordingly, there should be a periodic and iterative review of the devices within the standard and their features. The problems of legacy chips are distinct from those at the technical frontier.

Foundational chips are necessary but not sufficient for modern electronic systems. It was not the System-on-a-Chip components costing hundreds of dollars that brought automotive production to a halt, but the sixteen-cent microcontroller. The technical advances fueled by leading-edge nodes are vital to our long-term competitiveness, but they too rely on legacy devices. We must in parallel fortify the foundation on which our security and dynamism rest.

Blank Checks for Black Boxes: Bring AI Governance to Competitive Grants

The misuse of AI in federally-funded projects can risk public safety and waste taxpayer dollars.

The Trump administration has a pivotal opportunity to spot wasteful spending, promote public trust in AI, and safeguard Americans from unchecked AI decisions. To tackle AI risks in grant spending, grant-making agencies should adopt trustworthy AI practices in their grant competitions and start enforcing them against reckless grantees.

Federal AI spending could soon skyrocket. One ambitious legislative plan from a Senate AI Working Group calls for doubling non-defense AI spending to $32 billion a year by 2026. That funding would grow AI across R&D, cybersecurity, testing infrastructure, and small business support.

Yet as federal AI investment accelerates, safeguards against snake oil lag behind. Grants can be wasted on AI that doesn’t work. Grants can pay for untested AI with unknown risks. Grants can blur the lines of who is accountable for fixing AI’s mistakes. And grants offer little recourse to those affected by an AI system’s flawed decisions. Such failures risk exacerbating public distrust of AI, discouraging possible beneficial uses.

Oversight for federal grant spending is lacking, with:

- No AI-specific policies vetting discretionary grants

- Varying AI expertise on grant judging panels

- Unclear AI quality standards set by grantmaking agencies

- Little-to-no pre- or post-award safeguards that identify and monitor high-risk AI deployments.

Watchdogs, meanwhile, play a losing game, chasing after errant programs one-by-one only after harm has been done. Luckily, momentum is building for reform. Policymakers recognize that investing in untrustworthy AI erodes public trust and stifles genuine innovation. Steps policymakers could take include setting clear AI quality standards, training grant judges, monitoring grantees’ AI usage, and evaluating outcomes to ensure projects achieve their potential. By establishing oversight practices, agencies can foster high-potential projects for economic competitiveness, while protecting the public from harm.

Challenge and Opportunity

Poor AI Oversight Jeopardizes Innovation and Civil Rights

The U.S. government advances public goals in areas like healthcare, research, and social programs by providing various types of federal assistance. This funding can go to state and local governments or directly to organizations, nonprofits, and individuals. When federal agencies award grants, they typically do so expecting less routine involvement than they would with other funding mechanisms, for example cooperative agreements. Not all federal grants look the same—agencies administer mandatory grants, where the authorizing statute determines who receives funding, and competitive grants (or “discretionary grants”), where the agency selects award winners. In competitive grants, agencies have more flexibility to set program-specific conditions and award criteria, which opens opportunities for policymakers to structure how best to direct dollars to innovative projects and mitigate emerging risks.

These competitive grants fall short on AI oversight. Programmatic policy is set in cross-cutting laws, agency-wide policies, and grant-specific rules; a lack of AI oversight mars all three. To date, no government-wide AI regulation extends to AI grantmaking. Even when President Biden’s 2023 AI Executive Order directed agencies to implement responsible AI practices, the order’s implementing policies exempted grant spending (see footnote 25) entirely from the new safeguards. In this vacuum, the 26 grantmaking agencies are on their own to set agency-wide policies. Few have. Agencies can also set AI rules just for specific funding opportunities. They do not. In fact, in a review of a large set of agency discretionary grant programs, only a handful of funding notices announced a standard for AI quality in a proposed program. (See: One Bad NOFO?) The net result? A policy and implementation gap for the use of AI in grant-funded programs.

Funding mistakes damage agency credibility, stifle innovation, and undermine the support for people and communities that financial assistance aims to provide. Recent controversies highlight how today’s lax measures (particularly in setting clear rules for federal financial assistance, monitoring how funds are used, and responding to public feedback) have led to inefficient and rights-trampling results. In just the last few years, some of the problems we have seen include:

- The Department of Housing and Urban Development set few rules on a grant to protect public housing residents, letting officials off the hook when they bought facial recognition cameras to surveil and evict residents.

- Senators called for a pause on predictive policing grants, finding the Department of Justice failed to check that their grantees’ use of AI complied with civil rights laws—the same laws on which the grant awards were conditioned.

- The National Institute of Justice issued a recidivism forecasting challenge which researchers argued “incentivized exploiting the metrics used to judge entrants, leading to the development of trivial solutions that could not realistically work in practice.”

Any grant can attract controversy, and these grants are no exception. But the cases above spotlight transparency, monitoring, and participation deficits—the same kinds of AI oversight problems weakening trust in government that policymakers aim to fix in other contexts.

Smart spending depends on careful planning. Without it, programs may struggle to drive innovation or end up funding AI that infringes people’s rights. OMB, agency Inspectors General, and grant managers will need guidance to evaluate what money is going towards AI and how to implement effective oversight. Government will face tradeoffs and challenges promoting AI innovation in federal grants, particularly due to:

1) The AI screening problem. When reviewing applications, agencies might fail to screen out candidates that exaggerate their AI capabilities, or fail to report bunk AI use altogether. Grantmaking requires calculated risks on ideas that might fail. But grant judges who are not experts in AI can make bad bets. Applicants will pitch AI solutions directly to these non-experts, and grant winners, regardless of their original proposal, will likely purchase and deploy AI, creating additional oversight challenges.

2) The grant-procurement divide. When planning a grant, agencies might set overly burdensome restrictions that dissuade qualified applicants from applying or otherwise take up too much time, getting in the way of grant goals. Grants are meant to be hands-off; fostering breakthroughs while preventing negligence will be a challenging needle to thread.

3) Limited agency capacity. Agencies may be unequipped to monitor grant recipients’ use of AI. After awarding funding, agencies can miss when vetted AI breaks down on launch. While agencies audit grantees, those audits typically focus on fraud and financial missteps. In some cases, agencies may not be measuring grantee performance well at all (slides 12-13). Yet regular monitoring, similar to the oversight used in procurement, will be necessary to catch emergent problems that affect AI outcomes. Enforcement, too, could be cause for concern; agencies claw back funds for procedural issues, but “almost never withhold federal funds when grantees are out of compliance with the substantive requirements of their grant statutes.” Even as the funding agency steps away, an inaccurate AI system can persist, embedding risks over a longer period of time.

Plan of Action

Recommendation 1. OMB and agencies should bake in pre-award scrutiny through uniform requirements and clearer guidelines

- Agencies should revise funding notices to require applicants to disclose plans to use AI, with a greater level of disclosure required for funding in a foreseeable high-risk context. Agencies should take care not to overburden applicants with disclosures on routine AI uses, particularly as the tools grow in popularity. States may be a laboratory to watch for policy innovation; Illinois, for example, has proposed AI disclosure policies and penalties for its state grants.

- Agencies should make AI-related grant policies clearer to prospective applicants. Such a change would be consistent with OMB policy and the latest Uniform Guidance, the set of rules OMB sets for agencies to manage their grants. For example, in grant notices, any AI-related review criteria should be plainly stated, rather than inferred from a project’s description. Any AI restrictions should be spelled out too, not merely incorporated by reference. More generally, agencies should consider simplifying grant notices, publishing yearly AI grant priorities, hosting information sessions, and/or extending public comment periods to significant AI-related discretionary spending.

- Agencies could consider generally-applicable metrics with which to evaluate applicants’ suggested AI uses. For example, agencies may require applicants to demonstrate they have searched for less discriminatory algorithms in the development of an automated system.

- OMB could formally codify pre-award AI risk assessments in the Uniform Guidance, the set of rules OMB sets for agencies to manage their grants. OMB updates its guidance periodically (with the most recent updates in 2024) and can also issue smaller revisions.

- Agencies could also provide resources targeted at less established AI developers, who might otherwise struggle to meet auditing and compliance standards.

Recommendation 2. OMB and grant marketplaces should coordinate information sharing between agencies

To support review of AI-related grants, OMB and grantmaking agency staff should pool knowledge on AI’s tricky legal, policy, and technical matters.

- OMB, through its Council on Federal Financial Assistance, should coordinate information-sharing between grantmaking agencies on AI risks.

- The White House Office of Science and Technology Policy, the National Institute of Standards and Technology, and the Administrative Conference of the United States (ACUS) should support agencies by devising what information agencies should collect on their grantees’ use of AI so as to limit administrative burden on grantees.

- OMB can share expertise on monitoring and managing grant risks, best practices and guides, and tradeoffs; other relevant interagency councils can share grant evaluation criteria and past performance templates across agencies.

- Online grants marketplaces, such as the Grants Quality Service Management Office Marketplace operated by the Department of Health and Human Services, should improve grantmakers’ decisions by sharing AI-specific information like an applicant’s AI quality, audit, and enforcement history, where applicable.

Recommendation 3. Agencies should embrace targeted hiring and talent exchanges for grant review boards

Agencies should have experts in a given AI topic judging grant competitions. To do so requires overcoming talent acquisition challenges. To that end:

- Agency Chief AI Officers should assess grant office needs as part of their OMB-required assessments on AI talent. Those officers should also include grant staff in any AI trainings and, when prudent, agency-wide risk assessment meetings.

- Agencies should staff review boards with technical experts through talent exchanges and targeted hiring.

- OMB should coordinate drop-in technical experts who can sit in as consultants across agencies.

- OMB should support the training of federal grants staff on matters that touch AI, particularly as surveyed grant managers see training as an area of need.

Recommendation 4. Agencies should step up post-award monitoring and enforcement

You can’t improve what you don’t measure—especially when it comes to AI. Quantifying, documenting, and enforcing against careless AI uses can be a new task for grantmaking agencies. Incident reporting will improve the chances that existing cross-cutting regulations, including civil rights laws, can reel back AI gone awry.

- Congress can delegate investigative authority to agencies with AI audit expertise. Such an effort might mirror the cross-agency approach taken by the Department of Justice’s Procurement Collusion Strike Force, which investigates antitrust crimes in procurement and grantmaking.

- Congress can require agencies to cut off funds when grantees show repeated or egregious violations of grant terms pertaining to AI use. Agencies, where authorized, should voluntarily enforce against repeat bad players through spending clawbacks, cutoffs or ban lists.

- Agencies should consider introducing dispute resolution procedures that give redress to grantees subject to enforcement.

Recommendation 5. Agencies should encourage and fund efforts to investigate and measure AI harms

- Agencies should invest in establishing measurement and standards within their topic areas on which to evaluate prospective applicants. For example, the National Institute of Justice recently opened funding to research evaluating the use of AI in the criminal legal system.

- Agencies should follow through on longstanding calls to encourage public whistleblowing on grantee missteps, particularly around AI.

- Agencies should solicit feedback from the public through RFIs on grants.gov on how to innovate in AI in their specific research or topic area.

Conclusion

Little limits how grant winners can spend federal dollars on AI. With the government poised to massively expand its spending on AI, that should change.

The federal failure to oversee AI use in grants erodes public trust, civil rights, effective service delivery, and the promise of government-backed innovation. Congressional efforts to remedy these problems, such as starting probes and drafting letters, are important oversight measures, but they only come after the damage is done.

Both the Trump and Biden administrations have recognized that AI is exceptional and needs exceptional scrutiny. Many of the lessons learned from scrutinizing federal agency AI procurement apply to grant competitions. Today’s confluence of public will, interest, and urgency is a rare opportunity to widen the aperture of AI governance to include grantmaking.

This action-ready policy memo is part of Day One 2025 — our effort to bring forward bold policy ideas, grounded in science and evidence, that can tackle the country’s biggest challenges and bring us closer to the prosperous, equitable and safe future that we all hope for whoever takes office in 2025 and beyond.

PLEASE NOTE (February 2025): Since publication several government websites have been taken offline. We apologize for any broken links to once accessible public data.

Enabling statutes for agencies often are the authority for grant competitions. For grant competitions, the statutory language leaves it to agencies to place further specific policies on the competition. Additionally, laws, like the DATA Act and Federal Grant and Cooperative Agreement Act, offer definitions and guidance to agencies in the use of federal funds.

Agencies already conduct a great deal of pre-award planning to align grantmaking with Executive Orders. For example, in one survey of grantmakers, a little over half of respondents updated their pre-award processes, such as applications and organization information, to comply with an Executive Order. Grantmakers aligning grant planning with the Trump administration’s future Executive Orders will likely follow similar steps.

A wide range of states, local governments, companies, and individuals receive grant competition funds. Spending records, available on USASpending.gov, give some insight into where grant funding goes, though these records, too, can be incomplete.

Fighting Fakes and Liars’ Dividends: We Need To Build a National Digital Content Authentication Technologies Research Ecosystem

The U.S. faces mounting challenges posed by increasingly sophisticated synthetic content: digital media (images, audio, video, and text) produced or manipulated by generative artificial intelligence (AI). Already, there has been a proliferation in the abuse of generative AI technology to weaponize synthetic content for harmful purposes, such as financial fraud, political deepfakes, and the non-consensual creation of intimate materials featuring adults or children. As people become less able to distinguish between what is real and what is fake, it has become easier than ever to be misled by synthetic content, whether by accident or with malicious intent. This makes advancing alternative countermeasures, such as technical solutions, more vital than ever before. To address the growing risks arising from synthetic content misuse, the National Institute of Standards and Technology (NIST) should take the following steps to create and cultivate a robust digital content authentication technologies research ecosystem: 1) establish dedicated university-led national research centers, 2) develop a national synthetic content database, and 3) run and coordinate prize competitions to strengthen technical countermeasures. In turn, these initiatives will require 4) dedicated and sustained Congressional funding. This will enable technical countermeasures to keep closer pace with the rapidly evolving synthetic content threat landscape, maintaining the U.S.’s role as a global leader in responsible, safe, and secure AI.

Challenge and Opportunity

While it is clear that generative AI offers tremendous benefits, such as for scientific research, healthcare, and economic innovation, the technology also poses an accelerating threat to U.S. national interests. Generative AI’s ability to produce highly realistic synthetic content has increasingly enabled its harmful abuse and undermined public trust in digital information. Threat actors have already begun to weaponize synthetic content across a widening scope of damaging activities to growing effect. Losses from AI-enabled fraud are projected to reach up to $40 billion by 2027, while experts estimate that millions of adults and children have already been targeted by AI-generated or manipulated nonconsensual intimate media or child sexual abuse materials, a figure that is anticipated to grow rapidly in the future. While the widely feared prospect of manipulative synthetic content compromising the integrity of the 2024 U.S. election did not ultimately materialize, malicious AI-generated content was nonetheless found to have shaped election discourse and bolstered damaging narratives. Equally concerning is the cumulative effect this increasingly widespread abuse is having on the broader erosion of public trust in the authenticity of all digital information. This degradation of trust has not only led to an alarming trend of authentic content being increasingly dismissed as ‘AI-generated’, but has also empowered those seeking to discredit the truth, a phenomenon known as the “liar’s dividend”.

A. In March 2023, a humorous synthetic image of Pope Francis wearing a Balenciaga coat, first posted on Reddit by creator Pablo Xavier, quickly went viral across social media.

B. In May 2023, this synthetic image was duplicitously published on X as an authentic photograph of an explosion near the Pentagon. Before being debunked by authorities, the image’s widespread circulation online caused significant confusion and even led to a temporary dip in the U.S. stock market.

Research has demonstrated that current generative AI technology is able to produce synthetic content sufficiently realistic that people are now unable to reliably distinguish between AI-generated and authentic media. It is no longer feasible to continue, as we currently do, to rely predominantly on human perception capabilities to protect against the threat arising from increasingly widespread synthetic content misuse. This new reality only increases the urgency of deploying robust alternative countermeasures to protect the integrity of the information ecosystem. The suite of digital content authentication technologies (DCAT), that is, the techniques, tools, and methods that seek to make the legitimacy of digital media transparent to the observer, offers a promising avenue for addressing this challenge. These technologies encompass a range of solutions, from identification techniques such as machine detection and digital forensics to classification and labeling methods like watermarking or cryptographic signatures. DCAT also encompasses technical approaches that aim to record and preserve the origin of digital media, including content provenance, blockchain, and hashing.
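To make two of the approaches named above more concrete, the following is a minimal Python sketch of hashing and of a keyed authentication tag, using only the standard library. It is an illustration under stated assumptions, not a description of any deployed DCAT system: the media bytes and the key are placeholders, and an HMAC (a shared-secret tag) stands in for the public-key cryptographic signatures a real provenance scheme would more likely use.

```python
import hashlib
import hmac


def fingerprint(media_bytes: bytes) -> str:
    """Return a SHA-256 hash of the media, usable as a content fingerprint."""
    return hashlib.sha256(media_bytes).hexdigest()


def sign(media_bytes: bytes, key: bytes) -> str:
    """Return a keyed tag (HMAC-SHA256) binding the media to a secret key.

    Assumption: HMAC is a simplified stand-in for a public-key signature.
    """
    return hmac.new(key, media_bytes, hashlib.sha256).hexdigest()


def verify(media_bytes: bytes, key: bytes, tag: str) -> bool:
    """Check that the media still matches the tag issued at publication."""
    return hmac.compare_digest(sign(media_bytes, key), tag)


# Placeholder values for illustration only.
original = b"example image bytes"
key = b"hypothetical-publisher-key"
tag = sign(original, key)

tampered = original + b"!"  # any change to the bytes, however small

print("original verifies:", verify(original, key, tag))
print("tampered verifies:", verify(tampered, key, tag))
print("fingerprints differ:", fingerprint(original) != fingerprint(tampered))
```

Because any change to the underlying bytes produces a different hash, an exact-match check like this one also fails for benign changes such as re-encoding or resizing.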

Evolution of Synthetic Media

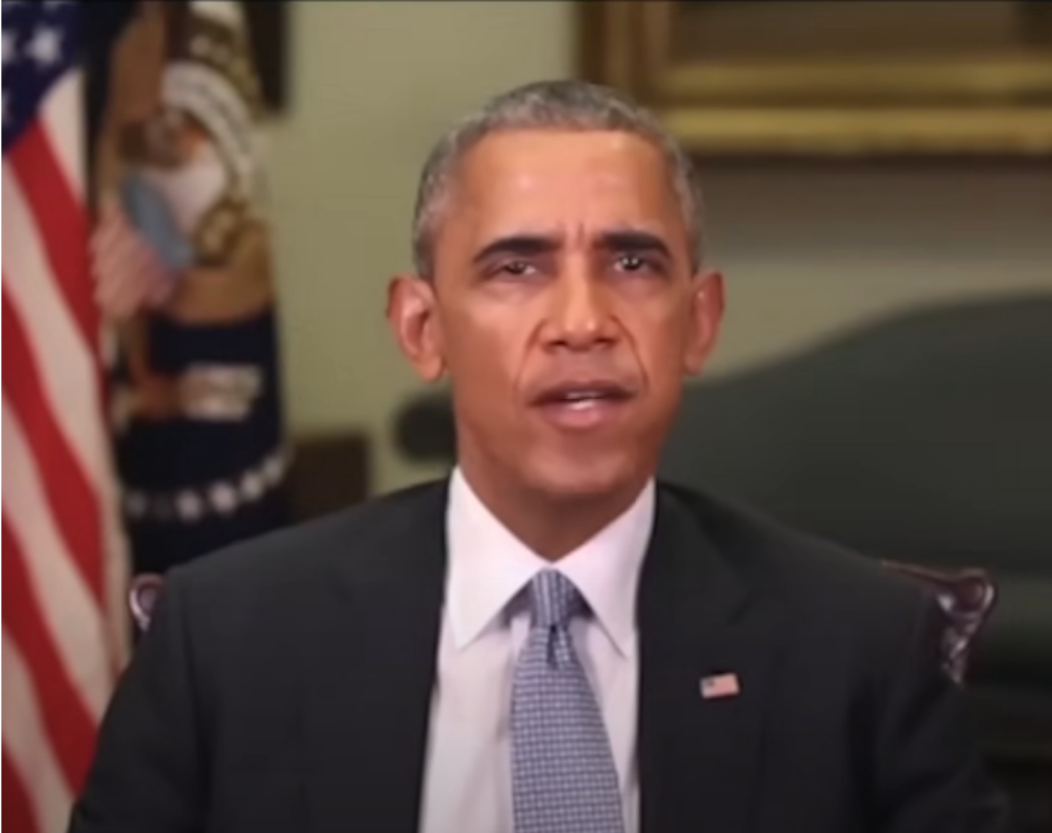

Published in 2018, this now infamous PSA sought to illustrate the dangers of synthetic content. It shows an AI-manipulated video of President Obama, using narration from a comedy sketch by comedian Jordan Peele.

In 2020, a hobbyist creator employed an open-source generative AI model to ‘enhance’ the Hollywood CGI version of Princess Leia in the film Rogue One.

The hugely popular TikTok account @deeptomcruise posts parody videos featuring a Tom Cruise imitator face-swapped with the real Tom Cruise’s face, including this 2022 video, racking up millions of views.

The 2024 film Here relied extensively on generative AI technology to de-age and face-swap actors in real time as they were being filmed.

Robust DCAT capabilities will be indispensable for defending against the harms posed by synthetic content misuse, as well as bolstering public trust in both information systems and AI development. These technical countermeasures will be critical for alleviating the growing burden on citizens, online platforms, and law enforcement to manually authenticate digital content. Moreover, DCAT will be vital for enforcing emerging legislation, including AI labeling requirements and prohibitions on illegal synthetic content. The importance of developing these capabilities is underscored by the ten bills (see Fig 1) currently under Congressional consideration that, if passed, would require the employment of DCAT-relevant tools, techniques, and methods.

However, significant challenges remain. DCAT capabilities need to be improved, as many currently have weaknesses or limitations such as brittleness or security gaps. Moreover, implementing these countermeasures must be carefully managed to avoid unintended consequences in the information ecosystem, such as deploying confusing or ineffective labels to denote the presence of real or fake digital media. As a result, substantial investment is needed in DCAT R&D to develop these technical countermeasures into an effective and reliable defense against synthetic content threats.

The U.S. government has demonstrated its commitment to advancing DCAT to reduce synthetic content risks through recent executive actions and agency initiatives. The 2023 Executive Order on AI (EO 14110) mandated the development of content authentication and tracking tools. Charged by EO 14110 to address these challenges, NIST has taken several steps towards advancing DCAT capabilities. For example, NIST’s recently established AI Safety Institute (AISI) leads this work in partnership with NIST’s AI Innovation Lab (NAIIL). Key developments include: the dedication of one of the U.S. Artificial Intelligence Safety Institute Consortium’s (AISIC) working groups to identifying and advancing DCAT R&D; the publication of NIST AI 100-4, which “examines the existing standards, tools, methods, and practices, as well as the potential development of further science-backed standards and techniques” regarding current and prospective DCAT capabilities; and the $11 million dedicated to international research on addressing dangers arising from synthetic content, announced at the first convening of the International Network of AI Safety Institutes. Additionally, NIST’s Information Technology Laboratory (ITL) has launched the GenAI Challenge Program to evaluate and advance DCAT capabilities. Meanwhile, two pending bills in Congress, the Artificial Intelligence Research, Innovation, and Accountability Act (S. 3312) and the Future of Artificial Intelligence Innovation Act (S. 4178), include provisions for DCAT R&D.

Although these critical first steps have been taken, an ambitious and sustained federal effort is necessary to facilitate the advancement of technical countermeasures such as DCAT. This is necessary to more successfully combat the risks posed by synthetic content—both in the immediate and long-term future. To gain and maintain a competitive edge in the ongoing race between deception and detection, it is vital to establish a robust national research ecosystem that fosters agile, comprehensive, and sustained DCAT R&D.

Plan of Action

NIST should engage in three initiatives: 1) establishing dedicated university-based DCAT research centers, 2) curating and maintaining a shared national database of synthetic content for training and evaluation, and 3) running and overseeing regular federal prize competitions to drive innovation in critical DCAT challenges. The programs, which should be spearheaded by AISI and NAIIL, are critical for enabling the creation of a robust and resilient U.S. DCAT research ecosystem. In addition, the 118th Congress should 4) allocate dedicated funding to support these enterprises.

These recommendations are designed not only to accelerate DCAT capabilities in the immediate future, but also to build a strong foundation for long-term DCAT R&D efforts. As generative AI capabilities expand, authentication technologies must keep pace, meaning that developing and deploying effective technical countermeasures will require ongoing, iterative work. Success demands extensive collaboration across technology and research sectors to expand problem coverage, maximize resources, avoid duplication, and accelerate the development of effective solutions. This coordinated approach is essential given the diverse range of technologies and methodologies that must be considered when addressing synthetic content risks.

Recommendation 1. Establish DCAT Research Institutes

NIST should establish a network of dedicated university-based research centers to scale up and foster long-term, fundamental R&D on DCAT. While headquartered at leading universities, these centers would collaborate with academic, civil society, industry, and government partners, serving as nationwide focal points for DCAT research and bringing together a network of cross-sector expertise. Complementing NIST’s existing initiatives like the GenAI Challenge, the centers’ research priorities would be guided by AISI and NAIIL, with expert input from the AISIC, the International Network of AI Safety Institutes, and other key stakeholders.

A distributed research network offers several strategic advantages. It leverages elite expertise from industry and academia, and having permanent institutions dedicated to DCAT R&D enables the sustained, iterative development of authentication technologies to better keep pace with advancing generative AI capabilities. Meanwhile, central coordination by AISI and NAIIL would also ensure comprehensive coverage of research priorities while minimizing redundant efforts. Such a structure provides the foundation for a robust, long-term research ecosystem essential for developing effective countermeasures against synthetic content threats.

There are multiple pathways via which dedicated DCAT research centers could be stood up. One approach is direct NIST funding and oversight, following the model of Carnegie Mellon University’s AI Cooperative Research Center. Alternatively, centers could be established through the National AI Research Institutes Program, similar to the University of Maryland’s Institute for Trustworthy AI in Law & Society, leveraging NSF’s existing partnership with NIST.

The DCAT research agenda could be structured in two ways. Informed by NIST’s report NIST AI 100-4, a vertical approach could be taken to centers’ research agendas, assigning specific technologies to each center (e.g. digital watermarking, metadata recording, provenance data tracking, or synthetic content detection). Centers would focus on all aspects of a specific technical capability, including: improving the robustness and security of existing countermeasures; developing new techniques to address current limitations; conducting real-world testing and evaluation, especially in a cross-platform environment; and studying interactions with other technical safeguards and non-technical countermeasures like regulations or educational initiatives. Conversely, a horizontal approach might seek to divide research agendas across areas such as: the advancement of multiple established DCAT techniques, tools, and methods; innovation of novel techniques, tools, and methods; testing and evaluation of combined technical approaches in real-world settings; and examining the interaction of multiple technical countermeasures with human factors, such as label perception, and with non-technical countermeasures. While either framework provides a strong foundation for advancing DCAT capabilities, given institutional expertise and practical considerations, a hybrid model combining both approaches is likely the most feasible option.

Recommendation 2. Build and Maintain a National Synthetic Content Database

NIST should also build and maintain a national synthetic content database to advance and accelerate DCAT R&D, similar to existing federal initiatives such as NIST’s National Software Reference Library and NSF’s AI Research Resource pilot. Current DCAT R&D is severely constrained by limited access to diverse, verified, and up-to-date training and testing data. Many researchers, especially in academia, where a significant portion of DCAT research takes place, lack the resources to build and maintain their own datasets. This results in less accurate and more narrowly applicable authentication tools that struggle to keep pace with rapidly advancing AI capabilities.

A centralized database of synthetic and authentic content would accelerate DCAT R&D in several critical ways. First, it would significantly reduce the burden on research teams of generating or collecting synthetic data for training and evaluation, encouraging less well-resourced groups to conduct research and allowing researchers to focus more on other aspects of R&D. This includes providing much-needed resources for the NIST-facilitated university-based research centers and prize competitions proposed here. Moreover, a shared database would be able to provide more comprehensive coverage of the increasingly varied synthetic content being created today, permitting the development of more effective and robust authentication capabilities. The database would also be useful for establishing standardized evaluation metrics for DCAT capabilities, one of NIST’s critical aims for addressing the risks posed by AI technology.

A national database would need to be comprehensive, encompassing samples of both early and state-of-the-art synthetic content. It should include controlled laboratory-generated datasets along with verified “in the wild” (real-world) synthetic content datasets, covering both benign and potentially harmful examples. Also critical to the database’s utility is its diversity: synthetic content should span multiple individual and combined modalities (text, image, audio, video) and feature varied human populations as well as a variety of non-human subject matter. To maintain the database’s relevance as generative AI capabilities continue to evolve, it will also need to routinely incorporate novel synthetic content that accurately reflects improvements in generation quality.

Initially, the database could be built on NIST’s GenAI Challenge project work, which includes “evolving benchmark dataset creation”, but as it scales up, it should operate as a standalone program with dedicated resources. The database could be grown and maintained through dataset contributions by AISIC members, industry partners, and academic institutions that have either generated synthetic content datasets themselves or, as generative AI technology providers, have the ability to create the large-scale and diverse datasets required. NIST would also direct targeted dataset acquisition to address specific gaps and evaluation needs.

Recommendation 3. Run Public Prize Competitions on DCAT Challenges

Third, NIST should set up and run a coordinated prize competition program, while also serving as the federal oversight lead for prize competitions run by other agencies. Building on existing models such as DARPA SemaFor’s AI FORCE and the FTC’s Voice Cloning Challenge, the competitions would address expert-identified priorities as informed by the AISIC, the International Network of AI Safety Institutes, and the proposed national DCAT research centers. Competitions represent a proven approach to spurring innovation for complex technical challenges, enabling the rapid identification of solutions through diverse engagement. Monetary prize competitions are especially successful at ensuring engagement. For example, the 2019 Kaggle Deepfake Detection competition, which had a prize of $1 million, fielded twice as many participants as the 2024 competition, which gave no cash prize.

By providing structured challenges and meaningful incentives, public competitions can accelerate the development of critical DCAT capabilities while building a more robust and diverse research community. Such competitions encourage novel technical approaches and rapid testing of new methods, facilitate the inclusion of new or non-traditional participants, and foster collaborations. The faster cycle and narrower scope of the competitions would also complement the longer-term and broader research being conducted by the national DCAT research centers. Centralized federal oversight would also prevent the implementation gaps that have occurred in past approved federal prize competitions. For instance, the 2020 National Defense Authorization Act (NDAA) authorized a $5 million machine detection/deepfakes prize competition (Sec. 5724), and the 2024 NDAA authorized a “Generative AI Detection and Watermark Competition” (Sec. 1543). However, neither prize competition has been carried out, and the Watermark Competition has now been delayed to 2025. Centralized oversight would also ensure that prize competitions are run consistently to address specific technical challenges raised by expert stakeholders, encouraging more rapid development of relevant technical countermeasures.