Bringing Transparency to Federal R&D Infrastructure Costs

There is an urgent need to manage the escalating costs of federal R&D infrastructure and the increasing risk that failing facilities pose to the scientific missions of the federal research enterprise. Many of the laboratories and research support facilities operating under the federal research umbrella are near or beyond their life expectancy, creating significant safety hazards for federal workers and local communities. Unfortunately, the federal budget process puts agencies in a position where the actual costs of operations are not transparent in their budget requests to OMB and become further obscured by the time they reach appropriators. This can lead to appropriations disasters, including an approximately 60% cut to National Institute of Standards and Technology (NIST) facilities in 2024 after the agency’s challenges became newsworthy. Providing both Congress and OMB with a complete accounting of the actual costs of agency facilities may break the gamification of budget requests and help the government prioritize infrastructure investments.

Challenge and Opportunity

Recent reports by the National Research Council and the National Science and Technology Council, including the congressionally-mandated Quadrennial Science and Technology Review, have highlighted the dire state of federal facilities. Maintenance backlogs have ballooned in recent years, forcing some agencies to shut down research activities in strategic R&D domains including Antarctic research and standards development. At NIST, failing steam pipes, electrical failures, and black mold have led to outages that reduce research productivity by 10 to 40 percent. NASA and NIST have both reported that their maintenance backlogs have grown to exceed $3 billion. The Department of Defense forecasts that bringing its buildings up to modern standards would cost approximately $7 billion, “putting the military at risk of losing its technological superiority.” The shutdown of many Antarctic science operations and the collapse of the Arecibo Observatory stand in stark contrast with the People’s Republic of China opening rival and more capable facilities in both research domains. In the late 2010s, Senate staffers were often forced to call national laboratories directly to ask what it would actually cost for the country to fully fund a particular large science activity.

This memo does not suggest that the government should continue to fund old or outdated facilities; merely that there is a significant opportunity for appropriators to understand the actual cost of our legacy research and development ecosystem, initially ramped up during the Cold War. Agencies should be able to provide a straight answer to Congress about what it would cost to operate their inventory of facilities. Likewise, Congress should be able to decide which facilities should be kept open, where placing a facility on life support is acceptable, and which facilities should be shut down. The cost of maintaining facilities should also be transparent to the Office of Management and Budget so examiners can help the President make prudent decisions about the direction of the federal budget.

The National Science and Technology Council’s mandated research and development infrastructure report to Congress is a poor delivery vehicle. As coauthors of the 2024 research infrastructure report, we can attest to the pressure that exists within the White House to provide a positive narrative about the current state of play as well as OMB’s reluctance to suggest additional funding is needed to maintain our inventory of facilities outside the budget process. It would be much easier for agencies who already have a sense of what it costs to maintain their operations to provide that information directly to appropriators (as opposed to a sanitized White House report to an authorizing committee that may or may not have jurisdiction over all the agencies covered in the report)–assuming that there is even an Assistant Director for Research Infrastructure serving in OSTP to complete the America COMPETES mandate. Current government employees suggest that the Trump Administration intends to discontinue the Research and Development Infrastructure Subcommittee.

Agencies may be concerned that providing such cost transparency to Congress could result in greater micromanagement over which facilities receive which investments. Given the relevance of these facilities to their localities (including both economic benefits and environmental and safety concerns) and the role that legacy facilities can play in training new generations of scientists, this is a matter that deserves public debate. In our experience, the wider range of factors considered by appropriations staff is relevant to investment decisions. Further, accountability for macro-level budget decisions should ultimately fall on the decisionmakers who choose whether or not to prioritize investments in both our scientific leadership and the health and safety of the federal workforce and nearby communities. Facilities managers who are forced to make agonizing choices in extremely resource-constrained environments currently bear most of that burden.

Plan of Action

Recommendation 1: Appropriations committees should require from agencies annual reports on the actual cost of completed facilities modernization, operations, and maintenance, including utility distribution systems.

Transparency is the only way that Congress and OMB can get a grip on the actual cost of running our legacy research infrastructure. Agencies should achieve this by annually reporting the actual cost of facilities operations and maintenance to the relevant appropriators. Other costs that should be accounted for include obligations to international facilities (such as ITER) and facilities and collections that are paid for by grants (such as scientific collections that support the bioeconomy). Transparent accounting of facilities costs against what an administration chooses to prioritize in the annual President’s Budget Request may help foster meaningful dialogue between agencies, examiners, and appropriations staff.

The reports from agencies should describe the work done in each building and the impact of any disruption. Using NIST as an example, the Radiation Physics Building (still without the funding to complete its renovation) is crucial to national security and the medical community. If it were to go down (or away), every medical device in the United States that uses radiation would be decertified within 6 months, creating a significant single point of failure that cannot be quickly mitigated. Identifying such functions may also help reveal duplicate efforts across agencies.

The costs of utility systems should be included because of the broad impacts that supporting infrastructure failures can have on facility operations. At NIST’s headquarters campus in Maryland, the entire underground utility distribution system is beyond its designed lifespan and suffering nonstop issues. The Central Utility Plant (CUP), which creates steam and chilled water for the campus, is in a similar state. The CUP’s steam distribution system will be at the complete end of life (per forensic testing of failed pipes and components) in less than a decade and potentially as soon as 2030. If work doesn’t start within the next year (by early 2026), it is likely the system could go down. This would result in a complete loss of heat and temperature control on the campus, which is particularly concerning given the sensitivity of modern experiments and calibrations to changes in heat and humidity. Less than a decade ago, NASA was forced to delay the launch of a satellite after NIST’s steam system was down for a few weeks and calibrations required for the satellite couldn’t be completed.

Given the varying business models for infrastructure around the Federal government, standardization of accounting and costs may be too great a lift–particularly for agencies that own and operate their own facilities (government owned, government operated, or GOGOs) compared with federally funded research and development centers (FFRDCs) operated by companies and universities (government owned, contractor operated, or GOCOs).

These reports should privilege modernization efforts, which according to former federal facilities managers should help account for 80-90 percent of facility revitalization, while also delivering new capabilities that help our national labs maintain (and often re-establish) their world-leading status. The reports would also serve as a potential facilities inventory, allowing appropriators to de-conflict investments as necessary.

It would be far easier for agencies to simply provide an itemized list of each of their facilities, current maintenance backlog, and projected costs for the next fiscal year to both Congress and OMB at the time of annual budget submission to OMB. This should include the total cost of operating facilities, projected maintenance costs, and any costs needed to bring a federal facility up to relevant safety and environmental codes (many are not up to code). In order to foster public trust, these reports should include an assessment of systems that are particularly at risk of failure, the risk to the agency’s operations, and their impact on surrounding communities, federal workers, and organizations that use those laboratories. Fatalities and incidents that affect local communities, particularly in laboratories intended to improve public safety, are not an acceptable cost of doing business. These reports should be made public (except for those details necessary to preserve classified activities).
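As a rough illustration of what such an itemized list could look like, the sketch below models one report line per facility and rolls the lines up into an agency summary. The field names, the `FacilityLine` class, the `agency_summary` helper, and all dollar figures are hypothetical placeholders rather than an existing federal reporting format.

```python
from dataclasses import dataclass, field


@dataclass
class FacilityLine:
    """One row of a hypothetical itemized facilities report (illustrative fields)."""
    name: str
    operating_cost: float        # projected cost to operate next fiscal year ($M)
    maintenance_cost: float      # projected maintenance next fiscal year ($M)
    code_compliance_cost: float  # cost to meet safety/environmental codes ($M)
    maintenance_backlog: float   # current deferred maintenance ($M)
    at_risk_systems: list = field(default_factory=list)  # systems at risk of failure

    def next_year_total(self) -> float:
        """Total projected cost for the next fiscal year."""
        return self.operating_cost + self.maintenance_cost + self.code_compliance_cost


def agency_summary(lines):
    """Roll facility lines up into the totals an appropriator might ask for."""
    return {
        "next_year_total": sum(l.next_year_total() for l in lines),
        "backlog_total": sum(l.maintenance_backlog for l in lines),
        "facilities_with_at_risk_systems": [l.name for l in lines if l.at_risk_systems],
    }


# Made-up example data, for illustration only.
report = [
    FacilityLine("Building A", 10.0, 2.0, 1.5, 40.0, ["steam distribution"]),
    FacilityLine("Building B", 5.0, 1.0, 0.0, 12.0),
]
summary = agency_summary(report)
print(summary)
```

Keeping backlog separate from next-year costs mirrors the distinction the text draws between what it costs to operate a facility and what has already been deferred.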

Recommendation 2: Congress should revisit the idea of a special building fund from the General Services Administration (GSA) from which agencies can draw loans for revitalization.

During the first Trump Administration, Congress considered the establishment of a special building fund from the GSA from which agencies could draw loans at very low interest (covering the staff time of GSA officials managing the program). This could allow agencies to address urgent or emergency needs that arise outside the regular appropriations cycle. This approach has already been validated for certain facilities by the Government Accountability Office, which found that “Access to full, upfront funding for large federal capital projects—whether acquisition, construction, or renovation—could save time and money.” Major international scientific organizations that operate large facilities, including CERN (the European Organization for Nuclear Research), have a similar ability to take loans to pay for repairs, maintenance, or budget shortfalls, which helps them maintain financial stability and reduce the risk of escalating costs as a result of deferred maintenance.

Up-front funding for major projects enabled by access to GSA loans can also reduce expenditures in the long run. In the current budget environment, it is not uncommon for the cost of major investments to double due to inflation and doing the projects piecemeal. In 2010, NIST proposed a renovation of its facilities in Boulder with an expected cost of $76 million. The project, which is still not completed today, is now estimated to cost more than $450 million due to a phased approach unsupported by appropriations. Productivity losses as a result of delayed construction (or a need to wait for appropriations) may have compounding effects on industry that may depend on access to certain capabilities and harm American competitiveness, as described in the previous recommendation.
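The scale of that escalation can be checked with a few lines of arithmetic. This is a minimal sketch using only the two figures quoted above; because the text says "more than $450 million," the results are lower bounds.

```python
# Figures quoted in the text for the NIST Boulder renovation.
proposed_cost_musd = 76      # 2010 proposal, in millions of dollars
current_estimate_musd = 450  # "more than $450 million", so treat as a floor

cost_multiple = current_estimate_musd / proposed_cost_musd
added_cost_musd = current_estimate_musd - proposed_cost_musd

print(f"Cost multiple: at least {cost_multiple:.1f}x")         # at least 5.9x
print(f"Added cost: at least ${added_cost_musd} million")      # at least $374 million
```

In other words, the phased approach has raised the estimate to roughly six times the original proposal, well beyond the doubling described as common.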

Conclusion

As the 2024 RDI Report points out, “Being a science superpower carries the burden of supporting and maintaining the advanced underlying infrastructure that supports the research and development enterprise.” Without a transparent accounting of costs, it is impossible for Congress to make prudent decisions about the future of that enterprise. Requiring agencies to provide complete information to both Congress and OMB at the beginning of each year’s budget process likely provides the best chance of addressing this challenge.

A National Institute for High-Reward Research

The policy discourse about high-risk, high-reward research has been too narrow. When that term is used, people are usually talking about DARPA-style moonshot initiatives with extremely ambitious goals. Given the overly conservative nature of most scientific funding, there’s a fair appetite (and deservedly so) for creating new agencies like ARPA-H, and other governmental and private analogues.

The “moonshot” definition, however, omits other types of high-risk, high-reward research that are just as important for the government to fund—perhaps even more so, because they are harder for anyone else to support or even to recognize in the first place.

Far too many scientific breakthroughs and even Nobel-winning discoveries had trouble getting funded at the outset. The main reason at the time was that the researcher’s idea seemed irrelevant or fanciful. For example, CRISPR was originally thought to be nothing more than a curiosity about bacterial defense mechanisms.

Perhaps ironically, the highest rewards in science often come from the unlikeliest places. Some of our “high reward” funding should therefore be focused on projects, fields, ideas, theories, etc. that are thought to be irrelevant, including ideas that have gotten turned down elsewhere because they are unlikely to “work.” The “risk” here isn’t necessarily technical risk, but the risk of being ignored.

Traditional funders are unlikely to create funding lines specifically for research that they themselves thought was irrelevant. Thus, we need a new agency that specializes in uncovering funding opportunities that were overlooked elsewhere. Judging from the history of scientific breakthroughs, the benefits could be quite substantial.

Challenge and Opportunity

There are far too many cases where brilliant scientists had trouble getting their ideas funded or even faced significant opposition at the time. For just a few examples (there are many others):

- The team that discovered how to manufacture human insulin applied for an NIH grant for an early stage of their work. The rejection notice said that the project looked “extremely complex and time-consuming,” and “appears as an academic exercise.”

- Katalin Karikó’s early work on mRNA was a key contributor to multiple Covid vaccines, and ultimately won the Nobel Prize. But she repeatedly got demoted at the University of Pennsylvania because she couldn’t get NIH funding.

- Carol Greider’s work on telomerase was rejected by an NIH panel on literally the same day that she won the Nobel Prize, on the grounds that she didn’t have enough preliminary data about telomerase.

- Francisco Mojica (who identified CRISPR while studying archaebacteria in the 1990s) has said, “When we didn’t have any idea about the role these systems played, we applied for financial support from the Spanish government for our research. The government received complaints about the application and, subsequently, I was unable to get any financial support for many years.”

- Peyton Rous’s early 20th century studies on transplanting tumors between purebred chickens were ridiculed at the time, but his work won the Nobel Prize over 50 years later, and provided the basis for other breakthroughs involving reverse transcription, retroviruses, oncogenes, and more.

One could fill an entire book with nothing but these kinds of stories.

Why do so many brilliant scientists struggle to get funding and support for their groundbreaking ideas? In many cases, it’s not because of any reason that a typical “high risk, high reward” research program would address. Instead, it’s because their research can be seen as irrelevant, too far removed from any practical application, or too contrary to whatever is currently trendy.

To make matters worse, the temptation for government funders is to opt for large-scale initiatives with a lofty goal like “curing cancer” or some goal that is equally ambitious but also equally unlikely to be accomplished by a top-down mandate. For example, the U.S. government announced a National Plan to Address Alzheimer’s Disease in 2012, and the original webpage promised to “prevent and effectively treat Alzheimer’s by 2025.” Billions have been spent over the past decade on this objective, but U.S. scientists are nowhere near preventing or treating Alzheimer’s yet. (Around October 2024, the webpage was updated and now aims to “address Alzheimer’s and related dementias through 2035.”)

The challenge is whether quirky, creative, seemingly irrelevant, contrarian science—which is where some of the most significant scientific breakthroughs originated—can survive in a world that is increasingly managed by large bureaucracies whose procedures don’t really have a place for that type of science, and by politicians eager to proclaim that they have launched an ambitious goal-driven initiative.

The answer that I propose: Create an agency whose sole raison d’être is to fund scientific research that other agencies won’t fund, not for reasons of basic competence, of course, but because the research wasn’t fashionable or relevant.

The benefits of such an approach wouldn’t be seen immediately. The whole point is to allocate money to a broad portfolio of scientific projects, some of which would fail miserably but some of which would have the potential to create the kind of breakthroughs that, by definition, are unpredictable in advance. This plan would therefore require a modicum of patience on the part of policymakers. But over the longer term, it would likely lead to a number of unforeseeable breakthroughs that would make the rest of the program worth it.

Plan of Action

The federal government needs to establish a new National Institute for High-Reward Research (NIHRR) as a stand-alone agency, not tied to the National Institutes of Health or the National Science Foundation. The NIHRR would be empowered to fund the potentially high-reward research that goes overlooked elsewhere. More specifically, the aim would be to cast a wide net for:

- Researchers (akin to Katalin Karikó or Francisco Mojica) who are perfectly well-qualified but have trouble getting funding elsewhere;

- Research projects or larger initiatives that are seen as contrary to whatever is trendy in a given field;

- Research projects or larger initiatives that are seen as irrelevant (e.g., bacterial or animal research that is seen as unrelated to human health).

NIHRR should be funded at, say, $100 million per year as a starting point ($1 billion would be better). This is an admittedly ambitious proposal. It would mean increasing overall scientific and R&D expenditure by that amount, or else reassigning existing funding (which would be politically unpopular). But it is a worthy objective, and indeed, should be seen as a starting point.

Significant stakeholders with an interest in a new NIHRR would obviously include universities and scholars who currently struggle for scientific funding. In a way, that stacks the deck against the idea, because the most politically powerful institutions and individuals might oppose anything that tampers with the status quo of how research funding is allocated. Nonetheless, there may be a number of high-status individuals (e.g., current Nobel winners) who would be willing to support this idea as something that would have aided their earlier work.

A new fund like this would also provide fertile ground for metascience experiments and other types of studies. Consider the striking fact that as yet, there is virtually no rigorous empirical evidence as to the relative strengths and weaknesses of top-down, strategically-driven scientific funding versus funding that is more open to seemingly irrelevant, curiosity-driven research. With a new program for the latter, we could start to derive comparisons between the results of that funding as compared to equally situated researchers funded through the regular pathways.

Moreover, a common metascience proposal in recent years is to use a limited lottery to distribute funding, on the grounds that some funding is fairly random anyway and we might as well make it official. One possibility would be for part of the new program to be disbursed by lottery among researchers who met a minimum bar of quality and respectability, and who had received a high enough score on “scientific novelty.” One could imagine developing an algorithm to make an initial assessment as well. Then we could compare the results of lottery-based funding versus decisions made by program officers versus algorithmic recommendations.
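A limited lottery of this kind is simple to express in code. The sketch below is one possible reading of the idea, not a specification: the `limited_lottery` function, the `quality` and `novelty` score fields, the thresholds, and the sample proposals are all hypothetical.

```python
import random


def limited_lottery(proposals, quality_bar, novelty_bar, n_awards, seed=None):
    """Draw winners uniformly at random from proposals that clear both bars.

    Each proposal is a dict with hypothetical 'quality' and 'novelty' scores.
    """
    eligible = [
        p for p in proposals
        if p["quality"] >= quality_bar and p["novelty"] >= novelty_bar
    ]
    rng = random.Random(seed)  # a fixed seed makes the draw reproducible and auditable
    # Never try to draw more awards than there are eligible proposals.
    return rng.sample(eligible, min(n_awards, len(eligible)))


# Made-up proposals scored on a 1-5 scale, for illustration only.
proposals = [
    {"id": "P1", "quality": 4, "novelty": 5},
    {"id": "P2", "quality": 2, "novelty": 5},  # fails the quality bar
    {"id": "P3", "quality": 5, "novelty": 4},
    {"id": "P4", "quality": 3, "novelty": 2},  # fails the novelty bar
    {"id": "P5", "quality": 3, "novelty": 4},
]
winners = limited_lottery(proposals, quality_bar=3, novelty_bar=4, n_awards=2, seed=0)
print([w["id"] for w in winners])
```

Recording the seed and the eligibility thresholds alongside the results would let evaluators later compare lottery-funded projects against those chosen by program officers or by an algorithm.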

Conclusion

A new line of funding like the National Institute for High-Reward Research (NIHRR) would drive innovation and exploration by funding the potentially high-reward research that goes overlooked elsewhere. This would elevate worthy projects with unknown outcomes so that unfashionable or unpopular ideas can be explored. Funding these projects would have the added benefit of offering many opportunities to build in metascience studies from the outset, which is easier than retrofitting projects later.

This memo was produced as part of the Federation of American Scientists and Good Science Project sprint. Find more ideas at Good Science Project x FAS.

Absolutely, but that is also true for the current top-down approach of announcing lofty initiatives to “cure Alzheimer’s” and the like. Beyond that, the whole point of a true “high-risk, high-reward” research program should be to fund a large number of ideas that don’t pan out. If most research projects succeed, then it wasn’t a “high-risk” program after all.

Again, that would be a sign of potential success. Many of history’s greatest breakthroughs were mocked for those exact reasons at the time. And yes, some of the research will indeed be irrelevant or silly. That’s part of the bargain here. You can’t optimize both Type I and Type II errors at the same time (that is, false positives and false negatives). If we want to open the door to more research that would have been previously rejected on overly stringent grounds, then we also open the door to research that would have been correctly rejected on those grounds. That’s the price of being open to unpredictable breakthroughs.

How to evaluate success is a sticking point here, as it is for most of science. The traditional metrics (citations, patents, etc.) would likely be misleading, at least in the short-term. Indeed, as discussed above, there are cases where enormous breakthroughs took a few decades to be fully appreciated.

One simple metric in the shorter term would be something like this: “How often do researchers send in progress reports saying that they have been tackling a difficult question, and that they haven’t yet found the answer?” Instead of constantly promising and delivering success (which is often achieved by studying marginal questions and/or exaggerating results), scientists should be incentivized to honestly report on their failures and struggles.

Measuring Research Bureaucracy to Boost Scientific Efficiency and Innovation

Bureaucracy has become a critical barrier to scientific progress in America. An excess of management and administration efforts pulls researchers away from their core scientific work and consumes resources that could advance discovery. While we lack systematic measures of this inefficiency, the available data is troubling: researchers spend nearly half their time on administrative tasks, and nearly one in five dollars of university research budgets goes to regulatory compliance.

The proposed solution is a three-step effort to measure and roll back the bureaucratic burden. First, we need to create a detailed baseline by measuring administrative personnel, management layers, and associated time/costs across government funding agencies and universities receiving grant funding. Second, we need to develop and apply objective criteria to identify specific bureaucratic inefficiencies and potential improvements, based on direct feedback from researchers and administrators nationwide. Third, we need to quantify the benefits of reducing bureaucratic overhead and implement shared strategies to streamline processes, simplify regulations, and ultimately enhance research productivity.

Through this ambitious yet practical initiative, the administration could free up over a million research days annually and redirect billions of dollars toward scientific pursuits that strengthen America’s innovation capacity.

Challenge and Opportunity

Federally funded university scientists spend much of their time navigating procedures and management layers. Scientists, administrators, and policymakers widely agree that bureaucratic burden hampers research productivity and innovation, yet as the National Academy of Sciences noted in 2016 there is “little rigorous analysis or supporting data precisely quantifying the total burden and cost to investigators and research institutions of complying with federal regulations specific to the conduct of federally funded research.” This continues to be the case, despite evidence suggesting that federally funded faculty spend nearly half of their research time on administrative tasks, and nearly one in every five dollars spent on university research goes to regulatory compliance.

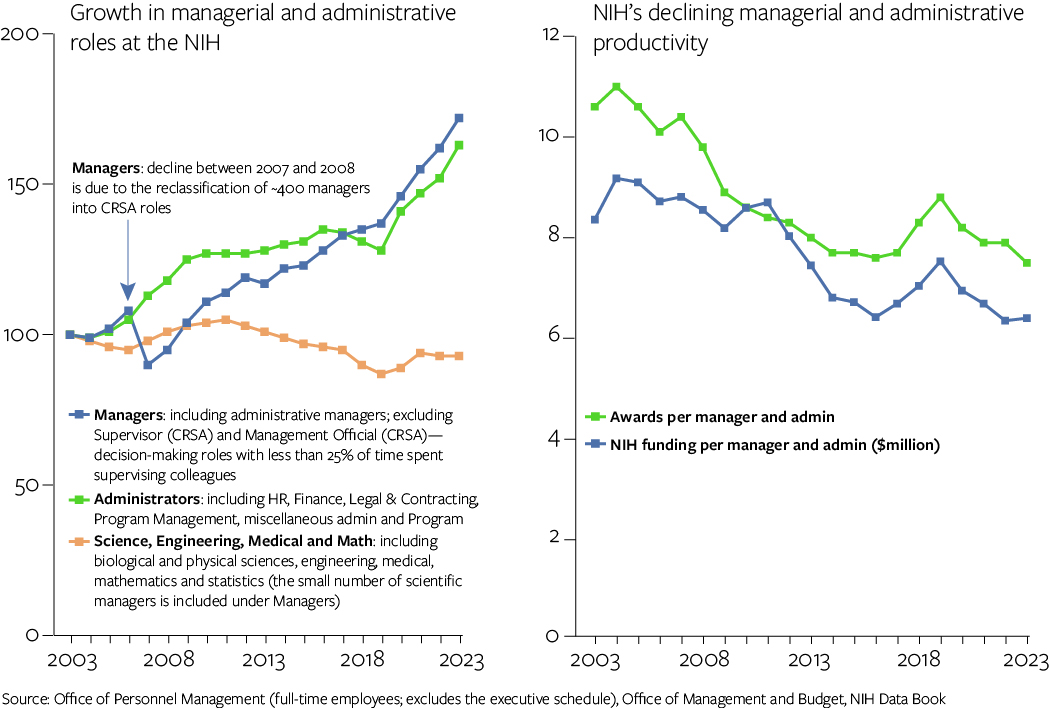

Judging by the steady rise in research administration requirements that universities face, the problem is getting worse. Federal rules and policies affecting research have multiplied roughly ninefold in two decades, from 29 in 2004 to 255 in 2024, with half of the increase coming in just the last five years. It is no coincidence that bureaucratic overhead is also expanding in funding agencies. At the National Institutes of Health (NIH), for instance, the growth of managers and administrators has significantly outpaced scientific roles and research funding activity (see figure).

The question is: just how much of universities’ $100 billion-plus annual research spend (more than half of it funded by the federal government) is hobbled by excess management and administration? To answer this, we must understand:

- Which bureaucratic activities are wasteful, or have a poor return on time and effort?

- How much time do bureaucratic activities take up, and what is the cost overall?

- Which activities are not required by the law or regulations, but are imposed by overly risk-averse legal counsel, compliance, and other administrators at agencies or universities?

- Which activities, rules, and processes should be eliminated or reimagined, and how?

- What portion of the overhead budget isn’t spent on research administration or management?

Plan of Action

The current administration aims to make government-funded research more efficient and productive. Recently, the director of the Office of Science and Technology Policy (OSTP) vowed to “reduce administrative burdens on federally funded researchers, not bog them down in bureaucratic box checking.” To that end, I propose a systematic effort that measures bureaucratic excess, quantifies the payoff from eliminating specific aspects of this burden, and improves accountability for results.

The president should issue an Executive Order directing the Office of Management and Budget (OMB) and Office of Science and Technology Policy (OSTP) to develop a Bureaucratic Burden report within 180 days of signing. The report should detail specific steps agencies will take to reduce administrative requirements. Agencies must participate in this effort at the leadership level, launching a government-wide effort to reduce bureaucracy. Furthermore, all research agencies should work together to develop a standardized method for calculating burden within both agencies and funded institutes, create a common set of policies that will streamline research processes, and establish clear limits on overhead spending to ensure full transparency in research budgets.

OMB and OSTP should create a cross-agency Research Efficiency Task Force within the National Science and Technology Council to conduct this work. This team would develop a shared approach and lead the data gathering, analysis, and synthesis using consistent measures across agencies. The Task Force’s first step would be to establish a bureaucratic baseline, including a detailed view of the managerial and administrative footprint within federal research agencies and universities that receive research funding, broken down into core components. The measurement approach would certainly vary between government agencies and funding recipients.

Key agencies, including the NIH, National Science Foundation, NASA, the Department of Defense, and the Department of Energy, should:

- Count personnel at each level—managers, administrators, and intramural scientists—along with their compensation;

- Document management layers from executives to frontline staff and supervisor ratios;

- Calculate time spent on administrative work by all staff, including researchers, to estimate total compliance costs and overhead.

Task Force agencies should also hire one or more independent contractors to analyze the administrative burden at a representative sample of universities. Through surveys and interviews, the contractors should measure staffing, management structures, researcher time allocation, and overhead costs to size up the bureaucratic footprint across the scientific establishment.

Next, the Task Force should launch an online consultation with researchers and administrators nationwide. Participants could identify wasteful administrative tasks, quantify their time impact, and share examples of efficient practices. In parallel, agency leaders should submit to OMB and OSTP their formal assessment of which bureaucratic requirements can be eliminated, along with projected benefits.

Finally, the Task Force should produce a comprehensive estimate of the total cost of unnecessary bureaucracy and propose specific reforms. Its recommendations will identify potential savings from streamlining agency practices, statutory requirements, and oversight mechanisms. The Task Force should also examine how much overhead funding supports non-research activities, propose ways to redirect these resources to scientific research, and establish metrics and a public dashboard to track progress.

Some of this information may have already been gathered as part of ongoing reorganization efforts, which would expedite the assessment.

Within six months, the group should issue a public report that would include:

- A detailed estimate of the unnecessary costs of research bureaucracy.

- The cost gains from rolling back or adjusting specific burdens.

- A synthesis of harder-to-quantify benefits from these moves, such as faster approval cycles, better decision-making, and less conservatism in research proposals.

- A catalog of innovative research management practices, with a four-year timeline for studying and scaling them.

- A proposed approach for regular tracking and reporting on bureaucratic burden in science.

- A prioritized list of changes that each agency should make, including a clear timeline for making those changes and the estimated cost savings.

These activities would serve as the start of a series of broad reforms by the White House and research funding agencies to improve federal funding policies and practices.

Conclusion

This initiative will build an irrefutable case for reform, provide a roadmap for meaningful improvement, and create real accountability for results. Giving researchers and administrators a voice in reimagining the system they navigate daily will generate better insights and build commitment for change. The potential upside is enormous: millions of research days could be freed from paperwork for lab work, strengthening America’s capacity to innovate and lead the world. With committed leadership, this administration could transform how the US funds and conducts research, delivering maximum scientific return on every federal dollar invested.

This memo was produced as part of the Federation of American Scientists and Good Science Project sprint. Find more ideas at Good Science Project x FAS.

Maintaining American Leadership through Early-Stage Research in Methane Removal

Methane is a potent gas with increasingly alarming effects on the climate, human health, agriculture, and the economy. Rapidly rising concentrations of atmospheric methane have contributed about a third of the global warming we’re experiencing today. Methane emissions also contribute to the formation of ground-level ozone, which causes an estimated 1 million premature deaths around the world annually and poses a significant threat to staple crops like wheat, soybeans, and rice. Overall, methane emissions cost the United States billions of dollars each year.

Most methane mitigation efforts to date have rightly focused on reducing methane emissions. However, the worsening impacts of methane create an increasingly urgent need to also explore options for methane removal. Methane removal is a new field exploring how methane, once in the atmosphere, could be broken down faster than by existing natural systems alone, helping to lower peak temperatures and counteract some of the impact of increasing natural methane emissions. This field is currently in the “earliest stages of knowledge discovery,” meaning that there is a tremendous opportunity for the United States to establish its position as the unrivaled world leader in an emerging critical technology, a top goal of the second Trump Administration. Global interest in methane means that there is a largely untapped market for innovative methane-removal solutions. Investment in this field will also generate spillover knowledge discovery for associated fields, including atmospheric, materials, and biological sciences.

Congress and the Administration must move quickly to capitalize on this opportunity. Following the recommendations of the October 2024 report from the National Academies of Sciences, Engineering, and Medicine (NASEM), the federal government should incorporate early-stage methane removal research into its energy and earth systems research programs. This can be achieved through a relatively small investment of $50–80 million annually over an initial 3–5 year phase. This first phase would focus on building foundational knowledge that lays the groundwork for potential future movement into more targeted, tangible applications.

Challenge and Opportunity

Methane represents an important stability, security, and scientific frontier for the United States. We know that this gas is increasing the risk of severe weather, worsening air quality, harming American health, and reducing crop yields. Yet too much about methane remains poorly understood, including the cause(s) of its recent accelerating rise. A deeper understanding of methane could help scientists better address these impacts – including potentially through methane removal.

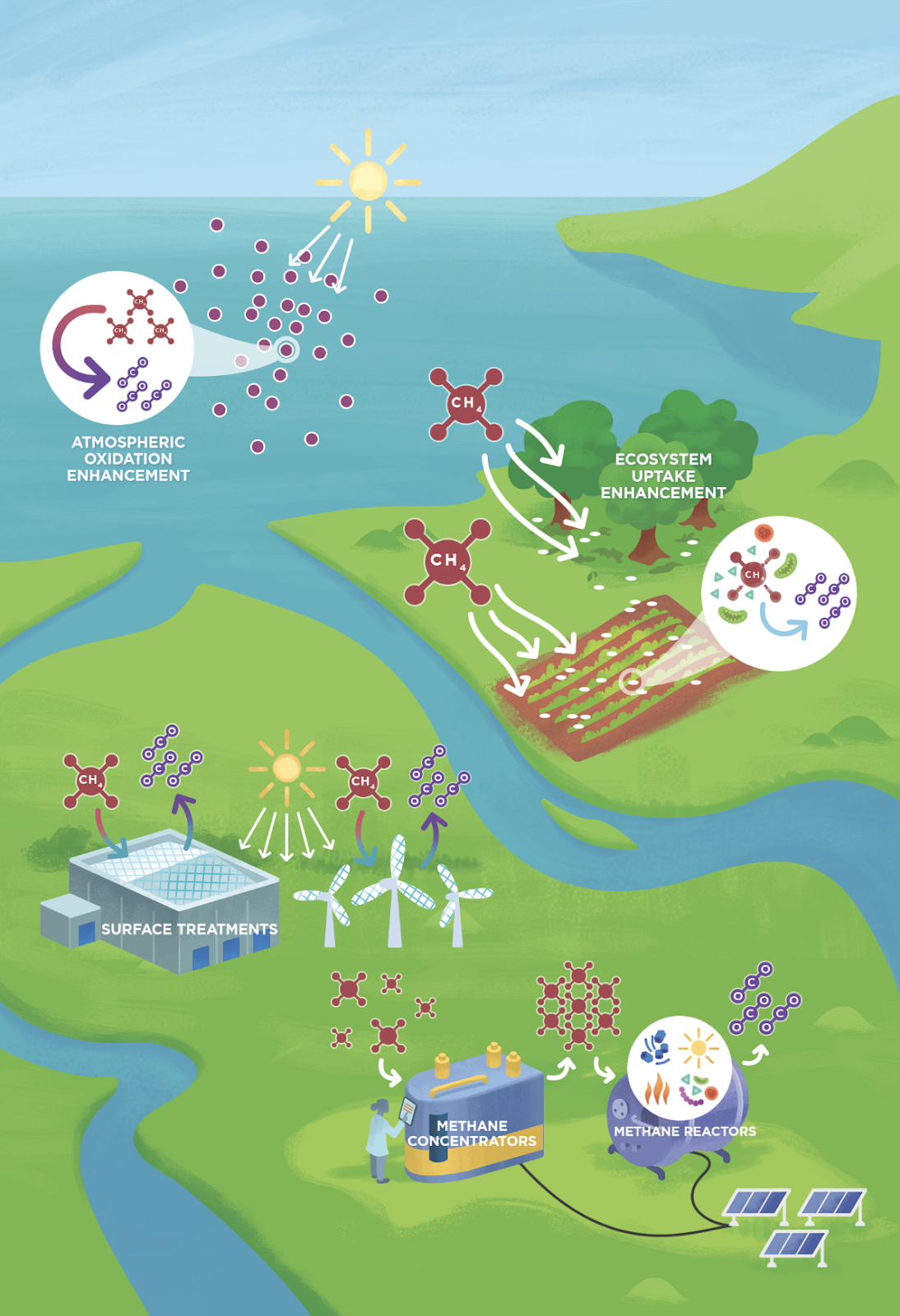

Methane removal is an early-stage research field primed for new American-led breakthroughs and discoveries. To date, four potential methane-removal technologies and one enabling technology have been identified. They are:

- Ecosystem uptake enhancement: Increasing microbes’ consumption of methane in soils and trees or getting plants to do so.

- Surface treatments: Applying special coatings that “eat” methane on panels, rooftops, or other surfaces.

- Atmospheric oxidation enhancement: Increasing atmospheric reactions conducive to methane breakdown.

- Methane reactors: Breaking down methane in closed reactors using catalysts, reactive gases, or microbes.

- Methane concentrators: A potentially enabling technology that would separate or enrich methane from other atmospheric components.

Figure 1. Atmospheric Methane Removal Technologies. (Source: National Academies Research Agenda)

Many of these proposed technologies have analogous traits to existing carbon dioxide removal methods and other interventions. However, much more research is needed to determine the net climate benefit, cost plausibility and social acceptability of all proposed methane removal approaches. The United States has positioned itself to lead on assessing and developing these technologies, such as through NASEM’s 2024 report and language included in the final FY24 appropriations package directing the Department of Energy to produce its own assessment of the field. The United States also has shown leadership with its civil society funding some of the earliest targeted research on methane removal.

But we risk ceding our leadership position – and a valuable opportunity to reap the benefits of being a first-mover on an emergent technology – without continued investment and momentum. Indeed, investing in methane removal research could help to improve our understanding of atmospheric chemistry and thus unlock novel discoveries in air quality improvement and new breakthrough materials for pollution management. Investing in methane removal, in short, would simultaneously improve environmental quality, unlock opportunities for entrepreneurship, and maintain America’s leadership in basic science and innovation. New research would also help the United States avoid possible technological surprises by competitors and other foreign governments, who otherwise could outpace the United States in their understanding of new systems and approaches and leave the country unprepared to assess and respond to deployment of methane removal elsewhere.

Plan of Action

The federal government should launch a five-year Methane Removal Initiative pursuant to the recommendations of the National Academies. A new five-year research initiative will allow the United States to evaluate and potentially develop important new tools and technologies to mitigate security risks arising from the dangerous accumulation of methane in the atmosphere while also helping to maintain U.S. global leadership in innovation. A well-coordinated, broad, cross-cutting federal government effort that fosters collaborations among agencies, research universities, national laboratories, industry, and philanthropy will enable the United States to lead science and technology improvements to meet these goals. To develop any new technologies on timescales most relevant for managing earth system risk, this foundational research should begin this year at a level of $50–80 million per year. Research should ideally last five years and inform a more applied second-phase assessment recommended by the National Academies.

Consistent with the recommendations from the National Academies’ Atmospheric Methane Removal Research Agenda and early philanthropic seed funding for methane removal research, the Methane Removal Initiative would:

- Establish a national methane removal research and development program involving key science agencies, primarily the National Science Foundation, Department of Energy, and National Oceanic and Atmospheric Administration, with contributions from other agencies including the US Department of Agriculture, National Institute of Standards and Technology, National Aeronautics and Space Administration, Department of Interior, and Environmental Protection Agency.

- Focus early investments in foundational research to advance U.S. interests and close knowledge gaps, specifically in the following areas:

  - The “sinks” and sources of methane, including both ground-level and atmospheric sinks as well as human-driven and natural sources (40% of research budget);

  - Methane removal technologies, as described above (30% of research budget); and

  - Potential applications of methane removal, such as demonstration and deployment systems and their interaction with other climate response strategies (30% of research budget).
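As a rough illustration of what these shares mean in dollar terms, the sketch below applies the 40/30/30 split to the proposed $50–80 million annual range. The area labels and function name are hypothetical, chosen only for this example; the percentages and budget range come from the text above.

```python
# Illustrative only: splits the proposed $50-80 million annual budget across
# the three research areas using the percentage shares stated in the memo.
# The dictionary keys and function name are hypothetical labels.
ANNUAL_BUDGET_RANGE_MUSD = (50, 80)  # low and high ends, in $ millions

RESEARCH_SHARES_PERCENT = {
    "sinks_and_sources": 40,
    "removal_technologies": 30,
    "potential_applications": 30,
}


def allocate(budget_musd, shares_percent):
    """Return the amount (in $ millions) per research area for one budget level."""
    if sum(shares_percent.values()) != 100:
        raise ValueError("shares must sum to 100 percent")
    return {area: budget_musd * pct / 100 for area, pct in shares_percent.items()}


low = allocate(ANNUAL_BUDGET_RANGE_MUSD[0], RESEARCH_SHARES_PERCENT)
high = allocate(ANNUAL_BUDGET_RANGE_MUSD[1], RESEARCH_SHARES_PERCENT)

for area in RESEARCH_SHARES_PERCENT:
    print(f"{area}: ${low[area]:.0f}-{high[area]:.0f} million per year")
```

Under these shares, research on sinks and sources would receive roughly $20–32 million per year, and each of the other two areas roughly $15–24 million per year.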

The goal of this research program is ultimately to assess the need for and viability of new methods that could break down methane already in the atmosphere faster than natural processes do alone. This program would be funded through several appropriations subcommittees in Congress, most notably Energy & Water Development and Commerce, Justice, Science and Related Agencies. Funding recommendations are also relevant to the Agriculture, Rural Development, Food and Drug Administration subcommittee and the Interior and Environment subcommittee. As scrutiny grows on the federal government’s fiscal balance, it should be noted that the scale of proposed research funding for methane removal is relatively modest and that no funding has been allocated to this potentially critical area of research to date. Forgoing these investments could result in neglecting this area of innovation at a critical time when there is an opportunity for the United States to demonstrate leadership.

Conclusion

Emissions reductions remain the most cost-effective means of arresting the rise in atmospheric methane, and improvements in methane detection and leak mitigation will also help America increase its production efficiency by reducing losses, lowering costs, and improving global competitiveness. The National Academies confirms that methane removal will not replace mitigation on timescales relevant to limiting peak warming this century, but the world will still likely face “a substantial methane emissions gap between the trajectory of increasing methane emissions (including from anthropogenically amplified natural emissions) and technically available mitigation measures.” This creates a substantial security risk for the United States in the coming decades, especially given large uncertainties around the exact magnitude of heat-trapping emissions from natural systems. A modest annual investment of $50–80 million can pay much larger dividends in future years through new innovative advanced materials, improved atmospheric models, new pollution control methods, and by potentially enhancing security against these natural systems risks. The methane removal field is currently at a bottleneck: ideas for innovative research abound, but they remain resource-limited. The government has the opportunity to eliminate these bottlenecks to unleash prosperity and innovation as it has done for many other fields in the past. The intensifying rise of atmospheric methane presents the United States with a new grand challenge that has a clear path for action.

Methane is a powerful greenhouse gas that plays an outsized role in near-term warming. Natural systems are an important source of this gas, and evidence indicates that these sources may be amplified in a warming world and emit even more. Even if we succeed in reducing anthropogenic emissions of methane, we “cannot ignore the possibility of accelerated methane release from natural systems, such as widespread permafrost thaw or release of methane hydrates from coastal systems in the Arctic.” Methane removal could potentially serve as a partial response to such methane-emitting natural feedback loops and tipping elements to reduce how much these systems further accelerate warming.

No. Aggressive emissions reductions—for all greenhouse gases, including methane—are the highest priority. Methane removal cannot be used in place of methane emissions reduction. It’s incredibly urgent and important that methane emissions be reduced to the greatest extent possible, and that further innovation to develop additional methane abatement approaches is accelerated. These have the important added benefit of improving American energy security and preventing waste.

More research is needed to determine the viability and safety of large-scale methane removal. The current state of knowledge indicates several approaches may have the potential to remove >10 Mt of methane per year (~0.8 Gt CO₂ equivalent over a 20-year period), but the research is too early to verify feasibility, safety, and effectiveness. Methane has certain characteristics that suggest that large-scale and cost-effective removal could be possible, including favorable energy dynamics in turning it into CO₂ and the lack of a need for storage.
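The CO₂-equivalent figure above can be reproduced with a simple conversion. The sketch below assumes a 20-year global warming potential (GWP-20) of about 80 for methane, which is the factor implied by the figures quoted here (0.8 Gt CO₂ equivalent for 10 Mt of methane); the memo does not state the factor it used, and the function name is hypothetical.

```python
# Assumption: a GWP-20 of about 80 for methane, the value implied by the
# memo's own numbers (0.8 Gt CO2e / 10 Mt CH4). The memo does not state
# the exact factor it used.
GWP20_METHANE_ASSUMED = 80


def methane_to_co2e_gt(methane_mt, gwp=GWP20_METHANE_ASSUMED):
    """Convert megatonnes of methane to gigatonnes of CO2 equivalent."""
    co2e_mt = methane_mt * gwp  # CO2-equivalent mass in megatonnes
    return co2e_mt / 1000       # 1 Gt = 1000 Mt


print(methane_to_co2e_gt(10))
```

With the assumed factor, removing 10 Mt of methane corresponds to about 0.8 Gt CO₂ equivalent on a 20-year basis. A different GWP value would scale the result proportionally.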

The volume of methane removal “needed” will depend on our overall emissions trajectory, atmospheric methane levels as influenced by anthropogenic emissions and anthropogenically amplified natural systems feedbacks, and target global temperatures. Some evidence indicates we may have already passed warming thresholds that trigger natural system feedbacks with increasing methane emissions. Depending on the ultimate extent of warming, permafrost methane release and enhanced methane emissions from wetland systems are estimated to potentially lead to ~40-200 Mt/yr of additional methane emissions and a further rise in global average temperatures (Zhang 2023, Kleinen 2021, Walter 2018, Turetsky 2020). Methane removal may prove to be the primary strategy to address these emissions.

Methane is a potent greenhouse gas, 43 times stronger than carbon dioxide molecule for molecule, with an atmospheric lifetime of roughly a decade (IPCC, calculation from Table 7.15). Methane removal permanently removes methane from the atmosphere by oxidizing or breaking down methane into carbon dioxide, water, and other byproducts, or if biological processes are used, into new biomass. These products and byproducts will remain cycling through their respective systems, but without the more potent warming impact of methane. The carbon dioxide that remains following oxidation will still cause warming, but this is no different than what happens to the carbon in methane through natural removal processes. Methane removal approaches accelerate this process of turning the more potent greenhouse gas methane into the less potent greenhouse gas carbon dioxide, permanently removing the methane to reduce warming.

The cost of methane removal will depend on the specific approach and on further innovation; specific costs are not yet known at this stage. Some approaches have easier paths to cost plausibility, while others will require significant increases in catalytic, thermal, or air processing efficiency to achieve cost plausibility. More research is needed to determine credible estimates, and innovation has the potential to significantly lower costs.

Greenhouse gases are not interchangeable. Methane removal cannot be used in place of carbon dioxide removal because it cannot address historical carbon dioxide emissions, manage long-term warming or counteract other effects (e.g., ocean acidification) that are results of humanity’s carbon dioxide emissions. Some methane removal approaches have characteristics that suggest that they may be able to get to scale quickly once developed and validated, should deployment be deemed appropriate, which could augment our near-term warming mitigation capacity on top of what carbon dioxide removal and emissions reductions offer.

Methane has a short atmospheric lifetime due to substantial methane sinks. The primary methane sink is atmospheric oxidation, from hydroxyl radicals (~90% of the total sink) and chlorine radicals (0-5% of the total sink). The rest is consumed by methane-oxidizing bacteria and archaea in soils (~5%). While understood at a high level, there is substantial uncertainty in the strength of the sinks and their dynamics.

Up until about 2000, the growth of methane was clearly driven by growing human-caused emissions from fossil fuels, agriculture, and waste. But starting in the mid-2000s, after a brief pause where global emissions were balanced by sinks, the level of methane in the atmosphere started growing again. At the same time, atmospheric measurements detected an isotopic signal that the new growth in methane may be from recent biological—as opposed to older fossil—origin. Multiple hypotheses exist for what the drivers might be, though the answer is almost certainly some combination of these. Hypotheses include changes in global food systems, growth of wetlands emissions as a result of the changing climate, a reduction in the rate of methane breakdown and/or the growth of fracking. Learn more in Spark’s blog post.

Methane has a significant warming effect for the 9-12 years that it remains in the atmosphere. Given how potent methane is and how much is currently being emitted, methane is accumulating in the atmosphere despite its short atmospheric lifetime, and the overall warming impact of current and recent methane emissions is 0.5°C. Methane removal approaches may someday be able to bring methane-driven warming down faster than natural sinks alone. Ongoing substantial methane sources, such as natural methane emissions from permafrost and wetlands, pose a significant risk of further accumulation. Exploring options to remove atmospheric methane is one strategy to better manage this risk.

Research into all methane removal approaches is just beginning, and there is no known timeline for their development or guarantee that they will prove to be viable and safe.

Some methane removal and carbon dioxide removal approaches overlap. Some soil amendments may have an impact on both methane and carbon dioxide removal, and are currently being researched. Catalytic methane-oxidizing processes could be added to direct air capture (DAC) systems for carbon dioxide, but more innovation will be needed to make these systems sufficiently efficient to be feasible. If all planned DAC capacity also removed methane, it would make a meaningful difference, but still fall very short of the scale of methane removal that could be needed to address rising natural methane emissions, and additional approaches should be researched in parallel.

Methane emissions destruction refers to the oxidation of methane from higher-methane-concentration air streams from sources, for example air in dairy barns. There is technical overlap between some methane emissions destruction and methane removal approaches, but each area has its own set of constraints that will also lead to non-overlapping approaches, given different methane concentrations to treat, and different form-factor constraints.

Ambitious, Achievable, and Sustainable: A Blueprint for Reclaiming American Research Leadership

Summary

The next Administration should accelerate federal basic and applied research investments over a period of five years to return funding to its historical average as a share of GDP. While this ambitious yet achievable strategy should encompass the entire research portfolio, it should particularly seek to reverse the long-term erosion of collective investments in physical and computer science, mathematics, and engineering to lay the foundation for economic competitiveness deep into the 21st century. This proposal outlines a strategy and series of steps for the federal government to take to reinvigorate U.S. competitiveness by restoring research and development investments.

Under Pressure: Long Duration Undersea Research

“The Office of Naval Research is conducting groundbreaking research into the dangers of working for prolonged periods of time in extreme high and low pressure environments.”

Why? In part, it reflects “the increased operational focus being placed on undersea clandestine operations,” said Rear Adm. Mathias W. Winter in newly published answers to questions for the record from a February 2016 hearing.

“The missions include deep dives to work on the ocean floor, clandestine transits in cold, dark waters, and long durations in the confines of the submarine. The Undersea Medicine Program comprises the science and technology efforts to overcome human shortfalls in operating in this extreme environment,” he told the House Armed Services Committee.

See DoD FY2017 Science and Technology Programs: Defense Innovation to Create the Future Military Force, House Armed Services Committee hearing, February 24, 2016.