Addressing Online Harassment and Abuse through a Collaborative Digital Hub

Efforts to monitor and combat online harassment have fallen short due to a lack of cooperation and information-sharing across stakeholders, disproportionately hurting women, people of color, and LGBTQ+ individuals. We propose that the White House Task Force to Address Online Harassment and Abuse convene government actors, civil society organizations, and industry representatives to create an Anti-Online Harassment (AOH) Hub to improve and standardize responses to online harassment and to provide evidence-based recommendations to the Task Force. This Hub will include a data-collection mechanism for research and analysis while also connecting survivors with social media companies, law enforcement, legal support, and other necessary resources. This approach will open pathways for survivors to better access the support and recourse they need and also create standardized record-keeping mechanisms that can provide evidence for and enable long-term policy change.

Challenge and Opportunity

The online world is rife with hate and harassment, disproportionately hurting women, people of color, and LGBTQ+ individuals. A research study by Pew indicated that 47% of women were harassed online for their gender compared to 18% of men, while 54% of Black or Hispanic internet users faced race-based harassment online compared to 17% of White users. Seven in 10 LGBTQ+ adults have experienced online harassment, and 51% faced even more severe forms of abuse. Meanwhile, existing measures to combat online harassment continue to fall short, leaving victims with limited means for recourse or protection.

Numerous factors contribute to these shortcomings. Social media companies are opaque, and when survivors turn to platforms for assistance, they are often met with automated responses and few means to appeal or even contact a human representative who could provide more personalized assistance. Many survivors of harassment face threats that escalate from online to real life, leading them to seek help from law enforcement. While most states have laws against cyberbullying, law enforcement agencies are often ill-trained and ill-equipped to navigate the complex web of laws involved and the available processes through which they could provide assistance. And while there are nongovernmental organizations and companies that develop tools and provide services for survivors of online harassment, the onus continues to lie primarily on the survivor to reach out and navigate what is often both an overwhelming and a traumatic landscape of needs. Although resources exist, finding the correct organizations and reaching out can be difficult and time-consuming. Most often, the burden remains on the victims to manage and monitor their own online presence and safety.

On a larger, systemic scale, the lack of available data to quantitatively analyze the scope and extent of online harassment hinders the ability of researchers and interested stakeholders to develop effective, long-term solutions and to hold social media companies accountable. Lack of large-scale, cross-sector and cross-platform data further hinders efforts to map out the exact scale of the issue, as well as provide evidence-based arguments for changes in policy. And because the landscape of online abuse is ever changing, the lexicons and phrases used in attacks change as well, so information about them must be kept up to date.

Forming the AOH Hub will improve the collection and monitoring of online harassment while preserving victims’ privacy; this data can also be used to develop future interventions and regulations. In addition, the Hub will streamline the process of receiving aid for those targeted by online harassment.

Plan of Action

Aim of proposal

The White House Task Force to Address Online Harassment and Abuse should form an Anti-Online Harassment Hub to monitor and combat online harassment. This Hub will center around a database that collects and indexes incidents of online harassment and abuse from technology companies’ self-reporting, through connections civil society groups have with survivors of harassment, and from reporting conducted by the general public and by targets of online abuse. Civil society actors that have conducted past work in providing resources and monitoring harassment incidents, ranging from academics to researchers to nonprofits, will run the AOH Hub in consortium as a steering committee. There are two aims for the creation of this hub.

First, the AOH Hub can promote collaboration within and across sectors, forging bonds among government, the technology sector, civil society, and the general public. This collaboration enables the centralization of connections and resources and brings together diverse resources and expertise to address a multifaceted problem.

Second, the Hub will include a data collection mechanism that can be used to create a record for policy and other structural reform. At present, the lack of data limits the ability of external actors to evaluate whether social media companies have worked adequately to combat harmful behavior on their platforms. An external data collection mechanism enables further accountability and can build the record for Congress and the Federal Trade Commission to take action where social media companies fall short. The allocated federal funding will be used to (1) facilitate the initial convening of experts across government departments and nonprofit organizations; (2) provide support for the engineering structure required to launch the Hub and database; (3) support the steering committee of civil society actors that will maintain this service; and (4) create training units for law enforcement officials on supporting survivors of online harassment.

Recommendation 1: Create a committee for governmental departments.

Survivors of online harassment struggle to find recourse, failed by legal technicalities in patchworks of laws across states and untrained law enforcement. The root of the problem is an outdated understanding of the implications and scale of online harassment and a lack of coordination across branches of government on who should handle online harassment and how to properly address such occurrences. A crucial first step is to examine and address these existing gaps. The Task Force should form a long-term committee of members across governmental departments whose work pertains to online harassment. This would include one person from each of the following organizations, nominated by senior staff:

- Department of Homeland Security

- Department of Justice

- Federal Bureau of Investigation

- Department of Health and Human Services

- Office on Violence Against Women

- Federal Trade Commission

This committee will be responsible for outlining fallibilities in the existing system and detailing the kind of information needed to fill those gaps. Then, the committee will outline a framework clearly establishing the recourse options available to harassment victims and the kinds of data collection required to prove a case of harassment. The framework should be completed within the first 6 months after the committee has been convened. After that, the committee will convene twice a year to determine how well the framework is working and, in the long term, implement reforms and updates to current laws and processes to increase the success rates of victims seeking assistance from governmental agencies.

Recommendation 2: Establish a committee for civil society organizations.

The Task Force shall also convene civil society organizations to help form the AOH Hub steering committee and gather a centralized set of resources. Victims will be able to access a centralized hotline and information page, and Hub personnel will then triage reports and direct victims to resources most helpful for their particular situation. This should reduce the burden on those who are targets of harassment campaigns to find the appropriate organizations that can help address their issues by matching incidents to appropriate resources.

To create the AOH Hub, members of the Task Force can map out civil society stakeholders in the space and solicit applications to achieve comprehensive and equitable representation across sectors. Relevant organizations include organizations/actors working on (but not limited to):

- Combating domestic violence and intimate partner violence

- Addressing technology-facilitated gender-based violence (TF-GBV)

- Developing online tools for survivors of harassment to protect themselves

- Conducting policy work to improve policies on harassment

- Providing mental health support for survivors of harassment

- Providing pro bono or other forms of legal assistance for survivors of harassment

- Connecting tech company representatives with survivors of harassment

- Researching methods to address online harassment and abuse

The Task Force will convene an initial meeting, during which core members will be selected to create an advisory board, act as a liaison across members, and conduct hiring for the personnel needed to redirect victims to needed services. Other secondary members will take part in collaboratively mapping out and sharing available resources, in order to understand where efforts overlap and complement each other. These resources will be consolidated, reviewed, and published as a public database of resources within a year of the group’s formation.

For secondary members, their primary obligation will be to connect with victims who have been recommended to their services. Core members, meanwhile, will meet quarterly to evaluate gaps in services and assistance provided and examine what more needs to be done to continue growing the robustness of services and aid provided.

Recommendation 3: Convene committee for industry.

After its formation, the AOH steering committee will be responsible for conducting outreach with industry partners to identify a designated team from each company best equipped to address issues pertaining to online abuse. After the first year of formation, the industry committee will provide operational reporting on existing measures within each company to address online harassment and examine gaps in existing approaches. Committee dialogue should also aim to create standardized responses to harassment incidents across industry actors and understandings of how to best uphold community guidelines and terms of service. This reporting will also create a framework for standardized best practices for data collection, in terms of the information collected on flagged cases of online harassment.

On a day-to-day basis, industry teams will be available as resources for the Hub, and cases of harassment that require a personalized level of assistance can be redirected to these teams for person-to-person support. This committee will aim to increase transparency regarding the reporting process and improve equity in responses to online harassment.

Recommendation 4: Gather committees to provide long-term recommendations for policy change.

On a yearly basis, representatives across the three committees will convene and share insights on existing measures and takeaways. These recommendations will be given to the Task Force and other relevant stakeholders and will be accessible to the general public. Three years after the formation of these committees, the groups will publish a report centralizing feedback and takeaways from all committees and providing recommendations for improvement moving forward.

Recommendation 5: Create a data-collection mechanism and standard reporting procedures.

The database will be run and maintained by the steering committee with support from the U.S. Digital Service, with funding from the Task Force for its initial development. The data collection mechanism will be informed by the frameworks provided by the committees that compose the Hub to create a trauma-informed and victim-centered framework surrounding the collection, protection, and use of the contained data. The database will be periodically reviewed by the steering committee to ensure that the nature and scope of data collection is necessary and respects the privacy of those whose data it contains. Stakeholders can use this data to analyze and provide evidence of the scale and cross-cutting nature of online harassment and abuse. The database would be populated using a standardized reporting form containing (1) details of the incident; (2) basic demographic data of the victim; (3) platform/means through which the incident occurred; (4) whether it is part of a larger organized campaign; (5) current status of the incident (e.g., whether a message was taken down, an account was suspended, the report is still ongoing); (6) categorization within existing proposed taxonomies indicating the type of abuse. This standardization of data collection would allow advocates to build cases regarding structured campaigns of abuse with well-documented evidence, and the database will archive and collect data across incidents to ensure accountability even if the originals are lost or removed.
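As a rough illustration only, the six elements of the standardized reporting form could be represented as a simple record type. The sketch below is written in Python under stated assumptions: every class, field, and status name is hypothetical, and the actual schema would be defined by the steering committee and the frameworks the committees produce.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Dict, List, Optional


class IncidentStatus(Enum):
    """Hypothetical status values mirroring the examples in item (5)."""
    CONTENT_REMOVED = "content_removed"
    ACCOUNT_SUSPENDED = "account_suspended"
    REPORT_ONGOING = "report_ongoing"
    OTHER = "other"


@dataclass
class IncidentReport:
    """One submission of the reporting form (all field names are assumptions)."""
    incident_details: str                  # (1) details of the incident
    victim_demographics: Dict[str, str]    # (2) basic, optional demographic data
    platform: str                          # (3) platform/means of the incident
    part_of_campaign: Optional[bool]       # (4) None means "unknown"
    status: IncidentStatus                 # (5) current status of the incident
    abuse_categories: List[str] = field(default_factory=list)  # (6) taxonomy labels

    def missing_fields(self) -> List[str]:
        """Return the names of required fields that were left empty."""
        missing = []
        if not self.incident_details.strip():
            missing.append("incident_details")
        if not self.platform.strip():
            missing.append("platform")
        if not self.abuse_categories:
            missing.append("abuse_categories")
        return missing


report = IncidentReport(
    incident_details="Repeated threatening replies to a public post",
    victim_demographics={},          # demographics left blank by the reporter
    platform="ExampleSocial",        # placeholder platform name
    part_of_campaign=None,
    status=IncidentStatus.REPORT_ONGOING,
)
print(report.missing_fields())
```

Treating demographic data as optional and allowing "unknown" for the campaign question are design assumptions intended to keep the form low-burden for people reporting while under stress.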

The reporting form will be available online through the AOH Hub. Anyone with evidence of online harassment will be able to contribute to the database, including but not limited to victims of abuse, bystanders, researchers, civil society organizations, and platforms. To protect the privacy and safety of targets of harassment, this data will not be publicly available. Access will be limited to: (1) members of the Hub and its committees; (2) affiliates of the aforementioned members; (3) researchers and other stakeholders, after submitting an application stating reasons to access the data, plans for data use, and plans for maintaining data privacy and security. Published reports using data from this database will be nonidentifiable, such as with statistics being published in aggregate, and not be able to be linked back to individuals without express consent.
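One common way to keep published statistics nonidentifiable is to report only aggregate counts and to withhold any group that is too small. The Python sketch below illustrates that idea; the minimum group size of 5 and the function name are assumptions made for illustration, not a threshold or interface specified in this proposal.

```python
from collections import Counter
from typing import Dict, Iterable

MIN_CELL_SIZE = 5  # hypothetical threshold; the real value would be set by the Hub


def aggregate_by_platform(
    platforms: Iterable[str], min_cell_size: int = MIN_CELL_SIZE
) -> Dict[str, int]:
    """Count incidents per platform, withholding groups below the threshold.

    Small groups are pooled into an "other" bucket, which is itself published
    only if the pooled count reaches the threshold.
    """
    counts = Counter(platforms)
    published: Dict[str, int] = {}
    pooled = 0
    for platform, n in counts.items():
        if n >= min_cell_size:
            published[platform] = n
        else:
            pooled += n
    if pooled >= min_cell_size:
        published["other"] = pooled
    return published


# Placeholder platform names, not real data.
sample = ["A"] * 6 + ["B"] * 2 + ["C"] * 3
print(aggregate_by_platform(sample))
```

Suppressing small groups trades some detail for safety: a count of one or two incidents on a niche platform could otherwise point back to a specific person.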

This database is intended to provide data to inform the committees in and partners of the Hub of the existing landscape of technology-facilitated abuse and violence. The large-scale, cross-domain, and cross-platform nature of the data collected will allow for better understanding and analysis of trends that may not be clear when analyzing specific incidents, and provide evidence regarding disproportionate harms to particular communities (such as women, people of color, LGBTQ+ individuals). Resources permitting, the Hub could also survey those who have been impacted by online abuse and harassment to better understand the needs of victims and survivors. This data aims to provide evidence for and help inform the recommendations made from the committees to the Task Force for policy change and further interventions.

Recommendation 6: Improve law enforcement support.

Law enforcement is often ill-equipped to handle issues of technology-facilitated abuse and violence. To address this, Congress should allocate funding for the Hub to create training materials for law enforcement nationwide. The developed materials will be added to training manuals and modules nationwide, to ensure that 911 operators and officers are aware of how to handle cases of online harassment and how state and federal law can apply to a range of scenarios. As part of the training, operators will also be notified to add records of 911 calls regarding online harassment to the Hub database, with the survivor’s consent.

Conclusion

As technology-facilitated violence and abuse proliferates, we call for funding to create a steering committee in which experts and stakeholders from civil society, academia, industry, and government can collaborate on monitoring and regulating online harassment across sectors and incidents. The resulting Anti-Online Harassment Hub would maintain a data-collection mechanism accessible to researchers to better understand online harassment as well as provide accountability for social media platforms to address the issue. Finally, the Hub would provide accessible resources for targets of harassment in a fashion that would reduce the burden on these individuals. Implementing these measures would create a safer online space where survivors are able to easily access the support they need and establish a basis for evidence-based, longer-term policy change.

Platform policies on hate and harassment differ in the redress and resolution they offer. Twitter’s proactive removal of racist abuse toward members of the England football team after the UEFA Euro 2020 Finals shows that it is technically feasible for abusive content to be proactively detected and removed by the platforms themselves. However, this appears to only be for high-profile situations or for well-known individuals. For the general public, the burden of dealing with abuse usually falls to the targets to report messages themselves, even as they are in the midst of receiving targeted harassment and threats. Indeed, the current processes for reporting incidents of harassment are often opaque and confusing. Once a report is made, targets of harassment have very little control over the resolution of the report or the speed at which it is addressed. Platforms also have different policies on whether and how a user is notified after a moderation decision is made. A lot of these notifications are also conducted through automated systems with no way to appeal, leaving users with limited means for recourse.

Recent years have seen an increase in efforts to combat online harassment. Most notably, in June 2022, Vice President Kamala Harris launched a new White House Task Force to Address Online Harassment and Abuse, co-chaired by the Gender Policy Council and the National Security Council. The Task Force aims to develop policy solutions to enhance accountability of perpetrators of online harm while expanding data collection efforts and increasing access to survivor-centered services. In March 2022, the Biden-Harris Administration also launched the Global Partnership for Action on Gender-Based Online Harassment and Abuse, alongside Australia, Denmark, South Korea, Sweden, and the United Kingdom. The partnership works to advance shared principles and attitudes toward online harassment, improve prevention and response measures to gender-based online harassment, and expand data and access on gender-based online harassment.

Efforts focus on technical interventions, such as tools that increase individuals’ digital safety, automatically blur out slurs, or allow trusted individuals to moderate abusive messages directed towards victims’ accounts. There are also many guides that walk individuals through how to better manage their online presence or what to do in response to being targeted. Other organizations provide support for those who are victims and provide next steps, help with reporting, and information on better security practices. However, due to resource constraints, organizations may only be able to support specific types of targets, such as journalists, victims of intimate partner violence, or targets of gendered disinformation. This increases the burden on victims to find support for their specific needs. Academic institutions and researchers have also been developing tools and interventions that measure and address online abuse or improve content moderation. While there are increasing collaborations between academics and civil society, there are still gaps that prevent such interventions from being deployed to their full efficacy.

While complete privacy and security are extremely difficult to ensure in a technical sense, we envision a database design that preserves data privacy while maintaining its usability. First, the fields of information required for filing an incident report form would minimize the amount of personally identifiable information collected. As some data can be crowdsourced from the public and external observers, this part of the dataset would consist of existing public data. Nonpublicly available data would be entered only by individuals sharing incidents that target them (e.g., direct messages), and these individuals would be allowed to choose whether it is visible in the database or only shown in summary statistics. Furthermore, the data collection methods and the database structure will be periodically reviewed by the steering committee of civil society organizations, who will make recommendations for improvement as needed.
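The visibility choice described above can be sketched as a per-record flag. The Python example below is a minimal illustration under stated assumptions: the record type, its field names, and the two helper functions are hypothetical and are not part of any existing system.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class StoredIncident:
    """Hypothetical stored record; field names are illustrative only."""
    incident_id: int
    is_public_content: bool            # e.g., a public post observed by anyone
    reporter_allows_record_view: bool  # choice made by the person targeted
    description: str


def viewable_records(incidents: List[StoredIncident]) -> List[StoredIncident]:
    """Records that approved database users may read in full."""
    return [
        i for i in incidents
        if i.is_public_content or i.reporter_allows_record_view
    ]


def summary_count(incidents: List[StoredIncident]) -> int:
    """Every record counts toward summary statistics, visible or not."""
    return len(incidents)


incidents = [
    StoredIncident(1, True, False, "Public reply containing slurs"),
    StoredIncident(2, False, False, "Direct message, summary statistics only"),
    StoredIncident(3, False, True, "Direct message shared by the target"),
]
print([i.incident_id for i in viewable_records(incidents)])
print(summary_count(incidents))
```

In this sketch a private incident still contributes to aggregate counts even when its full record is hidden, which matches the option of being "only shown in summary statistics."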

Data collection and reporting can be conducted internationally, as we recognize that limiting data collection to the U.S. will also undermine our goals of intersectionality. However, the hotline will likely have more comprehensive support for U.S.-based issues. In the long run, however, efforts can also be expanded internationally, as a cross-collaborative effort across multinational governments.

Creating a Fair Work Ombudsman to Bolster Protections for Gig Workers

To increase protections for fair work, the U.S. Department of Labor (DOL) should create an Office of the Ombudsman for Fair Work. Gig workers are a category of non-employee contract workers who engage in on-demand work, often through online platforms. They have had historic vulnerabilities in the U.S. economy. A large portion of gig workers are people of color, and the nature of their temporary and largely unregulated work can leave them vulnerable to economic instability and workplace abuse. Currently, there is no federal mechanism to protect gig workers, and state-level initiatives have not offered thorough enough policy redress. Establishing an Office of the Ombudsman would provide the Department of Labor with a central entity to investigate worker complaints against gig employers, collect data and evidence about the current gig economy, and provide education to gig workers about their rights. There is strong precedent for this policy solution, since bureaus across the federal government have successfully implemented ombudsmen that are independent and support vulnerable constituents. To ensure its legal and long-lasting status, the Secretary of Labor should establish this Office in an act of internal agency reorganization.

Challenge and Opportunity

The proportion of the U.S. workforce engaging in gig work has risen steadily in the past few decades, from 10.1% in 2005 to 15.8% in 2015 to roughly 20% in 2018. Since the COVID-19 pandemic began, this trend has only accelerated, and a record number of Americans have now joined the gig economy and rely on its income. In a 2021 Pew Research study, over 16% of Americans reported having made money through online platform work alone, such as on apps like Uber and DoorDash, which is merely a subset of gig work jobs. Gig workers in particular are more likely to be Black or Latino compared to the overall workforce.

Though millions of Americans rely on gig work, it does not provide critical employee benefits, such as minimum wage guarantees, parental leave, healthcare, overtime, unemployment insurance, or recourse for injuries incurred during work. According to an NPR survey, in 2018 more than half of contract workers received zero benefits through work. Further, the National Labor Relations Act, which protects employees’ rights to unionize and collectively bargain without retaliation, does not protect gig workers. This lack of benefits, rights, and voice leaves millions of workers more vulnerable than full-time employees to predatory employers, financial instability, and health crises, particularly during emergencies—such as the COVID-19 pandemic.

Additionally, in 2022, inflation reached a decades-long high, and though the price of necessities has spiked, wages have not increased correspondingly. Extreme inflation hurts lower-income workers without savings the most and is especially dangerous to gig workers, some of whom make less than the federal minimum hourly wage and whose income and work are subject to constant flux.

State-level measures have as yet failed to create protections for all gig workers. California's AB5, passed in 2019 and in effect since January 2020, legally reclassified many gig workers as employees instead of independent contractors and thus entitled them to more benefits and protections. But further bills and Proposition 22 reverted several groups of gig workers, including online platform gig workers like Uber and DoorDash drivers, to being independent contractors. Ongoing litigation related to Proposition 22 leaves the future status of online platform gig workers in California unclear. In 2022, Washington State passed ESHB 2076, guaranteeing online platform workers, but not all gig workers, the benefits of full-time employees.

This sparse patchwork of state-level measures, which only supports subgroups of gig workers, could trigger a “race to the bottom” in which employers of gig workers relocate to less strict states. Additionally, inconsistencies between state laws make it harder for gig workers to understand their rights and gain redress for grievances, harder for businesses to determine with certainty their duties and liabilities, and harder for states to enforce penalties when an employer is headquartered in one state and the gig worker lives in another. The status quo is also difficult for businesses that strive to be better employers because it creates downward pressure on the entire landscape of labor market competition. Ultimately, only federal policy action can fully address these inconsistencies and broadly increase protections and benefits for all gig workers.

The federal ombudsman’s office outlined in this proposal can serve as a resource for gig workers to understand the scope of their current rights, provide a voice to amplify their grievances and harms, and collect data and evidence to inform policy proposals. It is the first step toward a sustainable and comprehensive national solution that expands the rights of gig workers.

Specifically, clarifying what rights, benefits, and means of recourse gig workers do and do not have would help gig workers better plan for healthcare and other emergent needs. It would also allow better tracking of trends in the labor market and systemic detection of employee misclassification. Hearing gig workers’ complaints in a centralized office can help the Department of Labor more expeditiously address gig workers’ concerns in situations where they legally do have recourse and can otherwise help the Department of Labor better understand the needs of and harms experienced by all workers. Collecting broad-ranging data on gig workers in particular could help inform federal policy change on their rights and protections. Currently, most datasets are survey based and often leave out people who were not working a gig job at the time the survey was conducted but typically otherwise do. More broadly, because of its informal and dynamic nature, the gig economy is difficult to accurately count and characterize, and an entity that is specifically charged with coordinating and understanding this growing sector of the market is key.

Lastly, employees who are not gig workers are sometimes misclassified as such and thus lose out on benefits and protections they are legally entitled to. Having a centralized ombudsman office dedicated to gig work could expedite support of gig workers seeking to correct their classification status, which the Wage and Hour Division already generally deals with, as well as help the Department of Labor and other agencies collect data to clarify the scope of the problem.

Plan of Action

The Department of Labor should establish an Office of the Ombudsman for Fair Work. This office should be independent of Department of Labor agencies and officials, and it should report directly to the Secretary of Labor. The Office would operate on a federal level with authority over states.

The Secretary of Labor should establish the Office in an act of internal agency reorganization. By establishing the Office such that its powers do not contradict the Department of Labor’s statutory limitations, the Secretary can ensure the Office’s status as legal and long-lasting, due to the discretionary power of the Department to interpret its statutes.

The role of the Office of the Ombudsman for Fair Work would be threefold: to serve as a centralized point of contact for hearing complaints from gig workers; to act as a central resource and conduct outreach to gig workers about their rights and protections; and to collect data such as demographic, wage, and benefit trends on the labor practices of the gig economy. Together, these responsibilities ensure that this Office consolidates and augments the actions of the Department of Labor as they pertain to workers in the gig economy, regardless of their classification status.

The functions of the ombudsman should be as follows:

- Establish a clear and centralized mechanism for hearing, collating, and investigating complaints from workers in the gig economy, such as through a helpline or mobile app.

- Establish and administer an independent, neutral, and confidential process to receive, investigate, resolve, and provide redress for cases in which employers misrepresent to individuals that they are engaged as independent contractors when they’re actually engaged as employees.

- Commence court proceedings to enforce fair work practices and entitlements, as they pertain to workers in the gig economy, in conjunction with other offices in the DOL.

- Represent employees or contractors who are or may become a party to proceedings in court over unfair contracting practices, including but not limited to misclassification as independent contractors. The office would refer matters to interagency partners within the Department of Labor and across other organizations engaged in these proceedings, augmenting existing work where possible.

- Provide education, assistance, and advice to employees, employers, and organizations, including best practice guides to workplace relations or workplace practices and information about rights and protections for workers in the gig economy.

- Conduct outreach in multiple languages to gig economy workers informing them of their rights and protections and of the Office’s role to hear and address their complaints and entitlements.

- Serve as the central data collection and publication office for all gig-work-related data. The Office will publish a yearly report detailing demographic, wage, and benefit trends faced by gig workers. Data could be collected through outreach to gig workers or their employers, or through a new data-sharing agreement with the Internal Revenue Service (IRS). This data report would also summarize anonymized trends based on the complaints collected through the complaint mechanism described in the first function above, including aggregate statistics on wage theft, reports of harassment or discrimination, and misclassification. These trends would also be broken down by demographic group to proactively identify salient inequities. The Office may also provide separate data on platform workers, which may be easier to collect and collate, since platform workers are a particular subject of focus in current state legislation and litigation.

Establishing an Office of the Ombudsman for Fair Work within the Department of Labor will require costs of compensation for the ombudsman and staff, other operational costs, and litigation expenses. To reflect the need for a reaction to the rapid ongoing changes in gig economy platforms, a small portion of the Office’s budget should be set aside to support the appointment of a chief innovation officer, aimed at examining how technology can strengthen its operations. Some examples of tasks for this role include investigating and strengthening complaint sorting infrastructure, utilizing artificial intelligence to evaluate contracts for misclassification, and streamlining request for proposal processes.

Due to the continued growth of the gig economy, and the precarious status of gig workers at the onset of an economic recession, this Office should be established in the nearest possible window. Establishing, appointing, and initiating this office will require up to a year and will require budgeting within the DOL.

There are many precedents of ombudsmen in federal office, including the Office of the Ombudsman for the Energy Employees Occupational Illness Compensation Program within the Department of Labor. Additionally, the IRS established the Office of the Taxpayer Advocate, and the Department of Homeland Security has both a Citizenship and Immigration Services Ombudsman and an Immigration Detention Ombudsman. These offices have helped educate constituents about their rights, resolved issues that an individual might have with that federal agency, and served as independent oversight bodies. The Australian Government has a Fair Work Ombudsman that provides resources to differentiate between an independent contractor and employee and investigates employers who may be engaging in sham contracting or other illegal practices. Following these examples, the Office of the Ombudsman for Fair Work should work within the Department of Labor to educate, assist, and provide redress for workers engaged in the gig economy.

Conclusion

How to protect gig workers is a long-standing open question for labor policy and is likely to require more attention as post-pandemic conditions affect labor trends. The federal government needs a solution to the issues of vulnerability and instability experienced by gig workers, and this solution needs to operate independently of legislation that may take longer to gain consensus on. Establishing an office of an ombudsman is the first step to increase federal oversight for gig work. The ombudsman will use data, reporting, and individual worker cases to build a clearer picture of how to create redress for laborers who have been harmed by gig work, which will provide greater visibility into the status and concerns of gig workers. It will additionally serve as a single point of entry for gig workers and businesses to learn about their rights and for gig workers to lodge complaints. If made a reality, this office will be an influential first step in changing the entire policy ecosystem regarding gig work.

There is a current definitional debate about whether gig workers and platform workers are employees or contractors. Until this issue of misclassification can be resolved, there will likely not be a comprehensive state or federal policy governing gig work. However, the office of an ombudsman would be able to serve as the central point within the Department of Labor to handle gig worker issues, and it would be the entity tasked with collecting and publishing data about this class of laborers. This would help elevate the problems gig workers face as well as paint a picture of the extent of the issue for future legislation.

Each ombudsman will be appointed for a six-year period, to ensure insulation from partisan politics.

States often do not have adequate solutions to handle the discrepancies between employees and contractors. There is also the “race to the bottom” issue, where if protections are increased in one state, gig employers will simply relocate to states where the policies are less stringent. Further, there is the issue of gig companies being headquartered in one state while employees work in another. It makes sense for the Department of Labor to house a central, federal mechanism to handle gig work.

The key challenge right now is for the federal government to collect data and solve issues regarding protections for gig work. The office of the ombudsman’s broadly defined mandate is actually an advantage in this still-developing conversation about gig work.

Establishing a new Department of Labor office is no small feat. It requires a clear definition of the ombudsman’s goals and permitted activities, which in turn requires buy-in from key DOL bureaucrats. The office would also have to recruit, hire, and train staff. These tasks may slow the proposal’s launch. Since DOL plans its budget several years in advance, this proposal would likely be targeted for the 2026 cycle.

Establishing an AI Center of Excellence to Address Maternal Health Disparities

Maternal mortality is a crisis in the United States. Yet more than 60% of maternal deaths are preventable—with the right evidence-based interventions. Data is a powerful tool for uncovering best care practices. While healthcare data, including maternal health data, has been generated at a massive scale by the widespread adoption and use of Electronic Health Records (EHR), much of this data remains unstandardized and unanalyzed. Further, while many federal datasets related to maternal health are openly available through initiatives set forth in the Open Government National Action Plan, there is no central coordinating body charged with analyzing this breadth of data. Advancing data harmonization, research, and analysis are foundational elements of the Biden Administration’s Blueprint for Addressing the Maternal Health Crisis. As a data-driven technology, artificial intelligence (AI) has great potential to support maternal health research efforts. Examples of promising applications of AI include using electronic health data to predict whether expectant mothers are at risk of difficulty during delivery. However, further research is needed to understand how to effectively implement this technology in a way that promotes transparency, safety, and equity. The Biden-Harris Administration should establish an AI Center of Excellence to bring together data sources and then analyze, diagnose, and address maternal health disparities, all while demonstrating trustworthy and responsible AI principles.

Challenge and Opportunity

Maternal deaths currently average around 700 per year, and severe maternal morbidity-related conditions impact upward of 60,000 women annually. Stark maternal health disparities persist in the United States, and pregnancy outcomes are subject to substantial racial/ethnic disparities, including maternal morbidity and mortality. According to the Centers for Disease Control and Prevention (CDC), “Black women are three times more likely to die from a pregnancy-related cause than White women.” Research is ongoing to specifically identify the root causes, which include socioeconomic factors such as insurance status, access to healthcare services, and risks associated with social determinants of health. For example, maternity care deserts exist in counties throughout the country where maternal health services are substantially limited or not available, impacting an estimated 2.2 million women of child-bearing age.

Many federal, public, and private datasets exist to understand the conditions that impact pregnant people, the quality of the care they receive, and ultimate care outcomes. For example, the CDC collects abundant data on maternal health, including the Pregnancy Mortality Surveillance System (PMSS) and the National Vital Statistics System (NVSS). Many of these datasets, however, have yet to be analyzed at scale or linked to other federal or privately held data sources in a comprehensive way. More broadly, an estimated 30% of the data generated globally is produced by the healthcare industry. AI is uniquely designed for data management, including cataloging, classification, and data integration. AI will play a pivotal role in the federal government’s ability to process an unprecedented volume of data to generate evidence-based recommendations to improve maternal health outcomes.

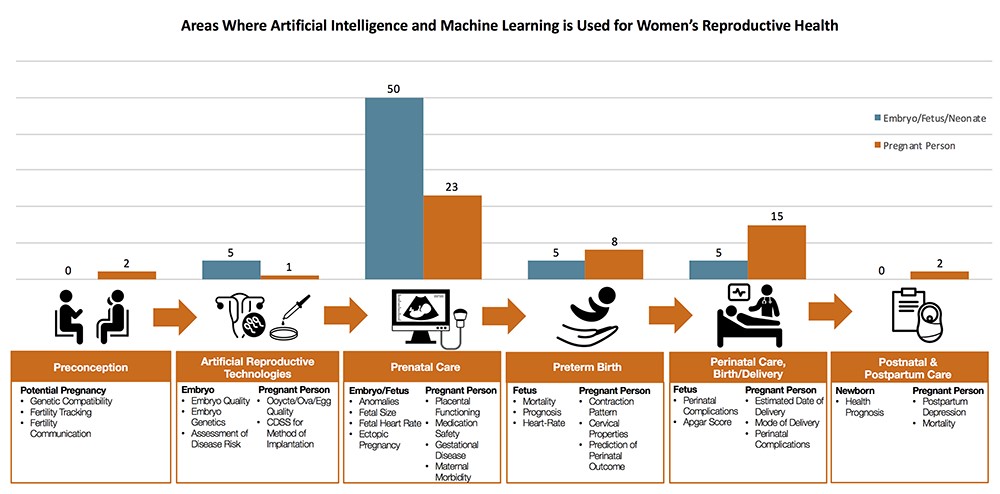

Applications of AI have rapidly proliferated throughout the healthcare sector due to their potential to reduce healthcare expenditures and improve patient outcomes (Figure 1). Several applications of this technology exist across the maternal health continuum and are shown in the figure. For example, evidence suggests that AI can help clinicians identify more than 70% of at-risk moms during the first trimester by analyzing patient data and identifying patterns associated with poor health outcomes. Based on its findings, AI can provide recommendations for which patients will most likely be at risk for pregnancy challenges before they occur. Research has also demonstrated the use of AI in fetal health monitoring.

Source: Davidson and Boland (2021).

Yet for all of AI’s potential, there is a significant dearth of consumer and medical provider understanding of how these algorithms work. Policy analysts argue that “algorithmic discrimination” and feedback loops in algorithms, which may exacerbate algorithmic bias, are potential risks of using AI in healthcare outside of the confines of an ethical framework. In response, certain federal entities such as the Department of Defense, the Office of the Director of National Intelligence, the National Institute of Standards and Technology, and the U.S. Department of Health and Human Services have published and adopted guidelines for implementing data privacy practices and building public trust in AI. Further, past Day One authors have proposed the establishment of testbeds for government-procured AI models used to provide services to U.S. citizens. This is critical for enhancing the safety and reliability of AI systems while reducing the risk of perpetuating existing structural inequities.

It is vital to demonstrate safe, trustworthy uses of AI and measure the efficacy of these best practices through applications of AI to real-world societal challenges. For example, potential use cases of AI for maternal health include a social determinants of health [SDoH] extractor, which combines AI with clinical notes to more effectively identify SDoH information and analyze its potential role in health inequities. A center dedicated to ethically developing AI for maternal health would allow for the development of evidence-based guidelines for broader AI implementation across healthcare systems throughout the country. Lessons learned from this effort will contribute to the knowledge base around ethical AI and enable development of AI solutions for health disparities more broadly.

Plan of Action

To meet the calls for advancing data collection, standardization, transparency, research, and analysis to address the maternal health crisis, the Biden-Harris Administration should establish an AI Center of Excellence for Maternal Health. The Center will bring together data sources, then analyze, diagnose, and address maternal health disparities, all while demonstrating trustworthy and responsible AI principles. The Center should be created within the Department of Health and Human Services (HHS) and work closely with relevant offices throughout HHS and beyond, including the HHS Office of the Chief Artificial Intelligence Officer (OCAIO), the National Institutes of Health (NIH) IMPROVE initiative, the CDC, the Veterans Health Administration (VHA), and the National Institute of Standards and Technology (NIST). The Center should offer competitive salaries to recruit the best and brightest talent in AI, human-centered design, biostatistics, and human-computer interaction.

The first priority should be to work with all agencies tasked by the White House Blueprint for Addressing the Maternal Health Crisis to collect and evaluate data. This includes privately held EHR data that is made available through the Qualified Health Information Network (QHIN) and federal data from the CDC, the Centers for Medicare & Medicaid Services (CMS), the Office of Personnel Management (OPM), the Health Resources and Services Administration (HRSA), NIH, the United States Department of Agriculture (USDA), the Department of Housing and Urban Development (HUD), the Veterans Health Administration, and the Environmental Protection Agency (EPA), all of which contain datasets relevant to maternal health at different stages of the reproductive health journey shown in Figure 1. The Center should serve as a data clearing and cleaning shop, preparing these datasets using best practices for data management, preparation, and labeling.

The second priority should be to evaluate existing datasets to establish high-priority, high-impact applications of AI-enabled research for improving clinical care guidelines and tools for maternal healthcare providers. These AI demonstrations should be aligned with the White House’s Action Plan and be focused on implementing best practices for AI development, such as the AI Risk Management Framework developed by NIST. The following examples demonstrate how AI might help address maternal health disparities, based on priority areas informed by clinicians in the field:

- AI implementation should be explored for analysis of electronic health records from the VHA and QHIN to predict patients who have a higher risk of pregnancy and/or delivery complications.

- Drawing on the robust data collection and patient surveillance capabilities of the VHA and HRSA, AI should be explored for the deployment of digital tools to help monitor patients during pregnancy to ensure adequate and consistent use of prenatal care.

- Using VHA data and QHIN data, AI should be explored in supporting patient monitoring in instances of patient referrals and/or transfers to hospitals that are appropriately equipped to serve high-risk patients, following guidelines provided by the American College of Obstetricians and Gynecologists.

- Data on housing from HUD, rural development from the USDA, environmental health from the EPA, and social determinants of health research from the CDC should be connected to risk factors for maternal mortality in the academic literature to create an AI-powered risk algorithm.

- Understand the power of payment models operated by CMS and OPM for novel strategies to enhance maternal health outcomes and reduce maternal deaths.

The final priority should be direct translation of the findings from AI to federal policymaking around reducing maternal health disparities as well as ethical development of AI tools. Research findings for both aspects of this interdisciplinary initiative should be framed using Living Evidence models that help ensure that research-derived evidence and guidance remain current.

The Center should be able to meet the following objectives within the first year after creation to further the case for future federal funding and creation of more AI Centers of Excellence for healthcare:

- Conduct a study on the use cases uncovered for AI to help address maternal health disparities explored through the various demonstration projects.

- Publish a report of study findings, which should be submitted to Congress with recommendations to help inform funding priorities for subsequent research activities.

- Make study findings available to the public to help build public trust in AI.

Successful piloting of the Center could be made possible by passage of an equivalent bill to S.893 in the current Congress. This is a critical first step in supporting this work. In March 2021, S.893, the Tech to Save Moms Act, was introduced in the Senate to fund research conducted by the National Academies of Sciences, Engineering, and Medicine to understand the role of AI in maternal care delivery and its impact on bias in maternal health. Passage of an equivalent bill into law would enable the National Academies of Sciences, Engineering, and Medicine to conduct research in parallel with HHS to generate more findings and to broaden potential impact.

Conclusion

The United States has the highest rate of maternal health disparities among all developed countries. Yet more than 60% of pregnancy-related deaths are preventable, highlighting a critical opportunity to uncover the factors impeding more equitable health outcomes for the nation as a whole. Legislative support for research to understand AI’s role in addressing maternal health disparities will affirm the nation’s commitment to ensuring that we are prepared to thrive in a 21st century influenced and shaped by next-generation technologies such as artificial intelligence.

Creating Auditing Tools for AI Equity

The unregulated use of algorithmic decision-making systems (ADS)—systems that crunch large amounts of personal data and derive relationships between data points—has negatively affected millions of Americans. These systems impact equitable access to education, housing, employment, and healthcare, with life-altering effects. For example, commercial algorithms used to guide health decisions for approximately 200 million people in the United States each year were found to systematically discriminate against Black patients, reducing, by more than half, the number of Black patients who were identified as needing extra care.

One way to combat algorithmic harm is by conducting system audits, yet there are currently no standards for auditing AI systems at the scale necessary to ensure that they operate legally, safely, and in the public interest. According to one research study examining the ecosystem of AI audits, only one percent of AI auditors believe that current regulation is sufficient.

To address this problem, the National Institute of Standards and Technology (NIST) should invest in the development of comprehensive AI auditing tools, and federal agencies with the charge of protecting civil rights and liberties should collaborate with NIST to develop these tools and push for comprehensive system audits.

These auditing tools would help the enforcement arms of these federal agencies save time and money while fulfilling their statutory duties. Additionally, there is a pressing need to develop these tools now, with Executive Order 13985 instructing agencies to “focus their civil rights authorities and offices on emerging threats, such as algorithmic discrimination in automated technology.”

Challenge and Opportunity

The use of AI systems across all aspects of life has become commonplace as a way to improve decision-making and automate routine tasks. However, their unchecked use can perpetuate historical inequities, such as discrimination and bias, while also potentially violating American civil rights.

Algorithmic decision-making systems are often used in prioritization, classification, association, and filtering tasks in a way that is heavily automated. ADS become a threat when people uncritically rely on the outputs of a system, use them as a replacement for human decision-making, or use systems with no knowledge of how they were developed. These systems, while extremely useful and cost-saving in many circumstances, must be created in a way that is equitable and secure.

Ensuring the legal and safe use of ADS begins with recognizing the challenges that the federal government faces. On the one hand, the government wants to avoid devoting excessive resources to managing these systems. With new AI system releases happening every day, it is becoming unreasonable to oversee every system closely. On the other hand, we cannot blindly trust all developers and users to make appropriate choices with ADS.

This is where tools for the AI development lifecycle come into play, offering a third alternative between constant monitoring and blind trust. By implementing auditing tools and signatory practices, AI developers will be able to demonstrate compliance with preexisting and well-defined standards while enhancing the security and equity of their systems.

Due to the extensive scope and diverse applications of AI systems, it would be difficult for the government to create a centralized body to oversee all systems or demand each agency develop solutions on its own. Instead, some responsibility should be shifted to AI developers and users, as they possess the specialized knowledge and motivation to maintain properly functioning systems. This allows the enforcement arms of federal agencies tasked with protecting the public to focus on what they do best: safeguarding citizens’ civil rights and liberties.

Plan of Action

To ensure security and verification throughout the AI development lifecycle, a suite of auditing tools is necessary. These tools should help enable the outcomes we care about: fairness, equity, and legality. The results of these audits should be reported, for example, in an immutable ledger that is only accessible by authorized developers and enforcement bodies, or through a verifiable code-signing mechanism. We leave the specifics of the reporting and documentation process to the stakeholders involved, as each agency may have different reporting structures and needs. Other possible options, such as manual audits or audits conducted without the use of tools, may not provide the same level of efficiency, scalability, transparency, accuracy, or security.
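One way to picture the ledger option is a hash chain, in which each audit entry stores the hash of the entry before it so that later edits become detectable. The following is only a minimal sketch under stated assumptions: the function names, record fields, and in-memory list are hypothetical, access control is omitted, and a real deployment would be designed by the agencies and developers involved.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


def entry_hash(record: dict, prev_hash: str) -> str:
    """Hash a record together with the hash of the entry before it."""
    payload = json.dumps({"record": record, "prev": prev_hash}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_entry(ledger: list, record: dict) -> None:
    """Append an audit record, chaining it to the previous entry."""
    prev_hash = ledger[-1]["hash"] if ledger else GENESIS_HASH
    ledger.append({"record": record, "prev": prev_hash,
                   "hash": entry_hash(record, prev_hash)})


def verify_ledger(ledger: list) -> bool:
    """Return True only if every entry matches its hash and links to its predecessor."""
    prev_hash = GENESIS_HASH
    for entry in ledger:
        if entry["prev"] != prev_hash:
            return False
        if entry["hash"] != entry_hash(entry["record"], prev_hash):
            return False
        prev_hash = entry["hash"]
    return True


# Hypothetical audit results for two lifecycle stages.
ledger = []
append_entry(ledger, {"stage": "data", "check": "representation", "passed": True})
append_entry(ledger, {"stage": "model", "check": "fairness", "passed": True})
```

Changing any stored record after the fact causes `verify_ledger` to return `False`, which is the property an enforcement body would rely on. The sketch does not detect entries dropped from the end of the list; a production ledger would need additional safeguards for that.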

The federal government’s role is to provide the necessary tools and processes for self-regulatory practices. Heavy-handed regulations or excessive government oversight are not well-received in the tech industry, which argues that they tend to stifle innovation and competition. AI developers also have concerns about safeguarding their proprietary information and users’ personal data, particularly in light of data protection laws.

Auditing tools provide a solution to this challenge by enabling AI developers to share and report information in a transparent manner while still protecting sensitive information. This allows for a balance between transparency and privacy, providing the necessary trust for a self-regulating ecosystem.

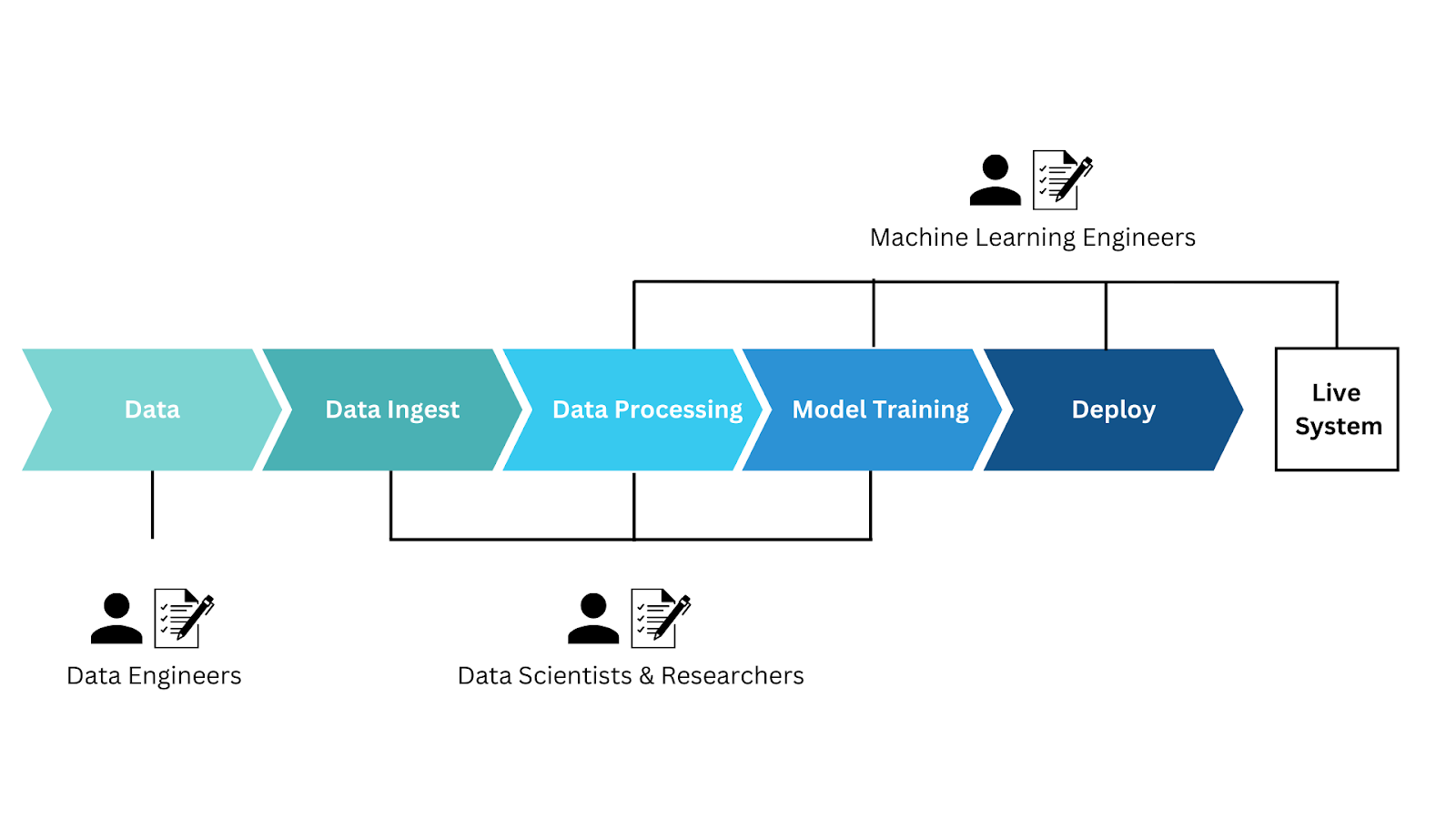

A general machine learning lifecycle, with examples of the system developers at each stage who would be responsible for signing off on the use of the security and equity tools. These developers represent companies, teams, or individuals.

The equity tool and process, funded and developed by government agencies such as NIST, would consist of a combination of (1) AI auditing tools for security and fairness (which could be based on or incorporate open source tools such as AI Fairness 360 and the Adversarial Robustness Toolbox), and (2) a standardized process and guidance for integrating these checks (which could be based on or incorporate guidance such as the U.S. Government Accountability Office’s Artificial Intelligence: An Accountability Framework for Federal Agencies and Other Entities).
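To make the idea of a fairness check concrete, the sketch below computes one common group-fairness measure, the ratio of favorable-outcome rates between groups. It is written in plain Python rather than with any particular toolkit; the data, group labels, and the 0.8 cutoff (loosely modeled on the four-fifths rule of thumb from employment contexts) are assumptions for illustration, and each agency would need to define the metrics and thresholds that match its own legal standards.

```python
from collections import defaultdict


def selection_rates(groups, outcomes):
    """Share of favorable outcomes (1) per group."""
    totals = defaultdict(int)
    favorable = defaultdict(int)
    for group, outcome in zip(groups, outcomes):
        totals[group] += 1
        favorable[group] += outcome
    return {g: favorable[g] / totals[g] for g in totals}


def disparate_impact_ratio(groups, outcomes, reference_group):
    """Each group's selection rate divided by the reference group's rate."""
    rates = selection_rates(groups, outcomes)
    ref = rates[reference_group]  # assumed nonzero in this sketch
    return {g: rate / ref for g, rate in rates.items()}


# Hypothetical data: 1 = flagged for extra care, 0 = not flagged.
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
outcomes = [1, 1, 1, 0, 1, 0, 0, 0]

ratios = disparate_impact_ratio(groups, outcomes, reference_group="A")
THRESHOLD = 0.8  # assumed cutoff; an agency would set its own
flagged = [g for g, r in ratios.items() if r < THRESHOLD]
```

In this made-up example, group B receives the favorable outcome at one third the rate of group A, so it falls below the assumed threshold and would be flagged for closer review.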

Dioptra, a recent effort between NIST and the National Cybersecurity Center of Excellence (NCCoE) to build machine learning testbeds for security and robustness, is an excellent example of the type of lifecycle management application that would ideally be developed. Failure to protect civil rights and ensure equitable outcomes must be treated as seriously as security flaws, as both impact our national security and quality of life.

Equity considerations should be applied across the entire lifecycle; training data is not the only possible source of problems. Inappropriate data handling, model selection, algorithm design, and deployment also contribute to unjust outcomes. This is why tools combined with specific guidance are essential.

As some scholars note, “There is currently no available general and comparative guidance on which tool is useful or appropriate for which purpose or audience. This limits the accessibility and usability of the toolkits and results in a risk that a practitioner would select a sub-optimal or inappropriate tool for their use case, or simply use the first one found without being conscious of the approach they are selecting over others.”

Companies utilizing the various packaged tools on their ADS could sign off on the results using code signing. This would create a record that these organizations ran these audits along their development lifecycle and received satisfactory outcomes.

We envision a suite of auditing tools, each tool applying to a specific agency and enforcement task. Precedents for this type of technology already exist. Much like security became a part of the software development lifecycle with guidance developed by NIST, equity and fairness should be integrated into the AI lifecycle as well. NIST could spearhead a government-wide initiative on AI auditing tools, leading guidance, distribution, and maintenance of such tools. NIST is an appropriate choice considering its history of evaluating technology and providing guidance around the development and use of specific AI applications such as the NIST-led Face Recognition Vendor Test (FRVT).

Areas of Impact & Agencies / Departments Involved

| Area of Impact | Agencies / Departments Involved |
| --- | --- |
| Security & Justice | The U.S. Department of Justice, Civil Rights Division, Special Litigation Section; Department of Homeland Security; U.S. Customs and Border Protection; U.S. Marshals Service |
| Public & Social Sector | The U.S. Department of Housing and Urban Development’s Office of Fair Housing and Equal Opportunity |
| Education | The U.S. Department of Education |
| Environment | The U.S. Department of Agriculture, Office of the Assistant Secretary for Civil Rights; The Federal Energy Regulatory Commission; The Environmental Protection Agency |
| Crisis Response | Federal Emergency Management Agency |
| Health & Hunger | The U.S. Department of Health and Human Services, Office for Civil Rights; Centers for Disease Control and Prevention; The Food and Drug Administration |
| Economic | The Equal Employment Opportunity Commission; The U.S. Department of Labor, Office of Federal Contract Compliance Programs |
| Infrastructure | The U.S. Department of Transportation, Office of Civil Rights; The Federal Aviation Administration; The Federal Highway Administration |
| Information Verification & Validation | The Federal Trade Commission; The Federal Communications Commission; The Securities and Exchange Commission |

Many of these tools are open source and free to the public. A first step could be combining these tools with agency-specific standards and plain language explanations of their implementation process.

Benefits

These tools would provide several benefits to federal agencies and developers alike. First, they allow organizations to protect their data and proprietary information while performing audits. Any audits, whether on the data, model, or overall outcomes, would be run and reported by the developers themselves. Developers of these systems are the best choice for this task since ADS applications vary widely, and the particular audits needed depend on the application.

Second, while many developers may opt to use these tools voluntarily, standardizing and mandating their use would make it easy to assess any system thought to be in violation of the law. In this way, the federal government will be able to manage standards more efficiently and effectively.

Third, although this tool would be designed for the AI lifecycle that results in ADS, it can also be applied to traditional auditing processes. Metrics and evaluation criteria will need to be developed based on existing legal standards and evaluation processes; once these metrics are distilled for incorporation into a specific tool, this tool can be applied to non-ADS data as well, such as outcomes or final metrics from traditional audits.

Fourth, we believe that a strong signal from the government that equity considerations in ADS are important and easily enforceable will impact AI applications more broadly, normalizing these considerations.

Example of Opportunity

An agency that might use this tool is the Department of Housing and Urban Development (HUD), whose purpose is to ensure that housing providers do not discriminate based on race, color, religion, national origin, sex, familial status, or disability.

To enforce these standards, HUD, which is responsible for 21,000 audits a year, investigates and audits housing providers to assess compliance with the Fair Housing Act, the Equal Credit Opportunity Act, and other related regulations. During these audits, HUD may review a provider’s policies, procedures, and records, as well as conduct on-site inspections and tests to determine compliance.

Using an AI auditing tool could streamline and enhance HUD’s auditing processes. In cases where ADS were used and suspected of harm, HUD could ask for verification that an auditing process was completed and specific metrics were met, or require that such a process be undergone and reported to them.

Noncompliance with legal standards of nondiscrimination would apply to ADS developers as well, and we envision the enforcement arms of protection agencies would apply the same penalties in these situations as they would in non-ADS cases.

R&D

To make this approach feasible, NIST will require funding and policy support to implement this plan. The recent CHIPS and Science Act has provisions to support NIST’s role in developing “trustworthy artificial intelligence and data science,” including the testbeds mentioned above. Research and development can be partially contracted out to universities and other national laboratories or through partnerships/contracts with private companies and organizations.

The first iterations will need to be developed in partnership with an agency interested in integrating an auditing tool into its processes. The specific tools and guidance developed by NIST must be applicable to each agency’s use case.

The auditing process would include auditing data, models, and other information vital to understanding a system’s impact and use, informed by existing regulations/guidelines. If a system is found to be noncompliant, the enforcement agency has the authority to impose penalties or require changes to be made to the system.

Pilot program

NIST should develop a pilot program to test the feasibility of AI auditing. It should be conducted on a smaller group of systems to test the effectiveness of the AI auditing tools and guidance and to identify any potential issues or areas for improvement. NIST should use the results of the pilot program to inform the development of standards and guidelines for AI auditing moving forward.

Collaborative efforts

Achieving a self-regulating ecosystem requires collaboration. The federal government should work with industry experts and stakeholders to develop the necessary tools and practices for self-regulation.

A multistakeholder team from NIST, federal agency issue experts, and ADS developers should be established during the development and testing of the tools. Collaborative efforts will help delineate responsibilities, with AI creators and users responsible for implementing and maintaining compliance with the standards and guidelines, and agency enforcement arms responsible for ensuring continued compliance.

Regular monitoring and updates

The enforcement agencies will continuously monitor and update the standards and guidelines to keep them up to date with the latest advancements and to ensure that AI systems continue to meet the legal and ethical standards set forth by the government.

Transparency and record-keeping

Code-signing technology can be used to provide transparency and record-keeping for ADS. This can be used to store information on the auditing outcomes of the ADS, making reporting easy and verifiable and providing a level of accountability to users of these systems.

Conclusion

Creating auditing tools for ADS presents a significant opportunity to enhance equity, transparency, accountability, and compliance with legal and ethical standards. The federal government can play a crucial role in this effort by investing in the research and development of tools, developing guidelines, gathering stakeholders, and enforcing compliance. By taking these steps, the government can help ensure that ADS are developed and used in a manner that is safe, fair, and equitable.

Code signing is used to establish trust in code that is distributed over the internet or other networks. By digitally signing the code, the code signer is vouching for its identity and taking responsibility for its contents. When users download code that has been signed, their computer or device can verify that the code has not been tampered with and that it comes from a trusted source.

Code signing can be extended to all parts of the AI lifecycle as a means of verifying the authenticity, integrity, and function of a particular piece of code or a larger process. After each step in the auditing process, code signing enables developers to leave a well-documented trail for enforcement bodies/auditors to follow if a system were suspected of unfair discrimination or unsafe operation.
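One way to picture such a trail is a hash-chained log, sketched below under stated assumptions. Each audit entry records a digest of the previous entry, so altering any earlier step becomes detectable. The `AuditEntry` fields, the step names, and the helper functions are hypothetical. In practice each entry's digest would also be signed by the party responsible for that step, which this sketch omits.

```python
import hashlib
import json
from dataclasses import dataclass


@dataclass(frozen=True)
class AuditEntry:
    step: str        # lifecycle stage, e.g. "data collection" (hypothetical)
    outcome: str     # what the audit recorded at this stage
    prev_hash: str   # digest of the previous entry ("" for the first entry)
    entry_hash: str  # digest covering step, outcome, and prev_hash


def _digest(step: str, outcome: str, prev_hash: str) -> str:
    payload = json.dumps([step, outcome, prev_hash]).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def append_entry(trail: list[AuditEntry], step: str, outcome: str) -> list[AuditEntry]:
    """Return a new trail with one more entry linked to the last one."""
    prev_hash = trail[-1].entry_hash if trail else ""
    entry = AuditEntry(step, outcome, prev_hash, _digest(step, outcome, prev_hash))
    return trail + [entry]


def trail_is_intact(trail: list[AuditEntry]) -> bool:
    """Check every link and every digest from the first entry onward."""
    prev_hash = ""
    for entry in trail:
        if entry.prev_hash != prev_hash:
            return False
        if entry.entry_hash != _digest(entry.step, entry.outcome, entry.prev_hash):
            return False
        prev_hash = entry.entry_hash
    return True


trail: list[AuditEntry] = []
for step, outcome in [
    ("data collection", "documented"),
    ("model training", "documented"),
    ("fairness audit", "passed"),
]:
    trail = append_entry(trail, step, outcome)
```

An auditor who receives the trail can call `trail_is_intact(trail)` to confirm that no recorded step was edited, removed, or reordered after the fact.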

Code signing is not essential for this project’s success, and we believe that the specifics of the auditing process, including documentation, are best left to individual agencies and their needs. However, code signing could be a useful piece of any tools developed.

Additionally, there may be pushback on the tool design. It is important to remember that currently, engineers often use fairness tools at the end of a development process, as a last box to check, instead of as an integrated part of the AI development lifecycle. These concerns can be addressed by emphasizing the comprehensive approach taken and by developing the necessary guidance to accompany these tools—which does not currently exist.

Example #1: Healthcare

New York regulators are calling on UnitedHealth Group to either stop using or prove there is no problem with a company-made algorithm that researchers say exhibited significant racial bias. This algorithm, which UnitedHealth Group sells to hospitals for assessing the health risks of patients, assigned similar risk scores to white patients and Black patients despite the Black patients being considerably sicker.

In this case, researchers found that changing just one parameter could generate “an 84% reduction in bias.” If we had specific information on the parameters going into the model and how they are weighted, we would have a record-keeping system to see how certain interventions affected the output of this model.
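As a rough sketch of what such a record could look like, the snippet below stores one intervention together with a bias measurement taken before and after it, and computes the relative reduction as (before - after) / before. The `InterventionRecord` class, the parameter name, and the numbers are all hypothetical and are not drawn from the study or from any real model. The choice of bias metric is also left open.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class InterventionRecord:
    parameter: str      # which model input or weight was changed
    bias_before: float  # bias metric before the change (larger means more bias)
    bias_after: float   # the same metric after the change

    def relative_reduction(self) -> float:
        """Fraction of the original bias removed by this intervention."""
        if self.bias_before <= 0:
            raise ValueError("bias_before must be positive")
        return (self.bias_before - self.bias_after) / self.bias_before


# Illustrative numbers only, not taken from any real system.
record = InterventionRecord("hypothetical_target_label", 0.5, 0.125)
reduction = record.relative_reduction()
```

With these illustrative values, `reduction` is 0.75, meaning a 75% reduction in the chosen bias metric. Keeping such records for every intervention would let an auditor trace which changes moved the metric and by how much.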

Bias in AI systems used in healthcare could potentially violate the Constitution’s Equal Protection Clause, which prohibits discrimination on the basis of race. If the algorithm is found to have a disproportionately negative impact on a certain racial group, this could be considered discrimination. It could also potentially violate the Due Process Clause, which protects against arbitrary or unfair treatment by the government or a government actor. If an algorithm used by hospitals, which are often funded by the government or regulated by government agencies, is found to exhibit significant racial bias, this could be considered unfair or arbitrary treatment.

Example #2: Policing

A UN panel on the Elimination of Racial Discrimination has raised concern over the increasing use of technologies like facial recognition in law enforcement and immigration, warning that it can exacerbate racism and xenophobia and potentially lead to human rights violations. The panel noted that while AI can enhance performance in some areas, it can also have the opposite effect as it reduces trust and cooperation from communities exposed to discriminatory law enforcement. Furthermore, the panel highlighted the risk that these technologies could draw on biased data, creating a “vicious cycle” of overpolicing in certain areas and more arrests. It recommended more transparency in the design and implementation of algorithms used in profiling and the implementation of independent mechanisms for handling complaints.

A case study on the Chicago Police Department’s Strategic Subject List (SSL) discusses an algorithm-driven technology used by the department to identify individuals at high risk of being involved in gun violence and inform its policing strategies. However, a study by the RAND Corporation on an early version of the SSL found that it was not successful in reducing gun violence or reducing the likelihood of victimization, and that inclusion on the SSL only had a direct effect on arrests. The study also raised significant privacy and civil rights concerns. Additionally, findings reveal that more than one-third of individuals on the SSL have never been arrested or been a victim of a crime, yet approximately 70% of that cohort received a high-risk score. Furthermore, 56% of Black men under the age of 30 in Chicago have a risk score on the SSL. This demographic has also been disproportionately affected by the CPD’s past discriminatory practices and issues, including torturing Black men between 1972 and 1994, performing unlawful stops and frisks disproportionately on Black residents, engaging in a pattern or practice of unconstitutional use of force, poor data collection, and systemic deficiencies in training and supervision, accountability systems, and conduct disproportionately affecting Black and Latino residents.

Predictive policing, which uses data and algorithms to try to predict where crimes are likely to occur, has been criticized for reproducing and reinforcing biases in the criminal justice system. This can lead to discriminatory practices and violations of the Fourth Amendment’s prohibition on unreasonable searches and seizures, as well as the Fourteenth Amendment’s guarantee of equal protection under the law. Bias in policing more generally can also violate these constitutional provisions, including the Fourth Amendment’s prohibition on excessive force.

Example #3: Recruiting

ADS in recruiting crunch large amounts of personal data and, given some objective, derive relationships between data points. The aim is to use systems capable of processing more data than a human ever could to uncover hidden relationships and trends that will then provide insights for people making all types of difficult decisions.

Hiring managers across different industries use ADS every day to aid in the decision-making process. In fact, a 2020 study reported that 55% of human resources leaders in the United States use predictive algorithms across their business practices, including hiring decisions.

For example, employers use ADS to screen and assess candidates during the recruitment process and to identify best-fit candidates based on publicly available information. Some systems even analyze facial expressions during interviews to assess personalities. These systems promise organizations a faster, more efficient hiring process. ADS do theoretically have the potential to create a fairer, qualification-based hiring process that removes the effects of human bias. However, they also possess just as much potential to codify new and existing prejudice across the job application and hiring process.

The use of ADS in recruiting could potentially violate several federal laws, including antidiscrimination statutes such as Title VII of the Civil Rights Act of 1964 and the Americans with Disabilities Act. These laws prohibit workplace discrimination on the basis of race, gender, and disability, among other protected characteristics. Additionally, these systems could potentially violate the right to privacy and the due process rights of job applicants. If the systems are found to be discriminatory or to violate these laws, their use could result in legal action against the employers.

Supporting Historically Disadvantaged Workers through a National Bargaining in Good Faith Fund

Black, Indigenous, and other people of color (BIPOC) are underrepresented in labor unions. Further, people working in the gig economy, tech supply chain, and other automation-adjacent roles face a huge barrier to unionizing their workplaces. These roles, which are among the fastest-growing segments of the U.S. economy, are overwhelmingly filled by BIPOC workers. In the absence of safety nets for these workers, the racial wealth gap will continue to grow. The Biden-Harris Administration can promote racial equity and support low-wage BIPOC workers’ unionization efforts by creating a National Bargaining in Good Faith Fund.

As a whole, unions lift up workers to a better standard of living, but historically they have failed to protect workers of color. The emergence of labor unions in the early 20th century was propelled by the passage of the National Labor Relations Act (NLRA), also known as the Wagner Act of 1935. The NLRA was a beacon of light for many working Americans, affording them benefits of union membership such as higher wages, job security, and better working conditions, which allowed many to transition into the middle class. However, the protections of the law were not applied to all working people equally. Labor unions in the 20th century were often segregated, and BIPOC workers were often excluded from the benefits of unionization. For example, the Wagner Act excluded domestic and agricultural workers and permitted labor unions to discriminate against workers of color in other industries, such as manufacturing.

Today, in the aftermath of the COVID-19 pandemic and amid a renewed interest in a racial reckoning in the United States, BIPOC workers—notably young and women BIPOC workers—are leading efforts to organize their workplaces. In addition to demanding wage equity and fair treatment, they are also fighting for health and safety on the job. Unionized workers earn on average 11.2% more in wages than their nonunionized peers. Unionized Black workers earn 13.7% more and unionized Hispanic workers 20.1% more than their nonunionized peers. But every step of the way, tech giants and multinational corporations are opposing workers’ efforts and their legal right to organize, making organizing a risky undertaking.

A National Bargaining in Good Faith Fund would provide immediate and direct financial assistance to workers who have been retaliated against for attempting to unionize, especially those from historically disadvantaged groups in the United States. This fund offers a simple and effective solution to alleviate financial hardships, allowing affected workers to use the funds for pressing needs such as rent, food, or job training. It is crucial that we advance racial equity, and this fund is one step toward achieving that goal by providing temporary financial support to workers during their time of need. Policymakers should support this initiative as it offers direct payments to workers who have faced illegal retaliation, providing a lifeline for historically disadvantaged workers and promoting greater economic justice in our society.

Challenges and Opportunities

The United States faces several triangulating challenges. First is our rapidly evolving economy, which threatens to displace millions of already vulnerable low-wage workers due to technological advances and automation. The COVID-19 pandemic accelerated automation, which is a long-term strategy for the tech companies that underpin the gig economy. According to a report by an independent research group, self-driving taxis are likely to dominate the ride-hailing market by 2030, potentially displacing 8 million human drivers in the United States alone.

Second, we have a generation of workers who have not reaped the benefits associated with good-paying union jobs due to decades of anti-union activities. Union membership among private-sector wage and salary workers has dropped from more than 30% in the 1950s to just 6.3% as of 2022. The declining percentage of workers represented by unions is associated with widespread and deep economic inequality, stagnant wages, and a shrinking middle class. Lower union membership rates have contributed to the widening of the pay gap for women and workers of color.

Third, historically disadvantaged groups are overrepresented in nonunionized, low-wage, app-based, and automation-adjacent work. This is due in large part to systemic racism, whose structures adversely affect BIPOC workers’ ability to obtain quality education and training, create and pass on generational wealth, or follow through on the steps required to obtain union representation.

Workers face tremendous opposition to unionization efforts from companies that spend hundreds of millions of dollars and use retaliatory actions, disinformation, and other intimidating tactics to stop them from organizing a union. For example, in New York, Black organizer Chris Smalls led the first successful union drive in a U.S. Amazon facility after the company fired him for his activities and made him a target of a smear campaign against the union drive. Smalls’s story is just one illustration of how BIPOC workers are in the middle of the collision between automation and anti-unionization efforts.

The recent surge of support for workers’ rights is a promising development, but BIPOC workers face challenges that extend beyond anti-union tactics. Employer retaliation is also a concern. Workers targeted for retaliation suffer from reduced hours or even job loss. For instance, a survey conducted at the beginning of the COVID-19 pandemic revealed that one in eight workers perceived possible retaliatory actions by their employers against colleagues who raised health and safety concerns. Furthermore, Black workers were more than twice as likely as white workers to experience such possible retaliation. This sobering statistic is a stark reminder of the added layers of discrimination and economic insecurity that BIPOC workers have to navigate when advocating for better working conditions and wages.

The time to enact strong policy supporting historically disadvantaged workers is now. Advancing racial equity and racial justice is a focus for the Biden-Harris Administration, and the political and social will is evident. The Biden-Harris Administration’s day-one Executive Order on Advancing Racial Equity and Support for Underserved Communities Through the Federal Government seeks to develop policies designed to advance equity for all, including people of color and others who have been historically underinvested in, marginalized, and adversely affected by persistent poverty and inequality. Additionally, the establishment of the White House Task Force on Worker Organizing and Empowerment is a significant development. Led by Vice President Kamala Harris and Secretary of Labor Marty Walsh, the Task Force aims to empower workers to organize and negotiate with their employers through federal government policies, programs, and practices.