Increasing the Value of Federal Investigator-Initiated Research through Agency Impact Goals

American investment in science is incredibly productive. Yet it is losing public trust, with many seeing it as misaligned with American priorities and very expensive. To increase the real and perceived benefit of research funding, funding agencies should develop challenge goals for their extramural research programs focused on the impact portion of their mission. For example, the NIH could adopt one goal per institute or center “to enhance health, lengthen life, and reduce illness and disability”; NSF could adopt one goal per directorate “to advance the national health, prosperity and welfare; [or] to secure the national defense”. Asking research agencies to consider person-level or economic impacts in advance helps the American people see the value of federal research funding, and encourages funders to approach the problem holistically, from basic to applied research. For almost every problem, there are different scientific questions that will yield benefits over multiple time scales and draw insight from multiple disciplines.

This plan has three elements:

- Focus some agency funding on measurable mission impacts

- Fund multiple timescales as part of a single plan

- Institutionalize the impact funding process across science funders

For example, if NIH wanted to reduce the burden of Major Depression, it could invest in a shorter time frame to learn how to better deliver evidence-based care to everyone who needs it. At the same time, it could invest in midrange work to develop and test new models and medications, and in the decades-long work required to understand how the exposome influences mood disorders. A simple way to implement this approach would be to build on the processes developed under the Government Performance and Results Act (GPRA), which already requires goal setting and reporting, though proposals could be worked into any strategic planning process through a variety of administrative mechanisms.

Challenge and Opportunity

In 1945, Vannevar Bush called science the ‘endless frontier’, and argued funding scientific research is fundamental to the obligations of American government. He wrote “without scientific progress no amount of achievement in other directions can insure our health, prosperity, and security as a nation in the modern world”. The legacy of this report is that health, prosperity, and security feature prominently in the missions of most federal research agencies (see Table 1). However, in this century we have begun to drift from his focus on the impacts of science. We have the strange situation where our enterprise is both incredibly productive, and losing trust with the public, viewed as out of touch or misaligned with American priorities. This memo proposes a simple solution to address this issue for federal funding agencies like NIH and NSF that largely focus on extramural investigator-initiated research. These are research programs where the funding agency signals interest in specific topics and teams of scientists submit their research plans addressing those topics. The agency then funds a subset of those plans with input from external scientific reviewers.

This funding approach is incredibly productive. For example, NIH funds most of the pipeline for the emerging bioeconomy, which accounts for 5.1% of our GDP. From 2010 to 2016, every one of the 210 new entities approved by the FDA had some NIH funding. And yet, there appears to be a disconnect between our funding strategy and the public interest focus of the Endless Frontier, as operationalized through our federal science agency missions for investigator-initiated research.

A fundamental driver of this disconnect might be a slight misalignment of the incentives of academic scientists, who are rewarded for novelty and scientific impact, with the broader public interest. Our federal agencies are highly attuned to scientific leaders, and place equal or even greater weight on innovation (novelty plus scientific impact) than on real-world impact. For example, NSF review criteria place equal weight on intellectual merit (‘advance knowledge’) and broader impacts (‘benefit society and contribute to the achievement of specific, desired societal outcomes’). NIH’s impact score of new applications is an ‘assessment of the likelihood for the project to exert a sustained, powerful influence on the research field(s) involved’ [emphasis mine], which is only part of the agency’s mission. The practical implications of this sustained focus away from the impact portion of agencies’ missions become apparent in figure 1, which shows tremendous spending in health research unrelated to a key public interest measure like lifespan, especially when compared to other nations’ health research spending.

Perhaps the realization that the federal research investment is not strongly linked to agencies’ mission impact is one reason why American science has been slowly losing public trust over time. Among the people of 68 nations ranking the integrity of scientists, Americans ranked scientists 7th highest, whereas we ranked scientists 16th highest in our estimation of them acting in the public interest. And this is despite the fact that the American investment in science is many times higher than that of the 15 nations that rated scientists more highly on public interest. A more accurate description of our 21st century federal science enterprise might be the ‘timeless frontier’, where our science agencies pursue cycles of funding year in and year out, with their functional goal being scientific changes and their primary measure of success being projects funded. Advancing the economy, health, national defense, etc., are almost incidental benefits to our process measures.

We can do better. In 2024, the National Academy of Medicine called out the lack of high level coordination in research funding. In 2025, the administration has been making drastic cuts and dramatic changes to goals and processes of federal research funding, and the ultimate outcome of these changes is unclear. In the face of this change, Drs. Victor Dzau and Keith Yamamoto, staunch champions of our federal science programs, are calling for “a coherent strategy […] to sustain and coordinate the unrivaled strengths of government-funded research and ensure that its benefits reach all Americans”.

We can build on the incredible success of the federal science enterprise – inarguably the most productive science enterprise in all history. The primary source of American scientific strength is scale. American funding agencies are usually the largest funders in their space. I will highlight some challenges of the current approach and suggest improvements to yield even more impactful approaches more closely aligned with the public interest.

The primary federal funding strategy is broad diversification, where our agencies fund every high scoring application in a topic space (see FAQs). Further, federal science agencies pay little attention to when they expect to see a fundamental impact arising from their research portfolio. For example, a centrally directed program like the Human Genome Project can lead to breakthrough treatments decades later, but in the meantime, other research that generates improvements on faster timescales could have been coordinated, such as developing conventional drug treatments, or research to optimize quality and delivery of existing treatment.

And yet, the breadth and complexity of broad diversification makes it easy to cherry pick successes. This is a strategic issue, and is bigger than the project selection issues highlighted in the earlier discussion about review criteria. When research funding agencies make their pitch for federal dollars they highlight a handful of successes over tens of thousands of projects funded over many years. They ignore failures, the time when investments were made, and time to benefit. With the goals and metrics we have in place, it is simply too hard to summarize progress in any other way.

Overly diversified science funding supports both good Congressional testimony and bad strategy. If your problem happens to fall into a unicorn space of success, there is a lot to celebrate. But most problems do not, and we experience inconsistent returns. We need to define the success of research funding more precisely, in advance, and in ways that more obviously align with the public interest.

Plan of Action

If we tweak our funding strategy to focus on societal impacts, we can move to a more impactful science enterprise, and help regain public support for science funding. We can focus federal research funding on effective answers to difficult problems demanding both urgency and short term improvements, and fundamental discoveries that may take decades to realize. My solution and implementation actions for agencies, and potentially Congress, are described below.

Recommendation 1. Focus some agency funding on measurable mission impacts.

We should empower our science agencies to step away from broad diversification as the predominant funding strategy, and pursue measurable mission impacts with specific time horizons. It can be a challenge for funders to step away from process measures (e.g. projects or consortia funded) and focus on actual changes in mission impact.

Ideally, these specific impacts would be broken into measurable goals that would be selected through a participatory process that includes scientific experts, people with lived experience of the issue, and potential partner agencies. I recommend each agency division (e.g. an NSF Directorate) allocate a percentage of its budget to these mission impact strategies. Further, to avoid strategic errors that can arise from the overwhelming power of federal funding to shape the direction of scientific fields, these high-level funding plans should be as impact focused as possible, and avoid steering funding to one scientific theory or discipline over another.

Recommendation 2. Fund multiple timescales as part of a single plan.

Research funders need to balance their investment portfolios not only across problem areas, but over time. Complex challenges will often require funding different aspects of the solution on different timelines in parallel as part of a larger plan. Balancing time as well as spending allows for a more robust portfolio of funding that draws from a broader array of scientific disciplines and institutions.

Note that this approach means starting lines of research that may not lead to ultimate impact for decades. This might seem strange given our relatively short budget cycles, but it is very common in science, where projects like the Human Genome Project, the BRAIN Initiative, or the National Nanotechnology Initiative have all exceeded a single budget cycle and will take years to realize their full impact. These kinds of efforts require milestones to ensure they stay on track over time.

Recommendation 3. Institutionalize the impact funding process across science funders.

Our research enterprise has become oriented around investigator-initiated, project-based awards. Alternative funding strategies, such as the DARPA model, are viewed as anomalies that must require completely different governance and procedures. These differences in goals are unnecessary. A consistent focus on impacts and strategy in funding across agencies will help the scientific community become more aware of the time to benefit of research, help underscore the value of research investment to the American public, and help research agencies collaborate among themselves and with their partner agencies (e.g., NIH collaborating more closely with CMS and FDA).

In short, institutionalizing this process can lead to greater accountability and recognition for our science enterprise. This structure allows our funders to report to the public progress on specific goals on predetermined and preannounced timelines, rather than having to comb through tens of thousands of independent funding decisions and competing strategies to find case studies to highlight. In this way, expected and unexpected scientific results, and even operational challenges, can be discussed within an impact framework that clearly ties to the agency mission and public interest.

Example of Planning using an Impact Focus

Here is an example of a mission impact goal, Reducing the Burden of Major Depressive Disorder, that could be put forth by the National Institute of Mental Health (NIMH), along with the process to develop it.

Commence Inclusive Planning: NIMH brings together experts from academia, clinical care, industry, people impacted by depression, and FDA and CMS to develop measures, timelines and funding strategies.

Develop Specific Impact Measures: These should reflect the agency’s impact portion of their mission. For example, NIH’s mission impact of “enhance health, lengthen life, and reduce illness and disability” requires measuring impact on human beings. Example measurement targets could include:

- Reduced incidence of Major Depressive Disorder

- Increased productivity (e.g. days worked) of people living with Major Depressive Disorder

- Reduced suicide rates

Fund Multiple Time Scales: Designate time scales in parallel as part of a comprehensive strategy. These different plans would involve different disciplines, funding mechanisms, and private sector and government partners. Examples of plans working at different timescales to support the same goal and measures could include:

- 10 year plan: Increase utilization of evidence based care

- 15 year plan: Develop and implement new treatments

- 30 year plan: Determine how the exposome causes and prevents depression, and how it can be changed

It is likely that NIMH has already obligated funds to projects that support one of these plans, though it may need additional work to ensure that those projects tie directly to the specific plan measures.

Implementation Strategies for Impact Goals

Each federal funding agency could allocate a percentage of their budget to these and other impact goals. The exact amount would depend on the current funding approach of each agency. As this proposal calls for more direct focus on agency mission, and not a change in mission, it is likely that a significant percentage of the agency’s current budget already supports an impact goal on one or more of its time scales.

For an agency heavily weighted towards project based funding of small investigator teams, like NIH, I would recommend starting with a goal of 20% of their budgets set towards impact spending and consider increases over time. Other agencies with different funding models may want to start in a different place. Further, I would recommend different goals and targeted funds for each major administrative unit, such as an institute or directorate.

All federal funders already engage in some form of strategic and budget planning, and most also have formal structures for engaging stakeholders into those planning decisions. Therefore, each agency already has sufficient authorities and structures to implement this proposal. However, it is likely that these impact goals will require collaboration across agencies, and that could be difficult for agencies to efficiently conduct by themselves.

Additional support to make this change could come from Congressional Report language as part of the budget process, through interagency leadership from the White House Office of Science and Technology Policy, or through the Office of Management and Budget. For example, the Government Performance and Results Act (GPRA) already requires agency goal setting and reporting, and supports cross-agency priority goals. That planning process could easily be adapted to this more specific impact focus for research funding agencies, and reporting on those goals could be incorporated into routine reporting of agency activities.

Conclusion

We are living through a massive disruption in federal research funding, and as of the fall of 2025, it is not clear what future federal research funding will look like. We have an opportunity to focus the incredibly productive federal research enterprise around the central reasons why Americans invest in it. We can meet Bush’s challenge of the Endless Frontier simply by clearly defining the benefits the American people want to see, and explicitly setting plans, timing and money to make that happen.

We can call our shots and focus our science funding around impacts, not spending. And we can set our goals with enough emotional resonance and depth to capture both the interests of the average American, and the needs of scientists from different disciplines and types of institutions. We already have the legal authorities in place to adopt these techniques, we just need the will.

Inadvertently, the huge scale of federal funding could lead to a monopsonistic effect. In other words, NIH’s buying power is so large that if NIH does not fund a specific type of research, people may stop studying it. This risk is highest within a narrow scientific field if there is a bias in grant selection. A well-publicized example is NIH’s strong funding preference for one theory of Alzheimer’s disease to the detriment of competing theories, which in turn influenced careers and publication patterns in ways that reinforced that bias.

Behavioral Economics Megastudies are Necessary to Make America Healthy

Through partnership with the Doris Duke Foundation, FAS is advancing a vision for healthcare innovation that centers safety, equity, and effectiveness in artificial intelligence. Inspired by work from the Social Science Research Council (SSRC) and Arizona State University (ASU) symposiums, this memo explores new research models such as large-scale behavioral “megastudies” and how they can transform our understanding of what drives healthier choices for longer lives. Through policy entrepreneurship FAS engages with key actors in government, research, academia and industry. These recommendations align with ongoing efforts to integrate human-centered design, data interoperability, and evidence-based decision-making into health innovation.

By shifting funding from small, underpowered randomized controlled trials (RCTs) to megastudies, large field experiments in which many different treatments are tested synchronously in a large population using the same objective measure of success, we can start to drive people toward healthier lifestyles. Megastudies will allow us to determine more quickly what works, in whom, and when for health-related behavioral interventions, and their scalability will save substantial money over traditional RCT approaches. But doing so requires the government to back the establishment of a research platform that sits on top of a large, diverse cohort of people with deep demographic data.

Challenge and Opportunity

According to the National Research Council, almost half of premature deaths (< 86 years of age) are caused by behavioral factors. Poor diet, high blood pressure, sedentary lifestyle, obesity, and tobacco use are the primary causes of early death for most of these people. Yet, despite studying these factors for decades, we know surprisingly little about what can be done to turn these unhealthy behaviors into healthier ones. This has not been due to a lack of effort. Thousands of randomized controlled trials intended to uncover messaging and incentives that can be used to steer people towards healthier behaviors have failed to yield impactful steps that can be broadly deployed to drive behavioral change across our diverse population. To be sure, changing human behavior through such mechanisms is controversial and difficult. Nonetheless, studying how to bend behavior should be a national imperative if we are to extend healthspan and address the declining lifespan of Americans at scale.

Limitations of RCTs

Traditional randomized controlled trials (RCTs), which usually test a single intervention, are often underpowered, expensive, and short-lived, limiting their utility even though RCTs remain the gold standard for determining the validity of behavioral economics studies. In addition, because the biological and cultural diversity of our population is a severely limiting factor for study design, RCTs are often conducted on narrow, well-defined populations. What works for a 24-year-old female African American attorney in Los Angeles may not be effective for a 68-year-old male white fisherman living in Mississippi. Overcoming such noise in the system means either limiting the population you are examining through demographics, or enrolling enough study participants to allow post-study stratification and hypothesis development. It also means that health data alone is not enough. Such studies require deep personal demographic data to be combined with health data and data from wearables. In essence, we need a very clear picture of the lives of participants to properly identify interventions that work and apply them appropriately post-study to broader populations. Similarly, testing a single intervention means that you cannot be sure that it is the most cost-effective or impactful intervention for a desired outcome. This further limits the ability to deploy RCTs at scale. Finally, the data sometimes implies spurious associations. Therefore, preregistering the endpoints, interventions, and analyses of such studies will make for solid evidence development, even if the most tantalizing outcomes come from sifting through the data later to develop new hypotheses that can be further tested.
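To make the point about underpowered trials concrete, here is a minimal sketch of a standard normal-approximation sample size calculation for comparing two proportions. The function name and the behavior rates used below (30% of a control group adopting a behavior versus 32% or 40% of a treated group) are hypothetical illustrations, not figures from any study.

```python
from math import ceil, sqrt
from statistics import NormalDist


def participants_per_arm(p_control: float, p_treated: float,
                         alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate per-arm sample size for a two-sided test of two proportions."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value for the test
    z_power = NormalDist().inv_cdf(power)          # quantile for the desired power
    p_bar = (p_control + p_treated) / 2            # pooled rate under the null
    numerator = (
        z_alpha * sqrt(2 * p_bar * (1 - p_bar))
        + z_power * sqrt(p_control * (1 - p_control) + p_treated * (1 - p_treated))
    ) ** 2
    return ceil(numerator / (p_control - p_treated) ** 2)


# Hypothetical rates: a small behavioral effect needs far more participants
# per arm than a large one.
small_effect = participants_per_arm(0.30, 0.32)
large_effect = participants_per_arm(0.30, 0.40)
print(f"2-point lift: {small_effect} per arm; 10-point lift: {large_effect} per arm")
```

Because the required sample grows with the inverse square of the effect size, a trial sized to detect a large effect will usually miss the modest effects that are typical of behavioral nudges.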

Value of Megastudies

Professors Angela Duckworth and Katherine Milkman, at the University of Pennsylvania, have proposed an expansion of the use of megastudies to gain deeper behavioral insights from larger populations. In essence, megastudies are “massive field experiments in which many different treatments are tested synchronously in a large sample using a common objective outcome.” This sort of paradigm allows independent researchers to develop interventions to test in parallel against other teams. Participants are randomly assigned across a large cohort to determine the most impactful and cost-effective interventions. In essence, the teams are competing against each other to develop the most effective and practical interventions on the same population for the same measurable outcome.
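As an illustration only, the megastudy design described above can be sketched as a small simulation: each participant is randomly assigned to one of several arms, every arm is scored on the same outcome, and each intervention is compared with a shared control. The arm names, the outcome (weekly gym visits), and the "true" effect sizes are invented for this sketch and do not come from any real megastudy.

```python
import random
from statistics import mean

# Hypothetical true effects, in extra gym visits per week; used only to simulate data.
TRUE_LIFT = {"control": 0.0, "text_reminder": 0.1,
             "planning_prompt": 0.2, "small_reward": 0.3}


def simulated_weekly_gym_visits(arm, rng):
    """Common objective outcome: a noisy baseline plus the arm's assumed effect."""
    return rng.gauss(1.5, 1.0) + TRUE_LIFT[arm]


def run_megastudy(participant_ids, arms, outcome_fn, seed=0):
    """Randomly assign each participant to one arm; return each arm's lift over control."""
    rng = random.Random(seed)
    outcomes = {arm: [] for arm in arms}
    for _ in participant_ids:
        arm = rng.choice(arms)                    # simple random assignment
        outcomes[arm].append(outcome_fn(arm, rng))
    control_mean = mean(outcomes["control"])
    return {arm: mean(values) - control_mean
            for arm, values in outcomes.items() if arm != "control"}


estimated_lift = run_megastudy(range(40_000), list(TRUE_LIFT),
                               simulated_weekly_gym_visits, seed=1)
best_arm = max(estimated_lift, key=estimated_lift.get)
print(best_arm, {arm: round(lift, 2) for arm, lift in estimated_lift.items()})
```

Because every team's intervention is measured against the same control group and the same outcome, the estimated lifts can be ranked directly, which is the comparison separate single-intervention trials cannot offer.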

Using this paradigm, we can rapidly assess interventions and accelerate scientific progress by saving time and money, all while making more appropriate comparisons to bend behavior towards healthier lifestyles. Due to the large sample sizes involved and deep knowledge of the demographics of participants, megastudies can accommodate the noise that is normal in a broad population and that would otherwise necessitate narrowing the demographics of participants. Further, post-study analysis allows for rich hypothesis generation on what interventions are likely to work in narrower populations. This enables tailored messaging and incentives to the individual. A centralized entity managing the population data reduces costs and makes it easier to try a more diverse set of risk-tolerant interventions. A centralized entity also opens the door for smaller labs to participate in studies. Finally, the participants in these megastudies are normally part of ongoing health interactions through a large cohort study or directly through care providers. Thus, they benefit directly from participation and tailored messages and incentives. Additionally, dataset scale allows for longer-term study design because of the reduction in overall costs. This enables study designers to determine if their interventions work well over a longer period of time or if the impact of interventions wanes and the interventions need to be adjusted.

Funding and Operational Challenges

But this kind of “apples to apples” comparison has serious drawbacks that have prevented megastudies from being used routinely in science despite their inherent advantages. First, megastudies require access to a large standing cohort of study participants who will remain in the cohort long term. Ideally, the organizer of such studies should be vested in having positive outcomes. Here, larger insurance companies are poor targets for organizing. Similarly, organizers have to be efficient; thus, government-run cohorts, which tend to be highly bureaucratic, expensive, and inefficient, are not ideal. Not everything need go through a committee. (Looking at you, All of Us at NIH and the Million Veteran Program at the VA.)

Companies like third party administrators of healthcare plans might be an ideal organizing body, but so can companies that aim to lower healthcare costs as a means of generating revenue through cost savings. These companies tend to have access to much deeper data than traditional cohorts run by government and academic institutions and could leverage that data for better stratifying participants and results. However, if the goal of government and philanthropic research efforts is to improve outcomes, then they should open the aperture on available funds to stand up a persistent cohort that can be used by many researchers rather than continuing the one-off paradigm, which in the end is far more expensive and inefficient. Finally, we do not imply that all intervention types should be run through megastudies. They are an essential, albeit underutilized tool in the arsenal, but not a silver bullet for testing behavioral interventions.

Fear of Unauthorized Data Access or Misuse

There is substantial risk in bringing together such deep personal data on a large population of people. While companies compile deep data all the time, it is unusual to do so for research purposes, and doing so will certainly raise some eyebrows, as has been the case for large studies like the aforementioned All of Us and the Million Veteran Program.

Patients fear misuse of their data, inaccurate recommendations, and biased algorithms—especially among historically marginalized populations. Patients must trust that their data is being used for good, not for marketing purposes and determining their insurance rates.


Need for Data Interoperability

Many healthcare and community systems operate in data silos, and data integration is a perennial challenge in healthcare. Patient-generated data from wearables, apps, or remote sensors often do not integrate with electronic health record data or demographic data gathered from elsewhere, limiting the precision and personalization of behavior-change interventions. This lack of interoperability undermines both provider engagement and user benefit. Addressing data fragmentation and poor usability requires designing cloud-based data connectors and integrations, creating shared feedback dashboards that link self-generated data to provider workflows, and creating and promoting policies that move toward interoperability. In short, given the constantly evolving data integration challenge and the lack of real standards for data formats and integration requirements, a dedicated and persistent effort will have to be made to ensure that data can be seamlessly integrated if we are to draw value from combining data from many sources for each patient.

Additional Barriers

One of the largest barriers to using behavioral economics is that some rural, tribal, low-income, and older adults face access barriers. These could include device affordability, broadband coverage, and other usability and digital literacy limitations. Megastudies are not generally designed to bridge this gap, which significantly limits their applicability to these populations. Complicating matters, these populations also have significant and specific health challenges unique to their cohorts. As the use of behavioral economic levers is developed, these communities are in danger of being left behind, further exacerbating health disparities. Nonetheless, insight into how to reach these populations can be gained from individuals in these populations who do have access to technology platforms. Communications will have to be tailored accordingly.

External motivators have been consistently shown to be the essential drivers of behavioral change. But motivation to sustain a behavior change and continue using technology often wanes over time. Embedding intrinsic-value rewards and workplace incentives may not be enough. Therefore, external motivations likely have to be adjusted over time in a dynamic system to ensure that adjustments to the behavior of the individual can be rooted in evidence. Indeed, study of the dynamic nature of driving behavioral change will be necessary due to the likelihood of waning influence of static messaging. Designing reward systems that tie personal values to workplace wellness programs, and sustaining engagement through social incentives and tailored nudges, may keep users engaged.

Plan of Action

By enabling a private sector entity to create a research platform using patient data combined with deep demographic data, and an ethical framework for access and use, we can create a platform for megastudies. This would allow the rapid testing of behavioral interventions that steer people towards healthier lifestyles, saving money, accelerating progress, and better understanding what works, in whom, and when for changing human behavior.

This could have been done through either the All of Us program or the Million Veteran Program, or through a different large cohort study, but neither program has the deep demographic and lifestyle data required to stratify its population. Both are mired in the bureaucratic lethargy that is common in large-scale government programs. Health insurance companies and third-party administrators of health insurance can gather such data, be nimbler, create a platform for communicating directly with patients, and coordinate with their clinical providers. But one could argue that neither entity has a real incentive to bend behavior and encourage healthy lifestyles. Simply put, that is not their business.

Recommendation 1. Issue a directive to agencies to invest in the development of a megastudy platform for health behavioral economics studies.

The White House or HHS Secretary should direct the NIH or ARPA-H to develop a plan for funding the creation of a behavioral economics megastudy platform. The directive should include details on the ethical and technical framework requirements as well as directions for development of oversight of the platform once it is created. The platform should be required to establish a sustainability plan as part of the application for a contract to create the megastudy platform.

Recommendation 2. Government should fund the establishment of a megastudy platform.

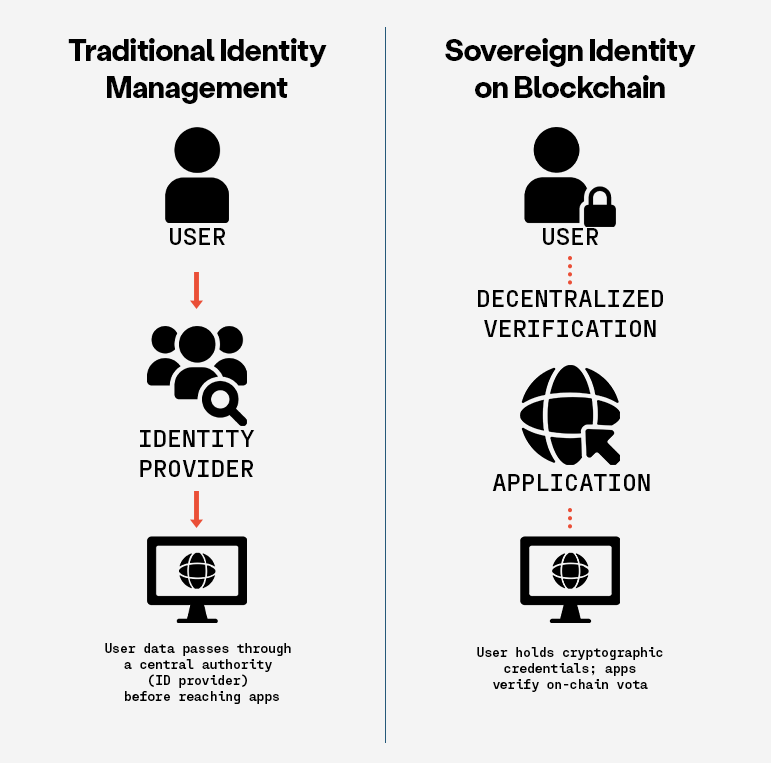

ARPA-H and/or DARPA should develop a program to establish a broad research platform in the private sector that will allow for megastudies to be conducted. Research teams can then test dozens of behavioral interventions on populations in parallel and access patient data. This platform should have required ethical rules and be grounded in data sovereignty that allows patients to opt out of participation and of having their data shared.

Data sovereignty is one solution to the trust challenge. Simply put, data sovereignty means that patients have access to the data on themselves (without having to pay a fee that physicians’ offices now routinely charge for access) and control over who sees and keeps that data. So, if at any time, a participant changes their mind, they can get their data and force anyone in possession of that data to delete it (with notable exceptions, like their healthcare providers). Patients would have ultimate control of their data in a ‘trust-less’ way that they never need to surrender, going well past the rather weak privacy provisions of HIPAA, so there is no question that they are in charge.

We suggest that blockchain and token systems would be appropriate for data transfer. Holding data in a federated network to limit the danger of a breach would also be appropriate.

Recommendation 3. The NIH should fund behavioral economics megastudies using the platform.

Once the megastudy platform(s) are established, the NIH should make dedicated funds available for researchers to test behavioral interventions using the platform, in order to decrease costs, increase study longevity, and improve speed and efficiency for behavioral economics studies on behavioral health interventions.

Conclusion

Randomized controlled trials have been the gold standard for behavioral research but are not well suited for health behavioral interventions on a broad and diverse population because of the required number of participants, typically narrow populations, recruiting challenges, and cost. Yet there is an urgent need to encourage and incentivize health-related behaviors that make Americans healthier. Simply put, we cannot start to grow healthspan and lifespan unless we change behaviors towards healthier choices and habits. When the U.S. government funds the establishment of a platform for testing hundreds of behavioral interventions on a large, diverse population, we will start to better understand the interventions that will have an efficient and lasting impact on health behavior. Doing so requires private sector cooperation and strict ethical rules to ensure public trust.

This memo was produced as part of Strengthening Pathways to Disease Prevention and Improved Health Outcomes.

Fueling the Bioeconomy: Clean Energy Policies Driving Biotechnology Innovation

The transition to a clean energy future and diversified sources of energy requires a fundamental shift in how we produce and consume energy across all sectors of the U.S. economy. The transportation sector, which relies heavily on fossil-based energy, stands out not only because it is the sector that releases the most carbon into the atmosphere, but also for its progress in adopting next-generation technologies and fuel alternatives.

Over the past several years, the federal government has made concerted efforts to support clean energy innovation in transportation, both on-road and off-road. Hard-to-electrify transportation sub-sectors have received added focus, such as through the Sustainable Aviation Fuel (SAF) Grand Challenge. These efforts have enabled a wave of biotechnology-driven solutions to move from research labs to commercial markets, such as LanzaJet’s alcohol-to-jet technology for producing SAF. From renewable fuels to bio-based feedstocks, biotechnologies are enabling the replacement of fossil-derived energy sources and contributing to a more sustainable, secure, and diversified energy system.

SAF in particular has gained traction, enabled in part by public investment and interagency coordination, like the SAF Grand Challenge Roadmap. This increased federal attention demonstrated how strategic federal action, paired with demand signals from government, targeted incentives, and industry buy-in, can create the conditions needed to accelerate biotechnology adoption.

To better understand the factors driving this progress, FAS conducted a landscape analysis at the federal and regional level of biotechnology innovation within the clean energy sector, complemented by interviews with key stakeholders. Several policy mechanisms, public-private partnerships, and investment strategies were identified as enablers of the adoption and production of advanced SAF and similar technologies. By identifying the enabling conditions that supported biotechnology’s uptake and commercialization, we aim to inform future efforts to accelerate other sectors that utilize biotechnologies and, overall, strengthen the U.S. bioeconomy.

Key Findings & Recommendations

An analysis of the federal clean energy landscape reveals several critical insights that are vital for advancing the development and deployment of biotechnologies. Federal and regional strategies are central to driving innovation and facilitating the transition of biotechnologies from research to commercialization. The following key findings and actionable recommendations address the challenges and opportunities in accelerating this transition.

Federal Level Key Findings & Recommendations

The federal government plays a pivotal role in guiding market signals and investment toward national priorities. In the clean energy sector, decarbonizing aviation has emerged as a strategic objective, with SAF serving as a critical lever. Federal initiatives such as the SAF Grand Challenge, the SAF Roadmap, and the SAF Metrics Dashboard have helped to elevate SAF within national climate priorities and enabled greater interagency coordination. These mechanisms not only track progress but also communicate federal commitment. Still, despite these efforts, current SAF production remains far below target levels, with capacity largely concentrated in hydroprocessed esters and fatty acids (HEFA), a pathway with constrained feedstock availability and limited scalability.

This production gap reflects deeper structural challenges, many of which parallel broader issues across the clean-energy biotech interface. One of the main challenges is the fragmented, short-duration policy incentives currently in use. Tax credits like 40B and 45Z, while important, lack the longevity and clarity required to unlock large-scale, long-term private investment. The absence of binding fuel mandates further undermines market certainty. These policy gaps limit the ability of the clean energy sector to serve as a sustained demand signal for emerging biotechnologies and slow the transition from pilot to commercial scale.

Importantly, these challenges point to a broader opportunity: SAF as a test case for how the clean energy sector can serve as a driver of biotechnology uptake. Promising biotechnologies, such as alcohol-to-jet and power-to-liquid, are currently stalled by high capital costs, uncertain regulatory pathways, and a lack of coordinated federal support. Addressing these bottlenecks through aligned incentives, technology-neutral mandates, and harmonized accounting frameworks could not only accelerate SAF deployment but also establish a broader policy blueprint for scaling biotechnology across other clean energy applications.

To alleviate some of the challenges identified, the federal government should:

Extend & Clarify Incentives

While tax incentives such as the 45Z Clean Fuel Production Credit offer a promising framework to accelerate low-carbon fuel deployment, current design and implementation challenges limit their impact, particularly for emerging bio-based and synthetic fuels. To fully unlock the climate and market potential of these incentives, Congress and relevant agencies should take the following steps:

- Congress should amend the 45Z tax credit structure to differentiate between fuel types, such as SAF, e-fuels, biofuels, and renewable diesel, based on life cycle carbon intensity (CI) and production pathways. This would better reflect technology-specific costs and accelerate deployment across multiple clean fuel markets, while specifying how credits are earned and used for each type of fuel.

- Congress should extend the duration of the 45Z credit and other clean-fuel related incentives to provide long-term policy certainty. Multi-year extensions with a defined minimum value floor would reduce investment risk and enable financing of capital-intensive projects.

- Congress and the Department of Treasury should clarify eligibility to ensure inclusion of co-processing methods and hybrid production systems, which are currently in regulatory gray areas. This would ensure broader participation by innovative fuel producers.

- Federal agencies, including the Department of Energy (DOE), Department of Transportation (DOT), and the Department of Defense, should be directed to enter into long-term (more than 10 years) procurement agreements for low-carbon fuels, including electrofuels and SAF. These offtake mechanisms would complement tax incentives and send strong market signals to producers and investors.

Scale Biotech Commercialization Support

The clean energy transition depends in part on the successful commercialization of enabling biotechnologies, ranging from advanced biofuels to bio-based carbon capture, SAF and biomanufacturing platforms that reduce industrial emissions. Recent or proposed funding cuts to clean energy programs risk stalling this progress and undermining U.S. competitiveness in the bioeconomy.

To accelerate biotechnology deployment and bridge the gap between lab-scale innovation and commercial-scale production, Congress should take the following actions:

- Authorize and appropriate expanded funding to the DOE, particularly through the Bioenergy Technologies Office (BETO), and to the Department of Agriculture (USDA) to support pilot, demonstration, and first-of-a-kind commercial-scale projects that enable biotechnology applications across clean energy sectors.

- Direct and fund the DOE Loan Programs Office to establish a dedicated loan guarantee program focused on biotechnology commercialization, targeting platforms that can be integrated into the energy system, such as bio-based fuels, bioproducts, carbon utilization technologies, and electrification-enabling materials.

- Encourage DOE and USDA to enter into long-term offtake agreements or structured purchasing mechanisms with qualified bioenergy and biomanufacturing companies. These agreements would help de-risk early commercial projects, crowd in private investment, and provide market certainty during the critical scale-up phase.

- Strengthen public-private coordination mechanisms, such as cross-sector working groups or interagency task forces, to align commercialization support with industry needs, improve program targeting, and reduce time-to-market for promising technologies.

Design and Promote Next-Gen Biofuel Policies

To accelerate the deployment of low-carbon fuels and enable innovation in next-generation bioenergy technologies, Congress and relevant agencies should take the following actions:

- Congress should direct the Environmental Protection Agency (EPA) to modernize the Renewable Fuel Standard by incorporating life cycle carbon intensity as a core metric, moving beyond volume-based mandates. Legislative authority could also support the development of a national Low Carbon Fuel Standard, modeled on successful state-level programs to drive demand for fuels with demonstrable climate benefits.

- EPA should update its emissions accounting framework to reflect the latest science on life cycle greenhouse gas (GHG) emissions, enabling more accurate assessment of advanced biofuels and synthetic fuels.

- DOE should expand R&D and demonstration funding for biofuel pathways that meet stringent carbon performance thresholds, with an emphasis on scalability and compatibility.

Regional Level Key Findings & Recommendations

Regional strengths continue to serve as foundational drivers of clean energy innovation, with localized assets shaping the pace and direction of technology development. Federal designations, such as the Economic Development Administration (EDA) Tech Hub program (Tech Hub), have proven catalytic. These initiatives enable regions to unlock state-level co-investment, attract private capital, and align workforce training programs with local industry needs. Early signs suggest that the Tech Hub framework is helping to seed innovation ecosystems where they are most needed, but long-term impact will depend on sustained funding support and continued regional coordination.

Workforce readiness and enabling infrastructure remain critical differentiators. Regions with deep and committed involvement from major research universities, national labs, or advanced manufacturing clusters are better positioned to scale innovation from prototype to deployment. Real-world testbeds provide environments for stress-testing technologies and accelerating regulatory and market readiness, reinforcing the importance of place-based strategies in federal innovation planning.

At the same time, private investment in clean energy and enabling biotechnologies remains crucial to developing and scaling innovative technologies. High capital costs, regulatory uncertainty, and limited early-stage demand signals continue to inhibit market entry, especially in geographies with less mature innovation ecosystems. Addressing these barriers through coordinated federal procurement, long-term incentives, and regional capacity-building will be essential to supporting growth in regions with strong assets to develop industry clusters that could yield clean energy benefits.

To accomplish this, the federal government and regional governments should:

Strengthen Regional Workforce Pipelines

A skilled and regionally distributed workforce is essential to realizing the full economic and technological potential of clean energy investments, particularly as they intersect with the bioeconomy. While federal funding is accelerating deployment through initiatives such as the Inflation Reduction Act (IRA) and DOE programs, workforce gaps, especially outside major innovation hubs, pose barriers to implementation. Addressing these gaps through targeted education, training, and talent retention efforts will be critical to ensuring that clean energy projects deliver durable, regionally inclusive economic growth. To this end:

- Federal agencies like the Department of Education and National Science Foundation should explore expanding support for STEM programs at community colleges and Minority Serving Institutions, with a focus on biosciences, engineering, and agricultural technologies relevant to the clean energy transition.

- Federally supported training and reskilling programs tailored to regional clean energy and biomanufacturing workforce needs could benefit new and existing cross-sector partnerships between state workforce agencies and regional employers.

- State and local governments should consider implementing talent retention strategies, including local hiring incentives, relocation support, and career placement services, to ensure that skilled workers remain in and contribute to regional clean energy ecosystems.

Strengthen Regional Infrastructure and Foster Cross-Sector Collaboration

Robust regional infrastructure and cross-sector collaboration are essential to accelerating the deployment of clean energy technologies that leverage advancements in biotechnology and manufacturing. Strategic investments in shared facilities, modernized logistics, and coordinated innovation ecosystems will strengthen supply chain resilience and improve technology transfer across sectors. Facilitating access to R&D infrastructure, particularly for small and mid-sized enterprises, will ensure that innovation is not limited to large firms or major metropolitan areas. To support these outcomes:

- Federal support for regional testbeds, prototyping sites, and grid modernization labs, coordinated by agencies such as DOE and EDA, would support the demonstration and scaling of biologically enabled clean energy technologies.

- State and local governments, in coordination with federal agencies including DOT and the Department of Commerce (DOC), should explore investment in logistics infrastructure to enhance supply chain reliability and support distributed manufacturing.

- States should consider creating or expanding the use of innovation voucher programs that allow small and mid-sized enterprises to access national lab facilities, pilot-scale infrastructure, and technical expertise, fostering cross-sector collaboration between clean energy, biotech, and advanced manufacturing firms.

Attract and De-Risk Private Capital

Attracting and de-risking private capital is critical for scaling clean energy and biotechnology innovations. By offering targeted financial mechanisms and leveraging federal visibility, governments can reduce the financial uncertainties that often deter private investment. Effective strategies, such as state-backed loan guarantees and co-investment models, can help bridge funding gaps while strategic partnerships with philanthropic and venture capital entities can unlock additional resources for emerging technologies. To this end:

- State governments, in collaboration with federal agencies such as DOE and Treasury, should consider implementing state-backed loan guarantees and co-investment models to attract private capital into high-risk clean energy and biotech projects.

- Federal agencies like EDA, DOE, and the SBA should explore additional programs and partnerships to attract philanthropic and venture capital to emerging clean energy technologies, particularly in underserved regions.

- Federal agencies should increase efforts to facilitate connecting early-stage companies with potential investors, using federal initiatives to build investor confidence and reduce perceived risks in the clean energy sector.

Cross-Cutting Key Findings

The successful deployment of federal clean energy and biotechnology initiatives, such as the SAF Grand Challenge, relies heavily on the capacity of regional ecosystems and the private sector to absorb and implement national goals. Many regions, particularly those outside established innovation hubs, lack the infrastructure, resources, and technical expertise to effectively utilize federal funding. As a result, the impact of national policies is often limited, and the full potential of federal investments goes unrealized in certain areas.

Federal programs often take a one-size-fits-all approach, overlooking regional variability in feedstocks, industrial bases and cost structures. Programs like tax credits and life cycle analysis models can unintentionally disadvantage regions with different economic contexts, creating disparities in access to federal incentives. This lack of regional customization prevents certain areas from fully benefiting from national clean energy and biotech initiatives.

The diffusion of innovation in clean energy and biotechnology remains concentrated in a few key regions, leaving others underutilized. Despite robust federal R&D investments, commercialization and scaling of innovations are primarily concentrated in regions with established infrastructure, hindering the broader geographic spread of these technologies. In addition, workforce development efforts across federal and regional programs are fragmented, creating misalignments in talent pipelines and further limiting the ability of local industries to leverage available resources effectively. The absence of a unified system for tracking key metrics, such as SAF production and emissions reductions, makes it difficult to coordinate efforts or assess progress consistently across regions. To address this, the federal and regional governments should:

Create a Federal–Regional Clean Energy Deployment Compact

A Federal-Regional Clean Energy Deployment Compact is critical for aligning federal clean energy initiatives with the unique capabilities and needs of regional ecosystems. By establishing formal mechanisms, such as intergovernmental councils and regional liaisons, federal programs can be more effectively tailored to local conditions. These mechanisms will ensure two-way communication between federal agencies and regional stakeholders, fostering a collaborative approach that adapts to evolving technological, economic, and environmental conditions. In addition, treating regional tech hubs and initiatives as testbeds for new policy tools, such as performance-based incentives or carbon standards, will allow for innovative solutions to be tested locally before scaling them nationally, ensuring that policies are effective and contextually relevant across diverse regions. To this end:

- The White House Office of Science and Technology Policy (OSTP), in collaboration with the DOE and EPA, should establish formal intergovernmental councils or regional liaisons to facilitate ongoing dialogue between federal agencies and regional stakeholders. These councils would focus on aligning federal clean energy initiatives with regional needs, ensuring that local priorities, such as feedstock availability or infrastructure readiness, are considered in policy design.

- The DOE and EPA should treat tech hubs and regional clean energy initiatives as testbeds for policy innovation. These regions can pilot performance-based incentives, carbon standards, and other policy tools to assess their effectiveness before scaling them nationally. Successful models developed in these testbeds should be expanded to other regions, with lessons learned shared across state and local governments.

- Regional universities and innovation hubs should collaborate with federal and state agencies to develop pilot programs that test new policy tools and technologies. These institutions can serve as incubators for innovative clean energy solutions, providing valuable data on what policies work best in specific regional contexts.

Build a National Innovation-to-Deployment Pipeline

Creating a seamless innovation-to-deployment pipeline is essential for scaling clean energy technologies and ensuring that regional ecosystems can fully participate in national clean energy transitions. By linking DOE national labs, Tech Hubs, and regional consortia into a coordinated network, the U.S. can support the full life cycle of innovation, from early-stage R&D to commercialization and deployment, across diverse geographies. Additionally, co-developing curricula and training programs between federal agencies, regional tech hubs, and industry partners will ensure that talent pipelines are closely aligned with the evolving needs of the clean energy sector, providing the skilled workforce necessary to implement and scale innovations effectively. To accomplish this:

- The DOE should facilitate the creation of a national network that connects federal labs, regional tech hubs, and innovation consortia. This network would provide a clear pathway for the transition of technologies from research to commercialization, ensuring that innovations can be deployed across different regions based on local needs and capacities.

- Regional Tech Hubs, in partnership with local universities and research institutions, should be integrated into the pipeline to provide localized innovation support and commercialization expertise. These hubs can act as nodes in the broader network, offering the infrastructure and expertise necessary for scaling up clean energy technologies.

Develop a Shared Metrics and Monitoring Platform

A centralized dashboard for tracking key metrics related to clean energy and biotechnology initiatives is crucial for guiding investment and policy decisions. By integrating federal and regional data, such a dashboard can provide a comprehensive, real-time view of progress across the country. This shared platform would enable better coordination among federal, state, and local agencies, ensuring that resources are allocated efficiently and that policy decisions are informed by accurate, up-to-date data. Moreover, a unified system would allow for more effective tracking of regional performance, enabling tailored solutions based on localized needs and challenges. To this end:

- The DOE, in partnership with the EPA, should lead the development of a centralized dashboard that integrates existing federal and regional data on SAF production, emissions reductions, workforce needs, and infrastructure gaps. This platform should be publicly accessible, allowing stakeholders at all levels to monitor progress and identify opportunities for improvement.

- State and local governments should contribute relevant data from regional initiatives, including workforce training programs, infrastructure development projects, and emissions reductions efforts. These contributions would help ensure that the platform reflects the full range of activities across different regions, providing a more accurate picture of national progress.

- The Department of Labor and the DOC should integrate workforce development and industrial capacity data into the platform. This would include information on training programs, regional workforce readiness, and skills gaps, helping policymakers align talent development efforts with regional needs.

- The DOT should ensure that transportation infrastructure data, particularly related to SAF production and distribution networks, is included in the platform. This would provide a comprehensive view of the supply chain and infrastructure readiness necessary to scale clean energy technologies across regions.

The clean energy sector, and specifically SAF, highlights both the promise and the persistent challenges of scaling biotechnologies. It reflects broader issues, such as fragmented regulation, limited commercialization support, and misaligned incentives, that hinder the deployment of advanced biotechnologies. Overcoming these systemic barriers requires coordinated, long-term policies, including performance-based incentives and procurement mechanisms that reduce investment risk and free up capital. SAF should be seen not as a standalone initiative but as a model for integrating biotechnology into industrial and energy strategy, supported by a robust innovation pipeline, expanded infrastructure, and shared metrics to guide progress. With sustained federal leadership and strategic alignment, the bioeconomy can become a key pillar of a low-carbon, resilient energy future.

De-Risking the U.S. Bioeconomy by Establishing Financial Mechanisms to Drive Growth and Innovation

The bioeconomy is a pivotal economic sector driving national growth, technological innovation, and global competitiveness. However, the biotechnology innovation and biomanufacturing sector faces significant challenges, particularly in scaling technologies and overcoming long development timelines that don’t align with short-term return expectations from investors. These extended timelines and the inherent risks involved lead to funding gaps that hinder the successful commercialization of technologies and bio-based products. If obstacles like the ‘Valleys of Death’, the shortfalls in capital at crucial development junctures that companies and technologies struggle to overcome, are not addressed, the result could be economic stagnation and the U.S. losing its competitive edge in the global bioeconomy.

Government programs like SBIR and STTR narrow the financial gap inherent in the U.S. bioeconomy, but existing financial mechanisms have proven insufficient to fully de-risk the sector and attract the necessary private investment. In FY24, the National Defense Authorization Act established the Office of Strategic Capital within the Department of Defense to provide financial and technical support for its 31 ‘Covered Technology Categories’, which include biotechnology and biomanufacturing. To address the challenges associated with de-risking biotechnology and biomanufacturing within the U.S. bioeconomy, the Office of Strategic Capital within the Department of Defense should house a Bioeconomy Finance Program. This program would offer tailored financial incentives such as loans, tax credits, and volume guarantees, targeting both short-term and long-term scale-up needs in biomanufacturing and biotechnology.

By providing these essential funding mechanisms, the Bioeconomy Finance Program will reduce the risks inherent in biotechnology innovation, encouraging more private sector investment. In parallel, states and regions across the country should develop region-specific strategies, like investing in necessary infrastructure and fostering public-private partnerships, to complement the federal government’s initiatives to de-risk the sector. Together, these coordinated efforts will create a sustainable, competitive bioeconomy that supports economic growth and strengthens U.S. national security.

Challenge & Opportunity

The U.S. bioeconomy encompasses economic activity derived from the life sciences, particularly in biotechnology and biomanufacturing. The sector plays an important role in driving national growth and innovation. Given its broad reach across industries, impact on job creation, potential for technological advancements, and requirement for global competitiveness, the U.S. bioeconomy is a critical sector for U.S. policymakers to support. With continued development and growth, the U.S. bioeconomy promises not only economic benefits but also stronger national security, better health outcomes, and greater environmental sustainability for the country.

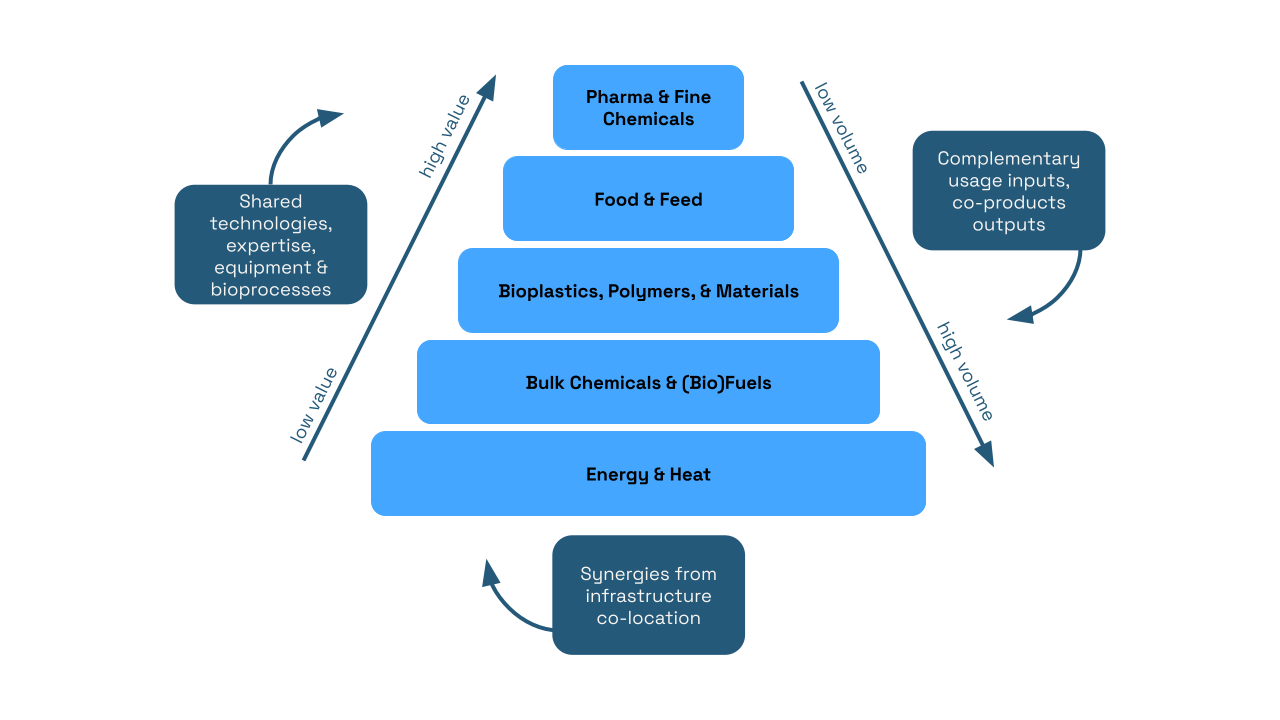

Ongoing advancements in biotechnology, including artificial intelligence and automation, have accelerated the growth of the bioeconomy, making the sector both globally competitive and an important domestic economic sector. In 2023, the U.S. bioeconomy supported nearly 644,000 domestic jobs, contributed $210 billion to the GDP, and generated $49 billion in wages. Biomanufactured products within the bioeconomy span multiple categories (Figure 1). Growth here will drive future economic development and address societal challenges, making the bioeconomy a key priority for government investment and strategic focus.

Figure 1. Biomanufactured products span a wide range of categories, from pharmaceuticals and chemicals, which require small volumes of biomass but yield high-value products, to energy and heat, which require larger volumes of biomass but result in lower-value products. Additionally, there are common infrastructure synergies, bioprocesses, and complementary input-output relationships that facilitate a circular bioeconomy within bioproduct manufacturing. Source: https://edepot.wur.nl/407896

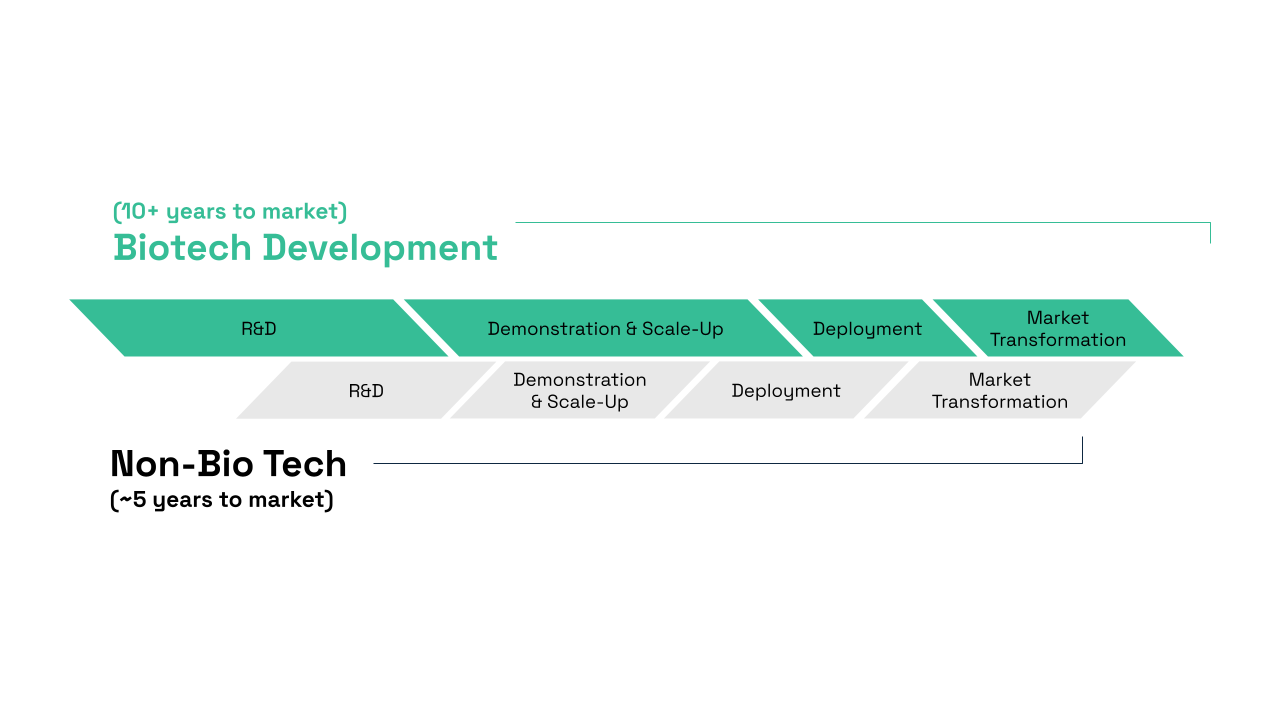

An important driving force for the U.S. bioeconomy is biotechnology and biomanufacturing innovation. However, bringing biotechnologies to market requires substantial investment, capital, and most importantly, time. Unlike other technology sectors, which see returns on investment within a short period of time, biotechnology often faces a misalignment between scientific and capitalistic expectations. Many biotechnology-based companies rely on venture capital, a form of private equity investment, to finance their operations. However, venture capitalists (VCs) typically operate on short return-on-investment timelines, which may not align with the longer development cycles characteristic of the biotechnology sector (Figure 2). Additionally, the need for large scale and the high capital expenditures (CAPEX) required for commercially profitable production, along with the low profit margins in high-volume commodity production, create further barriers to obtaining investment. While this misalignment is not universal, it remains a challenge for many biotech startups.

The U.S. government has implemented several programs to address the financing void that often arises during the biotechnology innovation process. These include the Small Business Innovation Research (SBIR) and Small Business Technology Transfer (STTR) programs, which provide phased funding across all Technology Readiness Levels (TRLs); the DOE Loan Programs Office, which offers debt financing for energy-related innovations; the DOE Office of Clean Energy Demonstrations, which provides funding for demonstration-scale projects that provide proof of concept; and the newly established Office of Strategic Capital (OSC) within the DOD (as outlined in the FY24 National Defense Authorization Act), which is tasked with issuing loans and loan guarantees to stimulate private investment in critical technologies. An example is the office’s new Equipment Loan Financing through OSC’s Credit Program.

Figure 2. Biotechnology development timelines typically take 10+ years to complete and reach the market due to longer R&D and Demonstration & Scale-Up phases, while non-biotechnology development timelines are generally much shorter, averaging 5+ years.

While these efforts are important, they are insufficient on their own to de-risk the sector to the degree needed to realize the full potential of the U.S. bioeconomy. To effectively support the biotechnology innovation pipeline at critical stages, the government must explore and implement additional financial mechanisms that attract more private investment and mitigate the inherent risks associated with biotechnology innovation. Building on existing resources such as the Regional Technology and Innovation Hubs, NSF Regional Innovation Engines, and Manufacturing USA Institutes would help stimulate private sector investment and is crucial for strengthening the nation’s economic competitiveness.

The newly established Office of Strategic Capital (OSC) within the DOD is well-positioned to enhance resilience in sectors critical to national security, including biotechnology and biomanufacturing, through large-scale investments. Biotechnology and biomanufacturing inherently require significant CAPEX, that is, expenses related to the purchase, upgrade, or maintenance of physical assets. Meeting this need requires substantial amounts of strategic and concessional capital to de-risk and accelerate the biomanufacturing process. By creating, implementing, and leveraging various financial incentives and resources, the Office of Strategic Capital can help build the robust infrastructure necessary for private sector engagement.

To achieve this, the U.S. government should create the Bioeconomy Finance Program (BFP) within the OSC, specifically tasked with enabling and de-risking the biotechnology and biomanufacturing sectors through financial incentives and programs. The BFP should focus on different levels of funding based on the time required to scale, addressing potential ‘Valleys of Death’ that occur during the biomanufacturing and biotechnology innovation process. These funding levels would target short-term (1-2 years) scale-up hurdles to accelerate the biotechnology and biomanufacturing process, as well as long-term (3-5 years) scale-up challenges, providing transformative funding mechanisms that could either make or break entire sectors.

In addition to the federal programs within the BFP to de-risk the sector, states and regions must also make substantial investments and collaborate with federal efforts to accelerate biomanufacturing and biotechnology ecosystems within their own areas. While the federal government can provide a top-down strategy, regional efforts are critical for supporting the sector with bottom-up strategies that complement and align with federal investments and programs, ultimately enabling a sustainable and competitive biotechnology and biomanufacturing industry regionally. To facilitate this, regions should develop and implement state-wide investment initiatives like resource analysis, infrastructure programs, and a cohesive, long-term strategy focused on public-private partnerships. The federal government can encourage these regional efforts by ensuring continued funding for biotechnology hubs and creating additional opportunities for federal investment in the future.

Plan of Action

To strengthen and increase the competitiveness of the U.S. bioeconomy, a coordinated approach is needed that combines federal leadership with state-level action. This includes establishing a dedicated Bioeconomy Finance Program within the Office of Strategic Capital to create targeted financial mechanisms, such as loan programs, tax incentives, and volume guarantees. Additionally, states must be empowered to support commercial-scale biomanufacturing and infrastructure development by leveraging tech hubs, forming cross-regional partnerships, and building public-private partnerships that expand capacity and foster innovation nationwide.

Recommendation 1. Establish and Fund a Bioeconomy Finance Program

Congress, in the next National Defense Authorization Act, should codify the Office of Strategic Capital (OSC) within DOD and authorize the creation of a Bioeconomy Finance Program (BFP) within the OSC to provide a centralized federal structure for addressing financial gaps in the bioeconomy, thereby increasing productivity and competitiveness globally. In 2024, Congress expanded the OSC’s mission to offer financial and technical support to entities within its 31 ‘Covered Technology Categories,’ including biotechnology and biomanufacturing. Additionally, in order to build resilience in the sector and maintain a competitive advantage globally while also strengthening national security, these substantial expenditures should be housed within the OSC. Establishing the BFP within the OSC at the DOD would allow for a targeted focus on these critical sectors, ensuring long-term stability and resilience against political shifts.

The DOD and OSC should leverage their own funding, as well as their existing partnership with the Small Business Administration, to direct $1 billion to set up the BFP and to create and implement initiatives aimed at de-risking the U.S. bioeconomy. The Bioeconomy Finance Program should work closely with relevant federal agencies, such as the DOE, Department of Agriculture (USDA), and the Department of Commerce (DOC), to ensure a long-term cohesive strategy for financing bioeconomy innovation and biomanufacturing capacity.

Recommendation 2. Task the Bioeconomy Finance Program with Key Initiatives

A key element of the OSC’s mission and investment strategy is to provide financial incentives and support to entities within its 31 ‘Covered Technology Categories’. By having the BFP design and manage these financial initiatives for the biotechnology and biomanufacturing sectors, the OSC can leverage lessons from similar programs, such as the DOE’s loan programs, to address the unique needs of these critical industries, which are essential for national security and economic growth.

Currently, the OSC has launched a credit program for equipment financing. While this is a necessary first step in fulfilling the office’s mission, the program is open to all 31 ‘Covered Technology Categories’, resulting in broad, dilutive funding. To accelerate the bioeconomy and reduce risks in biotechnology and biomanufacturing, it is crucial to allocate resources specifically to these sectors. Therefore, the BFP should take the lead in several key financial initiatives to support the growth of the bioeconomy, including:

Loan Programs

The BFP should develop specific biotechnology-enabling loan programs, in addition to the new equipment loan financing program run by the OSC. These loan programs should be modeled after those in the DOE Loan Programs Office (LPO), focusing on biomanufacturing scale-up, technology transfer, and overcoming financing gaps that hinder commercialization.

Example loan programs:

- DOE Title 17 Clean Energy Financing Program

- USDA Business & Industry Loan Guarantee

- Solar Foods EU Grant/Loan

Tax Incentives

The BFP office should create tax incentives tailored to the bioeconomy, such as transferable investment and production tax credits. For example, the 45V tax credit for production of clean hydrogen could serve as a model for similar incentives aimed at other bioproducts.

Example tax incentives:

- The Inflation Reduction Act’s transferable tax credits are the gold standard for this category.

Volume Guarantees & Procurement Support

To mitigate risks in biomanufacturing, the office should establish volume guarantees for various bioproducts, offering financial assurance to manufacturers and encouraging private sector investment. An initial assessment should be conducted to identify which bioproducts are best suited for such guarantees. Additionally, the office should explore the possibility of procurement programs to increase government demand for bio-based products, further incentivizing industry growth and innovation. This effort should be undertaken in coordination with the USDA’s BioPreferred Program to minimize redundancy and to create a cohesive procurement strategy. In addition, the BFP should look to the procurement innovations promoted by the Office of Federal Procurement Policy to find solutions for forward funding to create a functioning market.

Example Volume Guarantees & Procurement Support:

- Heavy Forging Press Infrastructure Lease Agreement

- NASA and USAF purchases of Fairchild semiconductors in advance of need and beyond the performance they required

- Advance Market Commitments

- Joint Venture Partnerships

- Other Transaction Authorities

Recommendation 3. Develop Pipeline Programs to Address Financial and Time Horizon Needs

Using the key initiatives highlighted above, the BFP should create a two-tiered pipeline of financial mechanisms and programs to address both short-term and long-term financial needs. The two levels could include:

- Level 1 – Short-Term Scale-Up (1-2 years) Programs

  - Subsidized cost of electricity and other utilities (waste, wastewater treatment, natural gas, energy, etc.)

  - Funding for demonstration-scale projects and early-stage engineering development (similar to the DOE’s Office of Clean Energy Demonstrations or the DOD’s Defense Industrial Base Consortium round-one $1-2M engineering grants)

  - Tax holidays for corporate taxes and property taxes

  - Accelerated depreciation to reduce tax liabilities

  - Land grants or subsidies for manufacturing assets

  - Fast-track permitting and site preparation to avoid long waits

  - Labor and workforce subsidies

  - Removal of export duties on products created in the U.S. and shipped overseas

- Level 2 – Long-Term Scale-Up (3-5 years) Programs

  - Large-scale transferable tax credits (either production or investment tax credits) for manufacturing, similar to the clean energy tax credits in the Inflation Reduction Act

  - Large-scale manufacturing grants

  - Large-scale, low-interest manufacturing loans and loan guarantees

  - Government procurement contracts or offtake commitments, such as partial or full volume guarantees

  - Government direct or indirect equity investments in biomanufacturing and biotechnology innovations

Recommendation 4. State-Level Initiatives, Infrastructure Development, and Public-Private Partnerships

While federal efforts are crucial, a bottom-up approach is needed to support biomanufacturing and the bioeconomy at the state level. The federal government can support these regional activities by providing targeted funding, policy guidance, and financial incentives that align with regional priorities, ensuring a coordinated effort toward industry growth. States should be encouraged to complement federal initiatives by developing programs that support commercial-scale biomanufacturing. Key actions include:

- State-Level Bioeconomy Resource Analysis: Each state and region should conduct its own analysis to understand the bioeconomy resources at its disposal and determine what additional resources it would need to establish or strengthen a state or regional bioeconomy. Identifying these resources will help the nation understand its true bioeconomic potential by showing where certain biomass is located, which facilities are available and which are still needed to develop an economically sustainable bioeconomy, and by generating data to better understand the economic return on investment.

- Once the analysis is completed, states should collaborate with federal agencies like the DOE, DOC, and Economic Development Administration (EDA) to create and apply for specialized grants for commercial-scale biomanufacturing facilities based on these analyses. Grants should prioritize non-pharmaceutical biomanufacturing to expand the scope of bioeconomy growth beyond traditional sectors.

- Utility Infrastructure Grants: Another critical area is the creation of utility infrastructure needed to support biomanufacturing, such as wastewater treatment and electricity infrastructure. States should receive targeted funding for these infrastructure projects, which are essential for scaling up production. States should use these targeted funds to establish their own granting mechanisms for building the regional infrastructure needed over the long term to support the U.S. bioeconomy.

- Tech Hub Partnerships: States should leverage existing tech hubs to serve as centers for innovation in bioeconomy technologies. These hubs, which are already positioned in regions with high technological readiness, can be incentivized to partner with other regions that may not yet have robust tech ecosystems. The goal is to create a collaborative, cross-regional network that fosters knowledge-sharing and builds capacity across the country.

- Foster Public-Private Partnerships (PPPs): To ensure the success and sustainability of these initiatives, states should actively foster PPPs that bring together government, industry leaders, and academic institutions. These partnerships can help align private sector investment with public goals, enhance resource sharing, and accelerate the commercialization of bioeconomy technologies. By engaging in collaborative R&D, sharing infrastructure costs, and co-developing new biotechnologies, PPPs will play a crucial role in driving innovation and economic growth in the bioeconomy sector. In addition to fostering PPPs, regions should proactively develop models that enable these partnerships to become self-sustaining, helping to mitigate potential financial pitfalls if partners withdraw. When PPPs are not only created but also become fully independent over time, the risks associated with them decrease significantly.

By taking these steps at both the federal and state levels, the U.S. can create a robust, scalable framework for financing biomanufacturing and the broader bioeconomy, supporting the transition from early-stage innovation to commercial success and ensuring long-term economic competitiveness. A good example of how this approach works is the DOE Loan Programs Office, which collaborates with state energy financing institutions. This partnership has successfully supported various projects by leveraging both federal and state resources to accelerate innovation and drive economic growth. This model makes sense for biomanufacturing and biotechnology within the BFP in the OSC, as it ensures coordination between federal and state efforts, de-risks the sector, and facilitates the scaling of transformative technologies.

Conclusion