Transforming the Carceral Experience: Leveraging Technology for Rehabilitation

Despite a $182 billion annual cost, the U.S. correctional system perpetuates itself: At least 95% of all state prisoners will be released from prison at some point, yet more than 50% of them reoffend within three years.

A key driver of high recidivism is the systemic neglect of the carceral experience. While much attention is given to interventions post-release, rehabilitation inside correctional facilities is largely invisible to the public. This dynamic results in approximately 2 million incarcerated persons being locked in a “time capsule”: the world passes them by as they serve their sentences. This is a missed opportunity, as simple interventions like accessing educational resources and maintaining family contact during incarceration can cut recidivism by up to 56%. Reduced recidivism translates into a more robust workforce, safer communities, and higher political participation. The new administration should harness the strong bipartisan interest in criminal justice reform, audit the condition and availability of rehabilitative resources in prisons and jails, invest in digital and technology infrastructure, and sustainably end mass incarceration by building meaningful digital citizenship behind bars.

Challenge and Opportunity

In the post-COVID-19 world, robust and reliable technology and digital infrastructure are prerequisites for any program and resource delivery. However, the vast majority of U.S. correctional facilities still lack adequate technology infrastructure, with cascading effects on the availability of in-prison programs, utilization of digital resources, and incarcerated people’s transition to the free world.

As many other institutions quickly embrace new technology, prisons lag behind. In Massachusetts, prisons struggle to provide even basic rehabilitative, educational, and vocational training programs due to a shortage of hardware devices, such as tablets and Chromebooks, and insufficient staffing. Similarly, in Florida, internet access is constrained by legislation and exacerbated by a lack of funding. Many prisons are forced to limit or entirely cancel programs when in-person visits are inaccessible, due to either COVID-19 restrictions or simply insufficient transportation options for resource providers. Consequently, only 0.5% of incarcerated individuals are enrolled in educational courses. The situation is equally dire in juvenile detention centers from California to Louisiana, where poor access to educational opportunities contributes to low graduation rates, severely limiting future employment prospects for at-risk youths.

Despite these systemic challenges, there is a strong, bipartisan recognition of the need to improve conditions within the carceral system—and therefore a unique opportunity for reform.

The Federal Communications Commission (FCC) has passed the most comprehensive regulations on incarcerated people’s communication services, setting rate caps for various means of virtual communications. Electronic devices, such as tablets and Chromebooks, are gradually being accepted in correctional facilities, and they carry educational resources and entertainment. Foundationally, federal investments in broadband and digital equity present a generational opportunity for correctional facilities and incarcerated people. These investments will provide a baseline assessment of the network conditions and digital landscape in prisons, and the findings can lay the foundation for incarcerated people to enter the digital age prepared, ready to contribute to their communities from the day they return home.

This is just the beginning.

Plan of Action

Recommendation 1. Invest in technology infrastructure inside correctional facilities.

A significant investment in technology infrastructure within correctional facilities is the prerequisite to transforming corrections.

The Infrastructure Investment and Jobs Act (IIJA), through the Broadband Equity, Access, and Deployment (BEAD) and Digital Equity (DE) programs, sets a good precedent. BEAD and DE funding enable digital infrastructure assessments and improvements inside correctional facilities. These are critical for delivering educational programs, maintaining family connections, and facilitating legal and medical communications. However, only a few corrections systems are able to utilize the funding, as BEAD and DE do not have a specific focus on improving the carceral system, and states tend to prioritize other vulnerable populations (e.g., rural, aging, and veteran populations) over the incarcerated. Because currently incarcerated individuals are difficult to reach, they are routinely left out of the planning process for funding distribution across the country.

The new administration should recognize the urgent need to modernize digital infrastructure behind bars and allocate new and dedicated federal funding sources specifically for correctional institutions. The administration can ensure the implementation of best practices through grant guidelines. For example, it could stipulate that prior to accessing funding, states have to conduct a comprehensive network assessment, including speed and capacity tests, a security risk analysis, and a thorough audit of existing equipment and wiring. Further, it could mandate that all new networks built or consolidated using federal funding be vendor-neutral, ensuring robust competition among service providers down the road.

Recommendation 2. Incentivize mission-driven technology solutions.

Expanding mandatory access to social benefits for incarcerated individuals will incentivize mission-driven technology innovation and adoption in this space.

There are precedents for doing so at both the federal and state levels. For example, Second Chance Pell restored educational opportunities for incarcerated individuals and inspired the emergence of mission-driven educational technologies. Another critical federal action was the Consolidated Appropriations Act of 2023 (specifically, Section 5121), which mandated Medicaid enrollment and assessment for juveniles, thereby expanding demand for health and telehealth solutions in correctional facilities.

The new administration should work with Congress to propose new legislation that mandates access to social benefits for those behind bars. Specifically, access to mental health assessment, screening, and treatment, as well as affordable access to communication with families and loved ones on the outside, will be critical to successful rehabilitation and reentry. Additionally, it should invest in robust research focusing on in-prison interventions. Such projects can be rare and more costly, given the complexity of doing research in a correctional environment and the dearth of in-prison interventions. But they will play a big part in establishing the basis for data-driven policies.

Recommendation 3. Remove procurement barriers for new solutions and encourage pilots.

Archaic procurement procedures pose significant barriers to competition in the correctional technology industry and block innovative solutions from being piloted.

The prison telecommunications industry, for example, has been dominated by two private companies for decades. The effective duopoly has consolidated the market by entering into exclusive contracts with high kickback percentages and so-called “premium services.” These procurement and contracting tactics suppress healthy competition from new entrants to the industry.

Some states and federal agencies are trying to change this. In July 2024, the FCC prohibited revenue-sharing between correctional agencies and for-profit providers, ending the arms race of ever-higher commissions for good. At the state level, California’s RFI initiative exemplifies how strategic procurement processes can encourage public-private partnerships to deliver cutting-edge technology solutions to government agencies.

The administration should take a strong stance by issuing an executive order asking all Federal Bureau of Prisons facilities, as well as ICE detention centers, to competitively procure innovative technology solutions and establish pilots across their institutions, setting an example and a playbook for state corrections to follow.

Recommendation 4. Invest in needs assessments, topic-specific research, and development of best practices through the National Science Foundation and the Bureau of Justice Assistance.

Accurate needs assessments, topic-specific research, development of best practices, and technical assistance are all critical to smooth delivery and implementation.

The Department of Justice, through the Bureau of Justice Assistance (BJA), offers a range of technical assistance (TA) programs that can support state and local correctional facilities in implementing these technology and educational initiatives. Programs such as the Community-based Reentry Program and the Encouraging Innovation: Field-Initiated Program have demonstrated success in providing the necessary resources and expertise to ensure these reforms are effectively integrated.

However, these TA programs tend to disproportionately benefit correctional facilities where significant programs are already in place but are less useful for “first timers,” where taking that first step is hard enough.

The new administration should work with the National Science Foundation (NSF) and the BJA to systematically assess and understand challenges faced by correctional systems trying to take the first step of reform. Many first-timer agencies have a deep understanding of the issues they experience (“program providers complain that tablets are not online”) but limited knowledge of how to assess the root causes of those issues (multiple proprietary wireless networks in place).

The NSF can bring together subject matter experts to offer workshops to correctional workers on network assessments, program cataloging, and human-centered design of service delivery. These workshops can help grow capacity at correctional facilities. The NSF should also establish guidelines and standards for these assessments. In addition to the TA efforts, the BJA could offer informational sessions, seminars, and gatherings for practitioners, as many of them learn best from each other. In parallel to learning on the ground, the BJA should also organize centralized task forces to oversee and advise on implementation across jurisdictions, document best practices, and make recommendations.

Conclusion

Investing in interventions behind the walls is not just a matter of improving conditions for incarcerated individuals—it is a public safety and economic imperative. By reducing recidivism through education and family contact, we can improve reentry outcomes and save billions in taxpayer dollars. A robust technology infrastructure and an innovative provider ecosystem are prerequisites to delivering outcomes. As 95% of incarcerated individuals will reenter society one day, it is vital to ensure that they can become contributing members of their communities. These investments will create a stronger workforce, more stable families, and safer communities. Now is the time for the new administration to act and ensure that the carceral system enables rehabilitation, not recidivism.

Creating a National Exposome Project

The U.S. government should establish a public-private National Exposome Project (NEP) to generate benchmark human exposure levels for the ~80,000 chemicals to which Americans are regularly exposed. Such a project will revolutionize our ability to treat and prevent human disease. An investment of $10 billion over 20 years would fuel a new wave of scientific discovery and advancements in human health. To date, there has not been a systematic assessment of how exposures to these environmental chemicals (such as pesticides, solvents, plasticizers, medications, preservatives, flame retardants, fossil fuel exhaust, and food additives) impact human health across the lifespan and in combination with one another.

While there is emerging scientific consensus that environmental exposures play a role in most diseases, including autoimmune conditions and many of the most challenging neurodegenerative diseases and cancers, the lack of exposomic reference data restrains the ability of scientists and physicians to understand their root causes and manage them. The biomedical impact of creating a reference exposome would be no less than that of the Human Genome Project and will serve as the basis of technological advancement, the development of new medicines and advanced chemicals, and improved preventative healthcare and the clinical management of diseases.

Challenge and Opportunity

The Human Genome Project greatly advanced our understanding of the genetics of disease and helped accelerate a biotech revolution, creating an estimated $265 billion economic impact in 2019 alone. However, genetics has been unable to independently explain the root causes of the majority of diseases from which we suffer, including neurodegenerative diseases like Alzheimer’s and Parkinson’s and many types of cancer. We know exposures to chemicals and pollution are responsible for or mediate the 70–90% of disease causation not explained by genetics. However, because we lack an understanding of their underlying causal factors, many new medicines in development are more palliative than curative. If we want to truly prevent and cure the most intractable illnesses, we must uncover the complex environmental factors that contribute to their pathogenesis.

In addition to the social and economic benefits that would come from reducing our society’s disease burden, American leadership in exposomics would also strengthen the foundation of our biomedical innovation ecosystem, making the U.S. the premier partner for what is likely to be the most advanced health-related research field in this century.

Three key trends are converging to make now the best time to act: First, the costs of chemical sensors and the data and analytics infrastructure to manage them have fallen precipitously over the last two decades. Second, a few existing small-scale exposomic projects offer a blueprint for how to build the NEP. Third, advancements in artificial intelligence (AI) and machine learning are making possible entirely new tools to make causal inferences from complex environmental data, which can inform research into treatments and policies to prevent diseases.

Plan of Action

To bring the National Exposome Project to life, Congress should appropriate $10 billion over 20 years to the Department of Health and Human Services (HHS) to establish a National Exposomics Project Office within the Office of the HHS Secretary, whose director reports directly to the HHS Secretary. The NEP director should be given authority to establish partnerships with HHS agencies (National Institutes of Health, Centers for Disease Control and Prevention, Advanced Research Projects Agency for Health, Food and Drug Administration) and other federal agencies (Environmental Protection Agency, Commerce, Defense, Homeland Security, National Science Foundation), and to fund and enter into agreements with state and local governments and with academic and private sector partners. The NEP will operate through a series of public-private cores, each responsible for one of three pillars.

Recommendation 1. Create a reference human exposome

Through partnerships with industry, government, and academic partners, the NEP would generate comprehensive data on the body burden of chemicals and the corresponding biological responses in a representative sample of the U.S. population (>500,000 individuals). This would likely require collecting biosamples (such as blood and saliva) from participating individuals, performing advanced chemical analysis on the samples using technologies such as high-resolution mass spectrometry, and following up with the participants over the course of the study to observe which health conditions emerge. Critically, biosamples will need to be collected repeatedly over time and bio-banked in order to ensure that the temporal aspect of exposures (such as whether someone was exposed to a particular chemical as a child or as an adult) is included in the final complete data set.

High-throughput toxicological data using microphysiological systems with human cells and tissues could also be generated on a priority list (~1,000) of chemicals of concern to understand their potential harm or benefit.

These data would inform a reference standard for particular chemical exposures, which would contain the distribution of exposure levels across the population, the potential health hazards associated with a particular exposure level, and the potential combinations of exposures that would be of concern. This information could ultimately be integrated into patient electronic health records for use in clinical practice.
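To make the idea of a reference standard concrete, the following is a minimal sketch of how a single measurement might be placed within a population distribution of exposure levels. The cohort values, the unit, and the function name `percentile_rank` are all hypothetical and chosen only for illustration; an actual reference exposome would rest on far larger samples and on standards the NEP itself would define.

```python
from bisect import bisect_right
from statistics import quantiles

# Hypothetical blood concentrations (ng/mL) of one chemical measured in a
# small reference cohort. These numbers are invented for illustration only.
reference_levels = sorted([0.4, 0.7, 0.9, 1.1, 1.3, 1.6, 2.0, 2.4, 3.1, 5.2])


def percentile_rank(value: float, reference: list[float]) -> float:
    """Return the percent of the (sorted) reference cohort at or below value."""
    return 100.0 * bisect_right(reference, value) / len(reference)


# Three cut points that split the reference distribution into quartiles.
quartiles = quantiles(reference_levels, n=4)

# Where a hypothetical patient's measurement falls relative to the cohort.
patient_rank = percentile_rank(2.4, reference_levels)

print("Quartile cut points (ng/mL):", quartiles)
print(f"Patient is at or above {patient_rank:.0f}% of the reference cohort")
```

A clinical version would also need to account for age, timing of exposure, and combinations of chemicals, which this sketch deliberately leaves out.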

Recommendation 2. Develop cutting-edge data and analytical standards for exposomic analysis

The NEP would develop both a data standard for collecting exposomic data and making it available to researchers, companies, and the public, and advanced analytics for extracting high-value causal insights from these data to inform policymaking and scientific discovery. Importantly, the data would include both biochemical data collected directly as part of this project and in-field sensor data, such as air and water quality measurements, that is already being collected at individual, local, regional, national, and global levels by trusted third-party organizations. A key challenge in understanding the connections between a set of exposures and a disease state today is the lack of data standardization. The NEP’s investments in standardization and analytics could result in a global standard for how environmental exposure data is collected, cementing the U.S. as the global leader.

Recommendation 3. Catalyze biomedical innovation and entrepreneurship

The NEP could bolster new entrepreneurial ecosystems in advanced diagnostics, medicines, and clinical services. With access to a core reference exposome as a foundation, the ingenuity of American entrepreneurs and scientists could result in a wellspring of innovation, creating the potential to reverse the rising incidence rates of many intractable illnesses of our modern era. One can imagine a future where exposomic tests are a part of routine physicals, informing physicians and patients exactly how to prevent certain diseases from emerging or progressing, or one where exposomic data is used to identify novel biological targets for curative therapeutics that could reverse the course of an environmentally caused disease.

The Size of the Prize

The National Exposome Project offers great potential to catalyze biomedical entrepreneurship and innovation.

First, the high-quality reference levels of exposures generated by the NEP could unlock significant opportunities in medical diagnostics. Already, great work is being done in diagnostics to understand how environmental exposures are driving diseases from autism to congenital heart defects in newborns. The NEP would accelerate such work, enabling the early detection and monitoring of conditions that today have limited diagnostic approaches.

Second, a deeper understanding of exposures could lead to the faster development of new medicines. One way the NEP data set could do this would be by enabling biologists to identify novel molecular targets for medicines that might otherwise be overlooked—for example, the NEP data might reveal that certain exposures are protective and beneficial for patients with a given disease, a finding that could be more deeply examined at the molecular level to identify a novel therapeutic strategy. In addition, we know that genetics is unable to explain the hundreds of failed drug trials. Exposomics could rescue many drugs that failed testing due to environmentally related nonresponse by identifying the causative agents.

Finally, we expect that the NEP would likely result in significant advances in the physical hardware and instrumentation that is used for large-scale chemical analysis and research, and in the AI-driven computational approaches that would be necessary for the data analysis. These advancements would set the U.S. up to be the leader in exposomic sequencing and analysis, much in the same way that the Human Genome Project established the U.S. as the leader in genetic sequencing. Furthermore, these technical advances would likely be useful in many domains outside of human health where chemical analysis is useful in developing new products—such as in the agriculture, industrial chemical, and energy industries.

Conclusion

To catalyze the next generation of biomedical innovation, we need to establish a national network of exposome facilities to track human exposure levels over time, accelerate efforts to create toxicological profiles of these chemicals, develop advanced analytical models to establish causal links to human disease, and use this foundational knowledge to further the development of new medicines and policies to reduce harmful exposure. This knowledge will transform our biomedical and healthcare industries, as well as provide a path for an improved chemical industry that creates products that are safer by design. The result will be longer health spans, reductions in mortality and morbidity, and economic development associated with spurring new startups that can create new therapies, technologies, and interventions.

This action-ready policy memo is part of Day One 2025 — our effort to bring forward bold policy ideas, grounded in science and evidence, that can tackle the country’s biggest challenges and bring us closer to the prosperous, equitable and safe future that we all hope for whoever takes office in 2025 and beyond.

Anyone concerned with large government initiatives may object to the proposed budget for this project. While we acknowledge the investment needed is substantial, the upside to the public is enormous, borne out both in direct economic development benefits in new exposomic industries created as a result and in the potential demystification of a large portion of currently unexplained diseases that afflict us.

Industries responsible for manufacturing products that potentially expose populations to suspected harmful chemicals may also push back on this effort. In response, we note that there is abundant misinformation fueled by underpowered or poorly designed studies on chemicals, including those with more harmful reputations than the data support. A more systematic data set and a newly created industry that gives people more complete, personalized, and real-time data on exposure can not only help debunk myths but also expand the set of possible actions to mitigate exposure, taking us out of a continued cycle of finger-pointing. Indeed, such a systematic approach should reveal many positive associations between modern chemicals and health outcomes, such as preservatives reducing food-borne illness or antibiotics reducing microbial-based disease.

Governance and accountability will be critical to ensure proper stewardship of taxpayer dollars and responsible engagement with the complex set of stakeholders across the country. We therefore propose creating an external advisory committee made up of community members, industry representatives, and key opinion leaders to oversee the project’s design and execution and to provide advice and recommendations to the NEP director at every stage.

The first steps to realizing this vision have already begun. ARPA-H, the agency responsible for high-risk, high-reward research and development for health, has begun to fund some foundational exposomic work. The National Institutes of Health’s All of Us program has also set a foundation for what might be possible with regard to large-scale bio-banking studies. However, to have the needed impact, the NEP needs to be launched at a much larger scale, outside of existing programs, and focus on spurring economic development and the creation of new industries.

Some of the most important factors that determine the success of ambitious efforts like this are the specifics of the legislative authority, the leadership and governance structure, and how much of the appropriation can be made available upfront. Further, while the need for collaboration across agencies is clear, establishing a clear decision-making structure with the proper oversight is critical. This is why we believe creating a dedicated program office, with a clear leader who reports directly to a member of the cabinet and is endowed with the necessary authorities, including Other Transactions Authority, is key to success.

Fixing Impact: How Fixed Prices Can Scale Results-Based Procurement at USAID

The United States Agency for International Development (USAID) currently uses Cost-Plus-Fixed-Fee (CPFF) as its de facto default funding and contracting model. Unfortunately, this model prioritizes administrative compliance over performance, hindering USAID’s development goals and U.S. efforts to counter renewed Great Power competition with Russia, the People’s Republic of China (PRC), and other competitors. The U.S. foreign aid system is losing strategic influence as developing nations turn to faster and more flexible (albeit riskier) options offered by geopolitical competitors like the PRC.

To respond and maintain U.S. global leadership, USAID should transition to heavily favor a Fixed-Price model – tying payments to specific, measurable objectives rather than incurred costs – to enhance the United States’ ability to compete globally and deliver impact at scale. Moreover, USAID should require written justifications for not choosing a Fixed-Price model, shifting the burden of proof. (We will use “Fixed-Price” to refer to both Firm Fixed Price Contracts and Fixed Amount Award Grants, wherein payments are linked to results or deliverables.)

This shock to the system would encourage broader adoption of Fixed-Price models, reducing administrative burdens, incentivizing implementers (of contracts, cooperative agreements, and grants) to focus on outcomes, and streamlining outdated and inefficient procurement processes. The USAID Bureau for Management’s Office of Acquisition and Assistance (OAA) should lead this transition by developing a framework for greater use of Firm Fixed Price (FFP) contracts and Fixed Amount Award (FAA) grants, establishing criteria for defining milestones and outcomes, retraining staff, and providing continuous support. With strong support from USAID leadership, this shift will reduce administrative burdens within USAID and improve competitiveness by expanding USAID’s partner base and making it easier for smaller organizations to collaborate.

Challenge and Opportunity

Challenge

The U.S. remains the largest donor of foreign assistance around the world, accounting for 29% of total official development assistance from major donor governments in 2023. Its foreign aid programs have paid dividends over the years in American jobs and economic growth, as well as an unprecedented and unrivaled network of alliances and trading partners. Today, however, USAID has become mired once again in procurement inefficiencies, reversing previous trends and efforts at reform and blocking – for years – sensible initiatives such as third country national (TCN) warrants, thereby reducing the impact of foreign aid for those it intends to help and impeding the U.S. Government’s (USG) ability to respond to growing Great Power Competition.

Foreign aid serves as a critical instrument of foreign policy influence, shaping geopolitical landscapes and advancing national interests on the global stage. No actor has demonstrated this more clearly than the PRC, whose rise as a major player in global development adds pressure on the U.S. to maintain its leadership. Notably, China has increased its spending on foreign assistance for economic development by 525% in the last 15 years. Through the Belt & Road Initiative, its Digital Silk Road, alternative development banks, and increasingly sophisticated methods of wielding its soft power, the PRC has built a compelling and attractive foreign assistance model which offers quick, low-cost solutions without the governance “strings” attached to U.S. aid. While this model seems to fulfill countries’ needs efficiently, its hidden costs include long-term debt, high lifecycle expenses, and potential Chinese ownership upon default.

By contrast, USAID’s Cost-Plus-Fixed-Fee (CPFF) foreign assistance model – in which implementers are guaranteed to recover their costs and earn a profit – mainly prioritizes tracking receipts over achieving results and therefore often fails to achieve intended outcomes, with billions spent on programs that lack measurable impact or fail to meet goals. Implementers are paid for budget compliance, regardless of results, placing all performance risk on the government.

The USG invented CPFF to establish fair prices where no markets existed. However, its use has now extended far beyond this purpose – including for products and services with well-established commercial markets. The compliance infrastructure necessary to administer USAID awards and adhere to the documentation/reporting requirements favors entrenched contractors – as noted by USAID Administrator Samantha Power – stifles innovation, and keeps prices high, thereby encumbering America’s ability to agilely work with local partners and respond to changing conditions. (Note: USAID typically uses “award” to refer to contracts, cooperative agreements, and grants. We use “award” in this same manner to refer to all three procurement mechanisms. We use “Fixed-Price Awards” to refer to fixed-price grants and contracts. “Fixed Amount Awards,” however, specifically refers to a fixed-price grant.)

In light of the growing Great Power Competition with China and Russia – and threats by those who wish to undermine the US-led liberal international order – as well as the possibility of further global shocks like COVID-19 or the war in Ukraine, USAID must consider whether its current toolset can maintain a position of strategic strength in global development. Furthermore, amid declining Official Development Assistance (ODA) – 2% year-over-year – and a global failure to meet the UN Sustainable Development Goals (SDGs), it is critical for USAID to reconcile the gap between its funding and lack of results. Without change, USAID funding will largely continue to fall short of objectives. The time is now for USAID to act.

Opportunity

USAID cannot have a de jure default procurement mechanism, yet CPFF has become the de facto default. It does not have to be. USAID has other funding mechanisms at its disposal. In fact, at least two alternative award and contract pricing models exist:

- Time and materials (T&M): The implementer proposes a set of fully loaded (i.e., inclusive of salary, benefits, overhead, plus profit) hourly rates for different labor categories and the USG pays for time incurred – not results delivered.

- Fixed-Price (Firm Fixed Price, FFP, for contracts, or Fixed Amount Award, FAA/Fixed Obligation Grants, FOG, for grants): The implementer proposes a set fee and is paid for milestones or results (not receipts).

While CPFF simply reimburses providers for costs plus profit, the Fixed-Price alternatives tie funding to achieving milestones, promoting efficiency and accountability. The Code of Federal Regulations (2 CFR § 200.1) permits using Fixed-Price mechanisms whenever pricing data can establish a reasonable estimate of implementation costs.
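The difference between the two payment rules can be illustrated with a minimal sketch. The award, milestone names, and dollar figures below are hypothetical, and the functions simplify both mechanisms considerably (real CPFF awards involve cost ceilings and allowability rules, and real Fixed-Price awards involve milestone verification procedures); the point is only to show where performance risk sits.

```python
from dataclasses import dataclass


@dataclass
class Milestone:
    name: str
    payment: float  # amount paid only if the milestone is verified as achieved
    achieved: bool


def cpff_payment(incurred_costs: float, fixed_fee: float) -> float:
    """Cost-Plus-Fixed-Fee: reimburse incurred costs plus a fee, regardless of results."""
    return incurred_costs + fixed_fee


def fixed_price_payment(milestones: list[Milestone]) -> float:
    """Fixed-Price: pay only for the milestones that were actually delivered."""
    return sum(m.payment for m in milestones if m.achieved)


# Hypothetical award: every name and figure here is invented for illustration.
milestones = [
    Milestone("Baseline survey completed", 200_000.0, True),
    Milestone("1,000 teachers trained", 500_000.0, True),
    Milestone("Literacy target met", 300_000.0, False),
]

cpff_total = cpff_payment(incurred_costs=950_000.0, fixed_fee=50_000.0)
fixed_total = fixed_price_payment(milestones)

print(f"CPFF pays:        ${cpff_total:,.0f}")
print(f"Fixed-Price pays: ${fixed_total:,.0f}")
```

In this sketch, the CPFF implementer is paid in full even though the final outcome was missed, while the Fixed-Price implementer forgoes the payment attached to the undelivered milestone.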

USAID has acknowledged the need to adapt funding mechanisms to better support local and impact-driven organizations and enhance cost-effectiveness. USAID has already started supporting these goals by incorporating evidence-based approaches and transitioning to models that emphasize cost-effectiveness and impact. As an example, in the Biden administration, USAID’s Office of the Chief Economist (OCE) issued the Promoting Impact and Learning with Cost-Effectiveness Evidence (PILCEE) award, which aims to enhance USAID’s programmatic effectiveness by promoting the use of cost-effectiveness evidence in strategic planning, policy-making, activity design, and implementation. Progress, though, remains limited. Funding disbursed based on performance milestones has remained unchanged since Fiscal Year (FY) 2016. In FY 2022, Fixed Amount Awards represented only 12.4% of new awards, or 1.4% by value.

An October 2020 Stanford Social Innovation Review article by two USAID officials argued that the Agency could enhance its use of Fixed Amount Awards by promoting “performance over compliance”. Other organizations have already begun to make this shift: the Millennium Challenge Corporation (MCC) and The Global Fund to Fight AIDS, Tuberculosis and Malaria – among others – have invested in increasing results-based approaches and embedding different results-based instruments into their procurement processes for increased aid effectiveness.

To shift USAID into an Agency that invests in impact at scale, we propose going one step further, and making Fixed-Price awards the de facto default procurement mechanism across USAID by requiring procurement officials to provide written justification for choosing CPFF.

This would build on the work completed during the first Trump administration under Administrator Mark Green, including the creation of the first Acquisition and Assistance Strategy, designed to “empower and equip [USAID] partners and staff to produce results-driven solutions” by, inter alia, “increasing usage of awards that pay for results, as opposed to presumptively reimbursing for costs”, and the promotion of the Pay-for-Results approach to development.

Such a change would unlock benefits for both the USG and for global development, including:

- Better alignment of risk and reward by ensuring implementers are paid only when they deliver on pre-agreed milestones. The risk of not achieving impact would no longer be solely borne by the USG, and implementers would be highly incentivized to achieve results.

- Promotion of a results-driven culture by shifting focus from administrative oversight to actual outcomes. By agreeing to milestones at the start of an award, USAID would give implementers the flexibility to achieve results and adapt more nimbly to changing circumstances, and would place the focus on delivering and reporting results rather than on administrative reporting.

- Diversification of USAID’s partner base by reducing the administrative burden associated with being a USAID implementer. This would allow the Agency to leverage the unique strengths, contextual knowledge, and innovative approaches of a diverse set of development actors. By allowing the Agency to work more nimbly with small businesses and local actors on shared priorities, it would further enhance its ability to counter current Great Power Competition with China and Russia.

- Incentivization of cost efficiency, motivating implementers to reduce expenses if they want to increase their profits, without extra cost to the USG.

- Facilitation of greater progress by USAID and the USG toward the UN’s 2030 Agenda for Sustainable Development, in ways likely to attract more meaningful and substantive private sector partnerships and leverage scarce USG resources.

Plan of Action

Making Fixed-Price the de facto default option for both grants and contracts would provide the U.S. foreign aid procurement process a necessary shock to the system. The success of such a large institutional shift will require effective change management; therefore, it should be accompanied by the necessary training and support for implementing staff. This would entail, inter alia, establishing a dedicated team within OAA specialized in the design and implementation of FFPs and FAAs, and changing the culture of USAID procurement by supporting both contracting and programming staff with a robust change management program, including training, strong messaging from USAID leadership, and education for Congressional appropriators.

Recommendation 1. Making Fixed-Price the de facto “default” option for both grants and contracts, and tying payments to results.

Fixed-Price as the default option for both grants and contracts would come at a low additional cost to USAID (assuming staff are able to be redistributed). The Agency’s Senior Procurement Executive, Chief Acquisition Officer (CAO), and Director for OAA should first convene a design working group composed of representatives from program offices, technical offices, OAA, and the General Counsel’s office tasked with reviewing government spending by category to identify sectors exempt from the “Fixed-Price default” mandate, namely for work that lacks deep commercial markets (e.g., humanitarian assistance or disaster relief). This working group would then propose a phased approach for adopting Fixed-Price as the default option across these sectors. After making its recommendations, the working group would be disbanded and a more permanent dedicated team would carry this effort forward (see Recommendation 2).

Once the default is reset, Contract and Agreement Officers would justify any exceptions (i.e., the choice of T&M or CPFF) in an explanatory memo. The CAO could delegate authority to supervising Contracting Officers or other acquisition officials to approve these exceptions. To ensure that the benefits of Fixed-Price results-based contracting reach all levels of awardees, this requirement should become a flow-down clause in all prime awards. This will require additional training for the prime award recipient’s own overseers.

Recommendation 2. Establishing a dedicated team within USAID’s OAA, or the equivalent office in the next administration, specialized in the design and implementation of FFPs and FAAs.

To facilitate a smooth transition, USAID should create a dedicated team within OAA specialized in designing and implementing FFPs and FAAs using existing funds and personnel. This team would have expertise in the choices involved in designing Fixed-Price agreements: results metrics and targets, pricing for results, and optimizing payment structures to incentivize results.

They would have the mandate and resources necessary to support expanding the use of and the amount of funding flowing through high-quality FFPs and FAAs. They would jumpstart the process and support Acquisition and Program Officers by developing guidelines and procedures for Fixed-Price models (along with sector-specific recommendations), overseeing their design and implementation, and evaluating effectiveness. As USAID will learn along the way about how to best implement the Fixed-Price model across sectors, this team will also need to capture lessons learned from the initial experiences to lower the costs and increase the confidence of Acquisition and Assistance Officers using this model going forward.

Recommendation 3. Launching a robust change management program to support USAID acquisition, assistance, program, and legislative and public affairs staff in making the shift to Fixed-Price grant and contract management.

Successfully embedding Fixed-Price as the default option will entail a culture shift within USAID, requiring a multi-faceted approach. This will include the retraining of Contracts and Agreements Officers and their Representatives – who have internalized a culture of administrative compliance and been evaluated primarily on their extensive administrative oversight skills – and promoting a reorganization of the culture of Monitoring, Evaluation and Learning (MEL) and Collaboration, Learning and Adaptation (CLA) to prioritize results over reporting. Setting contracting and agreements staff up for success requires capacity building in the form of training, toolkits, and guidelines on how to implement Fixed-Price models across USAID’s diverse sectors. Other USG agencies make greater use of Fixed-Price awards, and alternative training for both government and prime contractor overseers exists. OAA’s Professional Development and Training unit should adapt existing training from these other agencies, specifically ensuring it addresses how to align payments with results.

Furthermore, the broader change management program should seek to create the appropriate internal incentive structure at the Agency for Acquisition and Assistance staff, motivating and engaging them in this significant restructuring of foreign aid. To succeed at this, the mandate for change needs to come from the top, reassuring staff that the Fixed-Price model does not expose individuals, the Agency, or implementers to undue legal or financial liability.

While this change will not require a Congressional Notification, the Office of Legislative & Public Affairs (LPA) should join this effort early on, including as part of the design working group. LPA would also play a guiding role in both internal and external communications, especially in educating members of Congress and their staffs on the importance and value of this change to improve USAID effectiveness and return on taxpayer dollars. Entrenched players with significant investments in existing CPFF systems will resist this effort, including with political lobbying; LPA will play an important role informing Congress and the public.

Conclusion

USAID’s current reliance on CPFF has proven inadequate in driving impact and must evolve to meet the challenges of global development and Great Power Competition. To create more agile, efficient, and results-driven foreign assistance, the Agency should adopt Fixed-Price as the de facto default model for disbursing funds, prioritizing results over administrative reporting. By embracing a results-based model, USAID will enhance its ability to respond to global shocks and geopolitical shifts, better positioning the U.S. to maintain strategic influence and achieve its foreign policy and development objectives while fostering greater accountability and effectiveness in its foreign aid programs. Implementing these changes will require a robust change management program, which would include creating a dedicated team within OAA, retraining staff and creating incentives for them to take on the change, ongoing guidance throughout the award process, and education and communication with Congress, implementing partners, and the public. This transformation is essential to ensure that U.S. foreign aid continues to play a critical role in advancing national interests and addressing global development challenges.

This action-ready policy memo is part of Day One 2025 — our effort to bring forward bold policy ideas, grounded in science and evidence, that can tackle the country’s biggest challenges and bring us closer to the prosperous, equitable and safe future that we all hope for whoever takes office in 2025 and beyond.

PLEASE NOTE (February 2025): Since publication several government websites have been taken offline. We apologize for any broken links to once accessible public data.

The revisions to the Code of Federal Regulations, specifically the Uniform Guidance (2 CFR 200) provision, represent an exciting opportunity for USAID and its partners. These changes, which took effect on October 1, 2024, align with the Office of Management & Budget’s vision for enhanced oversight, transparency, and management of USAID’s foreign assistance. This update opens the door to several significant improvements in key reform areas: simplified requirements for federal assistance; reduced burdens on staff and implementing partners; and the introduction of new tools to support USAID’s localization efforts. The updated regulations will reduce the need for exception requests to OMB, speeding up timelines between planning and budget execution. This regulatory update presents a valuable opportunity for USAID to streamline its aid practices, pave the way for the adoption of the Fixed-Price model, and create a performance-driven culture at USAID. For these changes to come into full effect, USAID will need to ensure the necessary flow-down and enforcement of them through accompanying policies, guidance, and training. USAID will also need to ensure that these changes flow down and are incorporated into both prime and sub-awards.

Wider adoption of Fixed-Price could expand USAID’s pool of qualified local partners, enhancing engagement with diverse implementers and facilitating more sustainable, locally-driven development outcomes. Fixed-Price grants and contracts disburse payments based on achieving pre-agreed milestones rather than on incurred costs, reducing the administrative burden of compliance. This simplified approach enables local organizations – many of which lack the capacity to manage complex cost-tracking requirements – to be more competitive for USAID programs and to be better prepared to manage USAID awards. By linking payments to results rather than detailed expense documentation, the Fixed-Price model gives local organizations greater flexibility and autonomy in achieving their objectives, empowering them to leverage their contextual knowledge and innovative approaches more effectively. This results in a partnership where local actors can operate independently and adapt quickly to changing circumstances, without the bureaucratic burdens traditionally associated with USAID funding.

In the same way that Fixed-Price could help USAID diversify its partner base and increase localization, it could also help expand the Agency’s pool of qualified small businesses, enhancing engagement with diverse implementers and facilitating more sustainable development outcomes while achieving its Congressionally mandated small and disadvantaged business utilization goals. The current extensive use of CPFF favors entrenched implementers who have already paid for the expensive administrative compliance systems it requires. Fixed-Price grants and contracts carry fewer administrative burdens, enabling new small businesses – many of which lack the administrative infrastructure necessary to manage complex cost-tracking requirements – to be more competitive for USAID programs and to be better prepared to manage USAID awards.

USAID’s research and development arm, Development Innovation Ventures (DIV), uses fixed-fee awards almost exclusively to fund innovative implementers. Yet proven interventions rarely transition from DIV into mainstream USAID programs. Innovators and impact-first organizations find themselves well suited for USAID’s R&D, but with no path forward due to the use of CPFF at scale.

USAID has historically relied on expensive procedures to ensure implementers are using funding in ways that align with USG policies and procedures. These concerns are reduced, however, when the government pays for outcomes (rather than tracking receipts). For example, the government would no longer need to verify whether the implementer has the proper accounting and reporting systems in place, nor would it need to spend time negotiating indirect rates or conducting incurred cost audits. As detailed regulations on the permissibility of specific costs under federal acquisition and assistance don’t apply to Fixed-Price awards and contracts, neither the government nor the implementer needs to spend time examining the allowability of costs. Furthermore, we expect wider use of Fixed-Price models to lead to significantly improved results per dollar spent. This means that, although there would be initial costs associated with strategy implementation, we would expect Fixed-Price to be significantly more cost-effective.

Yes, USAID has made recent efforts to provide more effective aid by incorporating evidence-based approaches and transitioning to models that emphasize cost-effectiveness and impact. In order to do this, during the Biden administration, USAID elevated the Office of the Chief Economist (OCE) by enlarging its size and mandate. The OCE issued the activity Promoting Impact and Learning with Cost-Effectiveness Evidence (PILCEE), which aims to enhance USAID’s programmatic effectiveness by promoting the use of cost-effectiveness evidence in strategic planning, policy-making, activity design, and implementation. Our approach of establishing a team within OAA would draw on lessons learned from the OCE approach while reducing any associated costs by not establishing an entirely new operating unit.

Building Regional Cyber Coalitions: Reimagining CISA’s JCDC to Empower Mission-Focused Cyber Professionals Across the Nation

State, local, tribal, and territorial governments, along with Critical Infrastructure Owners (SLTT/CIs), face escalating cyber threats but struggle with limited cybersecurity staff and complex technology management. They rely heavily on private-sector support, yet private-sector practitioners often lack a deep understanding of SLTT/CI operational environments. This gap leaves SLTT/CIs vulnerable and underprepared for emerging threats, while the private-sector practitioners who serve them remain underleveraged.

To address this, CISA should expand the Joint Cyber Defense Collaborative (JCDC) to allow broader participation by practitioners in the private sector who serve public sector clients, regardless of the size or current affiliation of their company, provided they can pass a background check, verify their employment, and demonstrate their role in supporting SLTT governments or critical infrastructure sectors.

Challenge and Opportunity

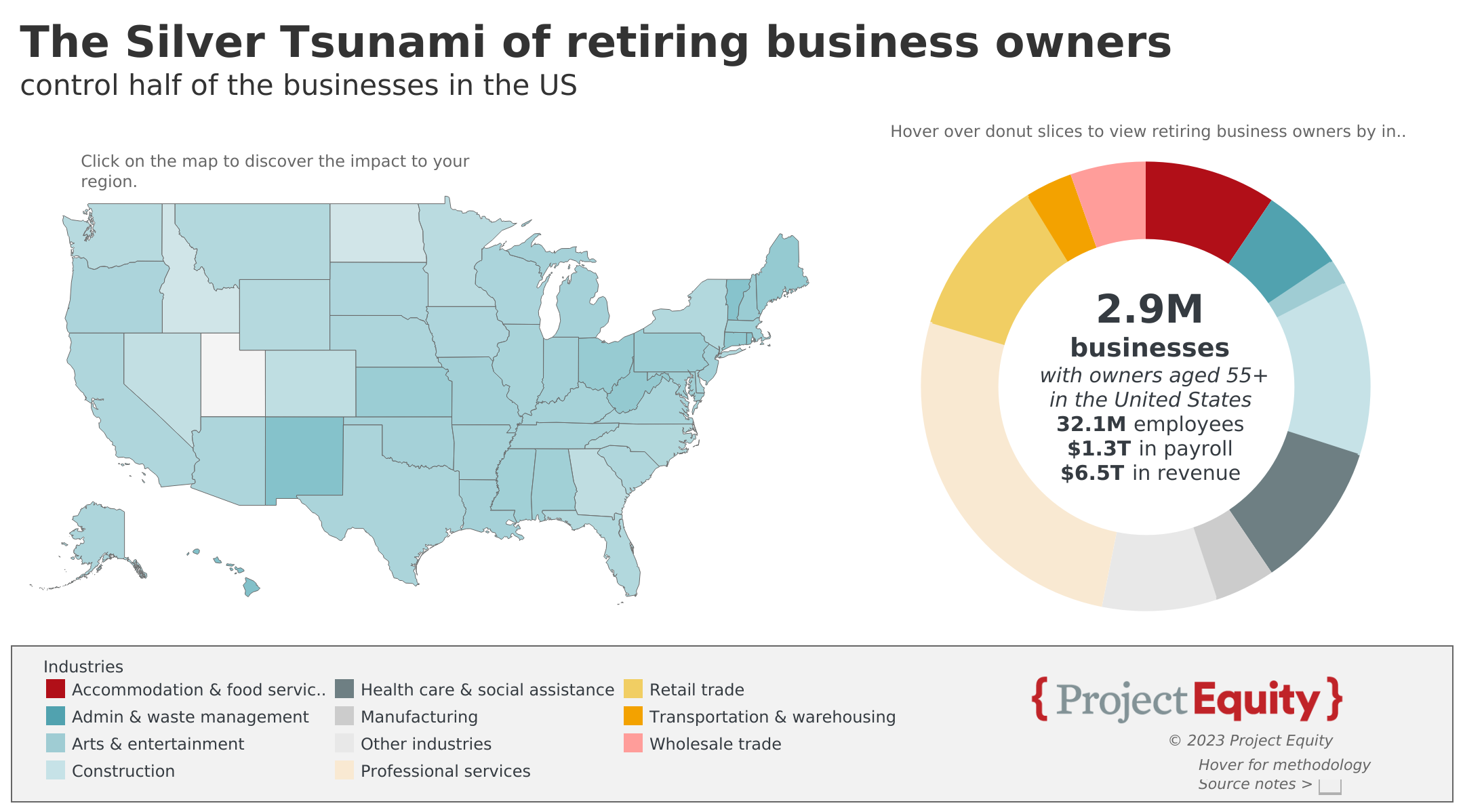

State, local, tribal, and territorial (SLTT) governments face a significant increase in cyber threats, with incidents like remote access trojans and complex malware attacks rising sharply in 2023. These trends indicate not only a rise in the number of attacks but also an increase in their sophistication, requiring SLTTs to contend with a diverse and evolving array of cyber threats. The 2022 Nationwide Cybersecurity Review (NCSR) found that most SLTTs have not yet achieved the cybersecurity maturity needed to effectively defend against these sophisticated attacks, largely due to limited resources and personnel shortages. Smaller municipalities, especially in rural areas, are particularly impacted, with many unable to implement or maintain the range of tools required for comprehensive security. As a result, SLTTs remain vulnerable, and critical public infrastructure risks being compromised. This urgent situation presents an opportunity for CISA to strengthen regional cybersecurity efforts through enhanced public-private collaboration, empowering SLTTs to build resilience and raise baseline cybersecurity standards.

Average cyber maturity scores for the State, Local, Tribal, and Territorial peer groups are at the minimum required level or below. Source: Center for Internet Security

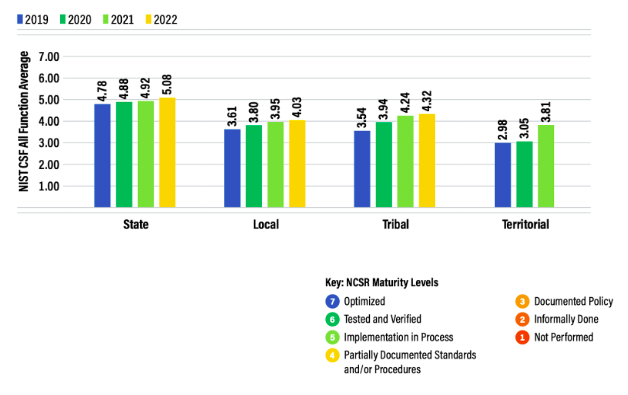

Furthermore, effective cybersecurity requires managing a complex array of tools and technologies. Many SLTT organizations, particularly those in critical infrastructure sectors, need to deploy and manage dozens of cybersecurity tools, including asset management systems, firewalls, intrusion detection systems, endpoint protection platforms, and data encryption tools, to safeguard their operations.

An example of the immense array of different combinations of cybersecurity tools that could comprise a full suite necessary to implement baseline cybersecurity controls. Source: The Software Analyst Newsletter

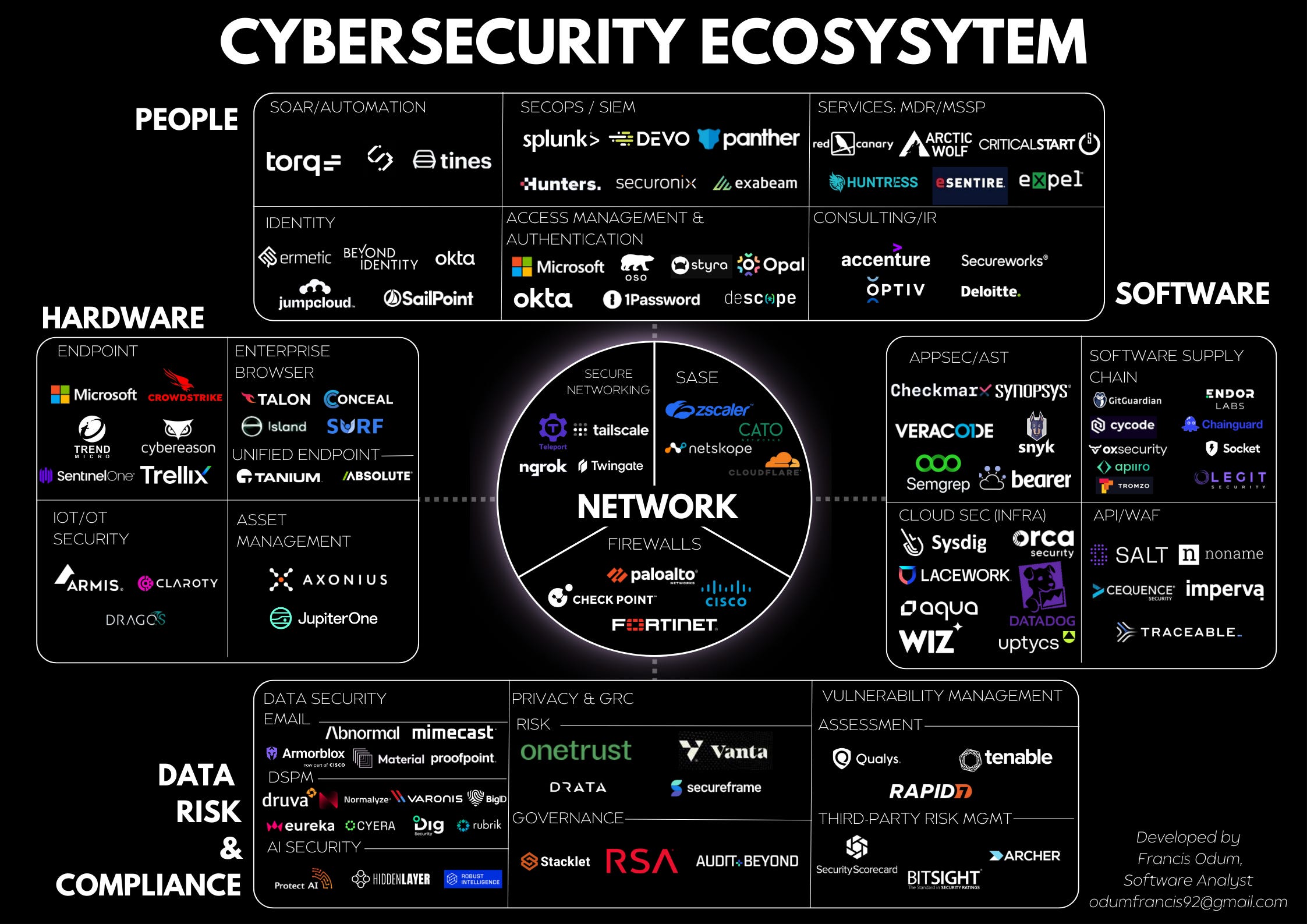

The ability of SLTTs to implement these tools is severely hampered by two critical issues: insufficient funding and a shortage of skilled cybersecurity professionals to operate such a large volume of tools that require tuning and configuration. Budget constraints mean that many SLTT organizations are forced to make difficult decisions about which tools to prioritize, and the shortage of qualified cybersecurity professionals further limits their ability to operate them. The Deloitte-NASCIO Cybersecurity Study highlights how state Chief Information Security Officers (CISOs) are increasingly turning to the private sector to fill gaps in their workforce, procuring staff-augmentation resources to support security control deployment, management of Security Operations Centers (SOCs), and incident response services.

The Top 5 Security Concerns for Nationwide Cybersecurity Review Respondents include lack of sufficient funding and inadequate availability of cybersecurity professionals. Source: Center for Internet Security

What Strong Regionalized Communities Would Achieve

This reliance on private-sector expertise presents a unique opportunity for federal policymakers to foster stronger public-private partnerships. However, currently, JCDC membership entry requirements are vague and appear to favor more established companies, limiting participation from many professionals who are actively engaged in this mission.

The JCDC is led by CISA’s Stakeholder Engagement Division (SED), which also serves as the agency’s hub for the shared stakeholder information that unifies CISA’s approach to whole-of-nation operational collaboration. One of the JCDC’s main goals is to “organize and support efforts that strengthen the foundational cybersecurity posture of critical infrastructure entities,” ensuring they are better equipped to defend against increasingly sophisticated threats.

Given the escalating cybersecurity challenges, there is a significant opportunity for CISA to enhance localized collaboration between the public and private sectors by improving the quality of service that personnel at managed service providers (MSPs) and software companies can deliver. This helps SLTT/CIs close the workforce gap, allows vendors to create new services focused on SLTT/CIs’ consultative needs, and boosts a talent market that incentivizes companies to hire more technologists fluent in the “business” needs of SLTT/CIs.

Incentivizing the Private Sector to Participate

With intense competition for market share in cybersecurity, vendors will need to provide good service and successful outcomes in order to retain and grow their portfolio of business. They will have to compete on their ability to deliver better, more tailored service to SLTTs/CIs and pursue talent that is more fluent in government operations, which incentivizes candidates to build great reputations amongst SLTT/CI customers.

Plan of Action

Recommendation 1. Community Platform

To accelerate the progress of CISA’s mission to improve the cyber baseline for SLTT/CIs, the Joint Cyber Defense Collaborative (JCDC) should expand into a regional framework aligned with CISA’s 10 regional offices to support increasing participation. The new, regionalized JCDC should facilitate membership for all practitioners that support the cyber defense of SLTT/CIs, regardless of whether they are employed by a private or public sector entity. With a more complete community, CISA will be able to direct focused, custom implementation strategies that require deep public-private collaboration.

Participants from relevant sectors should be able to join the regional JCDC after passing background checks, employment verification, and, where necessary, verification that the employer is involved in security control implementation for at least one eligible regional client. This approach allows the program to scale rapidly and ensures fairness across organizations of all sizes. Private sector representatives, such as solutions engineers and technical account managers, will be granted conditional membership to the JCDC, with need-to-know access to its online resources. The program will emphasize the development of collaborative security control implementation strategies centered around the client, where CISA coordinates the implementation functions between public and private sector staff, as well as between cybersecurity vendors and MSPs that serve each SLTT/CI entity.

Recommendation 2. Online Training Platform

Currently, CISA provides a multitude of training offerings both online and in-person, most of which are only accessible by government employees. Expanding CISA’s training offerings to include programs that teach practitioners at MSPs and software companies how to become fluent in the operation of government is essential for raising the cybersecurity baseline across the Nationwide Cybersecurity Review (NCSR) areas with which SLTTs currently struggle. The training platform should be a flexible, learn-at-your-own-pace virtual learning platform, and CISA is encouraged to build on existing platforms with existing user bases, such as Salesforce’s Trailhead. Modules should train students on specific challenges tailored to the SLTT/CI operating environment, such as applying patches to workstations that belong to a Police or Fire Department, where the availability of critical systems is essential and downtime could cost lives.

The platform should offer a gamified learning experience, where participants can earn badges and certificates as they progress through different learning paths. These badges and certificates will serve as a way for companies and SLTT/CIs to understand which individuals are investing the most time learning and delivering the best service. Each badge will correspond to specific skills or competencies, allowing participants to build a portfolio of recognized achievements. This approach has already proven effective, as seen in the use of Salesforce’s Trailhead by other organizations like the Center for Internet Security (CIS), which offers an introductory course on CIS Controls v8 through the platform.

The benefits of this training platform are multifaceted. First, it provides a structured and scalable way to upskill a large number of cybersecurity professionals across the country with a focus on tailored implementation of cybersecurity controls for SLTT/CIs. Second, the badge system incentivizes ongoing participation, ensuring that cybersecurity professionals can continue to maintain their reputation if they choose to move jobs between companies or between the public and private sectors. Third, the platform fosters a sense of community and collaboration around the client, allowing CISA to understand the specific individuals supporting each SLTT/CI organization, in the case that it needs to mobilize a team with both security knowledge and government operations knowledge around an incident response scenario.

Recommendation 3. A “Smart Rolodex”

A Customer Relationship Management (CRM) system should be integrated within CISA’s Office of Stakeholder Engagement to manage the community of cyber defenders more effectively and streamline incident response efforts. The CRM will maintain a singular database of regionalized JCDC members, their current company, their expertise, and their roles within critical infrastructure sectors. This system will act as a “smart Rolodex,” enabling CISA to quickly identify and coordinate with the most suitable experts during incidents, ensuring a swift and effective response. The recent recommendations by a CISA panel underscore the importance of this approach, emphasizing that a well-organized and accessible database is crucial for deploying the right resources in real-time and enhancing the overall effectiveness of the JCDC.
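As a rough illustration of the lookup such a “smart Rolodex” would support, the sketch below models a member record and a filter by region, expertise, and sector. The field names, the `find_experts` helper, and the sample records are all hypothetical placeholders; a production CRM would expose this through its own data model and query tools.

```python
from dataclasses import dataclass, field


@dataclass
class Member:
    name: str
    company: str
    region: int                                      # CISA region number (1-10)
    expertise: set[str] = field(default_factory=set)
    sectors: set[str] = field(default_factory=set)   # critical infrastructure sectors served


def find_experts(members: list[Member], region: int, skill: str, sector: str) -> list[Member]:
    """Return members in the given region who have the skill and serve the sector."""
    return [
        m for m in members
        if m.region == region and skill in m.expertise and sector in m.sectors
    ]


# Placeholder records for illustration only.
roster = [
    Member("A. Rivera", "Example MSP", 5, {"incident response", "EDR"}, {"water"}),
    Member("B. Chen", "Example Software Co.", 5, {"firewalls"}, {"water", "energy"}),
    Member("C. Okafor", "Example MSP", 9, {"incident response"}, {"water"}),
]

for m in find_experts(roster, region=5, skill="incident response", sector="water"):
    print(m.name, "-", m.company)
```

Keeping the current company as an ordinary field on the record, rather than as the record's key, reflects the goal that a practitioner's history follows them when they change employers.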

Recommendation 4. Establishment of Merit-Based Recognition Programs

Finally, to foster a sense of mission and camaraderie among JCDC participants, recognition programs should be introduced to increase morale and highlight above-and-beyond contributions to national cybersecurity efforts. Digital badges, emblematic patches, “CISA Swag” or challenge coins will be awarded as symbols of achievement within the JCDC, boosting morale and practitioner commitment to the greater mission. These programs will also enhance the appeal of cybersecurity careers, elevating those involved with the JCDC, and encouraging increased participation and retention within the JCDC initiative.

Cost Analysis

Estimated Costs and Justification

The proposed regional JCDC program requires procuring ~100,000 licenses for a digital communication platform (based on Slack) across all of its regions and 500 licenses for a popular Customer Relationship Management (CRM) platform (based on Salesforce) for its Office of Stakeholder Engagement to be able to access records. The estimated annual costs are as follows:

Digital Communication Platform Licenses:

- Standard Plan: $8,700,000 per year (100,000 users at $7.25 per user per month).

CRM Platform Licenses:

- Professional Tier: $450,000 per year (500 users at $75 per user per month).

Total Estimated Cost:

- Lower Tier Option (Standard Communication + Professional CRM): $9,150,000 per year.

Buffer for Operational Costs: To ensure the program’s success, a buffer of approximately 15% should be added to cover additional operational expenses, unforeseen costs, and any necessary uplifts or expansions in features or seats. This does not take into consideration volume discounts that CISA would normally expect when purchasing through a reseller such as Carahsoft or CDW.

Cost Justification: Although the initial investment is significant, the potential savings from avoiding cyber incidents should far outweigh these costs. Considering that the average cost of a data breach in the U.S. is approximately $9.48 million, preventing even a few such incidents through this program could easily justify the expenditure.
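The arithmetic behind these estimates can be reproduced with a short script. The license counts, per-user prices, 15% buffer, and $9.48 million breach figure come from the text above; the variable and function names are illustrative, and the break-even ratio is a simple division rather than a forecast of incidents avoided.

```python
from decimal import Decimal

COMM_USERS = 100_000
COMM_PRICE_PER_MONTH = Decimal("7.25")    # Standard Plan, per user
CRM_USERS = 500
CRM_PRICE_PER_MONTH = Decimal("75")       # Professional Tier, per user
BUFFER_RATE = Decimal("0.15")             # operational buffer
AVG_BREACH_COST = Decimal("9480000")      # average U.S. data breach cost cited above


def annual_cost(users: int, price_per_month: Decimal) -> Decimal:
    """Annual license cost: users x monthly price x 12 months."""
    return users * price_per_month * 12


comm = annual_cost(COMM_USERS, COMM_PRICE_PER_MONTH)
crm = annual_cost(CRM_USERS, CRM_PRICE_PER_MONTH)
subtotal = comm + crm
total_with_buffer = subtotal * (1 + BUFFER_RATE)
breaches_to_break_even = total_with_buffer / AVG_BREACH_COST

print(f"Communication platform: ${comm:,.0f}")
print(f"CRM platform:           ${crm:,.0f}")
print(f"Subtotal:               ${subtotal:,.0f}")
print(f"With 15% buffer:        ${total_with_buffer:,.0f}")
print(f"Breaches to break even: {breaches_to_break_even:.2f}")
```

With the buffer applied and before any volume discounts, the annual total comes to about $10.5 million, so avoiding slightly more than one average-cost breach per year would cover the program.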

Conclusion

The cybersecurity challenges faced by State, Local, Tribal, and Territorial (SLTT) governments and critical infrastructure sectors are becoming increasingly complex and urgent. As cyber threats continue to evolve, it is clear that the existing defenses are insufficient to protect our nation’s most vital services. The proposed expansion of the Joint Cyber Defense Collaborative (JCDC) to allow broader participation by practitioners in the private sector who serve public sector clients, regardless of the size or current affiliation of their company, presents a crucial opportunity to enhance collaboration, particularly among SLTTs, and to bolster the overall cybersecurity baseline. These efforts align closely with CISA’s strategic goals of enhancing public-private partnerships, improving the cybersecurity baseline, and fostering a skilled cybersecurity workforce. By taking decisive action now, we can create a more resilient and secure nation, ensuring that our critical infrastructure remains protected against the ever-growing array of cyber threats.

Establishing a Cyber Workforce Action Plan

The next presidential administration should establish a comprehensive Cyber Workforce Action Plan to address the critical shortage of cybersecurity professionals and bolster national security. This plan encompasses innovative educational approaches, including micro-credentials, stackable certifications, digital badges, and more, to create flexible and accessible pathways for individuals at all career stages to acquire and demonstrate cybersecurity competencies.

The initiative will be led by the White House Office of the National Cyber Director (ONCD) in collaboration with key agencies such as the Department of Education (DoE), Department of Homeland Security (DHS), National Institute of Standards and Technology (NIST), and National Security Agency (NSA). It will prioritize enhancing and expanding existing initiatives—such as the CyberCorps: Scholarship for Service program that recruits and places talent in federal agencies—while also spearheading new engagements with the private sector and its critical infrastructure vulnerabilities. To ensure alignment with industry needs, the Action Plan will foster strong partnerships between government, educational institutions, and the private sector, particularly focusing on real-world learning opportunities.

This Action Plan also emphasizes the importance of diversity and inclusion by actively recruiting individuals from underrepresented groups, including women, people of color, veterans, and neurodivergent individuals, into the cybersecurity workforce. In addition, the plan will promote international cooperation, with programs to facilitate cybersecurity workforce development globally. Together, these efforts aim to close the cybersecurity skills gap, enhance national defense against evolving cyber threats, and position the United States as a global leader in cybersecurity education and workforce development.

Challenge and Opportunity

The United States and its allies face a critical shortage of cybersecurity professionals, in both the public and private sectors. This shortage poses significant risks to our national security and economic competitiveness in an increasingly digital world.

In the federal government, the cybersecurity workforce is aging rapidly, with only about 3% of information technology (IT) specialists under 30 years old. Meanwhile, nearly 15% of the federal cyber workforce is eligible for retirement. This demographic imbalance threatens the government’s ability to defend against sophisticated and evolving cyber threats.

The private sector faces similar challenges. According to recent estimates, there are nearly half a million unfilled cybersecurity positions in the United States. This gap is expected to grow as cyber threats become more complex and pervasive across all industries. Small and medium-sized businesses are particularly vulnerable, often lacking the resources to compete for scarce cyber talent.

The cybersecurity talent shortage extends beyond our borders, affecting our allies as well. As cyber threats from adversarial nation states become increasingly global in nature, our international partners’ ability to defend against these threats directly impacts U.S. national security. Many of our allies, particularly in Eastern Europe and Southeast Asia, lack robust cybersecurity education and training programs, further exacerbating the global skills gap.

A key factor contributing to this shortage is the lack of accessible, flexible pathways into cybersecurity careers. Traditional education and training programs often fail to keep pace with rapidly evolving technology and threat landscapes. Moreover, they frequently overlook the potential of career changers and nontraditional students, who could bring valuable and diverse perspectives to the field.

However, this challenge presents a unique opportunity to revolutionize cybersecurity education and workforce development. By leveraging innovative approaches such as apprenticeships, micro-credentials, stackable certifications, peer-to-peer learning platforms, digital badges, and competition-based assessments, we can create more agile and responsive training programs. These methods can provide learners with immediately applicable skills while allowing for continuous upskilling as the field evolves.

Furthermore, there’s an opportunity to enhance cybersecurity awareness and basic skills among all American workers, not just those in dedicated cyber roles. As digital technologies permeate every aspect of modern work, a baseline level of cyber hygiene and security consciousness is becoming essential across all sectors.

By addressing these challenges through a comprehensive Cyber Workforce Action Plan, we can not only strengthen our national cybersecurity posture but also create new pathways to well-paying, high-demand jobs for Americans from all backgrounds. This initiative has the potential to position the United States as a global leader in cyber workforce development, enhancing both our national security and our economic competitiveness in the digital age.

Evidence of Existing Initiatives

While numerous excellent cybersecurity workforce development initiatives exist, they often operate in isolation, lacking cohesion and coordination. ONCD is positioned to leverage its whole-of-government approach and the groundwork laid by its National Cyber Workforce and Education Strategy (NCWES) to unite these disparate efforts. By bringing together the strengths of various initiatives and their stakeholders, ONCD can transform high-level strategies into concrete, actionable steps. This coordinated approach will maximize the impact of existing resources, reduce duplication of efforts, and create a more robust and adaptable cybersecurity workforce development ecosystem. This proposed Action Plan is the vehicle to turn these collective workforce-minded strategies into tangible, measurable outcomes.

At the foundation of this plan lies the NICE Cybersecurity Workforce Framework, developed by NIST. This common lexicon for cybersecurity work roles and competencies provides the essential structure upon which we can build. The Cyber Workforce Action Plan seeks to expand on this foundation by creating standardized assessments and implementation guidelines that can be adopted across both public and private sectors.

Micro-credentials, stackable certifications, digital badges, and other innovations in accessible education—as demonstrated by programs like SANS Institute’s GIAC certifications and CompTIA’s offerings—form a core component of the proposed plan. These modular, skills-based learning approaches allow for rapid validation of specific competencies—a crucial feature in the fast-evolving cybersecurity landscape. The Action Plan aims to standardize and coordinate these and similar efforts, ensuring widespread recognition and adoption of accessible credentials across industries.

Gamified and competition-based learning approaches, including but not limited to National Cyber League, SANS NetWars, and CyberPatriot, are also exemplary starting points that would benefit from greater federal engagement and coordination. By formalizing these methods within education and workforce development programs, the government can harness their power to simulate real-world scenarios and drive engagement at a national scale.

Incorporating lessons learned from the federal government’s previous DoE CTE CyberNet program, the National Science Foundation’s (NSF) Scholarship for Service (SFS) program, and NSA’s GenCyber camps, the Action Plan emphasizes the importance of early engagement (the middle grades and early high school years) and practical, hands-on learning experiences. By extending these principles across all levels of education and professional development, we can create a continuous pathway from high school through to advanced career stages.

A Cyber Workforce Action Plan would provide a unifying praxis to standardize competency assessments, create clear pathways for career progression, and adapt to the evolving needs of both the public and private sectors. By building on the successes of existing initiatives and introducing innovative solutions to fill critical gaps in the cybersecurity talent pipeline, we can create a more robust, diverse, and skilled cybersecurity workforce capable of meeting the complex challenges of our digital future.

Plan of Action

Recommendation 1. Create a Cyber Workforce Action Plan.

ONCD will develop and oversee the plan, in close collaboration with DoE, NIST, NSA, and other relevant agencies. The plan has three distinct components:

1. Develop standardized assessments aligned with the NICE framework. ONCD will work with NIST to create a suite of standardized assessments that evaluate cybersecurity competencies. These assessments will:

- Cover the full range of knowledge, skills, and abilities defined in the NICE framework.

- Include both theoretical knowledge tests and practical, scenario-based evaluations.

- Be regularly updated to reflect evolving cybersecurity threats and technologies.

- Be designed with input from both government and industry cybersecurity professionals to ensure relevance and applicability.

2. Establish a system of stackable and portable micro-credentials. To provide flexible and accessible pathways into cybersecurity careers, ONCD will work with DoE, NIST, and the private sector to help develop and support systems of micro-credentials that are:

- Aligned with specific competencies in the NICE framework: NIST, as the national standards-setting body, will issue these credentials to ensure alignment with the NICE framework. This will provide legitimacy and broad recognition across industries.

- Stackable, allowing learners to build towards larger certifications or degrees: These credentials will be designed to allow individuals to accumulate certifications over time, ultimately leading to more comprehensive qualifications or degrees.

- Portable across different sectors and organizations: The micro-credentials will be recognized by both government agencies and private-sector employers, ensuring they have value regardless of where an individual seeks employment.

- Recognized and valued by both government agencies and private-sector employers: By working closely with the private sector, where credentialing systems like those from CompTIA and Google are already advanced, ONCD will help ensure that government-issued credentials complement rather than duplicate existing industry standards. NIST’s involvement, combined with input from private-sector leaders, will provide confidence that these credentials are relevant and accepted in both public and private sectors.

- Designed to facilitate rapid upskilling and reskilling in response to evolving cybersecurity needs: Given the rapidly changing landscape of cybersecurity threats, these micro-credentials will be regularly updated to reflect the most current technologies and skills, enabling professionals to remain agile and competitive.

3. Integrate more closely with existing federal initiatives. The Action Plan will be integrated with existing federal cybersecurity programs and initiatives, including:

- DHS’s Cybersecurity Talent Management System

- DoD’s Cyber Excepted Service

- NIST’s NICE framework

- NSF’s CyberCorps SFS program

- NSA’s GenCyber camps

This proposal emphasizes stronger integration with existing federal initiatives and greater collaboration with the private sector. Instead of creating entirely new credentialing standards, ONCD will explore opportunities to leverage widely adopted commercial certifications, such as those from Google, CompTIA, and other private-sector leaders. By selecting and promoting recognized commercial standards where applicable, ONCD can streamline efforts, avoiding duplication and ensuring the cybersecurity workforce development approach is aligned with what is already successful in industry. Where necessary, ONCD will work with NIST and industry professionals to ensure these commercial certifications meet federal needs, creating a more cohesive and efficient approach across both government and industry. This integrated public-private strategy will allow ONCD to offer a clear leadership structure and accountability mechanism while respecting and utilizing commercial technology and standards to address the scale and complexity of the cybersecurity workforce challenge.

The Cyber Workforce Action Plan will emphasize strong collaborations with the private sector, including the establishment of a Federal Cybersecurity Curriculum Advisory Board composed of experts from relevant federal agencies and leading private-sector companies. This board will work directly with universities to develop model curricula that incorporate the latest cybersecurity tools, techniques, and threat landscapes, ensuring that graduates are well-prepared for the specific challenges faced by both federal and private-sector cybersecurity professionals.

To provide hands-on learning opportunities, the Action Plan will include a new National Cyber Internship Program. Managed by the Department of Labor in partnership with DHS’s Cybersecurity and Infrastructure Security Agency (CISA) and leading technology companies, the program will match students with government agencies and private-sector companies. An online platform, modeled after successful programs like Hacking for Defense, will allow industry partners to propose real-world cybersecurity projects for student teams.

To incentivize industry participation, the General Services Administration (GSA) and DoD will update federal procurement guidelines to require companies bidding on cybersecurity-related contracts to certify that they offer internship or early-career opportunities for cybersecurity professionals. Additionally, CISA will launch a “Cybersecurity Employer of Excellence” certification program, which will be a prerequisite for companies bidding on certain cybersecurity-related federal contracts.

The Action Plan will also address the global nature of cybersecurity challenges by incorporating international cooperation elements. This includes adapting the plan for international use in strategically important regions, facilitating joint training programs and professional exchanges with allied nations, and promoting global standardization of cybersecurity education through collaboration with international standards organizations.

Ultimately, this effort aims to implement a national standard for cybersecurity competencies, providing clear, accessible pathways for career progression and enabling more agile and responsive workforce development in this critical field.

Recommendation 2. Implement an enhanced CyberCorps fellowship program.

ONCD should expand NSF’s CyberCorps Scholarship for Service program as an immediate, high-impact initiative. Key features of the expanded CyberCorps fellowship program include:

1. Comprehensive talent pipeline: While maintaining the current SFS focus on students, the enhanced CyberCorps will also target recent graduates and early-career professionals with 1–5 years of work experience. This expansion addresses immediate workforce needs while continuing to invest in future talent. The program will offer competitive salaries, benefits, and loan forgiveness options to attract top talent from both academic and private-sector backgrounds.