Carbon Capture in the Industrial Sector: Addressing Training, Startups, and Risk

This memo is part of the Day One Project Early Career Science Policy Accelerator, a joint initiative between the Federation of American Scientists & the National Science Policy Network.

Summary

Decarbonizing our energy system is a major priority for slowing and eventually reversing climate change. Federal policies supporting industrial-scale solutions for carbon capture, utilization, and sequestration (CCUS) have significantly decreased costs for large-scale technologies, yet these costs are still high enough to create considerable investment risks. Multiple companies and laboratories have developed smaller-scale, modular technologies to decrease the risk and cost of point-source carbon capture and storage (CCS). Additional federal support is needed to help these flexible, broadly implementable technologies meet the scope of necessary decarbonization in the highly complex industrial sector. Accordingly, the Department of Energy (DOE) should launch an innovation initiative comprising the following three pillars:

- Launch a vocational CCS training program to grow the pool of workers equipped with the skills to install, operate, and maintain CCS infrastructure.

- Create an accelerator to develop and commercialize modular CCS for the industrial sector.

- Create a private-facing CCS Innovation Connector (CIC) to increase stability and investment.

These activities will target underfunded areas and complement existing DOE policies for CCS technologies.

Challenge and Opportunity

Carbon dioxide (CO2) is the largest driver of human-induced climate change. Tackling the climate crisis requires the United States to significantly decarbonize; however, CCS and CCUS are still too costly. CCUS costs must drop to $100 per ton of CO2 captured to incentivize industry uptake. U.S. policymakers have paved the way for CCUS by funding breakthrough research, increasing demand for captured CO2 through market-shaping, improving technologies for point-source CCS, and building large-scale plants for direct-air capture (DAC). DAC has great promise for remediating CO2 in the atmosphere despite its higher cost (up to $600/ton of CO2 sequestered), so the Biden Administration and DOE have recently focused on DAC via policies such as the Carbon Negative Shot (CNS) and the 2021 Infrastructure Investment and Jobs Act (IIJA). By comparison, point-source CCS has been described as an “orphan technology” due to a recent lack of innovation.

Part of the problem is that few long-term mechanisms exist to make CCS economical. Industrial CO2 demand is rising, but without a set carbon price, emissions standard, or national carbon market, the cost of carbon capture technology still outweighs the value of that demand. The Biden Administration is increasing demand for captured carbon through government purchasing and market-shaping, but this process is slow and does not address the root problems of high technology and infrastructure costs. Targeting the issue from the innovation side therefore holds the most promise for improving industry uptake. DOE grants for technology research and demonstration are common, while public opinion and the 45Q tax credit have led to increased CCS funding from companies like ExxonMobil. These efforts have enabled large-scale projects like the $1 billion Petra Nova plant; however, concerns about carbon capture pipelines, the high cost and risk of the technology, and the years needed for permitting mean that large-scale projects are few and far between. Right now, there are only 26 operating CCUS plants globally. One solution, then, is to pursue smaller-scale technologies that fill this gap with lower-cost, more widespread CCS installations.

Modular CCS technologies, like those created by the startups Carbon Clean and Carbon Capture, have shown promise for industrial plants. Carbon Clean has serviced 44 facilities that have collectively captured over 1.4 million metric tons of carbon. Mitsubishi is also trialing smaller CCS plants based on successful larger facilities like Orca or Petra Nova. Increasing federal support for modular innovation with lower risks and installation costs could attract additional entrants to the CCS market. Most research focuses on breakthrough innovation to significantly decrease carbon capture costs. However, many existing CCS technologies, such as amine-based solvents or porous membranes, can also be improved and specialized to cut costs. In particular, modular CCS systems could effectively target the U.S. industrial sector, given that industrial subsectors such as steel or plastics manufacturing face less pressure to cut emissions and have fewer decarbonization options than oil and gas enterprises. The industrial sector accounts for 30% of U.S. greenhouse gas emissions through a variety of small point sources, which makes it a prime area for smaller-scale CCS technologies.

Plan of Action

DOE should launch an initiative designed to dramatically advance technological options for and use of small-scale, modular CCS in the United States. The program would comprise three major pillars, detailed in Table 1.

Pillar 1. Launch a vocational CCS training program to grow the pool of workers equipped with the skills to install, operate, and maintain CCS infrastructure.

DOE should leverage IIJA and the new DOE Office of Clean Energy Demonstrations (OCED) to create a vocational CCS training program. DOE has supported, and continues to support, a suite of regional carbon capture training programs. However, DOE’s 2012 program was geared toward scientists and workers already in the CCS field, and its 2022 program is specialized for 20–30 specific scientists and projects. DOE should build on this work with a new vocational CCS training program that will:

- Offer a free, 2- to 3-hour online course designed to raise private-sector awareness about CCS technologies, benefits, and prospects for future projects and employment. DOE should advertise this new program alongside existing grant programs and industry connections.

- Work with community colleges, four-year institutions, and workers’ unions to disseminate the online course and create aligned vocational training programs specifically for CCS jobs. In this effort, DOE should target states like Texas and Louisiana that have carbon-rich economies and low public approval of CCS.

- Partner with DOE-sponsored public university programs and private advocacy groups like ConservAmerica, the American Conservation Coalition, and the Center for Climate and Energy Solutions to advertise and update the course.

This educational program would be cost-effective: the online course would require little upkeep, and the vocational training programs could be largely developed with financial and technical support from external partners. Initial funding of $5 million would cover course development and organization of the vocational training programs.

Pillar 2. Create an accelerator for the development and commercialization of modular CCS technologies.

The DOE Office of Fossil Energy and Carbon Management (FECM) or OCED should continue to lead global innovation by creating the Modular CCS Innovation Program (MCIP). This accelerator would provide financial and technical support for U.S. research and development (R&D) startups working on smaller-scale, flexible CCS for industrial plants (e.g., bulk chemical, cement, and steel manufacturing plants). The MCIP should prioritize technology that can be implemented widely with lower installation and upkeep costs. For example, MCIP projects could focus on improving the resistance of amine-based systems to specialty chemicals, or on developing a modular system like Carbon Clean’s that different industrial plants can adopt. Projects like these have been proposed by various U.S. companies and laboratories, yet to date they have received comparatively little support from government loans or tax credits.

Figure 1. Proposed timeline of the MCIP accelerator for U.S. startups.

As illustrated in Figure 1, the MCIP would be launched with a Request for Proposals (RFP), awarding an initial $1 million each to the top 10 proposals received. In the first 100 days after receiving funding, each participating startup would be required to submit a finalized design and market analysis for its proposed product. The startup would then have an additional 200 days to produce a working prototype. Next, the startup would move into the implementation and commercialization stages, with the goal of having its product market-ready within the following year. Launching the MCIP could therefore be achieved with approximately $10 million in grant funding plus additional funding to cover administrative costs and overhead, amounts commensurate with recent DOE funding for large-scale CCUS projects. This funding could come from the $96 million recently allocated by DOE to advance carbon capture technology and/or from IIJA appropriations. Implementation funding could be secured in part or in whole from private investors or other external industry partners.
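The phase lengths and award amounts above can be laid out as a simple planning calculation. The sketch below is a hypothetical aid, not part of the proposal: it assumes all ten awards share one start date, treats the final market-readiness phase as 365 days, and excludes administrative costs and overhead. The names `Phase`, `mcip_schedule`, and `total_grant_funding` are illustrative only.

```python
from dataclasses import dataclass
from datetime import date, timedelta

# Phase lengths from the MCIP description. The final phase ("market-ready
# within the next year") is assumed here to last 365 days.
PHASES = [
    ("Finalized design and market analysis", 100),
    ("Working prototype", 200),
    ("Implementation and commercialization", 365),
]

GRANT_PER_AWARD = 1_000_000  # initial award per startup, in dollars
NUM_AWARDS = 10              # top 10 proposals funded


@dataclass
class Phase:
    name: str
    start: date
    end: date


def mcip_schedule(award_date: date) -> list[Phase]:
    """Lay out the MCIP phases back to back, starting on the award date."""
    phases = []
    start = award_date
    for name, days in PHASES:
        end = start + timedelta(days=days)
        phases.append(Phase(name, start, end))
        start = end
    return phases


def total_grant_funding() -> int:
    """Grant money only; administrative costs and overhead are excluded."""
    return GRANT_PER_AWARD * NUM_AWARDS


# Hypothetical award date, chosen only for illustration.
for phase in mcip_schedule(date(2023, 1, 1)):
    print(f"{phase.name}: {phase.start} to {phase.end}")
print(f"Total initial grant funding: ${total_grant_funding():,}")
```

Run back to back, the three phases span 665 days, or just under two years from award to a market-ready product.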

Pillar 3. Create a private-facing CCS Innovation Connector (CIC) to increase stability and investment.

The uncertainty and risk that discourage private investment in CCS must be addressed. Many oil and gas companies, such as ExxonMobil, have called for a more predictable policy landscape and increased funding for CCS projects. Creating a CCS Innovation Connector (CIC) within DOE’s OCED, modeled on a similar fund in the European Union, would decrease the perceived risks of CCS technologies emerging from MCIP. The CIC would work as follows: first, a company would submit a proposal relating to point-source carbon capture. DOE technical experts would perform an initial quality-check screening and share proposals that pass with relevant corporate investors. Once investor funding is secured, the project would begin. CIC staff (likely two to three full-time employees) would monitor projects to ensure they are meeting sponsor goals and offer technical assistance as necessary. In this way, the CIC would serve as a liaison between CCS project developers and industrial sponsors or investors, increasing investment in and implementation of nascent CCS technologies. While stability in the CCS sector will ultimately require policies such as larger carbon tax credits or a global carbon price, the CIC can help build support for such policies by connecting important American CCS projects with private funding.
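The CIC workflow described above is essentially a sequence of gated stages. The sketch below models it as a small state machine; the stage names, the `Proposal` class, and the assumption that a proposal failing DOE screening leaves the process are illustrative choices, not details specified in the memo.

```python
from enum import Enum, auto


class Stage(Enum):
    SUBMITTED = auto()   # company submits a point-source capture proposal
    SCREENED = auto()    # passed DOE's initial quality check
    REJECTED = auto()    # failed the quality check (assumed terminal)
    SHARED = auto()      # sent to relevant corporate investors
    FUNDED = auto()      # investor funding secured; project begins
    MONITORED = auto()   # CIC staff track sponsor goals and offer assistance


# Allowed moves between stages, in the order described in the memo.
TRANSITIONS = {
    Stage.SUBMITTED: {Stage.SCREENED, Stage.REJECTED},
    Stage.SCREENED: {Stage.SHARED},
    Stage.SHARED: {Stage.FUNDED},
    Stage.FUNDED: {Stage.MONITORED},
    Stage.REJECTED: set(),
    Stage.MONITORED: set(),
}


class Proposal:
    """Hypothetical record of one proposal moving through the CIC."""

    def __init__(self, title: str) -> None:
        self.title = title
        self.stage = Stage.SUBMITTED
        self.history = [Stage.SUBMITTED]

    def advance(self, new_stage: Stage) -> None:
        """Move to `new_stage`, refusing any step the workflow does not allow."""
        if new_stage not in TRANSITIONS[self.stage]:
            raise ValueError(
                f"cannot move from {self.stage.name} to {new_stage.name}"
            )
        self.stage = new_stage
        self.history.append(new_stage)


# Hypothetical proposal title, for illustration only.
proposal = Proposal("Modular amine capture unit for a cement plant")
for step in (Stage.SCREENED, Stage.SHARED, Stage.FUNDED, Stage.MONITORED):
    proposal.advance(step)
print([s.name for s in proposal.history])
```

Encoding the allowed transitions explicitly makes it easy to check, for example, that no project reaches investors without first passing DOE screening.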

Conclusion

CO2 emissions will continue to rise as U.S. energy demand grows. Many existing federal policies target these emissions through clean energy or DAC projects, but more can and should be done to incentivize U.S. innovation in point-source CCS. In particular, increased federal support is needed for small-scale and modular carbon capture technologies that target complex areas of U.S. industry and avoid the high costs and risks of large-scale infrastructure installations. This federal support should involve improving CCS education and training, accelerating the development and commercialization of modular CCS technologies for the industrial sector, and connecting startup CCS projects to private funding. Biden Administration policies — coupled with growing public and industrial support for climate action — make this the ideal time to expand the reach of our climate strategy into an “all of the above” solution that includes CCS as a core component.

An Earthshot for Clean Steel and Aluminum

Summary

The scale of mobilization and technological advancement required to avoid the worst effects of climate change has recently led U.S. politicians to invoke the need for a new, 21st-century “moonshot.” The Obama Administration launched the SunShot Initiative to dramatically reduce the cost of solar energy and, more recently, the Department of Energy (DOE) announced a series of “Earthshots” to drive down the cost of emerging climate solutions, such as long-duration energy storage.

While DOE’s Earthshots to date have been technology-specific and sector-agnostic, certain heavy industrial products, such as steel and concrete, are so emissions-intensive and fundamental to modern economies as to demand an Earthshot unto themselves. These products are ubiquitous in modern life and will face increasing demand as we deploy the clean energy infrastructure necessary to meet climate goals. In other words, there is no reasonable pathway to preserving a livable planet without developing clean steel and concrete production at mass scale. Yet the sociotechnical pathways to green industry, including the mix of technological solutions to replace high-temperature heat and process emissions, approaches to address local air pollutants, and economic development strategies, remain complex and untested. We urgently need to orient our climate innovation programs to the task.

Therefore, this memo proposes that DOE launch a Steel Shot to drive zero-emissions iron, steel, and aluminum production to cost-parity with traditional production within a decade. In other words, zero dollar difference for zero-emissions steel in ten years, or Zero for Zero in Ten.

Challenge and Opportunity

As part of the Biden-Harris Administration’s historic effort to quadruple federal funding for clean energy innovation, DOE has launched a series of “Earthshots” to dramatically slash the cost of emerging technologies and galvanize entrepreneurs and industry to home in on ambitious but achievable goals. DOE has announced Earthshots for carbon dioxide removal, long-duration storage, and clean hydrogen. New programs authorized by the Infrastructure Investment and Jobs Act, such as hydrogen demonstration hubs, provide tools to help DOE meet the ambitious cost and performance targets set in the Earthshots. The Earthshot technologies have promising applications for achieving net-zero emissions economy-wide, including in sectors that are challenging to decarbonize through clean electricity alone.

One such sector is heavy industry, a notoriously challenging and emissions-intensive sector that, despite contributing nearly one-third of U.S. emissions, has received relatively little focus from federal policymakers. Within the industrial sector, iron and steel, concrete, and chemicals production are the biggest sources of CO2 emissions, generating climate pollution not only through their heavy energy demands but also through their inherent processes (e.g., clinker production for cement).

Meanwhile, global demand for cleaner versions of these products – the basic building blocks of modern society – is on the rise. The International Energy Agency (IEA) estimates that CO2 emissions from iron and steel production alone will need to fall from 2.4 Gt to 0.2 Gt over the next three decades to meet a net-zero emissions target economy-wide, even as overall steel consumption increases to meet our needs for clean energy buildout. Accordingly, by 2050, global investment in clean energy and sustainable infrastructure materials will grow to $5 trillion per year. The United States is well-positioned to seize these economic opportunities, particularly in the metals industry, given its long history of metals production, skilled workforce, the initiation of talks to reach a carbon emissions-based steel and aluminum trade agreement, and strong labor and political coalitions in favor of restoring U.S. manufacturing leadership.
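To put the IEA figure in perspective, the sketch below computes the constant compound annual decline that would take global iron and steel emissions from 2.4 Gt to 0.2 Gt. It assumes “three decades” means exactly 30 years and a smooth exponential path; the IEA pathway itself need not follow a constant rate, so the result is only a rough average.

```python
def implied_annual_change(start: float, end: float, years: int) -> float:
    """Constant compound annual rate of change that turns `start` into `end`
    after `years` years (negative values mean a decline)."""
    if start <= 0 or end <= 0 or years <= 0:
        raise ValueError("start, end, and years must be positive")
    return (end / start) ** (1 / years) - 1


# Figures quoted above: 2.4 Gt CO2 now, 0.2 Gt in roughly 30 years.
rate = implied_annual_change(2.4, 0.2, 30)
print(f"Average decline of about {-rate:.1%} per year")
```

Under these assumptions the result is a cut of roughly 8% every year, sustained for three decades, even as overall steel consumption grows.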

“The metals industry is foundational to economic prosperity, energy infrastructure, and national security. It has a presence in all 50 states and directly employs more than a half million people. The metals industry also contributes 10% of national climate emissions.”

Department of Energy request for information on a new Clean Energy Manufacturing Institute, 2021

However, the exact solutions that will be deployed to decarbonize heavy industry remain to be seen. According to the IEA’s Net Zero Emissions by 2050 (NZE) Scenario, steel decarbonization could require a mix of carbon capture, hydrogen-based, and other innovative approaches, as well as material efficiency gains. It is likely that electrification (and, in the case of steel, increased global use of electric arc furnaces) will also play a significant role. While technology research funding should be increased, traditional “technology-push” efforts alone are unlikely to spur rapid and widespread adoption of a diverse array of solutions, particularly at low-margin, capital-intensive manufacturing facilities. This points to the potential for creative technology-neutral policies, such as clean procurement programs, which create early markets for low-emissions production practices without prescribing a particular technological pathway.

Therefore, as a complement to its Earthshots that “push” promising clean energy technologies down the cost curve, DOE should also consider adopting technology-neutral Earthshots for the industrial sector, even if some of the same solutions may be found in other Earthshots (e.g., hydrogen). It is important for DOE to be very disciplined in identifying one or two essential sectors, where the opportunity is large and strategic, to avoid creating overly balkanized sectoral strategies. In particular, DOE should start with the launch of a Steel Shot to buy down the cost of zero-emissions iron, steel, and aluminum production to parity with traditional production within a decade, while increasing overall production in the sector. In other words, zero dollar difference for zero-emissions steel in ten years, or Zero for Zero in Ten.

The Steel Shot can bring together applied research and demonstration programs, public-private partnerships, prizes, and government procurement, galvanizing public energy around a target that enables a wide variety of approaches to compete. These efforts will be synergistic with technology-specific Earthshots seeking dramatic cost declines on a similar timeline.

Plan of Action

Develop and launch a metals-focused Earthshot:

- Design and announce the Steel Shot in close partnership with industry, labor, and communities. DOE should hold a series of roundtables with industry, labor, and communities to define and calculate the gap between zero-emissions and traditional production costs, often called the “green premium,” for clean steel and aluminum. This should incorporate measures to achieve near-zero carbon dioxide emissions as well as deep reductions in other harmful air and water pollutants to achieve a “Zero for Zero in Ten” goal: zero dollar difference for zero-emissions steel within one decade. DOE should launch the Steel Shot with pledges from major steelmakers and steel purchasers, such as automakers.

- Calculate targets along the way to the decadal goal and define how success will be measured. After launching the new Earthshot, DOE should release a Request for Information (RFI) and use an initial Steel Shot Summit to compile projections for anticipated cost parity milestones along the way to the decadal target. DOE should plan to update assessments of the current “green premium” on a regular basis to ensure that research, development, and demonstration efforts are targeted at continued reductions in the cost of clean steel – not just improvements over the original baseline. To assess the emissions footprint of various steel production processes, DOE should work closely with the White House’s Buy Clean Task Force, which was tasked with developing recommendations for improving transparency and reporting around embodied emissions, particularly through environmental product declarations.

- Hold an annual Steel Shot Summit to bring together technologists, industry, and financiers to share solutions and develop projects. DOE should hold an annual Steel Shot event to highlight existing innovation efforts and connect stakeholders. This summit will build on existing Earthshot stakeholder gatherings, such as the Hydrogen Shot Summit and the Long Duration Storage Shot Summit.
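The “green premium” and milestone steps above reduce to simple arithmetic. The sketch below is illustrative only: it assumes the premium is expressed as a fraction of traditional production cost and that interim targets fall linearly to zero over ten years, whereas the memo leaves the actual milestones to be set through the RFI and Steel Shot Summit. The function names and the cost figures in the example are hypothetical.

```python
def green_premium(clean_cost: float, traditional_cost: float) -> float:
    """Cost gap between zero-emissions and traditional production,
    expressed as a fraction of the traditional cost."""
    if traditional_cost <= 0:
        raise ValueError("traditional_cost must be positive")
    return (clean_cost - traditional_cost) / traditional_cost


def linear_milestones(baseline_premium: float, years: int = 10) -> list[float]:
    """Annual target premiums, assumed to fall linearly from the baseline
    in year 0 to zero in the final year ("Zero for Zero in Ten")."""
    return [baseline_premium * (1 - year / years) for year in range(years + 1)]


def on_track(current_premium: float, year: int, milestones: list[float]) -> bool:
    """Compare the most recently measured premium (not the original
    baseline) against the target for that year."""
    return current_premium <= milestones[year]


# Hypothetical cost index: clean steel at 125 vs. traditional steel at 100.
baseline = green_premium(125.0, 100.0)  # 0.25, i.e., a 25% premium
targets = linear_milestones(baseline)
print([round(t, 3) for t in targets])
print(on_track(0.19, 2, targets))
```

Checking the latest measured premium against each year’s target reflects the memo’s call to update assessments regularly rather than only measuring improvement over the original baseline; a real glide path would likely be nonlinear.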

Invest in domestic clean steelmaking capacity:

- Stand up the seventh Clean Energy Manufacturing Institute with funding for cooperative applied R&D and a demonstration facility. Last year, DOE’s Advanced Manufacturing Office (AMO) put out a Request for Information on the establishment of a seventh Manufacturing USA institute on industrial decarbonization. The RFI had a particular focus on metals manufacturing. In 2022, DOE should formally issue a funding opportunity for the institute, with a requirement that the institute conduct cooperative R&D in industrial decarbonization practices and operate a manufacturing demonstration and workforce development facility for low- and zero-emissions manufacturing processes.

- Launch an annual competition for entrepreneurs and companies demonstrating low- and zero-emissions processes that reduce the green premium. Modeled after the SunShot’s American Made Solar Prize, AMO could issue a series of smaller-scale prize competitions targeted at challenges for clean metals. Prizes are particularly effective for challenges where the desired end target is defined and clearly measurable, but the optimal solution to achieve this target is not yet known. The variety of potential solutions for steel decarbonization makes the sector an excellent candidate for a prize program with multiple rounds and awardees. DOE could consider subprograms within the Steel Shot prize that align with reducing key sources of emissions. EPA identifies three such sources: (1) process emissions, (2) direct fuel combustion, and (3) indirect emissions from electricity consumption.

- Pass legislation to directly invest in deployment of commercial-scale solutions. While a prize program can promote prototype and pilot-stage technologies, real-world demonstration and deployment will be needed to buy down the cost of clean steel. These investments should pursue a range of decarbonization opportunities across blast furnace-basic oxygen furnace (BF-BOF) plants, electric arc furnaces, and emerging direct reduction approaches. They should also ensure that federal funds go to projects with strong labor standards, building on a long legacy of quality U.S. steelmaking jobs. The original American Jobs Plan released by President Biden proposed ten “pioneer facilities” to demonstrate clean industrial processes, including steel. Several proposals included in House-passed bills, such as the Build Back Better Act and the America COMPETES Act, would provide new authorities to DOE to fund commercial-scale retrofits and first-of-a-kind facilities employing clean steelmaking technologies. For instance, an amendment to America COMPETES would expand the industrial decarbonization RD&D program authorized in the Energy Act of 2020 to include “commercial deployment projects.” Should these provisions pass, they can be leveraged to rapidly retrofit facilities and achieve the goals of the Steel Shot.

Create demand for “green steel” through market pull mechanisms:

- Match innovators and steelmakers with private purchasers to generate demand for clean metals. Demand-pull incentives can reduce risk for U.S. steelmakers and move the innovations that emerge from DOE R&D and prize programs into commercial adoption, which is critical for additional “learning-by-doing” at scale. DOE can work with domestic industries that are major purchasers of steel to develop sector-based advance market commitments as part of the Earthshot launch. For instance, DOE should leverage its relationships with major automakers with ambitious climate goals, such as Ford and GM, to spur auto sector commitments to purchase clean steel. In developing these advance market commitments, DOE can work with the First Movers Coalition, a consortium of private sector buyers of innovative, clean products launched by the State Department and the World Economic Forum in Glasgow in 2021. The coalition included both steel and aluminum in its initial round of target products.

- Use federal procurement power to favor “green steel” for government-funded projects, including infrastructure and defense. AMO and DOE’s Federal Energy Management Program should advise the General Services Administration, Department of Defense, Department of Transportation, and other major federal procurers as they implement federal sustainability plans and participate in procurement working groups, including the Buy Clean Task Force announced in December 2021. For instance, DOE can use the Earthshot to provide recommendations on reasonable costs for steel included in a Buy Clean program, and provide technical assistance to help innovators access federal clean procurement efforts.

The lower technology prices targeted by the Hydrogen Earthshot and the Carbon Negative Shot are necessary but not sufficient to guarantee that these technologies are deployed in the highest-emitting sectors, such as steel, cement, and chemicals. The right combination of approaches to achieve price reduction remains uncertain and can vary by plant, location, process, and product, as noted in a recent McKinsey study on decarbonization challenges across the industrial sector. Additionally, there is a high upfront cost to deploying novel solutions, and private financiers are reluctant to take a risk on untested technologies. Nonetheless, to avoid creating overly balkanized sectoral strategies, it will be important for DOE to be very disciplined in identifying one or two essential sectors, such as metals, where the opportunity is large and strategic.

These products are ubiquitous and increasingly crucial for deploying the clean energy infrastructure necessary to reach net-zero emissions. The United States of America has a long history of metals production, a skilled workforce, and strong labor and political coalitions in favor of restoring U.S. manufacturing leadership. Additionally, carbon-intensive steel from China has become a growing concern for U.S. manufacturers and policymakers; China produces 56% of global crude steel, followed by India (6%), Japan (5%), and then the U.S. (4%). The U.S. already maintains a strong competitive advantage in clean steel, and the technologies needed to double down and fully decarbonize steel are close to commercialization, but still require government support to achieve cost parity.

U.S. steel production is already less polluting than many foreign sources, but that typically comes with additional costs. Reducing the “green premium” will help keep U.S. metal producers competitive while preparing them for the needs of buyers, who are increasingly seeking out green steel products. End users such as Volkswagen are aiming for zero emissions across their entire value chains by 2050, while Mercedes-Benz and Volvo have already begun sourcing low-emissions steel for new autos. Meanwhile, the EU is preparing to implement a carbon border adjustment mechanism that could raise prices for U.S. steel and aluminum products sold in Europe. The ramifications of the carbon border adjustment are already being seen in steel agreements, such as the recent U.S.-EU announcement to drop punitive tariffs on each other’s steel and aluminum exports and to begin talks on a carbon-based trade agreement.

Breakthrough Energy estimated the “green premium” for steel produced using carbon capture at approximately 16–29% over “normally” produced steel. Because a variety of processes could be used to reduce emissions, each contributing differently to the “green premium,” there may not be a single number that captures current costs. However, wherever possible, we advocate using real-world data on “green” steel to estimate how close DOE is to achieving its benchmark targets relative to “traditional” steel.

Leveraging Department of Energy Authorities and Assets to Strengthen the U.S. Clean Energy Manufacturing Base

Summary

The Biden-Harris Administration has made revitalization of U.S. manufacturing a key pillar of its economic and climate strategies. On the campaign trail, President Biden pledged to do away with “invent it here, make it there,” alluding to the long-standing trend of outsourcing manufacturing capacity for critical technologies, ranging from semiconductors to solar panels, that emerged from U.S. government labs and funding. As China and other countries make major bets on the clean energy industries of the future, it has become clear that climate action and U.S. manufacturing competitiveness are deeply intertwined and require a coordinated strategy.

Additional legislative action, such as proposals in the Build Back Better Act that passed the House in 2021, will be necessary to fully execute a comprehensive manufacturing agenda that includes clean energy and industrial products, like low-carbon cement and steel. However, the Department of Energy (DOE) can leverage existing authorities and assets to make substantial progress today to strengthen the clean energy manufacturing base.

This memo recommends two sets of DOE actions to secure domestic manufacturing of clean technologies:

- Foundational steps to successfully implement the new Determination of Exceptional Circumstances (DEC) issued in 2021 under the Bayh-Dole Act to promote domestic manufacturing of clean energy technologies.

- Complementary U.S.-based manufacturing investments to amplify the DEC’s impact and maximize the overall domestic benefits of DOE’s clean energy innovation programs.

Challenge and Opportunity

Recent years have been marked by growing societal inequality, a pandemic, and climate change-driven extreme weather. These factors have exposed the weaknesses of essential supply chains and our nation’s legacy energy system.

Meanwhile, once a reliable source of supply chain security and economic mobility, U.S. manufacturing is at a crossroads. Since the early 2000s, U.S. manufacturing productivity has stagnated and five million jobs have been lost. While countries like Germany and South Korea have been doubling down on industrial innovation — in ways that have yielded a strong manufacturing job recovery since the Great Recession — the United States has only recently begun to recognize domestic manufacturing as a crucial part of a holistic innovation ecosystem. Our nation’s longstanding, myopic focus on basic technological research and development (R&D) has contributed to the American share of global manufacturing declining by 10 percentage points, and left U.S. manufacturers unprepared to scale up new innovations and compete in critical sectors long-term.

The Biden-Harris administration has sought to reverse these trends with a new industrial strategy for the 21st century, one that includes a focus on the industries that will enable us to tackle our most pressing global challenge and opportunity: climate change. This strategy recognizes that the United States has yet to foster a robust manufacturing base for many of the key products, ranging from solar modules to lithium-ion batteries to low-carbon steel, that will dominate a clean energy economy, despite having funded a large share of the early and applied research into underlying technologies. The strategy also recognizes that as clean energy technologies become increasingly foreign-produced, risks increase for U.S. climate action, national security, and our ability to capture the economic benefits of the clean energy transition.

The U.S. Department of Energy (DOE) has a central role to play in executing the administration’s strategy. The Obama administration dramatically ramped up funding for DOE’s Advanced Manufacturing Office (AMO) and launched the Manufacturing USA network, which now includes seven DOE-sponsored institutes that focus on cross-cutting research priorities in collaboration with manufacturers. In 2021, DOE issued a Determination of Exceptional Circumstances (DEC) under the Bayh-Dole Act of 1980 to ensure that federally funded technologies reach the market and deliver benefits to American taxpayers through substantial domestic manufacturing. The DEC cites global competition and supply chain security issues around clean energy manufacturing as justification for raising manufacturing requirements from typical Bayh-Dole “U.S. Preference” rules to stronger “U.S. Competitiveness” rules across DOE’s entire science and energy portfolio (i.e., programs overseen by the Under Secretary for Science and Innovation (S4)). This change requires DOE-funded subject inventions to be substantially manufactured in the United States for all global use and sales (not just U.S. sales) and expands applicability of the manufacturing requirement to the patent recipient as well as to all assignees and licensees. Notably, the DEC does allow recipients or licensees to apply for waivers or modifications if they can demonstrate that it is too challenging to develop a U.S. supply chain for a particular product or technology.
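The difference between the two manufacturing regimes described above can be expressed as a rule check. The following is a deliberately simplified model based only on this memo’s summary, plus the assumption that the default U.S. Preference rule covers exclusive licensees and U.S. sales only; it is not the statutory or contractual text, and all class and function names are hypothetical.

```python
from dataclasses import dataclass
from enum import Enum


class Regime(Enum):
    US_PREFERENCE = "U.S. Preference"
    US_COMPETITIVENESS = "U.S. Competitiveness"


class Role(Enum):
    RECIPIENT = "recipient"
    ASSIGNEE = "assignee"
    EXCLUSIVE_LICENSEE = "exclusive licensee"
    NONEXCLUSIVE_LICENSEE = "non-exclusive licensee"


@dataclass
class Sale:
    market: str                    # where the product is used or sold, e.g., "US"
    made_substantially_in_us: bool


def requirement_applies(regime: Regime, role: Role, market: str) -> bool:
    """Whether the domestic-manufacturing requirement covers this party and market."""
    if regime is Regime.US_COMPETITIVENESS:
        # DEC as summarized above: every party, all global use and sales.
        return True
    # Simplified U.S. Preference assumption: exclusive licensees, U.S. market only.
    return role is Role.EXCLUSIVE_LICENSEE and market == "US"


def is_compliant(regime: Regime, role: Role, sales: list[Sale],
                 waiver_granted: bool = False) -> bool:
    """Simplification: treats any granted waiver or modification as full relief."""
    if waiver_granted:
        return True
    return all(
        sale.made_substantially_in_us
        for sale in sales
        if requirement_applies(regime, role, sale.market)
    )


# A licensee that makes U.S.-bound units domestically but EU-bound units abroad.
sales = [Sale("US", True), Sale("EU", False)]
print(is_compliant(Regime.US_PREFERENCE, Role.EXCLUSIVE_LICENSEE, sales))
print(is_compliant(Regime.US_COMPETITIVENESS, Role.EXCLUSIVE_LICENSEE, sales))
```

In this toy case the same licensee passes under the simplified U.S. Preference rule but fails under U.S. Competitiveness, because offshore production for the EU market now counts.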

The DEC is designed to maximize return on investment for taxpayer-funded innovation: the same goal that drives all technology transfer and commercialization efforts. However, to successfully strengthen U.S. manufacturing, create quality jobs, and promote global competitiveness and national security, DOE will need to pilot new evaluation processes and data reporting frameworks to better assess downstream impacts of the 2021 DEC and similar policies, and to ensure they are implemented in a manner that strengthens manufacturing without slowing technology transfer. It is essential that DOE develop an evidence base to assess a common critique of the DEC: that it reduces companies’ and investors’ appetite for entering into funding agreements. Continuous evaluation can help DOE understand how well-founded these concerns are.

Yet, the new DEC rules and requirements alone cannot overcome the structural barriers to domestic commercialization that clean energy companies face today. DOE will also need to systematically build domestic manufacturing efforts into basic and applied R&D, demonstration projects, and cross-cutting initiatives. DOE should also pursue complementary investments to ensure that licensees of federally funded clean energy technologies are able and eager to manufacture in the United States. Under existing authorities, such efforts can include:

- Elevating and empowering AMO and Manufacturing USA to build a competitive U.S. workforce and regional infrastructure for clean energy technologies.

- Directly investing in domestic manufacturing capacity through DOE’s Loan Programs Office and through new authorities granted under the Bipartisan Infrastructure Law.

- Creating markets through targeted clean energy procurement.

- Coordinating with place-based and justice strategies.

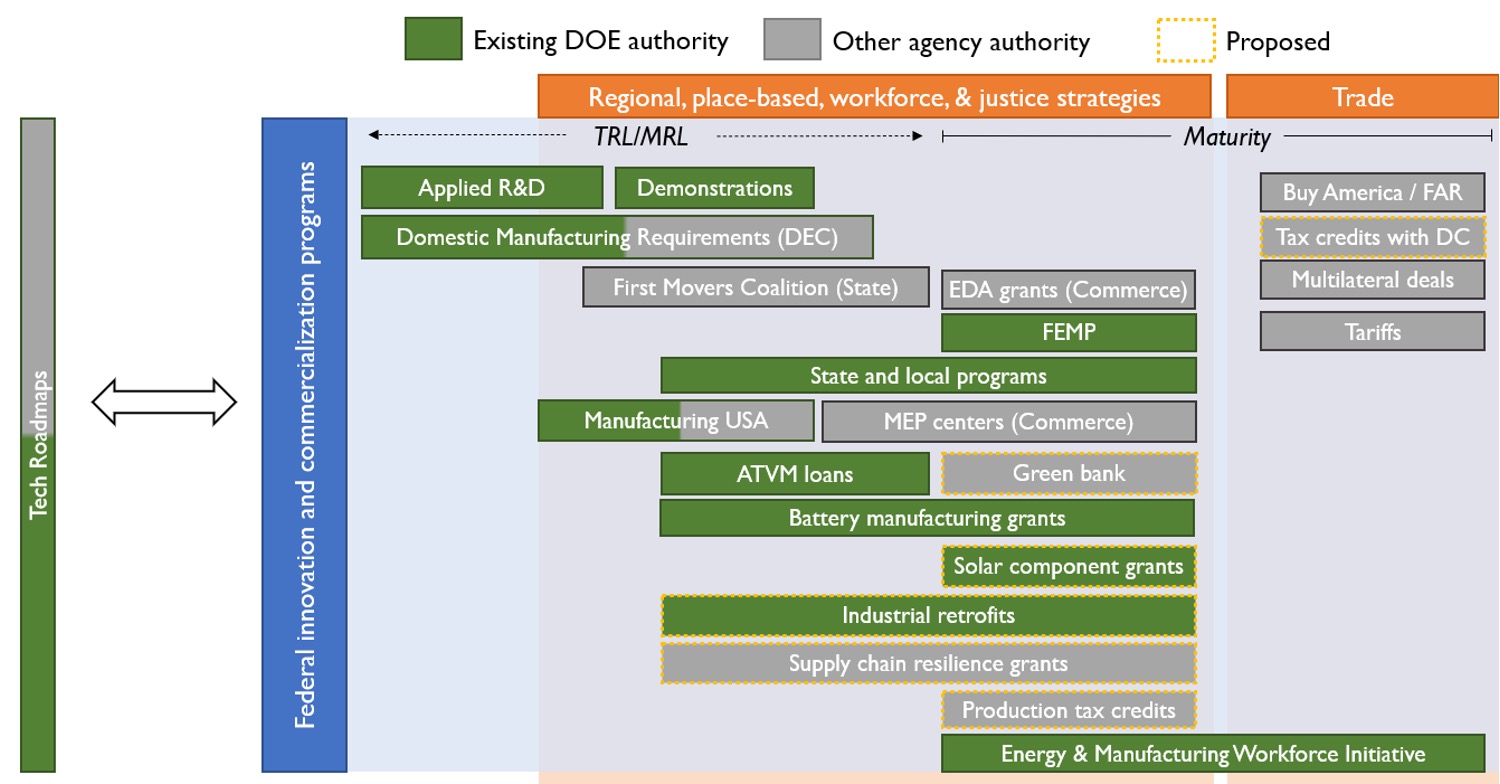

These complementary efforts will enable DOE to generate more productive outcomes from its 2021 DEC, reduce the need for waivers, and strengthen the U.S. clean manufacturing base. In other words, rather than merely slowing the flow of innovation overseas without presenting an alternative, they provide a domestic outlet for that flow. Figure 1 provides an illustration of the federal ecosystem of programs, DOE and otherwise, that complement the mission of the DEC.


Figure 1: Programs are arranged roughly according to their role in the innovation cycle. TRL and MRL refer to technology readiness level and manufacturing readiness level, respectively. Proposed programs, highlighted with a dotted yellow border, are found either in the Build Back Better Act passed by the House in 2021 or in the Bipartisan Innovation Bill (USICA/America COMPETES).

Plan of Action

While further Congressional action will be necessary to fully execute a long-term national clean manufacturing strategy and ramp up domestic capacity in critical sectors, DOE can meaningfully advance such a strategy now through both long-standing authorities and recently authorized programs. The following plan of action consists of (1) foundational steps to successfully implement the DEC, and (2) complementary efforts to ensure that licensees of federally funded clean energy technologies are able and eager to manufacture in the United States. In tandem, these recommendations can maximize impact and benefits of the DEC for American companies, workers, and citizens.

Part 1: DEC Implementation

The following action items, many of which are already underway, are focused on basic DEC implementation.

- Develop and socialize a draft reporting and data collection framework. The Office of the Under Secretary for Science and Innovation should work closely with DOE’s General Counsel and individual program offices to develop a reporting and data collection framework for the DEC. Key metrics for the framework should be informed by the Science and Innovation (S4) mission and should capture broader societal benefits (e.g., job creation). DOE should target completion of a draft framework by the end of 2022, with plans to socialize, pilot, and finalize the framework in consultation with the S4 programs and key external stakeholders.

- Identify pilots for the new data reporting framework in up to five Science and Innovation programs. Since the DEC was issued, Science and Innovation (S4) funding opportunity announcements (FOAs) have been required to include a section on “U.S. Manufacturing Commitments” that states the requirements of the U.S. Competitiveness Provision. FOAs also include a section on “Subject Invention Utilization Reporting,” though the reporting listed is subject to program discretion. By early 2023, DOE should identify up to five program offices in which to pilot the data reporting framework referenced above. The Office of the Under Secretary for Science and Innovation (S4) should also consider coordinating with the Office of the Under Secretary for Infrastructure (S3) to pilot the framework in the Office of Clean Energy Demonstrations. Pilot programs should build in opportunities for external feedback and continuous evaluation to ensure that the reporting framework is adequately capturing the effects of the DEC.

- Set up a DEC implementation task force. The DEC requires quarterly reporting from program offices to the Under Secretary for Science and Innovation. The Under Secretary’s office should convene a task force — comprising representatives from the Office of Technology Transitions (OTT), the General Counsel’s office (GC), the Office of Manufacturing and Energy Supply Chains, and each of DOE’s major R&D programs — to track these reports. The task force should meet at least quarterly, and its findings should be transmitted to the DOE GC to monitor DEC implementation, troubleshoot compliance issues, and identify challenges for funding recipients and other stakeholders. From an administrative standpoint, these activities could be conducted under the Technology Transfer Policy Board.

- Incorporate domestic manufacturing objectives into all technology-specific roadmaps and initiatives, including the Earthshots. DOE and the National Labs regularly track the development and future potential of key clean energy technologies through analysis (e.g., the National Renewable Energy Laboratory (NREL)’s Future Studies). DOE also has developed high-profile cross-cutting initiatives, such as the Grid Modernization Initiative and the “Earthshots” initiative series, aimed at achieving bold technology targets. OTT, in concert with the Office of Policy and individual program offices, should incorporate domestic manufacturing into all technology-specific roadmaps and cross-cutting initiatives. Specifically, technology-specific roadmaps and initiatives should (i) assess the current state of U.S. manufacturing for that technology, and (ii) identify key steps needed to promote robust U.S. manufacturing capabilities for that technology. ARPA-E (which has traditionally included manufacturing in its technology targets and been subject to a DEC since 2013) and the supply chain recommendations in the Energy Storage Grand Challenge Roadmap may provide helpful models.

- Support the White House and NIST on the iEdison rebuild. The National Institute of Standards and Technology (NIST) is currently revamping the iEdison tool for reporting federally funded inventions. The coincident timing of this effort with the DOE’s DEC creates an opportunity to align data and waiver processes across government. DOE should work closely with NIST to understand new features being developed in the iEdison rebuild, offer input on manufacturing data collection, and align DOE reporting requirements where appropriate. Data reported through iEdison will help DOE evaluate the success of the DEC and identify areas in need of support. For instance, if iEdison data shows that a certain component for batteries becomes an increasing source of DEC waivers, DOE and the Department of Commerce may respond with targeted actions to remedy this gap in the domestic battery supply chain. Under the pending Bipartisan Innovation Bill, the Department of Commerce could receive funding for a new supply-chain monitoring program to support these efforts, as well as $45 billion in grants and loans to finance supply chain resilience. iEdison data could also be used to justify Congressional approval of new DOE authorities to strengthen domestic manufacturing.
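The battery-component example above amounts to a simple trend check over waiver records. The minimal Python sketch below illustrates one way such a check might work. The record layout (fiscal year, technology, component) and the sample data are invented for illustration and do not reflect iEdison’s actual fields or any real waiver counts. A strictly increasing yearly count is used as a deliberately crude stand-in for “an increasing source of waivers”; a real analysis would also need to normalize for changes in the number of awards.

```python
from collections import Counter

def rising_components(records, window):
    """Flag (technology, component) pairs whose yearly waiver count strictly
    increases across every year in `window`. Years with no waivers count as 0.

    `records` is a list of hypothetical (fiscal_year, technology, component)
    tuples; this layout is an assumption, not iEdison's actual schema.
    """
    # Counter returns 0 for missing keys, so gaps in the data become zeros.
    counts = Counter((tech, comp, year) for year, tech, comp in records)
    pairs = sorted({(tech, comp) for _, tech, comp in records})
    flagged = []
    for tech, comp in pairs:
        series = [counts[(tech, comp, year)] for year in window]
        if all(a < b for a, b in zip(series, series[1:])):
            flagged.append((tech, comp))
    return flagged

# Made-up sample data for illustration only (not real waiver counts).
records = (
    [(2022, "battery", "cathode")] * 1
    + [(2023, "battery", "cathode")] * 2
    + [(2024, "battery", "cathode")] * 3
    + [(2022, "solar", "inverter")] * 2
    + [(2023, "solar", "inverter")] * 1
    + [(2024, "solar", "inverter")] * 2
)

print(rising_components(records, range(2022, 2025)))  # [('battery', 'cathode')]
```

In this toy data, the battery component’s waivers grow every year and are flagged, while the solar component’s count dips and recovers and is not.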

Part 2: Complementary Investments

Investments to support the domestic manufacturing sector and regional innovation infrastructure must be pursued in tandem with the DEC to translate into enhanced clean manufacturing competitiveness. The following actions are intended to reduce the need for waivers, shore up supply chains, and expand opportunities for domestic manufacturing:

- Elevate and empower DOE’s AMO to serve as the hub of U.S. clean manufacturing strategy. Under the Obama administration, recognition that the U.S. was underinvesting in manufacturing innovation led to a dramatic expansion of the Advanced Manufacturing Office (AMO) and the launch of the Manufacturing USA institutes, modeled on Germany’s Fraunhofer institutes. DOE has begun to add a seventh institute focused on industrial decarbonization to the six institutes it already manages, and requested funding to launch eighth and ninth institutes in FY22. While both AMO and Manufacturing USA have proven successful through an array of industry-university-government partnerships, technical assistance, and cooperative R&D, neither is fully empowered to serve as a hub for U.S. clean manufacturing strategy. AMO currently faces bifurcated demands to implement advanced manufacturing practices (cross-sector) and promote competitiveness in emerging clean industries (sector-specific). The Manufacturing USA institutes have also been limited by their narrow, often siloed mandates and the expectation of financial independence after five years; under the Trump administration, DOE sought to wind down the institutes rather than pursue additional funding. DOE should reinvest in establishing AMO and the institutes as the “tip of the spear” for a domestic clean manufacturing strategy and seek to empower them in four ways:

- Institutional structure. AMO should be elevated to the Deputy Under Secretary or Assistant Secretary level, as recommended by recent DOE Chief of Staff Tarak Shah in a 2019 report, the House Select Committee on the Climate Crisis, the National Academies, and many others. Elevation, combined with enhanced funding, would give DOE the authority and resources to pursue a more holistic clean manufacturing strategy, commensurate with the scale of the climate and industrial challenges we face.

- Mission focus. It is critical that AMO continue to work on both advanced manufacturing practices (cross-sector) and competitiveness in emerging clean energy industries (sector-specific), but this bifurcated mission does present challenges. As alluded to in a January 2022 RFI, AMO is attempting to pursue both goals in tandem. With the structural elevation proposed above, there is an opportunity for AMO’s clean energy manufacturing mission to be clarified, with a subset of staff and programs specifically dedicated to competitiveness in these emerging sectors.

- Regional infrastructure and workforce development. AMO’s authority already extends beyond applied R&D, providing technical assistance, workforce development, and more. The Manufacturing USA institutes provide regional support for early prototyping efforts, officially operating up to Technology Readiness Level (TRL) 7. However, these programs should be granted greater authority and budget to foster regional demonstration and workforce development centers for low-carbon and critical clean energy manufacturing technologies. These activities create the infrastructure for constant learning that is necessary to entice manufacturers to remain in the U.S. and reduce the need for waivers, even when foreign manufacturers present cost advantages. To start, DOE should establish a regional demonstration and workforce development facility operated by the new clean manufacturing institute for industrial decarbonization (similar in nature to Oak Ridge’s Manufacturing Demonstration Facility (MDF)) to accelerate domestic technology transfer of clean manufacturing practices, and consider additional demonstration and workforce development facilities at future institutes.

- Scale. Despite accounting for roughly one-third of U.S. greenhouse gas emissions and 11% of GDP, manufacturing receives less than 10% of DOE energy innovation funding. Additionally, the Manufacturing USA institutes have roughly one-fourth of the budget, one-fifth of the institutes, and one-hundredth of the employees of the Fraunhofer institutes in Germany, a much smaller country that has nevertheless managed to outpace the United States in manufacturing output. To align with climate targets and the administration’s goal to quadruple innovation budgets, DOE manufacturing RD&D would need to grow to roughly $2 billion by 2025.

- Deploy at least $20 billion in grants, loans, and loan guarantees to support solar, wind, battery, and electric vehicle manufacturing and recycling by 2027. Not only is financial support to expand domestic clean manufacturing capacity critical for energy security, innovation clusters, and economic development, but it can also alleviate the barriers for innovators to manufacture in the U.S. and reduce the need for DEC waivers. Existing DOE authorities include the $7 billion for battery manufacturing provided in the Bipartisan Infrastructure Law and $17 billion in existing direct loan authority at the Loan Programs Office’s Advanced Technology Vehicles Manufacturing unit. DOE’s technology roadmaps can help these programs to be coordinated with earlier stage RD&D efforts by anticipating emerging manufacturing needs, so that S4 funding recipients who are subject to U.S. manufacturing requirements have more confidence in their ability to find ample domestic manufacturing capacity. The same entities that receive R&D funds also should be eligible for follow-on manufacturing incentives. The pending Bipartisan Innovation Bill and Build Back Better Act may also provide $3 billion for solar manufacturing, renewal of the 48C advanced manufacturing investment tax credit, and a new advanced manufacturing production tax credit. While these funding mechanisms have already been identified in response to the battery supply chain review, they should be applied beyond the battery sector.

- Leverage DOE procurement authority and state block grant programs to drive demand for American-made clean energy. Procurement is a key demand-pull lever in any coordinated industrial strategy, and can reinforce the DEC by assuring potential applicants that American-made clean energy products will be rewarded in government purchasing. This administration’s Executive Order (EO) on federal sustainability calls for 100% carbon-free electricity by 2030 and “net-zero emissions from overall federal operations by 2050, including a 65 percent emissions reduction by 2030.” The Federal Energy Management Program (FEMP), noted in the EO and the federal government’s accompanying sustainability plan as one of the hubs of clean-energy procurement expertise, will play a key role in providing technical support and progress measurement for all government agencies as they pursue these goals, including by helping agencies to identify U.S. suppliers. For instance, in response to the battery supply chain review, FEMP was tasked with conducting a diagnostic on stationary battery storage at federal sites. DOE also delivers substantial funding and technical assistance to help states and localities deploy clean energy through the Weatherization Assistance Program and State Energy Program. These programs are now consolidated under a new Under Secretary for Infrastructure. DOE should build on these efforts by leveraging its multibillion-dollar state block grant and competitive financial assistance programs, including the recently authorized State Manufacturing Leadership grants, to help states and communities plan to strengthen local and regional manufacturing capacity and make progress on sustainability targets (see Updating the State Energy Program to Promote Regional Manufacturing and Economic Revitalization).

- Align the above activities with DOE’s place-based strategies for advancing environmental justice and supporting fossil fuel-centered communities in their clean energy transition. Throughout U.S. history, manufacturing has fostered rich local cultures and strong regional economies. Domestic manufacturing of clean energy technologies and clean industrial materials represents a major opportunity for economic revitalization, job growth, and pollution reduction. DOE also has a major role in executing President Biden’s environmental justice agenda, including as chair of the Interagency Working Group (IWG) on Coal and Power Plant Communities. As noted in the IWG’s initial report, investments in manufacturing have the potential to provide pollution relief to frontline communities and also to retain workers from high-carbon industries in the U.S. industrial workforce. Indeed, this is one reason why NIST’s Manufacturing Extension Partnerships played a significant role in the POWER Initiative under the Obama administration. The domestic clean energy manufacturing investments detailed above (including expansion of AMO, new grant programs, and procurement) should all be executed in close coordination with DOE’s place-based strategies to deliver benefits for environmental justice and legacy energy communities and to foster regional cultures of innovation. Finally, DOE should coordinate with other regional development efforts across government, such as the EDA’s Build Back Better Regional Challenge and USDA’s Rural Development programs.

Deploy a National Network of Air-Pollution and CO2 Sensors in 300 American Cities by 2030

Summary

The Biden-Harris Administration should deploy a national network of low-cost, co-located, real-time greenhouse gas (GHG) and air-pollution emission sensors in 300 American cities by 2030 to help communities address environmental inequities, combat global warming, and improve public health. Urban areas contribute more than 70% of total GHG emissions. Aerosols and other byproducts of fossil-fuel combustion, the major drivers of poor air quality, are emitted in huge quantities alongside those GHGs. A “300 by ’30” initiative establishing a national network of local, ground-level sensors will provide precise and customized information to drive critical climate and air-quality decisions and benefit neighborhoods, schools, and businesses in communities across the nation. Dense, ground-level sensor networks located in community neighborhoods also provide a resource that educators can leverage to engage students in co-created “real-time and actionable science,” helping the next generation see how science and technology can contribute to solving our country’s most challenging issues.

Challenge and Opportunity

U.S. cities contribute 70% of our nation’s GHG emissions and have more concentrated air pollutants that harm neighborhoods and communities unequally. Climate change profoundly impacts human health and wellbeing through drought, wildfire, and extreme-weather events, among many other pathways. Microscopic air pollutants, which penetrate the body’s respiratory and circulatory systems, play a significant role in heart disease, stroke, lung cancer, and asthma. These diseases collectively cost Americans $800 billion annually in medical bills and result in more than 100,000 Americans dying prematurely each year. Health impacts are also felt more acutely in certain communities. Some racial groups and poorer households, especially those located near highways and industry, face higher exposure to harmful air pollutants than others, deepening health inequities across American society.

GHG emissions and ground-level air pollution are both negative products of fossil-fuel combustion and are inextricably linked. But our nation lacks a comprehensive approach to measure, monitor, and mitigate these drivers of climate change and air pollution. Furthermore, key indicators of air quality — such as ground-level pollutant measurements — are not typically considered alongside GHG measurements in governmental attempts to regulate emissions. A coordinated and data-driven approach across government is needed to drive policies that are ambitious enough to simultaneously and equitably tackle both the climate crisis and worsening air-quality inequities in the United States.

Increasingly affordable technologies now enable ground-level, real-time, and neighborhood-scale observations of GHG and air-pollutant levels. These data support cost-effective mapping of carbon dioxide (CO2) and air-quality-related emissions (such as PM2.5, ozone, CO, and nitrogen oxides) to aid in forecasting local air quality, conducting GHG inventories, detecting pollution hotspots, and assessing the effectiveness of policies designed to reduce air pollution and GHG emissions. The result can be more successful, targeted strategies to reduce climate impacts, improve human health, and ensure environmental equity.

Pilot projects are proving the value of hyper-local GHG and air-quality sensor networks. Multiple universities, philanthropies, and nongovernmental organizations (NGOs) have launched pilot projects deploying local, real-time GHG and air-pollutant sensors in cities including Los Angeles, New York City, Houston, TX, Providence, RI, and cities in the San Francisco Bay Area. In the San Francisco Bay Area, for instance, a dense network of 70 sensors enabled researchers to closely investigate how movement patterns changed as a result of the COVID-19 pandemic. Observations from local air-quality sensors could be used to evaluate policies aimed at increasing electric-vehicle deployment, to demonstrate how CO and NOx emissions from vehicles change day to day, and to prove that emissions from heavy-duty trucks disproportionately impact lower-income neighborhoods and neighborhoods of color. The federal government can and should incorporate lessons learned from these pilot projects in designing a national network of air-quality sensors in cities across the country.

Components of a national air-quality sensor network are in place. On-the-ground sensor measurements provide essential ground-level, high-spatial-density measurements that can be combined with data from satellites and other observing systems to create more accurate climate and air-quality maps and models for regions, states, and the country. Through sophisticated computational models, for instance, weather data from the National Oceanic and Atmospheric Administration (NOAA) are already being combined with existing satellite data and data from ground-level dense sensor networks to help locate sources of GHG emissions and air pollution in cities throughout the day and across seasons. The Environmental Protection Agency (EPA) is working on improving these measurements and models by encouraging development of standards for low-cost sensor data. Finally, data from the pilot projects referenced above are being used on an ad hoc basis to inform policy. For example, data showing that CO2 emissions from the vehicle fleet are decreasing faster than expected in cities with granular emissions monitoring suggest that policies designed to reduce GHG emissions are working as well as or better than intended. Federal leadership is needed to scale up the impact of such insights nationwide.

A national network of hyper-local GHG and air-quality sensors will contribute to K–12 science curricula. The University of California, Berkeley partnered with the National Aeronautics and Space Administration (NASA) on the GLOBE educational program. The program provides ideas and materials for K–12 activities related to climate education and data literacy that leverage data from dense local air-quality sensor networks. Data from a national air-quality sensor network would expand opportunities for this type of place-based learning, motivating students with projects that incorporate observations occurring on the roof of their schools or nearby in their neighborhoods to investigate the atmosphere, climate, and use of data in scientific analyses.

Scaling a national network of local GHG and air-quality sensors to include hundreds of cities will yield major economies of scale. A national air-quality sensor network that includes 300 American cities (essentially all U.S. cities with populations greater than 100,000) will drive down sensor costs and drive up sensor quality by growing the relevant market. Scaling up the network will also lower operational costs of merging large datasets, interpreting those data, and communicating insights to the public. This city-federal collaboration would provide validated data needed to prove which national and local policies to improve air quality and reduce emissions work, and to weed out those that don’t.

Plan of Action

The National Oceanic and Atmospheric Administration (NOAA), in partnership with the Bureau of Economic Analysis (BEA), the Centers for Disease Control and Prevention (CDC), the Environmental Protection Agency (EPA), the National Aeronautics and Space Administration (NASA), the National Institute of Standards and Technology (NIST), and the National Science Foundation (NSF) should lead a $100 million “300 by ’30: The American City Urban Air Challenge” to deploy low-cost, real-time, ground-based sensors by the year 2030 in all 300 U.S. cities with populations greater than 100,000 residents.

The initiative could be organized and managed by region through an expanded NOAA Regional Collaboration Network, under the auspices of NOAA’s Office of Oceanic and Atmospheric Research. NOAA is responsible for weather and air-quality forecasting and already manages a large suite of global CO2 and global air-quality-related observations along with local weather observations. In a complementary manner, the “300 by ’30” sensor network would measure CO2, CO (carbon monoxide), NO (nitric oxide), NO2 (nitrogen dioxide), O3 (ozone), and PM2.5 (fine particulate matter 2.5 microns or less in diameter) at the neighborhood scale. “300 by ’30” network operators would coordinate data integration and management within and across localities and report findings to the public through a uniform portal maintained by the federal government. Overall, NOAA would coordinate sensor deployment, network integration, and data management, and would manage the transition from research to operations. NOAA would also work with NIST and EPA to provide uniform formats for collecting and sharing data.
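To make “uniform formats for collecting and sharing data” more concrete, the sketch below shows what a shared observation record and a basic validation check might look like for the six species listed above. Everything here is an assumption for illustration: the field names, the reporting units, the `Observation` and `validate` names, and the sensor IDs are hypothetical and do not represent an existing NOAA, NIST, or EPA standard.

```python
from dataclasses import dataclass
from datetime import datetime, timezone

# Hypothetical reporting units per species; an assumption for illustration,
# not an existing NOAA, NIST, or EPA data standard.
UNITS = {
    "CO2": "ppm",
    "CO": "ppb",
    "NO": "ppb",
    "NO2": "ppb",
    "O3": "ppb",
    "PM2.5": "ug/m3",
}

@dataclass(frozen=True)
class Observation:
    """One sensor reading in a hypothetical shared exchange format."""
    city: str
    sensor_id: str
    timestamp: datetime  # expected to be timezone-aware (e.g., UTC)
    species: str         # expected to be a key of UNITS
    value: float
    unit: str

def validate(obs: Observation) -> list[str]:
    """Return a list of problems with a record; an empty list means it passes."""
    problems = []
    if obs.species not in UNITS:
        problems.append(f"unknown species {obs.species!r}")
    elif obs.unit != UNITS[obs.species]:
        problems.append(f"{obs.species} must be reported in {UNITS[obs.species]}, got {obs.unit}")
    if obs.timestamp.tzinfo is None:
        problems.append("timestamp must be timezone-aware")
    if obs.value < 0:
        problems.append("concentration cannot be negative")
    return problems

# Example records (sensor IDs are made up).
ok = Observation("Providence", "pvd-001", datetime(2023, 6, 1, 12, tzinfo=timezone.utc), "CO2", 415.2, "ppm")
bad = Observation("Houston", "hou-007", datetime(2023, 6, 1, 12), "NO2", -3.0, "ppm")
print(validate(ok))   # []
print(validate(bad))  # three problems: unit, timezone, negative value
```

Requiring timezone-aware timestamps and a fixed unit per species is one simple way a common format could let data from different cities be merged without per-city conversion logic.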

Though NOAA is the natural agency to lead the “300 by ’30” initiative, other federal agencies can and should play key supporting roles. NSF can support new approaches to instrument design and major innovations in data and computational science methods for analysis of observations that would transition rapidly to practical deployment. NIST can provide technical expertise and leadership in much-needed standards-setting for GHG measurements. NASA can advance the STEM-education portion of this initiative (see below), showing educators and students how to observe GHGs and air quality in their neighborhoods and how to link ground-level observations to observations made from space. NASA can also work with NOAA to merge high-density ground-level and wide-area space-based datasets. BEA can develop local models to provide the nonpartisan, nonpolitical economic information cities will need to inform urban air-policy decisions triggered by insights from the sensor network. Similarly, the EPA can help guide cities in using climate and air-quality information from the sensor network. The CDC can use network data to better characterize public-health threats related to climate change and air pollution, as well as to coordinate responses with state and local health officials.

The “300 by ’30” Challenge should be deployed in a phased approach that (i) leverages lessons learned from the pilot projects referenced above, and (ii) optimizes cost savings and efficiencies from increasing the number of networked cities. Leveraging its Regional Collaboration Network, NOAA would launch the Challenge in 2023 with an initial cohort of nine cities (one in each of NOAA’s nine regions). The Challenge would expand to 25 cities by 2024, 100 cities by 2027, and all 300 cities by 2030. The Challenge would also be open to participation by states and territories whose largest cities have fewer than 100,000 residents.
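Because the phased targets are cumulative, the onboarding pace they imply is easy to miss. The short Python sketch below uses only the milestone numbers stated above (the function names are illustrative) to convert them into new cities per phase and average new cities per year. It suggests the rollout would accelerate from about 16 new cities per year between 2023 and 2024 to roughly 67 per year between 2027 and 2030.

```python
# Cumulative city targets stated in the "300 by '30" phased rollout.
MILESTONES = {2023: 9, 2024: 25, 2027: 100, 2030: 300}

def cities_added_per_phase(milestones):
    """Convert cumulative targets into the number of new cities in each phase."""
    added, previous = {}, 0
    for year in sorted(milestones):
        if milestones[year] < previous:
            raise ValueError(f"cumulative target decreases in {year}")
        added[year] = milestones[year] - previous
        previous = milestones[year]
    return added

def onboarding_rate(milestones):
    """Average new cities per year between consecutive milestones."""
    years = sorted(milestones)
    return {
        (start, end): (milestones[end] - milestones[start]) / (end - start)
        for start, end in zip(years, years[1:])
    }

print(cities_added_per_phase(MILESTONES))  # {2023: 9, 2024: 16, 2027: 75, 2030: 200}
for (start, end), rate in onboarding_rate(MILESTONES).items():
    print(f"{start}-{end}: {rate:.1f} new cities per year")
```

Two-thirds of the cities would join in the final phase, which is consistent with the memo’s expectation that costs fall as the network grows.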

The Challenge should also build on NASA’s GLOBE program to develop and share K–12 curricula, activities, and learning materials that use data from the sensor network to advance climate education and data literacy and to inspire students to pursue higher education and careers in STEM. NOAA and NSF could provide additional support in promoting observation-based science education in classrooms and museums, illustrating how basic scientific observations of the atmosphere vary by neighborhood and collectively contribute to weather, air-quality, and climate models.

Recent improvements in sensor technologies are only now enabling the use of dense mesh networks of sensors to precisely pinpoint levels and sources of GHGs and air pollutants in real time and at the neighborhood scale. Pilot projects in the San Francisco Bay Area, Los Angeles, Houston, Providence, and New York City have proven the value of localized networks of air-quality sensors, and have demonstrated how data from these sensors can inform emissions-reductions policies. While individual localities, states, and the EPA are continuing to support pilot projects, there has never been a national effort to deploy networked GHG and air-quality sensors in all of the nation’s largest cities, nor has there been a concerted effort to link data collected from such sensors at scale.

Although urban areas are responsible for over 70% of national GHG emissions and over 70% of air pollution in urban environments, even cities with existing policy approaches to GHGs and air quality lack the information to rapidly evaluate whether their emissions-reduction policies are effective. Further, COVID-19 has reduced local revenue, strained municipal budgets, and understandably diverted attention from environmental issues in many localities. Federal involvement is needed to (i) give cities the equipment, data, and support they need to make meaningful progress on emissions of GHGs and air pollutants, (ii) coordinate efforts and facilitate exchange of information and lessons learned across cities, and (iii) provide common standards for data collection and sharing.

U.S. scientists led a pilot project that developed a 20-device sensor network for the City of Glasgow, Scotland, as a demonstration for the COP26 climate conference. The City of Glasgow is an active partner in efforts to expand sensor networks, and is one model for how scientists and municipalities can work together to produce needed information in a useful format.

Sensors appropriate for this initiative can be manufactured in the United States. Researchers at the University of California, Berkeley have described, in freely available literature, a design for shoebox-sized air-quality sensors suited to a localized network. Domestic manufacture, installation, and maintenance of the sensors needed for a national monitoring network will create stable, well-paying jobs in cities nationwide.

Leading scientific societies Optica (formerly OSA) and the American Geophysical Union (AGU) are spearheading the effort to provide “actionable science” to local and regional policymakers as part of their Global Environmental Measurement & Monitoring (GEMM) Initiative. Optica and AGU are also exploring opportunities with the United Nations Human Settlements Program (UN-Habitat) and the World Meteorological Organization (WMO) to expand these efforts. The GHG- and air-quality-measurement pilot projects referenced above are based on the BEACO2N Network of sensors developed by University of California, Berkeley Professor Ronald Cohen.

Broadening the Knowledge Economy through Independent Scholarship

Summary

Scientists and scholars in the United States are faced with a relatively narrow set of traditional career pathways. Our lack of creativity in defining the scholarly landscape is limiting our nation’s capacity for innovation by stifling exploration, out-of-the-box thinking, and new perspectives.

This does not have to be the case. The rise of the gig economy has positioned independent scholarship as an effective model for people who want to continue doing research outside of traditional academic structures, in ways that best fit their life priorities. New research institutes are emerging to support independent scholars and expand access to the knowledge economy.

The Biden-Harris Administration should further strengthen independent scholarship by (1) facilitating partnerships between independent scholarship institutions and conventional research entities; (2) creating professional-development opportunities for independent scholars; and (3) allocating more federal funding for independent scholarship.

Challenge and Opportunity

The academic sector is often seen as a rich source of new and groundbreaking ideas in the United States. But it has become increasingly evident that pinning all our nation’s hopes for innovation and scientific advancement on the academic sector is a mistake. Existing models of academic scholarship are limited, leaving little space for exploration, out-of-the-box thinking, and new perspectives. Our nation’s universities, which are shedding full-time faculty positions at an alarming rate, no longer offer young thinkers career opportunities as reliable and attractive as they once did. Conventional scholarly career pathways, which were initially created with male breadwinners in mind, are strewn with barriers to broad participation. Yet outside of academia, there is a distinct lack of market incentive structures that support geographically diverse development and implementation of new ideas.

These problems are compounded by the fact that conventional scholarly training pathways are long, expensive, and unforgiving. A doctoral program takes an average of 5.8 years and $115,000 to complete. The federal government spends $75 billion per year on financial assistance for students in higher education, yet inflexible academic structures prevent our society from maximizing returns on these investments in human capital. Individuals who pursue and complete advanced scholarly training but then opt to step away from the traditional academic pipeline (whether to raise a family, explore another career path, or deal with a personal crisis) can find it nearly impossible to return. This problem is especially pronounced among first-generation students, women of color, and low-income groups. A 2020 study found that 67% of Ph.D. students wanted to stay in academia after completing their degree, but only 30% of those students actually did. Outside of academia, though, there are few obvious ways for even highly trained individuals to contribute to the knowledge economy. The upshot is that every year, innumerable great ideas and scholarly contributions are lost because ideators and scholars lack suitable venues in which to share them.

Fortunately, an alternative model exists. The rise of the gig economy has positioned independent scholarship as a viable approach to work and research. Independent scholarship recognizes that research doesn’t have to be a full-time occupation, be conducted via academic employment, or require attainment of a certain degree. By being relatively free of productivity incentives (e.g., publish or perish), independent scholarship provides a flexible work model and career fluidity that allows people to pursue research interests alongside other life and career goals.

Online independent-scholarship institutes (ISIs) like the Ronin Institute, IGDORE, and others have recently emerged to support independent scholars. By providing an affiliation, a community, and a boost of confidence, such institutes empower independent scholars to do meaningful research. Indeed, the original perspectives and diverse life experiences that independent scholars bring to the table increase the likelihood that such scholars will engage in high-risk research that can deliver tremendous benefits to society.

But it is currently difficult for ISIs to help independent scholars reach their full potential. ISIs generally cannot provide affiliated individuals with access to resources like research ethics review boards, software licenses, laboratory space, scientific equipment, computing services, and libraries. There is also concern that without intentionally structuring ISIs around equity goals, ISIs will develop in ways that marginalize underrepresented groups. ISIs (and individuals affiliated with them) are often deemed ineligible for research grants, and/or are outcompeted for grants by well-recognized names and affiliations in academia. Finally, though independent scholarship is growing, there is still relatively little concrete data on who is engaging in independent scholarship, and how and why they are doing so.

Strengthening support for ISIs and their affiliates is a promising way to fast-track our nation towards needed innovation and technological advancements. Augmenting the U.S. knowledge-economy infrastructure with agile ISIs will pave the way for new and more flexible scholarly work models; spur greater diversity in scholarship; lift up those who might otherwise be lost Einsteins; and increase access to the knowledge economy as a whole.

Plan of Action

The Biden-Harris Administration should consider taking the following steps to strengthen independent scholarship in the United States:

- Facilitate partnerships between independent scholarship institutions and conventional research entities.

- Create professional-development opportunities for independent scholars.

- Allocate more federal funding for independent scholarship.

More detail on each of these recommendations is provided below.

1. Facilitate partnerships between ISIs and conventional research entities.

The National Science Foundation (NSF) could provide $200,000 to fund a Research Coordination Network or INCLUDES alliance of ISIs. This body would provide a forum for ISIs to articulate their main challenges and identify solutions specific to the conduct of independent research (see FAQ for a list) — solutions may include exploring Cooperative Research & Development Agreements (CRADAs) as mechanisms for accessing physical infrastructure needed for research. The body would help establish ISIs as recognized complements to traditional research facilities such as universities, national laboratories, and private-sector labs.

NSF could also include ISIs in its proposed National Networks of Research Institutes (NNRIs). ISIs meet many of the criteria laid out for NNRI affiliates, including access to cross-sectoral partnerships (many independent scholars work in non-academic domains); untapped potential among diverse scholars who have been marginalized by conventional research environments or who have chosen to work outside them; novel approaches to institutional management (such as community-based approaches); and a model that truly supports the “braided river” or “ecosystem” career pathway model.

The overall goal of this recommendation is to build ISI capacity to be effective players in the broader knowledge-economy landscape.

2. Create professional-development opportunities for independent scholars.

To support professional development among ISIs, the U.S. Small Business Administration and/or the NSF America’s Seed Fund program could provide funding to help ISI staff develop their business models, including funding for training and coaching on leadership, institutional administration, financial management, communications, marketing, and institutional policymaking. To support professional development among independent scholars directly, the Office of Postsecondary Education at the Department of Education, in partnership with professional-development programs like Activate, the Department of Labor’s WANTO program, and the Minority Business Development Agency, can help ISIs create professional-development programs tailored to the unique needs of independent scholars. Led by scholars experienced with working outside of conventional academia, such programs would provide mentorship and apprenticeship opportunities for independent scholars, particularly those underrepresented in the knowledge economy.

The overall goal of this recommendation is to help ISIs and individuals create and pursue viable work models for independent scholarship.

3. Allocate more federal funding for independent scholarship.

Federal funding agencies like NSF struggle to diversify the types of projects they support, despite offering funding for exploratory high-risk work and for early-career faculty. A mere 4% of NSF funding is provided to “other” entities outside of private industry, federally supported research centers, and universities. But outside of the United States, independent scholarship is recognized and funded. NSF and other federal funding agencies should consider allocating more funding for independent scholarship. Funding opportunities should support individuals over institutions, have low barriers to entry, and prioritize provision of part-time funding over longer periods of time (rather than full funding for shorter periods of time).

Funding opportunities could include:

- Funding for seed-grant programs administered by ISIs. Federal agencies already have authority to support seed-grant programs, like the National Aeronautics and Space Administration (NASA)’s impactful program at Earth Science Information Partners, as prize competitions.

- Funding research awards for individual independent scholars. For instance, Congress could consider amending the 2021 Supporting Early-Career Researchers Act to allow NSF to award funding to researchers who are not affiliated with an “institution of higher education”, as well as to award part-time funding.

- An NSF program that exclusively funds innovative, high-risk research led by scholars outside of universities, federally supported research centers, and private-sector labs.

- An NSF-funded research effort to capture basic information about independent scholars in order to provide them with better support. The effort would strive to understand why independent scholars choose not to work with a conventional research institution, what their work models look like, and their greatest challenges and needs.

Conclusion

Our nation urgently needs more innovative, broadly sourced ideas. But limited traditional career options are discouraging participation in the knowledge economy. By strengthening independent scholarship institutes and independent scholarship generally, the Biden-Harris Administration can help quickly diversify and grow the pool of people participating in scholarship. This will in turn fast-track our nation towards much-needed scientific and technological advancements.

The traditional academic pathway consists of 4–5 years of undergraduate training (usually unfunded), 1–3 years for a master’s degree (sometimes funded; not always a precondition for enrollment in a doctoral program), 3–6+ years for a doctoral degree (often at least partly funded through paid assistantships), 2+ years of a postdoctoral position (fully funded at internship salary levels), and 5–7 years to complete the tenure-track process culminating in appointment to an Associate Professor position (fully funded at professional salary levels).

Independent scholarship in any academic field is, as defined by the Effective Altruism Forum, scholarship “conducted by an individual who is not employed by any organization or institution, or who is employed but is conducting this research separately from that”.

Independent scholars can draw on their varied backgrounds and professional experience to bring fresh and diverse worldviews and networks to research projects. Independent scholars often bring a community-oriented and collaborative approach to their work, which is helpful for tackling pressing transdisciplinary social issues. For students and mentees, independent scholars can provide connections to valuable field experiences, practicums, research apprenticeships, and career-development opportunities. In comparison to their academic colleagues, many independent scholars have more time flexibility, and are less prone to being influenced by typical academic incentives (e.g., publish or perish). As such, independent scholars often demonstrate long-term thinking in their research, and may be more motivated to work on research that they feel personally inspired by.

An ISI is a legal entity or organization (e.g., a nonprofit) that offers an affiliation for people conducting independent scholarship. ISIs can take the form of research institutes, scholarly communities, cooperatives, and other structures. Different ISIs can have different goals, such as emphasizing work within a specific domain or developing different ways of doing scholarship. Many ISIs exist solely online, which allows them to function in very low-cost ways while retaining a broad diversity of members. Independent scholarship institutes differ from professional societies, which do not provide an affiliation for individual researchers.