Teacher Education Clearinghouse for AI and Data Science

The next presidential administration should develop a teacher education and resource center that includes vetted, free, self-guided professional learning modules, resources to support data-based classroom activities, and instructional guides pertaining to different learning disciplines. This would provide critical support to teachers to better understand and implement data science education and use of AI tools in their classroom. Initial resource topics would be:

- An Introduction to AI, Data Literacy, and Data Science

- AI & Data Science Pedagogy

- AI and Data Science for Curriculum Development & Improvement

- Using AI Tools for Differentiation, Assessment & Feedback

- Data Science for Ethical AI Use

In addition, this resource center would develop and host free, pre-recorded, virtual training sessions to support educators and district professionals to better understand these resources and practices so they can bring them back to their contexts. This work would improve teacher practice and cut administrative burdens. A teacher education resource would lessen the digital divide and ensure that our educators are prepared to support their students in understanding how to use AI tools so that each and every student can be college and career ready and competitive at the global level. This resource center would be developed using a process similar to the What Works Clearinghouse, such that it is not endorsing a particular system or curriculum, but is providing a quality rating, based on the evidence provided.

Challenge and Opportunity

AI is an incredible technology that has the power to revolutionize many areas, especially how educators teach and prepare the next generation to be competitive in higher education and the workforce. In a recent RAND study, education leaders saw promise in using AI to adapt instructional content to their students’ levels and to generate instructional materials and lesson plans. While this technology holds a wealth of promise, the field has developed so rapidly that people across the workforce do not understand how best to take advantage of AI-based technologies. One of the most crucial areas for this is education. AI-enabled tools have the potential to improve instruction, curriculum development, and assessment, but most educators have not received adequate training to feel confident using them in their pedagogy. In a Spring 2024 pilot study (Beiting-Parrish & Melville, in preparation), initial results indicated that 64.3% of educators surveyed had not had any professional development or training in how to use AI tools. In addition, more than 70% of educators surveyed felt they did not know how to pick AI tools that are safe for use in the classroom, and that they were not able to detect biased tools. Additionally, the RAND study indicated that only 18% of educators reported using AI tools for classroom purposes. Of that 18%, approximately half used AI because they had been specifically recommended or directly provided a tool for classroom use. This suggests that educators need substantial support in choosing and deploying tools for classroom use. Providing guidance and resources to support vetting tools for safe, ethical, appropriate, and effective instruction is one of the cornerstone missions of the Department of Education. This work should not rest on the shoulders of individual educators, who have varying levels of technical and curricular knowledge, especially veteran teachers who have been teaching for more than a decade.

If the teachers themselves do not have enough professional development or expertise to select and teach new technology, they cannot be expected to thoroughly prepare their students to understand emerging technologies, such as AI, nor the underpinning concepts necessary to understand these technologies, most notably data science and statistics. As such, students’ futures are being put at risk by a lack of emphasis on data literacy that is apparent across the nation. Recent National Assessment of Educational Progress (NAEP) scores show a shocking decline in student performance in data literacy, probability, and statistics skills – outpacing declines in other content areas. In 2019, the NAEP High School Transcript Study (HSTS) revealed that only 17% of students completed a course in statistics and probability, and fewer than 10% of high school students completed AP Statistics. Furthermore, the HSTS showed that fewer than 1% of students completed a dedicated course in modern data science or applied data analytics in high school. Students are graduating with record-low proficiency in data, statistics, and probability, and graduating without learning modern data science techniques. While students’ data and digital literacy are declining, AI content is proliferating online; students are failing to build the critical thinking skills and discerning eye needed to determine what is real versus what has been AI-generated, and they aren’t prepared to enter the workforce in sectors that are booming. The future the nation’s students will inherit is one in which experience with AI tools and Big Data will be expected of anyone who wants to be competitive in the workforce.

Whether students aren’t getting the content because it isn’t given its due priority, or because teachers aren’t comfortable teaching the content, AI and Big Data are here, and our educators don’t have the tools to help students get ready for a world in the midst of a data revolution. Veteran educators and preservice education programs alike may not have an understanding of the essential concepts in statistics, data literacy, or data science that allow them to feel comfortable teaching about and using AI tools in their classes. Additionally, many of the standard assessment and practice tools are no longer fit for use in a world where every student can generate an A-quality paper in three seconds with proper prompting. The rise of AI-generated content has created a new frontier in information literacy; students need to know to question the output of publicly available LLM-based tools, such as ChatGPT, as well as to be more critical of what they see online, given the rise of AI-generated deep fakes, and educators need to understand how to either incorporate these tools into their classrooms or teach about them effectively. Whether educators are ready or not, the existing Digital Divide has the potential to widen, depending on whether or not they know how to help students understand how to use AI safely and effectively and have the access to resources and training to do so.

The United States finds itself at a crossroads in the global data boom. Demand in the economic marketplace, and threats to national security by way of artificial intelligence and mal-, mis-, and disinformation, have educators facing an urgent problem in need of an immediate solution. In August of 1958, 66 years ago, Congress passed the National Defense Education Act (NDEA), emphasizing teaching and learning in science and mathematics. Passed specifically in response to the launch of Sputnik, the law supplied massive funding to “insure trained manpower of sufficient quality and quantity to meet the national defense needs of the United States.” The U.S. Department of Education, in partnership with the White House Office of Science and Technology Policy, must make bold moves now to create such a solution, as Congress did once before.

Plan of Action

In the years since the Space Race, one problem with STEM education persists: K-12 classrooms still teach students largely the same content; for example, the progression of high school mathematics including algebra, geometry, and trigonometry is largely unchanged. We are no longer in a race to space – we’re now needing to race against data. Data security, artificial intelligence, machine learning, and other mechanisms of our new information economy are all connected to national security, yet we do not have educators with the capacity to properly equip today’s students with the skills to combat current challenges on a global scale. Without a resource center to house the urgent professional development and classroom activities America’s educators are calling for, progress and leadership in spaces where AI and Big Data are being used will continue to dwindle, and our national security will continue to be at risk. It’s beyond time for a new take on the NDEA that emphasizes more modern topics in the teaching and learning of mathematics and science, by way of data science, data literacy, and artificial intelligence.

Previously, the Department of Education has created resource repositories to support the dissemination of information to the larger educational praxis and research community. One such example is the What Works Clearinghouse (WWC), a federally vetted library of resources on educational products and empirical research that can support the larger field. The WWC was created to help cut through the noise of many different educational product claims to ensure that only high-quality tools and research were being shared. A similar situation exists now with AI and Data Science resources; there are many resources online, but many of these are of dubious quality or are even spreading erroneous information.

To combat this, we suggest the creation of something similar to the WWC, with a focus on vetted materials for educator and student learning around AI and Data Science. We propose the creation of the Teacher Education Clearinghouse (TEC) underneath the Institute of Education Sciences, in partnership with the Office of Educational Technology. Currently, the WWC costs approximately $2,500,000 to run, so we anticipate a similar budget for the TEC website. The resource vetting process would begin with a Request for Information (RFI) from the larger field that would encourage educators and administrators to submit high-quality materials. These materials would be vetted using an evaluation framework that looks for high-quality resources and materials.

For example, the RFI might request example materials or lesson goals for the following subjects:

- An Introduction to AI, Data Literacy, and Data Science

  - Introduction to AI & Data Science Literacy & Vocabulary

  - Foundational AI Principles

  - Cross-Functional Data Literacy and Data Science

  - LLMs and How to Use Them

  - Critical Thinking and Safety Around AI Tools

- AI & Data Science Pedagogy

- AI and Data Science for Curriculum Development & Improvement

- Using AI Tools for Differentiation, Assessment & Feedback

- Data Science for Safe and Ethical AI Use

  - Characteristics of Potentially Biased Algorithms and Their Shortcomings

A framework for evaluating how useful these contributions might be for the Teacher Education Clearinghouse would consider the following principles:

- Accuracy and relevance to subject matter

- Availability of existing resources vs. creation of new resources

- Ease of instructor use

- Likely classroom efficacy

- Safety, responsible use, and fairness of proposed tool/application/lesson

Additionally, the Clearinghouse would include a series of quick-start guidebooks, broken down by topic, with resources on foundational topics such as “Introduction to AI” and “Foundational Data Science Vocabulary”.

When complete, this process would result in a national resource library, which would house a free series of asynchronous professional learning opportunities and classroom materials, activities, and datasets. This work could be promoted through the larger Department of Education as well as through the Regional Educational Laboratory program and state-level stakeholders. The professional learning would consist of prerecorded virtual trainings and related materials (e.g., slide decks, videos, and interactive components of lessons). The materials would include educator-facing materials to support their professional development in Big Data and AI alongside student-facing lessons on AI Literacy that teachers could use to support their students. All materials would be publicly available for download on an ED-owned website. This will allow educators from any district, and any level of experience, to access materials that will improve their understanding and pedagogy. This especially benefits educators from less resourced environments because they can still access the training they need to adequately support their students, regardless of local capacity for potentially expensive training and resource acquisition. Now is the time to create such a resource center: there is currently no set of vetted and reliable resources that is available and accessible to the larger educator community, and teachers desperately need these resources to support themselves and their students in using these tools thoughtfully and safely. The successful development of this resource center would result in increased educator understanding of AI and data science, such that the standing of U.S. students increases on international measurements such as the International Computer and Information Literacy Study (ICILS), as well as increased participation in STEAM fields that rely on these skills.

Conclusion

The field of education is at a turning point; the rise of advancements in AI and Big Data necessitates increased focus on these areas in the K-12 classroom; however, most educators do not have the preparation needed to teach these topics well enough to fully prepare their students. For the United States to continue to be a competitive global power in technology and innovation, we need a workforce that understands how to use, apply, and develop new innovations using AI and Data Science. This proposal for a library of high-quality, open-source, vetted materials would support democratization of professional development for all educators and their students.

Modernizing AI Analysis in Education Contexts

The 2022 release of ChatGPT and subsequent foundation models sparked a generative AI (GenAI) explosion in American society, driving rapid adoption of AI-powered tools in schools, colleges, and universities nationwide. Education technology was one of the first applications used to develop and test ChatGPT in a real-world context. A recent national survey indicated that nearly 50% of teachers, students, and parents use GenAI chatbots in school, and over 66% of parents and teachers believe that GenAI chatbots can help students learn more and faster. While this innovation is exciting and holds tremendous promise to personalize education, educators, families, and researchers are concerned that AI-powered solutions may not be equally useful, accurate, and effective for all students, in particular students from minoritized populations. It is possible that bias will be addressed as this technology develops further; however, to ensure that students are not harmed as these tools become more widespread, it is critical for the Department of Education to provide guidance for education decision-makers to evaluate AI solutions during procurement, to support EdTech developers in detecting and mitigating bias in their applications, and to develop new fairness methods to ensure that these solutions serve the students with the most to gain from our educational systems. Creating this guidance will require leadership from the Department of Education to declare this issue a priority and to resource an independent organization with the expertise needed to deliver these services.

Challenge and Opportunity

Known Bias and Potential Harm

There are many examples of the use of AI-based systems introducing more bias into an already-biased system. One example with widely varying results for different student groups is the use of GenAI tools to detect AI-generated text as a form of plagiarism. Liang et al. found that several GPT-based plagiarism checkers frequently identified the writing of students for whom English is not their first language as AI-generated, even though their work was written before ChatGPT was available. The same errors did not occur with text written by native English speakers. However, in a publication by Jiang (2024), no bias against non-native English speakers was encountered when distinguishing human-authored essays from ChatGPT-generated essays written in response to analytical writing prompts from the GRE, which is an example of how thoughtful AI tool design and representative sampling in the training set can achieve fairer outcomes and mitigate bias.

Beyond bias, researchers have raised additional concerns about the overall efficacy of these tools for all students; however, more understanding around different results for subpopulations and potential instances of bias(es) is a critical aspect of deciding whether or not these tools should be used by teachers in classrooms. For AI-based tools to be usable in high-stakes educational contexts such as testing, detecting and mitigating bias is critical, particularly when the consequences of being incorrect are so high, such as for students from minoritized populations who may not have the resources to recover from an error (e.g., failing a course, being prevented from graduating school).

Another example of algorithmic bias, from before the widespread emergence of GenAI, that illustrates potential harms is the Wisconsin Dropout Early Warning System. This AI-based tool was designed to flag students who may be at risk of dropping out of school; however, an analysis of the outcomes of these predictions found that the system disproportionately flagged African American and Hispanic students as being likely to drop out of school when most of these students were not at risk of dropping out. When teachers learn that one of their students is at risk, this may change how they approach that student, which can cause further negative treatment and consequences for that student, creating a self-fulfilling prophecy and not providing that student with the educational opportunities and confidence that they deserve. These examples are only two of many consequences of using systems that have underlying bias, and they demonstrate how critical it is to conduct fairness analysis before these systems are used with actual students.

Existing Guidance on Fair AI & Standards for Education Technology Applications

Guidance for Education Technology Applications

Given the harms that algorithmic bias can cause in educational settings, there is an opportunity to provide national guidelines and best practices that help educators avoid these harms. The Department of Education is already responsible for protecting student privacy and provides guidelines via the Every Student Succeeds Act (ESSA) Evidence Levels to evaluate the quality of EdTech solution evidence. The Office of Educational Technology, with the support of a private non-profit organization (Digital Promise), has developed guidance documents for teachers and administrators, and another for education technology developers (U.S. Department of Education, 2023, 2024). In particular, “Designing for Education with Artificial Intelligence” includes guidance for EdTech developers, including an entire section called “Advancing Equity and Protecting Civil Rights” that describes algorithmic bias and suggests that “Developers should proactively and continuously test AI products or services in education to mitigate the risk of algorithmic discrimination.” (p. 28). While this is a good overall guideline, the document does not provide enough detail to help developers conduct these tests.

Similarly, the National Institute of Standards and Technology has released a publication on identifying and managing bias in AI. While this publication highlights some areas of the development process and several fairness metrics, it does not provide specific guidelines for using these fairness metrics, nor is it exhaustive. Finally, demonstrating the interest of industry partners, the EDSAFE AI Alliance, a philanthropically-funded alliance representing a diverse group of companies in educational technology, has also created guidance in the form of the 2024 SAFE (Safety, Accountability, Fairness, and Efficacy) Framework. Within the Fairness section of the framework, the authors highlight the importance of using fair training data, monitoring for bias, and ensuring accessibility of any AI-based tool. But again, this framework does not provide specific actions that education administrators, teachers, or EdTech developers can take to ensure these tools are fair and are not biased against specific populations. The risk to these populations and the existing efforts demonstrate the need for further work to develop new approaches that can be used in the field.

Fairness in Education Measurement

As AI becomes increasingly used in education, the field of educational measurement has begun creating a set of analytic approaches for finding examples of algorithmic bias, many of which are based on existing approaches to uncovering bias in educational testing. One common tool is called Differential Item Functioning (DIF), which checks whether test questions are fair for all students regardless of their background. For example, it checks whether native English speakers and students learning English have an equal chance to succeed on a question if they have the same level of knowledge. When differences are found, this indicates that a student’s performance on that question is not based solely on their knowledge of the content.
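The kind of check DIF performs can be sketched with the Mantel-Haenszel procedure, one widely used DIF statistic. This memo does not say which DIF method is intended, so that choice, the function name, and the input layout below are assumptions for illustration only. Students are first matched on an ability level (for example, a band of total test scores), and within each level the odds of answering the question correctly are compared between a reference group and a focal group.

```python
from collections import defaultdict
from math import log


def mantel_haenszel_dif(responses):
    """Return (common odds ratio, delta-scale value) for one test question.

    responses: iterable of (group, ability_level, correct) where group is
    "reference" or "focal" (hypothetical labels), ability_level is any
    hashable matching variable, and correct is True/False.
    """
    # Per ability level, count: [ref correct, ref incorrect,
    #                            focal correct, focal incorrect]
    tables = defaultdict(lambda: [0, 0, 0, 0])
    for group, level, correct in responses:
        index = (0 if group == "reference" else 2) + (0 if correct else 1)
        tables[level][index] += 1

    numerator = denominator = 0.0
    for ref_ok, ref_no, foc_ok, foc_no in tables.values():
        total = ref_ok + ref_no + foc_ok + foc_no
        numerator += ref_ok * foc_no / total
        denominator += ref_no * foc_ok / total

    if numerator == 0 or denominator == 0:
        raise ValueError("not enough variation in responses to estimate DIF")

    odds_ratio = numerator / denominator
    # Conventional rescaling of the odds ratio onto a "delta" scale.
    return odds_ratio, -2.35 * log(odds_ratio)
```

Under this sketch, an odds ratio near 1 (a delta value near 0) suggests that matched students in both groups have similar chances of answering correctly, while a clearly negative delta suggests the question is harder for the focal group than for reference-group students with the same ability level.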

While DIF checks have been used for several decades as a best practice in standardized testing, a comparable process in the use of AI for assessment purposes does not yet exist. There also is little historical precedent indicating that for-profit educational companies will self-govern and self-regulate without a larger set of guidelines and expectations from a governing body, such as the federal government.

We are at a critical juncture as school districts begin adopting AI tools with minimal guidance or guardrails, and all signs point to an increase of AI in education. The U.S. Department of Education has an opportunity to take a proactive approach to ensuring AI fairness through strategic programs of support for school leadership, developers in educational technology, and experts in the field. It is important for the federal government to support all educational stakeholders under a common vision for AI fairness while the adoption of AI for educational use is still at a relatively early stage.

Plan of Action

To address this situation, the Department of Education’s Office of the Chief Data Officer should lead development of a national resource that provides direct technical assistance to school leadership, supports software developers and vendors of AI tools in creating quality tech, and invests resources to create solutions that can be used by both school leaders and application developers. This office is already responsible for data management and asset policies, and provides resources on grants and artificial intelligence for the field. The implementation of these resources would likely be carried out via grants to external actors with sufficient technical expertise, given the rapid pace of innovation in the private and academic research sectors. Leading the effort from this office ensures that these advances are answering the most important questions and can integrate them into policy standards and requirements for education solutions. Congress should allocate additional funding to the Department of Education to support the development of a technical assistance program for school districts, establish new grants for fairness evaluation tools that span the full development lifecycle, and pursue an R&D agenda for AI fairness in education. While it is hard to provide an exact estimate, similar existing programs currently cost the Department of Education between $4 and $30 million a year.

Action 1. The Department of Education Should Provide Independent Support for School Leadership Through a Fair AI Technical Assistance Center (FAIR-AI-TAC)

School administrators are hearing about the promise and concerns of AI solutions in the popular press, from parents, and from students. They are also being bombarded by education technology providers with new applications of AI within existing tools and through new solutions.

These busy school leaders do not have time to learn the details of AI and bias analysis, nor do they have the technical background required to conduct deep technical evaluations of fairness within AI applications. Leaders are forced to either reject these innovations or implement them and expose their students to significant potential risk with the promise of improved learning. This is not an acceptable status quo.

To address these issues, the Department of Education should create an AI Technical Assistance Center (the Center) that is tasked with providing direct guidance to state and local education leaders who want to incorporate AI tools fairly and effectively. The Center should be staffed by a team of professionals with expertise in data science, data safety, ethics, education, and AI system evaluation. Additionally, the Center should operate independently of AI tool vendors to maintain objectivity.

There is precedent for this type of technical support. The U.S. Department of Education’s Privacy Technical Assistance Center (PTAC) provides guidance related to data privacy and security procedures and processes to meet FERPA guidelines; they operate a help desk via phone or email, develop training materials for broad use, and provide targeted training and technical assistance for leaders. A similar kind of center could be stood up to support leaders in education who need support evaluating proposed policy or procurement decisions.

This Center should provide a structured consulting service offering a variety of levels of expertise based on the individual stakeholder’s needs and the variety of levels of potential impact of the system/tool being evaluated on learners; this should include everything from basic levels of AI literacy to active support in choosing technological solutions for educational purposes. The Center should partner with external organizations to develop a certification system for high-quality AI educational tools that have passed a series of fairness checks. Creating a fairness certification (operationalized by third party evaluators) would make it much easier for school leaders to recognize and adopt fair AI solutions that meet student needs.

Action 2. The Department of Education Should Provide Expert Services, Data, and Grants for EdTech Developers

There are many educational technology developers with AI-powered innovations. Even when well-intentioned, some of these tools do not achieve their desired impacts or may be unintentionally unsafe due to a lack of processes and tests for fairness and safety.

Educational Technology developers generally operate under significant constraints when incorporating AI models into their tools and applications. Student data is often highly detailed and deeply personal, potentially containing financial, disability, and educational status information that is currently protected by FERPA, which makes it unavailable for use in AI model training or testing.

Developers need safe, legal, and quality datasets that they can use for testing for bias, as well as appropriate bias evaluation tools. There are several promising examples of these types of applications and new approaches to data security, such as the recently awarded NSF SafeInsights project, which allows analysis without disclosing the underlying data. In addition, philanthropically-funded organizations such as the Allen Institute for AI have released LLM evaluation tools that could be adapted and provided to Education Technology developers for testing. A vetted set of evaluation tools, along with more detailed technical resources and instructions for how to use them would encourage developers to incorporate bias evaluations early and often. Currently, there are very few market incentives or existing requirements that push developers to invest the necessary time or resources into this type of fairness analysis. Thus, the government has a key role to play here.

The Department of Education should also fund a new grant program that tasks grantees with developing a robust and independently validated third-party evaluation system that checks for fairness violations and biases throughout the model development process from pre-processing of data, to the actual AI use, to testing after AI results are created. This approach would support developers in ensuring that the tools they are publishing meet an agreed-upon minimum threshold for safe and fair use and could provide additional justification for the adoption of AI tools by school administrators.

Action 3. The Department of Education Should Develop Better Fairness R&D Tools with Researchers

There is still no consensus on best practices for how to ensure that AI tools are fair. As AI capabilities evolve, the field needs an ongoing vetted set of analyses and approaches that will ensure that any tools being used in an educational context are safe and fair for use with no unintended consequences.

The Department of Education should lead the creation of a working group or task force composed of subject matter experts from education, educational technology, educational measurement, and the larger AI field to identify the state of the art in existing fairness approaches for education technology and assessment applications, with a focus on modernized conceptions of identity. This proposed task force would be an inter-organizational group that would include representatives from several different federal government offices, such as the Office of Educational Technology and the Office of the Chief Data Officer, as well as prominent experts from industry and academia. An initial convening could be conducted alongside leading national conferences that already attract thousands of attendees conducting cutting-edge education research (such as those of the American Educational Research Association and the National Council on Measurement in Education).

The working group’s mandate should include creating a set of recommendations for federal funding to advance research on evaluating AI educational tools for fairness and efficacy. This research agenda would likely span multiple agencies including NIST, the Institute of Education Sciences of the U.S. Department of Education, and the National Science Foundation. There are existing models for funding early-stage research and development with applied approaches, including the IES “Accelerate, Transform, Scale” programs, which integrate learning sciences theory with efforts to scale those theories through applied education technology programs, and Generative AI research centers that have the existing infrastructure and mandates to conduct this type of applied research.

Additionally, the working group should recommend the selection of a specialized group of researchers who would contribute ongoing research into new empirically-based approaches to AI fairness that would continue to be used by the larger field. This innovative work might look like developing new datasets that deliberately look for instances of bias and stereotypes, such as the CrowS-Pairs dataset. It may build on current cutting-edge research into the specific variables and elements of LLMs that directly contribute to biased AI scores, such as the work being done by the AI company Anthropic. It may compare different foundation LLMs and demonstrate specific areas of bias within their output. It may also look like a collaborative effort between organizations, such as the development of the RSM-Tool, which looks for biased scoring. Finally, it may be an improved auditing tool for any portion of the model development pipeline. In general, the field does not yet have a set of universally agreed-upon, actionable tools and approaches that can be used across contexts and applications; this research team would help create these for the field.

Finally, the working group should recommend policies and standards that would incentivize vendors and developers working on AI education tools to adopt fairness evaluations and share their results.

Conclusion

As AI-based tools continue to be used for educational purposes, there is an urgent need to develop new approaches to evaluating the fairness of these solutions that include modern conceptions of student belonging and identity. This effort should be led by the Department of Education, through the Office of the Chief Data Officer, given the technical nature of the services and the relationship with sensitive data sources. While the Chief Data Officer should provide direction and leadership for the project, partnering with external organizations through federal grant processes would provide necessary capacity boosts to fulfill the mandate described in this memo.

As we move into an age of widespread AI adoption, AI tools for education will be increasingly used in classrooms and in homes. Thus, it is imperative that robust fairness approaches are deployed before a new tool is used in order to protect our students, and also to protect the developers and administrators from potential litigation, loss of reputation, and other negative outcomes.

This action-ready policy memo is part of Day One 2025 — our effort to bring forward bold policy ideas, grounded in science and evidence, that can tackle the country’s biggest challenges and bring us closer to the prosperous, equitable and safe future that we all hope for whoever takes office in 2025 and beyond.

PLEASE NOTE (February 2025): Since publication several government websites have been taken offline. We apologize for any broken links to once accessible public data.

When AI is used to grade student work, fairness is evaluated by comparing the scores assigned by AI to those assigned by human graders across different demographic groups. This is often done using statistical metrics, such as the standardized mean difference (SMD), to detect any additional bias introduced by the AI. A common benchmark is an SMD of 0.15: values whose magnitude exceeds it suggest potential machine bias relative to human scores. However, more guidance is needed on how to address cases where SMD values exceed this threshold.
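As a rough illustration of the SMD check described above, the following Python sketch computes an SMD per demographic group and flags groups beyond the 0.15 benchmark. The pooling formula (the average of the two score variances) is one common convention and is an assumption here, as are the function names, group labels, and scores, all of which are hypothetical.

```python
from math import sqrt
from statistics import mean, stdev


def standardized_mean_difference(ai_scores, human_scores):
    """(mean AI score - mean human score) divided by a pooled standard deviation.

    Assumption: the pooled SD is the square root of the average of the two
    sample variances; other pooling conventions exist.
    """
    pooled_sd = sqrt((stdev(ai_scores) ** 2 + stdev(human_scores) ** 2) / 2)
    return (mean(ai_scores) - mean(human_scores)) / pooled_sd


def flag_groups(scores_by_group, threshold=0.15):
    """Return {group: SMD} for groups whose |SMD| exceeds the threshold."""
    flagged = {}
    for group, (ai_scores, human_scores) in scores_by_group.items():
        smd = standardized_mean_difference(ai_scores, human_scores)
        if abs(smd) > threshold:
            flagged[group] = round(smd, 3)
    return flagged


# Hypothetical scores on a 1-5 rubric: (AI scores, human scores) per group.
example = {
    "group_a": ([3, 4, 4, 5, 3, 4], [3, 4, 4, 5, 3, 4]),
    "group_b": ([2, 3, 3, 4, 2, 3], [3, 4, 3, 4, 3, 4]),
}
flagged = flag_groups(example)
print(flagged)
```

In this made-up data the AI scores for group_b run lower than the human scores, so only that group is flagged. What to do once a group is flagged is exactly the open question the text raises.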

In addition to SMD, other metrics like exact agreement, exact + adjacent agreement, correlation, and Quadratic Weighted Kappa are often used to assess the consistency and alignment between human and AI-generated scores. While these methods provide valuable insights, further research is needed to ensure these metrics are robust, resistant to manipulation, and appropriately tailored to specific use cases, data types, and varying levels of importance.
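The agreement metrics named above can be sketched in a few lines of Python. This is a minimal illustration rather than a reference implementation: the function names and the sample scores are hypothetical, and the Quadratic Weighted Kappa follows the usual textbook definition (observed versus chance-expected disagreement, weighted by squared score distance).

```python
def exact_agreement(human, ai):
    """Share of responses where the two scores match exactly."""
    return sum(h == a for h, a in zip(human, ai)) / len(human)


def adjacent_agreement(human, ai):
    """Exact + adjacent agreement: share of responses within one score point."""
    return sum(abs(h - a) <= 1 for h, a in zip(human, ai)) / len(human)


def quadratic_weighted_kappa(human, ai, min_score, max_score):
    """1 - (weighted observed disagreement / weighted expected disagreement).

    Assumes at least two score categories and some variation in the scores;
    otherwise the denominator is zero.
    """
    k = max_score - min_score + 1
    n = len(human)
    observed = [[0] * k for _ in range(k)]
    for h, a in zip(human, ai):
        observed[h - min_score][a - min_score] += 1
    human_hist = [sum(row) for row in observed]
    ai_hist = [sum(observed[i][j] for i in range(k)) for j in range(k)]
    numerator = 0.0
    denominator = 0.0
    for i in range(k):
        for j in range(k):
            weight = (i - j) ** 2 / (k - 1) ** 2
            expected = human_hist[i] * ai_hist[j] / n
            numerator += weight * observed[i][j]
            denominator += weight * expected
    return 1.0 - numerator / denominator


# Hypothetical scores on a 1-5 rubric.
human_scores = [1, 2, 3, 4, 5, 3]
ai_scores = [1, 2, 3, 4, 4, 3]
print(exact_agreement(human_scores, ai_scores))
print(adjacent_agreement(human_scores, ai_scores))
print(quadratic_weighted_kappa(human_scores, ai_scores, 1, 5))
```

Computing these per demographic group, rather than only overall, is what turns them into fairness checks.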

Existing approaches to demographic post hoc analysis of fairness assume that there are two discrete populations that can be compared, for example students from African-American families vs. those not from African-American families, students from an English language learner family background vs. those who are not, and other known family characteristics. However, in practice, people do not experience these discrete identities. Since at least the 1980s, contemporary sociological theories have emphasized that a person’s identity is contextual, hybrid, and fluid/changing. One current approach to identity that integrates concerns of equity and has been applied to AI is “intersectional identity” theory. This approach has begun to develop promising new methods that bring contemporary approaches to identity into evaluating fairness of AI using automated methods. Measuring all interactions between variables leaves too small a sample in each subgroup; these interactions can instead be prioritized using theory, design principles, or more advanced statistical techniques (e.g., dimensional data reduction techniques).
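The sample-size problem with measuring every interaction can be seen in a short Python sketch. Everything here is illustrative: the attribute names (`ell`, `frl`, `region`), the synthetic records, and the minimum cell size of 30 are assumptions, not values taken from the research described above.

```python
from collections import Counter


def intersection_cell_sizes(records, attributes):
    """Count students in each cell formed by crossing the given attributes."""
    return Counter(tuple(record[a] for a in attributes) for record in records)


def reportable_cells(records, attributes, min_n=30):
    """Keep only cells with at least min_n students (hypothetical cutoff)."""
    sizes = intersection_cell_sizes(records, attributes)
    return {cell: n for cell, n in sizes.items() if n >= min_n}


# Synthetic data: 120 students with three made-up attributes.
records = [
    {"ell": i % 2 == 0, "frl": i % 3 == 0, "region": i % 5}
    for i in range(120)
]

one_way = reportable_cells(records, ["ell"])
three_way_sizes = intersection_cell_sizes(records, ["ell", "frl", "region"])
three_way = reportable_cells(records, ["ell", "frl", "region"])

print(len(one_way), "reportable cells for one attribute")
print(len(three_way_sizes), "cells when all three are crossed")
print(len(three_way), "of those are large enough to analyze")
```

With one attribute, both groups are large enough to compare. Crossing all three splits the same 120 students into 20 cells, none of which reaches the cutoff, which is why the interactions have to be prioritized rather than measured exhaustively.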

Driving Equitable Healthcare Innovations through an AI for Medicaid (AIM) Initiative

Artificial intelligence (AI) has transformative potential in the public health space – in an era when millions of Americans have limited access to high-quality healthcare services, AI-based tools and applications can enable remote diagnostics, drive efficiencies in implementation of public health interventions, and support clinical decision-making in low-resource settings. However, innovation driven primarily by the private sector today may be exacerbating existing disparities by training models on homogeneous datasets and building tools that primarily benefit high socioeconomic status (SES) populations.

To address this gap, the Center for Medicare and Medicaid Innovation (CMMI) should create an AI for Medicaid (AIM) Initiative to distribute competitive grants to state Medicaid programs (in partnership with the private sector) for pilot AI solutions that lower costs and improve care delivery for rural and low-income populations covered by Medicaid.

Challenge & Opportunity

In 2022, the United States spent $4.5 trillion on healthcare, accounting for 17.3% of total GDP. Despite spending far more on healthcare per capita than other high-income countries, the United States has significantly worse outcomes, including lower life expectancy, higher death rates due to avoidable causes, and less access to healthcare services. Further, the 80 million low-income Americans reliant on state-administered Medicaid programs often have below-average health outcomes and the least access to healthcare services.

AI has the potential to transform the healthcare system – but innovation driven solely by the private sector exacerbates the inequities described above. Algorithms in general are often trained on datasets that do not represent the underlying population – in many cases, these training biases result in tools and models that perform poorly for racial minorities, people living with comorbidities, and people of low SES. For example, until January 2023, the model used to prioritize patients for kidney transplants systematically ranked Black patients lower than White patients – the race component was identified and removed due to advocacy efforts within the medical community. AI models, while significantly more powerful than traditional predictive algorithms, are also more difficult to understand and engineer, which makes them more likely to perpetuate such biases.

Additionally, startups innovating in the digital health space today are not incentivized to develop solutions for marginalized populations. For example, in FY 2022, the top 10 startups focused on Medicaid received only $1.5B in private funding, while their Medicare Advantage (MA)-focused counterparts received over $20B. Medicaid’s lower margins are not attractive to investors, so digital health development targets populations that are already well-insured and have higher degrees of access to care.

The Federal Government is uniquely positioned to bridge the incentive gap between developers of AI-based tools in the private sector and American communities who would benefit most from said tools. Accordingly, the Center for Medicare and Medicaid Innovation (CMMI) should launch the AI for Medicaid (AIM) Initiative to incentivize and pilot novel AI healthcare tools and solutions targeting Medicaid recipients. Precedents in other countries demonstrate early success in state incentives unlocking health AI innovations – in 2023, the United Kingdom’s National Health Service (NHS) partnered with Deep Medical to pilot AI software that streamlines services by predicting and mitigating missed appointment risk. The successful pilot is now being adopted more broadly and is projected to save the NHS over $30M annually in the coming years.

The AIM Initiative, guided by the structure of the former Medicaid Innovation Accelerator Program (IAP), President Biden’s executive order on integrating equity into AI development, and HHS’ Equity Plan (2022), will encourage the private sector to partner with State Medicaid programs on solutions that benefit rural and low-income Americans covered by Medicaid and drive efficiencies in the overall healthcare system.

Plan of Action

CMMI will launch and operate the AIM Initiative within the Department of Health and Human Services (HHS). $20M of HHS’ annual budget request will be allocated towards the program. State Medicaid programs, in partnership with the private sector, will be invited to submit proposals for competitive grants. In addition to funding, CMMI will leverage the former structure of the Medicaid IAP program to provide state Medicaid agencies with technical assistance throughout their participation in the AIM Initiative. The programs ultimately selected for pilot funding will be monitored and evaluated for broader implementation in the future.

Sample Detailed Timeline

- 0-6 months:

  - HHS Secretary to announce and launch the AI for Medicaid (AIM) Initiative within CMMI (e.g., delineating personnel responsibilities and engaging with stakeholders to shape the program)

  - HHS to include AIM funding in annual budget request to Congress ($20M allocation)

- 6-12 months:

  - CMMI to engage directly with state Medicaid agencies to support proposal development and facilitate connections with private sector partners

  - CMMI to complete solicitation period and select ~7-10 proposals for pilot funding of ~$2-5M each by end of Year 1

- Years 2-7: Launch and roll out selected AI projects, led by state Medicaid agencies with continued technical assistance from CMMI

- Year 8: CMMI to produce an evaluative report and provide recommendations for broader adoption of AI tools and solutions within Medicaid-covered and other populations

Risks and Limitations

- Participation: Success of the initiative relies on state Medicaid programs and private sector partners’ participation. To mitigate this risk, CMMI will engage early with the National Association of Medicaid Directors (NAMD) to generate interest and provide technical assistance in proposal development. These conversations will also include input and support from the HHS Office of the Chief AI Officer (OCAIO) and its AI Council/Community of Practice. Further, startups in the healthcare AI space will be invited to engage with CMMI on identifying potential partnerships with state Medicaid agencies. A secondary goal of the initiative will be to ensure a number of private sector partners are involved in AIM.

- Oversight: AI is at the frontier of technological development today, and it is critical to ensure guardrails are in place to protect patients using AI technologies from potential adverse outcomes. To mitigate this risk, state Medicaid agencies will be required to submit detailed evaluation plans with their proposals. Additionally, informed consent and the ability to opt out of data sharing when engaging with personally identifiable information (PII) and diagnostic or therapeutic technologies will be required. Technology partners (whether private, academic, or public sector) will further be required to demonstrate (1) adequate testing to identify and reduce bias in their AI tools to reasonable standards, (2) engagement with beneficiaries in the development process, and (3) use of testing environments that reflect the particular context of the Medicaid population. Finally, all proposals must adhere to AI guidelines adopted by HHS and the federal government more broadly, such as the CMS AI Playbook, the HHS Trustworthy AI Playbook, and any imminent regulations.

- Longevity: As a pilot grant program, the initiative does not promise long-term results for the broader population and will only facilitate short-term projects at the state level. Consequently, HHS leadership must remain committed to program evaluation and a long-term outlook on how AI can be integrated to support Americans more broadly. AI technologies or tools considered for acquisition by state Medicaid agencies or federal agencies after pilot implementation should ensure compliance with OMB guidelines.

Conclusion

The AI for Medicaid Initiative is an important step in ensuring the promise of artificial intelligence in healthcare extends to all Americans. The initiative will enable the piloting of a range of solutions at a relatively low cost, engage with stakeholders across the public and private sectors, and position the United States as a leader in healthcare AI technologies. Leveraging state incentives to address a critical market failure in the digital health space can additionally unlock significant efficiencies within the Medicaid program and the broader healthcare system. The rural and low-income Americans reliant on Medicaid have too often been an afterthought in access to healthcare services and technologies – the AIM Initiative provides an opportunity to address this health equity gap.


Accelerating Materials Science with AI and Robotics

Innovations in materials science enable innumerable downstream innovations: steel enabled skyscrapers, and novel configurations of silicon enabled microelectronics. Yet progress in materials science has slowed in recent years. Fundamentally, this is because there is a vast universe of potential materials, and the only way to discover which among them are most useful is to experiment. Today, those experiments are largely conducted by hand. Innovations in artificial intelligence and robotics will allow us to accelerate the search process using foundation AI models for science research and automate much of the experimentation with robotic, self-driving labs. This policy memo recommends that the Department of Energy (DOE) lead this effort because of its unique expertise in supercomputing and AI and its large network of National Labs.

Challenge and Opportunity

Take a look at your smartphone. How long does its battery last? How durable is its frame? How tough is its screen? How fast and efficient are the chips inside it?

Each of these questions implicates materials science in fundamental ways. The limits of our technological capabilities are defined by the limits of what we can build, and what we can build is defined by what materials we have at our disposal. The early eras of human history are named for materials: the Stone Age, the Bronze Age, the Iron Age. Even today, the cradle of American innovation is Silicon Valley, a reminder that even our digital era is enabled by finding innovative ways to assemble matter to accomplish novel things.

Materials science has been a driver of economic growth and innovation for decades. Improvements to silicon purification and processing—painstakingly worked on in labs for decades—fundamentally enabled silicon-based semiconductors, a $600 billion industry today that McKinsey recently projected would double in size by 2030. The entire digital economy, conservatively estimated by the Bureau of Economic Analysis (BEA) at $3.7 trillion in the U.S. alone, in turn, rests on semiconductors. Plastics, another profound materials science innovation, are estimated to have generated more than $500 billion in economic value in the U.S. last year. The quantitative benefits are staggering, but even qualitatively, it is impossible to imagine modern life without these materials.

However, present-day materials are beginning to show their age. We need better batteries to accelerate the transition to clean energy. We may be approaching the limits of traditional methods of manufacturing semiconductors in the next decade. We require exotic new forms of magnets to bring technologies like nuclear fusion to life. We need materials with better thermal properties to improve spacecraft.

Yet materials science and engineering—the disciplines of discovering and learning to use new materials—have slowed down in recent decades. The low-hanging fruit has been plucked, and the easy discoveries are old news. We’re approaching the limits of what our materials can do because we are also approaching the limits of what the traditional practice of materials science can do.

Today, materials science proceeds at much the same pace as it did half a century ago: manually, with small academic labs and graduate students formulating potential new combinations of elements, synthesizing those combinations, and studying their characteristics. Because there are more ways to configure matter than there are atoms in the universe, manually searching through the space of possible materials is an impossible task.

Fortunately, AI and robotics present an opportunity to automate that process. AI foundation models for physics and chemistry can be used to simulate potential materials with unprecedented speed and low cost compared to traditional ab initio methods. Robotic labs (also known as “self-driving labs”) can automate the manual process of performing experiments, allowing scientists to synthesize, validate, and characterize new materials twenty-four hours a day at dramatically lower costs. The experiments will generate valuable data for further refining the foundation models, resulting in a positive feedback loop. AI language models like OpenAI’s GPT-4 can write summaries of experimental results and even help ideate new experiments. The scientists and their grad students, freed from this manual and often tedious labor, can do what humans do best: think creatively and imaginatively.

Achieving this goal will require a coordinated effort, significant investment, and expertise at the frontiers of science and engineering. Because much of materials science is basic R&D—too far from commercialization to attract private investment—there is a unique opportunity for the federal government to lead the way. As with much scientific R&D, the economic benefits of new materials science discoveries may take time to emerge. One literature review estimated that it can take roughly 20 years for basic research to translate to economic growth. Research indicates that the returns—once they materialize—are significant. A study from the Federal Reserve Bank of Dallas suggests a return of 150-300% on federal R&D spending.

The best-positioned department within the federal government to coordinate this effort is the DOE, which has many of the key ingredients in place: a demonstrated track record of building and maintaining the supercomputing facilities required to make physics-based AI models, unparalleled scientific datasets with which to train those models collected over decades of work by national labs and other DOE facilities, and a skilled scientific and engineering workforce capable of bringing challenging projects to fruition.

Plan of Action

Achieving the goal of using AI and robotics to simulate potential materials with unprecedented speed and low cost, and benefiting from the discoveries, rests on five key pillars:

- Creating large physics and chemistry datasets for foundation model training (estimated cost: $100 million);

- Developing foundation AI models for materials science discovery, either independently or in collaboration with the private sector (estimated cost: $10-100 million, depending on the nature of the collaboration);

- Building 1-2 pilot self-driving labs (SDLs) aimed at establishing best practices, building a supply chain for robotics and other equipment, and validating the scientific merit of SDLs (estimated cost: $20-40 million);

- Making self-driving labs an official priority of the DOE’s preexisting FASST initiative (described below);

- Directing the DOE’s new Foundation for Energy Security and Innovation (FESI) to prioritize establishing fellowships and public-private partnerships to support the first two pillars, both financially and with human capital.

The total cost of the proposal, then, is estimated at between $130 million and $240 million. The potential return on this investment, though, is far higher. Moderate improvements to battery materials could drive tens or hundreds of billions of dollars in value. Discovery of a “holy grail” material, such as a room-temperature, ambient-pressure superconductor, could create trillions of dollars in value.
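The total can be reproduced by summing the low and high ends of the three costed pillars. The short Python check below does that; the dictionary keys are shorthand labels invented for this sketch, and it assumes the two pillars listed without a cost estimate add nothing to the total.

```python
# (low, high) cost estimates in millions of dollars, taken from the pillar list.
pillar_costs_musd = {
    "datasets": (100, 100),
    "foundation_models": (10, 100),
    "pilot_self_driving_labs": (20, 40),
}

low_total = sum(low for low, _ in pillar_costs_musd.values())
high_total = sum(high for _, high in pillar_costs_musd.values())

print(f"Estimated total: ${low_total}-{high_total} million")
```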

Creating Materials Science Foundation Model Datasets

Before a large materials science foundation model can be trained, vast datasets must be assembled. DOE, through its large network of scientific facilities including particle colliders, observatories, supercomputers, and other experimental sites, collects enormous quantities of data–but this, unfortunately, is only the beginning. DOE’s data infrastructure is out-of-date and fragmented between different user facilities. Data access and retention policies make sharing and combining different datasets difficult or impossible.

All of these policy and infrastructural decisions were made long before training large-scale foundation models was a priority. They will have to be changed to capitalize on the newfound opportunity of AI. Existing DOE data will have to be reorganized into formats and within technical infrastructure suited to training foundation models. In some cases, data access and retention policies will need to be relaxed or otherwise modified.

In other cases, however, highly sensitive data will need to be integrated in more sophisticated ways. A 2023 DOE report, recognizing the problems with DOE data infrastructure, suggests developing federated learning capabilities–an active area of research in the broader machine learning community–which would allow for data to be used for training without being shared. This would, the report argues, “allow access and connections to the information through access control processes that are developed explicitly for multilevel privacy.”
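To make "training without sharing" concrete, here is a minimal Python sketch of federated averaging, one well-known federated learning technique. It is not drawn from the DOE report: the one-parameter model, the learning rate, and the two sites' data are all hypothetical, and real deployments typically layer on protections such as secure aggregation and access controls.

```python
def local_update(weight, site_data, learning_rate=0.01, steps=20):
    """Refine the shared weight on one site's private data (model: y ~ w * x)."""
    for _ in range(steps):
        gradient = sum(2 * (weight * x - y) * x for x, y in site_data) / len(site_data)
        weight -= learning_rate * gradient
    return weight


def federated_average(site_datasets, rounds=50):
    """Each round, every site trains locally and sends back only its weight.

    The coordinator never sees raw (x, y) records; it averages the returned
    weights, weighting each site by how many records it holds.
    """
    weight = 0.0
    total_records = sum(len(data) for data in site_datasets)
    for _ in range(rounds):
        local_weights = [local_update(weight, data) for data in site_datasets]
        weight = sum(
            w * len(data) for w, data in zip(local_weights, site_datasets)
        ) / total_records
    return weight


# Hypothetical measurements held at two facilities (true relation is about y = 2x).
site_a = [(1.0, 2.0), (2.0, 4.1), (3.0, 5.9)]
site_b = [(4.0, 8.2), (5.0, 9.8)]

shared_weight = federated_average([site_a, site_b])
print(round(shared_weight, 3))
```

The learned weight lands close to 2 even though neither site's records ever leave that site, which is the property that makes the approach attractive for sensitive datasets.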

This work will require deep collaboration between data scientists, machine learning scientists and engineers, and domain-specific scientists. It is, by far, the least glamorous part of the process–yet it is the necessary groundwork for all progress to follow.

Building AI Foundation Models for Science

Fundamentally, AI is a sophisticated form of statistics. Deep learning, the broad approach that has undergirded all advances in AI over the past decade, allows AI models to uncover deep patterns in extremely complex datasets, such as all the content on the internet, the genomes of millions of organisms, or the structures of thousands of proteins and other biomolecules. Models of this kind are sometimes loosely referred to as “foundation models.”

Foundation models for materials science can take many different forms, incorporating various aspects of physics, chemistry, and even—for the emerging field of biomaterials—biology. Broadly speaking, foundation models can help materials science in two ways: inverse design and property prediction. Inverse design allows scientists to input a given set of desired characteristics (toughness, brittleness, heat resistance, electrical conductivity, etc.) and receive a prediction for what material might be able to achieve those properties. Property prediction is the opposite flow of information, inputting a given material and receiving a prediction of what properties it will have in the real world.
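The two directions of information flow can be illustrated with a toy Python sketch. The property formulas below are invented for illustration and stand in for a trained model; the brute-force search is only one simple way to do inverse design, and production systems often use generative models instead.

```python
def predict_properties(fraction_a):
    """Property prediction: material in, predicted properties out.

    Toy stand-in for a trained model. The material is described only by the
    fraction of a hypothetical element A, and both formulas are made up.
    """
    return {
        "conductivity": 10.0 * fraction_a,
        "hardness": 5.0 * (1.0 - fraction_a) + 1.0,
    }


def inverse_design(target_properties, candidates):
    """Inverse design: desired properties in, best-matching material out.

    Here this is a brute-force search that scores each candidate by its
    squared distance from the target properties.
    """
    def mismatch(candidate):
        predicted = predict_properties(candidate)
        return sum((predicted[name] - value) ** 2
                   for name, value in target_properties.items())

    return min(candidates, key=mismatch)


candidates = [i / 100 for i in range(101)]  # fractions 0.00, 0.01, ..., 1.00
best = inverse_design({"conductivity": 7.0, "hardness": 2.5}, candidates)
print(best, predict_properties(best))
```

The same predictor serves both uses: called directly it answers "what will this material do?", and wrapped in a search it answers "which material would do this?".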

DOE has already proposed creating AI foundation models for materials science as part of its Frontiers in Artificial Intelligence for Science, Security and Technology (FASST) initiative. While this initiative contains numerous other AI-related science and technology objectives, supporting it would enable the creation of new foundation models, which can in turn be used to support the broader materials science work.

DOE’s long history of stewarding America’s national labs makes it the best-suited home for this proposal. DOE labs and other DOE sub-agencies have decades of data from particle accelerators, nuclear fusion reactors, and other specialized equipment rarely seen in other facilities. These labs have performed hundreds of thousands of experiments in physics and chemistry over their lifetimes, and over time, DOE has created standardized data collection practices. AI models are defined by the data that they are trained with, and DOE has some of the most comprehensive physics and chemistry datasets in the country—if not the world.

The foundation models created by DOE should be made available to scientists. The extent of that availability should be determined by the sensitivity of the data used to train the model and other potential risks associated with broad availability. If, for example, a model was created using purely internal or otherwise sensitive DOE datasets, it might have to be made available only to select audiences with usage monitored; otherwise, there is a risk of exfiltrating sensitive training data. If there are no such data security concerns, DOE could choose to fully open source the models, meaning their weights and code would be available to the general public. Regardless of how the models themselves are distributed, the fruits of all research enabled by both DOE foundation models and self-driving labs should be made available to the academic community and broader public.

Scaling Self-Driving Labs

Self-driving labs are largely automated facilities that allow robotic equipment to autonomously conduct scientific experiments with human supervision. They are well-suited to relatively simple, routine experiments—the exact kind involved in much of materials science. Recent advancements in robotics have been driven by a combination of cheaper hardware and enhanced AI models. While fully autonomous humanoid robots capable of automating arbitrary manual labor are likely years away, it is now possible to configure facilities to automate a broad range of scripted tasks.

Many experiments in materials science involve making iterative tweaks to variables within the same broad experimental design. For example, a grad student might tweak the ratios of the elements that constitute the material, or change the temperature at which the elements are combined. These are highly automatable tasks. Furthermore, by allowing multiple experiments to be conducted in parallel, self-driving labs allow scientists to rapidly accelerate the pace at which they conduct their work.
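A parameter sweep of this kind might be scripted as in the Python sketch below. The `run_experiment` function is a hypothetical stand-in for one robotic synthesis-and-measurement run, and its scoring formula, the ratios, and the temperatures are all made up; the point is only the pattern of enumerating variations and running them in parallel.

```python
from concurrent.futures import ThreadPoolExecutor
from itertools import product


def run_experiment(params):
    """Hypothetical stand-in for one automated synthesis-and-measurement run."""
    ratio, temperature_c = params
    # Made-up response surface that peaks at a ratio of 0.6 and 450 degrees C.
    score = -((ratio - 0.6) ** 2) * 100 - ((temperature_c - 450) / 100) ** 2
    return {"ratio": ratio, "temperature_c": temperature_c, "score": score}


ratios = [0.2, 0.4, 0.6, 0.8]
temperatures_c = [350, 400, 450, 500]

# Every combination of ratio and temperature is one experiment; four run at a time.
with ThreadPoolExecutor(max_workers=4) as pool:
    results = list(pool.map(run_experiment, product(ratios, temperatures_c)))

best = max(results, key=lambda result: result["score"])
print(len(results), "experiments; best:", best)
```

In a real facility the worker count would correspond to the number of robotic stations available, and failed runs would be logged alongside successes.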

Creating a successful large-scale self-driving lab will require collaboration with private sector partners, particularly robot manufacturers and the creators of AI models for robotics. Fortunately, the United States has many such firms. Therefore, DOE should initiate a competitive bidding process for the robotic equipment that will be housed within its self-driving labs. Because DOE has experience in building lab facilities, it should directly oversee the construction of the self-driving lab itself.

The United States already has several small-scale self-driving labs, primarily led by investments at DOE National Labs. The small size of these projects, however, makes it difficult to achieve the economies of scale that are necessary for self-driving labs to become an enduring part of America’s scientific ecosystem.

AI creates additional opportunities to expand automated materials science. Frontier language and multi-modal models, such as OpenAI’s GPT-4o, Anthropic’s Claude 3.5, and Google’s Gemini family, have already been used to ideate scientific experiments, including directing a robotic lab in the fully autonomous synthesis of a known chemical compound. These models would not operate with full autonomy. Instead, scientists would direct the inquiry and the design of the experiment, with the models autonomously suggesting variables to tweak.

Modern frontier models have substantial knowledge in all fields of science, and can hold all of the academic literature relevant to a specific niche of materials science within their active attention. This combination means that they have—when paired with a trained human—the scientific intuition to iteratively tweak an experimental design. They can also write the code necessary to direct the robots in the self-driving lab. Finally, they can write summaries of the experimental results—including the failures. This is crucial, because, given the constraints on their time, scientists today often only report their successes in published writing. Yet failures are just as important to document publicly to avoid other scientists duplicating their efforts.

Once constructed, this self-driving lab infrastructure can be a resource made available as another DOE user facility to materials scientists across the country, much as DOE supercomputers are today. DOE already has a robust process and infrastructure in place to share in-demand resources among different scientists, again underscoring why the Department is well-positioned to lead this endeavor.

Conclusion

Taken together, materials science faces a grand challenge, yet an even grander opportunity. Room-temperature, ambient-pressure superconductors—permitted by the laws of physics but as-yet undiscovered—could transform consumer electronics, clean energy, transportation, and even space travel. New forms of magnets could enable a wide range of cutting-edge technologies, such as nuclear fusion reactors. High-performance ceramics could improve reusable rockets and hypersonic aircraft. The opportunities are limitless.

With a coordinated effort led by DOE, the federal government can demonstrate to Americans that scientific innovation and technological progress can still deliver profound improvements to daily life. It can pave the way for a new approach to science firmly rooted in modern technology, creating an example for other areas of science to follow. Perhaps most importantly, it can make Americans excited about the future—something that has been sorely lacking in American society in recent decades.

AI is a radically transformative technology. Contemplating that transformation in the abstract almost inevitably leads to anxiety and fear. There are legislative proposals, white papers, speeches, blog posts, and tweets about using AI to positive ends. Yet merely talking about positive uses of AI is insufficient: the technology is ready, and the opportunities are there. Now is the time to act.


Compared to “cloud labs” for biology and chemistry, the risks associated with self-driving labs for materials science are low. In a cloud lab equipped with nucleic acid synthesis machines, for example, genetic sequences need to be screened carefully to ensure that they are not dangerous pathogens, a nontrivial task. There are no analogous risks for most materials science applications.

However, given the dual-use nature of many novel materials, any self-driving lab would need to have strong cybersecurity and intellectual property protections. Scientists using self-driving lab facilities would need to be carefully screened by DOE—fortunately, this is an infrastructure DOE possesses already for determining access to its supercomputing facilities.

Not all materials involve easily repeatable, and hence automatable, experiments for synthesis and characterization. But many important classes of materials do, including:

- Thin films and coatings

- Photonic and optoelectronic materials such as perovskites (used for solar panels)

- Polymers and monomers

- Battery and energy storage materials

Over time, additional classes of materials can be added.

DOE can and should be creative and resourceful in finding additional resources beyond public funding for this project. Collaborations on both foundation AI models and scaling self-driving labs between DOE and private sector AI firms can be uniquely facilitated by DOE’s new Foundation for Energy Security and Innovation (FESI), a private foundation created by DOE to support scientific fellowships, public-private partnerships, and other key mission-related initiatives.

Yes. Some private firms have recently demonstrated the promise. In late 2023, Google DeepMind unveiled GNoME, a materials science model that identified thousands of new potential materials (though they need to be experimentally validated). Microsoft’s MatterGen model pushed in a similar direction. Both models were developed in collaboration with DOE National Labs (Lawrence Berkeley in the case of DeepMind, and Pacific Northwest in the case of Microsoft).

America’s Teachers Innovate: A National Talent Surge for Teaching in the AI Era

Thanks to Melissa Moritz, Patricia Saenz-Armstrong, and Meghan Grady for their input on this memo.

Teaching our young children to be productive and engaged participants in our society and economy is, alongside national defense, the most essential job in our country. Yet the competitiveness and appeal of teaching in the United States have plummeted over the past decade. At least 55,000 teaching positions went unfilled this year, and long-term shortages are set to double to 100,000 annually. Moreover, teachers report little confidence both in their own ability to teach the critical digital skills needed for an AI-enabled future and in the profession at large. Efforts in economic peer countries such as Canada or China demonstrate that reversing this trend is feasible. The new Administration should announce a national talent surge to identify and scale innovative teacher preparation models and recruit candidates into them, expand teacher leadership opportunities, and boost the profession’s prestige. “America’s Teachers Innovate” is an eight-part executive action plan to be coordinated by the White House Office of Science and Technology Policy (OSTP), with implementation support through GSA’s Challenge.Gov and accompanied by new competitive priorities in existing National Science Foundation (NSF), Department of Education (ED), Department of Labor (DoL), and Department of Defense Education Activity (DoDEA) programs.

Challenge and Opportunity

Artificial Intelligence may add an estimated $2.6 trillion to $4.4 trillion annually to the global economy. Yet if the U.S. cannot give its population the training needed to leverage these technologies effectively, it may watch a majority of this wealth flow to other countries over the next few decades while American workers are automated out of work by, rather than empowered by, AI deployment within their sectors. The students who gain the digital, data, and AI foundations to work in tandem with these systems (currently only 5% of graduating high school students in the U.S.) will fare better in a modern job market than the majority who lack them. Among both countries and communities, the AI skills gap will supercharge existing digital divides and dramatically compound economic inequality.

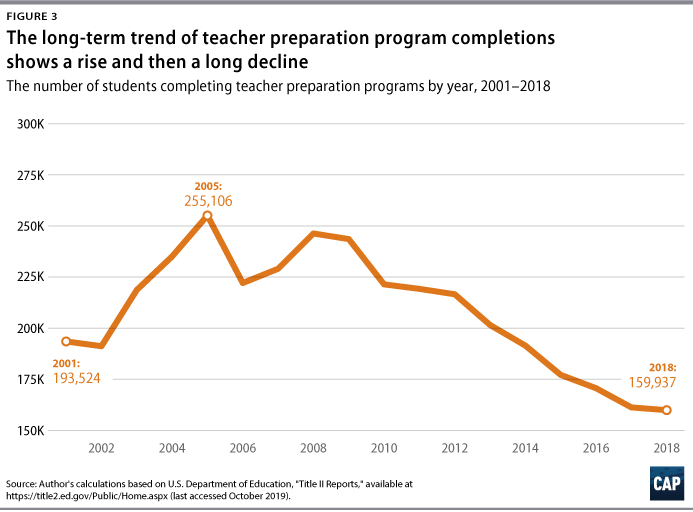

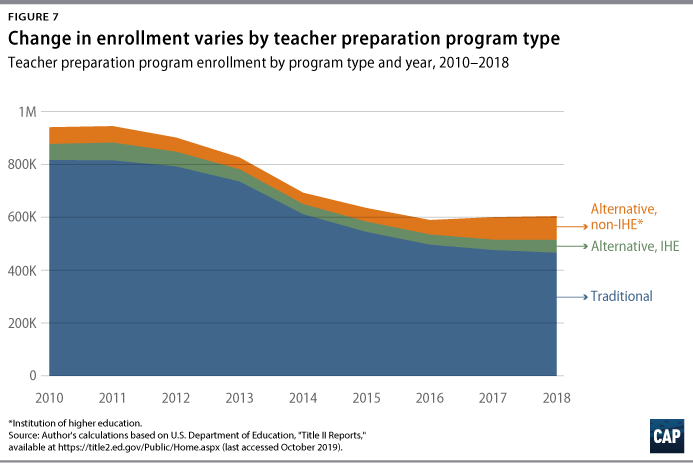

China, India, Germany, Canada, and the U.K. have all made investments to dramatically reshape the student experience for the world of AI and train teachers to educate a modern, digitally prepared workforce. While the U.S. made early research and development investments in computer science and data science education through the National Science Foundation, we have no teacher workforce ready to implement these innovations in curriculum or educational technology. The number of individuals completing a teacher preparation program has fallen 25% over the past decade. Long-term forecasts suggest shortages of at least 100,000 teachers annually; teachers themselves are discouraging others from joining their profession (especially in STEM); and preparing to teach digital skills such as computer science was the least popular option for prospective educators to pursue. In 2022, even Harvard discontinued its Undergraduate Teacher Education Program completely, citing low interest and enrollment numbers. There is still consistent evidence that young people and even current professionals remain interested in teaching as a possible career, but only if we create the conditions to translate that interest into action. U.S. policymakers have a narrow window to leverage the strong interest in AI to energize the education workforce and ensure our future graduates are globally competitive for the digital frontier.

Plan of Action

America’s teaching profession needs a coordinated national strategy to reverse decades of decline and concurrently reinvigorate the sector for a new (and digital) industrial revolution now moving at an exponential pace. Key levers for this work include expanding the number of leadership opportunities for educators; identifying and scaling successful evidence-based models such as UTeach, residency-based programs, or National Writing Project’s peer-to-peer training sites; scaling registered apprenticeship programs or Grow Your Own programs along with the nation’s largest teacher colleges; and leveraging the platform of the President to boost recognition and prestige of the teaching profession.

The White House Office of Science and Technology Policy (OSTP) should coordinate a set of Executive Actions within the first 100 days of the next administration, including:

Recommendation 1. Launch a Grand Challenge for AI-Era Teacher Preparation

Create a national challenge via www.Challenge.Gov to identify the most innovative teacher recruitment, preparation, and training programs to prepare and retain educators for teaching in the era of AI. Challenge requirements should be minimal and flexible to encourage innovation, but could include the creation of teacher leadership opportunities, peer-network sites for professionals, and digital classroom resource exchanges. A challenge prompt could replicate the model of 100Kin10 or even leverage the existing network.

Recommendation 2. Update Areas of National Need

To enable existing scholarship programs to support AI readiness, the U.S. Department of Education should add “Artificial Intelligence,” “Data Science,” and “Machine Learning” to the Graduate Assistance in Areas of National Need (GAANN) list of Areas of National Need under the Computer Science and Mathematics categories. This would expand eligibility for master’s-level scholarships for teachers to pursue additional study in these critical areas. The number of higher education programs in Data Science education has significantly increased in the past five years, with a small but increasing number of emerging Artificial Intelligence programs.

Recommendation 3. Expand and Simplify Key Programs for Technology-Focused Training

The President should direct the U.S. Secretary of Education, the National Science Foundation Director, and the Department of Defense Education Activity Director to add “Artificial Intelligence, Data Science, Computer Science” as competitive priorities where appropriate for existing grant or support programs that directly influence the national direction of teacher training and preparation, including the Teacher Quality Partnerships (ED) program, SEED (ED), the Hawkins Program (ED), the STEM Corps (NSF), the Robert Noyce Scholarship Program (NSF), the DoDEA Professional Learning Division, and the Apprenticeship Building America grants from the U.S. Department of Labor. These terms could be added under prior “STEM” competitive priorities, such as the STEM Education Acts of 2014 and 2015 for “Computer Science,” and framed under “Digital Frontier Technologies.”

Additionally, the U.S. Department of Education should increase funding allocations for ESSA Evidence Tier 4 (“Demonstrates a Rationale”) to expand the flexibility of existing grant programs to align with emerging technology proposals. Because AI systems update quickly, few applicants have the opportunity to conduct rigorous evaluation studies or randomized controlled trials (RCTs) within the timespan of an ED grant program application window.

The National Science Foundation should also relaunch the 2014 Application Burden Taskforce to identify the greatest barriers in NSF application processes, update digital review infrastructure, review or modernize application criteria to recognize present-day technology realities, and set a two-year deadline for recommendations to be implemented agency-wide. This would help ensure that earlier-stage projects and non-traditional applicants (e.g. nonprofits, local education agencies, individual schools) can realistically pursue NSF funding. Recommendations may include a “tiered” approach to requirements based on grant size or applying institution.

Recommendation 4. Convene 100 Teacher Prep Programs for Action

The White House Office of Science & Technology Policy (OSTP) should convene a nationally representative group of colleges of education and teacher preparation programs to 1) catalyze modernization of program experiences and training content, and 2) develop recruitment strategies to revitalize interest in the teaching profession. A White House summit would help call attention to falling enrollment in teacher preparation programs; highlight innovative training models to recruit and retrain additional graduates; and create a deadline for states, districts, and private philanthropy to invest in teacher preparation programs. By leveraging the convening power of the White House, the Administration could make a profound impact on the teacher preparation ecosystem.

The administration should also consider announcing additional incentives or planning grants for regional or state-level teams to 1) catalyze K-12 educator Registered Apprenticeship Program (RAP) applications to the Department of Labor and 2) enable teacher preparation programs to modernize by incorporating introductory computer science, data science, artificial intelligence, cybersecurity, and other “digital frontier skills,” via the grant programs in Recommendation 3 or via expanded eligibility for the Higher Education Act.

Recommendation 5. Launch a Digital “White House Data Science Fair”

Despite a bipartisan commitment to continue the annual White House Science Fair, the tradition ended in 2017. OSTP and the Committee on Science, Technology, Engineering, and Math Education (Co-STEM) should resume the White House Science Fair and add a national “White House Data Science Fair,” a digital rendition of the Fair for the AI era. K-12 and undergraduate student teams would have the opportunity to submit creative or customized applications of AI tools, machine-learning projects (similar to Kaggle competitions), applications of robotics, and data analysis projects centered on their own communities or global problems (climate change, global poverty, housing, etc.), under the mentorship of K-12 teachers. Similar to the original White House Science Fair, this recognition could draw from existing student competitions that have arisen over the past few years, including in Cleveland, Seattle, and nationally via AP Courses and out-of-school contexts. Partner Federal agencies should be encouraged to contribute their own educational resources and datasets through FC-STEM coordination, enabling students to work on a variety of topics across domains or interests (e.g. NASA, the U.S. Census, Bureau of Labor Statistics, etc.).

Recommendation 6. Announce a National Teacher Talent Surge at the State of the Union

The President should launch a national teacher talent surge under the banner of “America’s Teachers Innovate,” a multi-agency communications campaign to reinvigorate the teaching profession and increase the number of teachers completing undergraduate or graduate degrees each year by 100,000. This announcement would follow the First 100 Days in office, allowing Recommendations 1-5 to be implemented and/or planned. The “America’s Teachers Innovate” campaign would include:

- A national commitments campaign for investing in the future of American teaching, facilitated by the White House, involving State Education Agencies (SEAs) and Governors, the 100 largest school districts, industry, and philanthropy. Many U.S. education organizations are ready to take action. Commitments could include targeted scholarships to incentivize students to enter the profession, new grant programs for summer professional learning, and restructuring teacher payroll so that teaching becomes a salaried annual job instead of one with nine-month compensation (see Discover Bank: “Surviving the Summer Paycheck Gap”).

- Expansion of the Presidential Awards for Excellence in Mathematics and Science Teaching (PAEMST) program to include Data Science, Cybersecurity, AI, and other emerging technology areas, or a renaming of the program for wider eligibility across today’s STEM umbrella. Additionally, the PAEMST program should resume in-person award ceremonies, which were discontinued during COVID disruptions and have since been replaced by press releases alone. Several national STEM organizations and teacher associations have requested that these events return.

- Student loan relief through the Teacher Loan Forgiveness (TLF) program for teachers who commit to five or more years in the classroom. New research suggests the lifetime return of college for education majors is near zero, higher only than that of a degree in fine arts. The administration should, via executive order, add “computer science, data science, and artificial intelligence” to the list of subjects for which “Highly Qualified Teachers” receive $17,500 in loan forgiveness.

- An annual recruitment drive at college campus job fairs, facilitated directly under the banner of the White House Office of Science & Technology Policy (OSTP), to raise awareness of the aforementioned programs among undergraduate students at formative career choice-points.

Recommendation 7. Direct IES and BLS to Support Teacher Shortage Forecasting Infrastructure