A Holistic Framework for Measuring and Reporting AI’s Impacts to Build Public Trust and Advance AI

As AI becomes more capable and integrated throughout the United States economy, its growing demand for energy, water, land, and raw materials is driving significant economic and environmental costs, from increased air pollution to higher costs for ratepayers. A recent report projects that data centers could consume up to 12% of U.S. electricity by 2028, underscoring the urgent need to assess the tradeoffs of continued expansion. To craft effective, sustainable resource policies, we need clear standards for estimating data centers’ true energy needs and for measuring and reporting the specific AI applications driving their resource consumption. Local and state-level bills calling for more oversight of utility rates and impacts to ratepayers have received bipartisan support, and this proposal builds on that momentum.

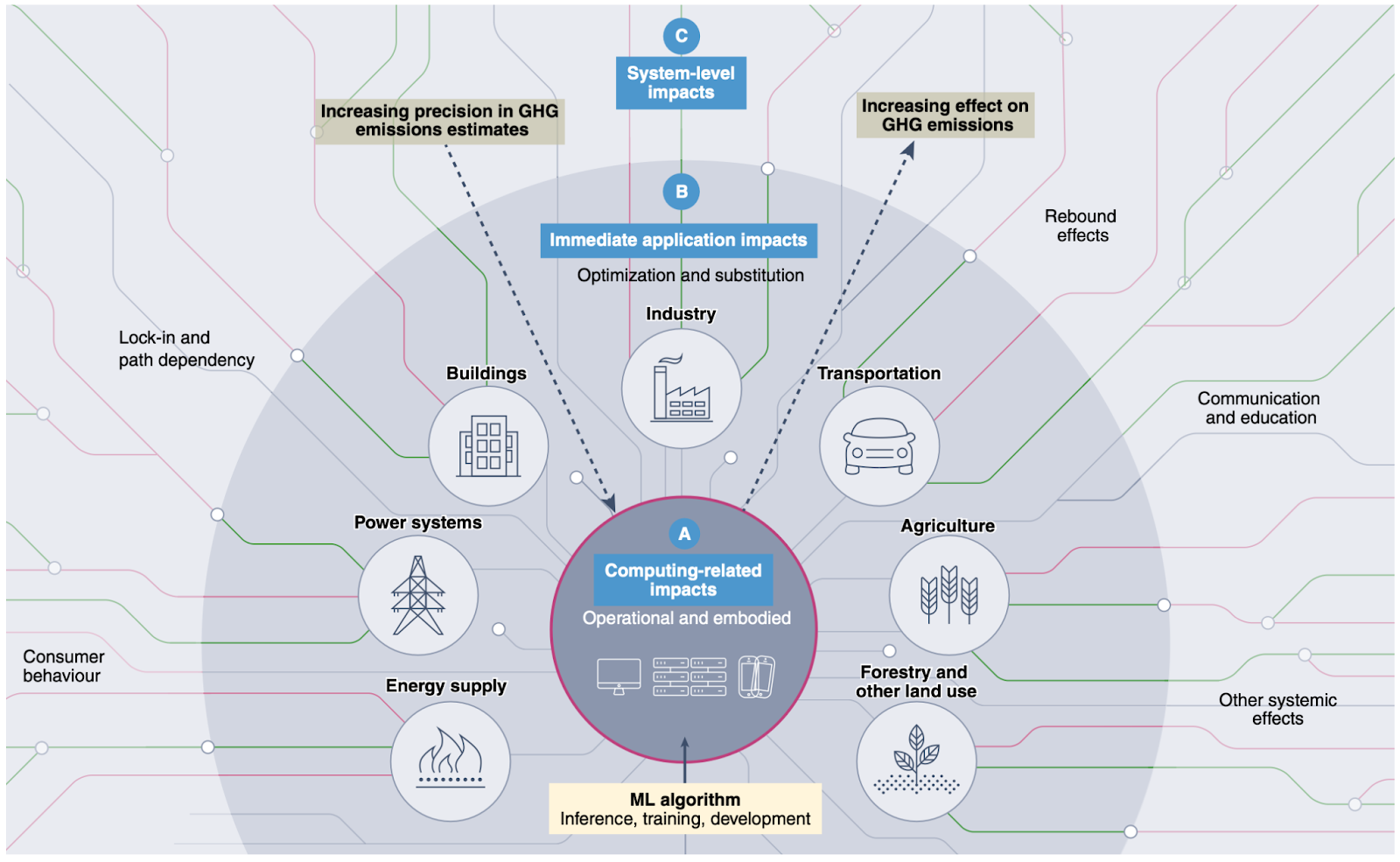

In this memo, we draw on research proposing a holistic evaluation framework for characterizing AI’s environmental impacts, which establishes three categories of impacts arising from AI: (1) Computing-related impacts; (2) Immediate application impacts; and (3) System-level impacts. Concerns around AI’s computing-related impacts, e.g. energy and water use due to AI data centers and hardware manufacturing, have become widely known, and corresponding policy is starting to be put into place. However, AI’s immediate application and system-level impacts, which arise from the specific use cases to which AI is applied and the broader socio-economic shifts resulting from its use, remain poorly understood, despite their greater potential for societal benefit or harm.

To give policymakers visibility into the full range of AI’s environmental impacts, we recommend that the National Institute of Standards and Technology (NIST) oversee the creation of frameworks to measure those impacts. Frameworks should rely on quantitative measurements of the computing- and application-related impacts of AI and qualitative data based on engagements with the stakeholders most affected by the construction of data centers. NIST should produce these frameworks based on convenings that include academic researchers, corporate governance personnel, developers, utility companies, vendors, and data center owners in addition to civil society organizations. Participatory workshops will yield new guidelines, tools, methods, protocols and best practices to facilitate the evolution of industry standards for the measurement of the social costs of AI’s energy infrastructures.

Challenge and Opportunity

Resource consumption associated with AI infrastructures is expanding quickly, and this has negative impacts, including asthma from air pollution associated with diesel backup generators, noise pollution, light pollution, excessive water and land use, and financial impacts to ratepayers. A lack of transparency regarding these outcomes, and of public participation to minimize them, risks losing the public’s trust, which in turn will inhibit the beneficial uses of AI. Despite a huge amount of capital expenditure and massive forecasted growth in power consumption, there is still no scientific consensus on how to measure AI’s environmental impacts with respect to data centers and their related negative externalities.

A holistic evaluation framework for assessing AI’s broader impacts requires empirical evidence, both qualitative and quantitative, to influence future policy decisions and establish more responsible, strategic technology development. Focusing narrowly on carbon emissions or energy consumption arising from AI’s computing-related impacts is not sufficient. Measuring AI’s application and system-level impacts will help policymakers consider multiple data streams, including electricity transmission, water systems, and land use, in tandem with downstream economic and health impacts.

Regulatory and technical attempts so far to develop scientific consensus and international standards around the measurement of AI’s environmental impacts have focused on documenting AI’s computing-related impacts, such as energy use, water consumption, and carbon emissions required to build and use AI. Measuring and mitigating AI’s computing-related impacts is necessary, and has received attention from policymakers (e.g. the introduction of the AI Environmental Impacts Act of 2024 in the U.S., provisions for environmental impacts of general-purpose AI in the EU AI Act, and data center sustainability targets in the German Energy Efficiency Act). However, research by Kaack et al. (2022) highlights that impacts extend beyond computing. AI’s application impacts, which arise from the specific use cases for which AI is deployed (e.g. AI’s enabled emissions, such as the application of AI to oil and gas drilling), have much greater potential scope for positive or negative impacts than AI’s computing impacts alone, depending on how AI is used in practice. Finally, AI’s system-level impacts, which include even broader, cascading social and economic impacts associated with AI energy infrastructures, such as increased pressure on local utility infrastructure leading to increased costs to ratepayers, or health impacts to local communities due to increased air pollution, have the greatest potential for positive or negative impacts, while being the most challenging to measure and predict. See Figure 1 for an overview.

Figure 1. From Kaack et al. (2022). Effectively understanding and shaping AI’s impacts requires going beyond impacts arising from computing alone to consider and measure impacts arising from AI’s uses (e.g. in optimizing power systems or agriculture) and how AI’s deployment throughout the economy leads to broader systemic shifts, such as changes in consumer behavior.

Effective policy recommendations require more standardized measurement practices, a point raised by the Government Accountability Office’s recent report on AI’s human and environmental effects, which explicitly calls for increasing corporate transparency and innovation around technical methods for improved data collection and reporting. But data should also include multi-stakeholder engagement to ensure there are more holistic evaluation frameworks that meet the needs of specific localities, including state and local government officials, businesses, utilities, and ratepayers. Furthermore, while states and municipalities are creating bills calling for more data transparency and responsibility, including in California, Indiana, Oregon, and Virginia, the lack of federal policy means that data center owners may move their operations to states that have fewer protections in place and similar levels of existing energy and data transmission infrastructure.

States are also grappling with the potential economic costs of data center expansion. Policy Matters Ohio found that tax breaks for data center owners are hurting tax revenue streams that should be used to fund public services. In Michigan, tax breaks for data centers are increasing the cost of water and power for the public while undermining the state’s climate goals. Some Georgia Republicans have stated that data center companies should “pay their way.” While there are arguments that data centers can provide useful infrastructure, connectivity, and even revenue for localities, a recent report shows that at least ten states each lost over $100 million a year in revenue to data centers because of tax breaks. The federal government can help create standards that allow stakeholders to balance the potential costs and benefits of data centers and related energy infrastructures. We now have an urgent need to increase transparency and accountability through multi-stakeholder engagement, maximizing economic benefits while reducing waste.

Despite the high economic and policy stakes, critical data needed to assess the full impacts of AI and data center expansion, both costs and benefits, remains fragmented, inconsistent, or entirely unavailable. For example, researchers have found that state-level subsidies for data center expansion may have negative impacts on state and local budgets, but this data has not been collected and analyzed across states because not all states publicly release data about data center subsidies. Other impacts, such as the use of agricultural land or public parks for transmission lines and data center siting, must be studied at a local and state level, and the various social repercussions require engagement with the communities who are likely to be affected. Similarly, estimates of the economic upsides of AI vary widely: the estimated increase in U.S. labor productivity due to AI adoption ranges from 0.9% to 15%, due in large part to a lack of relevant data on AI uses and their economic outcomes that could be used to inform modeling assumptions.

Data centers are highly geographically clustered in the United States, more so than other industrial facilities such as steel plants, coal mines, factories, and power plants (Fig. 4.12, IEA World Energy Outlook 2024). This means that certain states and counties are experiencing disproportionate burdens associated with data center expansion. These burdens have led to calls for data center moratoriums or for the cessation of other energy development, including in states like Indiana. Improved measurement and transparency can help planners avoid overly burdensome concentrations of data center infrastructure, reducing local opposition.

With a rush to build new data center infrastructure, states and localities must also face another concern: overbuilding. For example, Microsoft recently put a hold on parts of its data center contract in Wisconsin and paused another in central Ohio, along with contracts in several other locations across the United States and internationally. These situations often stem from inaccurate demand forecasting, prompting utilities to undertake costly planning and infrastructure development that ultimately goes unused. With better measurement and transparency, policymakers will have more tools to prepare for future demands, avoiding the negative social and economic impacts of infrastructure projects that are started but never completed.

While there have been significant developments in measuring the direct, computing-related impacts of AI data centers, public participation is needed to fully capture many of their indirect impacts. Data centers can be constructed so they are more beneficial to communities while mitigating their negative impacts, e.g. by recycling data center heat, and they can also be constructed to be more flexible by not using grid power during peak times. However, this requires collaborative innovation and cross-sector translation, informed by relevant data.

Plan of Action

Recommendation 1. Develop a database of AI uses and framework for reporting AI’s immediate applications in order to understand the drivers of environmental impacts.

The first step towards informed decision-making around AI’s social and environmental impacts is understanding what AI applications are actually driving data center resource consumption. This will allow specific deployments of AI systems to be linked upstream to compute-related impacts arising from their resource intensity, and downstream to impacts arising from their application, enabling estimation of immediate application impacts.

The AI company Anthropic demonstrated a proof-of-concept categorizing queries to their Claude language model under the O*NET database of occupations. However, O*NET was developed in order to categorize job types and tasks with respect to human workers, which does not exactly align with current and potential uses of AI. To address this, we recommend that NIST work with relevant collaborators such as the U.S. Department of Labor (responsible for developing and maintaining the O*NET database) to develop a database of AI uses and applications, similar to and building on O*NET, along with guidelines and infrastructure for reporting data center resource consumption corresponding to those uses. This data could then be used to identify the particular AI tasks that are key drivers of resource consumption.

Any entity deploying a public-facing AI model (that is, one that can produce outputs and/or receive inputs from outside its local network) should be able to easily document and report its use case(s) within the NIST framework. A centralized database will allow for collation of relevant data across multiple stakeholders including government entities, private firms, and nonprofit organizations.

Gathering data of this nature may require the reporting entity to perform analyses of sensitive user data, such as categorizing individual user queries to an AI model. However, data is to be reported in aggregate percentages with respect to use categories without attribution to or listing of individual users or queries. This type of analysis and data reporting is well within the scope of existing, commonplace data analysis practices. As with existing AI products that rely on such analyses, reporting entities are responsible for performing that analysis in a way that appropriately safeguards user privacy and data protection in accordance with existing regulations and norms.
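As a minimal sketch of what aggregate reporting could look like in practice, the Python example below converts a list of already-categorized queries into percentage shares per use category, with no user or query identifiers in the output. The function name, the category labels, and the `min_count` threshold (which folds very small categories into a single bucket so that rare uses cannot be traced back to individuals) are illustrative assumptions, not part of any existing NIST framework or reporting standard.

```python
from collections import Counter


def aggregate_use_shares(categories, min_count=10):
    """Return the percentage share of queries per use category.

    `categories` holds one category label per query (hypothetical labels).
    Categories with fewer than `min_count` queries are folded into a single
    "Other (suppressed)" bucket; this threshold is an illustrative assumption.
    """
    counts = Counter(categories)
    total = sum(counts.values())
    if total == 0:
        return {}

    folded = Counter()
    for category, count in counts.items():
        key = category if count >= min_count else "Other (suppressed)"
        folded[key] += count

    # Only aggregate percentages leave this function, never individual queries.
    return {key: round(100 * count / total, 1) for key, count in folded.items()}


# Hypothetical example: 100 categorized queries.
example_labels = ["coding"] * 60 + ["writing"] * 35 + ["legal advice"] * 5
example_shares = aggregate_use_shares(example_labels)
print(example_shares)
```

A reporting entity would run its own categorization step upstream of this function; only the resulting shares would be submitted to the centralized database.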

Recommendation 2. NIST should create an independent consortium to develop a system-level evaluation framework for AI’s environmental impacts, while embedding robust public participation in every stage of the work.

Currently, the social costs of AI’s system-level impacts—the broader social and economic implications arising from AI’s development and deployment—are not being measured or reported in any systematic way. These impacts fall heaviest on the local communities that host the data centers powering AI: the financial burden on ratepayers who share utility infrastructure, the health effects of pollutants from backup generators, the water and land consumed by new facilities, and the wider economic costs or benefits of data-center siting. Without transparent metrics and genuine community input, policymakers cannot balance the benefits of AI innovation against its local and regional burdens. Building public trust through public participation is key when it comes to ensuring United States energy dominance and national security interests in AI innovation, themes emphasized in policy documents produced by the first and second Trump administrations.

To develop evaluation frameworks in a way that is both scientifically rigorous and broadly trusted, NIST should stand up an independent consortium via a Cooperative Research and Development Agreement (CRADA). A CRADA allows NIST to collaborate rapidly with non-federal partners while remaining outside the scope of the Federal Advisory Committee Act (FACA), and has been used, for example, to convene the NIST AI Safety Institute Consortium. Membership will include academic researchers, utility companies and grid operators, data-center owners and vendors, state, local, Tribal, and territorial officials, technologists, civil-society organizations, and frontline community groups.

To ensure robust public engagement, the consortium should consult closely with FERC’s Office of Public Participation (OPP)—drawing on OPP’s expertise in plain-language outreach and community listening sessions—and with other federal entities that have deep experience in community engagement on energy and environmental issues. Drawing on these partners’ methods, the consortium will convene participatory workshops and listening sessions in regions with high data-center concentration—Northern Virginia, Silicon Valley, Eastern Oregon, and the Dallas–Fort Worth metroplex—while also making use of online comment portals to gather nationwide feedback.

Guided by the insights from these engagements, the consortium will produce a comprehensive evaluation framework that captures metrics falling outside the scope of direct emissions alone. These system-level metrics could encompass (1) the number, type, and duration of jobs created; (2) the effects of tax subsidies on local economies and public services; (3) the placement of transmission lines and associated repercussions for housing, public parks, and agriculture; (4) the use of eminent domain for data-center construction; (5) water-use intensity and competing local demands; and (6) public-health impacts from air, light, and noise pollution. NIST will integrate these metrics into standardized benchmarks and guidance.

Consortium members will attend public meetings, engage directly with community organizations, deliver accessible presentations, and create plain-language explainers so that non-experts can meaningfully influence the framework’s design and application. The group will also develop new guidelines, tools, methods, protocols, and best practices to facilitate industry uptake and to evolve measurement standards as technology and infrastructure grow.

We estimate a cost of approximately $5 million over two years to complete the work outlined in Recommendations 1 and 2, covering staff time, travel to at least twelve data-center or energy-infrastructure sites across the United States, participant honoraria, and research materials.

Recommendation 3. Mandate regular measurement and reporting on relevant metrics by data center operators.

Voluntary reporting is the status quo, e.g. via corporate Environmental, Social, and Governance (ESG) reports, but voluntary reporting has so far been insufficient for gathering necessary data. For example, while the technology firm OpenAI, best known for their highly popular ChatGPT generative AI model, holds a significant share of the search market and likely a corresponding share of the environmental and social impacts arising from the data centers powering their products, OpenAI chooses not to publish ESG reports or data in any other format regarding their energy consumption or greenhouse gas (GHG) emissions. In order to collect sufficient data at the appropriate level of detail, reporting must be mandated at the local, state, or federal level. At the state level, California’s Climate Corporate Data Accountability Act (SB-253, SB-219), administered by the California Air Resources Board (CARB), requires that large companies operating within the state report their GHG emissions in accordance with the GHG Protocol.

At the federal level, the EU’s Corporate Sustainability Reporting Directive (CSRD), which requires firms operating within the EU to report a wide variety of data related to environmental sustainability and social governance, could serve as a model for regulating companies operating within the U.S. The Environmental Protection Agency’s (EPA) GHG Reporting Program already requires emissions reporting by operators and suppliers associated with large GHG emissions sources, and the Energy Information Administration (EIA) collects detailed data on electricity generation and fuel consumption through Forms 860 and 923. With respect to data centers specifically, the Department of Energy (DOE) could require that developers who are granted rights to build AI data center infrastructure on public lands perform the relevant measurement and reporting. More broadly, reporting could be a requirement to qualify for any local, state, or federal funding or assistance provided to support buildout of U.S. AI infrastructure.

Recommendation 4. Incorporate measurements of social cost into AI energy and infrastructure forecasting and planning.

There is a huge range in estimates of future data center energy use, largely driven by uncertainty around the nature of demands from AI. This uncertainty stems in part from a lack of historical and current data on which AI use cases are most energy intensive and how those workloads are evolving over time. The extent to which challenges in bringing new resources online, such as hardware production limits or bottlenecks in permitting, will influence growth rates also remains unclear. These uncertainties are even more significant when it comes to the holistic impacts (i.e. those beyond direct energy consumption) described above, making it challenging to balance costs and benefits when planning for future demands from AI.

To address these issues, accurate forecasting of demand for energy, water, and other limited resources must incorporate data gathered through the holistic measurement frameworks described above. Further, the forecasting of broader system-level impacts must be incorporated into decision-making around investment in AI infrastructure. Forecasting needs to go beyond just energy use: models should also predict energy and related infrastructure needs for transmission, the social cost of carbon in terms of pollution, the effects on ratepayers, and the energy demands of chip production.

We recommend that agencies already responsible for energy-demand forecasting—such as the Energy Information Administration at the Department of Energy—integrate, in line with the NIST frameworks developed above, data on the AI workloads driving data-center electricity use into their forecasting models. Agencies specializing in social impacts, such as the Department of Health and Human Services in the case of health impacts, should model social impacts and communicate those to EIA and DOE for planning purposes. In parallel, the Federal Energy Regulatory Commission (FERC) should update its new rule on long-term regional transmission planning, to explicitly include consideration of the social costs corresponding to energy supply, demand and infrastructure retirement/buildout across different scenarios.

Recommendation 5. Transparently use federal, state, and local incentive programs to reward data-center projects that deliver concrete community benefits.

Incentive programs should be tied to holistic estimates of costs and benefits collected under the frameworks above, not based purely on promises. When considering using incentive programs, policymakers should ask questions such as: How many jobs are created by data centers, how long do those jobs last, and do they go to local residents? What tax revenue do data centers generate for municipalities or states, compared with the subsidies data center owners receive? What are the social impacts of using agricultural land or public parks for data center construction or transmission lines? What are the impacts to air quality and other public health issues? Do data centers deliver benefits like load flexibility and sharing of waste heat?

Grid operators (Regional Transmission Organizations [RTOs] and Independent System Operators [ISOs]) can leverage interconnection queues to incentivize data center operators to demonstrate that they have sufficiently considered the impacts to local communities when proposing a new site. FERC recently approved reforms to the processing of the interconnection request queue, allowing RTOs to implement a “first-ready first-served” approach rather than a first-come first-served approach, wherein proposed projects can be fast-tracked based on their readiness. A similar approach could be used by RTOs to fast-track proposals that include a clear plan for how they will benefit local communities (e.g. through load flexibility, heat reuse, and clean energy commitments), grounded in careful impact assessment.
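To make the difference between the two queueing approaches concrete, the Python sketch below orders a small, hypothetical interconnection queue both ways. The record fields, the project names, and in particular the use of a community-benefit plan as a tiebreaker among ready projects are illustrative assumptions; they are not drawn from FERC rules or from any RTO's actual process.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class InterconnectionRequest:
    """Hypothetical queue entry; all field names are assumptions."""
    project: str
    submitted: date
    ready: bool                       # e.g. site control and permits in hand
    has_community_benefit_plan: bool  # e.g. load flexibility, heat reuse


def first_come_first_served(requests):
    """Order strictly by submission date."""
    return sorted(requests, key=lambda r: r.submitted)


def first_ready_first_served(requests):
    """Order ready projects first; among equally ready projects, those with a
    community-benefit plan go first (an assumed extension), then by date."""
    return sorted(
        requests,
        key=lambda r: (not r.ready, not r.has_community_benefit_plan, r.submitted),
    )


queue = [
    InterconnectionRequest("A", date(2024, 1, 1), ready=False, has_community_benefit_plan=False),
    InterconnectionRequest("B", date(2024, 3, 1), ready=True, has_community_benefit_plan=False),
    InterconnectionRequest("C", date(2024, 6, 1), ready=True, has_community_benefit_plan=True),
]

print([r.project for r in first_come_first_served(queue)])   # ['A', 'B', 'C']
print([r.project for r in first_ready_first_served(queue)])  # ['C', 'B', 'A']
```

In this sketch the benefit plan only reorders projects that are equally ready; a grid operator could instead weight it more or less heavily, which is a policy choice rather than a technical one.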

State-level incentives could also be introduced in states that already have significant infrastructure. Such incentives could be determined in collaboration with the National Governors Association, which has been balancing AI-driven energy needs with state climate goals.

Conclusion

Data centers have an undeniable impact on energy infrastructures and the communities living close to them. This impact will continue to grow alongside AI infrastructure investment, which is expected to skyrocket. It is possible to shape a future where AI infrastructure can be developed sustainably, and in a way that responds to the needs of local communities. But more work is needed to collect the necessary data to inform government decision-making. We have described a framework for holistically evaluating the potential costs and benefits of AI data centers, and shaping AI infrastructure buildout based on those tradeoffs. This framework includes: establishing standards for measuring and reporting AI’s impacts, eliciting public participation from impacted communities, and putting gathered data into action to enable sustainable AI development.

This memo is part of our AI & Energy Policy Sprint, a policy project to shape U.S. policy at the critical intersection of AI and energy.

Data centers are highly spatially concentrated largely due to reliance on existing energy and data transmission infrastructure; it is more cost-effective to continue building where infrastructure already exists, rather than starting fresh in a new region. As long as the cost of performing the proposed impact assessment and reporting in established regions is less than that of the additional overhead of moving to a new region, data center operators are likely to comply with regulations in order to stay in regions where the sector is established.

Spatial concentration of data centers also arises due to the need for data center workloads with high data transmission requirements, such as media streaming and online gaming, to have close physical proximity to users in order to reduce data transmission latency. In order for AI to be integrated into these realtime services, data center operators will continue to need presence in existing geographic regions, barring significant advances in data transmission efficiency and infrastructure.

bad for national security and economic growth. So is infrastructure growth that harms the local communities in which it occurs.

Researchers from Good Jobs First have found that many states are in fact losing tax revenue to data center expansion: “At least 10 states already lose more than $100 million per year in tax revenue to data centers…” More data is needed to determine if data center construction projects coupled with tax incentives are economically advantageous investments on the parts of local and state governments.

The DOE is opening up federal lands in 16 locations to data center construction projects in the name of strengthening America’s energy dominance and ensuring America’s role in AI innovation. But national security concerns around data center expansion should also consider the impacts to communities who live close to data centers and related infrastructures.

Data centers themselves do not automatically ensure greater national security, especially because the critical minerals and hardware components of data centers depend on international trade and manufacturing. At present, the United States is not equipped to contribute the critical minerals and other materials needed to produce data centers, including GPUs and other components.

Federal policy ensures that states or counties do not become overburdened by data center growth and will help different regions benefit from the potential economic and social rewards of data center construction.

Developing federal standards around transparency helps individual states plan for data center construction, allowing for a high-level, comparative look at the energy demand associated with specific AI use cases. Federal intervention is also important because data centers in one state might have transmission lines running through a neighboring state, with resulting impacts that cross jurisdictions. There is a need for a national-level standard.

Producing reliable cost-benefit estimates is currently often extremely challenging. For example, while municipalities often expect there will be economic benefits attached to data centers and that data center construction will yield more jobs in the area, subsidies and short-term jobs in construction do not necessarily translate into economic gains.

To improve the ability of decision makers to do quality cost-benefit analysis, the independent consortium described in Recommendation 2 will examine both qualitative and quantitative data, including permitting histories, transmission plans, land use and eminent domain cases, subsidies, jobs numbers, and health or quality of life impacts in various sites over time. NIST will help develop standards in accordance with this data collection, which can then be used in future planning processes.

Further, there is customer interest in knowing their AI is being sourced from firms implementing sustainable and socially responsible practices. These efforts can be used in marketing communications and reported as a socially and environmentally responsible practice in ESG reports. This serves as an additional incentive for some data center operators to participate in voluntary reporting and maintain operations in locations with increased regulation.
