Automating Scientific Discovery: A Research Agenda for Advancing Self-Driving Labs

Despite significant advances in scientific tools and methods, the traditional, labor-intensive model of scientific research in materials discovery has seen little innovation. The reliance on highly skilled but underpaid graduate students as labor to run experiments hinders the labor productivity of our scientific ecosystem. An emerging technology platform known as Self-Driving Labs (SDLs), which use commoditized robotics and artificial intelligence for automated experimentation, presents a potential solution to these challenges.

SDLs are not just theoretical constructs but have already been implemented at small scales in a few labs. An ARPA-E-funded Grand Challenge could drive funding, innovation, and development of SDLs, accelerating their integration into the scientific process. A Focused Research Organization (FRO) can also help create more modular and open-source components for SDLs and can be funded by philanthropies or the Department of Energy’s (DOE) new foundation. With additional funding, DOE national labs can also establish user facilities for scientists across the country to gain more experience working with autonomous scientific discovery platforms. In an era of strategic competition, funding emerging technology platforms like SDLs is all the more important to help the United States maintain its lead in materials innovation.

Challenge and Opportunity

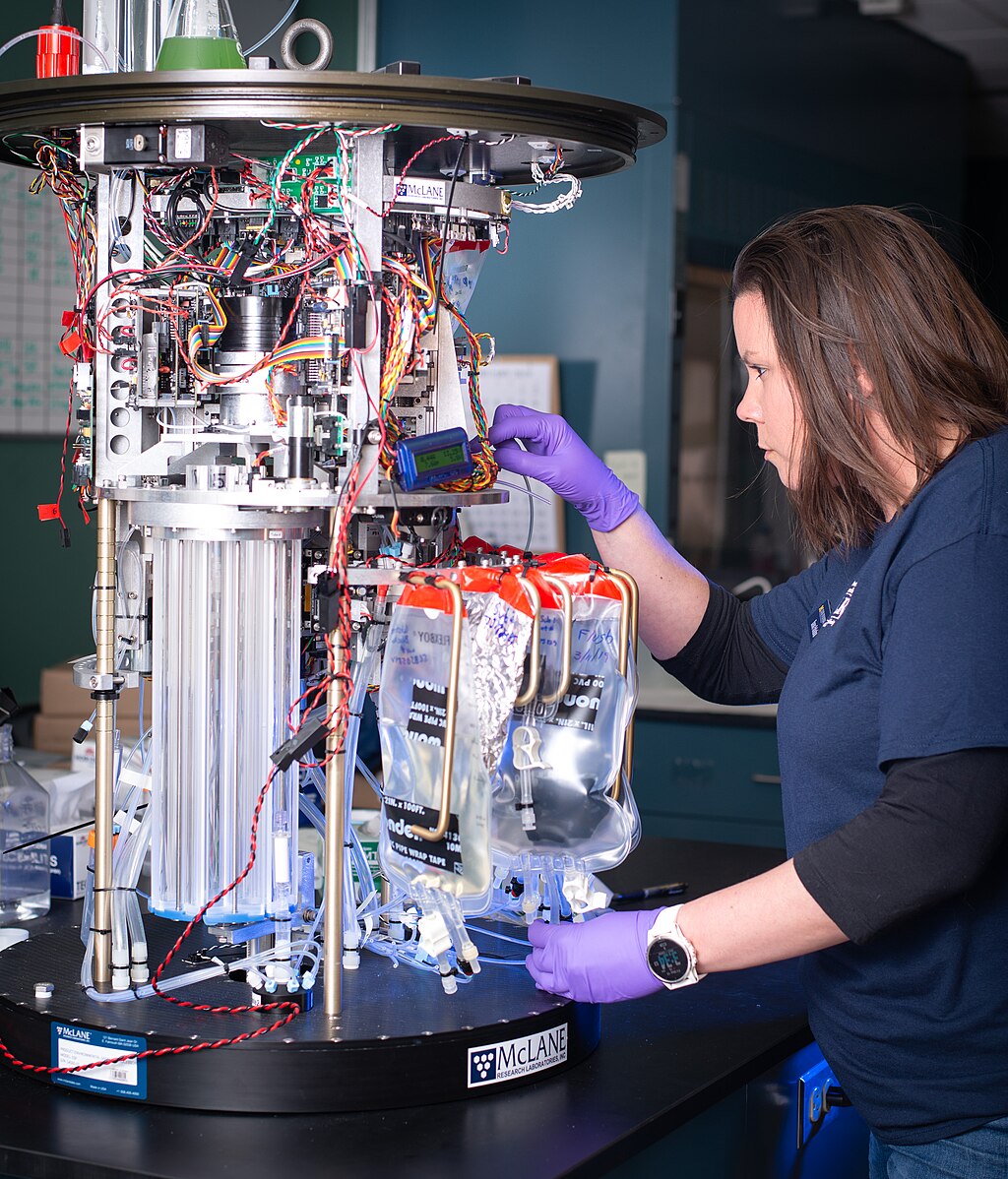

New scientific ideas are critical for technological progress. These ideas often form the seed insight to creating new technologies: lighter cars that are more energy efficient, stronger submarines to support national security, and more efficient clean energy like solar panels and offshore wind. While the past several centuries have seen incredible progress in scientific understanding, the fundamental labor structure of how we do science has not changed. Our microscopes have become far more sophisticated, yet the actual synthesizing and testing of new materials is still laboriously done in university laboratories by highly knowledgeable graduate students. The lack of innovation in how we historically use scientific labor pools may account for stagnation of research labor productivity, a primary cause of concerns about the slowing of scientific progress. Indeed, analysis of scientific literature suggests that scientific papers are becoming less disruptive over time and that new ideas are getting harder to find. The slowing rate of new scientific ideas, particularly in the discovery of new materials or advances in materials efficiency, poses a substantial risk, potentially costing billions of dollars in economic value and jeopardizing global competitiveness. However, incredible advances in artificial intelligence (AI) coupled with the rise of cheap but robust robot arms are leading to a promising new paradigm of material discovery and innovation: Self-Driving Labs. An SDL is a platform where material synthesis and characterization is done by robots, with AI models intelligently selecting new material designs to test based on previous experimental results. These platforms enable researchers to rapidly explore and optimize designs within otherwise unfeasibly large search spaces.
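The closed loop described above (an automated experiment, followed by a model choosing the next design from previous results) can be sketched in a few lines. This is a minimal illustration under stated assumptions, not any lab's actual system: `run_experiment` is a hypothetical stand-in for robotic synthesis and characterization, the design space is a single number between 0 and 1, and the selection rule is a simple explore/exploit heuristic rather than the Bayesian optimization used in the published work.

```python
import random


def run_experiment(design: float, rng: random.Random) -> float:
    """Hypothetical stand-in for robotic synthesis plus characterization.

    Returns a noisy "measured property" that peaks at design = 0.7.
    In a real SDL this call would drive hardware and instruments.
    """
    return -(design - 0.7) ** 2 + rng.gauss(0.0, 0.001)


def propose_design(history, rng, explore_prob=0.3, step=0.05):
    """Choose the next design to test based on previous results.

    Assumption: a simple explore/exploit rule, used here only to keep
    the sketch dependency-free. It is not Bayesian optimization.
    """
    if not history or rng.random() < explore_prob:
        # Explore: sample the design space uniformly.
        return rng.random()
    best_design, _ = max(history, key=lambda pair: pair[1])
    # Exploit: perturb the best design so far, clipped to [0, 1].
    return min(1.0, max(0.0, best_design + rng.gauss(0.0, step)))


def closed_loop(n_iterations=200, seed=0):
    """Run the propose -> experiment -> record loop; return the best result."""
    rng = random.Random(seed)
    history = []  # list of (design, measurement) pairs
    for _ in range(n_iterations):
        design = propose_design(history, rng)
        measurement = run_experiment(design, rng)
        history.append((design, measurement))
    return max(history, key=lambda pair: pair[1])


if __name__ == "__main__":
    best_design, best_value = closed_loop()
    print(f"best design: {best_design:.3f}, measured value: {best_value:.4f}")
```

The point of the sketch is the structure of the loop: no human chooses the next experiment, and every measurement feeds the next proposal. A real platform would replace both functions with hardware drivers and a more capable optimizer.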

Today, most material science labs are organized around a faculty member or principal investigator (PI), who manages a team of graduate students. Each graduate student designs experiments and hypotheses in collaboration with a PI, and then executes the experiment, synthesizing the material and characterizing its properties. Unfortunately, that last step is often laborious and the most time-consuming. This sequential approach to material discovery, where highly knowledgeable graduate students spend large portions of their time doing manual wet lab work, rate-limits the number of experiments and potential discoveries a given lab group can produce. SDLs can significantly improve the labor productivity of our scientific enterprise, freeing highly skilled graduate students from menial experimental labor to craft new theories or distill novel insights from autonomously collected data. Additionally, they yield more reproducible outcomes as experiments are run by code-driven motors, rather than by humans who may forget to include certain experimental details or have natural variations between procedures.

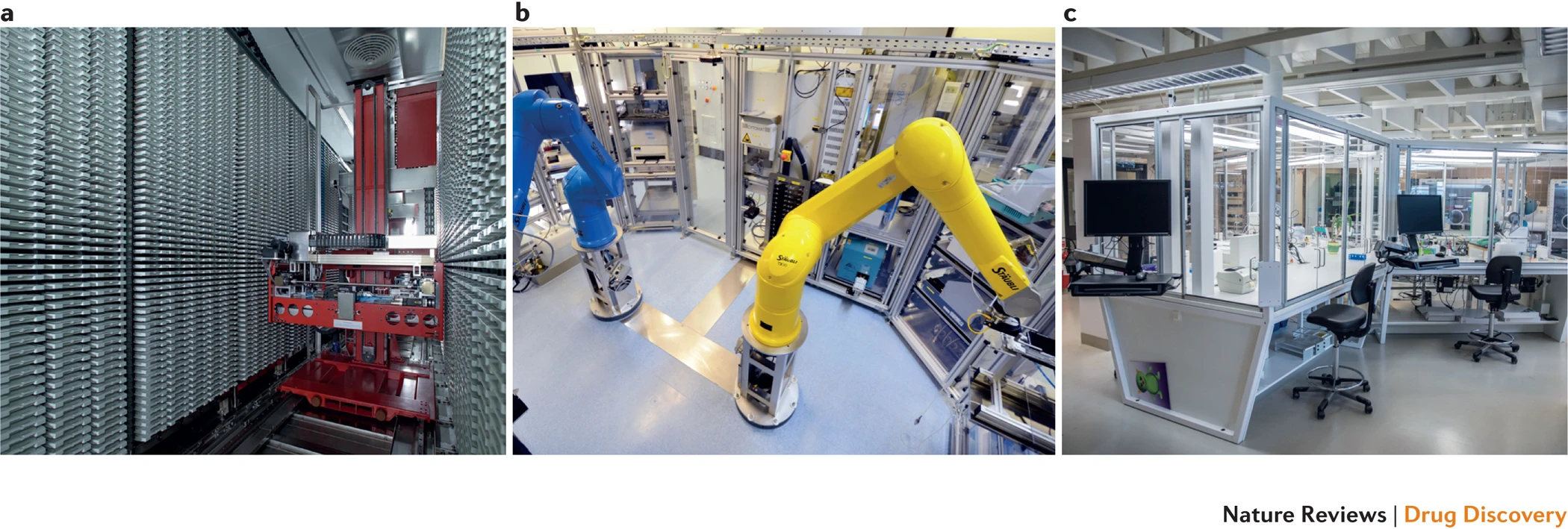

Self-Driving Labs are not a pipe dream. The biotech industry has spent decades developing advanced high-throughput synthesis and automation. For instance, while in the 1970s statins (one of the most successful cholesterol-lowering drug families) were discovered in part by a researcher testing 3800 cultures manually over a year, today, companies like AstraZeneca invest millions of dollars in automation and high-throughput research equipment (see figure 1). While drug and material discovery share some characteristics (e.g., combinatorially large search spaces and high impact of discovery), materials R&D has historically seen fewer capital investments in automation, primarily because it sits further upstream from where private investments anticipate predictable returns. There are, however, a few notable examples of SDLs being developed today. For instance, researchers at Boston University used a robot arm to test 3D-printed designs for uniaxial compression energy absorption, an important mechanical property for designing stronger structures in civil engineering and aerospace. A Bayesian optimizer was then used to iterate over 25,000 designs in a search space with trillions of possible candidates, which led to an optimized structure with the highest recorded mechanical energy absorption to date. Researchers at North Carolina State University used a microfluidic platform to autonomously synthesize >100 quantum dots, discovering formulations that were better than the previous state of the art in that material family.

These first-of-a-kind SDLs have shown exciting initial results demonstrating their ability to discover new material designs in a haystack of thousands to trillions of possible designs, which would be too large for any human researcher to grasp. However, SDLs are still an emerging technology platform. In order to scale up and realize their full potential, the federal government will need to make significant and coordinated research investments to derisk this materials innovation platform and demonstrate the return on capital before the private sector is willing to invest in it.

Other nations are beginning to recognize the importance of a structured approach to funding SDLs: University of Toronto’s Alan Aspuru-Guzik, a former Harvard professor who left the United States in 2018, has created an Acceleration Consortium to deploy these SDLs and recently received $200 million in research funding, Canada’s largest ever research grant. In an era of strategic competition and climate challenges, maintaining U.S. competitiveness in materials innovation is more important than ever. Building a strong research program to fund, build, and deploy SDLs in research labs should be a part of the U.S. innovation portfolio.

Plan of Action

While several labs in the United States are working on SDLs, they have all received small, ad-hoc grants that are not coordinated in any way. A federal government funding program dedicated to self-driving labs does not currently exist. As a result, the SDLs are constrained to low-hanging material systems (e.g., microfluidics), with the lack of patient capital hindering labs’ ability to scale these systems and realize their true potential. A coordinated U.S. research program for Self-Driving Labs should:

Initiate an ARPA-E SDL Grand Challenge: Drawing inspiration from DARPA’s previous grand challenges that have catalyzed advancements in self-driving vehicles, ARPA-E should establish a Grand Challenge to catalyze state-of-the-art advancements in SDLs for scientific research. This challenge would involve an open call for teams to submit proposals for SDL projects, with a transparent set of performance metrics and benchmarks. Successful applicants would then receive funding to develop SDLs that demonstrate breakthroughs in automated scientific research. A projected budget for this initiative is $30 million, divided among six selected teams, each receiving $5 million over a four-year period to build and validate their SDL concepts. While ARPA-E is best positioned in terms of authority and funding flexibility, other institutions like the National Science Foundation (NSF) or DARPA itself could also fund similar programs.

Establish a Focused Research Organization to open-source SDL components: This FRO would be responsible for developing modular, open-source hardware and software specifically designed for SDL applications. Creating common standards for both the hardware and software needed for SDLs will make such technology more accessible and encourage wider adoption. The FRO would also conduct research on how automation via SDLs is likely to reshape labor roles within scientific research and provide best practices on how to incorporate SDLs into scientific workflows. A proposed operational timeframe for this organization is five years, with an estimated budget of $18 million over that time period. The organization would work on prototyping SDL-specific hardware solutions and make them available on an open-source basis to foster wider community participation and iterative improvement. An FRO could be spun out of the DOE’s new Foundation for Energy Security and Innovation (FESI), which would continue to establish the DOE’s role as an innovative science funder and be an exciting opportunity for FESI to work with nontraditional technical organizations. Using FESI would not require any new authorities and could leverage philanthropic funding, rather than requiring congressional appropriations.

Provide dedicated funding for the DOE national labs to build self-driving lab user facilities, so the United States can build institutional expertise in SDL operations and allow other U.S. scientists to familiarize themselves with these platforms. This funding can be specifically set aside by the DOE Office of Science or through line-item appropriations from Congress. Existing prototype SDLs, like the Argonne National Lab Rapid Prototyping Lab or Berkeley Lab’s A-Lab, that have emerged in the past several years lack sustained DOE funding but could be scaled up and supported with only $50 million in total funding over the next five years. SDLs are also one of the primary applications identified by the national labs in the “AI for Science, Energy, and Security” report, demonstrating willingness to build out this infrastructure and underscoring the recognized strategic importance of SDLs by the scientific research community.

As with any new laboratory technique, SDLs are not necessarily an appropriate tool for everything. Given that their main benefit lies in automation and the ability to rapidly iterate through designs experimentally, SDLs are likely best suited for:

- Material families with combinatorially large design spaces that lack clear design theories or numerical models (e.g., metal organic frameworks, perovskites)

- Experiments where synthesis and characterization are either relatively quick or cheap and are amenable to automated handling (e.g., UV-vis spectroscopy is a relatively simple, in-situ characterization technique)

- Scientific fields where numerical models are not accurate enough to use for training surrogate models or where there is a lack of experimental data repositories (e.g., the challenges of using density functional theory in material science as a reliable surrogate model)

While these heuristics are suggested as guidelines, it will take a full-fledged program with actual results to determine what systems are most amenable to SDL disruption.

When it comes to exciting new technologies, there can be incentives to misuse terms. Self-Driving Labs can be precisely defined as the automation of both material synthesis and characterization that includes some degree of intelligent, automated decision-making in-the-loop. Based on this definition, here are common classes of experiments that are not SDLs:

- High-throughput synthesis, where synthesis automation allows for the rapid synthesis of many different material formulations in parallel (lacks characterization and AI-in-the-loop)

- Using AI as a surrogate trained over numerical models, which is based on software-only results. Using an AI surrogate model to make material predictions and then synthesizing an optimal material is also not an SDL, though certainly still quite the accomplishment for AI in science (lacks discovery of synthesis procedures and requires numerical models or prior existing data, neither of which is always readily available in the material sciences).
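The definition above amounts to a three-part test: automated synthesis, automated characterization, and intelligent decision-making in the loop. The sketch below encodes that test. The `Workflow` class, its field names, and the way the two excluded classes are encoded are illustrative assumptions made for this example, not an established taxonomy.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Workflow:
    """Hypothetical description of an experimental workflow."""
    name: str
    automated_synthesis: bool
    automated_characterization: bool
    ai_in_the_loop: bool


def is_sdl(workflow: Workflow) -> bool:
    """Apply the definition from the text: all three ingredients are required."""
    return (
        workflow.automated_synthesis
        and workflow.automated_characterization
        and workflow.ai_in_the_loop
    )


def missing_ingredients(workflow: Workflow) -> list:
    """List which parts of the definition a workflow lacks."""
    checks = {
        "automated synthesis": workflow.automated_synthesis,
        "automated characterization": workflow.automated_characterization,
        "AI in the loop": workflow.ai_in_the_loop,
    }
    return [label for label, present in checks.items() if not present]


# High-throughput synthesis: lacks characterization and AI-in-the-loop.
high_throughput = Workflow("high-throughput synthesis", True, False, False)

# Software-only AI surrogate. Assumption: encoded as having no automated
# experiments and no feedback from new experimental results.
surrogate_only = Workflow("AI surrogate over numerical models", False, False, False)

# A full closed-loop platform, for contrast.
closed_loop_lab = Workflow("closed-loop platform", True, True, True)

if __name__ == "__main__":
    for workflow in (high_throughput, surrogate_only, closed_loop_lab):
        print(workflow.name, is_sdl(workflow), missing_ingredients(workflow))
```

A boolean checklist is deliberately coarse. The text's phrase "some degree of" decision-making suggests a spectrum, which a fuller treatment would need to capture.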

SDLs, like every other technology that we have adopted over the years, eliminate routine tasks that scientists must currently spend their time on. They will allow scientists to spend more time understanding scientific data, validating theories, and developing models for further experiments. They can automate routine tasks but not the job of being a scientist.

However, because SDLs require more firmware and software, they may favor larger facilities that can maintain long-term technicians and engineers who maintain and customize SDL platforms for various applications. An FRO could help address this asymmetry by developing open-source and modular software that smaller labs can adopt more easily upfront.

FAS Senior Fellow Jen Pahlka testifies on Using AI to Improve Government Services

Jennifer Pahlka (@pahlkadot) is a FAS Senior Fellow and the author of Recoding America: Why Government is Failing in the Digital Age and How We Can Do Better. Here is Pahlka’s testimony about artificial intelligence presented today, January 10, 2024, to the full Senate Committee on Homeland Security and Governmental Affairs hearing on “Harnessing AI to Improve Government Services and Customer Experience”. More can be found here, here, and here.

How the U.S. government chooses to respond to the changes AI brings is indeed critical, especially in its use to improve government services and customer experience. If the change is going to be for the better (and we can’t afford otherwise) it will not be primarily because of how much or how little we constrain AI’s use. Constraints are an important conversation, and AI safety experts are better suited to discuss these than me. But we could constrain agencies significantly and still get exactly the bad outcomes that those arguing for risk mitigation want to avoid. We could instead direct agencies to dive headlong into AI solutions, and still fail to get the benefit that the optimists expect. The difference will come down to how much or how little capacity and competency we have to deploy these technologies thoughtfully.

There are really two ways to build capacity: having more of the right people doing the right things (including but not limited to leveraging technology like AI) and safely reducing the burdens we place on those people. AI, of course, could help reduce those burdens, but not without the workforce we need – one that understands the systems we have today, the policy goals we have set, and the technology we are bringing to bear to achieve those goals. Our biggest priority as a government should be building that capacity, working both sides of that equation (more people, less burden).

Building that capacity will require bodies like the US Senate to use a wide range of the tools at its disposal to shape our future, and use them in a specific way. Those tools can be used to create mandates and controls on the institutions that deliver for the American people, adding more rules and processes for administrative agencies and others to comply with. Or they can be used to enable these institutions to develop the capacity they so desperately need and to use their judgment in the service of agreed-upon goals, often by asking what mandates and controls might be removed, rather than added. This critical AI moment calls for enablement.

The recent executive order on AI already provides some new controls and safeguards. The order strikes a reasonable balance between encouragement and caution, but I worry that some of its guidance will be applied inappropriately. For example, some government agencies have long been using AI for day to day functions like handwriting recognition on envelopes or improved search to retrieve evidence more easily, and agencies may now subject these benign, low-risk uses to red tape based on the order. Caution is merited in some places, and dangerous in others, where we risk moving backwards, not forward. What we need to navigate these frameworks of safeguard and control are people in agencies who can tell the difference, and who have the authority to act accordingly.

Moreover, in many areas of government service delivery, the status quo is frankly not worth protecting. We understandably want to make sure, for instance, that applicants for government benefits aren’t unfairly denied because of bias in algorithms. The reality is that, to take just one benefit, one in six determinations of eligibility for SNAP is substantively incorrect today. If you count procedural errors, the rate is 44%. Worse are the applications and adjudications that haven’t been decided at all, the ones sitting in backlogs, causing enormous distress to the public and wasting taxpayer dollars. Poor application of AI in these contexts could indeed make a bad situation worse, but for people who are fed up and just want someone to get back to them about their tax return, their unemployment insurance check, or even their company’s permit to build infrastructure, something has to change. We may be able to make progress by applying AI, but not if we double down on the remedies that failed in the Internet Age and hope they somehow work in the age of AI. We must finally commit to the hard work of building digital capacity.

What Works in Boston, Won’t Necessarily Work in Birmingham: 4 Pragmatic Principles for Building Commercialization Capacity in Innovation Ecosystems

Just like crop tops, flannel, and some truly unfortunate JNCO jeans that one of these authors wore in junior high, the trends of the ’90s are upon us again. In the innovation world, this means an outsized focus on tech-based economic development, the hottest new idea in economic development, circa 1995. This takes us back in time to fifteen years after the passage of the Bayh-Dole Act, the federal legislation that granted ownership of federally funded research to universities. It was a time when the economy was expanding, dot-com growth was a boom, not a bubble, and we spent more time watching Saved by the Bell than thinking about economic impact.

After the creation of tech transfer offices across the country and the benefit of time, universities were just starting to understand how much the changes wrought by Bayh-Dole would impact them (or not). A raft of optimistic investments in venture development organizations and state public-private partnerships swept the country, some of which (like Ben Franklin Technology Partners and BioSTL) are still with us today, and some of which (like the Kansas Technology Enterprise Center) have flamed out in spectacular fashion. All of a sudden, research seemed like a process to be harnessed for economic impact. Out of this era came the focus on “technology commercialization” that has captured the economic development imagination to this day.

Commercialization, in the context of this piece, describes the process through which universities (or national labs) and the private sector collaborate to bring to the market technologies that were developed using federal funding. Unlike sponsored research and development, in which industry engages with universities from the beginning to fund and set a research agenda, commercialization brings in the private sector after the technology has been conceptualized. Successful commercialization efforts have now grown across the country, and we believe they can be described by four practical principles:

- Principle 1: A strong research enterprise is a necessary precondition to building a strong commercialization pipeline.

- Principle 2: Commercialization via established businesses creates different economic impacts than commercialization via startups; each pathway requires fundamentally different support.

- Principle 3: Local context matters; what works in Boston won’t necessarily work in Birmingham.

- Principle 4: Successful commercialization pipelines include interventions at the individual, institutional, and ecosystem level.

Principle 1: A strong research enterprise is a necessary precondition to building a strong commercialization pipeline.

The first condition necessary to developing a commercialization pipeline is a reasonably advanced research enterprise. While not every region in the U.S. has access to a top-tier research university, there are pockets of excellent research at most major U.S. R1 and R2 institutions. However, because there is natural attrition at each stage of the commercialization process (much like the startup process), a critical mass of novel, leading, and relevant research activity must exist in a given university. If that bar is assumed to be the ability to attract $10 million in research funding (the equivalent of winning 20-25 SBIR Phase 1 grants annually), that limits the number of schools that can run a fruitful commercialization pipeline to approximately 350 institutions, based on data from the NSF NCSES. A metro area should have at least one research institution that meets this bar in order to secure federal funding for the development of lab-to-market programs, though given the co-location of many universities, it is possible for some metro areas to have several such research institutions or none at all.

Principle 2: Commercialization via established businesses creates different economic impacts than commercialization via startups; each pathway requires fundamentally different support.

When talking about commercialization, it is also important to differentiate between whether a new technology is brought to market by a large, incumbent company or a start-up. The first half of the commercialization process is the same for both: technology is transferred out of universities, national labs, and other research institutions through the process of registering, patenting, and licensing new intellectual property (IP). Once licensed, though, the commercialization pathway branches into two.

With an incumbent company, whether or not it successfully brings new technology to the market is largely dependent on the company’s internal goals and willingness to commit resources to commercializing that IP. Often, incumbent companies will license patents as a defensive strategy in order to prevent competition with their existing product lines. As a result, license of a technology by an incumbent company cannot be assumed to represent a guarantee of commercial use or value creation.

The alternative pathway is for universities to license their IP to start-ups, which may be spun out of university labs. Though success is not guaranteed, licensing to these new companies is where new programs and better policies can actually make an impact. Start-ups are dependent upon successful commercialization and require a lot of support to do so. Policies and programs that help meet their core needs can play a significant role in whether or not a start-up succeeds. These core needs include independent space for demonstrating and scaling their product, capital for that work and commercialization activities (e.g. scouting customers and conducting sales), and support through mentorship programs, accelerators, and in-kind help navigating regulatory processes (especially in deep tech fields).

Principle 3: Local context matters; what works in Boston won’t necessarily work in Birmingham.

Unfortunately, many universities approach their tech transfer programs with the goal of licensing their technology to large companies almost exclusively. This arises because university technology transfer offices (TTOs) are often understaffed, and it is easier to license multiple technologies to the same large company under an established partnership than to scout new buyers and negotiate new contracts for each patent. The Bayh-Dole Act, which established the current tech transfer system, was never intended to subsidize the R&D expenditures of our nation’s largest and most profitable companies, nor was it intended to allow incumbents to weaponize IP to repel new market entrants. Yet, that is how it is being used today in practical application.

Universities are not necessarily to blame for the lack of resources, though. Universities spend on average 0.6% of their research expenditures on their tech transfer programs. However, there is a large difference in research expenditures between top universities that can attract over a billion in research funding and the average research university, and thus a large difference in the staffing and support of TTOs. State government funding for the majority of public research universities has been declining since 2008, though there has been a slight upswing since the pandemic, while R&D funding at top universities continues to increase. Only a small minority of TTOs bring in enough income from licensing to be self-sustaining, often from a single “blockbuster” patent, while the majority operate at a loss to the institution.

To successfully develop innovation capacity in ecosystems around the country through increased commercialization activity, one must recognize that communities have dramatically different levels of resources dedicated to these activities, and thus, “best practices” developed at leading universities are seldom replicable in smaller markets.

Principle 4: Successful commercialization pipelines include interventions at the individual, institutional, and ecosystem level.

As we’ve discussed at length in our FAS “systems-thinking” blog series, which includes a post on innovation ecosystems, a systems lens is fundamental to how we see the world. Thinking in terms of systems helps us understand the structural changes that are needed to change the conditions that we see playing out around us every day. When thinking about the structure of commercialization processes, we believe that intervention at various structural levels of a system is necessary to create progress on challenges that seem insurmountable at first, such as changing the cultural expectations of “success” that are so influential in academic systems. Below we have identified some good practices and programs for supporting commercialization at the individual, institutional, and ecosystem level, with an emphasis on pathways to start-ups and entrepreneurship.

Practices and Programs Targeted at Individuals

University tech transfer programs are often reliant on individuals taking the initiative to register new IP with their TTOs. This requires individuals to be both interested enough in commercialization and knowledgeable enough about the commercialization potential of their research to pursue registration. Universities can encourage faculty to be proactive in pursuing commercialization by recognizing entrepreneurial activities in their hiring, promotion and tenure guidelines and by encouraging faculty to use their sabbaticals to pursue entrepreneurial activities. An analog to the latter at national laboratories is an Entrepreneurial Leave Program, which allows staff scientists to take a leave of up to three years to start or join a start-up before returning to their position at the national lab.

Faculty and staff scientists are not the only source of IP though; graduate students and postdoctoral researchers produce much of the actual research behind new intellectual property. Whether or not these early-career researchers pursue commercialization activities is correlated with whether they have had research advisors who were engaged in commercialization. For this reason, in 2007, the National Research Foundation of Singapore established a joint research center with the Massachusetts Institute of Technology (MIT) such that by working with entrepreneurial MIT faculty members, researchers at major Singaporean universities would also develop a culture of entrepreneurship. Most universities likely can’t establish programs of this scale, but some type of mentorship program for early-career scientists pre-IP generation can help create a broader culture of translational research and technology transfer. Universities should also actively support graduate students and postdoctoral researchers in putting forward IP to their TTO. Some universities have even gone so far as to create funds to buy back the time of graduate students and postdocs from their labs and direct that time to entrepreneurial activities, such as participating in an I-Corps program or conducting primary market research.

Once IP has been generated and licensed, many universities offer mentorship programs for new entrepreneurs, such as MIT’s Venture Mentorship Services. Outside of universities, incubators and accelerators provide mentorship along with funding and/or co-working spaces for start-ups to grow their operation. Hardware-focused start-ups especially benefit from having a local incubator or accelerator, since hard-tech start-ups attract significantly less venture capital funding and support than digital technology start-ups, but require larger capital expenditures as they scale. Shared research facilities and testbeds are also crucial for providing hard-tech start-ups with the lab space and equipment to refine and scale their technologies.

For internationally born entrepreneurs, an additional consideration is visa sponsorship. International graduate students and postdocs who launch start-ups need visa sponsors in order to stay in the United States as they transition out of academia. Universities that participate in the Global Entrepreneur in Residence program help provide H-1B visas for international entrepreneurs to work on their start-ups in affiliation with universities. The university benefits in return by attracting start-ups to its local community that then generate economic opportunities and help create an entrepreneurial ecosystem.

Practices and Programs Targeted at Institutions

As mentioned in the beginning, one of the biggest challenges for university tech transfer programs is understaffed TTOs and small patent budgets. On average, TTOs have only four people on staff, who can each file a handful of patents a year, and budgets for the legal fees on even fewer patents. Fully staffing TTOs can help universities ensure that new IP doesn’t slip through the cracks due to a lack of capacity for patenting or licensing activities. Developing standard term sheets for licensing agreements can also reduce administrative burden and make it easier for TTOs to establish new partnerships.

Instead of TTOs, some universities have established affiliated technology intermediaries, which are organizations that take on the business aspects of technology commercialization. For example, the Wisconsin Alumni Research Foundation (WARF) was launched as an independent, nonprofit corporation to manage the University of Wisconsin–Madison’s vitamin D patents and invest the resulting revenue into future research at the university. Since its inception 90 years ago, WARF has provided $2.3 billion in grants to the university and helped establish 60 start-up companies.

In general, universities need to be more consistent about collecting and reporting key performance indicators for TTOs outside of the AUTM framework, such as the number of unlicensed patents and the number of products brought to market using licensed technologies. In particular, universities should disaggregate metrics for licensing and partnerships between companies less than five years old and those more than five years old so that stakeholders can see whether commercialization outcomes differ between incumbent and start-up licensees.

Practices and Programs Targeted at Innovation Ecosystems

Innovation ecosystems are made up of researchers, entrepreneurs, corporations, the workforce, government, and sources of capital. Geographic proximity through co-locating universities, corporations, start-ups, government research facilities, and other stakeholder institutions can help foster both formal and informal collaboration and result in significant technology-driven economic growth and benefits. Co-location may arise organically over time or result from the intentional development of research parks, such as the NASA Research Park. When done properly, the work of each stakeholder should advance a shared vision. This can create a virtuous cycle that attracts additional talent and stakeholders to the shared vision and can integrate with more traditional attraction and retention efforts. One such example is the co-location of the National Bio- and Agro-Defense Facility in Manhattan, KS, near the campus of Kansas State University. After securing that national lab, the university made investments in additional BSL-2, 3 and 3+ research facilities including the Biosecurity Research Institute and its Business Development Module. The construction and maintenance of those facilities required the creation of new workforce development programs to train HVAC technicians who manage the independent air-handling capabilities of the labs and to train biomanufacturing workers. That trained workforce was then one of the selling points in the successful campaign to relocate the company Scorpius Biologics to the region. At best, all elements of an innovation ecosystem are fueled by a research focus and the commercialization activity that it provides.

For regions that find themselves short of the talent they need, soft-landing initiatives can help attract domestic and international entrepreneurs, start-ups, and early-stage firms to establish part of their business in a new region or to relocate entirely. This process can be daunting for early-stage companies, so soft-landing initiatives aim to provide the support and resources that will help an early-stage company acclimatize and thrive in a new place. These initiatives help to expand the reach of a community, create a talent base, and foster the conditions for future economic growth and benefits.

Alongside the creation of innovation ecosystems should be the establishment of “scale-up ecosystems” focused on developing and scaling new manufacturing processes necessary to mass produce the new technologies being developed. This is often an overlooked aspect of technology development in the United States, and supply chain shocks over the past few years have shone a light on the need to develop more local manufacturing supply chains. Fostering the growth of manufacturing alongside technology innovation can (1) reduce the time cycling between product and process development in the commercialization process, (2) capture the “learning by doing” benefits from scaling the production of new technologies, and (3) replenish the number of middle-income jobs that have been outsourced over the past few decades.

Any way you slice it, commercialization capacity is one clear and critical input to a successful innovation ecosystem. However, it’s not the only element that’s important. A strong startup commercialization effort, standing alone, without the corporate, workforce, or government support that it needs to build a vibrant ecosystem around its entrepreneurs, might wane with time or simply be very successful at shipping spinouts off to a coastal hotspot. Building a commercialization pipeline is not, nor has it ever been, a one-size-fits-all solution for ecosystem building.

It may even be something we’ve over-indexed on, given the widespread adoption of tech-based economic development strategies. One significant reason for this is the fact that entrepreneurship via commercialization is most open to those who already have access to a great deal of privilege: those who have attained, or are on the path to, graduate degrees in STEM fields critical to our national competitiveness. If you’ve already earned a Ph.D. in machine learning, chances are your future is looking pretty bright, with or without entrepreneurial opportunity involved. To truly reap the economic benefits of commercialization activity (and the startups it creates), we need to aggressively implement programs, training, and models that change the demographics of who gets to commercialize technology, not just how they do it. To shape this, we’ll need to change the conditions for success for early-career researchers and reconsider the established model of how we mentor and train the next generation of scientists and engineers. You’ll hear more from us on these topics in future posts!

Risk and Reward in Peer Review

This article was written as a part of the FRO Forecasting project, a partnership between the Federation of American Scientists and Metaculus. This project aims to conduct a pilot study of forecasting as an approach for assessing the scientific and societal value of proposals for Focused Research Organizations. To learn more about the project, see the press release here. To participate in the pilot, you can access the public forecasting tournament here.

The United States federal government is the single largest funder of scientific research in the world. Thus, the way that science agencies like the National Science Foundation and the National Institutes of Health distribute research funding has a significant impact on the trajectory of science as a whole. Peer review is considered the gold standard for evaluating the merit of scientific research proposals, and agencies rely on peer review committees to help determine which proposals to fund. However, peer review has its own challenges. It is a difficult task to balance science agencies’ dual mission of protecting government funding from being spent on overly risky investments while also being ambitious in funding proposals that will push the frontiers of science, and research suggests that peer review may be designed more for the former rather than the latter. We at FAS are exploring innovative approaches to peer review to help tackle this challenge.

Biases in Peer Review

A frequently echoed concern across the scientific and metascientific community is that funding agencies’ current approach to peer review of science proposals tends to be overly risk-averse, leading to bias against proposals that entail high risk or high uncertainty about the outcomes. Reasons for this conservativeness include reviewer preferences for feasibility over potential impact, contagious negativity, and problems with the way that peer review scores are averaged together.

This concern, alongside studies suggesting that scientific progress is slowing down, has led to a renewed effort to experiment with new ways of conducting peer review, such as golden tickets and lottery mechanisms. While golden tickets and lottery mechanisms aim to complement traditional peer review with alternate means of making funding decisions — namely individual discretion and randomness, respectively — they don’t fundamentally change the way that peer review itself is conducted.

Traditional peer review asks reviewers to assess research proposals based on a rubric of several criteria, which typically include potential value, novelty, feasibility, expertise, and resources. These criteria are given a score based on a numerical scale; for example, the National Institutes of Health uses a scale from 1 (best) to 9 (worst). Reviewers then provide an overall score that need not be calculated in any specific way based on the criteria scores. Next, all of the reviewers convene to discuss the proposal and submit their final overall scores, which may be different from what they submitted prior to the discussion. The final overall scores are averaged across all of the reviewers for a specific proposal. Proposals are then ranked based on their average overall score and funding is prioritized for those ranked before a certain cutoff score, though depending on the agency, some discretion by program administrators is permitted.

The way that this process is designed allows for the biases mentioned at the beginning (reviewer preferences for feasibility, contagious negativity, and averaging problems) to influence funding decisions. First, reviewer discretion in deciding overall scores allows them to weigh feasibility more heavily than potential impact and novelty in their final scores. Second, when evaluations are discussed, reviewers tend to adjust their scores to better align with their peers. This adjustment tends to be greater when correcting in the negative direction than in the positive direction, resulting in a stronger negative bias. Lastly, since funding tends to be quite limited, cutoff scores tend to be quite close to the best score. This means that even if almost all of the reviewers rate a proposal positively, one very negative review can potentially push the average past the cutoff.
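The averaging problem can be made concrete with a small sketch. The panel scores and the cutoff of 2.0 below are hypothetical, chosen only for illustration; the scale follows the NIH convention described above, where 1 is best and 9 is worst.

```python
from statistics import mean


def funded(scores, cutoff):
    """Return True if the panel's average score is at or better than the cutoff.

    Assumes an NIH-style scale where 1 is best and 9 is worst,
    so a lower average is better.
    """
    return mean(scores) <= cutoff


# Hypothetical cutoff, close to the best possible score of 1.
CUTOFF = 2.0

# Hypothetical five-person panels.
unanimous = [1, 2, 2, 1, 2]    # everyone is positive
one_dissent = [1, 2, 2, 1, 8]  # same panel, but one very negative review

print(mean(unanimous), funded(unanimous, CUTOFF))      # 1.6 True
print(mean(one_dissent), funded(one_dissent, CUTOFF))  # 2.8 False
```

Because the best possible score is only one point from the cutoff in this example, no single enthusiastic review can offset a dissent of this size, while a single dissent is enough to sink an otherwise well-rated proposal.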

Designing a New Approach to Peer Review

In 2021, the researchers Chiara Franzoni and Paula Stephan published a working paper arguing that risk in science results from three sources of uncertainty: uncertainty of research outcomes, uncertainty of the probability of success, and uncertainty of the value of the research outcomes. To comprehensively and consistently account for these sources of uncertainty, they proposed a new expected utility approach to peer review evaluations, in which reviewers are asked to

- Identify the primary expected outcome of a research proposal and, optionally, a potential secondary outcome;

- Assess the probability, between 0 and 1, of achieving each expected outcome (P_j); and

- Assess the value of achieving each expected outcome (u_j) on a numerical scale (e.g., 0 to 100).

From this, the total expected utility can be calculated for each proposal and used to rank them. This systematic approach addresses the first bias we discussed by limiting the extent to which reviewers’ preferences for more feasible proposals would impact the final score of each proposal.
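As a rough illustration of the calculation, the sketch below assumes the total expected utility is the sum of probability times value over a proposal's outcomes. The text above does not spell out the exact formula, so that aggregation rule, the `Outcome` class, and the two example proposals are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class Outcome:
    probability: float  # P_j, between 0 and 1
    value: float        # u_j, e.g. on a 0 to 100 scale


def expected_utility(outcomes):
    """Sum P_j * u_j over a proposal's outcomes (assumed aggregation rule)."""
    for o in outcomes:
        if not 0.0 <= o.probability <= 1.0:
            raise ValueError("probability must be between 0 and 1")
    return sum(o.probability * o.value for o in outcomes)


# Hypothetical proposals: one safe and incremental, one risky but valuable
# with a more likely secondary outcome.
proposals = {
    "safe_incremental": [Outcome(0.9, 20)],
    "ambitious": [Outcome(0.3, 90), Outcome(0.6, 10)],
}

# Rank proposals from highest to lowest expected utility.
ranking = sorted(
    proposals,
    key=lambda name: expected_utility(proposals[name]),
    reverse=True,
)
print(ranking)
```

Under these made-up numbers the ambitious proposal ranks first despite its low probability of success, because feasibility enters the score only through the probability term rather than through a reviewer's overall impression.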

We at FAS see a lot of potential in Franzoni and Stephan’s expected utility approach to peer review, and it inspired us to design a pilot study using a similar approach that aims to chip away at the other biases in review.

To explore potential solutions for negativity bias, we are taking a cue from forecasting by complementing the peer review process with a resolution and scoring process. This means that at a set time in the future, reviewers’ assessments will be compared to a ground truth based on the actual events that have occurred (i.e., was the outcome actually achieved and, if so, what was its actual impact?). Our theory is that if implemented in peer review, resolution and scoring could incentivize reviewers to make better, more accurate predictions over time and provide empirical estimates of a committee’s tendency to provide overly negative (or positive) assessments, thus potentially countering the effects of contagion during review panels and helping more ambitious proposals secure support.
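To show what resolution and scoring could look like in practice, here is a minimal sketch. It uses the Brier score, a scoring rule commonly used in forecasting, together with a simple signed error as a bias estimate. The text does not say which scoring rule the pilot uses, so both functions and the sample data are assumptions for illustration.

```python
def brier_score(forecasts, outcomes):
    """Mean squared error between forecast probabilities and 0/1 outcomes.

    Lower is better; 0.0 is a perfect score.
    """
    if not forecasts or len(forecasts) != len(outcomes):
        raise ValueError("need equally sized, non-empty inputs")
    return sum((f - o) ** 2 for f, o in zip(forecasts, outcomes)) / len(forecasts)


def mean_signed_error(forecasts, outcomes):
    """Average of forecast minus outcome.

    Negative values mean the forecasts were, on average, too pessimistic.
    """
    if not forecasts or len(forecasts) != len(outcomes):
        raise ValueError("need equally sized, non-empty inputs")
    return sum(f - o for f, o in zip(forecasts, outcomes)) / len(forecasts)


# Hypothetical committee forecasts of success for four funded proposals,
# and what actually happened at resolution time (1 = outcome achieved).
forecasts = [0.2, 0.3, 0.1, 0.4]
resolved = [1, 0, 0, 1]

accuracy = brier_score(forecasts, resolved)
bias = mean_signed_error(forecasts, resolved)
print(round(accuracy, 3), round(bias, 3))
```

A consistently negative signed error across many resolved proposals would be one empirical signal that a committee tends to underrate a proposal's chances.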

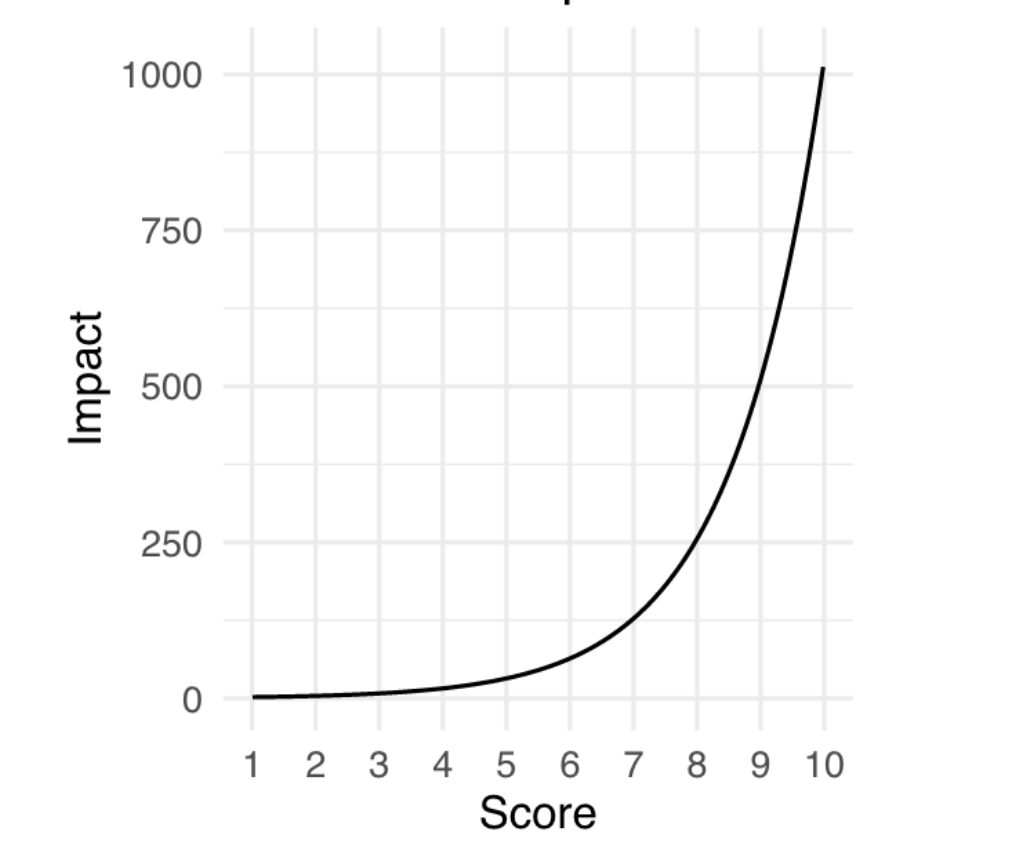

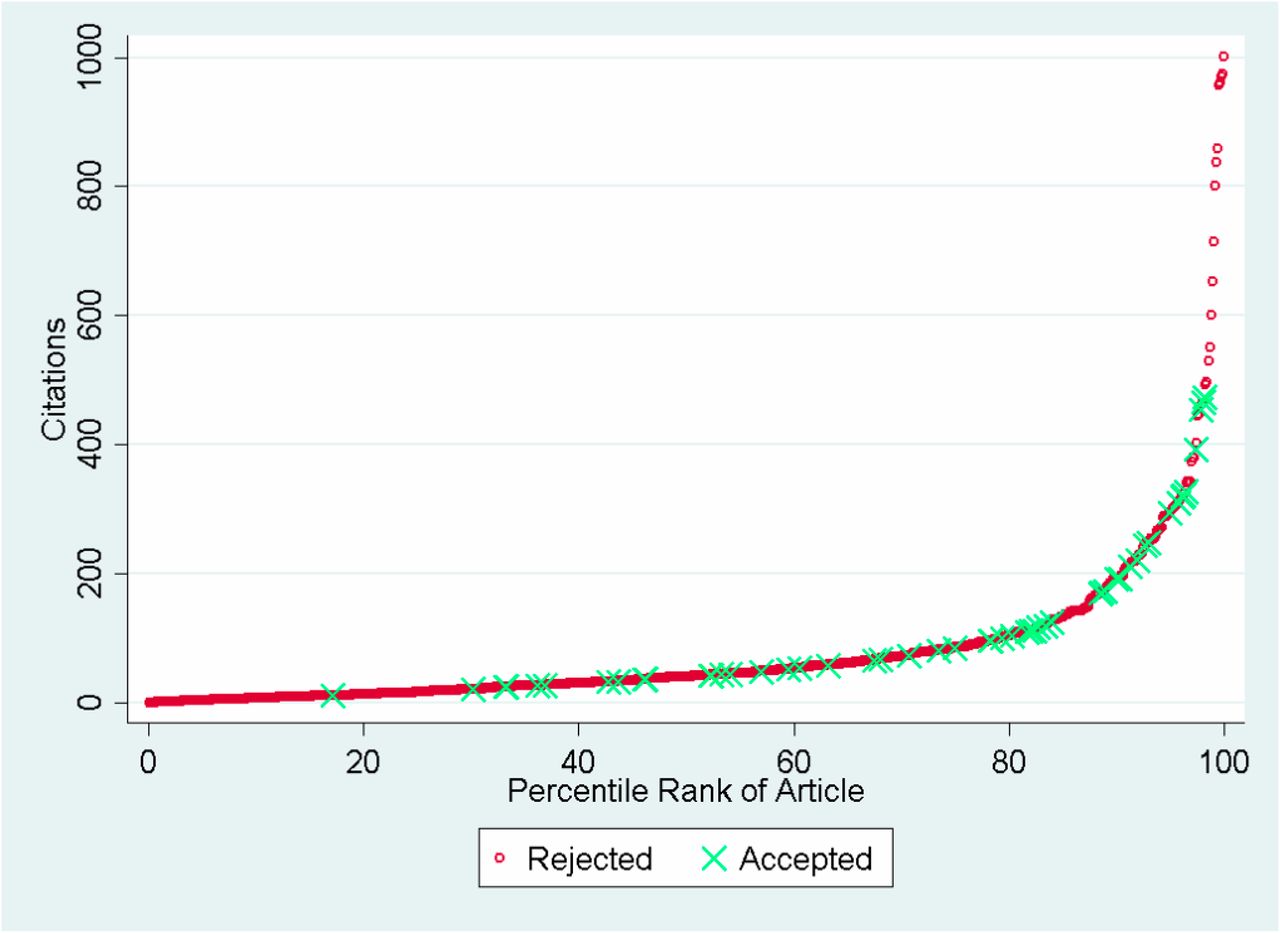

Additionally, we sought to design a new numerical scale for assessing the value or impact of a research proposal, which we call an impact score. Typically, peer reviewers are free to interpret the numerical scale for each criterion as they wish; Franzoni and Stephan’s design also did not specify how the numerical scale for the value of the research outcome should work. We decided to use a scale ranging from 1 (low) to 10 (high) that was base 2 exponential, meaning that a proposal that receives a score of 5 has double the impact of a proposal that receives a score of 4, and quadruple the impact of a proposal that receives a score of 3.

The choice of an exponential scale reflects the tendency in science for a small number of research projects to have an outsized impact (Figure 2), and provides more room at the top end of the scale for reviewers to increase the rating of the proposals that they believe will have an exceptional impact. We believe that this could help address the last bias we discussed, which is that currently, bad scores are more likely to pull a proposal’s average below the cutoff than good scores are likely to pull a proposal’s average above the cutoff.
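The effect of a base 2 scale on averaging can be sketched as follows. Converting scores to relative impact before averaging is an assumption about how such a scale might be aggregated, not a procedure stated in the text, and the panel scores are hypothetical.

```python
import math
from statistics import mean


def relative_impact(score):
    """Impact relative to a score of 1, where each extra point doubles impact."""
    if not 1 <= score <= 10:
        raise ValueError("impact score must be between 1 and 10")
    return 2 ** (score - 1)


def impact_weighted_score(scores):
    """Average in impact space, then map the result back onto the 1-10 scale."""
    return 1 + math.log2(mean(relative_impact(s) for s in scores))


# Hypothetical panel: three enthusiastic reviewers and one strong dissent.
scores = [9, 9, 9, 2]

print(mean(scores))                             # 7.25 on a plain linear average
print(round(impact_weighted_score(scores), 2))  # 8.59 when averaged by impact
```

In this sketch the single low score drags the result down far less than it does under a linear average, which is the kind of headroom for exceptional proposals that the exponential scale is meant to provide.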

Source: Siler, Lee, and Bero (2014)

We are now piloting this approach on a series of proposals in the life sciences that we have collected for Focused Research Organizations, a new type of non-profit research organization designed to tackle challenges that neither academia nor industry is incentivized to work on. The pilot study was developed in collaboration with Metaculus, a forecasting platform and aggregator, and will be hosted on their website. We welcome subject matter experts in the life sciences (or anyone interested!) to participate in making forecasts on these proposals here. Stay tuned for the results of this pilot, which we will publish in a report early next year.

Collaboration for the Future of Public and Active Transportation

Summary

Public and active transportation are not equally accessible to all Americans. Due to a lack of sufficient infrastructure and reliable service for public transportation and active modes like biking, walking, and rolling, Americans must often depend on personal vehicles for travel to work, school, and other activities. During the past two years, Congress has allocated billions of dollars to equitable infrastructure, public transportation upgrades, and decreasing greenhouse gas pollution from transportation across the United States. The Department of Transportation (DOT) and its agencies should embrace innovation and partnerships to continue to increase active and public transportation across the country. The DOT should require grant applications for funding to discuss cross-agency collaborations, partner with the Department of Housing and Urban Development (HUD) to organize prize competitions, encourage public-private partnerships (P3s), and work with the Environmental Protection Agency (EPA) to grant money for transit programs through the Greenhouse Gas Reduction Fund.

Challenge and Opportunity

Historically, U.S. investment in transportation has focused on expanding and developing highways for personal vehicle travel. As a result, 45% of Americans do not have access to reliable and safe public transportation, perpetuating the need for personal vehicles for almost half of the country. The EPA reports that transportation accounts for 29% of total U.S. greenhouse gas emissions, with 58% of those emissions coming from light-duty cars. This large share of nationwide emissions from personal vehicles has short- and long-term climate impacts.

Investments in green public and active transit should be a priority for the DOT in transitioning away from a personal-vehicle-dominated society and meeting the Biden Administration’s “goals of a 100% clean electrical grid by 2035 and net-zero carbon emissions by 2050.” Public and active transportation infrastructure includes bus systems, light rail, bus rapid transit, bike lanes, and safe sidewalks. Investments in public and active transportation should go towards a combination of electrifying existing public transportation, such as buses; improving and expanding public transit to be more reliable and accessible for more users; constructing bike lanes; developing community-owned bike share programs; and creating safe walking corridors.

In addition to reducing carbon emissions, improved public transportation that disincentivizes personal vehicle use has a variety of co-benefits. Prioritizing public and active transportation could limit congestion on roads and lower pollution. Fewer vehicles on the road result in fewer tailpipe emissions, which “can trigger health problems such as aggravated asthma, reduced lung capacity, and increased susceptibility to respiratory illnesses, including pneumonia and bronchitis.” This is especially important for the millions of people who live near freeways and heavily congested roads.

Congestion can also be financially costly for American households; the INRIX Global Traffic Scorecard reports that traffic congestion cost the United States $81 billion in 2022. Those costs include vehicle maintenance, fuel cost, and “lost time,” all of which can be reduced with reliable and accessible public and active transportation. Additionally, the American Public Transportation Association reports that every $1 invested in public transportation generates $5 in economic returns, measured by savings in time traveled, reduction in traffic congestion, and business productivity. Thus, by investing in public transportation, communities can see improvements in air quality, economy, and health.

Public transportation is primarily managed at the local and state level; currently, over 6,000 local and state transportation agencies provide and oversee public transportation in their regions. Public transportation is funded through federal, state, and local sources, and transit agencies receive funding from “passenger fares and other operating receipts.” The Federal Transit Administration (FTA) distributes funding for transit through grants and loans and accounts for 15% of total income for transit agencies, including 31% of capital investments in transit infrastructure. Local and state entities often lack sufficient resources to improve public transportation systems because of the uncertainty of ridership and funding streams.

Public-private partnerships can help alleviate some of these resource constraints because contracts can allow the private partner to operate public transportation systems. Regional and national collaboration across multiple agencies from the federal to the municipal level can also help alleviate resource barriers to public transit development. Local and state agencies do not have to work alone to improve public and active transportation systems.

The following recommendations provide a pathway for transportation agencies at all levels of government to increase public and active transportation, resulting in social, economic, and environmental benefits for the communities they serve.

Plan of Action

Recommendation 1. The FTA should require grant applicants for programs such as the Rebuilding American Infrastructure with Sustainability and Equity (RAISE) program to define how they will work collaboratively with multiple federal agencies and conduct community engagement.

Per the National Blueprint for Transportation Decarbonization, FTA staff should prioritize funding for grant applicants who successfully demonstrate partnerships and collaboration. This can be demonstrated, for example, with letters of support from community members and organizations for transit infrastructure projects. Collaboration can also be demonstrated by having applicants report clear goals, roles, and responsibilities for each agency involved in proposed projects. The FTA should:

- Develop a rubric for evaluating partnerships’ efficiency and alignment with national transit decarbonization goals.

- Create a tiered metrics system within the rubric that prioritizes grants for projects based on collaboration and reduction of greenhouse gas emissions in the transit sector.

- Add a category to their Guidance Center on federal-state-local partnerships to provide insight on how they view successful collaboration.

Recommendation 2. The DOT and HUD should collaborate on a prize competition to design active and/or public transportation projects to reduce traffic congestion.

Housing and transportation costs are related and influence one another, which is why HUD is a natural partner. Funding can be sourced from the Highway Trust Fund, from which the DOT has the authority to allocate up to “1% of the funds for research and development to carry out . . . prize competition program[s].”

This challenge should call on local agency partners to provide a design challenge or opportunity that impedes their ability to adopt transit-oriented infrastructure that could reduce traffic congestion. Three design challenges should be selected and publicly posted on the Challenge.gov website so that any individual or organization can participate.

The goal of the prize competition is to identify challenges, collaborate, and share resources across agencies and communities to design transportation solutions. The competition would connect the DOT with local and regional planning and transportation agencies to solicit solutions from the public, whether from individuals, teams of individuals, or organizations. The DOT and HUD should work collaboratively to design the selection criteria for the challenge and select the winners. Each challenge winner would be provided with a financial prize of $250,000, and their idea would be housed on the DOT website as a case study that can be used for future planning decisions. The local agencies that provide the three design challenges would be welcome to implement the winning solutions.

Recommendation 3. Federal, state, and local government should increase opportunities for public-private partnerships (P3s).

The financial investment required to develop active and public transportation infrastructure is a hurdle for many agencies. To address this issue, we make the following recommendations:

- Currently, only 36 out of the 50 states have policies that allow the use of P3s. The remaining 14 states should pass legislation authorizing the use of P3s for public transportation projects so that they too can benefit from this financing model and access federal P3 funding opportunities.

- In 2016, the DOT launched the Build America Bureau to assist with financing transportation projects. The Bureau administers the Transportation Infrastructure Finance and Innovation Act (TIFIA) program, which provides financial assistance through low-interest loans for infrastructure projects and leverages public-private partnerships to access additional private-sector funding. Currently, only about 30% of all TIFIA loans are used for public transit projects, while 66% are used for tolls and highways. Local and regional agencies should make greater use of TIFIA loans to fund public and active transit projects.

- EPA should specify in its Greenhouse Gas Reduction Fund guidelines that public and active transit projects are eligible for investment from the fund and can leverage public and private partnerships. EPA is set to distribute $27 billion through the Fund for carbon pollution reduction: $20 billion will go towards nonprofit entities, such as green banks, that will leverage public and private investment to fund emissions reduction projects, with $8 billion allocated to projects in low-income and disadvantaged communities; $7 billion will go to state and local agencies and nonprofits in the form of grants or technical assistance to low-income and disadvantaged communities. EPA should encourage applicants to include public and active transportation projects, which can play a significant role in reducing carbon emissions and air pollution, in their portfolios.

Conclusion

The road to decarbonizing the transportation sector requires public and active transportation. Federal agencies can allocate funding for public and active transit more effectively through the recommendations above. It’s time for the government to recognize public and active transportation as the key to equitable decarbonization of the transportation sector throughout the United States.

Most P3s in the United States are for highways, bridges, and roads, but there have been a few successful public transit P3s. In 2018 the City of Los Angeles joined LAX and LAX Integrated Express Solutions in a $4.9 billion P3 to develop a train system within the airport. This project aims to launch in 2024 to “enhance the traveler experience” and will “result in 117,000 fewer vehicle miles traveled per day” to the airport. This project is a prime example of how P3s can help reduce traffic congestion and enable and encourage the use of public transportation.

In 2021, the Congressional Research Service released a report about public-private partnerships (P3s) that highlights the role the federal government can play by making it easier for agencies to participate in P3s.

The state of Michigan has a long history with its Michigan Saves program, the nation’s first nonprofit green bank, which provides funding for projects like rooftop solar or energy efficiency programs.

In California, the California Alternative Energy and Advanced Transportation Financing Authority works “collaboratively with public and private partners to provide innovative and effective financing solutions” for renewable energy sources, energy efficiency, and advanced transportation and manufacturing technologies.

The Rhode Island Infrastructure Bank provides funding to municipalities, businesses, and homeowners for projects “including water and wastewater, roads and bridges, energy efficiency and renewable energy, and brownfield remediation.”

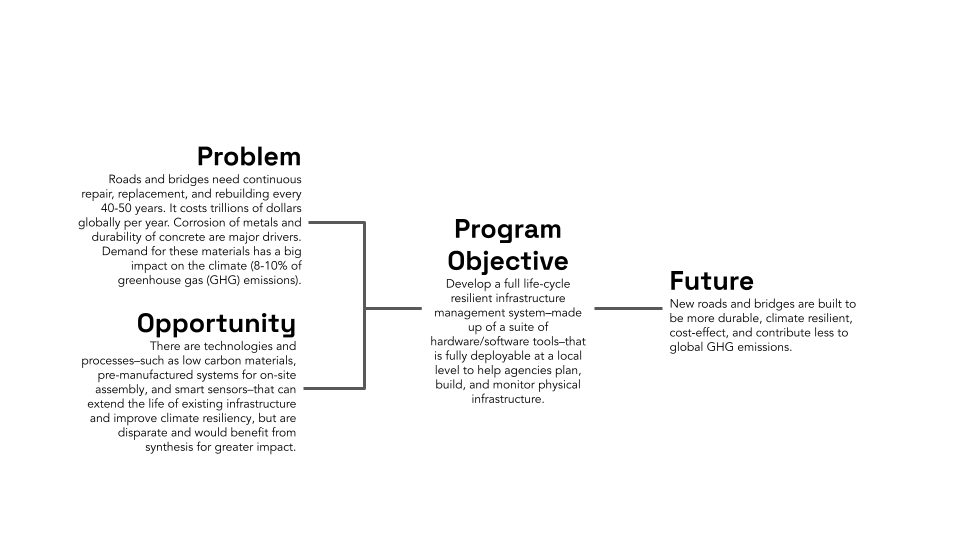

Applying ARPA-I: A Proven Model for Transportation Infrastructure

Executive Summary

In November 2021, Congress passed the Infrastructure Investment and Jobs Act (IIJA), which included $550 billion in new funding for dozens of new programs across the U.S. Department of Transportation (USDOT). Alongside historic investments in America’s roads and bridges, the bill created the Advanced Research Projects Agency-Infrastructure (ARPA-I). Building on successful models like the Defense Advanced Research Projects Agency (DARPA) and the Advanced Research Projects Agency-Energy (ARPA-E), ARPA-I’s mission is to bring the nation’s most innovative technology solutions to bear on our most significant transportation infrastructure challenges.

ARPA-I must navigate America’s uniquely complex infrastructure landscape, characterized by limited federal research and development funding compared to other sectors, public sector ownership and stewardship, and highly fragmented and often overlapping ownership structures that include cities, counties, states, federal agencies, the private sector, and quasi-public agencies. Moreover, the new agency needs to integrate the strong culture, structures, and rigorous ideation process that ARPAs across government have honed since the 1950s. This report is a primer on how ARPA-I, and its stakeholders, can leverage this unique opportunity to drive real, sustainable, and lasting change in America’s transportation infrastructure.

How to Use This Report

This report highlights the opportunity ARPA-I presents; orients those unfamiliar with the transportation infrastructure sector to the unique challenges it faces; provides a foundational understanding of the ARPA model and its early-stage program design; and empowers experts and stakeholders to get involved in program ideation. However, individual sections can be used as standalone tools depending on the reader’s prior knowledge of and intended involvement with ARPA-I.

- If you are unfamiliar with the background, authorization, and mission of ARPA-I, refer to the section “An Opportunity for Transportation Infrastructure Innovation.”

- If you are relatively new to the transportation infrastructure sector, refer to the section “Unique Challenges of the Transportation Infrastructure Landscape.”

- If you have prior transportation infrastructure experience or expertise but are new to the ARPA model, you can move directly to the sections beginning with “Core Tenets of ARPA Success.”

An Opportunity for Transportation Infrastructure Innovation

In November 2021, Congress passed the Infrastructure Investment and Jobs Act (IIJA) authorizing the U.S. Department of Transportation (USDOT) to create the Advanced Research Projects Agency-Infrastructure (ARPA-I), among other new programs. ARPA-I’s mission is to advance U.S. transportation infrastructure by developing innovative science and technology solutions that:

- lower the long-term cost of infrastructure development, including costs of planning, construction, and maintenance;

- reduce the life cycle impacts of transportation infrastructure on the environment, including through the reduction of greenhouse gas emissions;

- contribute significantly to improving the safe, secure, and efficient movement of goods and people; and

- promote the resilience of infrastructure from physical and cyber threats.

ARPA-I will achieve this goal by supporting research projects that:

- advance novel, early-stage research with practicable application to transportation infrastructure;

- translate techniques, processes, and technologies, from the conceptual phase to prototype, testing, or demonstration;

- develop advanced manufacturing processes and technologies for the domestic manufacturing of novel transportation-related technologies; and

- accelerate transformational technological advances in areas in which industry entities are unlikely to carry out projects due to technical and financial uncertainty.

ARPA-I is the newest addition to a long line of successful ARPAs that continue to deliver breakthrough innovations across the defense, intelligence, energy, and health sectors. The U.S. Department of Defense established the pioneering Defense Advanced Research Projects Agency (DARPA) in 1958 in response to the Soviet launch of the Sputnik satellite to develop and demonstrate high-risk, high-reward technologies and capabilities to ensure U.S. military technological superiority and confront national security challenges. Throughout the years, DARPA programs have been responsible for significant technological advances with implications beyond defense and national security, such as the early stages of the internet, the creation of the global positioning system (GPS), and the development of mRNA vaccines critical to combating COVID-19.

In light of the many successful advancements seeded through DARPA programs, the government replicated the ARPA model for other critical sectors, resulting in the Intelligence Advanced Research Projects Activity (IARPA) within the Office of the Director of National Intelligence, the Advanced Research Projects Agency-Energy within the Department of Energy, and, most recently, the Advanced Research Projects Agency-Health (ARPA-H) within the Department of Health and Human Services.

Now, there is the opportunity to bring that same spirit of untethered innovation to solve the most pressing transportation infrastructure challenges of our time. The United States has long faced a variety of transportation infrastructure-related challenges, due in part to low levels of federal research and development (R&D) spending in this area; the fragmentation of roles across federal, state, and local government; risk-averse procurement practices; and sluggish commercial markets. These challenges include:

- Roadway safety. According to the National Highway Traffic Safety Administration, an estimated 42,915 people died in motor vehicle crashes in 2021, up 10.5% from 2020.

- Transportation emissions. According to the U.S. Environmental Protection Agency, the transportation sector accounted for 27% of U.S. greenhouse gas (GHG) emissions in 2020, more than any other sector.

- Aging infrastructure and maintenance. According to the 2021 Report Card for America’s Infrastructure produced by the American Society of Civil Engineers, 42% of the nation’s bridges are at least 50 years old and 7.5% are “structurally deficient.”

The Fiscal Year 2023 Omnibus Appropriations Bill awarded ARPA-I its initial appropriation in early 2023. Yet even before that, the Biden-Harris Administration saw the potential for ARPA-I-driven innovations to help meet its goal of net-zero GHG emissions by 2050, as articulated in its Net-Zero Game Changers Initiative. In particular, the Administration identified smart mobility, clean and efficient transportation systems, next-generation infrastructure construction, advanced electricity infrastructure, and clean fuel infrastructure as “net-zero game changers” that ARPA-I could play an outsize role in helping develop.

For ARPA-I programs to reach their full potential, agency stakeholders and partners need to understand not only how to effectively apply the ARPA model but how the unique circumstances and challenges within transportation infrastructure need to be considered in program design.

Unique Challenges of the Transportation Infrastructure Landscape

Using ARPA-I to advance transportation infrastructure breakthroughs requires an awareness of the most persistent challenges to prioritize and the unique set of circumstances within the sector that can hinder progress if ignored. Below are summaries of key challenges and considerations for ARPA-I to account for, followed by a deeper analysis of each challenge.

- Federal R&D spending on transportation infrastructure is considerably lower than in other sectors, such as defense, healthcare, and energy, as evidenced by federal R&D spending as a percentage of each sector’s contribution to gross domestic product (GDP).

- The transportation sector sees significant private R&D investment in vehicle and aircraft equipment but minimal investment in transportation infrastructure because the benefits from those investments are largely public rather than private.

- Market fragmentation within the transportation system is a persistent obstacle to progress, resulting in reliance on commercially available technologies and transportation agencies playing a more passive role in innovative technology development.

- The fragmented market and multimodal nature of the sector pose challenges for allocating R&D investments and identifying customers.

Lower Federal R&D Spending in Transportation Infrastructure

Federal R&D expenditures in transportation infrastructure lag behind those in other sectors. This gap is particularly acute because, unlike in some other sectors, federal transportation R&D expenditures often fund studies and systems used to make regulatory decisions rather than technological innovation. The table below compares actual federal R&D spending and sector expenditures for 2019 across defense, healthcare, energy, and transportation as a percentage of each sector’s GDP. Relative to sector size, the federal government spends more than an order of magnitude less on transportation than on other sectors: energy R&D spending as a percentage of sector GDP is nearly 15 times higher than that of transportation, while health is 13 times higher and defense is nearly 38 times higher.
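The comparison above can be written compactly. The notation here is introduced only for illustration and does not appear in the source: $R_s$ is federal R&D spending in sector $s$, $G_s$ is that sector’s contribution to GDP, and $I_s$ is the resulting R&D intensity. The multiples are the approximate figures quoted in the paragraph above, not new data.

```latex
I_s = \frac{R_s}{G_s},
\qquad
\frac{I_{\text{energy}}}{I_{\text{transportation}}} \approx 15,
\quad
\frac{I_{\text{health}}}{I_{\text{transportation}}} \approx 13,
\quad
\frac{I_{\text{defense}}}{I_{\text{transportation}}} \approx 38
```

Because each intensity is normalized by sector size, these multiples describe how heavily the federal government invests relative to each sector’s economic footprint, not differences in absolute dollars.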

Public Sector Dominance Limits Innovation Investment

Since 1990, total investment in U.S. R&D has increased roughly ninefold. Looking at the source of that investment, the private and public sectors invested approximately the same amount of R&D funding in 1982, but today private industry invests nearly 4 times as much in R&D as the government.

While there are problems with the bulk of R&D coming from the private sector, such as innovations to promote long-term public goods being overlooked because of more lucrative market incentives, industries that receive considerable private R&D funding still see significant innovation breakthroughs. For example, the medical industry saw $161.8 billion in private R&D funding in 2020 compared to only $61.5 billion from federal funding. More than 75% of this private industry R&D occurred within the biopharmaceutical sector where corporations have profit incentives to be at the cutting edge of advancements in medicine.
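To make the scale of that gap concrete, the short sketch below derives a few ratios from the figures quoted above. It is an illustrative calculation, not part of the original analysis: the function and variable names are hypothetical, and treating private plus federal funding as the total is a simplifying assumption that ignores any other funding sources.

```python
# Figures quoted in the text for U.S. medical R&D in 2020 (billions of USD).
PRIVATE_RD_BN = 161.8
FEDERAL_RD_BN = 61.5
# The text says "more than 75%" of private R&D was biopharmaceutical,
# so 0.75 is used here as a lower bound, not an exact share.
BIOPHARMA_SHARE_FLOOR = 0.75


def summarize_medical_rd(private_bn: float, federal_bn: float,
                         biopharma_floor: float) -> dict:
    """Derive simple ratios from the quoted funding figures."""
    # Assumption: total = private + federal (other sources are ignored).
    total_bn = private_bn + federal_bn
    return {
        "private_to_federal_ratio": private_bn / federal_bn,
        "private_share_of_total": private_bn / total_bn,
        "biopharma_private_rd_floor_bn": biopharma_floor * private_bn,
    }


summary = summarize_medical_rd(PRIVATE_RD_BN, FEDERAL_RD_BN,
                               BIOPHARMA_SHARE_FLOOR)
for name, value in summary.items():
    print(f"{name}: {value:.2f}")
```

Under these assumptions, private funding is roughly 2.6 times federal funding, and the biopharmaceutical sector alone accounts for at least about $121 billion of private R&D, which is nearly double the entire federal contribution.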

The transportation sector has one robust domain for private R&D investment: vehicle and aircraft equipment manufacturing. In 2018, private R&D in this domain totaled $52.6 billion. Private sector transportation R&D focuses on individual customers and end users, creating better vehicles, products, and efficiencies. The vast majority of that private sector R&D does not go toward infrastructure because the benefits are largely public rather than private. Put another way, the United States invests more than 50 times as much R&D in vehicles as in the infrastructure systems upon which those vehicles operate.

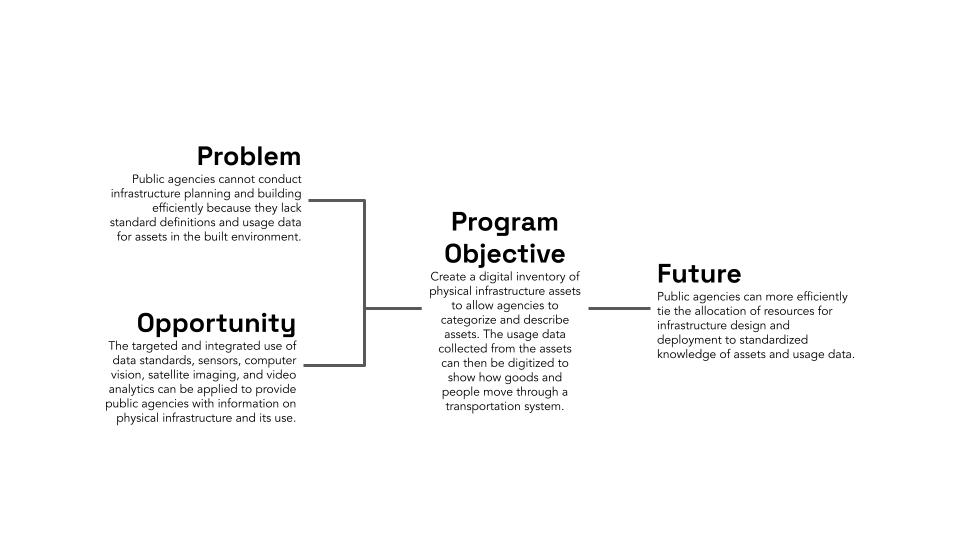

Market Fragmentation across Levels of Government

Despite opportunities within the public-dominated transportation infrastructure system, market fragmentation is a persistent obstacle to rapid progress. Each level of government has different actors with different objectives and responsibilities. For instance, at the federal level, USDOT provides national-level guidance, policy, and funding for transportation across aviation, highway, rail, transit, ports, and maritime modes. Meanwhile, the states set goals, develop transportation plans and projects, and manage transportation networks like the interstate highway system. Metropolitan planning organizations take on some of the planning functions at the regional level, and local governments often maintain much of their infrastructure. There are also individual agencies, organized at the state, regional, or local level, that operate facilities like airports, ports, or tollways. Programs that can use partnerships to cut across this tapestry of systems are essential to driving impact at scale.

Local agencies have limited access to, and limited capabilities for developing, cross-sector technologies. They can draw on only limited pools of USDOT funding to pilot technologies and thus generally rely on commercially available technologies to increase the likelihood of pilot success. One shortcoming of this process is that both USDOT and infrastructure owner-operators (IOOs) play a more passive role in developing innovative technologies, depending instead on deploying market-ready technologies.

Multiple Modes, Customers, and Jurisdictions Create Difficulties in Efficiently Allocating R&D Resources

The transportation infrastructure sector is split across many modes, including aviation, maritime, pipelines, railroads, roadways (which include biking and walking), and transit. Each mode includes various customers and stakeholders to be considered. In addition, in the fragmented market landscape, federal, state, and local departments of transportation have different, and sometimes competing, priorities and mandates. This dynamic creates difficulties in allocating R&D resources and considering access to innovation across these different modes.

Customer identification is not “one size fits all” across existing ARPAs. For example, DARPA has a laser focus on delivering efficient innovations for one customer: the Department of Defense. For ARPA-E, the customer is less clear; its customers range from utility companies to homeowners looking to benefit from lower energy costs. ARPA-I would occupy a space in between these two cases, understanding that its end users are IOOs, the entities responsible for deploying infrastructure, in many cases at the local or regional level.

However, even with this more direct understanding of its customers, a shortcoming of a system focused on multiple modes is that transportation infrastructure is very broad, spanning everything from self-healing concrete to intersection safety to the deployment of electrified mobility and more. Further complicating matters is the rapid evolution of technologies and expectations across all modes, along with the rollout of entirely new modes of transportation. These developments raise questions about where new technologies and capabilities fit in existing modal frameworks, which actors in the transportation infrastructure market should lead their development, and who the ultimate “customers” or end users of innovation are.

Having a matrixed understanding of the rapid technological evolution across transportation modes and their potential customers is critical to investing in and building infrastructure for the future, given that transportation infrastructure investments not only alter a region’s movement of people and goods but also fundamentally impact its development. ARPA-I is poised to shape learnings across and in partnership with USDOT’s modes and various offices to ensure the development and refinement of underlying technologies and approaches that serve the needs of the entire transportation system and users across all modes.

Core Tenets of ARPA Success

Success using the ARPA model comes from demonstrating new innovative capabilities, building a community of people (an “ecosystem”) to carry the progress forward, and having the support of key decision-makers. Yet the ARPA model can only be successful if its program directors (PDs), fellows, stakeholders, and other partners understand the unique structure and inherent flexibility required when working to create a culture conducive to spurring breakthrough innovations. From a structural and cultural standpoint, the ARPA model is unlike any other agency model within the federal government, including all existing R&D agencies. Partners and other stakeholders should embrace the unique characteristics of an ARPA.

Cultural Components

ARPAs should take risks.

An ARPA portfolio may be the closest thing to a venture capital portfolio in the federal government. ARPAs have a mandate to take big swings, so they should not be limited to projects that seem like safe bets. ARPAs will take on many projects throughout their existence, so they should balance quick wins with longer-term bets while embracing failure as a natural part of the process.

ARPAs should constantly evaluate and pivot when necessary.

An ARPA needs to be ruthless in its decision-making process because it has the ability to maneuver and shift without the restriction of initial plans or roadmaps. For example, projects around more nascent technology may require more patience, but if assessments indicate they are not achieving intended outcomes or milestones, PDs should not be afraid to terminate those projects and focus on other new ideas.

ARPAs should stay above the political fray.

ARPAs can consider new and nontraditional ways to fund innovation, and thus should not be caught up in trends within their broader agency. As administrations change, new offices are built and partisan priorities may shift, but ARPAs should limit external influence on their day-to-day operations.

ARPA team members should embrace an entrepreneurial mindset.

PDs, partners, and other team members need to embrace the creative freedom required for success and operate much like entrepreneurs for their programs. Valued traits include a propensity toward action, flexibility, visionary leadership, self-motivation, and tenacity.

ARPA team members must move quickly and nimbly.

Trying to plan out the agency’s path for the next two years, five years, 10 years, or beyond is a futile effort and can be detrimental to progress. ARPAs require ultimate flexibility from day to day and year to year. Compared to other federal initiatives, ARPAs are far less bureaucratic by design, and forcing unnecessary planning and bureaucracy on the agency will slow progress.

Collegiality must be woven into the agency’s fabric.

With the rapidly shifting and entrepreneurial nature of ARPA work, the federal staff, contractors, and other agency partners need to rely on one another for support and assistance to seize opportunities and continue progressing as programs mature and shift.

Outcomes matter more than following a process.

ARPA PDs must be free to explore potential program and project ideas without any predetermination. The agency should support them in pursuing big and unconventional ideas unrestricted by a particular process. While there is a process to turn their most unconventional and groundbreaking ideas into funded and functional projects, transformational ideas are more important than the process itself during idea generation.

ARPA team members welcome feedback.

Things move quickly in an ARPA, and decisions must match that pace, so individuals such as fellows and PDs must work together to offer as much feedback as possible. Constructive pushback helps avoid blind alleys and thus makes programs stronger.

Structural Components

The ARPA Director sets the vision.

The Director’s vision helps attract the right talent and appropriate levels of ambition and focus areas while garnering support from key decision-makers and luminaries. This vision will dictate the types and qualities of PDs an ARPA will attract to execute within that vision.

PDs can make or break an ARPA and set the technical direction.

Because the power of the agency lies within its people, ARPAs are typically flat organizations. An ARPA should seek to hire the best and most visionary thinkers and builders as PDs, enable them to determine and design good programs, and execute with limited hierarchical disruption. During this process, PDs should engage with decision-makers in the early stages of the program design to understand the needs and realities of implementers.

Contracting helps achieve goals.

The ARPA model allows PDs to connect with universities, companies, nonprofits, organizations, and other areas of government to contract necessary R&D. This allows the program to build relationships with individuals without needing to hire or provide facilities or research laboratories.

Interactions improve outcomes.

Past ARPAs that attempted remote and hybrid environments found that organic collisions across an ARPA’s various roles and programs are important to achieving better outcomes. For example, ongoing in-person interactions among PDs and technical advisors are critical to idea generation and to technical project and program management.

Staff transitions must be well facilitated to retain institutional knowledge.

One of an ARPA’s most distinctive structural characteristics is its frequent turnover. PDs and fellows are term-limited, and ARPAs are designed to turn over those key positions every few years as markets and industries evolve, so having thoughtful transition processes in place is vital. These processes include considering the role of systems engineering and technical assistance (SETA) contractors in filling knowledge gaps, cultivating an active alumni network, and staggering hiring cycles so that large numbers of PDs and fellows are not all exiting their service at once.

Scaling should be built into the structure.